scrapy: downloading course videos and files from 中国大学MOOC (icourse163)

1. This post draws heavily on https://blog.csdn.net/qq_37244001/article/details/84780430; many thanks to its author.

2. The Scrapy project is set up as follows:

settings file

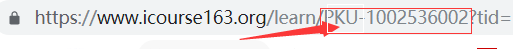

# ID of the course to download
COURSE_ID = "PKU-1002536002"

# Video quality: (a) SD, (b) HD, (c) Super HD
VIDEO_TYPE = "a"

# Download directory (the setting is spelled DOWNLOAD_UEL throughout the project)
DOWNLOAD_UEL = "F:\\python 爬虫\\mooc\\"

BOT_NAME = 'moocScrapy'
SPIDER_MODULES = ['moocScrapy.spiders']
NEWSPIDER_MODULE = 'moocScrapy.spiders'

ROBOTSTXT_OBEY = False
COOKIES_ENABLED = False

# Do not de-duplicate request URLs
DUPEFILTER_CLASS = 'scrapy.dupefilters.BaseDupeFilter'

DOWNLOAD_DELAY = 0.25  # 250 ms of delay

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
}

import datetime

Today = datetime.datetime.now()
# Log file named after the date. Create a log/ folder in the project directory,
# at the same level as scrapy.cfg.
Log_file_path = 'log/scrapy_{}_{}_{}.log'.format(Today.year, Today.month, Today.day)
# Alternative: date-named log file without the log/ folder
# Log_file_path = 'scrapy_{}_{}_{}.log'.format(Today.year, Today.month, Today.day)
# Only messages at or above this level are written to the log file
LOG_LEVEL = "WARNING"
LOG_FILE = Log_file_path

# MongoDB configuration
MONGO_URI = 'localhost'
MONGO_DB = 'mooc'

DOWNLOADER_MIDDLEWARES = {
    # 'moocScrapy.middlewares.MoocscrapyDownloaderMiddleware': 543,
    'moocScrapy.middlewares.RandomUserAgent': 543,
}

# Lower numbers run first: de-duplicate, then store in MongoDB, then download
ITEM_PIPELINES = {
    'moocScrapy.pipelines.MoocscrapyPipeline': 400,
    'moocScrapy.pipelines.MongoPipeline': 300,
    'moocScrapy.pipelines.CheckPipeline': 100,
}

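Three values in settings need to be adjusted:

1. The course ID. This is the last path segment of the course URL; the spider appends it to http://www.icourse163.org/learn/

COURSE_ID = "PKU-1002536002"

2. The video quality

# (a) SD, (b) HD, (c) Super HD
VIDEO_TYPE = "a"

3. The download directory

DOWNLOAD_UEL = "F:\\python 爬虫\\mooc\\"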
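As a rough illustration of how the VIDEO_TYPE letter is used: the spider shown further down looks for one of three fields (mp4SdUrl, mp4HdUrl, mp4ShdUrl) in the server response and falls back to SD when the requested quality is missing. The sketch below reproduces that lookup on its own. The helper name `pick_video_url` and the sample response string are made up for this example; only the field names and the regex shape come from the spider.

```python
import re

# Field names taken from the regexes in the spider: a -> SD, b -> HD, anything else -> Super HD.
QUALITY_FIELDS = {"a": "mp4SdUrl", "b": "mp4HdUrl"}


def pick_video_url(context, video_type):
    """Return the .mp4 URL for the requested quality, falling back to SD.

    Hypothetical helper for illustration; it is not part of the project.
    """
    wanted = QUALITY_FIELDS.get(video_type, "mp4ShdUrl")
    for field in (wanted, "mp4SdUrl"):
        match = re.search(field + r'="(.*?\.mp4).*?"', context)
        if match:
            return match.group(1)
    return None


# Made-up response fragment: only the SD link is present.
sample = 'mp4SdUrl="http://example.com/video_sd.mp4?key=1";mp4HdUrl=null;'
print(pick_video_url(sample, "b"))  # prints http://example.com/video_sd.mp4
```

Keeping the mapping in one dictionary makes it easy to see which setting value corresponds to which field.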

items file

import scrapy


class MoocscrapyItem(scrapy.Item):
    # Name of the MongoDB collection that MongoPipeline writes to
    collection = 'course'

    course_title = scrapy.Field()
    course_collage = scrapy.Field()    # school offering the course
    course_id = scrapy.Field()
    single_chaper = scrapy.Field()     # chapter name
    single_chaper_id = scrapy.Field()
    single_lesson = scrapy.Field()     # lesson name
    single_lesson_id = scrapy.Field()
    video_url = scrapy.Field()
    pdf_url = scrapy.Field()

pipelines file

1. De-duplication:

from scrapy.exceptions import DropItem


class CheckPipeline(object):
    """Check each item and drop duplicates."""

    def __init__(self):
        # Lesson ids that have already passed through the pipeline
        self.names_seen = set()

    def process_item(self, item, spider):
        print(item)
        if item['single_lesson_id']:
            if item['single_lesson_id'] in self.names_seen:
                raise DropItem("Duplicate item found: %s" % item)
            else:
                self.names_seen.add(item['single_lesson_id'])
                return item
        else:
            raise DropItem("Missing single_lesson_id in %s" % item)
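The seen-set idea behind CheckPipeline can be tried without Scrapy. The following is a minimal sketch under that assumption: the class name `SeenLessons` and its `accept` method are hypothetical, and a boolean return value stands in for raising DropItem.

```python
class SeenLessons:
    """Remember lesson ids and report whether an id is new.

    Hypothetical stand-in for CheckPipeline; returns False where the
    pipeline would raise DropItem.
    """

    def __init__(self):
        self.seen = set()

    def accept(self, lesson_id):
        if not lesson_id:
            return False  # missing id: the pipeline drops the item
        if lesson_id in self.seen:
            return False  # duplicate: the pipeline drops the item
        self.seen.add(lesson_id)
        return True


lessons = SeenLessons()
print(lessons.accept("1006837824"))  # first time: True
print(lessons.accept("1006837824"))  # second time: False
```

Because the set lives in memory, duplicates are only detected within a single crawl.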

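2. Storing items in MongoDB:

import pymongo


class MongoPipeline(object):
    def __init__(self, mongo_uri, mongo_db):
        self.mongo_uri = mongo_uri
        self.mongo_db = mongo_db

    @classmethod
    def from_crawler(cls, crawler):
        # Read the connection settings from settings.py
        return cls(mongo_uri=crawler.settings.get('MONGO_URI'), mongo_db=crawler.settings.get('MONGO_DB'))

    def open_spider(self, spider):
        self.client = pymongo.MongoClient(self.mongo_uri)
        self.db = self.client[self.mongo_db]

    def process_item(self, item, spider):
        # Upsert keyed on the lesson id. Collection.update() was removed in
        # PyMongo 4; replace_one() with upsert=True does the same job.
        self.db[item.collection].replace_one(
            {'single_lesson_id': item['single_lesson_id']}, dict(item), upsert=True)
        return item

    def close_spider(self, spider):
        self.client.close()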

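3. Downloading the PDF and MP4 files:

import os

import requests

# Import path assumed from the project name in settings
from moocScrapy.settings import DOWNLOAD_UEL
# Url_mp4 and replace are helpers from the author's project; their source is not shown in this post.


class MoocscrapyPipeline(object):
    def process_item(self, item, spider):
        if item["pdf_url"]:
            pdf_file = requests.get(item["pdf_url"])
            # Create the PDFs folder on first use
            if not os.path.isdir(DOWNLOAD_UEL + 'PDFs'):
                os.mkdir(DOWNLOAD_UEL + 'PDFs')
            with open(DOWNLOAD_UEL + 'PDFs\\' + item["single_lesson"] + '.pdf', 'wb') as file:
                file.write(pdf_file.content)
        if item["video_url"]:
            try:
                mp4 = Url_mp4(url=item["video_url"], filename=item["single_lesson"] + ".mp4", dir_name=DOWNLOAD_UEL)
                mp4.run()
            except Exception as e:
                # First attempt failed: rewrite the URL with replace() and retry once
                print(e)
                url = replace(url=item["video_url"])
                mp4 = Url_mp4(url=url, filename=item["single_lesson"] + ".mp4", dir_name=DOWNLOAD_UEL)
                mp4.run()
        return item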
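The pipeline above builds the PDF path by concatenating strings with a Windows-style separator, and lesson names taken from the site may contain characters that Windows does not allow in file names. A minimal sketch of a more defensive path builder follows. The function name `build_pdf_path` is hypothetical; the character class is the one the spider uses in get_content.

```python
import os
import re

# Characters that Windows does not allow in file names (same class as in get_content).
INVALID_CHARS = re.compile(r'[\\/:\*\?"<>\|]')


def build_pdf_path(download_dir, lesson_name):
    """Return <download_dir>/PDFs/<sanitized lesson name>.pdf (hypothetical helper)."""
    safe_name = INVALID_CHARS.sub('', lesson_name).strip()
    return os.path.join(download_dir, 'PDFs', safe_name + '.pdf')


# The directory and lesson name here are made up for the example.
print(build_pdf_path('downloads', '4.1: loss/function?'))
```

os.path.join picks the separator for the current platform, so the same code works outside Windows as well.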

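spider file

import os
import re

import scrapy

# Import paths assumed from the project name in settings
from moocScrapy.items import MoocscrapyItem
from moocScrapy.settings import COURSE_ID, VIDEO_TYPE, DOWNLOAD_UEL
# function_init and get_course_info are helpers from the author's project; their source is not shown in this post.


class MoocSpider(scrapy.Spider):
    name = 'mooc'
    allowed_domains = []

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.course_page_url = 'http://www.icourse163.org/learn/'
        self.SOURCE_INFO_URL = 'http://www.icourse163.org/dwr/call/plaincall/CourseBean.getMocTermDto.dwr'
        self.SOURCE_RESOURCE_URL = 'http://www.icourse163.org/dwr/call/plaincall/CourseBean.getLessonUnitLearnVo.dwr'
        self.course = COURSE_ID
        function_init()

    def start_requests(self):
        # Open the course page first; parse() extracts the numeric course id from it
        yield scrapy.Request(url=self.course_page_url + self.course, callback=self.parse)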

    def parse(self, response):
        '''
        Use the parsed course_id to request information on all resources
        that can currently be downloaded.
        '''
        data = get_course_info(course_page=response)
        print(data)
        print(data.get("course_id"))
        # c0-param0: the course id
        # batchId: may be any timestamp
        # All other fields are fixed
        post_data = {
            'callCount': '1',
            'scriptSessionId': '${scriptSessionId}190',
            'c0-scriptName': 'CourseBean',
            'c0-methodName': 'getMocTermDto',
            'c0-id': '0',
            'c0-param0': 'number:' + data.get("course_id"),
            'c0-param1': 'number:1',
            'c0-param2': 'boolean:true',
            'batchId': '1492167717772'
        }
        # The course info dict travels along as request meta
        yield scrapy.FormRequest(url=self.SOURCE_INFO_URL, formdata=post_data, meta=data,
                                 callback=self.get_course_all_source)

    def get_course_all_source(self, response):
        # The response carries \uXXXX escapes; decode them into readable text
        context = response.text.encode('utf-8').decode('unicode_escape')
        print(context)
        meta_data = response.meta
        chapter_pattern_compile = re.compile(
            r'homeworks=.*?;.+id=(\d+).*?name="(.*?)";')
        # Find the id and name of every first-level entry (chapter)
        chapter_set = re.findall(chapter_pattern_compile, context)
        with open(DOWNLOAD_UEL + 'TOC.txt', 'w', encoding='utf-8') as file:
            # Walk through the chapters and write each one to the table of contents
            for index, single_chaper in enumerate(chapter_set):
                file.write('%s \n' % (single_chaper[1]))
                # The id captured here is the second-level (lesson) id
                lesson_pattern_compile = re.compile(
                    r'chapterId=' + single_chaper[0] +
                    r'.*?contentType=1.*?id=(\d+).+name="(.*?)".*?test')
                # Find the id and name of every lesson in this chapter
                lesson_set = re.findall(lesson_pattern_compile, context)
                # Walk through the lessons and write each one to the table of contents
                for sub_index, single_lesson in enumerate(lesson_set):
                    item = MoocscrapyItem()
                    item["course_title"] = meta_data["course_title"]
                    item["course_collage"] = meta_data["course_collage"]
                    item["course_id"] = meta_data["course_id"]
                    item["single_chaper"] = single_chaper[1]
                    item["single_chaper_id"] = single_chaper[0]
                    item["single_lesson"] = single_lesson[1]
                    item["single_lesson_id"] = single_lesson[0]
                    # video_url and pdf_url are filled in later by get_download_url
                    file.write(' %s \n' % (single_lesson[1]))
                    # Find the videos of this lesson as (contentId, contentType, id, name) tuples,
                    # e.g. [('1009008053', '1', '1006837824', '4.1-损失函数')]
                    video_pattern_compile = re.compile(
                        r'contentId=(\d+).+contentType=(1).*?id=(\d+).*?lessonId='
                        + single_lesson[0] + r'.*?name="(.+)"')
                    video_set = re.findall(video_pattern_compile, context)
                    # Find the documents of this lesson as (contentId, contentType, id, name) tuples
                    pdf_pattern_compile = re.compile(
                        r'contentId=(\d+).+contentType=(3).+id=(\d+).+lessonId=' +
                        single_lesson[0] + r'.+name="(.+)"')
                    pdf_set = re.findall(pdf_pattern_compile, context)
                    # Strips leading numbering such as "第一讲 " or "1.2 " from a title
                    name_pattern_compile = re.compile(
                        r'^[第一二三四五六七八九十\d]+[\s\d\._章课节讲]*[\.\s、]\s*\d*')
                    count_num = 0
                    param = {
                        "type": 0,
                        "item": item
                    }
                    # Walk through the videos, write them to the table of contents and request their links
                    for video_index, single_video in enumerate(video_set):
                        rename = re.sub(name_pattern_compile, '', single_video[3])
                        file.write(' [Video] %s \n' % (rename))
                        param["type"] = single_video[1]
                        post_data = self.get_content(
                            single_video,
                            '%d.%d.%d [Video] %s' % (index + 1, sub_index + 1, video_index + 1, rename),
                            VIDEO_TYPE)
                        count_num += 1
                        if post_data is None:
                            # get_content returns None for resources that were already downloaded
                            continue
                        yield scrapy.FormRequest(self.SOURCE_RESOURCE_URL, formdata=post_data, meta=param,
                                                 callback=self.get_download_url)
                    # Walk through the PDFs, write them to the table of contents and request their links
                    for pdf_index, single_pdf in enumerate(pdf_set):
                        rename = re.sub(name_pattern_compile, '', single_pdf[3])
                        file.write(' [Document] %s \n' % (rename))
                        param["type"] = single_pdf[1]
                        post_data = self.get_content(
                            single_pdf,
                            '%d.%d.%d [Document] %s' % (index + 1, sub_index + 1, pdf_index + 1 + count_num, rename))
                        if post_data is None:
                            continue
                        yield scrapy.FormRequest(self.SOURCE_RESOURCE_URL, formdata=post_data, meta=param,
                                                 callback=self.get_download_url)

    def get_content(self, single_content, name, *args):
        '''
        Build the POST form data used to look up one resource.
        Documents are downloaded directly;
        for videos the link is saved so that a third-party tool can download it.
        Returns None if the resource has already been downloaded.
        '''
        # Clean the file name: resources on the site may contain characters that cannot be saved locally
        file_pattern_compile = re.compile(r'[\\/:\*\?"<>\|]')
        name = re.sub(file_pattern_compile, '', name)
        # Skip resources whose file already exists (already downloaded)
        if os.path.exists(DOWNLOAD_UEL + 'PDFs\\' + name + '.pdf'):
            print(name + "------------->already downloaded")
            return
        post_data = {
            'callCount': '1',
            'scriptSessionId': '${scriptSessionId}190',
            'httpSessionId': '5531d06316b34b9486a6891710115ebc',
            'c0-scriptName': 'CourseBean',
            'c0-methodName': 'getLessonUnitLearnVo',
            'c0-id': '0',
            'c0-param0': 'number:' + single_content[0],  # second-level entry id
            'c0-param1': 'number:' + single_content[1],  # distinguishes document from video
            'c0-param2': 'number:0',
            'c0-param3': 'number:' + single_content[2],  # id of the specific resource
            'batchId': '1492168138043'
        }
        return post_data

    def get_download_url(self, response):
        context = response.text.encode('utf-8').decode('unicode_escape')
        print(context)
        meta_data = response.meta
        item = meta_data.get("item")
        # Type '1' is a video
        if meta_data["type"] == '1':
            try:
                if VIDEO_TYPE == 'a':
                    download_pattern_compile = re.compile(
                        r'mp4SdUrl="(.*?\.mp4).*?"')
                elif VIDEO_TYPE == "b":
                    download_pattern_compile = re.compile(
                        r'mp4HdUrl="(.*?\.mp4).*?"')
                else:
                    download_pattern_compile = re.compile(
                        r'mp4ShdUrl="(.*?\.mp4).*?"')
                video_down_url = re.search(download_pattern_compile,
                                           context).group(1)
            except AttributeError:
                # re.search returned None: the requested quality is missing, so fall back to SD
                print('------------------------')
                print(meta_data["item"].get("single_lesson") + ' is not available in this quality, falling back to SD')
                print('------------------------')
                download_pattern_compile = re.compile(r'mp4SdUrl="(.*?\.mp4).*?"')
                video_down_url = re.search(download_pattern_compile,
                                           context).group(1)
            item["video_url"] = video_down_url
            item["pdf_url"] = None
            print('Saving link: ' + meta_data["item"].get("single_lesson") + '.mp4')
            with open(DOWNLOAD_UEL + 'Links.txt', 'a', encoding='utf-8') as file:
                file.write('%s \n' % (video_down_url))
            # Rename.bat renames the downloaded files to the lesson names
            with open(DOWNLOAD_UEL + 'Rename.bat', 'a', encoding='utf-8') as file:
                video_down_url = re.sub(r'/', '_', video_down_url)
                file.write('rename "' + re.search(
                    r'http:.*video_(.*\.mp4)', video_down_url).group(1) + '" "' +
                    meta_data["item"].get("single_lesson") + '.mp4"' + '\n')
        # Otherwise it is a document
        else:
            pdf_download_url = re.search(r'textOrigUrl:"(.*?)"', context).group(1)
            print('Downloading: ' + meta_data["item"].get("single_lesson") + '.pdf')
            item["pdf_url"] = pdf_download_url
            item["video_url"] = None
            # The download itself now happens in MoocscrapyPipeline:
            # pdf_file = requests.get(pdf_download_url)
            # if not os.path.isdir(DOWNLOAD_UEL+'PDFs'):
            #     os.mkdir(DOWNLOAD_UEL+r'PDFs')
            # with open(DOWNLOAD_UEL+'PDFs\\' + meta_data["item"].get("single_lesson") + '.pdf', 'wb') as file:
            #     file.write(pdf_file.content)
        yield item
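The name_pattern_compile regex in get_course_all_source removes leading numbering from resource titles before they are written to TOC.txt. A small sketch of its effect, with made-up titles and a hypothetical wrapper name `strip_numbering`:

```python
import re

# Same pattern as name_pattern_compile in the spider.
NAME_PREFIX = re.compile(r'^[第一二三四五六七八九十\d]+[\s\d\._章课节讲]*[\.\s、]\s*\d*')


def strip_numbering(title):
    """Remove a leading chapter or lesson number from a title (hypothetical wrapper)."""
    return NAME_PREFIX.sub('', title)


# Made-up titles for illustration.
print(strip_numbering('第一讲 损失函数'))   # prints 损失函数
print(strip_numbering('1.2 Introduction'))  # prints Introduction
print(strip_numbering('Introduction'))      # unchanged: prints Introduction
```

Titles without a numeric or 第… prefix pass through unchanged because the pattern is anchored at the start of the string.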

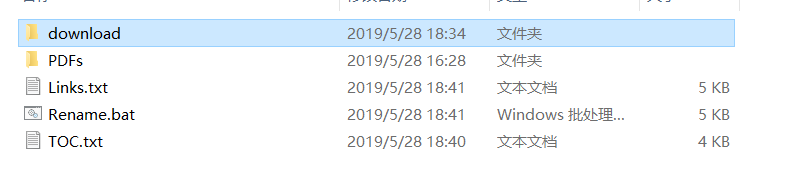

结果如下:

代码如下:

https://download.csdn.net/download/huangwencai123/11212620
