Contents

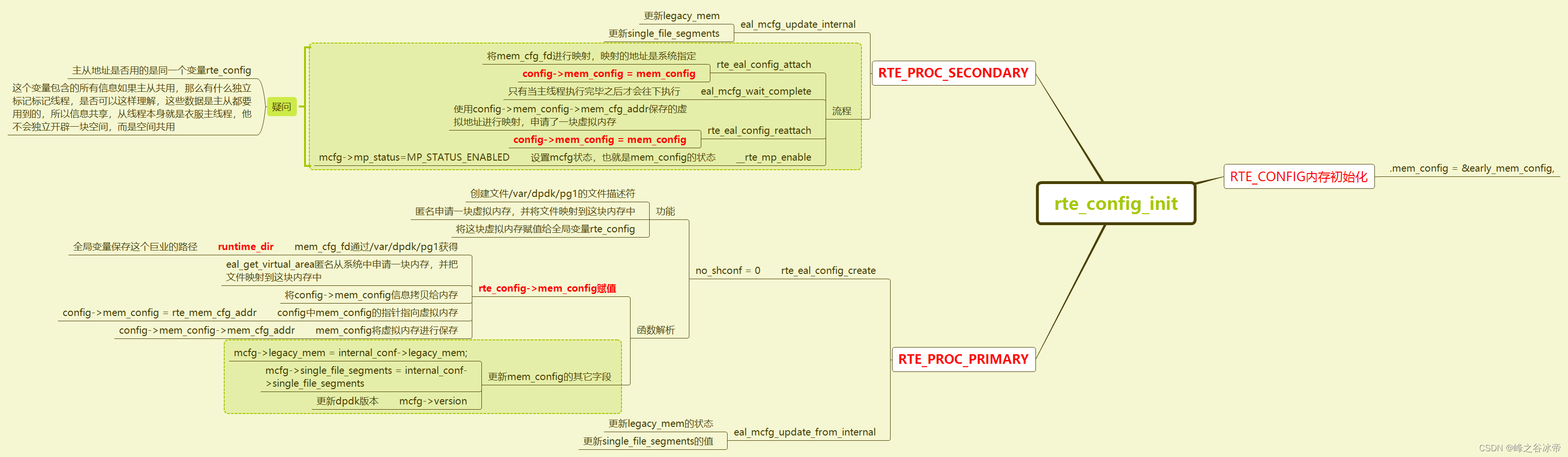

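I. rte_config_init

1. Functionality

Primary process:

(1) Reserves a region of virtual memory and maps the file that holds the memory configuration (rte_mem_config) into it

(2) Updates the mem_config configuration

Secondary process:

(1) Maps the shared configuration file read-only first, solely to read the address at which the primary process mapped it. It then unmaps that mapping, maps the file again at exactly that address, and attaches the result to rte_config.mem_config

(2) Updates the secondary process's configuration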
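The two-step attach described above can be sketched in isolation. This is a minimal illustration and not DPDK code: `demo_cfg`, `demo_create`, and `demo_attach` are hypothetical names, and the struct only stands in for rte_mem_config. Unlike the real primary process, the sketch closes the file descriptor right after mapping.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical stand-in for rte_mem_config: it records its own address. */
struct demo_cfg {
    uintptr_t self_addr; /* where the "primary" mapped this structure */
    int value;           /* some shared payload */
};

/* "Primary": create the file, size it, map it shared, store the address. */
static struct demo_cfg *demo_create(const char *path)
{
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd < 0)
        return NULL;
    if (ftruncate(fd, sizeof(struct demo_cfg)) < 0) {
        close(fd);
        return NULL;
    }
    void *p = mmap(NULL, sizeof(struct demo_cfg), PROT_READ | PROT_WRITE,
                   MAP_SHARED, fd, 0);
    close(fd); /* the mapping stays valid after close */
    if (p == MAP_FAILED)
        return NULL;
    struct demo_cfg *cfg = p;
    cfg->self_addr = (uintptr_t)p;
    return cfg;
}

/* "Secondary": map read-only, read the address, unmap, remap there. */
static struct demo_cfg *demo_attach(const char *path)
{
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return NULL;
    struct demo_cfg *tmp = mmap(NULL, sizeof(*tmp), PROT_READ,
                                MAP_SHARED, fd, 0);
    if (tmp == MAP_FAILED) {
        close(fd);
        return NULL;
    }
    void *want = (void *)tmp->self_addr;
    munmap(tmp, sizeof(*tmp));

    /* The address is only a hint, so the result must be checked. */
    void *got = mmap(want, sizeof(struct demo_cfg), PROT_READ | PROT_WRITE,
                     MAP_SHARED, fd, 0);
    close(fd);
    if (got == MAP_FAILED)
        return NULL;
    if (got != want) {
        munmap(got, sizeof(struct demo_cfg));
        return NULL;
    }
    return got;
}
```

Because the second mmap passes the address only as a hint, the caller has to compare the returned pointer with the requested one, which is the same check rte_eal_config_reattach performs in the listing further down.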

2. Function call map

3. Code walkthrough

(1) Primary process code

void *

eal_get_virtual_area(void *requested_addr, size_t *size,

size_t page_sz, int flags, int mmap_flags)

{

bool addr_is_hint, allow_shrink, unmap, no_align;

uint64_t map_sz;

void *mapped_addr, *aligned_addr;

uint8_t try = 0;

if (system_page_sz == 0)

system_page_sz = sysconf(_SC_PAGESIZE);

mmap_flags |= MAP_PRIVATE | MAP_ANONYMOUS;

RTE_LOG(DEBUG, EAL, "Ask a virtual area of 0x%zx bytes\n", *size);

addr_is_hint = (flags & EAL_VIRTUAL_AREA_ADDR_IS_HINT) > 0;

allow_shrink = (flags & EAL_VIRTUAL_AREA_ALLOW_SHRINK) > 0;

unmap = (flags & EAL_VIRTUAL_AREA_UNMAP) > 0;

if (next_baseaddr == NULL && internal_config.base_virtaddr != 0 &&

rte_eal_process_type() == RTE_PROC_PRIMARY)

next_baseaddr = (void *) internal_config.base_virtaddr;

#ifdef RTE_ARCH_64

// obtain next_baseaddr (the default base address when --base-virtaddr is not set)

if (next_baseaddr == NULL && internal_config.base_virtaddr == 0 &&

rte_eal_process_type() == RTE_PROC_PRIMARY){

next_baseaddr = (void *) eal_get_baseaddr();

}

#endif

if (requested_addr == NULL && next_baseaddr != NULL) {

requested_addr = next_baseaddr;

requested_addr = RTE_PTR_ALIGN(requested_addr, page_sz);

addr_is_hint = true;

}

/* we don't need alignment of resulting pointer in the following cases:

*

* 1. page size is equal to system size

* 2. we have a requested address, and it is page-aligned, and we will

* be discarding the address if we get a different one.

*

* for all other cases, alignment is potentially necessary.

*/

// if the requested page size equals the system page size, the address returned by mmap is already aligned

no_align = (requested_addr != NULL &&

requested_addr == RTE_PTR_ALIGN(requested_addr, page_sz) &&

!addr_is_hint) ||

page_sz == system_page_sz;

do {

map_sz = no_align ? *size : *size + page_sz;

if (map_sz > SIZE_MAX) {

RTE_LOG(ERR, EAL, "Map size too big\n");

rte_errno = E2BIG;

return NULL;

}

// reserve map_sz bytes of address space with an anonymous PROT_NONE mapping; mapped_addr is its start address

mapped_addr = mmap(requested_addr, (size_t)map_sz, PROT_NONE,

mmap_flags, -1, 0);

if (mapped_addr == MAP_FAILED && allow_shrink)

*size -= page_sz;

if (mapped_addr != MAP_FAILED && addr_is_hint &&

mapped_addr != requested_addr) {

try++;

next_baseaddr = RTE_PTR_ADD(next_baseaddr, page_sz);

if (try <= MAX_MMAP_WITH_DEFINED_ADDR_TRIES) {

/* hint was not used. Try with another offset */

munmap(mapped_addr, map_sz);

mapped_addr = MAP_FAILED;

requested_addr = next_baseaddr;

}

}

} while ((allow_shrink || addr_is_hint) &&

mapped_addr == MAP_FAILED && *size > 0);

/* align resulting address - if map failed, we will ignore the value

* anyway, so no need to add additional checks.

*/

aligned_addr = no_align ? mapped_addr :

RTE_PTR_ALIGN(mapped_addr, page_sz);

// validate the result: zero size, failed mapping, or an address different from the one requested

if (*size == 0) {

RTE_LOG(ERR, EAL, "Cannot get a virtual area of any size: %s\n",

strerror(errno));

rte_errno = errno;

return NULL;

} else if (mapped_addr == MAP_FAILED) {

RTE_LOG(ERR, EAL, "Cannot get a virtual area: %s\n",

strerror(errno));

/* pass errno up the call chain */

rte_errno = errno;

return NULL;

} else if (requested_addr != NULL && !addr_is_hint &&

aligned_addr != requested_addr) {

RTE_LOG(ERR, EAL, "Cannot get a virtual area at requested address: %p (got %p)\n",

requested_addr, aligned_addr);

munmap(mapped_addr, map_sz);

rte_errno = EADDRNOTAVAIL;

return NULL;

} else if (requested_addr != NULL && addr_is_hint &&

aligned_addr != requested_addr) {

RTE_LOG(WARNING, EAL, "WARNING! Base virtual address hint (%p != %p) not respected!\n",

requested_addr, aligned_addr);

RTE_LOG(WARNING, EAL, " This may cause issues with mapping memory into secondary processes\n");

} else if (next_baseaddr != NULL) {

next_baseaddr = RTE_PTR_ADD(aligned_addr, *size);

}

RTE_LOG(DEBUG, EAL, "Virtual area found at %p (size = 0x%zx)\n",

aligned_addr, *size);

if (unmap) {

munmap(mapped_addr, map_sz);

} else if (!no_align) {

void *map_end, *aligned_end;

size_t before_len, after_len;

/* when we reserve space with alignment, we add alignment to

* mapping size. On 32-bit, if 1GB alignment was requested, this

* would waste 1GB of address space, which is a luxury we cannot

* afford. so, if alignment was performed, check if any unneeded

* address space can be unmapped back.

*/

map_end = RTE_PTR_ADD(mapped_addr, (size_t)map_sz);

aligned_end = RTE_PTR_ADD(aligned_addr, *size);

/* unmap space before aligned mmap address */

before_len = RTE_PTR_DIFF(aligned_addr, mapped_addr);

if (before_len > 0)

munmap(mapped_addr, before_len);

/* unmap space after aligned end mmap address */

after_len = RTE_PTR_DIFF(map_end, aligned_end);

if (after_len > 0)

munmap(aligned_end, after_len);

}

return aligned_addr;

}

static int

rte_eal_config_create(void)

{

size_t page_sz = sysconf(_SC_PAGE_SIZE);

size_t cfg_len = sizeof(*rte_config.mem_config);

size_t cfg_len_aligned = RTE_ALIGN(cfg_len, page_sz);

void *rte_mem_cfg_addr, *mapped_mem_cfg_addr;

int retval;

// pathname, e.g. /var/run/dpdk/pg1/config

const char *pathname = eal_runtime_config_path();

if (internal_config.no_shconf)

return 0;

/* map the config before hugepage address so that we don't waste a page */

if (internal_config.base_virtaddr != 0)

rte_mem_cfg_addr = (void *)

RTE_ALIGN_FLOOR(internal_config.base_virtaddr -

sizeof(struct rte_mem_config), page_sz);

else

rte_mem_cfg_addr = NULL;

if (mem_cfg_fd < 0){

mem_cfg_fd = open(pathname, O_RDWR | O_CREAT, 0600);

if (mem_cfg_fd < 0) {

RTE_LOG(ERR, EAL, "Cannot open '%s' for rte_mem_config\n",

pathname);

return -1;

}

}

// set the file size to cfg_len

retval = ftruncate(mem_cfg_fd, cfg_len);

if (retval < 0){

close(mem_cfg_fd);

mem_cfg_fd = -1;

RTE_LOG(ERR, EAL, "Cannot resize '%s' for rte_mem_config\n",

pathname);

return -1;

}

// take a non-blocking write lock on the file (fails if another primary process holds it)

retval = fcntl(mem_cfg_fd, F_SETLK, &wr_lock);

if (retval < 0){

close(mem_cfg_fd);

mem_cfg_fd = -1;

RTE_LOG(ERR, EAL, "Cannot create lock on '%s'. Is another primary "

"process running?\n", pathname);

return -1;

}

/* reserve space for config */

// reserve a region of address space and return its start address

rte_mem_cfg_addr = eal_get_virtual_area(rte_mem_cfg_addr,

&cfg_len_aligned, page_sz, 0, 0);

if (rte_mem_cfg_addr == NULL) {

RTE_LOG(ERR, EAL, "Cannot mmap memory for rte_config\n");

close(mem_cfg_fd);

mem_cfg_fd = -1;

return -1;

}

// map the file descriptor into the process's address space, on top of the reserved region

mapped_mem_cfg_addr = mmap(rte_mem_cfg_addr,

cfg_len_aligned, PROT_READ | PROT_WRITE,

MAP_SHARED | MAP_FIXED, mem_cfg_fd, 0);

if (mapped_mem_cfg_addr == MAP_FAILED) {

munmap(rte_mem_cfg_addr, cfg_len);

close(mem_cfg_fd);

mem_cfg_fd = -1;

RTE_LOG(ERR, EAL, "Cannot remap memory for rte_config\n");

return -1;

}

// copy the contents of early_mem_config into the new mapping; the mapping address does not change, so this acts as initialization

memcpy(rte_mem_cfg_addr, &early_mem_config, sizeof(early_mem_config));

rte_config.mem_config = rte_mem_cfg_addr;

// save the mapping address in the shared config; secondary processes read it later to map the file at the same address

rte_config.mem_config->mem_cfg_addr = (uintptr_t) rte_mem_cfg_addr;

rte_config.mem_config->dma_maskbits = 0;

return 0;

}
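The fcntl(F_SETLK) call in rte_eal_config_create is what detects a second primary process. The definition of wr_lock is not part of the listing above, so the sketch below makes an assumption: it locks the whole file, which may differ from the range DPDK actually locks. `try_lock_whole_file` is a hypothetical name.

```c
#include <assert.h>
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/* Try to take an exclusive (write) record lock on the whole file without
 * blocking. Returns 0 on success, -1 if the lock could not be taken,
 * for example because another process already holds a conflicting lock. */
static int try_lock_whole_file(int fd)
{
    struct flock lk;

    memset(&lk, 0, sizeof(lk));
    lk.l_type = F_WRLCK;    /* exclusive lock */
    lk.l_whence = SEEK_SET; /* l_start is relative to the file start */
    lk.l_start = 0;
    lk.l_len = 0;           /* 0 means "up to end of file" */
    return fcntl(fd, F_SETLK, &lk) < 0 ? -1 : 0;
}
```

Record locks taken this way belong to the process and are released when it exits or closes the file, which is why the primary keeps mem_cfg_fd open on the success path.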

static int

rte_config_init(void)

{

rte_config.process_type = internal_config.process_type;

switch (rte_config.process_type){

case RTE_PROC_PRIMARY:

if (rte_eal_config_create() < 0)

return -1;

eal_mcfg_update_from_internal();

break;

case RTE_PROC_SECONDARY:

// map the shared config file read-only at an arbitrary address

if (rte_eal_config_attach() < 0)

return -1;

// wait until the primary process has finished initializing before the secondary process continues

eal_mcfg_wait_complete();

if (eal_mcfg_check_version() < 0) {

RTE_LOG(ERR, EAL, "Primary and secondary process DPDK version mismatch\n");

return -1;

}

if (rte_eal_config_reattach() < 0)

return -1;

// update internal_config from the shared memory config

eal_mcfg_update_internal();

break;

case RTE_PROC_AUTO:

case RTE_PROC_INVALID:

RTE_LOG(ERR, EAL, "Invalid process type %d\n",

rte_config.process_type);

return -1;

}

return 0;

}

(2) Secondary process code

static int

rte_config_init(void)

{

rte_config.process_type = internal_config.process_type;

switch (rte_config.process_type){

case RTE_PROC_SECONDARY:

// map the shared config file read-only at an arbitrary address

if (rte_eal_config_attach() < 0)

return -1;

// wait until the primary process has finished initializing before the secondary process continues

eal_mcfg_wait_complete();

if (eal_mcfg_check_version() < 0) {

RTE_LOG(ERR, EAL, "Primary and secondary process DPDK version mismatch\n");

return -1;

}

if (rte_eal_config_reattach() < 0)

return -1;

// update internal_config from the shared memory config

eal_mcfg_update_internal();

break;

}

return 0;

}

static int

rte_eal_config_attach(void)

{

struct rte_mem_config *mem_config;

const char *pathname = eal_runtime_config_path();

if (internal_config.no_shconf)

return 0;

if (mem_cfg_fd < 0){

mem_cfg_fd = open(pathname, O_RDWR);

if (mem_cfg_fd < 0) {

RTE_LOG(ERR, EAL, "Cannot open '%s' for rte_mem_config\n",

pathname);

return -1;

}

}

/* map it as read-only first */

// first map the shared config file read-only (MAP_SHARED) at any address

mem_config = (struct rte_mem_config *) mmap(NULL, sizeof(*mem_config),

PROT_READ, MAP_SHARED, mem_cfg_fd, 0);

if (mem_config == MAP_FAILED) {

close(mem_cfg_fd);

mem_cfg_fd = -1;

RTE_LOG(ERR, EAL, "Cannot mmap memory for rte_config! error %i (%s)\n",

errno, strerror(errno));

return -1;

}

rte_config.mem_config = mem_config;

return 0;

}

static int

rte_eal_config_reattach(void)

{

struct rte_mem_config *mem_config;

void *rte_mem_cfg_addr;

if (internal_config.no_shconf)

return 0;

// read the address at which the primary process mapped the config

rte_mem_cfg_addr = (void *) (uintptr_t) rte_config.mem_config->mem_cfg_addr;

/* unmap original config */

// unmap the temporary read-only mapping

munmap(rte_config.mem_config, sizeof(struct rte_mem_config));

// map the file again as shared memory, asking for the primary's address

mem_config = (struct rte_mem_config *) mmap(rte_mem_cfg_addr,

sizeof(*mem_config), PROT_READ | PROT_WRITE, MAP_SHARED,

mem_cfg_fd, 0);

close(mem_cfg_fd);

mem_cfg_fd = -1;

if (mem_config == MAP_FAILED || mem_config != rte_mem_cfg_addr) {

if (mem_config != MAP_FAILED) {

/* errno is stale, don't use */

RTE_LOG(ERR, EAL, "Cannot mmap memory for rte_config at [%p], got [%p]"

" - please use '--" OPT_BASE_VIRTADDR

"' option\n", rte_mem_cfg_addr, mem_config);

munmap(mem_config, sizeof(struct rte_mem_config));

return -1;

}

RTE_LOG(ERR, EAL, "Cannot mmap memory for rte_config! error %i (%s)\n",

errno, strerror(errno));

return -1;

}

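// store the new mapping in mem_config. rte_config itself is a per-process structure, but mem_config now points to the same file-backed MAP_SHARED mapping as in the primary, so both processes share one rte_mem_config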

rte_config.mem_config = mem_config;

return 0;

}

II. eal_hugepage_info_init

1. Functionality

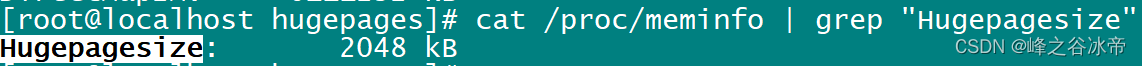

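(1) Determine the hugepage size(s) in use

(2) Determine how many hugepages are still available

2. Function call map

3. Function walkthrough

3.1 System hugepage directory

3.2 Default hugepage size

3.3 Hugepage mount configuration

3.4 Code walkthrough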

static int

hugepage_info_init(void)

{

const char dirent_start_text[] = "hugepages-";

const size_t dirent_start_len = sizeof(dirent_start_text) - 1;

unsigned int i, num_sizes = 0;

DIR *dir;

struct dirent *dirent;

// sys_dir_path = /sys/kernel/mm/hugepages

dir = opendir(sys_dir_path);

if (dir == NULL) {

RTE_LOG(ERR, EAL,

"Cannot open directory %s to read system hugepage info\n",

sys_dir_path);

return -1;

}

// on this system /sys/kernel/mm/hugepages/ contains only the hugepages-2048kB directory, so only one entry passes the prefix check below

for (dirent = readdir(dir); dirent != NULL; dirent = readdir(dir)) {

struct hugepage_info *hpi;

// dirent->d_name = hugepages-2048kB

if (strncmp(dirent->d_name, dirent_start_text,

dirent_start_len) != 0)

continue;

if (num_sizes >= MAX_HUGEPAGE_SIZES)

break;

hpi = &internal_config.hugepage_info[num_sizes];

// hugepage_sz = 2048 * 1024 bytes

hpi->hugepage_sz =

rte_str_to_size(&dirent->d_name[dirent_start_len]);

/* first, check if we have a mountpoint */

// on success, hpi->hugedir = /dev/hugepages

if (get_hugepage_dir(hpi->hugepage_sz,

hpi->hugedir, sizeof(hpi->hugedir)) < 0) {

uint32_t num_pages;

num_pages = get_num_hugepages(dirent->d_name);

if (num_pages > 0)

RTE_LOG(NOTICE, EAL,

"%" PRIu32 " hugepages of size "

"%" PRIu64 " reserved, but no mounted "

"hugetlbfs found for that size\n",

num_pages, hpi->hugepage_sz);

/* if we have kernel support for reserving hugepages

* through mmap, and we're in in-memory mode, treat this

* page size as valid. we cannot be in legacy mode at

* this point because we've checked this earlier in the

* init process.

*/

#ifdef MAP_HUGE_SHIFT

if (internal_config.in_memory) {

RTE_LOG(DEBUG, EAL, "In-memory mode enabled, "

"hugepages of size %" PRIu64 " bytes "

"will be allocated anonymously\n",

hpi->hugepage_sz);

calc_num_pages(hpi, dirent);

num_sizes++;

}

#endif

continue;

}

//hpi->hugedir = /dev/hugepages

/* try to obtain a writelock */

hpi->lock_descriptor = open(hpi->hugedir, O_RDONLY);

/* if blocking lock failed */

if (flock(hpi->lock_descriptor, LOCK_EX) == -1) {

RTE_LOG(CRIT, EAL,

"Failed to lock hugepage directory!\n");

break;

}

/* clear out the hugepages dir from unused pages */

if (clear_hugedir(hpi->hugedir) == -1)

break;

// count the free pages of this hugepage size

calc_num_pages(hpi, dirent);

num_sizes++;

}

closedir(dir);

/* something went wrong, and we broke from the for loop above */

if (dirent != NULL)

return -1;

// number of distinct hugepage sizes found

internal_config.num_hugepage_sizes = num_sizes;

printf("%s[%d] num_huge:%u\r\n", __func__, __LINE__, num_sizes);

/* sort the page directory entries by size, largest to smallest */

qsort(&internal_config.hugepage_info[0], num_sizes,

sizeof(internal_config.hugepage_info[0]), compare_hpi);

// the final step is only a sanity check

/* now we have all info, check we have at least one valid size */

for (i = 0; i < num_sizes; i++) {

/* pages may no longer all be on socket 0, so check all */

unsigned int j, num_pages = 0;

struct hugepage_info *hpi = &internal_config.hugepage_info[i];

for (j = 0; j < RTE_MAX_NUMA_NODES; j++)

num_pages += hpi->num_pages[j];

if (num_pages > 0)

return 0;

}

/* no valid hugepage mounts available, return error */

return -1;

}
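The step that turns a directory name such as hugepages-2048kB into a byte count can be illustrated separately. In the listing this is done with a prefix check followed by rte_str_to_size. The sketch below is a simplified stand-in and not that function's implementation: `hugepage_dirname_to_size` is a hypothetical name, and it assumes that only k, M, and G suffixes need to be handled.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Convert a name like "hugepages-2048kB" to a size in bytes.
 * Returns 0 if the name does not match or the unit is unknown. */
static uint64_t hugepage_dirname_to_size(const char *name)
{
    static const char prefix[] = "hugepages-";
    const size_t prefix_len = sizeof(prefix) - 1;
    char *end;
    unsigned long long n;

    /* Skip entries such as "." and ".." that lack the prefix. */
    if (strncmp(name, prefix, prefix_len) != 0)
        return 0;

    n = strtoull(name + prefix_len, &end, 10);
    if (end == name + prefix_len)
        return 0; /* no digits after the prefix */

    switch (*end) {
    case 'k': case 'K': return n << 10;
    case 'm': case 'M': return n << 20;
    case 'g': case 'G': return n << 30;
    default: return 0;
    }
}
```

For the directory found on the author's system, hugepages-2048kB, this yields 2048 * 1024 = 2097152 bytes, matching the value noted in the listing.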

static int

get_hugepage_dir(uint64_t hugepage_sz, char *hugedir, int len)

{

enum proc_mount_fieldnames {

DEVICE = 0,

MOUNTPT,

FSTYPE,

OPTIONS,

_FIELDNAME_MAX

};

static uint64_t default_size = 0;

const char proc_mounts[] = "/proc/mounts";

const char hugetlbfs_str[] = "hugetlbfs";

const size_t htlbfs_str_len = sizeof(hugetlbfs_str) - 1;

const char pagesize_opt[] = "pagesize=";

const size_t pagesize_opt_len = sizeof(pagesize_opt) - 1;

const char split_tok = ' ';

char *splitstr[_FIELDNAME_MAX];

char buf[BUFSIZ];

int retval = -1;

// proc_mounts = /proc/mounts

FILE *fd = fopen(proc_mounts, "r");

if (fd == NULL)

rte_panic("Cannot open %s\n", proc_mounts);

// default_size = 2048 kB on this system

if (default_size == 0)

default_size = get_default_hp_size();

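/* Read the hugetlbfs mount entries from /proc/mounts and check the page size of each mount,

to decide whether an explicitly configured hugepage size or the default size applies. An explicit

pagesize= option takes precedence over the default. This system does not configure one, so the

default is used. With an explicit size, the entry looks like this:

hugetlbfs /dev/hugepages hugetlbfs rw,relatime,pagesize=1024M 0 0 */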

while (fgets(buf, sizeof(buf), fd)){

if (rte_strsplit(buf, sizeof(buf), splitstr, _FIELDNAME_MAX,

split_tok) != _FIELDNAME_MAX) {

RTE_LOG(ERR, EAL, "Error parsing %s\n", proc_mounts);

break; /* return NULL */

}

/* we have a specified --huge-dir option, only examine that dir */

if (internal_config.hugepage_dir != NULL &&

strcmp(splitstr[MOUNTPT], internal_config.hugepage_dir) != 0)

continue;

if (strncmp(splitstr[FSTYPE], hugetlbfs_str, htlbfs_str_len) == 0){

const char *pagesz_str = strstr(splitstr[OPTIONS], pagesize_opt);

/* if no explicit page size, the default page size is compared */

// no pagesize= option on this mount: it uses the default hugepage size

if (pagesz_str == NULL){

if (hugepage_sz == default_size){

strlcpy(hugedir, splitstr[MOUNTPT], len);

retval = 0;

break;

}

}

/* there is an explicit page size, so check it */

else {

uint64_t pagesz = rte_str_to_size(&pagesz_str[pagesize_opt_len]);

if (pagesz == hugepage_sz) {

strlcpy(hugedir, splitstr[MOUNTPT], len);

retval = 0;

break;

}

}

} /* end if strncmp hugetlbfs */

} /* end while fgets */

fclose(fd);

return retval;

}
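The per-line logic of get_hugepage_dir can be tried out on a single /proc/mounts entry. The sketch below is an illustration under stated assumptions and not the DPDK code: `hugetlbfs_mount_pagesize` is a hypothetical name, it splits fields with sscanf instead of rte_strsplit, and it compares the filesystem type exactly rather than by prefix.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Parse one /proc/mounts line. For a hugetlbfs mount, copy the mount point
 * into `mountpt` and return its page size in bytes, or `default_sz` when
 * the options carry no pagesize= entry. Returns 0 for any other line. */
static uint64_t hugetlbfs_mount_pagesize(const char *line, uint64_t default_sz,
                                         char *mountpt, size_t len)
{
    char dev[256], mnt[256], fstype[64], opts[512];
    const char *opt;
    char *end;
    unsigned long long n;

    /* Fields: device, mount point, filesystem type, options. */
    if (sscanf(line, "%255s %255s %63s %511s", dev, mnt, fstype, opts) != 4)
        return 0;
    if (strcmp(fstype, "hugetlbfs") != 0)
        return 0;
    snprintf(mountpt, len, "%s", mnt);

    opt = strstr(opts, "pagesize=");
    if (opt == NULL)
        return default_sz; /* no explicit size: the default applies */

    n = strtoull(opt + strlen("pagesize="), &end, 10);
    switch (*end) {
    case 'k': case 'K': n <<= 10; break;
    case 'm': case 'M': n <<= 20; break;
    case 'g': case 'G': n <<= 30; break;
    default: break; /* plain byte count */
    }
    return n;
}
```

A caller would compare the returned size with the size taken from the /sys/kernel/mm/hugepages directory name and keep the mount point only when the two match, which is what the listing does with hugepage_sz.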

static void

calc_num_pages(struct hugepage_info *hpi, struct dirent *dirent)

{

uint64_t total_pages = 0;

unsigned int i;

/*

* first, try to put all hugepages into relevant sockets, but

* if first attempts fails, fall back to collecting all pages

* in one socket and sorting them later

*/

total_pages = 0;

/* we also don't want to do this for legacy init */

if (!internal_config.legacy_mem)

for (i = 0; i < rte_socket_count(); i++) {

int socket = rte_socket_id_by_idx(i);

unsigned int num_pages =

get_num_hugepages_on_node(

dirent->d_name, socket);

hpi->num_pages[socket] = num_pages;

total_pages += num_pages;

}

/*

* we failed to sort memory from the get go, so fall

* back to old way

*/

if (total_pages == 0) {

hpi->num_pages[0] = get_num_hugepages(dirent->d_name);

#ifndef RTE_ARCH_64

/* for 32-bit systems, limit number of hugepages to

* 1GB per page size */

hpi->num_pages[0] = RTE_MIN(hpi->num_pages[0],

RTE_PGSIZE_1G / hpi->hugepage_sz);

#endif

}

}

static uint32_t

get_num_hugepages_on_node(const char *subdir, unsigned int socket)

{

char path[PATH_MAX], socketpath[PATH_MAX];

DIR *socketdir;

unsigned long num_pages = 0;

const char *nr_hp_file = "free_hugepages";

//path:/sys/devices/system/node/node0/hugepages/hugepages-2048kB/free_hugepages

snprintf(socketpath, sizeof(socketpath), "%s/node%u/hugepages",

sys_pages_numa_dir_path, socket);

socketdir = opendir(socketpath);

if (socketdir) {

/* Keep calm and carry on */

closedir(socketdir);

} else {

/* Can't find socket dir, so ignore it */

return 0;

}

snprintf(path, sizeof(path), "%s/%s/%s",

socketpath, subdir, nr_hp_file);

if (eal_parse_sysfs_value(path, &num_pages) < 0)

return 0;

if (num_pages == 0)

RTE_LOG(WARNING, EAL, "No free hugepages reported in %s\n",

subdir);

/*

* we want to return a uint32_t and more than this looks suspicious

* anyway ...

*/

if (num_pages > UINT32_MAX)

num_pages = UINT32_MAX;

return num_pages;

}
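Reading the per-node free page count comes down to building a sysfs path and parsing one number from it. The sketch below shows both steps. `read_sysfs_ulong` and `node_free_hugepages_path` are hypothetical helpers written for illustration. The first is only assumed to behave roughly like eal_parse_sysfs_value, whose body is not shown in the listing.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Read a single unsigned value from a sysfs-style file.
 * Returns 0 on success, -1 on any error. */
static int read_sysfs_ulong(const char *path, unsigned long *val)
{
    char buf[64];
    char *end = NULL;
    FILE *f = fopen(path, "r");

    if (f == NULL)
        return -1;
    if (fgets(buf, sizeof(buf), f) == NULL) {
        fclose(f);
        return -1;
    }
    fclose(f);

    *val = strtoul(buf, &end, 0);
    /* Reject empty input and trailing garbage. */
    if (end == buf || (*end != '\n' && *end != '\0'))
        return -1;
    return 0;
}

/* Build the per-node path, e.g.
 * <base>/node0/hugepages/hugepages-2048kB/free_hugepages.
 * Returns 0 on success, -1 if the buffer is too small. */
static int node_free_hugepages_path(char *out, size_t len, const char *base,
                                    unsigned int node, const char *subdir)
{
    int n = snprintf(out, len, "%s/node%u/hugepages/%s/free_hugepages",
                     base, node, subdir);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

With base set to /sys/devices/system/node, node 0, and subdir hugepages-2048kB, the second helper produces the path given in the comment inside get_num_hugepages_on_node.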

After the function has run, the hugepage_info structure holds the following:

struct hugepage_info {

uint64_t hugepage_sz = 2048kB

char hugedir[PATH_MAX] = /dev/hugepages

uint32_t num_pages = 512 (free_hugepages) // number of free pages reported for the NUMA node

/**< number of hugepages of that size on each socket */

int lock_descriptor; /**< file descriptor for hugepage dir */

};
