
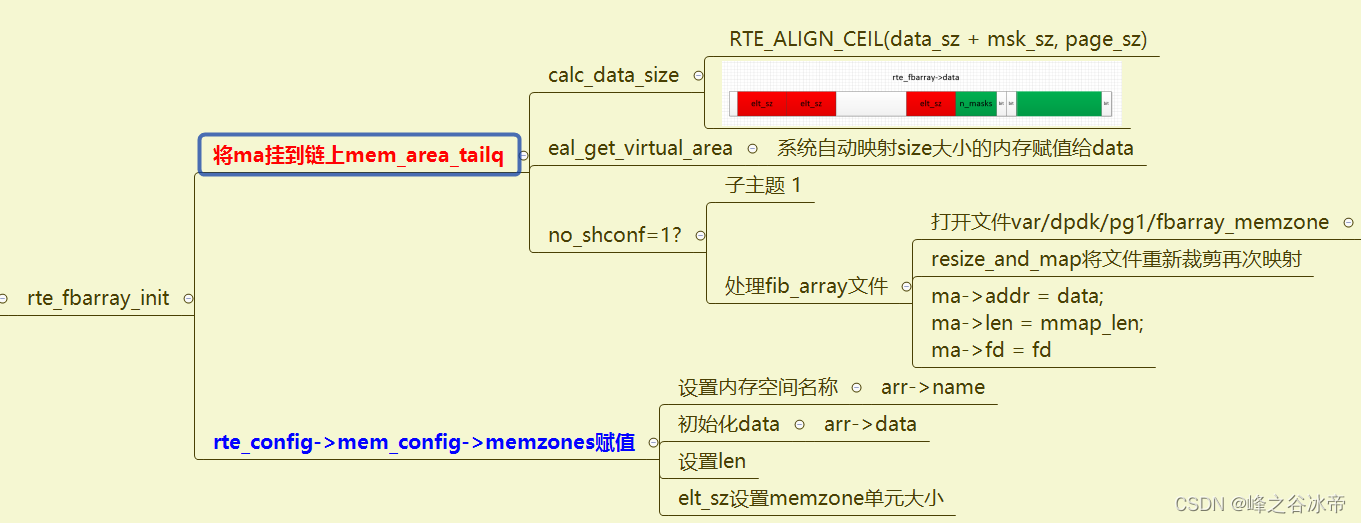

1 rte_eal_memzone_init

1.1 memzone storage architecture diagram

1.2 Function walkthrough

```c
int
rte_eal_memzone_init(void)
{
	struct rte_mem_config *mcfg;
	int ret = 0;

	/* get pointer to global configuration */
	mcfg = rte_eal_get_configuration()->mem_config;

	rte_rwlock_write_lock(&mcfg->mlock);

	if (rte_eal_process_type() == RTE_PROC_PRIMARY &&
			rte_fbarray_init(&mcfg->memzones, "memzone",
			RTE_MAX_MEMZONE, sizeof(struct rte_memzone))) {
		RTE_LOG(ERR, EAL, "Cannot allocate memzone list\n");
		ret = -1;
	} else if (rte_eal_process_type() == RTE_PROC_SECONDARY &&
			rte_fbarray_attach(&mcfg->memzones)) {
		RTE_LOG(ERR, EAL, "Cannot attach to memzone list\n");
		ret = -1;
	}

	rte_rwlock_write_unlock(&mcfg->mlock);

	return ret;
}
```

static size_t

calc_data_size(size_t page_sz, unsigned int elt_sz, unsigned int len)

{

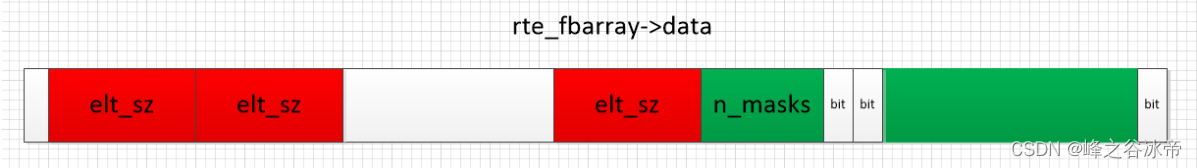

//len是指元素的个数,最终计算的是页对齐的数据和掩码的大小,结构如上图

size_t data_sz = elt_sz * len;

size_t msk_sz = calc_mask_size(len);

return RTE_ALIGN_CEIL(data_sz + msk_sz, page_sz);

}
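To make the layout concrete, here is a standalone sketch of the same size calculation. calc_mask_size is not part of the excerpt, so its body below is an assumption: one 64-bit mask word per 64 elements (matching the MASK_LEN_TO_IDX / MASK_ALIGN usage in rte_fbarray_init) plus a small header, modeled by a simplified struct used_mask. ALIGN_CEIL stands in for RTE_ALIGN_CEIL.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed fbarray constants: one 64-bit mask word tracks 64 elements. */
#define MASK_SHIFT 6
#define MASK_ALIGN (1u << MASK_SHIFT)
#define MASK_LEN_TO_IDX(x) ((x) >> MASK_SHIFT)

/* Round x up to a multiple of align; align must be a power of two.
 * Stand-in for RTE_ALIGN_CEIL. */
#define ALIGN_CEIL(x, align) \
	(((size_t)(x) + (align) - 1) & ~((size_t)(align) - 1))

/* Simplified used-mask header: word count followed by the bitmap. */
struct used_mask {
	unsigned int n_masks;
	uint64_t data[];
};

/* Assumed sizing: header plus enough 64-bit words for len elements. */
static size_t calc_mask_size(unsigned int len)
{
	size_t rounded = ALIGN_CEIL(len, MASK_ALIGN);

	return sizeof(struct used_mask) +
	       sizeof(uint64_t) * MASK_LEN_TO_IDX(rounded);
}

/* Same shape as the DPDK helper: data area + mask, page-aligned. */
static size_t calc_data_size(size_t page_sz, unsigned int elt_sz,
			     unsigned int len)
{
	size_t data_sz = (size_t)elt_sz * len;
	size_t msk_sz = calc_mask_size(len);

	assert(page_sz != 0 && (page_sz & (page_sz - 1)) == 0);
	return ALIGN_CEIL(data_sz + msk_sz, page_sz);
}
```

For example, with 4 KB pages, 8192 elements of a hypothetical 64-byte size need 512 KB of data plus roughly 1 KB of mask, so the reservation rounds up to 129 pages (528384 bytes).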

```c
int
rte_fbarray_init(struct rte_fbarray *arr, const char *name, unsigned int len,
		unsigned int elt_sz)
{
	size_t page_sz, mmap_len;
	char path[PATH_MAX];
	struct used_mask *msk;
	struct mem_area *ma = NULL;
	void *data = NULL;
	int fd = -1;

	if (arr == NULL) {
		rte_errno = EINVAL;
		return -1;
	}

	if (fully_validate(name, elt_sz, len))
		return -1;

	/* allocate mem area before doing anything */
	ma = malloc(sizeof(*ma));
	if (ma == NULL) {
		rte_errno = ENOMEM;
		return -1;
	}

	page_sz = sysconf(_SC_PAGESIZE);
	if (page_sz == (size_t)-1) {
		free(ma);
		return -1;
	}

	/* calculate our memory limits */
	// compute how much memory must be reserved (data + mask, page-aligned)
	mmap_len = calc_data_size(page_sz, elt_sz, len);

	// reserve an anonymous virtual area of mmap_len bytes
	data = eal_get_virtual_area(NULL, &mmap_len, page_sz, 0, 0);
	if (data == NULL) {
		free(ma);
		return -1;
	}

	rte_spinlock_lock(&mem_area_lock);

	fd = -1;

	if (internal_config.no_shconf) {
		/* remap virtual area as writable */
		void *new_data = mmap(data, mmap_len, PROT_READ | PROT_WRITE,
				MAP_FIXED | MAP_PRIVATE | MAP_ANONYMOUS, fd, 0);
		if (new_data == MAP_FAILED) {
			RTE_LOG(DEBUG, EAL, "%s(): couldn't remap anonymous memory: %s\n",
					__func__, strerror(errno));
			goto fail;
		}
	} else {
		// for name "memzone": path = /var/run/dpdk/pg1/fbarray_memzone
		eal_get_fbarray_path(path, sizeof(path), name);

		/*
		 * Each fbarray is unique to process namespace, i.e. the
		 * filename depends on process prefix. Try to take out a lock
		 * and see if we succeed. If we don't, someone else is using it
		 * already.
		 */
		fd = open(path, O_CREAT | O_RDWR, 0600);
		if (fd < 0) {
			RTE_LOG(DEBUG, EAL, "%s(): couldn't open %s: %s\n",
					__func__, path, strerror(errno));
			rte_errno = errno;
			goto fail;
		} else if (flock(fd, LOCK_EX | LOCK_NB)) {
			RTE_LOG(DEBUG, EAL, "%s(): couldn't lock %s: %s\n",
					__func__, path, strerror(errno));
			rte_errno = EBUSY;
			goto fail;
		}

		/* take out a non-exclusive lock, so that other processes could
		 * still attach to it, but no other process could reinitialize
		 * it.
		 */
		if (flock(fd, LOCK_SH | LOCK_NB)) {
			rte_errno = errno;
			goto fail;
		}

		// grow the file to mmap_len and map the fd over the reserved area
		if (resize_and_map(fd, data, mmap_len))
			goto fail;
	}
	ma->addr = data;
	ma->len = mmap_len; // data size + mask size, page-aligned
	ma->fd = fd;

	/* do not close fd - keep it until detach/destroy */
	// append ma to the mem_area_tailq list
	TAILQ_INSERT_TAIL(&mem_area_tailq, ma, next);

	/* initialize the data */
	memset(data, 0, mmap_len);

	/* populate data structure */
	// fill in the output parameter arr
	strlcpy(arr->name, name, sizeof(arr->name));
	arr->data = data;
	arr->len = len;
	arr->elt_sz = elt_sz;
	arr->count = 0;

	// the mask starts right after the len data elements
	msk = get_used_mask(data, elt_sz, len);
	// number of 64-bit mask words needed to cover len elements
	msk->n_masks = MASK_LEN_TO_IDX(RTE_ALIGN_CEIL(len, MASK_ALIGN));

	rte_rwlock_init(&arr->rwlock);

	rte_spinlock_unlock(&mem_area_lock);

	return 0;
fail:
	if (data)
		munmap(data, mmap_len);
	if (fd >= 0)
		close(fd);
	free(ma);

	rte_spinlock_unlock(&mem_area_lock);
	return -1;
}
```
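The shared-config branch follows a common POSIX pattern: reserve address space, take an exclusive non-blocking flock to make sure no other process is already using the file, downgrade to a shared lock so other processes can still attach, then size the file and map it over the reservation. The sketch below reproduces that pattern with plain Linux calls; it is not DPDK code. reserve_va and map_backing_file are hypothetical helpers, the PROT_NONE reservation only approximates eal_get_virtual_area, and resize_and_map is assumed to amount to ftruncate plus a MAP_SHARED | MAP_FIXED mmap.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/file.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: reserve len bytes of address space that cannot be
 * touched yet (rough analogue of eal_get_virtual_area with no hint). */
static void *reserve_va(size_t len)
{
	void *p = mmap(NULL, len, PROT_NONE,
		       MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
	return p == MAP_FAILED ? NULL : p;
}

/* Hypothetical helper following the steps of rte_fbarray_init: lock the
 * file exclusively, downgrade to a shared lock, size it, map it over the
 * reservation. Returns the mapping (or NULL); *fd_out keeps the open fd,
 * because the flock lives only as long as the fd does. */
static void *map_backing_file(const char *path, size_t len, int *fd_out)
{
	void *va = reserve_va(len);
	int fd;

	if (va == NULL)
		return NULL;
	fd = open(path, O_CREAT | O_RDWR, 0600);
	if (fd < 0)
		goto fail_unmap;
	if (flock(fd, LOCK_EX | LOCK_NB) != 0)  /* someone else owns it */
		goto fail_close;
	if (flock(fd, LOCK_SH | LOCK_NB) != 0)  /* let others attach later */
		goto fail_close;
	if (ftruncate(fd, (off_t)len) != 0)
		goto fail_close;
	/* MAP_FIXED replaces the PROT_NONE reservation in place */
	if (mmap(va, len, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_FIXED,
		 fd, 0) == MAP_FAILED)
		goto fail_close;
	*fd_out = fd;
	return va;

fail_close:
	close(fd);
fail_unmap:
	munmap(va, len);
	return NULL;
}
```

Keeping the fd open matters: flock locks belong to the open file, so closing the fd would drop the shared lock that stops another primary process from reinitializing the array, which is presumably why the code stores fd in ma->fd instead of closing it.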

1.3 Summary

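This code does two main things:

1. It reserves a virtual memory area for the memzone fbarray and, unless no_shconf is set, maps the fd of its backing file (/var/run/dpdk/pg1/fbarray_memzone) onto that area.

2. It allocates a mem_area record describing the mapping and appends it to the mem_area_tailq list.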
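The bookkeeping in point 2 uses the BSD TAILQ macros. The sketch below shows the same insert-at-tail pattern with glibc's <sys/queue.h>; struct mem_area is a simplified copy of the fields assigned in rte_fbarray_init, and find_area / untrack_all are hypothetical helpers showing how such a list could later be searched and torn down.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <sys/queue.h>

/* Simplified record: one entry per fbarray mapping. */
struct mem_area {
	TAILQ_ENTRY(mem_area) next;
	void *addr;
	size_t len;
	int fd;
};

TAILQ_HEAD(mem_area_head, mem_area);

/* Append a record, as TAILQ_INSERT_TAIL does in rte_fbarray_init. */
static struct mem_area *track_area(struct mem_area_head *head, void *addr,
				   size_t len, int fd)
{
	struct mem_area *ma = malloc(sizeof(*ma));

	if (ma == NULL)
		return NULL;
	ma->addr = addr;
	ma->len = len;
	ma->fd = fd;
	TAILQ_INSERT_TAIL(head, ma, next);
	return ma;
}

/* Hypothetical lookup by start address, as a detach path would need. */
static struct mem_area *find_area(struct mem_area_head *head,
				  const void *addr)
{
	struct mem_area *ma;

	TAILQ_FOREACH(ma, head, next) {
		if (ma->addr == addr)
			return ma;
	}
	return NULL;
}

/* Hypothetical teardown: unlink and free every record. */
static void untrack_all(struct mem_area_head *head)
{
	struct mem_area *ma;

	while ((ma = TAILQ_FIRST(head)) != NULL) {
		TAILQ_REMOVE(head, ma, next);
		free(ma);
	}
}
```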

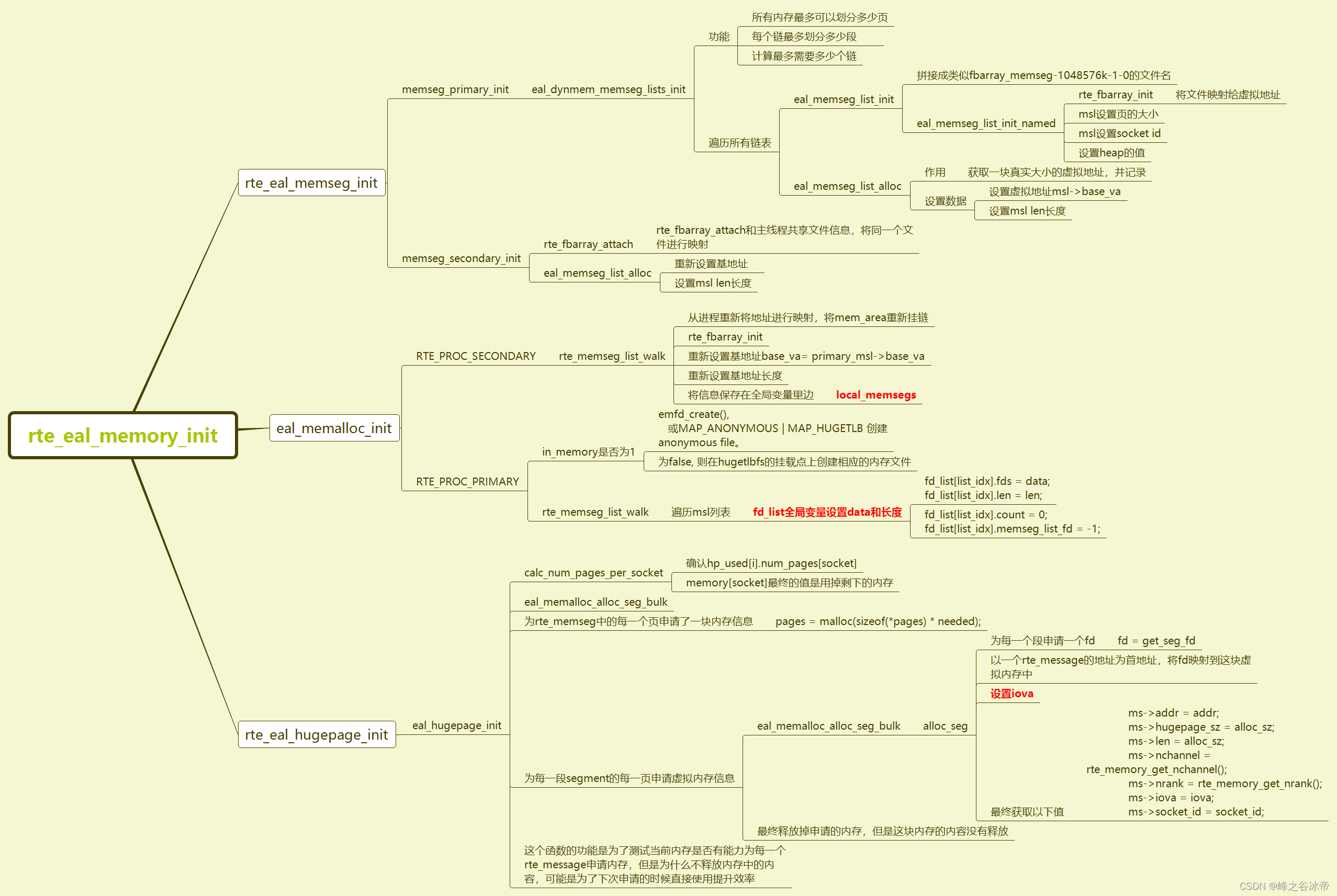

2 rte_eal_memory_init


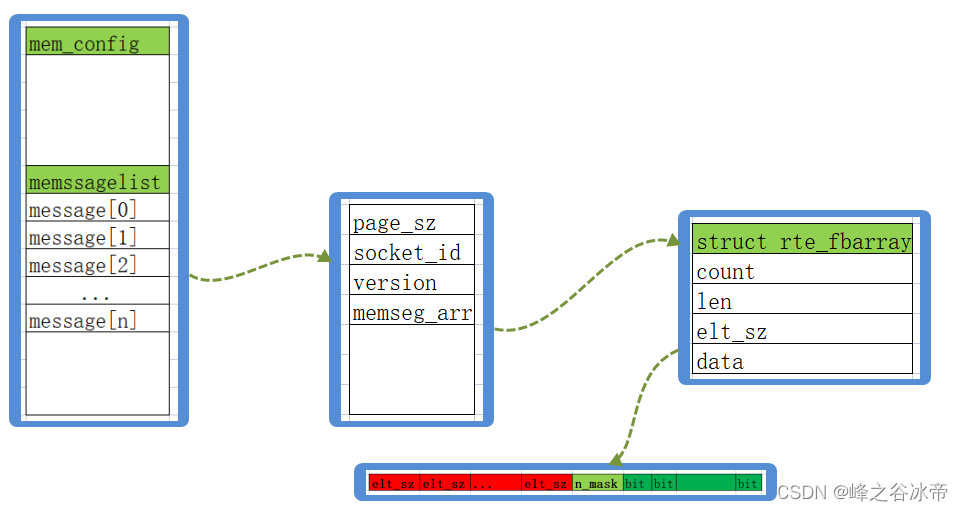

2.1 memseg storage architecture diagram

2.2 Function walkthrough

Call chain:

rte_eal_memory_init->rte_eal_memseg_init->memseg_primary_init

```c
static int __rte_unused
memseg_primary_init(void)
{
	struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;
	struct memtype {
		uint64_t page_sz;
		int socket_id;
	} *memtypes = NULL;
	int i, hpi_idx, msl_idx, ret = -1; /* fail unless told to succeed */
	struct rte_memseg_list *msl;
	uint64_t max_mem, max_mem_per_type;
	unsigned int max_seglists_per_type;
	unsigned int n_memtypes, cur_type;

	/* no-huge does not need this at all */
	if (internal_config.no_hugetlbfs)
		return 0;

	/*
	 * figuring out amount of memory we're going to have is a long and very
	 * involved process. the basic element we're operating with is a memory
	 * type, defined as a combination of NUMA node ID and page size (so that
	 * e.g. 2 sockets with 2 page sizes yield 4 memory types in total).
	 *
	 * deciding amount of memory going towards each memory type is a
	 * balancing act between maximum segments per type, maximum memory per
	 * type, and number of detected NUMA nodes. the goal is to make sure
	 * each memory type gets at least one memseg list.
	 *
	 * the total amount of memory is limited by RTE_MAX_MEM_MB value.
	 *
	 * the total amount of memory per type is limited by either
	 * RTE_MAX_MEM_MB_PER_TYPE, or by RTE_MAX_MEM_MB divided by the number
	 * of detected NUMA nodes. additionally, maximum number of segments per
	 * type is also limited by RTE_MAX_MEMSEG_PER_TYPE. this is because for
	 * smaller page sizes, it can take hundreds of thousands of segments to
	 * reach the above specified per-type memory limits.
	 *
	 * additionally, each type may have multiple memseg lists associated
	 * with it, each limited by either RTE_MAX_MEM_MB_PER_LIST for bigger
	 * page sizes, or RTE_MAX_MEMSEG_PER_LIST segments for smaller ones.
	 *
	 * the number of memseg lists per type is decided based on the above
	 * limits, and also taking number of detected NUMA nodes, to make sure
	 * that we don't run out of memseg lists before we populate all NUMA
	 * nodes with memory.
	 *
	 * we do this in three stages. first, we collect the number of types.
	 * then, we figure out memory constraints and populate the list of
	 * would-be memseg lists. then, we go ahead and allocate the memseg
	 * lists.
	 */

	/* create space for mem types */
	// only one socket (with one hugepage size) is used on this machine,
	// so n_memtypes is 1 here
	n_memtypes = internal_config.num_hugepage_sizes * rte_socket_count();
	memtypes = calloc(n_memtypes, sizeof(*memtypes));
	if (memtypes == NULL) {
		RTE_LOG(ERR, EAL, "Cannot allocate space for memory types\n");
		return -1;
	}

	/* populate mem types */
	cur_type = 0;
	for (hpi_idx = 0; hpi_idx < (int) internal_config.num_hugepage_sizes;
			hpi_idx++) {
		struct hugepage_info *hpi;
		uint64_t hugepage_sz;

		hpi = &internal_config.hugepage_info[hpi_idx];
		hugepage_sz = hpi->hugepage_sz;

		for (i = 0; i < (int) rte_socket_count(); i++, cur_type++) {
			int socket_id = rte_socket_id_by_idx(i);

#ifndef RTE_EAL_NUMA_AWARE_HUGEPAGES
			/* we can still sort pages by socket in legacy mode */
			if (!internal_config.legacy_mem && socket_id > 0)
				break;
#endif
			memtypes[cur_type].page_sz = hugepage_sz;
			memtypes[cur_type].socket_id = socket_id;

			RTE_LOG(DEBUG, EAL, "Detected memory type: "
				"socket_id:%u hugepage_sz:%" PRIu64 "\n",
				socket_id, hugepage_sz);
		}
	}
	/* number of memtypes could have been lower due to no NUMA support */
	n_memtypes = cur_type;

	/* set up limits for types */
	max_mem = (uint64_t)RTE_MAX_MEM_MB << 20;
	// max_mem_per_type = 128G
	max_mem_per_type = RTE_MIN((uint64_t)RTE_MAX_MEM_MB_PER_TYPE << 20,
			max_mem / n_memtypes);
	/*
	 * limit maximum number of segment lists per type to ensure there's
	 * space for memseg lists for all NUMA nodes with all page sizes
	 */
	// max_seglists_per_type = 64
	max_seglists_per_type = RTE_MAX_MEMSEG_LISTS / n_memtypes;

	if (max_seglists_per_type == 0) {
		RTE_LOG(ERR, EAL, "Cannot accommodate all memory types, please increase %s\n",
			RTE_STR(CONFIG_RTE_MAX_MEMSEG_LISTS));
		goto out;
	}

	/* go through all mem types and create segment lists */
	msl_idx = 0;
	for (cur_type = 0; cur_type < n_memtypes; cur_type++) {
		unsigned int cur_seglist, n_seglists, n_segs;
		unsigned int max_segs_per_type, max_segs_per_list;
		struct memtype *type = &memtypes[cur_type];
		uint64_t max_mem_per_list, pagesz;
		int socket_id;

		pagesz = type->page_sz;
		socket_id = type->socket_id;

		/*
		 * we need to create segment lists for this type. we must take
		 * into account the following things:
		 *
		 * 1. total amount of memory we can use for this memory type
		 * 2. total amount of memory per memseg list allowed
		 * 3. number of segments needed to fit the amount of memory
		 * 4. number of segments allowed per type
		 * 5. number of segments allowed per memseg list
		 * 6. number of memseg lists we are allowed to take up
		 */

		/* calculate how much segments we will need in total */
		/*
		 * The code below splits the memory of this type into several
		 * segment lists and works out how many pages each list holds
		 * and how large each page is; the resulting layout is shown in
		 * the figure above. The values in the comments assume 2 MB
		 * pages.
		 */
		// max_segs_per_type: 65536 pages (128 GB / 2 MB)
		max_segs_per_type = max_mem_per_type / pagesz;
		/* limit number of segments to maximum allowed per type */
		// max_segs_per_type: 32768 pages
		max_segs_per_type = RTE_MIN(max_segs_per_type,
				(unsigned int)RTE_MAX_MEMSEG_PER_TYPE);
		/* limit number of segments to maximum allowed per list */
		// max_segs_per_list: 8192 pages
		max_segs_per_list = RTE_MIN(max_segs_per_type,
				(unsigned int)RTE_MAX_MEMSEG_PER_LIST);

		/* calculate how much memory we can have per segment list */
		// max_mem_per_list: 17179869184 (16 GB)
		max_mem_per_list = RTE_MIN(max_segs_per_list * pagesz,
				(uint64_t)RTE_MAX_MEM_MB_PER_LIST << 20);

		/* calculate how many segments each segment list will have */
		// n_segs: 8192
		n_segs = RTE_MIN(max_segs_per_list, max_mem_per_list / pagesz);

		/* calculate how many segment lists we can have */
		// n_seglists: 4
		n_seglists = RTE_MIN(max_segs_per_type / n_segs,
				max_mem_per_type / max_mem_per_list);

		/* limit number of segment lists according to our maximum */
		// n_seglists: 4
		n_seglists = RTE_MIN(n_seglists, max_seglists_per_type);

		RTE_LOG(DEBUG, EAL, "Creating %i segment lists: "
				"n_segs:%i socket_id:%i hugepage_sz:%" PRIu64 "\n",
			n_seglists, n_segs, socket_id, pagesz);

		/* create all segment lists */
		for (cur_seglist = 0; cur_seglist < n_seglists; cur_seglist++) {
			if (msl_idx >= RTE_MAX_MEMSEG_LISTS) {
				RTE_LOG(ERR, EAL,
					"No more space in memseg lists, please increase %s\n",
					RTE_STR(CONFIG_RTE_MAX_MEMSEG_LISTS));
				goto out;
			}
			msl = &mcfg->memsegs[msl_idx++];

			// the memory is split into four lists, so each one is set
			// up here: mainly its base virtual address, length and page
			// count (both functions are walked through below)
			if (alloc_memseg_list(msl, pagesz, n_segs,
					socket_id, cur_seglist))
				goto out;

			if (alloc_va_space(msl)) {
				RTE_LOG(ERR, EAL, "Cannot allocate VA space for memseg list\n");
				goto out;
			}
		}
	}
	/* we're successful */
	ret = 0;
out:
	free(memtypes);
	return ret;
}
```
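The per-type arithmetic annotated above can be checked with a small standalone program. The limits below are assumptions: 128 GB per type, 32768 segments per type, 8192 segments per list and 64 lists are taken from the values noted in the comments, while the 32 GB per-list cap and the 512 GB total are assumed build defaults, so check them against your DPDK configuration. plan_type is a hypothetical helper that repeats the RTE_MIN chain from the loop body (computing in 64 bits before narrowing).

```c
#include <assert.h>
#include <stdint.h>

/* Assumed build-time limits; verify against your DPDK configuration. */
#define MAX_MEM_MB          524288ULL /* RTE_MAX_MEM_MB (assumed)          */
#define MAX_MEM_MB_PER_TYPE 131072ULL /* RTE_MAX_MEM_MB_PER_TYPE (128 GB)  */
#define MAX_MEM_MB_PER_LIST 32768ULL  /* RTE_MAX_MEM_MB_PER_LIST (assumed) */
#define MAX_MEMSEG_PER_TYPE 32768u    /* RTE_MAX_MEMSEG_PER_TYPE           */
#define MAX_MEMSEG_PER_LIST 8192u     /* RTE_MAX_MEMSEG_PER_LIST           */
#define MAX_MEMSEG_LISTS    64u       /* RTE_MAX_MEMSEG_LISTS              */

#define MIN(a, b) ((a) < (b) ? (a) : (b))

struct seglist_plan {
	unsigned int n_segs;     /* pages per memseg list */
	unsigned int n_seglists; /* memseg lists for this memory type */
	uint64_t mem_per_list;   /* bytes of VA covered by one list */
};

/* Hypothetical helper: the sizing chain for one memory type. */
static struct seglist_plan plan_type(uint64_t pagesz, unsigned int n_memtypes)
{
	uint64_t max_mem = MAX_MEM_MB << 20;
	uint64_t max_mem_per_type = MIN(MAX_MEM_MB_PER_TYPE << 20,
					max_mem / n_memtypes);
	unsigned int max_seglists_per_type = MAX_MEMSEG_LISTS / n_memtypes;
	unsigned int max_segs_per_type, max_segs_per_list;
	struct seglist_plan p;

	/* pages needed to cover the per-type memory, capped per type */
	max_segs_per_type = (unsigned int)MIN(max_mem_per_type / pagesz,
					      (uint64_t)MAX_MEMSEG_PER_TYPE);
	/* pages per list, capped per list */
	max_segs_per_list = MIN(max_segs_per_type, MAX_MEMSEG_PER_LIST);
	/* memory per list: page-count cap or byte cap, whichever is smaller */
	p.mem_per_list = MIN((uint64_t)max_segs_per_list * pagesz,
			     MAX_MEM_MB_PER_LIST << 20);
	p.n_segs = (unsigned int)MIN((uint64_t)max_segs_per_list,
				     p.mem_per_list / pagesz);
	/* number of lists: limited by segments and by bytes per type */
	p.n_seglists = (unsigned int)MIN((uint64_t)(max_segs_per_type / p.n_segs),
					 max_mem_per_type / p.mem_per_list);
	p.n_seglists = MIN(p.n_seglists, max_seglists_per_type);
	return p;
}
```

With 2 MB pages and a single memory type this yields 4 lists of 8192 pages (16 GB of VA each), matching the comments above. With 1 GB pages the per-list byte cap dominates instead, giving 4 lists of 32 pages.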

```c
static int
alloc_memseg_list(struct rte_memseg_list *msl, uint64_t page_sz,
		int n_segs, int socket_id, int type_msl_idx)
{
	char name[RTE_FBARRAY_NAME_LEN];

	snprintf(name, sizeof(name), MEMSEG_LIST_FMT, page_sz >> 10, socket_id,
		 type_msl_idx);
	// create an fbarray of n_segs elements of sizeof(struct rte_memseg)
	// in msl->memseg_arr: this reserves and maps the array's memory and
	// adds a mem_area entry to the mem_area_tailq list
	if (rte_fbarray_init(&msl->memseg_arr, name, n_segs,
			sizeof(struct rte_memseg))) {
		RTE_LOG(ERR, EAL, "Cannot allocate memseg list: %s\n",
			rte_strerror(rte_errno));
		return -1;
	}

	// record the list's page size and socket id
	msl->page_sz = page_sz;
	msl->socket_id = socket_id;
	msl->base_va = NULL;
	msl->heap = 1; /* mark it as a heap segment */

	RTE_LOG(DEBUG, EAL, "Memseg list allocated: 0x%zxkB at socket %i\n",
			(size_t)page_sz >> 10, socket_id);

	return 0;
}
```
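Each list gets its own fbarray name. Assuming MEMSEG_LIST_FMT is defined as "memseg-%" PRIu64 "k-%i-%i" (the definition is not shown in the excerpt, so check your DPDK tree), the name encodes the page size in kB, the socket and the list's index within its type. memseg_list_name is a hypothetical wrapper around the same snprintf call.

```c
#include <assert.h>
#include <inttypes.h>
#include <stdio.h>
#include <string.h>

/* Assumed definition of MEMSEG_LIST_FMT (not shown in the excerpt). */
#define MEMSEG_LIST_FMT "memseg-%" PRIu64 "k-%i-%i"

/* Hypothetical wrapper around the snprintf in alloc_memseg_list:
 * page size in kB, socket id, index of the list within its type. */
static void memseg_list_name(char *buf, size_t sz, uint64_t page_sz,
			     int socket_id, int type_msl_idx)
{
	snprintf(buf, sz, MEMSEG_LIST_FMT, page_sz >> 10, socket_id,
		 type_msl_idx);
}
```

So with 2 MB pages on socket 0 the four lists would be named memseg-2048k-0-0 through memseg-2048k-0-3, and, following the fbarray_memzone path pattern from section 1, their backing files would presumably sit in the same runtime directory.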

```c
static int
alloc_va_space(struct rte_memseg_list *msl)
{
	uint64_t page_sz;
	size_t mem_sz;
	void *addr;
	int flags = 0;

	page_sz = msl->page_sz;
	mem_sz = page_sz * msl->memseg_arr.len; // 8192 pages * 2 MB = 16 GB here

	// msl->base_va is still NULL at this point, so a new anonymous
	// virtual area is reserved
	addr = eal_get_virtual_area(msl->base_va, &mem_sz, page_sz, 0, flags);
	if (addr == NULL) {
		if (rte_errno == EADDRNOTAVAIL)
			RTE_LOG(ERR, EAL, "Could not mmap %llu bytes at [%p] - "
				"please use '--" OPT_BASE_VIRTADDR "' option\n",
				(unsigned long long)mem_sz, msl->base_va);
		else
			RTE_LOG(ERR, EAL, "Cannot reserve memory\n");
		return -1;
	}
	// store the reserved area's start address and size in the list
	msl->base_va = addr;
	msl->len = mem_sz;

	return 0;
}
```

Call chain: eal_memalloc_init->fd_list_create_walk

```c
static int
fd_list_create_walk(const struct rte_memseg_list *msl,
		void *arg __rte_unused)
{
	struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;
	unsigned int len;
	int msl_idx;

	if (msl->external)
		return 0;

	msl_idx = msl - mcfg->memsegs;
	len = msl->memseg_arr.len;

	return alloc_list(msl_idx, len);
}
```

```c
static int
alloc_list(int list_idx, int len)
{
	int *data;
	int i;

	/* single-file segments mode does not need fd list */
	if (!internal_config.single_file_segments) {
		/* ensure we have space to store fd per each possible segment */
		data = malloc(sizeof(int) * len);
		if (data == NULL) {
			RTE_LOG(ERR, EAL, "Unable to allocate space for file descriptors\n");
			return -1;
		}
		/* set all fd's as invalid */
		for (i = 0; i < len; i++)
			data[i] = -1;

		// store the per-segment fd array (one slot per page) in fd_list
		fd_list[list_idx].fds = data;
		fd_list[list_idx].len = len;
	} else {
		fd_list[list_idx].fds = NULL;
		fd_list[list_idx].len = 0;
	}

	fd_list[list_idx].count = 0;
	fd_list[list_idx].memseg_list_fd = -1;

	return 0;
}
```

Call chain: rte_eal_hugepage_init->eal_hugepage_init

```c
static int
eal_hugepage_init(void)
{
	struct hugepage_info used_hp[MAX_HUGEPAGE_SIZES];
	uint64_t memory[RTE_MAX_NUMA_NODES];
	int hp_sz_idx, socket_id;

	memset(used_hp, 0, sizeof(used_hp));

	for (hp_sz_idx = 0;
			hp_sz_idx < (int) internal_config.num_hugepage_sizes;
			hp_sz_idx++) {
#ifndef RTE_ARCH_64
		struct hugepage_info dummy;
		unsigned int i;
#endif
		/* also initialize used_hp hugepage sizes in used_hp */
		struct hugepage_info *hpi;
		hpi = &internal_config.hugepage_info[hp_sz_idx];
		used_hp[hp_sz_idx].hugepage_sz = hpi->hugepage_sz;

#ifndef RTE_ARCH_64
		/* for 32-bit, limit number of pages on socket to whatever we've
		 * preallocated, as we cannot allocate more.
		 */
		memset(&dummy, 0, sizeof(dummy));
		dummy.hugepage_sz = hpi->hugepage_sz;
		if (rte_memseg_list_walk(hugepage_count_walk, &dummy) < 0)
			return -1;

		for (i = 0; i < RTE_DIM(dummy.num_pages); i++) {
			hpi->num_pages[i] = RTE_MIN(hpi->num_pages[i],
					dummy.num_pages[i]);
			printf("%s[%d] numpage:%u\r\n", __func__, __LINE__, hpi->num_pages[i]);
		}
#endif
	}

	/* make a copy of socket_mem, needed for balanced allocation. */
	for (hp_sz_idx = 0; hp_sz_idx < RTE_MAX_NUMA_NODES; hp_sz_idx++)
		memory[hp_sz_idx] = internal_config.socket_mem[hp_sz_idx];

	/* calculate final number of pages */
	// work out how many hugepages used_hp needs; afterwards memory[]
	// holds the amount that is still unassigned
	if (calc_num_pages_per_socket(memory,
			internal_config.hugepage_info, used_hp,
			internal_config.num_hugepage_sizes) < 0)
		return -1;

	for (hp_sz_idx = 0;
			hp_sz_idx < (int)internal_config.num_hugepage_sizes;
			hp_sz_idx++) {
		for (socket_id = 0; socket_id < RTE_MAX_NUMA_NODES;
				socket_id++) {
			struct rte_memseg **pages;
			struct hugepage_info *hpi = &used_hp[hp_sz_idx];
			unsigned int num_pages = hpi->num_pages[socket_id];
			unsigned int num_pages_alloc;

			if (num_pages == 0)
				continue;

			RTE_LOG(DEBUG, EAL, "Allocating %u pages of size %" PRIu64 "M on socket %i\n",
				num_pages, hpi->hugepage_sz >> 20, socket_id);

			/* we may not be able to allocate all pages in one go,
			 * because we break up our memory map into multiple
			 * memseg lists. therefore, try allocating multiple
			 * times and see if we can get the desired number of
			 * pages from multiple allocations.
			 */

			num_pages_alloc = 0;
			do {
				int i, cur_pages, needed;

				needed = num_pages - num_pages_alloc;

				// allocate a flat array of rte_memseg pointers
				pages = malloc(sizeof(*pages) * needed);

				/* do not request exact number of pages */
				// map hugepages for the segments and store a pointer
				// to each segment's rte_memseg in pages
				cur_pages = eal_memalloc_alloc_seg_bulk(pages,
						needed, hpi->hugepage_sz,
						socket_id, false);
				if (cur_pages <= 0) {
					free(pages);
					return -1;
				}

				/* mark preallocated pages as unfreeable */
				for (i = 0; i < cur_pages; i++) {
					struct rte_memseg *ms = pages[i];
					ms->flags |= RTE_MEMSEG_FLAG_DO_NOT_FREE;
				}
				// only the temporary pointer array is freed; the
				// segments it points to stay allocated
				free(pages);

				num_pages_alloc += cur_pages;
			} while (num_pages_alloc != num_pages);
		}
	}
	/* if socket limits were specified, set them */
	if (internal_config.force_socket_limits) {
		unsigned int i;
		for (i = 0; i < RTE_MAX_NUMA_NODES; i++) {
			uint64_t limit = internal_config.socket_limit[i];
			if (limit == 0)
				continue;
			if (rte_mem_alloc_validator_register("socket-limit",
					limits_callback, i, limit))
				RTE_LOG(ERR, EAL, "Failed to register socket limits validator callback\n");
		}
	}
	return 0;
}
```
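The do/while loop exists because one eal_memalloc_alloc_seg_bulk call with exact == false fills at most one free run in one memseg list, so a request can take several rounds. The model below is hypothetical and ignores real page mapping: each list is reduced to a count of free slots, alloc_best_effort stands in for the bulk allocator, and prealloc_pages repeats the loop, giving up on the first round that returns nothing.

```c
#include <assert.h>

/* Hypothetical model of one best-effort bulk call: take as many pages
 * as fit in the first list that still has free slots, then stop, like
 * the list walk stopping at the first list that yields a page. */
static int alloc_best_effort(unsigned int *free_slots, int n_lists,
			     unsigned int needed)
{
	for (int i = 0; i < n_lists; i++) {
		unsigned int got;

		if (free_slots[i] == 0)
			continue;
		got = needed < free_slots[i] ? needed : free_slots[i];
		free_slots[i] -= got;
		return (int)got;
	}
	return -1; /* no list could provide a page */
}

/* Mirror of the do/while in eal_hugepage_init: request whatever is
 * still missing and fail on the first round that makes no progress. */
static int prealloc_pages(unsigned int *free_slots, int n_lists,
			  unsigned int num_pages, int *rounds)
{
	unsigned int num_alloc = 0;

	*rounds = 0;
	if (num_pages == 0)
		return 0; /* the real loop skips such sockets */
	do {
		int cur = alloc_best_effort(free_slots, n_lists,
					    num_pages - num_alloc);
		if (cur <= 0)
			return -1;
		num_alloc += (unsigned int)cur;
		(*rounds)++;
	} while (num_alloc != num_pages);
	return 0;
}
```

For instance, lists with 3, 5 and 2 free slots satisfy a request for 8 pages in two rounds (3 + 5), leaving the third list untouched.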

```c
int
eal_memalloc_alloc_seg_bulk(struct rte_memseg **ms, int n_segs, size_t page_sz,
		int socket, bool exact)
{
	int i, ret = -1;
#ifdef RTE_EAL_NUMA_AWARE_HUGEPAGES
	bool have_numa = false;
	int oldpolicy;
	struct bitmask *oldmask;
#endif
	struct alloc_walk_param wa;
	struct hugepage_info *hi = NULL;

	memset(&wa, 0, sizeof(wa));

	/* dynamic allocation not supported in legacy mode */
	if (internal_config.legacy_mem)
		return -1;

	for (i = 0; i < (int) RTE_DIM(internal_config.hugepage_info); i++) {
		if (page_sz ==
				internal_config.hugepage_info[i].hugepage_sz) {
			hi = &internal_config.hugepage_info[i];
			break;
		}
	}
	if (!hi) {
		RTE_LOG(ERR, EAL, "%s(): can't find relevant hugepage_info entry\n",
			__func__);
		return -1;
	}

#ifdef RTE_EAL_NUMA_AWARE_HUGEPAGES
	if (check_numa()) {
		oldmask = numa_allocate_nodemask();
		prepare_numa(&oldpolicy, oldmask, socket);
		have_numa = true;
	}
#endif

	wa.exact = exact;
	wa.hi = hi;
	// the caller's pages array is stored in wa; alloc_seg_walk fills it in
	wa.ms = ms;
	wa.n_segs = n_segs;
	wa.page_sz = page_sz;
	wa.socket = socket;
	wa.segs_allocated = 0;

	/* memalloc is locked, so it's safe to use thread-unsafe version */
	// walk all memseg lists
	ret = rte_memseg_list_walk_thread_unsafe(alloc_seg_walk, &wa);
	if (ret == 0) {
		RTE_LOG(ERR, EAL, "%s(): couldn't find suitable memseg_list\n",
			__func__);
		ret = -1;
	} else if (ret > 0) {
		ret = (int)wa.segs_allocated;
	}

#ifdef RTE_EAL_NUMA_AWARE_HUGEPAGES
	if (have_numa)
		restore_numa(&oldpolicy, oldmask);
#endif
	return ret;
}
```
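The return-value handling relies on the walk convention used here: the callback returns 0 to move on to the next list, a positive value to stop after success, and a negative value to abort, so a walk result of 0 means no list matched. The sketch below models that convention with hypothetical types (fake_list, list_walk, match_walk); skipping lists whose base_va is NULL is an assumption about how unused slots are treated, not something shown in the excerpt.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified list descriptor. */
struct fake_list {
	void *base_va; /* NULL: slot unused (assumed to be skipped) */
	size_t page_sz;
	int socket_id;
};

typedef int (*list_walk_t)(const struct fake_list *l, void *arg);

/* Walk convention: 0 = continue, >0 = stop with success, <0 = abort. */
static int list_walk(const struct fake_list *lists, int n,
		     list_walk_t func, void *arg)
{
	for (int i = 0; i < n; i++) {
		int ret;

		if (lists[i].base_va == NULL)
			continue;
		ret = func(&lists[i], arg);
		if (ret < 0)
			return -1;
		if (ret > 0)
			return 1;
	}
	return 0; /* no callback asked to stop */
}

struct match_arg {
	size_t page_sz;
	int socket;
	const struct fake_list *found;
};

/* Like the first checks in alloc_seg_walk: skip lists with the wrong
 * page size or socket, stop at the first one that matches. */
static int match_walk(const struct fake_list *l, void *arg)
{
	struct match_arg *m = arg;

	if (l->page_sz != m->page_sz || l->socket_id != m->socket)
		return 0;
	m->found = l;
	return 1;
}
```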

```c
static int
alloc_seg_walk(const struct rte_memseg_list *msl, void *arg)
{
	struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;
	struct alloc_walk_param *wa = arg;
	struct rte_memseg_list *cur_msl;
	size_t page_sz;
	int cur_idx, start_idx, j, dir_fd = -1;
	unsigned int msl_idx, need, i;

	if (msl->page_sz != wa->page_sz)
		return 0;
	if (msl->socket_id != wa->socket)
		return 0;

	page_sz = (size_t)msl->page_sz;

	msl_idx = msl - mcfg->memsegs;
	cur_msl = &mcfg->memsegs[msl_idx];

	need = wa->n_segs;

	/* try finding space in memseg list */
	if (wa->exact) {
		/* if we require exact number of pages in a list, find them */
		cur_idx = rte_fbarray_find_next_n_free(&cur_msl->memseg_arr, 0,
				need);
		if (cur_idx < 0)
			return 0;
		start_idx = cur_idx;
	} else {
		int cur_len;

		/* we don't require exact number of pages, so we're going to go
		 * for best-effort allocation. that means finding the biggest
		 * unused block, and going with that.
		 */
		cur_idx = rte_fbarray_find_biggest_free(&cur_msl->memseg_arr,
				0);
		if (cur_idx < 0)
			return 0;
		start_idx = cur_idx;
		/* adjust the size to possibly be smaller than original
		 * request, but do not allow it to be bigger.
		 */
		cur_len = rte_fbarray_find_contig_free(&cur_msl->memseg_arr,
				cur_idx);
		need = RTE_MIN(need, (unsigned int)cur_len);
	}

	/* do not allow any page allocations during the time we're allocating,
	 * because file creation and locking operations are not atomic,
	 * and we might be the first or the last ones to use a particular page,
	 * so we need to ensure atomicity of every operation.
	 *
	 * during init, we already hold a write lock, so don't try to take out
	 * another one.
	 */
	if (wa->hi->lock_descriptor == -1 && !internal_config.in_memory) {
		dir_fd = open(wa->hi->hugedir, O_RDONLY);
		if (dir_fd < 0) {
			RTE_LOG(ERR, EAL, "%s(): Cannot open '%s': %s\n",
				__func__, wa->hi->hugedir, strerror(errno));
			return -1;
		}
		/* blocking writelock */
		if (flock(dir_fd, LOCK_EX)) {
			RTE_LOG(ERR, EAL, "%s(): Cannot lock '%s': %s\n",
				__func__, wa->hi->hugedir, strerror(errno));
			close(dir_fd);
			return -1;
		}
	}

	for (i = 0; i < need; i++, cur_idx++) {
		struct rte_memseg *cur;
		void *map_addr;

		// get the rte_memseg element for this segment
		cur = rte_fbarray_get(&cur_msl->memseg_arr, cur_idx);
		map_addr = RTE_PTR_ADD(cur_msl->base_va,
				cur_idx * page_sz);
		// map_addr is base_va offset by cur_idx pages, i.e. the start
		// address of the page this rte_memseg describes
		if (alloc_seg(cur, map_addr, wa->socket, wa->hi,
				msl_idx, cur_idx)) {
			RTE_LOG(DEBUG, EAL, "attempted to allocate %i segments, but only %i were allocated\n",
				need, i);

			/* if exact number wasn't requested, stop */
			if (!wa->exact)
				goto out;

			/* clean up */
			for (j = start_idx; j < cur_idx; j++) {
				struct rte_memseg *tmp;
				struct rte_fbarray *arr =
						&cur_msl->memseg_arr;

				tmp = rte_fbarray_get(arr, j);
				rte_fbarray_set_free(arr, j);

				/* free_seg may attempt to create a file, which
				 * may fail.
				 */
				if (free_seg(tmp, wa->hi, msl_idx, j))
					RTE_LOG(DEBUG, EAL, "Cannot free page\n");
			}
			/* clear the list */
			if (wa->ms)
				memset(wa->ms, 0, sizeof(*wa->ms) * wa->n_segs);

			if (dir_fd >= 0)
				close(dir_fd);
			return -1;
		}
		if (wa->ms)
			wa->ms[i] = cur;

		rte_fbarray_set_used(&cur_msl->memseg_arr, cur_idx);
	}
out:
	wa->segs_allocated = i;
	if (i > 0)
		cur_msl->version++;
	if (dir_fd >= 0)
		close(dir_fd);
	/* if we didn't allocate any segments, move on to the next list */
	return i > 0;
}
```
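In the best-effort branch the walk picks the largest free run in the list and shrinks the request to fit it, never enlarging it. The sketch below mimics that with a plain bool array; find_biggest_free and take_best_effort are hypothetical stand-ins for rte_fbarray_find_biggest_free and rte_fbarray_find_contig_free, and ties go to the lowest index here, which may differ from DPDK.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in: start of the longest run of free slots in
 * used[0..len), with its length in *run_len; -1 if all slots are used. */
static int find_biggest_free(const bool *used, int len, int *run_len)
{
	int best_start = -1, best_len = 0;
	int i = 0;

	while (i < len) {
		int start;

		if (used[i]) {
			i++;
			continue;
		}
		start = i;
		while (i < len && !used[i])
			i++;
		if (i - start > best_len) {
			best_len = i - start;
			best_start = start;
		}
	}
	*run_len = best_len;
	return best_start;
}

/* Best-effort request as in the !wa->exact branch: shrink the request
 * to the biggest run, never grow it, and mark those slots used. */
static int take_best_effort(bool *used, int len, int need, int *start)
{
	int run;
	int idx = find_biggest_free(used, len, &run);

	if (idx < 0)
		return 0;
	if (need > run)
		need = run;
	for (int i = 0; i < need; i++)
		used[idx + i] = true;
	*start = idx;
	return need;
}
```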

```c
static int
alloc_seg(struct rte_memseg *ms, void *addr, int socket_id,
		struct hugepage_info *hi, unsigned int list_idx,
		unsigned int seg_idx)
{
#ifdef RTE_EAL_NUMA_AWARE_HUGEPAGES
	int cur_socket_id = 0;
#endif
	uint64_t map_offset;
	rte_iova_t iova;
	void *va;
	char path[PATH_MAX];
	int ret = 0;
	int fd;
	size_t alloc_sz;
	int flags;
	void *new_addr;

	alloc_sz = hi->hugepage_sz;

	/* these are checked at init, but code analyzers don't know that */
	if (internal_config.in_memory && !anonymous_hugepages_supported) {
		RTE_LOG(ERR, EAL, "Anonymous hugepages not supported, in-memory mode cannot allocate memory\n");
		return -1;
	}
	if (internal_config.in_memory && !memfd_create_supported &&
			internal_config.single_file_segments) {
		RTE_LOG(ERR, EAL, "Single-file segments are not supported without memfd support\n");
		return -1;
	}

	/* in-memory without memfd is a special case */
	int mmap_flags;

	if (internal_config.in_memory && !memfd_create_supported) {
		const int in_memory_flags = MAP_HUGETLB | MAP_FIXED |
			MAP_PRIVATE | MAP_ANONYMOUS;
		int pagesz_flag;

		pagesz_flag = pagesz_flags(alloc_sz);
		fd = -1;
		mmap_flags = in_memory_flags | pagesz_flag;

		/* single-file segments codepath will never be active
		 * here because in-memory mode is incompatible with the
		 * fallback path, and it's stopped at EAL initialization
		 * stage.
		 */
		map_offset = 0;
	} else {
		/* takes out a read lock on segment or segment list */
		// get (create or open) the hugepage file fd for this segment
		fd = get_seg_fd(path, sizeof(path), hi, list_idx, seg_idx);
		if (fd < 0) {
			RTE_LOG(ERR, EAL, "Couldn't get fd on hugepage file\n");
			return -1;
		}

		if (internal_config.single_file_segments) {
			map_offset = seg_idx * alloc_sz;
			ret = resize_hugefile(fd, map_offset, alloc_sz, true);
			if (ret < 0)
				goto resized;

			fd_list[list_idx].count++;
		} else {
			map_offset = 0;
			if (ftruncate(fd, alloc_sz) < 0) {
				RTE_LOG(DEBUG, EAL, "%s(): ftruncate() failed: %s\n",
					__func__, strerror(errno));
				goto resized;
			}
			if (internal_config.hugepage_unlink &&
					!internal_config.in_memory) {
				if (unlink(path)) {
					RTE_LOG(DEBUG, EAL, "%s(): unlink() failed: %s\n",
						__func__, strerror(errno));
					goto resized;
				}
			}
		}
		mmap_flags = MAP_SHARED | MAP_POPULATE | MAP_FIXED;
	}

	/*
	 * map the segment, and populate page tables, the kernel fills
	 * this segment with zeros if it's a new page.
	 */
	// map one hugepage for this segment at the fixed address addr
	va = mmap(addr, alloc_sz, PROT_READ | PROT_WRITE, mmap_flags, fd,
			map_offset);

	if (va == MAP_FAILED) {
		RTE_LOG(DEBUG, EAL, "%s(): mmap() failed: %s\n", __func__,
			strerror(errno));
		/* mmap failed, but the previous region might have been
		 * unmapped anyway. try to remap it
		 */
		goto unmapped;
	}
	if (va != addr) {
		RTE_LOG(DEBUG, EAL, "%s(): wrong mmap() address\n", __func__);
		munmap(va, alloc_sz);
		goto resized;
	}

	/* In linux, hugetlb limitations, like cgroup, are
	 * enforced at fault time instead of mmap(), even
	 * with the option of MAP_POPULATE. Kernel will send
	 * a SIGBUS signal. To avoid to be killed, save stack
	 * environment here, if SIGBUS happens, we can jump
	 * back here.
	 */
	if (huge_wrap_sigsetjmp()) {
		RTE_LOG(DEBUG, EAL, "SIGBUS: Cannot mmap more hugepages of size %uMB\n",
			(unsigned int)(alloc_sz >> 20));
		goto mapped;
	}

	/* we need to trigger a write to the page to enforce page fault and
	 * ensure that page is accessible to us, but we can't overwrite value
	 * that is already there, so read the old value, and write it back.
	 * kernel populates the page with zeroes initially.
	 */
	*(volatile int *)addr = *(volatile int *)addr;

	// look up the page's IOVA (analyzed in a later post)
	iova = rte_mem_virt2iova(addr);
	if (iova == RTE_BAD_PHYS_ADDR) {
		RTE_LOG(DEBUG, EAL, "%s(): can't get IOVA addr\n",
			__func__);
		goto mapped;
	}

#ifdef RTE_EAL_NUMA_AWARE_HUGEPAGES
	ret = get_mempolicy(&cur_socket_id, NULL, 0, addr,
			    MPOL_F_NODE | MPOL_F_ADDR);
	if (ret < 0) {
		RTE_LOG(DEBUG, EAL, "%s(): get_mempolicy: %s\n",
			__func__, strerror(errno));
		goto mapped;
	} else if (cur_socket_id != socket_id) {
		RTE_LOG(DEBUG, EAL,
				"%s(): allocation happened on wrong socket (wanted %d, got %d)\n",
			__func__, socket_id, cur_socket_id);
		goto mapped;
	}
#else
	if (rte_socket_count() > 1)
		RTE_LOG(DEBUG, EAL, "%s(): not checking hugepage NUMA node.\n",
				__func__);
#endif

	// the page is mapped; record it in the rte_memseg
	ms->addr = addr;
	ms->hugepage_sz = alloc_sz;
	ms->len = alloc_sz;
	ms->nchannel = rte_memory_get_nchannel();
	ms->nrank = rte_memory_get_nrank();
	ms->iova = iova;
	ms->socket_id = socket_id;

	return 0;

mapped:
	munmap(addr, alloc_sz);
unmapped:
	flags = MAP_FIXED;
	new_addr = eal_get_virtual_area(addr, &alloc_sz, alloc_sz, 0, flags);
	if (new_addr != addr) {
		if (new_addr != NULL)
			munmap(new_addr, alloc_sz);
		/* we're leaving a hole in our virtual address space. if
		 * somebody else maps this hole now, we could accidentally
		 * override it in the future.
		 */
		RTE_LOG(CRIT, EAL, "Can't mmap holes in our virtual address space\n");
	}
	/* roll back the ref count */
	if (internal_config.single_file_segments)
		fd_list[list_idx].count--;
resized:
	/* some codepaths will return negative fd, so exit early */
	if (fd < 0)
		return -1;

	if (internal_config.single_file_segments) {
		resize_hugefile(fd, map_offset, alloc_sz, false);
		/* ignore failure, can't make it any worse */

		/* if refcount is at zero, close the file */
		if (fd_list[list_idx].count == 0)
			close_hugefile(fd, path, list_idx);
	} else {
		/* only remove file if we can take out a write lock */
		if (internal_config.hugepage_unlink == 0 &&
				internal_config.in_memory == 0 &&
				lock(fd, LOCK_EX) == 1)
			unlink(path);
		close(fd);
		fd_list[list_idx].fds[seg_idx] = -1;
	}
	return -1;
}
```

2.3 Summary

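(1) rte_eal_memseg_init splits memory into segment lists and decides how many pages each list holds. For each list it creates an fbarray of rte_memseg entries (rte_fbarray_init reserves a virtual area and maps the list's fbarray file under /var/run/dpdk/pg1/ into it), and then alloc_va_space reserves a separate virtual address range large enough for all of the list's pages.

(2) eal_memalloc_init sets up fd information for each memseg_list: an fd_list entry whose per-segment fds all start at -1 (no per-segment array is needed in single-file-segments mode).

(3) The interesting part of rte_eal_hugepage_init is that, for every allocation round, it allocates a pages array, lets eal_memalloc_alloc_seg_bulk fill it, and then frees it. This does not give the memory back: the hugepages really are mapped and their rte_memseg entries are marked RTE_MEMSEG_FLAG_DO_NOT_FREE. free(pages) releases only the outer pointer array, while the segments its pointers refer to stay allocated as preallocated memory.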
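Point (3) can be seen in isolation: freeing an array of pointers does not free what the pointers refer to. In the sketch below struct seg and FLAG_DO_NOT_FREE are hypothetical stand-ins for struct rte_memseg and RTE_MEMSEG_FLAG_DO_NOT_FREE; mark_preallocated flags the segments through a temporary array and then frees only the array.

```c
#include <assert.h>
#include <stdlib.h>

#define FLAG_DO_NOT_FREE 0x1u /* stand-in for RTE_MEMSEG_FLAG_DO_NOT_FREE */

struct seg {                  /* stand-in for struct rte_memseg */
	unsigned int flags;
};

/* Collect pointers to n segments in a temporary array, flag each one,
 * then free only the array, as eal_hugepage_init does with pages. */
static int mark_preallocated(struct seg *segs, int n)
{
	struct seg **pages = malloc(sizeof(*pages) * n);

	if (pages == NULL)
		return -1;
	for (int i = 0; i < n; i++)
		pages[i] = &segs[i];  /* what the bulk allocator fills in */
	for (int i = 0; i < n; i++)
		pages[i]->flags |= FLAG_DO_NOT_FREE;
	free(pages);                  /* frees the pointer array only */
	return 0;
}
```

After the call the segments are still valid and carry the flag; only the temporary array is gone.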
