This series of articles is a set of study notes for "Machine Learning" by Prof. Andrew Ng, Stanford University. This article covers week 3, Solving the Problem of Overfitting, Part III, and discusses how to implement logistic regression with regularization to address overfitting.

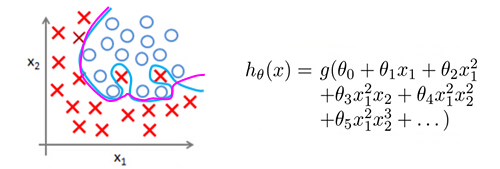

Regularized logistic regression

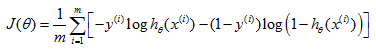

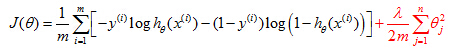

1. Cost Function of Logistic Regression

- This is the original cost function for logistic regression without regularization

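To modify it to use regularization, all we need to do is add the term $\frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2$. As usual, the sum starts from j = 1 rather than j = 0, so θ0 is not penalized. This term has the effect of penalizing the parameters θ1, θ2, and so on up to θn for being too large.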

- This is the cost function for logistic regression with regularization

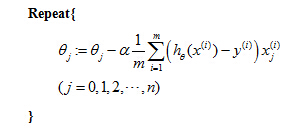
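As a rough illustration of the regularized cost function, here is a minimal NumPy sketch. The function names (`sigmoid`, `regularized_cost`), the argument `lam` for λ, and the convention that `X` already contains a leading column of ones are assumptions made for this example; they do not come from the course materials.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def regularized_cost(theta, X, y, lam):
    """Regularized logistic regression cost J(theta).

    Assumed shapes: X is (m, n+1) with a leading column of ones,
    y is (m,) with 0/1 labels, theta is (n+1,).
    """
    m = y.size
    h = sigmoid(X @ theta)  # hypothesis h_theta(x) for every example
    # Unregularized cross-entropy term.
    cross_entropy = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    # Penalty sums over j = 1..n only; theta[0] is not penalized.
    penalty = lam / (2 * m) * np.sum(theta[1:] ** 2)
    return cross_entropy + penalty
```

With θ set to all zeros the hypothesis is 0.5 for every example, so the cost equals ln 2 whatever the value of `lam`, which makes a quick sanity check.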

2. Gradient descent for logistic regression

Gradient descent for logistic regression (without regularization)

We can write the update equations separately as follows:

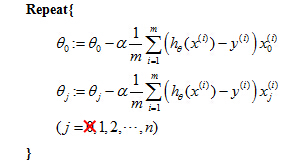

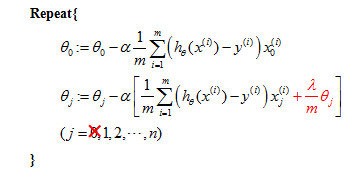

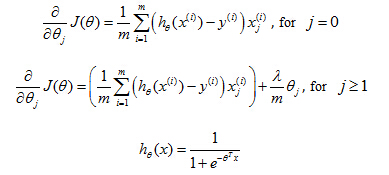

Gradient descent for logistic regression (with regularization)

We are now working out gradient descent for regularized logistic regression. To wrap up this discussion, the term in the square brackets is the new partial derivative with respect to θj of the new cost function J(θ), where J(θ) is the cost function defined earlier that does use regularization.

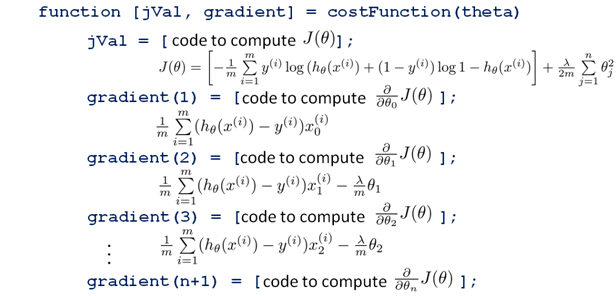
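The regularized update can be sketched in NumPy as below. This is only an illustrative sketch: the names `regularized_gradient` and `gradient_descent`, the default values for `alpha`, `lam`, and `num_iters`, and the leading column of ones in `X` are assumptions for the example, not part of the course code.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def regularized_gradient(theta, X, y, lam):
    """Partial derivatives of the regularized cost J(theta).

    Assumed shapes: X is (m, n+1) with a leading column of ones,
    y is (m,) with 0/1 labels, theta is (n+1,).
    """
    m = y.size
    grad = X.T @ (sigmoid(X @ theta) - y) / m
    # Add (lambda / m) * theta_j for j >= 1 only; theta_0 is not regularized.
    grad[1:] += (lam / m) * theta[1:]
    return grad

def gradient_descent(X, y, alpha=0.1, lam=1.0, num_iters=1000):
    """Batch gradient descent with a simultaneous update of every theta_j."""
    theta = np.zeros(X.shape[1])
    for _ in range(num_iters):
        # The whole gradient is computed from the old theta before updating.
        theta = theta - alpha * regularized_gradient(theta, X, y, lam)
    return theta
```

Setting `lam=0` gives the unregularized update; a larger `lam` pulls θ1, ..., θn toward zero while leaving θ0 unpenalized.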

3. Advanced Optimization
