yum install -y yum-utils device-mapper-persistent-data lvm2

yum -y install wget vim

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install docker-ce-18.06.1.ce-3.el7

docker --version

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://jo6348gu.mirror.aliyuncs.com"]

}

EOF

systemctl enable docker && systemctl start docker

vi /etc/security/limits.conf # append at the end of the file:

es soft nofile 65536

es hard nofile 65536

es soft nproc 65536

es hard nproc 65536

vi /etc/security/limits.d/20-nproc.conf # change * to the user name es

es soft nproc 4096

root soft nproc unlimited

vi /etc/sysctl.conf

vm.max_map_count=655360

sysctl -p
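The values above are there to satisfy the Elasticsearch 7.x bootstrap checks: at least 65535 open file descriptors, at least 4096 threads, and vm.max_map_count of at least 262144. The minimal sketch below compares a current value against such a minimum. The helper name check_min is hypothetical. The commented calls show one way to read the live values on this host, assuming you run them as root.

```shell
#!/bin/sh
# Sketch: compare a value against the minimum that Elasticsearch 7.x checks at startup.
# check_min is a hypothetical helper; it prints OK/FAIL and returns 1 on FAIL.
check_min() {
  label=$1 actual=$2 min=$3
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$min" ]; then
    echo "OK   $label=$actual (>= $min)"
  else
    echo "FAIL $label=$actual (< $min)"
    return 1
  fi
}

# Example calls on the ES host (run as root):
# check_min vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144
# check_min nofile "$(su - es -c 'ulimit -n')" 65535
# check_min nproc "$(su - es -c 'ulimit -u')" 4096
```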

mkdir -p /data/es/config /data/es/data # create the data directory too, so the chown/chmod below also cover it

cd /data

wget https://mirrors.huaweicloud.com/elasticsearch/7.8.0/elasticsearch-7.8.0-linux-x86_64.tar.gz

tar -zxvf elasticsearch-7.8.0-linux-x86_64.tar.gz

cp -r elasticsearch-7.8.0/config/* /data/es/config

vi /data/es/config/elasticsearch.yml # append the following settings

discovery.type: single-node # single-node mode

network.host: 0.0.0.0

useradd es

passwd es

cd /data

chown -R es:es es

chmod -R 777 es

docker run -d --name es -p 9200:9200 -p 9300:9300 -v /data/es/config/:/usr/share/elasticsearch/config -v /data/es/data/:/usr/share/elasticsearch/data elasticsearch:7.8.0
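Before you move on to Kibana, it is worth confirming that the container is actually serving requests. The minimal sketch below assumes curl is installed and Elasticsearch is reachable at http://192.168.0.34:9200; you can override the address with the hypothetical ES_URL variable. It polls the _cluster/health API and extracts the status field. On a single node, yellow is normal for indices that have replicas configured, because the replicas cannot be allocated anywhere.

```shell
#!/bin/sh
# Sketch: wait for Elasticsearch and print its cluster health status.
# ES_URL is an assumption; it defaults to the host used throughout this guide.
ES_URL=${ES_URL:-http://192.168.0.34:9200}

# Read a _cluster/health JSON body on stdin and print green, yellow or red.
es_status() {
  grep -o '"status":"[a-z]*"' | cut -d'"' -f4
}

# Poll every 5 seconds, at most 24 times (about two minutes).
wait_for_es() {
  tries=0
  while [ "$tries" -lt 24 ]; do
    status=$(curl -s "$ES_URL/_cluster/health" | es_status)
    case $status in
      green|yellow) echo "Elasticsearch is up, status=$status"; return 0 ;;
    esac
    tries=$((tries + 1))
    sleep 5
  done
  echo "Elasticsearch is not ready, check: docker logs es" >&2
  return 1
}

# Usage: wait_for_es
```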

2. Deploy Kibana

mkdir /data/kibana

cd /data

vim kibana/kibana.yml

server.host: 0.0.0.0

elasticsearch.hosts: ["http://192.168.0.34:9200"]

i18n.locale: "zh-CN"

docker run -d --name kibana -p 5601:5601 -v /data/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml:ro kibana:7.8.0

3. Deploy Logstash

mkdir -p /data/logstash/config

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.8.0.tar.gz

tar -zxvf logstash-7.8.0.tar.gz

cp -r /data/logstash-7.8.0/config/* /data/logstash/config/

vi /data/logstash/config/logstash.conf

input {
  beats {
    port => 5044
  }
}

filter {
  dissect {
    mapping => { "message" => "[%{Time}] %{LogLevel} %{message}" }
  }
}

output {
  if "secure.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "secure.log"
    }
  }
  else if "logstash.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "logstash.log"
    }
  }
}
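The dissect filter above splits a line of the form [time] LEVEL text on the literal delimiters in the mapping. Time is whatever sits between the square brackets, LogLevel is the next word, and the rest of the line replaces message. The sh sketch below imitates that split with sed, so you can check sample lines from your own logs before shipping them. It is only an approximation of dissect. The function name dissect_line and the sample line are made up for illustration. In Logstash, lines that do not fit the pattern get the _dissectfailure tag; the sketch prints that word instead.

```shell
#!/bin/sh
# Sketch: approximate the dissect mapping "[%{Time}] %{LogLevel} %{message}" with sed.
# Prints Time|LogLevel|message, or _dissectfailure when the line does not match.
dissect_line() {
  out=$(printf '%s\n' "$1" | sed -n 's/^\[\([^]]*\)] \([^ ]*\) \(.*\)$/\1|\2|\3/p')
  if [ -n "$out" ]; then
    printf '%s\n' "$out"
  else
    echo "_dissectfailure"
    return 1
  fi
}

# Usage (hypothetical sample line):
# dissect_line '[2020-07-01 10:00:00,123] INFO order created'
```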

vi /data/logstash/config/logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.hosts: [ "http://192.168.0.34:9200" ]

vim /data/logstash/config/pipelines.yml

- pipeline.id: docker
  path.config: "/usr/share/logstash/config/logstash.conf" # note: this is the path inside the container

docker run -d -p 5044:5044 -p 9600:9600 --name logstash -v /data/logstash/config:/usr/share/logstash/config logstash:7.8.0

docker logs -f -t --tail 100 logstash # follow the container log, starting from its last 100 lines

Fixing two problems: Elasticsearch failing to start because the host does not have enough memory (cap the heap with ES_JAVA_OPTS="-Xms256m -Xmx256m"), and containers being unable to reach each other (put them on a shared network, my_net)

Letting Docker containers reach each other

docker network ls

docker network create my_net

docker network inspect my_net

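docker rm -f es kibana # remove the containers created earlier so the names es and kibana can be reused

docker run -d -e ES_JAVA_OPTS="-Xms256m -Xmx256m" --network my_net --name es -p 9200:9200 -p 9300:9300 -v /data/es/config/:/usr/share/elasticsearch/config -v /data/es/data/:/usr/share/elasticsearch/data elasticsearch:7.8.0

vim /data/kibana/kibana.yml # before starting Kibana, point it at Elasticsearch over my_net

server.host: 0.0.0.0

elasticsearch.hosts: ["http://172.18.0.1:9200"] # the my_net gateway address shown by docker network inspect my_net; works because port 9200 is published

elasticsearch.hosts: ["http://es:9200"] # the container name also works; keep only one elasticsearch.hosts line

docker run -d --name kibana -p 5601:5601 --network my_net -v /data/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml:ro kibana:7.8.0

4. Collect logs with Filebeat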

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.8.0-linux-x86_64.tar.gz

tar -zxvf filebeat-7.8.0-linux-x86_64.tar.gz

vi /data/filebeat-7.8.0-linux-x86_64/filebeat.yml # set the log paths Filebeat should watch in filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/*.log
  fields:
    level: debug
  tags: ["secure.log"]
- type: log
  enabled: true
  paths:
    - /data/logstash-7.8.0/logs/*.log
  fields:
    level: debug
  tags: ["logstash.log"]

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

setup.kibana:

output.logstash:
  hosts: ["192.168.0.34:5044"]

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

cd /data/filebeat-7.8.0-linux-x86_64

sudo ./filebeat -e -c filebeat.yml -d "publish" # start Filebeat in the foreground

nohup ./filebeat -e -c filebeat.yml >/dev/null 2>&1 & # start it in the background

5. Set up Redis

mkdir -p /data/redis/data

vim /data/redis/data/redis.conf

bind 0.0.0.0

daemonize no

pidfile "/var/run/redis.pid"

port 6380

timeout 300

loglevel warning

logfile "redis.log"

databases 16

rdbcompression yes

dbfilename "redis.rdb"

dir "/data"

requirepass "123456"

masterauth "123456"

maxclients 10000

maxmemory 1000mb

maxmemory-policy allkeys-lru

appendonly yes

appendfsync always

docker run -d --name redis -p 6380:6380 -v /data/redis/data/:/data redis:5.0 redis-server redis.conf

6. Add and enable the Redis configuration

vi /data/logstash/config/logstash.conf

input {
  redis {
    host => "192.168.0.34"
    port => 6380
    db => 0
    key => "localhost"
    password => "123456"
    data_type => "list"
    threads => 4
    batch_count => 1
    #tags => "user.log"
  }
}

filter {
  dissect {
    mapping => { "message" => "[%{Time}] %{LogLevel} %{message}" }
  }
}

output {
  if "auction.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "auction.log-%{+YYYY.MM.dd}"
    }
  }
  if "merchant.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "merchant.log-%{+YYYY.MM.dd}"
    }
  }
  if "order.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "order.log-%{+YYYY.MM.dd}"
    }
  }
  if "panel.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "panel.log-%{+YYYY.MM.dd}"
    }
  }
  if "payment.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "payment.log-%{+YYYY.MM.dd}"
    }
  }
  if "user.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "user.log-%{+YYYY.MM.dd}"
    }
  }

  ###############dev#################
  if "devauction.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devauction.log-%{+YYYY.MM.dd}"
    }
  }
  if "devmerchant.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devmerchant.log-%{+YYYY.MM.dd}"
    }
  }
  if "devorder.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devorder.log-%{+YYYY.MM.dd}"
    }
  }
  if "devpanel.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devpanel.log-%{+YYYY.MM.dd}"
    }
  }
  if "devpayment.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devpayment.log-%{+YYYY.MM.dd}"
    }
  }
  if "devuser.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "devuser.log-%{+YYYY.MM.dd}"
    }
  }

  ###############test#################
  if "testauction.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testauction.log-%{+YYYY.MM.dd}"
    }
  }
  if "testmerchant.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testmerchant.log-%{+YYYY.MM.dd}"
    }
  }
  if "testorder.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testorder.log-%{+YYYY.MM.dd}"
    }
  }
  if "testpanel.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testpanel.log-%{+YYYY.MM.dd}"
    }
  }
  if "testpayment.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testpayment.log-%{+YYYY.MM.dd}"
    }
  }
  if "testuser.log" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.0.34:9200"]
      index => "testuser.log-%{+YYYY.MM.dd}"
    }
  }
}

vi /data/filebeat-7.8.0-linux-x86_64/filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/auction/logs/*.log
  tags: ["auction.log"]
- type: log
  enabled: true
  paths:
    - /home/merchant/logs/*.log
  tags: ["merchant.log"]
- type: log
  enabled: true
  paths:
    - /home/order/logs/*.log
  tags: ["order.log"]
- type: log
  enabled: true
  paths:
    - /home/panel/logs/*.log
  tags: ["panel.log"]
- type: log
  enabled: true
  paths:
    - /home/payment/logs/*.log
  tags: ["payment.log"]
- type: log
  enabled: true
  paths:
    - /home/user/logs/*.log
  tags: ["user.log"]

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

setup.kibana:

output.redis:
  enabled: true
  hosts: ["192.168.0.34:6380"]
  password: "123456"
  db: 0
  key: localhost
  worker: 4
  timeout: 5
  max_retries: 3
  datatype: list

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~
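The logstash.conf output section and the filebeat.yml inputs above repeat the same block once per service and environment. The sketch below generates both. It assumes the six service names, the dev/test tag prefixes and the Elasticsearch address used in this guide. gen_output prints the output section with one conditional per tag. gen_inputs prints the inputs for one environment, on the assumption that each environment's hosts use their own prefix. Both function names are hypothetical. Review the generated text before pasting it into the configuration files and restarting Logstash or Filebeat.

```shell
#!/bin/sh
# Sketch: generate the repetitive parts of logstash.conf and filebeat.yml.
# SERVICES and ES_HOST mirror this guide and are assumptions for your setup.
SERVICES="auction merchant order panel payment user"
ES_HOST="http://192.168.0.34:9200"

# Print the output { } section: one elasticsearch block per tag, daily indices.
# Prefixes: "" (unprefixed tags), dev, test.
gen_output() {
  echo "output {"
  for prefix in "" dev test; do
    for svc in $SERVICES; do
      tag="${prefix}${svc}.log"
      cat <<EOF
  if "$tag" in [tags] {
    elasticsearch {
      hosts => ["$ES_HOST"]
      index => "$tag-%{+YYYY.MM.dd}"
    }
  }
EOF
    done
  done
  echo "}"
}

# Print filebeat.inputs for one environment; $1 is "", "dev" or "test".
gen_inputs() {
  prefix=${1:-}
  echo "filebeat.inputs:"
  for svc in $SERVICES; do
    cat <<EOF
- type: log
  enabled: true
  paths:
    - /home/$svc/logs/*.log
  tags: ["${prefix}${svc}.log"]
EOF
  done
}

# Usage:
# gen_output > /tmp/logstash-output.conf
# gen_inputs dev > /tmp/filebeat-inputs.yml
```

Generating the blocks keeps the Filebeat tags and the Logstash conditionals in sync. A typo in one of 18 hand-written tags would otherwise silently drop that service's logs.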

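7. Filebeat installed from the tarball stops by itself after running for a while. The fix used here is to install Filebeat from the RPM package instead.

Download page: https://www.elastic.co/cn/downloads/past-releases#filebeat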

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.8.0-x86_64.rpm

rpm -ivh filebeat-7.8.0-x86_64.rpm

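\cp /data/filebeat-7.8.0-linux-x86_64/filebeat.yml /etc/filebeat/filebeat.yml # the leading \ bypasses the cp -i alias, so the file is overwritten without a prompt

Start: systemctl start filebeat

Stop: systemctl stop filebeat

Restart: systemctl restart filebeat

Start on boot: systemctl enable filebeat

Do not start on boot: systemctl disable filebeat

ps -ef | grep filebeat

docker system df -v # show how much disk space images and containers use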

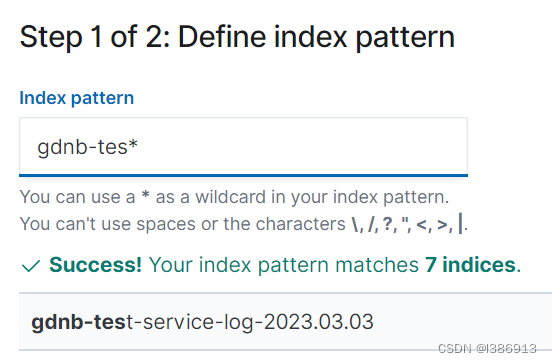

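8. Create an index pattern and filter the logs. When creating the index pattern, replace the date at the end of the name with *; otherwise only that one day can be searched.

After filtering, the irrelevant information is gone and the logs are clear at a glance.

Fixing Docker using too much disk: docker system df -v showed that neither images nor containers used much space. The culprit turned out to be the container log file /var/lib/docker/containers/<container_id>/<container_id>-json.log. As a temporary fix, cat /dev/null > <container_id>-json.log empties the file and frees the space, but it will grow large again later. The container has to be restarted before it continues logging.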

# Add the max-size option to limit the log file size

docker run -it --log-opt max-size=10m --log-opt max-file=3 alpine ash # each file at most 10 MB, at most three log files

cat /dev/null > 9cb7650c139325cacf1973151220139cf273c9458ba3e95f51eca0ec8cf5a395-json.log
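To find which container log is filling the disk without opening each directory, the sketch below lists the largest *-json.log files under the Docker data directory. It assumes the default /var/lib/docker location; pass another root as the first argument. The function name largest_logs is hypothetical. Emptying a file with truncate -s 0 has the same effect as the cat /dev/null redirection above.

```shell
#!/bin/sh
# Sketch: list the largest Docker json-file logs, biggest first.
# $1 = Docker data root (default /var/lib/docker), $2 = how many to show (default 5).
# Output lines are "<bytes> <path>".
largest_logs() {
  root=${1:-/var/lib/docker}
  count=${2:-5}
  find "$root/containers" -type f -name '*-json.log' -exec wc -c {} \; 2>/dev/null \
    | sort -rn | head -n "$count"
}

# Usage (as root):
# largest_logs
# truncate -s 0 /var/lib/docker/containers/<container_id>/<container_id>-json.log
```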

Permanent global fix for oversized logs

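vi /etc/docker/daemon.json # keep the registry-mirrors entry from the Docker installation step in the same file

{
  "registry-mirrors": ["https://jo6348gu.mirror.aliyuncs.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

With this setting, each log file is at most 10 MB and each container keeps at most three log files. Restart Docker (systemctl restart docker) for the change to take effect. The defaults apply only to containers created afterwards, so existing containers must be recreated.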

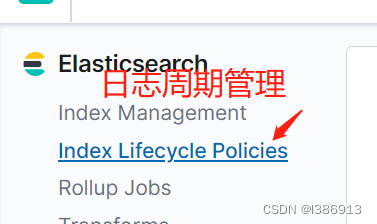

1. Set an expiry time for Elasticsearch indices in Kibana so they are deleted automatically

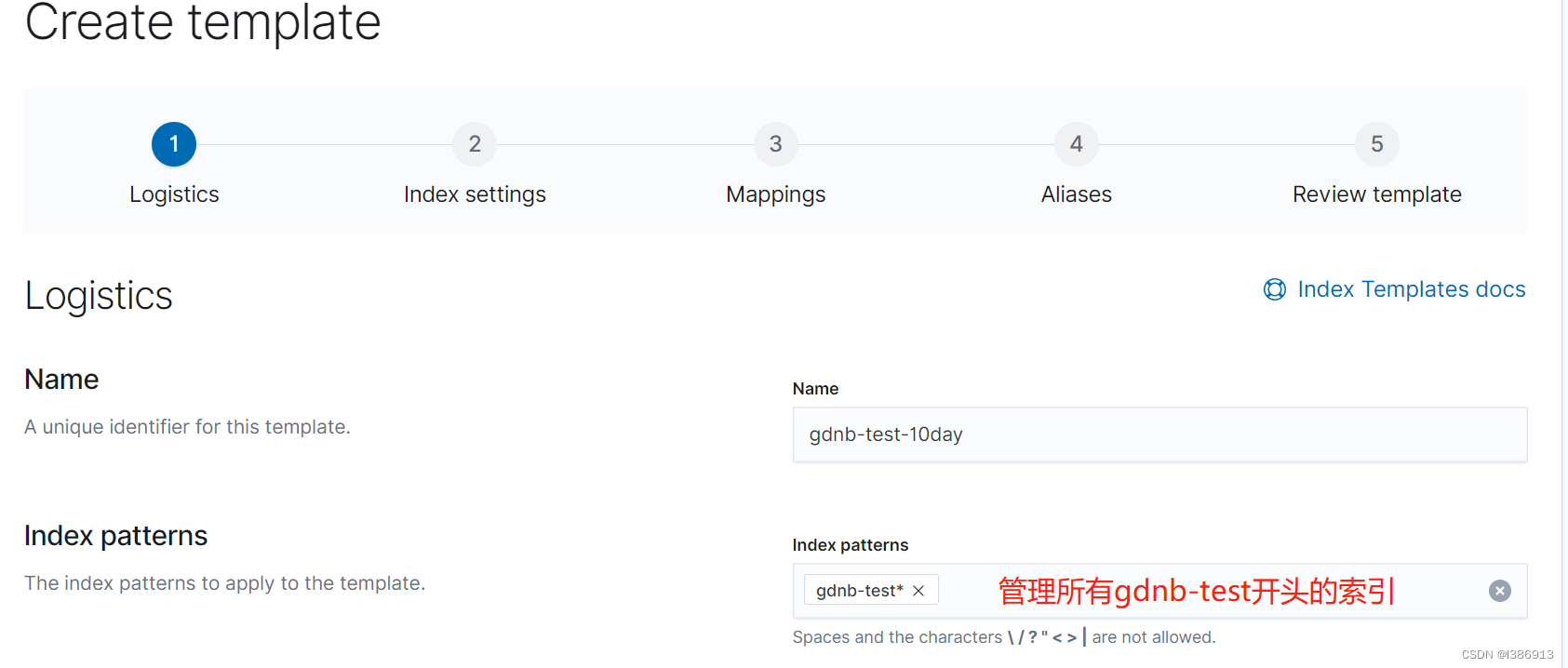

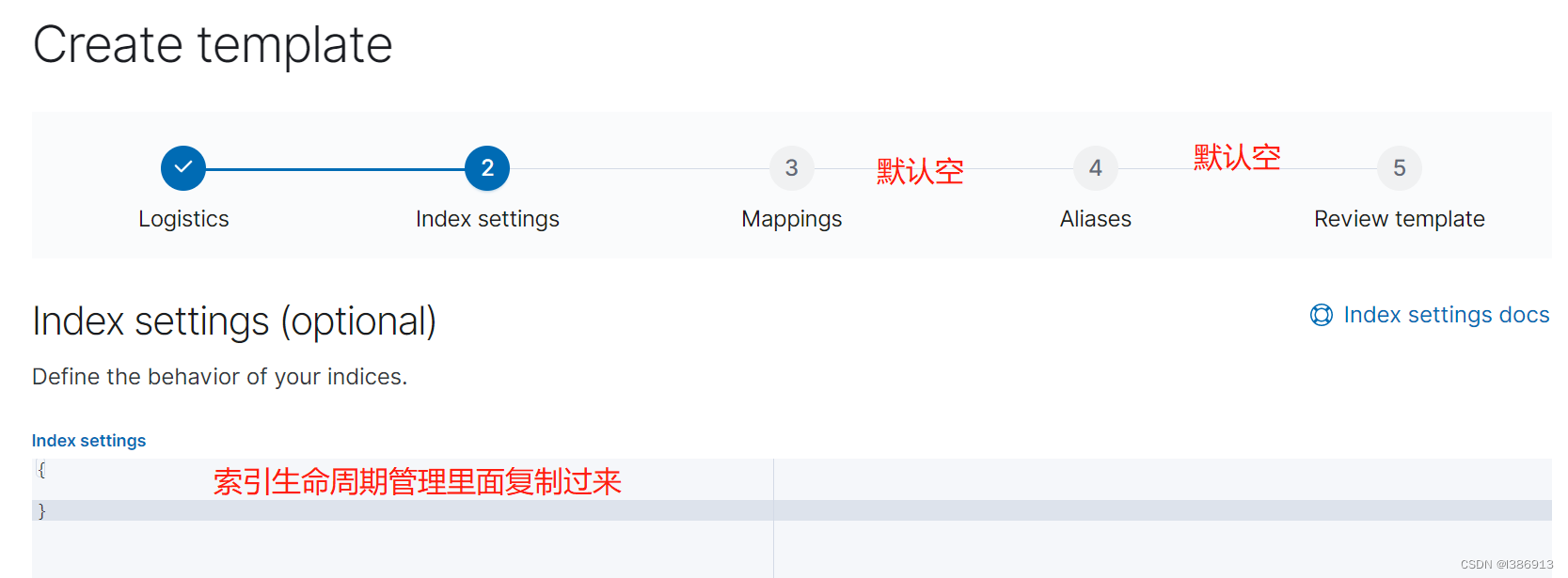

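First create an index pattern whose name ends with *, so that all dates can be searched. Next create a ten-day index lifecycle policy, and then create an index template that matches all the logs to be managed. You can paste the settings code from the lifecycle policy into the template, or add this template to the lifecycle policy afterwards.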

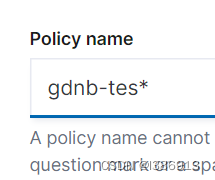

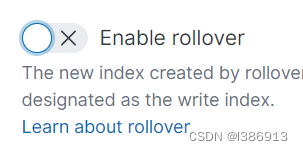

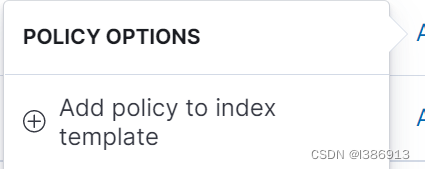

2. Create an index lifecycle policy

Index Management

Index Lifecycle Policies

Delete phase

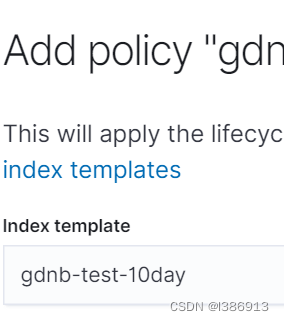

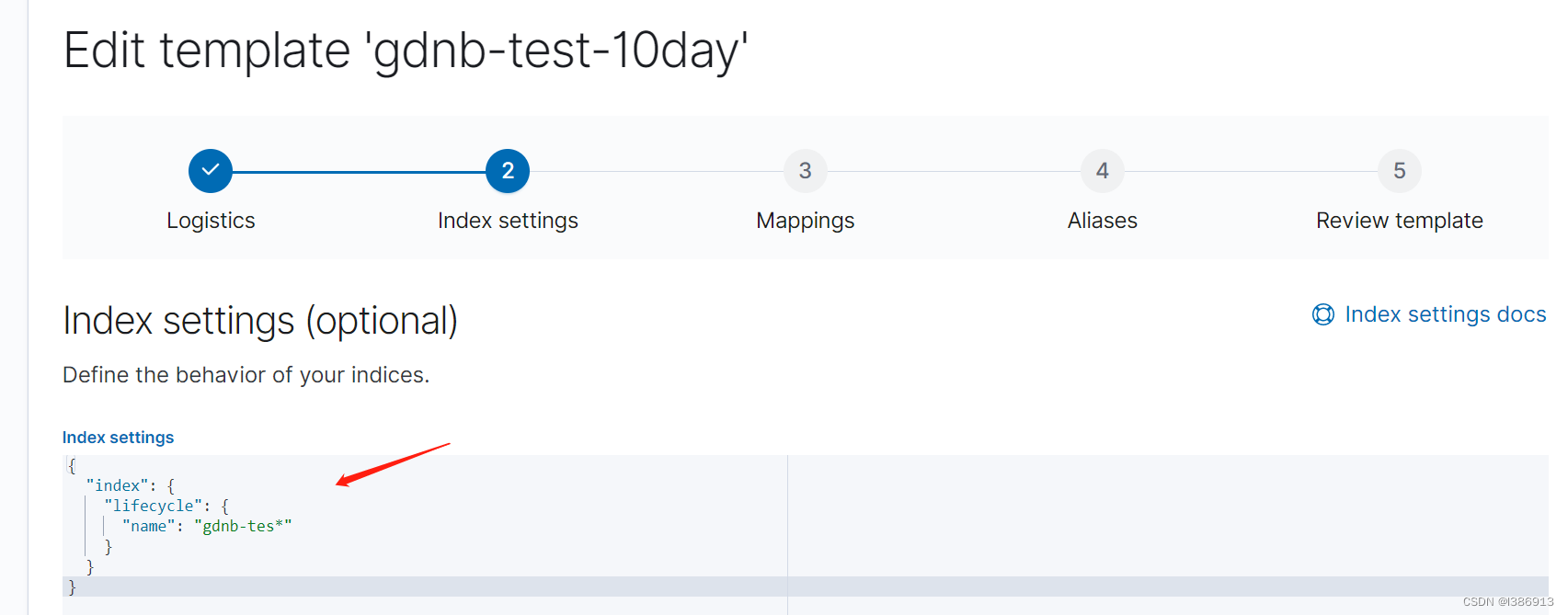

3. Create an index template to manage all the indices

Index Templates

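{
  "index": {
    "lifecycle": {
      "name": "gdnb-tes*"
    }
  }
}

You can paste in the settings code from the index lifecycle policy, or go to the lifecycle policy directly and select the gdnb-test-10day index template there. Note that index.lifecycle.name must be the exact name of an existing lifecycle policy, because wildcards are not matched; the index pattern itself belongs in the template's index patterns.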

4. Add the index template for the logs that should be kept for ten days to the lifecycle policy you just created
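The same retention setup can also be scripted against the Elasticsearch REST API instead of through Kibana. The sketch below assumes curl, the Elasticsearch address used in this guide, and the 7.x APIs PUT _ilm/policy/<name> and the legacy PUT _template/<name>. It creates a policy whose delete phase removes indices a given time after they are created, plus a template that attaches that policy to matching indices. The name gdnb-test-10day is borrowed from the text above as the policy name. The template name and index pattern in the usage line are hypothetical. Templates only affect indices created afterwards, so existing daily indices stay unmanaged unless you also set index.lifecycle.name on them.

```shell
#!/bin/sh
# Sketch: create an ILM delete policy and a legacy index template that uses it.
# ES_URL is an assumption; it defaults to the host used in this guide.
ES_URL=${ES_URL:-http://192.168.0.34:9200}

# JSON body for PUT _ilm/policy/<name>: delete indices $1 after creation (e.g. 10d).
ilm_policy_body() {
  printf '{"policy":{"phases":{"delete":{"min_age":"%s","actions":{"delete":{}}}}}}\n' "$1"
}

# JSON body for PUT _template/<name>: $1 = index pattern, $2 = ILM policy name.
template_body() {
  printf '{"index_patterns":["%s"],"settings":{"index.lifecycle.name":"%s"}}\n' "$1" "$2"
}

# $1 = policy name, $2 = retention, $3 = template name, $4 = index pattern
apply_retention() {
  curl -s -X PUT "$ES_URL/_ilm/policy/$1" \
    -H 'Content-Type: application/json' -d "$(ilm_policy_body "$2")" || return 1
  echo
  curl -s -X PUT "$ES_URL/_template/$3" \
    -H 'Content-Type: application/json' -d "$(template_body "$4" "$1")" || return 1
  echo
}

# Usage (template name and pattern are hypothetical):
# apply_retention gdnb-test-10day 10d test-logs "test*.log-*"
```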
