TITLE: ThiNet: A Filter Level Pruning Method for Deep Neural Network Compression

AUTHOR: Jian-Hao Luo, Jianxin Wu, Weiyao Lin

ASSOCIATION: Nanjing University, Shanghai Jiao Tong University

FROM: arXiv:1707.06342

CONTRIBUTIONS

- A simple yet effective framework, namely ThiNet, is proposed to simultaneously accelerate and compress CNN models.

- Filter pruning is formally established as an optimization problem, and statistical information computed from the next layer (rather than the current layer) is used to decide which filters to prune.

METHOD

Framework

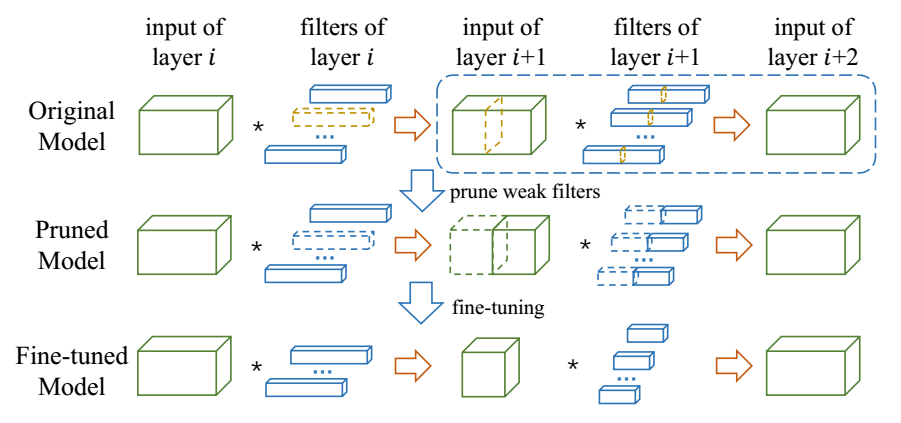

The framework of the ThiNet compression procedure is illustrated in the figure above. The yellow dotted boxes are the weak channels and their corresponding filters that would be pruned.

- Filter selection. The output of layer $i+1$ is used to guide the pruning in layer $i$. The key idea is: if a subset of channels in layer $(i+1)$'s input can approximate the output in layer $i+1$, the other channels can be safely removed from the input of layer $i+1$. Note that one channel in layer $(i+1)$'s input is produced by one filter in layer $i$, hence the corresponding filter in layer $i$ can be safely pruned.

- Pruning. Weak channels in layer $(i+1)$'s input and their corresponding filters in layer $i$ would be pruned away, leading to a much smaller model. Note that the pruned network has exactly the same structure but with fewer filters and channels.

- Fine-tuning. Fine-tuning is a necessary step to recover the generalization ability damaged by filter pruning. To save time, the network is fine-tuned for only one or two epochs after each layer is pruned. To get an accurate model, additional epochs are carried out once all layers have been pruned.

- Iterate. Return to the filter selection step to prune the next layer.
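The structural effect of the pruning step can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the helper names `zeros` and `prune_layer_pair` are hypothetical, weights are plain nested lists, and the shapes (4 filters in layer i, 5 filters in layer i+1, 3×3 kernels) are made up for the example.

```python
def zeros(*shape):
    """Build a nested list of zeros with the given shape (illustrative helper)."""
    if len(shape) == 1:
        return [0.0] * shape[0]
    return [zeros(*shape[1:]) for _ in range(shape[0])]


def prune_layer_pair(w_i, w_next, keep):
    """Drop filters of layer i and the matching input channels of layer i+1.

    w_i:    filters of layer i,   shape [D_i][C_i][K][K]
    w_next: filters of layer i+1, shape [D_next][D_i][K][K]
    keep:   indices of the layer-i filters to retain
    """
    # Removing filter d in layer i removes output channel d of layer i ...
    kept_filters = [w_i[d] for d in keep]
    # ... so input channel d of every filter in layer i+1 must go as well.
    kept_next = [[filt[d] for d in keep] for filt in w_next]
    return kept_filters, kept_next


# Assumed toy shapes: layer i has 4 filters over 3 input channels,
# layer i+1 has 5 filters over those 4 channels.
w_i = zeros(4, 3, 3, 3)
w_next = zeros(5, 4, 3, 3)
pruned_w_i, pruned_w_next = prune_layer_pair(w_i, w_next, keep=[0, 2])

print(len(pruned_w_i), len(pruned_w_i[0]))        # 2 3
print(len(pruned_w_next), len(pruned_w_next[0]))  # 5 2
```

The number of filters in layer i+1 is unchanged; only its input channels shrink, which is why the pruned network keeps the same structure.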

Data-driven channel selection

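Denote the convolution process in layer $i$ as a triplet $\langle \mathcal{I}_i, \mathcal{W}_i, * \rangle$, where $\mathcal{I}_i \in \mathbb{R}^{C \times H \times W}$ is the input tensor with $C$ channels, $H$ rows and $W$ columns, and $\mathcal{W}_i \in \mathbb{R}^{D \times C \times K \times K}$ is a set of $D$ filters with $K \times K$ kernel size.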

Collecting training examples

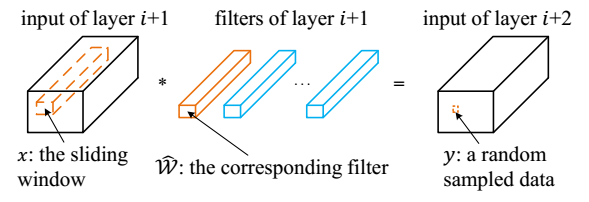

The training set is randomly sampled from the tensor $\mathcal{I}_{i+2}$ (the output of layer $i+1$), as illustrated in the figure above.

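The convolution operation can be formalized in a simple way. For a sampled output element $y$ with its corresponding filter $\hat{\mathcal{W}} \in \mathbb{R}^{C \times K \times K}$, input sliding window $x \in \mathbb{R}^{C \times K \times K}$ and bias $b$,

$$y = \sum_{c=1}^{C} \sum_{k_1=1}^{K} \sum_{k_2=1}^{K} \hat{\mathcal{W}}_{c,k_1,k_2} \, x_{c,k_1,k_2} + b.$$

Defining the per-channel term $\hat{x}_c = \sum_{k_1=1}^{K} \sum_{k_2=1}^{K} \hat{\mathcal{W}}_{c,k_1,k_2} \, x_{c,k_1,k_2}$ and $\hat{y} = y - b$, this becomes

$$\hat{y} = \sum_{c=1}^{C} \hat{x}_c.$$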
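To make the per-channel view concrete, here is a small sketch that splits one convolution output into one contribution per input channel (written x̂_c here) and checks that the contributions sum to the output minus the bias. The function name `channel_contributions` and the toy numbers are assumptions for illustration only.

```python
def channel_contributions(window, filt):
    """Return one scalar per input channel: the sum over the K x K kernel
    of weight * input for that channel.

    window, filt: nested lists of shape [C][K][K].
    """
    return [
        sum(w * x
            for w_row, x_row in zip(f_c, x_c)
            for w, x in zip(w_row, x_row))
        for f_c, x_c in zip(filt, window)
    ]


# Toy example with C = 2 channels and a 2 x 2 kernel (made-up values).
window = [[[1.0, 2.0], [3.0, 4.0]],
          [[0.0, 1.0], [1.0, 0.0]]]
filt = [[[1.0, 0.0], [0.0, 1.0]],
        [[2.0, 2.0], [2.0, 2.0]]]
bias = 0.5

# Full convolution output for this location: triple sum plus bias.
y = bias + sum(
    filt[c][k1][k2] * window[c][k1][k2]
    for c in range(2) for k1 in range(2) for k2 in range(2)
)

x_hat = channel_contributions(window, filt)
y_hat = y - bias

print(x_hat)                # [5.0, 4.0]
print(y_hat == sum(x_hat))  # True
```

Each sampled output location yields one training pair (x̂, ŷ), so a training set is just a list of such pairs collected at random locations.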

A greedy algorithm for channel selection

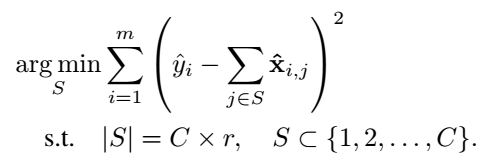

Given a set of

m

training examples

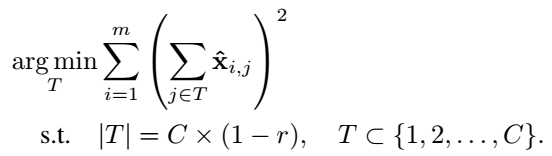

and it eauivalently can be the following alternative objective,

where S∪T={1,2,...,C} and S∩T=∅ . This problem can be sovled by greedy algorithm.
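A greedy procedure for this selection can be sketched as follows: start with an empty removal set T and repeatedly add the channel that keeps the squared sum of removed contributions smallest. This is a minimal sketch under stated assumptions, not the reference implementation. The function name `greedy_channel_selection`, the rounding of the removal count, and the toy data are all hypothetical.

```python
def greedy_channel_selection(x_hat, r):
    """Greedily choose channels to remove.

    x_hat: list of m examples, each a list of C per-channel contributions.
    r:     fraction of channels to keep (0 < r <= 1).
    Returns (kept, removed) as sorted lists of channel indices.
    """
    m = len(x_hat)
    num_channels = len(x_hat[0])
    num_remove = int(round(num_channels * (1 - r)))  # rounding is an assumption

    removed = []
    candidates = list(range(num_channels))
    # partial[i] holds the sum of already-removed contributions for example i.
    partial = [0.0] * m

    while len(removed) < num_remove:
        best_value, best_channel = float("inf"), None
        for c in candidates:
            # Objective value if channel c were added to the removal set.
            value = sum((partial[i] + x_hat[i][c]) ** 2 for i in range(m))
            if value < best_value:
                best_value, best_channel = value, c
        removed.append(best_channel)
        candidates.remove(best_channel)
        for i in range(m):
            partial[i] += x_hat[i][best_channel]

    return sorted(candidates), sorted(removed)


# Made-up data: channels 1 and 3 contribute almost nothing to the output.
x_hat = [
    [1.0, 0.01, -2.0, 0.02],
    [0.5, -0.02, 1.5, 0.01],
    [-1.0, 0.03, 0.7, -0.01],
]
kept, removed = greedy_channel_selection(x_hat, r=0.5)
print(kept, removed)  # [0, 2] [1, 3]
```

Working on the removal set T rather than the kept set S is convenient when most channels are kept, since T is then the smaller set and fewer greedy steps are needed.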

Minimize the reconstruction error

After the subset $T$ is obtained, a scaling factor for each filter's weights is learned to minimize the reconstruction error.
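One way to write this step, assuming the scaling factors are fit by ordinary least squares on the kept channels only. The symbols below are introduced here for illustration: $\hat{\mathbf{x}}^{*}_i$ is the vector of kept-channel contributions for example $i$, $\mathbf{X}$ stacks these vectors row by row, $\mathbf{y}$ stacks the targets $\hat{y}_i$, and $\mathbf{X}^{\top}\mathbf{X}$ is assumed to be invertible.

```latex
\hat{\mathbf{w}}
  = \arg\min_{\mathbf{w}} \sum_{i=1}^{m}
    \left( \hat{y}_i - \mathbf{w}^{\top} \hat{\mathbf{x}}^{*}_i \right)^2
\qquad \Longrightarrow \qquad
\hat{\mathbf{w}} = \left( \mathbf{X}^{\top} \mathbf{X} \right)^{-1} \mathbf{X}^{\top} \mathbf{y}
```

Under this reading, the $k$-th element of $\hat{\mathbf{w}}$ rescales the $k$-th kept input channel of the filters in layer $i+1$, which gives fine-tuning a better starting point.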

Some Ideas

- Maybe the fine-tuning step makes the final step of minimizing the reconstruction error unnecessary.

- The performance of this method on non-classification tasks, such as detection and segmentation, remains to be checked.
