1. How network request dispatch is controlled

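Let's first see how to send a simple network request with the Volley framework, and then explore the request dispatch mechanism behind it. The sample code is as follows: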

final TextView mTextView = (TextView) findViewById(R.id.text);
...

// Instantiate the RequestQueue.
RequestQueue queue = Volley.newRequestQueue(this);
String url = "http://www.google.com";

// Request a string response from the provided URL.
StringRequest stringRequest = new StringRequest(Request.Method.GET, url,
        new Response.Listener<String>() {
            @Override
            public void onResponse(String response) {
                // Display at most the first 500 characters of the response string
                // (a plain substring(0, 500) throws on shorter responses).
                mTextView.setText("Response is: "
                        + response.substring(0, Math.min(500, response.length())));
            }
        }, new Response.ErrorListener() {
            @Override
            public void onErrorResponse(VolleyError error) {
                mTextView.setText("That didn't work!");
            }
        });

// Add the request to the RequestQueue.
queue.add(stringRequest);

First, the static method Volley.newRequestQueue creates a RequestQueue. Then a StringRequest, one of the simple request types Volley provides, is created; its listeners supply the callbacks for a successful and a failed server response. These callbacks run on the main thread, so they can update the UI directly. Finally, calling the RequestQueue's add method puts the request into the queue.

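Now let's look at how these few simple lines manage to send a network request. Two statements in the code above are key:

RequestQueue queue = Volley.newRequestQueue(this);

and

queue.add(stringRequest);

First, let's see how newRequestQueue creates a RequestQueue. Its source is: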

/**

* Creates a default instance of the worker pool and calls {@link RequestQueue#start()} on it.

*

* @param context A {@link Context} to use for creating the cache dir.

* @param stack An {@link HttpStack} to use for the network, or null for default.

* @return A started {@link RequestQueue} instance.

*/

public static RequestQueue newRequestQueue(Context context, HttpStack stack) {

File cacheDir = new File(context.getCacheDir(), DEFAULT_CACHE_DIR);

String userAgent = "volley/0";

try {

String packageName = context.getPackageName();

PackageInfo info = context.getPackageManager().getPackageInfo(packageName, 0);

userAgent = packageName + "/" + info.versionCode;

} catch (NameNotFoundException e) {

}

if (stack == null) {

if (Build.VERSION.SDK_INT >= 9) {

stack = new HurlStack();

} else {

// Prior to Gingerbread, HttpUrlConnection was unreliable.

// See: http://android-developers.blogspot.com/2011/09/androids-http-clients.html

stack = new HttpClientStack(AndroidHttpClient.newInstance(userAgent));

}

}

Network network = new BasicNetwork(stack);

RequestQueue queue = new RequestQueue(new DiskBasedCache(cacheDir), network);

queue.start();

return queue;

}

/**

* Creates a default instance of the worker pool and calls {@link RequestQueue#start()} on it.

*

* @param context A {@link Context} to use for creating the cache dir.

* @return A started {@link RequestQueue} instance.

*/

public static RequestQueue newRequestQueue(Context context) {

return newRequestQueue(context, null);

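}

As the comment says, this method creates a worker pool and starts it by calling RequestQueue's start method. The function takes two parameters, a Context and an HttpStack. The one-argument overload passes null for the HttpStack, in which case a default stack is created: if Build.VERSION.SDK_INT is 9 (Android 2.3, Gingerbread) or higher, a HurlStack; otherwise an HttpClientStack. HurlStack uses HttpURLConnection internally for network communication, while HttpClientStack uses HttpClient.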

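Next, the chosen stack is used to create a Network object (a BasicNetwork) that handles network requests. Finally the RequestQueue itself is created. It needs two arguments: a Network for sending requests and a Cache for handling caching. The Network is the one just created, and the cache is a DiskBasedCache, which provides a one-file-per-response cache with an in-memory index.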

Note that, to save resources and avoid creating the RequestQueue repeatedly, the RequestQueue is usually managed with the singleton pattern.
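A minimal sketch of that singleton idea in plain Java follows. It is an illustration only: FakeRequestQueue is a hypothetical stand-in for Volley's RequestQueue, and the String argument stands in for the application Context that a real Android singleton would hold.

```java
class RequestQueueSingletonSketch {
    /** Hypothetical stand-in for Volley's RequestQueue. */
    static final class FakeRequestQueue {
        final String createdFor;

        FakeRequestQueue(String createdFor) {
            this.createdFor = createdFor;
        }
    }

    private static RequestQueueSingletonSketch instance;
    private final FakeRequestQueue queue;

    private RequestQueueSingletonSketch(String appName) {
        // In an Android app this is where Volley.newRequestQueue(appContext) would go.
        queue = new FakeRequestQueue(appName);
    }

    /** Creates the holder on first use; later calls return the same holder. */
    static synchronized RequestQueueSingletonSketch getInstance(String appName) {
        if (instance == null) {
            instance = new RequestQueueSingletonSketch(appName);
        }
        return instance;
    }

    FakeRequestQueue getQueue() {
        return queue;
    }

    public static void main(String[] args) {
        FakeRequestQueue first = getInstance("demo").getQueue();
        FakeRequestQueue second = getInstance("demo").getQueue();
        System.out.println("same queue: " + (first == second));
    }
}
```

Every caller that goes through getInstance shares one queue, so the dispatcher threads described in the rest of this section are created only once per process.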

Once the RequestQueue has been created, its start method is called to get it running. How exactly does it run, and what does start do? Let's continue with the start method.

/**

* Starts the dispatchers in this queue.

*/

public void start() {

stop(); // Make sure any currently running dispatchers are stopped.

// Create the cache dispatcher and start it.

mCacheDispatcher = new CacheDispatcher(mCacheQueue, mNetworkQueue, mCache, mDelivery);

mCacheDispatcher.start();

// Create network dispatchers (and corresponding threads) up to the pool size.

for (int i = 0; i < mDispatchers.length; i++) {

NetworkDispatcher networkDispatcher = new NetworkDispatcher(mNetworkQueue, mNetwork,

mCache, mDelivery);

mDispatchers[i] = networkDispatcher;

networkDispatcher.start();

}

}

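As the comment says, start launches the dispatchers of the RequestQueue. What does that mean? Each dispatcher here extends Thread, so it is a thread. start first creates a CacheDispatcher (the cache thread) and starts it, then loops (4 times by default) to create NetworkDispatchers (network request threads), calling start() on each in turn. In other words, after RequestQueue's start method returns, five threads are running, each blocked waiting for requests to arrive.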
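The "one cache thread plus four network threads" layout can be modelled with plain Java threads. The sketch below is not Volley code: the class name, the String "requests", and the use of LinkedBlockingQueue are assumptions made to keep it self-contained.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class DispatcherPoolSketch {
    static final int DEFAULT_NETWORK_THREADS = 4;

    private final BlockingQueue<String> cacheQueue = new LinkedBlockingQueue<>();
    private final BlockingQueue<String> networkQueue = new LinkedBlockingQueue<>();
    private final List<Thread> dispatchers = new ArrayList<>();

    /** A dispatcher blocks on take() until work arrives or it is interrupted. */
    private Thread newDispatcher(String name, BlockingQueue<String> queue) {
        Thread thread = new Thread(() -> {
            while (true) {
                try {
                    String request = queue.take();
                    System.out.println(Thread.currentThread().getName() + " took " + request);
                } catch (InterruptedException e) {
                    return; // interrupted by stop(): time to quit
                }
            }
        }, name);
        thread.setDaemon(true);
        return thread;
    }

    /** Stops any running dispatchers, then starts one cache and four network dispatchers. */
    void start() {
        stop();
        dispatchers.add(newDispatcher("cache-dispatcher", cacheQueue));
        for (int i = 0; i < DEFAULT_NETWORK_THREADS; i++) {
            dispatchers.add(newDispatcher("network-dispatcher-" + i, networkQueue));
        }
        for (Thread thread : dispatchers) {
            thread.start();
        }
    }

    void stop() {
        for (Thread thread : dispatchers) {
            thread.interrupt();
        }
        dispatchers.clear();
    }

    int dispatcherCount() {
        return dispatchers.size();
    }

    public static void main(String[] args) {
        DispatcherPoolSketch pool = new DispatcherPoolSketch();
        pool.start();
        System.out.println("dispatchers running: " + pool.dispatcherCount());
        pool.stop();
    }
}
```

Because every dispatcher sleeps inside take(), idle threads cost almost nothing; they wake only when a request is put on their queue.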

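Note that the CacheDispatcher and NetworkDispatcher constructors take several arguments, among them mCacheQueue, mNetworkQueue, mCache, and mDelivery. mCacheQueue and mNetworkQueue are both PriorityBlockingQueue instances, so a dispatcher blocks on take() until a request is available.

With the dispatchers running, the second key statement, queue.add(stringRequest), is what puts a request into these queues. The add method looks like this: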

/**

* Adds a Request to the dispatch queue.

* @param request The request to service

* @return The passed-in request

*/

public <T> Request<T> add(Request<T> request) {

// Tag the request as belonging to this queue and add it to the set of current requests.

request.setRequestQueue(this);

synchronized (mCurrentRequests) {

mCurrentRequests.add(request);

}

// Process requests in the order they are added.

request.setSequence(getSequenceNumber());

request.addMarker("add-to-queue");

// If the request is uncacheable, skip the cache queue and go straight to the network.

if (!request.shouldCache()) {

mNetworkQueue.add(request);

return request;

}

// Insert request into stage if there's already a request with the same cache key in flight.

synchronized (mWaitingRequests) {

String cacheKey = request.getCacheKey();

if (mWaitingRequests.containsKey(cacheKey)) {

// There is already a request in flight. Queue up.

Queue<Request<?>> stagedRequests = mWaitingRequests.get(cacheKey);

if (stagedRequests == null) {

stagedRequests = new LinkedList<Request<?>>();

}

stagedRequests.add(request);

mWaitingRequests.put(cacheKey, stagedRequests);

if (VolleyLog.DEBUG) {

VolleyLog.v("Request for cacheKey=%s is in flight, putting on hold.", cacheKey);

}

} else {

// Insert 'null' queue for this cacheKey, indicating there is now a request in

// flight.

mWaitingRequests.put(cacheKey, null);

mCacheQueue.add(request);

}

return request;

}

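}

First, the request passed to add is bound to the current RequestQueue and added to the set of current requests. It is then given a sequence number so that requests can be processed in order. After that comes the actual queuing logic.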

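add first checks whether the request may be cached. If not, the request goes straight into the network queue. If it may, it goes into the cache queue, unless a request with the same cache key is already in flight, in which case it is held in mWaitingRequests until that one finishes. By default every request is cacheable, so it enters the cache queue and the cache thread (CacheDispatcher) mentioned earlier picks it up. To see how the requests in the queue are processed, we need to analyze CacheDispatcher's run method.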

@Override

public void run() {

if (DEBUG) VolleyLog.v("start new dispatcher");

Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

// Make a blocking call to initialize the cache.

mCache.initialize();

while (true) {

try {

// Get a request from the cache triage queue, blocking until

// at least one is available.

final Request<?> request = mCacheQueue.take();

request.addMarker("cache-queue-take");

// If the request has been canceled, don't bother dispatching it.

if (request.isCanceled()) {

request.finish("cache-discard-canceled");

continue;

}

// Attempt to retrieve this item from cache.

Cache.Entry entry = mCache.get(request.getCacheKey());

if (entry == null) {

request.addMarker("cache-miss");

// Cache miss; send off to the network dispatcher.

mNetworkQueue.put(request);

continue;

}

// If it is completely expired, just send it to the network.

if (entry.isExpired()) {

request.addMarker("cache-hit-expired");

request.setCacheEntry(entry);

mNetworkQueue.put(request);

continue;

}

// We have a cache hit; parse its data for delivery back to the request.

request.addMarker("cache-hit");

Response<?> response = request.parseNetworkResponse(

new NetworkResponse(entry.data, entry.responseHeaders));

request.addMarker("cache-hit-parsed");

if (!entry.refreshNeeded()) {

// Completely unexpired cache hit. Just deliver the response.

mDelivery.postResponse(request, response);

} else {

// Soft-expired cache hit. We can deliver the cached response,

// but we need to also send the request to the network for

// refreshing.

request.addMarker("cache-hit-refresh-needed");

request.setCacheEntry(entry);

// Mark the response as intermediate.

response.intermediate = true;

// Post the intermediate response back to the user and have

// the delivery then forward the request along to the network.

mDelivery.postResponse(request, response, new Runnable() {

@Override

public void run() {

try {

mNetworkQueue.put(request);

} catch (InterruptedException e) {

// Not much we can do about this.

}

}

});

}

} catch (InterruptedException e) {

// We may have been interrupted because it was time to quit.

if (mQuit) {

return;

}

continue;

}

}

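}

This logic runs inside a while (true) loop, so the cache thread keeps running. If the request has been cancelled, it is not dispatched. Otherwise the thread tries to fetch the response from the cache. If there is no entry, the request is put on the network queue. If there is an entry, it is checked for expiry: an expired entry also sends the request to the network queue; otherwise no new network request is needed and the cached data is used directly. If the cached data needs refreshing, the entry is soft-expired: the cached response can be delivered right away, but the request is also sent to the network queue for a refresh.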

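On a cache hit, the request's parseNetworkResponse() method parses the cached data into a response. The StringRequest we used earlier, for example, parses the result into a string; for a custom request, the overridden parseNetworkResponse() is called to parse the data.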
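The four outcomes of the cache thread's decision can be condensed into one small function. This is a sketch under assumptions: Route, Entry, and triage are hypothetical names, and the clock is passed in as a parameter so the logic is easy to test (the Entry fields ttl and softTtl mirror the Cache.Entry fields described later in this article).

```java
class CacheTriageSketch {
    enum Route { NETWORK_MISS, NETWORK_EXPIRED, DELIVER_CACHED, DELIVER_THEN_REFRESH }

    /** Simplified cache entry: only the two expiry timestamps (epoch millis). */
    static final class Entry {
        final long ttl;     // hard expiry: after this the entry must not be used
        final long softTtl; // soft expiry: after this the entry should be refreshed

        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }

        boolean isExpired(long now) {
            return ttl < now;
        }

        boolean refreshNeeded(long now) {
            return softTtl < now;
        }
    }

    /** Mirrors the order of checks in the cache dispatcher loop. */
    static Route triage(Entry entry, long now) {
        if (entry == null) {
            return Route.NETWORK_MISS;          // cache miss: go to the network
        }
        if (entry.isExpired(now)) {
            return Route.NETWORK_EXPIRED;       // fully expired: go to the network
        }
        if (!entry.refreshNeeded(now)) {
            return Route.DELIVER_CACHED;        // fresh hit: deliver the cached data
        }
        return Route.DELIVER_THEN_REFRESH;      // soft-expired: deliver, then refresh
    }

    public static void main(String[] args) {
        long now = 100;
        System.out.println(triage(null, now));
        System.out.println(triage(new Entry(50, 50), now));
        System.out.println(triage(new Entry(200, 150), now));
        System.out.println(triage(new Entry(200, 50), now));
    }
}
```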

The run method of NetworkDispatcher is much the same.

@Override

public void run() {

Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

while (true) {

long startTimeMs = SystemClock.elapsedRealtime();

Request<?> request;

try {

// Take a request from the queue.

request = mQueue.take();

} catch (InterruptedException e) {

// We may have been interrupted because it was time to quit.

if (mQuit) {

return;

}

continue;

}

try {

request.addMarker("network-queue-take");

// If the request was cancelled already, do not perform the

// network request.

if (request.isCanceled()) {

request.finish("network-discard-cancelled");

continue;

}

addTrafficStatsTag(request);

// Perform the network request.

NetworkResponse networkResponse = mNetwork.performRequest(request);

request.addMarker("network-http-complete");

// If the server returned 304 AND we delivered a response already,

// we're done -- don't deliver a second identical response.

if (networkResponse.notModified && request.hasHadResponseDelivered()) {

request.finish("not-modified");

continue;

}

// Parse the response here on the worker thread.

Response<?> response = request.parseNetworkResponse(networkResponse);

request.addMarker("network-parse-complete");

// Write to cache if applicable.

// TODO: Only update cache metadata instead of entire record for 304s.

if (request.shouldCache() && response.cacheEntry != null) {

mCache.put(request.getCacheKey(), response.cacheEntry);

request.addMarker("network-cache-written");

}

// Post the response back.

request.markDelivered();

mDelivery.postResponse(request, response);

} catch (VolleyError volleyError) {

volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);

parseAndDeliverNetworkError(request, volleyError);

} catch (Exception e) {

VolleyLog.e(e, "Unhandled exception %s", e.toString());

VolleyError volleyError = new VolleyError(e);

volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);

mDelivery.postError(request, volleyError);

}

}

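}

The network thread calls the Network's performRequest() method to send the request and obtain the response, parses the NetworkResponse on the worker thread, and writes the result to the cache if the request is cacheable. It then calls ExecutorDelivery's postResponse() method to deliver the parsed data. The code is as follows: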

@Override

public void postResponse(Request<?> request, Response<?> response, Runnable runnable) {

request.markDelivered();

request.addMarker("post-response");

mResponsePoster.execute(new ResponseDeliveryRunnable(request, response, runnable));

}

Here a ResponseDeliveryRunnable object is passed to mResponsePoster's execute() method. It is an inner class of ExecutorDelivery. The code is as follows:

/**

* A Runnable used for delivering network responses to a listener on the

* main thread.

*/

@SuppressWarnings("rawtypes")

private class ResponseDeliveryRunnable implements Runnable {

private final Request mRequest;

private final Response mResponse;

private final Runnable mRunnable;

public ResponseDeliveryRunnable(Request request, Response response, Runnable runnable) {

mRequest = request;

mResponse = response;

mRunnable = runnable;

}

@SuppressWarnings("unchecked")

@Override

public void run() {

// If this request has canceled, finish it and don't deliver.

if (mRequest.isCanceled()) {

mRequest.finish("canceled-at-delivery");

return;

}

// Deliver a normal response or error, depending.

if (mResponse.isSuccess()) {

mRequest.deliverResponse(mResponse.result);

} else {

mRequest.deliverError(mResponse.error);

}

// If this is an intermediate response, add a marker, otherwise we're done

// and the request can be finished.

if (mResponse.intermediate) {

mRequest.addMarker("intermediate-response");

} else {

mRequest.finish("done");

}

// If we have been provided a post-delivery runnable, run it.

if (mRunnable != null) {

mRunnable.run();

}

}

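}

As the comment says, this Runnable delivers the network response to the listener on the main thread. But why does it run on the main thread? Look at how mResponsePoster is built in the ExecutorDelivery constructor, and at the RequestQueue constructor that creates the ExecutorDelivery: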

public ExecutorDelivery(final Handler handler) {

// Make an Executor that just wraps the handler.

mResponsePoster = new Executor() {

@Override

public void execute(Runnable command) {

handler.post(command);

}

};

}

/**

* Creates the worker pool. Processing will not begin until {@link #start()} is called.

*

* @param cache A Cache to use for persisting responses to disk

* @param network A Network interface for performing HTTP requests

* @param threadPoolSize Number of network dispatcher threads to create

*/

public RequestQueue(Cache cache, Network network, int threadPoolSize) {

this(cache, network, threadPoolSize,

new ExecutorDelivery(new Handler(Looper.getMainLooper())));

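}

Now it is clear: execute simply calls handler.post(Runnable), and the handler is created with the Looper obtained from Looper.getMainLooper(). This guarantees that delivery runs on the main thread, so the UI can be updated safely.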
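The same hand-off can be tried outside Android. The sketch below is only an analogy: a single-thread ExecutorService whose thread is named fake-main stands in for the main Looper, and all class and method names are hypothetical rather than Volley's.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicReference;

class MainThreadDeliverySketch {
    /** Posts a callback from a worker thread and reports which thread ran it. */
    static String deliveringThreadName() {
        // Stand-in for the main Looper: one thread that runs posted tasks in order.
        ExecutorService fakeMain =
                Executors.newSingleThreadExecutor(r -> new Thread(r, "fake-main"));

        // Like mResponsePoster: an Executor that only forwards to the "handler".
        Executor responsePoster = command -> fakeMain.execute(command);

        AtomicReference<String> deliveredOn = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);

        // The worker plays the role of a network dispatcher posting a response.
        Thread worker = new Thread(() -> responsePoster.execute(() -> {
            deliveredOn.set(Thread.currentThread().getName());
            done.countDown();
        }), "fake-network-dispatcher");
        worker.start();

        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for delivery", e);
        } finally {
            fakeMain.shutdown();
        }
        return deliveredOn.get();
    }

    public static void main(String[] args) {
        System.out.println("callback ran on: " + deliveringThreadName());
    }
}
```

The callback is created on the worker thread but executed on the thread that owns the executor, which is the property ExecutorDelivery relies on.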

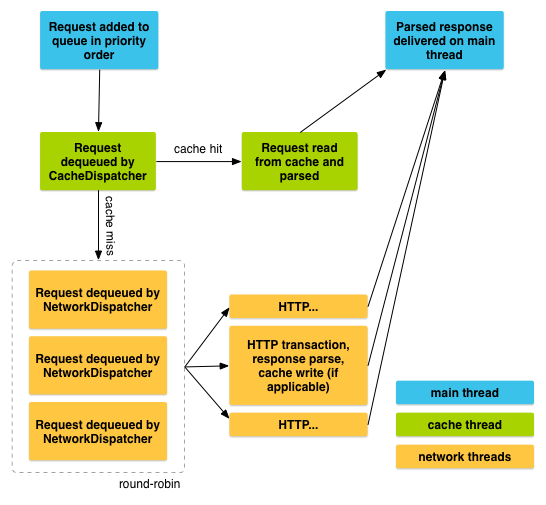

最后,这个流程可以利用官方的一个流程图来展示:

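Blue marks the main thread, green the cache thread, and orange the network threads. We call RequestQueue's add() method on the main thread to add a request. The request first enters the cache queue; if a matching cached result is found, it is read and parsed, and the result is delivered to the main thread. If nothing is found in the cache, the request is placed on the network queue, where the HTTP request is sent, the response is parsed and written to the cache, and the result is delivered to the main thread.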

3. How are request priorities implemented?

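Override the getPriority() method of the request. The available priorities are LOW, NORMAL, HIGH, and IMMEDIATE, and a request that does not override the method gets NORMAL.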
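Since the queues are PriorityBlockingQueue instances, the priority takes effect through the ordering of the requests themselves. The sketch below shows that mechanism in isolation, assuming requests compare by priority first (higher first) and by sequence number second (earlier first); Req and drain are hypothetical names, not Volley's classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

class PrioritySketch {
    enum Priority { LOW, NORMAL, HIGH, IMMEDIATE }

    static final class Req implements Comparable<Req> {
        final String name;
        final Priority priority;
        final int sequence;

        Req(String name, Priority priority, int sequence) {
            this.name = name;
            this.priority = priority;
            this.sequence = sequence;
        }

        /** Higher priority sorts first; equal priorities keep insertion (FIFO) order. */
        @Override
        public int compareTo(Req other) {
            return priority == other.priority
                    ? Integer.compare(sequence, other.sequence)
                    : Integer.compare(other.priority.ordinal(), priority.ordinal());
        }
    }

    /** Adds all requests to a priority queue and returns the names in dispatch order. */
    static List<String> drain(List<Req> requests) {
        PriorityBlockingQueue<Req> queue = new PriorityBlockingQueue<>(requests);
        List<String> order = new ArrayList<>();
        Req next;
        while ((next = queue.poll()) != null) {
            order.add(next.name);
        }
        return order;
    }

    public static void main(String[] args) {
        List<Req> requests = Arrays.asList(
                new Req("a", Priority.NORMAL, 1),
                new Req("b", Priority.LOW, 2),
                new Req("c", Priority.IMMEDIATE, 3),
                new Req("d", Priority.NORMAL, 4));
        System.out.println(drain(requests)); // prints [c, a, d, b]
    }
}
```

The IMMEDIATE request jumps ahead even though it was added third, while the two NORMAL requests stay in the order they were added.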

/**
 * Returns the {@link Priority} of this request; {@link Priority#NORMAL} by default.
 */
public Priority getPriority() {
    return Priority.NORMAL;
}

4. How are requests cancelled?

Requests can be cancelled by tag. Sample code:

1. Define your tag and add it to your requests.

public static final String TAG = "MyTag";
StringRequest stringRequest; // Assume this exists.
RequestQueue mRequestQueue; // Assume this exists.

// Set the tag on the request.
stringRequest.setTag(TAG);

// Add the request to the RequestQueue.
mRequestQueue.add(stringRequest);

2. Cancel all requests carrying that tag in the activity's onStop() method.

@Override
protected void onStop() {
    super.onStop();
    if (mRequestQueue != null) {
        mRequestQueue.cancelAll(TAG);
    }
}

Finally, let's look at how Volley implements HTTP caching.

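First, where is the HTTP request actually sent? In the BasicNetwork class. It implements the Network interface and its performRequest() method, which sends a request and returns the corresponding NetworkResponse: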

public NetworkResponse performRequest(Request<?> request) throws VolleyError;

The concrete implementation is as follows:

@Override

public NetworkResponse performRequest(Request<?> request) throws VolleyError {

long requestStart = SystemClock.elapsedRealtime();

while (true) {

HttpResponse httpResponse = null;

byte[] responseContents = null;

Map<String, String> responseHeaders = Collections.emptyMap();

try {

// Gather headers.

Map<String, String> headers = new HashMap<String, String>();

addCacheHeaders(headers, request.getCacheEntry());

httpResponse = mHttpStack.performRequest(request, headers);

StatusLine statusLine = httpResponse.getStatusLine();

int statusCode = statusLine.getStatusCode();

responseHeaders = convertHeaders(httpResponse.getAllHeaders());

// Handle cache validation.

if (statusCode == HttpStatus.SC_NOT_MODIFIED) {

Entry entry = request.getCacheEntry();

if (entry == null) {

return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, null,

responseHeaders, true,

SystemClock.elapsedRealtime() - requestStart);

}

// A HTTP 304 response does not have all header fields. We

// have to use the header fields from the cache entry plus

// the new ones from the response.

// http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec10.3.5

entry.responseHeaders.putAll(responseHeaders);

return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, entry.data,

entry.responseHeaders, true,

SystemClock.elapsedRealtime() - requestStart);

}

// Some responses such as 204s do not have content. We must check.

if (httpResponse.getEntity() != null) {

responseContents = entityToBytes(httpResponse.getEntity());

} else {

// Add 0 byte response as a way of honestly representing a

// no-content request.

responseContents = new byte[0];

}

// if the request is slow, log it.

long requestLifetime = SystemClock.elapsedRealtime() - requestStart;

logSlowRequests(requestLifetime, request, responseContents, statusLine);

if (statusCode < 200 || statusCode > 299) {

throw new IOException();

}

return new NetworkResponse(statusCode, responseContents, responseHeaders, false,

SystemClock.elapsedRealtime() - requestStart);

} catch (SocketTimeoutException e) {

attemptRetryOnException("socket", request, new TimeoutError());

} catch (ConnectTimeoutException e) {

attemptRetryOnException("connection", request, new TimeoutError());

} catch (MalformedURLException e) {

throw new RuntimeException("Bad URL " + request.getUrl(), e);

} catch (IOException e) {

int statusCode = 0;

NetworkResponse networkResponse = null;

if (httpResponse != null) {

statusCode = httpResponse.getStatusLine().getStatusCode();

} else {

throw new NoConnectionError(e);

}

VolleyLog.e("Unexpected response code %d for %s", statusCode, request.getUrl());

if (responseContents != null) {

networkResponse = new NetworkResponse(statusCode, responseContents,

responseHeaders, false, SystemClock.elapsedRealtime() - requestStart);

if (statusCode == HttpStatus.SC_UNAUTHORIZED ||

statusCode == HttpStatus.SC_FORBIDDEN) {

attemptRetryOnException("auth",

request, new AuthFailureError(networkResponse));

} else {

// TODO: Only throw ServerError for 5xx status codes.

throw new ServerError(networkResponse);

}

} else {

throw new NetworkError(networkResponse);

}

}

}

}

private void addCacheHeaders(Map<String, String> headers, Cache.Entry entry) {

// If there's no cache entry, we're done.

if (entry == null) {

return;

}

if (entry.etag != null) {

headers.put("If-None-Match", entry.etag);

}

if (entry.lastModified > 0) {

Date refTime = new Date(entry.lastModified);

headers.put("If-Modified-Since", DateUtils.formatDate(refTime));

}

}

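As you can see, before the request is sent, addCacheHeaders(headers, request.getCacheEntry()) is called. If the request has no cache entry, it does nothing. If there is one, it takes the ETag and the Last-Modified time from the entry and puts them into the If-None-Match and If-Modified-Since headers respectively. The resulting headers are passed to mHttpStack in

httpResponse = mHttpStack.performRequest(request, headers);

which sends them to the server together with the request. In other words, the ETag returned by the previous response and the last modification time are sent back to the server. On receiving the request, the server parses and validates these headers. If the resource has not been modified since that time, or its ETag has not changed, the server returns a 304 status, meaning the cached copy is still fresh and can be used directly. The client then only needs to wrap the cached entry in a NetworkResponse and return it. For other 2xx statuses, the byte[] data from the returned httpResponse is wrapped in a NetworkResponse and returned.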

The response headers (responseHeaders) returned in httpResponse are placed in the NetworkResponse as well.
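The two halves of this revalidation step, building the conditional headers and merging headers on a 304, can be sketched without any HTTP library. The names below are hypothetical, and java.time's RFC 1123 formatter is used here in place of the DateUtils helper that appears in the Volley listing.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.HashMap;
import java.util.Map;

class ConditionalHeadersSketch {
    /** Builds the validators for a cached entry; empty if there is nothing to validate. */
    static Map<String, String> cacheHeaders(String etag, long lastModifiedMs) {
        Map<String, String> headers = new HashMap<>();
        if (etag != null) {
            headers.put("If-None-Match", etag);
        }
        if (lastModifiedMs > 0) {
            String httpDate = DateTimeFormatter.RFC_1123_DATE_TIME.format(
                    Instant.ofEpochMilli(lastModifiedMs).atZone(ZoneOffset.UTC));
            headers.put("If-Modified-Since", httpDate);
        }
        return headers;
    }

    /** On a 304, the cached headers are kept and overlaid with the new ones. */
    static Map<String, String> mergeOnNotModified(Map<String, String> cached,
                                                  Map<String, String> fresh) {
        Map<String, String> merged = new HashMap<>(cached);
        merged.putAll(fresh);
        return merged;
    }

    public static void main(String[] args) {
        System.out.println(cacheHeaders("\"abc123\"", 86_400_000L));

        Map<String, String> cached = new HashMap<>();
        cached.put("Content-Type", "text/html");
        cached.put("Date", "old");
        Map<String, String> fresh = new HashMap<>();
        fresh.put("Date", "new");
        System.out.println(mergeOnNotModified(cached, fresh));
    }
}
```

The merge matters because a 304 response usually carries only a few headers; without the cached ones, fields such as Content-Type would be lost when the cached body is re-delivered.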

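The code above revalidates expired or stale cache entries. So how is it decided whether a response should be cached at all? That happens mainly in the HttpHeaderParser class, in its parseCacheHeaders() method: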

/**

* Extracts a {@link Cache.Entry} from a {@link NetworkResponse}.

*

* @param response The network response to parse headers from

* @return a cache entry for the given response, or null if the response is not cacheable.

*/

public static Cache.Entry parseCacheHeaders(NetworkResponse response) {

long now = System.currentTimeMillis();

Map<String, String> headers = response.headers;

long serverDate = 0;

long lastModified = 0;

long serverExpires = 0;

long softExpire = 0;

long finalExpire = 0;

long maxAge = 0;

long staleWhileRevalidate = 0;

boolean hasCacheControl = false;

boolean mustRevalidate = false;

String serverEtag = null;

String headerValue;

headerValue = headers.get("Date");

if (headerValue != null) {

serverDate = parseDateAsEpoch(headerValue);

}

headerValue = headers.get("Cache-Control");

if (headerValue != null) {

hasCacheControl = true;

String[] tokens = headerValue.split(",");

for (int i = 0; i < tokens.length; i++) {

String token = tokens[i].trim();

if (token.equals("no-cache") || token.equals("no-store")) {

return null;

} else if (token.startsWith("max-age=")) {

try {

maxAge = Long.parseLong(token.substring(8));

} catch (Exception e) {

}

} else if (token.startsWith("stale-while-revalidate=")) {

try {

staleWhileRevalidate = Long.parseLong(token.substring(23));

} catch (Exception e) {

}

} else if (token.equals("must-revalidate") || token.equals("proxy-revalidate")) {

mustRevalidate = true;

}

}

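}

(Only the first part of the method, the header parsing, is shown above.) The argument is the wrapped NetworkResponse returned earlier, and the return value is a Cache.Entry. Cache is an interface and Entry is its static nested class, representing a cached entry. Its fields and methods include: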

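byte[] data: the data returned by the request (the response body)

String etag: the ETag from the HTTP response headers, used to validate cache freshness

long serverDate: the time the response was generated, from the HTTP response headers

long ttl: the time at which the cache entry expires

long softTtl: the time until which the cache entry is considered fresh

boolean isExpired(): whether the entry has expired; an expired entry must not be used

boolean refreshNeeded(): whether the entry is stale; a stale entry must be sent to the server for a freshness check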

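In the method body, the cache entry is built from the headers and body of the NetworkResponse. The headers are parsed first; if the Cache-Control header contains no-cache or no-store, the response is not cached and null is returned. Otherwise:

(1). The response generation time is read from the Date header.

(2). The entity tag is read from the ETag header.

(3). The expiry time and the freshness time of the cache entry are computed from the Cache-Control and Expires headers.

Finally a Cache.Entry is built and returned.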
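Step (3) can be sketched as follows for the Cache-Control case. The token parsing follows the listing shown earlier; the arithmetic that turns max-age and stale-while-revalidate into the two timestamps is not part of that listing, so treat it as an assumption about how the values are combined. The class and method names are hypothetical, and the Expires fallback is left out.

```java
class CacheTtlSketch {
    private static long parseLongOrZero(String text) {
        try {
            return Long.parseLong(text);
        } catch (NumberFormatException e) {
            return 0; // a malformed value is treated as absent
        }
    }

    /**
     * Returns {softTtl, ttl} in epoch millis for the given Cache-Control value,
     * or null if the response must not be cached.
     */
    static long[] expiry(String cacheControl, long now) {
        long maxAge = 0;
        long staleWhileRevalidate = 0;
        boolean mustRevalidate = false;

        for (String raw : cacheControl.split(",")) {
            String token = raw.trim();
            if (token.equals("no-cache") || token.equals("no-store")) {
                return null;
            } else if (token.startsWith("max-age=")) {
                maxAge = parseLongOrZero(token.substring(8));
            } else if (token.startsWith("stale-while-revalidate=")) {
                staleWhileRevalidate = parseLongOrZero(token.substring(23));
            } else if (token.equals("must-revalidate") || token.equals("proxy-revalidate")) {
                mustRevalidate = true;
            }
        }

        // Assumption: fresh for max-age seconds, then usable (while refreshing)
        // for stale-while-revalidate more seconds unless revalidation is mandatory.
        long softTtl = now + maxAge * 1000;
        long ttl = mustRevalidate ? softTtl : softTtl + staleWhileRevalidate * 1000;
        return new long[] { softTtl, ttl };
    }

    public static void main(String[] args) {
        long[] result = expiry("max-age=60, stale-while-revalidate=30", 1000);
        System.out.println("softTtl=" + result[0] + ", ttl=" + result[1]);
        System.out.println(expiry("no-store", 1000) == null ? "not cacheable" : "cacheable");
    }
}
```

With these two timestamps, isExpired() and refreshNeeded() reduce to comparing ttl and softTtl against the current time, which is what the cache thread's decision logic relies on.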
