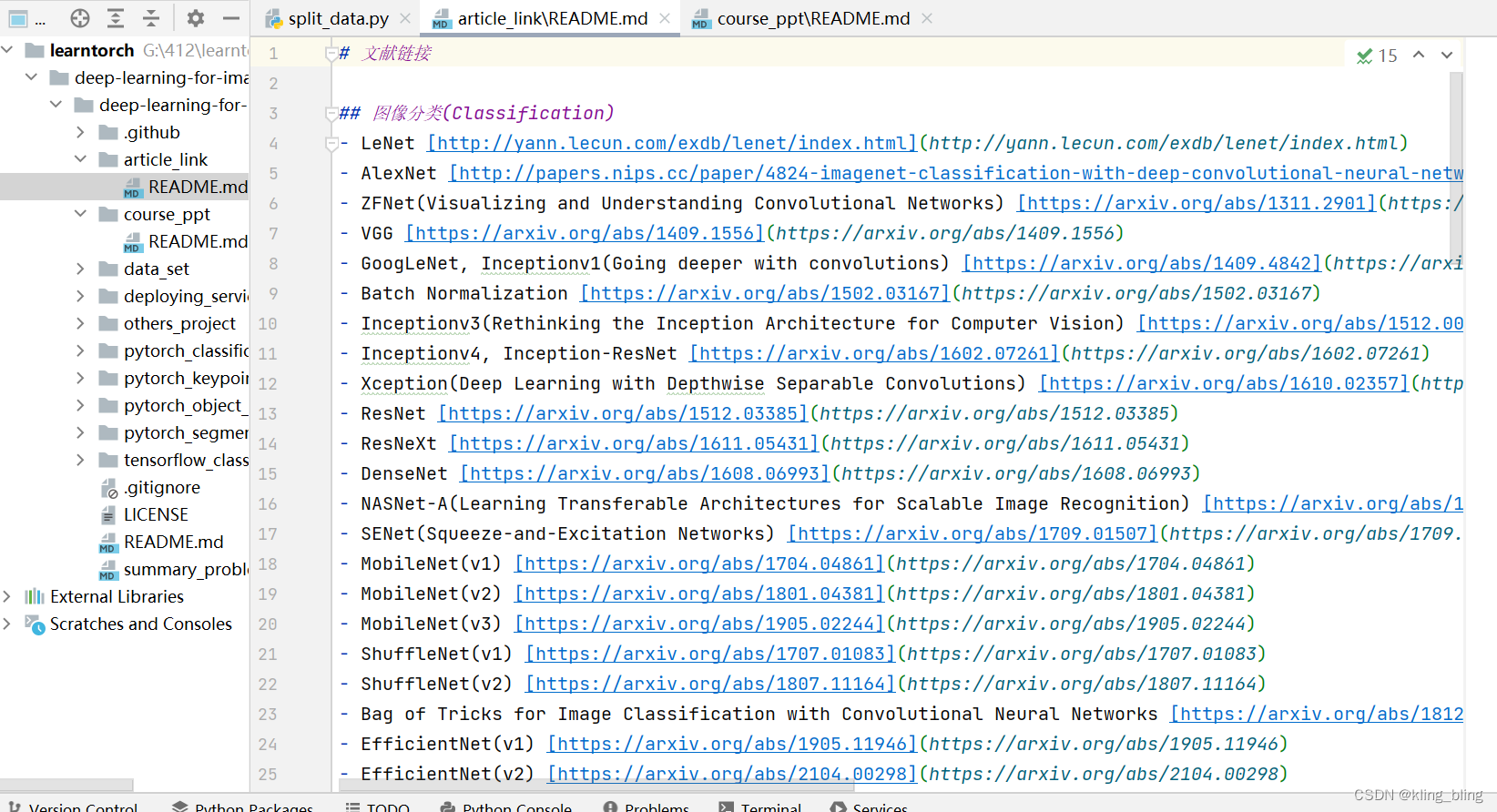

The deep-learning-for-image-process project can be downloaded from GitHub.

First, look at the README. The repository covers common applications such as image classification, object detection, and segmentation.

Start with `data_set`. The dataset is the flower classification dataset, and `split_data.py` splits it into a training set and a validation set.

```python
import os
from shutil import copy, rmtree
import random


def mk_file(file_path: str):
    if os.path.exists(file_path):
        # If the folder already exists, delete it first and then recreate it
        rmtree(file_path)
    os.makedirs(file_path)


def main():
    # Make the random split reproducible
    random.seed(0)

    # Put 10% of the data into the validation set
    split_rate = 0.1

    # Point to your extracted flower_photos folder
    cwd = os.getcwd()
    data_root = os.path.join(cwd, "flower_data")
    origin_flower_path = os.path.join(data_root, "flower_photos")
    assert os.path.exists(origin_flower_path), "path '{}' does not exist.".format(origin_flower_path)

    flower_class = [cla for cla in os.listdir(origin_flower_path)
                    if os.path.isdir(os.path.join(origin_flower_path, cla))]

    # Create the folder that holds the training set
    train_root = os.path.join(data_root, "train")
    mk_file(train_root)
    for cla in flower_class:
        # Create one folder per class
        mk_file(os.path.join(train_root, cla))

    # Create the folder that holds the validation set
    val_root = os.path.join(data_root, "val")
    mk_file(val_root)
    for cla in flower_class:
        # Create one folder per class
        mk_file(os.path.join(val_root, cla))

    for cla in flower_class:
        cla_path = os.path.join(origin_flower_path, cla)
        images = os.listdir(cla_path)
        num = len(images)
        # Randomly sample the images that go to the validation set
        eval_index = random.sample(images, k=int(num*split_rate))
        for index, image in enumerate(images):
            if image in eval_index:
                # Copy files assigned to the validation set into the matching folder
                image_path = os.path.join(cla_path, image)
                new_path = os.path.join(val_root, cla)
                copy(image_path, new_path)
            else:
                # Copy files assigned to the training set into the matching folder
                image_path = os.path.join(cla_path, image)
                new_path = os.path.join(train_root, cla)
                copy(image_path, new_path)
            print("\r[{}] processing [{}/{}]".format(cla, index+1, num), end="")  # processing bar
        print()

    print("processing done!")


if __name__ == '__main__':
    main()
```

In `mk_file`, an existing path is first deleted with `rmtree()`, and the folder is then (re)created with `os.makedirs()`.

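In `main()`:

`split_rate = 0.1` assigns 10% of the data to the validation set.

`os.getcwd()` returns the current working directory.

You can also change the working directory with `os.chdir()`.

A commonly used path helper: `os.path.join(x, y)` joins path components into one path.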

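After downloading and splitting, the folder looks like this:

```
├── flower_data
    ├── flower_photos (extracted dataset folder, 3670 samples)
    ├── train (generated training set, 3306 samples)
    └── val (generated validation set, 364 samples)
```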
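The sample counts in the tree come from the per-class arithmetic `int(num * split_rate)` in `split_data.py`. The sketch below reproduces that arithmetic. The per-class folder sizes are an assumption, since this post does not state them. With these values, the arithmetic reproduces the 3670 / 3306 / 364 figures above.

```python
# Assumed per-class image counts for flower_photos (not read from disk)
class_counts = {"daisy": 633, "dandelion": 898, "roses": 641, "sunflowers": 699, "tulips": 799}
split_rate = 0.1

# split_data.py sends int(num * split_rate) images of each class to validation
val_counts = {cla: int(num * split_rate) for cla, num in class_counts.items()}
total = sum(class_counts.values())
val_total = sum(val_counts.values())
train_total = total - val_total
print(total, train_total, val_total)  # 3670 3306 364
```

Because `int()` truncates, every class contributes slightly less than 10%. That is why the validation set has 364 images rather than 367.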

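```python
random.seed(0)
data_root = os.path.join(cwd, 'flower_data')
or_fl_path = os.path.join(data_root, "flower_photos")
```

`random.seed(0)` makes the split reproducible, and `or_fl_path` points to the folder where the images are stored.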

How `assert` works: `assert x, y` is roughly equivalent to `if not x: raise AssertionError(y)`.
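Here is a minimal sketch of that equivalence, using a hypothetical path. An `assert` with a message behaves like an `if` that raises `AssertionError` with the same message.

```python
def check_with_assert(path_exists: bool, path: str) -> None:
    # assert condition, message
    assert path_exists, "path '{}' does not exist.".format(path)


def check_with_if(path_exists: bool, path: str) -> None:
    # Roughly what the assert statement does (when assertions are enabled)
    if not path_exists:
        raise AssertionError("path '{}' does not exist.".format(path))


messages = []
for fn in (check_with_assert, check_with_if):
    try:
        fn(False, "flower_data/flower_photos")
    except AssertionError as e:
        messages.append(str(e))
print(messages)
```

There is one difference. Python removes assertions when run with `-O`, so checks that must always run are safer as an explicit `if`/`raise`.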

`os.path.isdir()` checks whether the given path is an existing directory.

Build the `flower_class` list, one entry per class subfolder:

```python
flower_class = [cla for cla in os.listdir(or_fl_path) if os.path.isdir(os.path.join(or_fl_path, cla))]
```

Record the image file names of each class in a list.

Then split the data in each folder into a training set and a validation set:

```python
val_root = os.path.join(data_root, "val")
mk_file(val_root)
for cla in flower_class:
    cla_path = os.path.join(or_fl_path, cla)
    images = os.listdir(cla_path)
    num = len(images)
    eval_index = random.sample(images, k=int(num * split_rate))
```

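`random.sample(population, k)` returns a new list of `k` elements drawn at random from the sequence. It does not modify the original sequence in place.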
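This small sketch uses hypothetical file names to show the two properties `split_data.py` relies on. With the same seed, `random.sample` returns the same elements, and it leaves the source list unchanged (sampling is without replacement).

```python
import random

images = ["a.jpg", "b.jpg", "c.jpg", "d.jpg", "e.jpg"]  # hypothetical file names

random.seed(0)
first = random.sample(images, k=2)
random.seed(0)
second = random.sample(images, k=2)

print(first == second)       # True: same seed, same sample
print(images)                # original list and order unchanged
print(len(set(first)) == 2)  # True: no element is drawn twice
```

Since `image in eval_index` scans a list, wrapping the sample in `set(...)` would make each lookup O(1). With a few hundred images per class the difference is negligible.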

`enumerate` numbers the images (the index is used for the progress display):

```python
for index, image in enumerate(images):
    if image in eval_index:  # assigned to the validation set
        image_path = os.path.join(cla_path, image)
        new_path = os.path.join(val_root, cla)
        copy(image_path, new_path)  # shutil.copy copies the file
    else:  # assigned to the training set
        image_path = os.path.join(cla_path, image)
        new_path = os.path.join(train_root, cla)
        copy(image_path, new_path)
```

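Start learning with classification.

Take ConvNeXt as an example. Each project generally follows the same layout: model, dataset, train, predict, utils. Look at the dataset first.

dataset (`my_dataset.py`):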

```python
from PIL import Image
import torch
from torch.utils.data import Dataset


class MyDataSet(Dataset):
    """Custom dataset"""

    def __init__(self, images_path: list, images_class: list, transform=None):
        self.images_path = images_path
        self.images_class = images_class
        self.transform = transform

    def __len__(self):
        return len(self.images_path)

    def __getitem__(self, item):
        img = Image.open(self.images_path[item])
        # 'RGB' is a color image, 'L' is a grayscale image
        if img.mode != 'RGB':
            raise ValueError("image: {} isn't RGB mode.".format(self.images_path[item]))
        label = self.images_class[item]

        if self.transform is not None:
            img = self.transform(img)

        return img, label

    @staticmethod
    def collate_fn(batch):
        # For the official default_collate implementation, see
        # https://github.com/pytorch/pytorch/blob/67b7e751e6b5931a9f45274653f4f653a4e6cdf6/torch/utils/data/_utils/collate.py
        images, labels = tuple(zip(*batch))

        images = torch.stack(images, dim=0)
        labels = torch.as_tensor(labels)
        return images, labels
```

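`MyDataSet(Dataset)` inherits from the `Dataset` class in `torch.utils.data`. Its constructor takes the image paths, the image classes (labels), and an optional `transform`. `__len__` returns the number of images.

PIL is a library dedicated to image handling. `__getitem__` returns one image together with its label:

```python
img = Image.open(self.images_path[item])
if img.mode != 'RGB':
    raise ValueError("image: {} isn't RGB mode.".format(self.images_path[item]))
label = self.images_class[item]
if self.transform is not None:
    img = self.transform(img)
return img, label
```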

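`collate_fn` packs a list of samples into one batch:

```python
def collate_fn(batch):
    images, labels = tuple(zip(*batch))
```

`batch` is a list of `(img, label)` pairs. `*batch` unpacks that list into separate arguments to `zip`, which regroups them into one tuple of all images and one tuple of all labels (a transpose). It does not build an `{images: labels}` dict.

`torch.stack` joins a sequence of tensors along a new dimension. Several 2-D tensors become one 3-D tensor, several 3-D tensors become one 4-D tensor, and so on.

```python
    images = torch.stack(images, dim=0)
    labels = torch.as_tensor(labels)
    return images, labels
```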
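Here is a concrete look at `zip(*batch)`. The batch is hypothetical, and strings stand in for image tensors so the example runs without PyTorch.

```python
# A batch as the DataLoader hands it to collate_fn: a list of (image, label) pairs
batch = [("img0", 3), ("img1", 0), ("img2", 4)]

# zip(*batch) is zip(("img0", 3), ("img1", 0), ("img2", 4)): a transpose
images, labels = tuple(zip(*batch))
print(images)  # ('img0', 'img1', 'img2')
print(labels)  # (3, 0, 4)
```

In `MyDataSet.collate_fn`, the resulting `images` tuple holds N tensors of shape `[C, H, W]`. `torch.stack(images, dim=0)` turns them into one tensor of shape `[N, C, H, W]`, and `torch.as_tensor(labels)` turns the label tuple into a 1-D tensor.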

Next, look at `train.py`:

```python
import os
import argparse

import torch
import torch.optim as optim
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

from my_dataset import MyDataSet
from model import convnext_tiny as create_model
from utils import read_split_data, create_lr_scheduler, get_params_groups, train_one_epoch, evaluate


def main(args):
    device = torch.device(args.device if torch.cuda.is_available() else "cpu")
    print(f"using {device} device.")

    if os.path.exists("./weights") is False:
        os.makedirs("./weights")

    tb_writer = SummaryWriter()

    train_images_path, train_images_label, val_images_path, val_images_label = read_split_data(args.data_path)

    img_size = 224
    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(img_size),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(int(img_size * 1.143)),
                                   transforms.CenterCrop(img_size),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

    # Instantiate the training dataset
    train_dataset = MyDataSet(images_path=train_images_path,
                              images_class=train_images_label,
                              transform=data_transform["train"])

    # Instantiate the validation dataset
    val_dataset = MyDataSet(images_path=val_images_path,
                            images_class=val_images_label,
                            transform=data_transform["val"])

    batch_size = args.batch_size
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))
    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size,
                                               shuffle=True,
                                               pin_memory=True,
                                               num_workers=nw,
                                               collate_fn=train_dataset.collate_fn)

    val_loader = torch.utils.data.DataLoader(val_dataset,
                                             batch_size=batch_size,
                                             shuffle=False,
                                             pin_memory=True,
                                             num_workers=nw,
                                             collate_fn=val_dataset.collate_fn)

    model = create_model(num_classes=args.num_classes).to(device)

    if args.weights != "":
        assert os.path.exists(args.weights), "weights file: '{}' not exist.".format(args.weights)
        weights_dict = torch.load(args.weights, map_location=device)["model"]
        # Delete the classification-head weights (their shape depends on the number of classes)
        for k in list(weights_dict.keys()):
            if "head" in k:
                del weights_dict[k]
        print(model.load_state_dict(weights_dict, strict=False))

    if args.freeze_layers:
        for name, para in model.named_parameters():
            # Freeze all weights except the head
            if "head" not in name:
                para.requires_grad_(False)
            else:
                print("training {}".format(name))

    # pg = [p for p in model.parameters() if p.requires_grad]
    pg = get_params_groups(model, weight_decay=args.wd)
    optimizer = optim.AdamW(pg, lr=args.lr, weight_decay=args.wd)
    lr_scheduler = create_lr_scheduler(optimizer, len(train_loader), args.epochs,
                                       warmup=True, warmup_epochs=1)

    best_acc = 0.
    for epoch in range(args.epochs):
        # train
        train_loss, train_acc = train_one_epoch(model=model,
                                                optimizer=optimizer,
                                                data_loader=train_loader,
                                                device=device,
                                                epoch=epoch,
                                                lr_scheduler=lr_scheduler)

        # validate
        val_loss, val_acc = evaluate(model=model,
                                     data_loader=val_loader,
                                     device=device,
                                     epoch=epoch)

        tags = ["train_loss", "train_acc", "val_loss", "val_acc", "learning_rate"]
        tb_writer.add_scalar(tags[0], train_loss, epoch)
        tb_writer.add_scalar(tags[1], train_acc, epoch)
        tb_writer.add_scalar(tags[2], val_loss, epoch)
        tb_writer.add_scalar(tags[3], val_acc, epoch)
        tb_writer.add_scalar(tags[4], optimizer.param_groups[0]["lr"], epoch)

        if best_acc < val_acc:
            torch.save(model.state_dict(), "./weights/best_model.pth")
            best_acc = val_acc


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--num_classes', type=int, default=5)
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--batch-size', type=int, default=8)
    parser.add_argument('--lr', type=float, default=5e-4)
    parser.add_argument('--wd', type=float, default=5e-2)

    # Root directory of the dataset
    # https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz
    parser.add_argument('--data-path', type=str,
                        default="/data/flower_photos")

    # Path to the pretrained weights; set it to an empty string to skip loading
    # Link: https://pan.baidu.com/s/1aNqQW4n_RrUlWUBNlaJRHA password: i83t
    parser.add_argument('--weights', type=str, default='./convnext_tiny_1k_224_ema.pth',
                        help='initial weights path')
    # Whether to freeze all weights except the head
    parser.add_argument('--freeze-layers', type=bool, default=False)
    parser.add_argument('--device', default='cuda:0', help='device id (i.e. 0 or 0,1 or cpu)')

    opt = parser.parse_args()

    main(opt)
```

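`main` receives `args`, the command-line arguments parsed at the bottom of the file (this makes adjusting parameters later more convenient). It first chooses whether to run on the CPU or the GPU:

```python
device = torch.device(args.device if torch.cuda.is_available() else "cpu")
```

PS: setting up the GPU environment is really a pain.

Create the folder where the model weights are saved:

```python
if os.path.exists("./weights") is False:
    os.makedirs("./weights")
```

`tb_writer = SummaryWriter()` creates the TensorBoard writer so that `add_scalar()` can be called later.

```python
train_images_path, train_images_label, val_images_path, val_images_label = read_split_data(args.data_path)
```

`read_split_data` is defined in utils and covered later. Here it is only called to split the data into training and validation sets.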

`img_size = 224` gives every image the same input size, which makes batching straightforward.

`data_transform` is another very common pattern. Its parameters can be tuned, and storing the pipelines in a dict keeps `train` and `val` separate:

```python
data_transform = {
    "train": transforms.Compose([transforms.RandomResizedCrop(img_size),
                                 transforms.RandomHorizontalFlip(),
                                 transforms.ToTensor(),
                                 transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
    "val": transforms.Compose([transforms.Resize(int(img_size * 1.143)),
                               transforms.CenterCrop(img_size),
                               transforms.ToTensor(),
                               transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}
```
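In the validation pipeline, `transforms.Resize` with a single integer resizes the shorter image side to that length, keeping the aspect ratio. `CenterCrop` then cuts out the central `img_size x img_size` patch. The arithmetic below evaluates the resize factors that appear in `train.py` and `predict.py`.

```python
img_size = 224

resize_val = int(img_size * 1.143)     # factor used in train.py
resize_predict = int(img_size * 1.14)  # factor used in predict.py
crop_fraction = img_size / resize_val  # share of the resized shorter side kept by CenterCrop

print(resize_val, resize_predict, round(crop_fraction, 3))
```

So validation resizes to 256 and keeps the central 224 pixels, a 0.875 crop ratio that is a common ImageNet evaluation setting. `predict.py` uses 1.14, which gives 255. That one-pixel difference is unlikely to matter, but it is worth knowing about.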

Instantiate the training and validation datasets with the class from `my_dataset.py`:

```python
train_dataset = MyDataSet(images_path=train_images_path,
                          images_class=train_images_label,
                          transform=data_transform["train"])

val_dataset = MyDataSet(images_path=val_images_path,
                        images_class=val_images_label,
                        transform=data_transform["val"])
```

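`batch_size = args.batch_size` is the number of samples processed together in one step. A larger batch generally gets through an epoch faster, as long as it fits in GPU memory. Powers of two are a common choice.

```python
nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
```

`nw` is the number of DataLoader worker processes: at most the number of CPU cores, at most 8, and 0 when the batch size is 1.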
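The `nw` expression is easier to read as a function. This sketch reproduces the same rule. The `cpu_count` parameter and the fallback for `os.cpu_count()` returning `None` are additions of this sketch, not part of the original script.

```python
import os
from typing import Optional


def num_workers(batch_size: int, cpu_count: Optional[int] = None) -> int:
    """Same rule as nw in train.py (sketch)."""
    if cpu_count is None:
        # os.cpu_count() may return None; falling back to 1 is an assumption of this sketch
        cpu_count = os.cpu_count() or 1
    return min([cpu_count, batch_size if batch_size > 1 else 0, 8])


print(num_workers(8, cpu_count=4))    # limited by the CPU count
print(num_workers(32, cpu_count=16))  # limited by the hard cap of 8
print(num_workers(1, cpu_count=16))   # 0: data is loaded in the main process
```

With `num_workers=0`, the `DataLoader` loads batches in the main process instead of in background workers.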

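Loading the data with a `DataLoader` is also boilerplate:

```python
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True,
                                           pin_memory=True, num_workers=nw,
                                           collate_fn=train_dataset.collate_fn)
```

`collate_fn` defines how individual samples are merged into a batch. You can pass your own function to get exactly the behavior you want.

`drop_last` (not set here, so it defaults to `False`) controls what happens to the leftover samples when the dataset length is not divisible by `batch_size`. `True` drops the last incomplete batch, and `False` keeps it.

```python
val_loader = torch.utils.data.DataLoader(val_dataset,
                                         batch_size=batch_size,
                                         shuffle=False,
                                         pin_memory=True,
                                         num_workers=nw,
                                         collate_fn=val_dataset.collate_fn)
```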

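```python
model = create_model(num_classes=args.num_classes).to(device)
```

`create_model` selects the required model from `model.py` (here `convnext_tiny`).

If a pretrained weights file is given, load it, but first delete the `head` weights, whose shape depends on the number of classes. `strict=False` lets loading succeed even though those keys are now missing:

```python
if args.weights != "":
    assert os.path.exists(args.weights), "weights file: '{}' not exist.".format(args.weights)
    weights_dict = torch.load(args.weights, map_location=device)["model"]
    for k in list(weights_dict.keys()):
        if "head" in k:
            del weights_dict[k]
    print(model.load_state_dict(weights_dict, strict=False))
```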
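Here is a minimal sketch of the head-removal loop on a plain dict. The keys are a hypothetical subset of a ConvNeXt checkpoint, and strings stand in for tensors. The head has to go because the pretrained head is `nn.Linear(768, 1000)` for ImageNet-1k, while this task needs 5 outputs, so its shapes would not match.

```python
# Hypothetical subset of checkpoint keys; values stand in for tensors
weights_dict = {
    "downsample_layers.0.0.weight": "...",
    "stages.3.2.pwconv2.bias": "...",
    "norm.weight": "...",
    "head.weight": "...",  # shape [1000, 768] for an ImageNet-1k checkpoint
    "head.bias": "...",    # shape [1000]
}

# Iterate over a copy of the keys: deleting from a dict while
# iterating over the dict itself raises RuntimeError
for k in list(weights_dict.keys()):
    if "head" in k:
        del weights_dict[k]

print(sorted(weights_dict))
```

With `strict=False`, `load_state_dict` does not raise for the missing `head.weight` and `head.bias`. Instead, it returns a result that lists them as missing keys, which is what the `print` in `train.py` shows.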

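`if args.freeze_layers:` freezes every layer except `head`.

Frozen layers do not take part in training, so their parameters are not updated. This is typical when starting from an already trained model, as in transfer learning. Freezing greatly speeds up training and shortens training time. It is mostly used for transfer-learning-based training and when different networks are trained separately.

```python
for name, para in model.named_parameters():
    if "head" not in name:
        para.requires_grad_(False)
```

`requires_grad`: `True` if gradients should be computed for the tensor, otherwise `False`. When you create a tensor in PyTorch, you can set `requires_grad=True`; the default is `False`.

`grad_fn`: records how a tensor was produced so that gradients can be computed. For `y = x * 3`, `grad_fn` records that `y` was computed from `x`.

`grad`: after `backward()` has run, `x.grad` holds the gradient of `x`.

Setting `requires_grad` to `False` means those parameters are not learned.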

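```python
pg = get_params_groups(model, weight_decay=args.wd)
```

`get_params_groups` collects the trainable parameters and splits them into a group with weight decay and a group without.

```python
optimizer = optim.AdamW(pg, lr=args.lr, weight_decay=args.wd)
```

Weight decay is a regularizer that helps prevent overfitting.

```python
lr_scheduler = create_lr_scheduler(optimizer, len(train_loader), args.epochs, warmup=True, warmup_epochs=1)
```

This function is described in detail in utils.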
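To see the shape of the schedule built by `create_lr_scheduler`, this sketch copies the inner function `f(x)` from utils into a standalone function and evaluates it at a few steps. The values `num_step=100` and `epochs=10` are hypothetical. `LambdaLR` multiplies the initial learning rate by this factor.

```python
import math


def lr_factor(x, num_step, epochs, warmup=True, warmup_epochs=1,
              warmup_factor=1e-3, end_factor=1e-6):
    # Same formula as f(x) inside create_lr_scheduler in utils.py
    if warmup is False:
        warmup_epochs = 0
    if warmup is True and x <= (warmup_epochs * num_step):
        alpha = float(x) / (warmup_epochs * num_step)
        return warmup_factor * (1 - alpha) + alpha  # linear warmup: warmup_factor -> 1
    current_step = x - warmup_epochs * num_step
    cosine_steps = (epochs - warmup_epochs) * num_step
    # half cosine: 1 -> end_factor
    return ((1 + math.cos(current_step * math.pi / cosine_steps)) / 2) * (1 - end_factor) + end_factor


num_step, epochs, base_lr = 100, 10, 5e-4  # hypothetical: 100 iterations per epoch
for x in (0, 50, 100, 550, 1000):
    print(x, base_lr * lr_factor(x, num_step, epochs))
```

The factor rises linearly from `1e-3` to 1 during the first epoch. It then follows a half cosine down to `end_factor`, passing about 0.5 halfway through the cosine phase. Because `lr_scheduler.step()` is called once per iteration in `train_one_epoch`, `x` counts iterations, not epochs.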

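```python
best_acc = 0.
```

Start training. `train_one_epoch` and `evaluate` are both defined in utils and covered later. The evaluation step is often written directly into `train.py`; keeping it in utils just makes the script more concise.

```python
for epoch in range(args.epochs):
    train_loss, train_acc = train_one_epoch(model=model, optimizer=optimizer, data_loader=train_loader,
                                            device=device, epoch=epoch, lr_scheduler=lr_scheduler)
    val_loss, val_acc = evaluate(model=model, data_loader=val_loader, device=device, epoch=epoch)

    tags = ["train_loss", "train_acc", "val_loss", "val_acc", "learning_rate"]
    tb_writer.add_scalar(tags[0], train_loss, epoch)
    tb_writer.add_scalar(tags[1], train_acc, epoch)
    tb_writer.add_scalar(tags[2], val_loss, epoch)
    tb_writer.add_scalar(tags[3], val_acc, epoch)
    tb_writer.add_scalar(tags[4], optimizer.param_groups[0]["lr"], epoch)

    if best_acc < val_acc:
        torch.save(model.state_dict(), "./weights/best_model.pth")
        best_acc = val_acc
```

The `tb_writer.add_scalar` calls log curves to TensorBoard through `SummaryWriter`. This requires installing an extra package, which may need a VPN depending on your network. For everyday use, plotting with matplotlib is enough.

`best_acc` keeps only the model with the best validation accuracy. You could also save a checkpoint after every epoch.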

`argparse` is a common, simple way to control the whole training run. Just remember the pattern (you can also do without it). Add the parameters you need, typically the data path, number of epochs, number of classes, learning rate, batch size, and device:

```python
if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--num_classes', type=int, default=5)
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--batch-size', type=int, default=8)
    parser.add_argument('--lr', type=float, default=5e-4)
    parser.add_argument('--wd', type=float, default=5e-2)
    parser.add_argument('--data-path', type=str,
                        default="/data/flower_photos")
    # Path to the pretrained weights; set it to an empty string to skip loading
    # Link: https://pan.baidu.com/s/1aNqQW4n_RrUlWUBNlaJRHA password: i83t
    parser.add_argument('--weights', type=str, default='./convnext_tiny_1k_224_ema.pth',
                        help='initial weights path')
    # Whether to freeze all weights except the head
    parser.add_argument('--freeze-layers', type=bool, default=False)
    parser.add_argument('--device', default='cuda:0', help='device id (i.e. 0 or 0,1 or cpu)')
    opt = parser.parse_args()
    main(opt)
```
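This parser has one pitfall: `type=bool` does not parse the words `True` and `False`. argparse calls `bool()` on the raw string, and any non-empty string, including `'False'`, is truthy. The sketch below demonstrates this. `str2bool` and the `--fixed-freeze-layers` option are hypothetical additions for illustration, not part of the original script.

```python
import argparse


def str2bool(value: str) -> bool:
    # Hypothetical helper, not part of the original repo
    if value.lower() in ("yes", "true", "t", "1"):
        return True
    if value.lower() in ("no", "false", "f", "0"):
        return False
    raise argparse.ArgumentTypeError("boolean value expected, got '{}'".format(value))


parser = argparse.ArgumentParser()
parser.add_argument('--freeze-layers', type=bool, default=False)            # as in train.py
parser.add_argument('--fixed-freeze-layers', type=str2bool, default=False)  # sketch of a fix

args = parser.parse_args(['--freeze-layers', 'False', '--fixed-freeze-layers', 'False'])
print(args.freeze_layers)        # True, because bool('False') is True
print(args.fixed_freeze_layers)  # False
```

Another common option is `action='store_true'`, which leaves the flag `False` unless it appears on the command line. Also note that argparse turns dashes into underscores, so `--batch-size` is read as `args.batch_size`.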

After that, look at `predict.py`:

```python
import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

from model import convnext_tiny as create_model


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print(f"using {device} device.")

    num_classes = 5
    img_size = 224
    data_transform = transforms.Compose(
        [transforms.Resize(int(img_size * 1.14)),
         transforms.CenterCrop(img_size),
         transforms.ToTensor(),
         transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

    # load image
    img_path = "../tulip.jpg"
    assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
    img = Image.open(img_path)
    plt.imshow(img)
    # [N, C, H, W]
    img = data_transform(img)
    # expand batch dimension
    img = torch.unsqueeze(img, dim=0)

    # read class_indict
    json_path = './class_indices.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)

    with open(json_path, "r") as f:
        class_indict = json.load(f)

    # create model
    model = create_model(num_classes=num_classes).to(device)
    # load model weights
    model_weight_path = "./weights/best_model.pth"
    model.load_state_dict(torch.load(model_weight_path, map_location=device))
    model.eval()
    with torch.no_grad():
        # predict class
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        predict_cla = torch.argmax(predict).numpy()

    print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],
                                                 predict[predict_cla].numpy())
    plt.title(print_res)
    for i in range(len(predict)):
        print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                                  predict[i].numpy()))
    plt.show()


if __name__ == '__main__':
    main()
```

In `main`, first choose the device:

```python
def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
```

There are 5 classes in total, and the input size is 224:

```python
num_classes = 5
img_size = 224
data_transform = transforms.Compose(
    [transforms.Resize(int(img_size * 1.14)), transforms.CenterCrop(img_size),
     transforms.ToTensor(), transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

img_path = "../tulip.jpg"
assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
```

Make it a habit to add an `assert` like this every time you use a path.

```python
img = Image.open(img_path)
plt.imshow(img)
img = data_transform(img)
img = torch.unsqueeze(img, dim=0)
```

The test image does not get the random augmentation used for training. It goes through essentially the same deterministic preprocessing as the validation set: resize, center crop, `ToTensor`, `Normalize`. `torch.unsqueeze(img, dim=0)` then adds the batch dimension, turning `[C, H, W]` into `[1, C, H, W]`.

Read the JSON file that maps class indices to class names, then create the model and load the trained weights:

```python
json_path = './class_indices.json'
assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)
with open(json_path, "r") as f:  # read the file
    class_indict = json.load(f)

model = create_model(num_classes=num_classes).to(device)
model_weight_path = "./weights/best_model.pth"
model.load_state_dict(torch.load(model_weight_path, map_location=device))
model.eval()

with torch.no_grad():
    output = torch.squeeze(model(img.to(device))).cpu()
    predict = torch.softmax(output, dim=0)
    predict_cla = torch.argmax(predict).numpy()
```

Then just print the results:

```python
for i in range(len(predict)):
    print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                              predict[i].numpy()))
plt.show()
```
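The `torch.softmax` and `torch.argmax` steps in `predict.py` can be sketched in pure Python. The logits are hypothetical. The index-to-name mapping assumes the alphabetically sorted folder names that `read_split_data` writes to `class_indices.json`.

```python
import math

logits = [1.0, 0.5, -0.2, 0.1, 3.0]  # hypothetical 5-class head output for one image
class_indict = {"0": "daisy", "1": "dandelion", "2": "roses", "3": "sunflowers", "4": "tulips"}

# softmax: exponentiate, then normalize so the probabilities sum to 1
# (subtracting the max first is the usual trick for numerical stability)
m = max(logits)
exps = [math.exp(v - m) for v in logits]
probs = [e / sum(exps) for e in exps]

# argmax: index of the largest probability, like torch.argmax(predict)
predict_cla = max(range(len(probs)), key=lambda i: probs[i])
print("class: {} prob: {:.3}".format(class_indict[str(predict_cla)], probs[predict_cla]))
```

The class is looked up with `str(predict_cla)` because JSON object keys are always strings.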

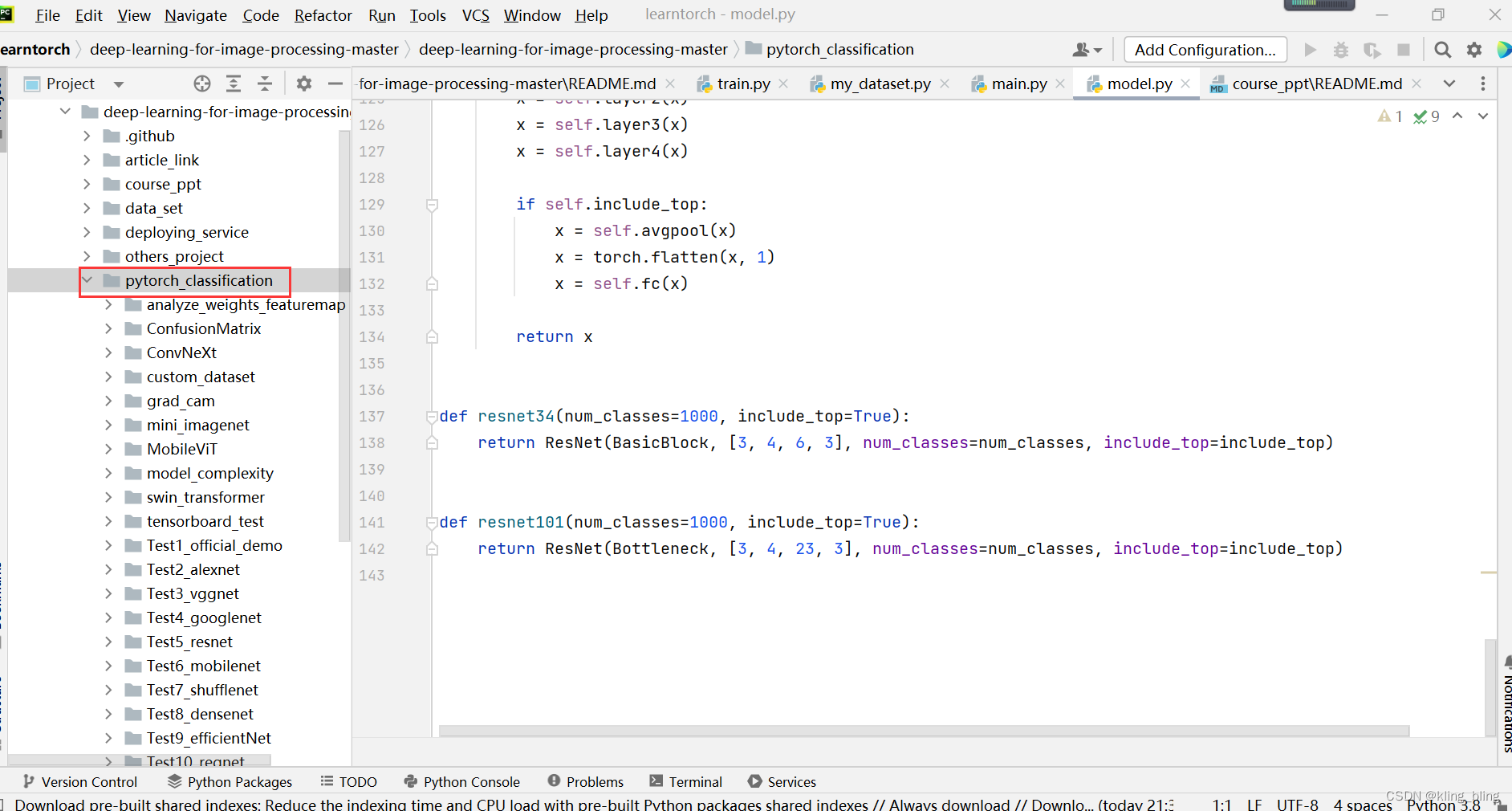

Next comes the model. This part is optional: it requires some background in network architectures, and ready-made model code usually exists. You can of course write your own if you need to.

First, an introduction to ConvNeXt.

Its basic idea is similar to ResNet, and it is designed to compete with Swin Transformer.

It simply adjusts and improves the classic ResNet-50/200 networks using some advanced ideas from Transformer networks. By bringing recent Transformer ideas and techniques into existing CNN modules, it combines the strengths of both kinds of network and improves CNN performance. The main design changes are:

1. Macro design
2. ResNeXt
3. Inverted bottleneck
4. Large kernel size
5. Various layer-wise micro designs

ConvNeXt uses DropPath (`drop_path`), a regularization technique similar in spirit to Dropout. It randomly "drops" sub-paths of multi-branch structures in a deep model; here, that is the residual branch of each block. This helps prevent overfitting, improves model performance, and helps against the degradation problem of very deep networks.

Dropout: randomly removes connections between neurons.

DropPath: randomly drops whole sub-paths of multi-branch structures in a deep model.

```python
"""
original code from facebook research:
https://github.com/facebookresearch/ConvNeXt
"""

import torch
import torch.nn as nn
import torch.nn.functional as F


def drop_path(x, drop_prob: float = 0., training: bool = False):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)
    random_tensor.floor_()  # binarize
    output = x.div(keep_prob) * random_tensor
    return output


class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """
    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)


class LayerNorm(nn.Module):
    r""" LayerNorm that supports two data formats: channels_last (default) or channels_first.
    The ordering of the dimensions in the inputs. channels_last corresponds to inputs with
    shape (batch_size, height, width, channels) while channels_first corresponds to inputs
    with shape (batch_size, channels, height, width).
    """

    def __init__(self, normalized_shape, eps=1e-6, data_format="channels_last"):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(normalized_shape), requires_grad=True)
        self.bias = nn.Parameter(torch.zeros(normalized_shape), requires_grad=True)
        self.eps = eps
        self.data_format = data_format
        if self.data_format not in ["channels_last", "channels_first"]:
            raise ValueError(f"not support data format '{self.data_format}'")
        self.normalized_shape = (normalized_shape,)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if self.data_format == "channels_last":
            return F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)
        elif self.data_format == "channels_first":
            # [batch_size, channels, height, width]
            mean = x.mean(1, keepdim=True)
            var = (x - mean).pow(2).mean(1, keepdim=True)
            x = (x - mean) / torch.sqrt(var + self.eps)
            x = self.weight[:, None, None] * x + self.bias[:, None, None]
            return x


class Block(nn.Module):
    r""" ConvNeXt Block. There are two equivalent implementations:
    (1) DwConv -> LayerNorm (channels_first) -> 1x1 Conv -> GELU -> 1x1 Conv; all in (N, C, H, W)
    (2) DwConv -> Permute to (N, H, W, C); LayerNorm (channels_last) -> Linear -> GELU -> Linear; Permute back
    We use (2) as we find it slightly faster in PyTorch

    Args:
        dim (int): Number of input channels.
        drop_rate (float): Stochastic depth rate. Default: 0.0
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
    """
    def __init__(self, dim, drop_rate=0., layer_scale_init_value=1e-6):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)  # depthwise conv
        self.norm = LayerNorm(dim, eps=1e-6, data_format="channels_last")
        self.pwconv1 = nn.Linear(dim, 4 * dim)  # pointwise/1x1 convs, implemented with linear layers
        self.act = nn.GELU()
        self.pwconv2 = nn.Linear(4 * dim, dim)
        self.gamma = nn.Parameter(layer_scale_init_value * torch.ones((dim,)),
                                  requires_grad=True) if layer_scale_init_value > 0 else None
        self.drop_path = DropPath(drop_rate) if drop_rate > 0. else nn.Identity()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        shortcut = x
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)  # [N, C, H, W] -> [N, H, W, C]
        x = self.norm(x)
        x = self.pwconv1(x)
        x = self.act(x)
        x = self.pwconv2(x)
        if self.gamma is not None:
            x = self.gamma * x
        x = x.permute(0, 3, 1, 2)  # [N, H, W, C] -> [N, C, H, W]

        x = shortcut + self.drop_path(x)
        return x


class ConvNeXt(nn.Module):
    r""" ConvNeXt
        A PyTorch impl of : `A ConvNet for the 2020s`  -
          https://arxiv.org/pdf/2201.03545.pdf
    Args:
        in_chans (int): Number of input image channels. Default: 3
        num_classes (int): Number of classes for classification head. Default: 1000
        depths (tuple(int)): Number of blocks at each stage. Default: [3, 3, 9, 3]
        dims (list(int)): Feature dimension at each stage. Default: [96, 192, 384, 768]
        drop_path_rate (float): Stochastic depth rate. Default: 0.
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
        head_init_scale (float): Init scaling value for classifier weights and biases. Default: 1.
    """
    def __init__(self, in_chans: int = 3, num_classes: int = 1000, depths: list = None,
                 dims: list = None, drop_path_rate: float = 0., layer_scale_init_value: float = 1e-6,
                 head_init_scale: float = 1.):
        super().__init__()
        self.downsample_layers = nn.ModuleList()  # stem and 3 intermediate downsampling conv layers
        stem = nn.Sequential(nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),
                             LayerNorm(dims[0], eps=1e-6, data_format="channels_first"))
        self.downsample_layers.append(stem)

        # The 3 downsample layers in front of stage2-stage4
        for i in range(3):
            downsample_layer = nn.Sequential(LayerNorm(dims[i], eps=1e-6, data_format="channels_first"),
                                             nn.Conv2d(dims[i], dims[i+1], kernel_size=2, stride=2))
            self.downsample_layers.append(downsample_layer)

        self.stages = nn.ModuleList()  # 4 feature resolution stages, each consisting of multiple blocks
        dp_rates = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]
        cur = 0
        # Build the stacked blocks of each stage
        for i in range(4):
            stage = nn.Sequential(
                *[Block(dim=dims[i], drop_rate=dp_rates[cur + j], layer_scale_init_value=layer_scale_init_value)
                  for j in range(depths[i])]
            )
            self.stages.append(stage)
            cur += depths[i]

        self.norm = nn.LayerNorm(dims[-1], eps=1e-6)  # final norm layer
        self.head = nn.Linear(dims[-1], num_classes)
        self.apply(self._init_weights)
        self.head.weight.data.mul_(head_init_scale)
        self.head.bias.data.mul_(head_init_scale)

    def _init_weights(self, m):
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.trunc_normal_(m.weight, std=0.2)  # note: the official ConvNeXt code uses std=.02
            nn.init.constant_(m.bias, 0)

    def forward_features(self, x: torch.Tensor) -> torch.Tensor:
        for i in range(4):
            x = self.downsample_layers[i](x)
            x = self.stages[i](x)

        return self.norm(x.mean([-2, -1]))  # global average pooling, (N, C, H, W) -> (N, C)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.forward_features(x)
        x = self.head(x)
        return x


def convnext_tiny(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_tiny_1k_224_ema.pth
    model = ConvNeXt(depths=[3, 3, 9, 3],
                     dims=[96, 192, 384, 768],
                     num_classes=num_classes)
    return model


def convnext_small(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_small_1k_224_ema.pth
    model = ConvNeXt(depths=[3, 3, 27, 3],
                     dims=[96, 192, 384, 768],
                     num_classes=num_classes)
    return model


def convnext_base(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_base_1k_224_ema.pth
    # https://dl.fbaipublicfiles.com/convnext/convnext_base_22k_224.pth
    model = ConvNeXt(depths=[3, 3, 27, 3],
                     dims=[128, 256, 512, 1024],
                     num_classes=num_classes)
    return model


def convnext_large(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_large_1k_224_ema.pth
    # https://dl.fbaipublicfiles.com/convnext/convnext_large_22k_224.pth
    model = ConvNeXt(depths=[3, 3, 27, 3],
                     dims=[192, 384, 768, 1536],
                     num_classes=num_classes)
    return model


def convnext_xlarge(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_xlarge_22k_224.pth
    model = ConvNeXt(depths=[3, 3, 27, 3],
                     dims=[256, 512, 1024, 2048],
                     num_classes=num_classes)
    return model
```
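A quick way to check the architecture is to track the feature-map shape through the network. The stem is a 4x4 convolution with stride 4, and each of the three downsampling layers is a 2x2 convolution with stride 2. The blocks inside a stage keep the resolution, since their 7x7 depthwise conv uses padding 3. This sketch computes the shapes for a 224x224 input with the `convnext_tiny` dims.

```python
def feature_map_sizes(img_size=224, dims=(96, 192, 384, 768)):
    # stem: kernel 4, stride 4, no padding -> H // 4
    h = img_size // 4
    sizes = [(dims[0], h, h)]
    # downsample before stages 2-4: kernel 2, stride 2 -> H // 2
    for i in range(1, 4):
        h = h // 2
        sizes.append((dims[i], h, h))
    return sizes


for stage, (c, h, w) in enumerate(feature_map_sizes(), start=1):
    print("stage{}: [N, {}, {}, {}]".format(stage, c, h, w))
```

After stage 4, `forward_features` averages over H and W (global average pooling) to get `[N, 768]`, and `head` maps that to `[N, num_classes]`.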

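First is DropPath, a class that inherits from `nn.Module`:

```python
class DropPath(nn.Module):
    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)
```

`drop_path` handles two cases. At inference time, or when `drop_prob == 0`, it returns the input unchanged. During training, each sample's residual branch is either kept and scaled by `1 / keep_prob`, or zeroed so that the sample passes through the shortcut only. This is a bit complicated; following a flow diagram helps.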
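The two cases can be sketched in pure Python on a batch of scalars, one value per sample. This illustrates the same floor-based binarization and `1 / keep_prob` rescaling as `drop_path`, but it is not the PyTorch code itself. In the real function, the mask has shape `(N, 1, 1, 1)` and is broadcast over C, H, and W.

```python
import math
import random


def drop_path_scalar(x, drop_prob, training, rng):
    # Pure-Python sketch of drop_path for a list of per-sample scalars
    if drop_prob == 0. or not training:
        return list(x)  # inference: identity
    keep_prob = 1 - drop_prob
    out = []
    for v in x:
        mask = math.floor(keep_prob + rng.random())  # 1 with probability keep_prob, else 0
        out.append(v / keep_prob * mask)             # rescale the kept samples
    return out


rng = random.Random(0)
batch = [1.0] * 100_000
out = drop_path_scalar(batch, drop_prob=0.5, training=True, rng=rng)
print(sorted(set(out)))     # [0.0, 2.0]: each sample is dropped or scaled by 1 / 0.5
print(sum(out) / len(out))  # close to 1.0: the expectation is preserved
```

Dividing by `keep_prob` keeps the expected output equal to the input, so no rescaling is needed at inference time.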

Next comes the more important part: utils.

```python
import os
import sys
import json
import pickle
import random
import math

import torch
from tqdm import tqdm

import matplotlib.pyplot as plt


def read_split_data(root: str, val_rate: float = 0.2):
    random.seed(0)  # make the random split reproducible
    assert os.path.exists(root), "dataset root: {} does not exist.".format(root)

    # Walk the folders: each folder corresponds to one class
    flower_class = [cla for cla in os.listdir(root) if os.path.isdir(os.path.join(root, cla))]
    # Sort to keep the order consistent
    flower_class.sort()
    # Map class names to numeric indices
    class_indices = dict((k, v) for v, k in enumerate(flower_class))
    json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    train_images_path = []  # paths of all training images
    train_images_label = []  # class index of each training image
    val_images_path = []  # paths of all validation images
    val_images_label = []  # class index of each validation image
    every_class_num = []  # total number of samples per class
    supported = [".jpg", ".JPG", ".png", ".PNG"]  # supported file extensions
    # Iterate over the files in each folder
    for cla in flower_class:
        cla_path = os.path.join(root, cla)
        # Collect the paths of all files with a supported extension
        images = [os.path.join(root, cla, i) for i in os.listdir(cla_path)
                  if os.path.splitext(i)[-1] in supported]
        # Index of this class
        image_class = class_indices[cla]
        # Record the number of samples of this class
        every_class_num.append(len(images))
        # Randomly sample validation images by ratio
        val_path = random.sample(images, k=int(len(images) * val_rate))

        for img_path in images:
            if img_path in val_path:  # sampled for validation: add to the validation set
                val_images_path.append(img_path)
                val_images_label.append(image_class)
            else:  # otherwise add to the training set
                train_images_path.append(img_path)
                train_images_label.append(image_class)

    print("{} images were found in the dataset.".format(sum(every_class_num)))
    print("{} images for training.".format(len(train_images_path)))
    print("{} images for validation.".format(len(val_images_path)))
    assert len(train_images_path) > 0, "not find data for train."
    assert len(val_images_path) > 0, "not find data for eval"

    plot_image = False
    if plot_image:
        # Bar chart of the number of images per class
        plt.bar(range(len(flower_class)), every_class_num, align='center')
        # Replace the x ticks 0, 1, 2, 3, 4 with the class names
        plt.xticks(range(len(flower_class)), flower_class)
        # Add value labels on top of the bars
        for i, v in enumerate(every_class_num):
            plt.text(x=i, y=v + 5, s=str(v), ha='center')
        # x-axis label
        plt.xlabel('image class')
        # y-axis label
        plt.ylabel('number of images')
        # Chart title
        plt.title('flower class distribution')
        plt.show()

    return train_images_path, train_images_label, val_images_path, val_images_label


def plot_data_loader_image(data_loader):
    batch_size = data_loader.batch_size
    plot_num = min(batch_size, 4)

    json_path = './class_indices.json'
    assert os.path.exists(json_path), json_path + " does not exist."
    json_file = open(json_path, 'r')
    class_indices = json.load(json_file)

    for data in data_loader:
        images, labels = data
        for i in range(plot_num):
            # [C, H, W] -> [H, W, C]
            img = images[i].numpy().transpose(1, 2, 0)
            # Undo Normalize
            img = (img * [0.229, 0.224, 0.225] + [0.485, 0.456, 0.406]) * 255
            label = labels[i].item()
            plt.subplot(1, plot_num, i+1)
            plt.xlabel(class_indices[str(label)])
            plt.xticks([])  # hide x-axis ticks
            plt.yticks([])  # hide y-axis ticks
            plt.imshow(img.astype('uint8'))
        plt.show()


def write_pickle(list_info: list, file_name: str):
    with open(file_name, 'wb') as f:
        pickle.dump(list_info, f)


def read_pickle(file_name: str) -> list:
    with open(file_name, 'rb') as f:
        info_list = pickle.load(f)
        return info_list


def train_one_epoch(model, optimizer, data_loader, device, epoch, lr_scheduler):
    model.train()
    loss_function = torch.nn.CrossEntropyLoss()
    accu_loss = torch.zeros(1).to(device)  # accumulated loss
    accu_num = torch.zeros(1).to(device)  # accumulated number of correctly predicted samples
    optimizer.zero_grad()

    sample_num = 0
    data_loader = tqdm(data_loader, file=sys.stdout)
    for step, data in enumerate(data_loader):
        images, labels = data
        sample_num += images.shape[0]

        pred = model(images.to(device))
        pred_classes = torch.max(pred, dim=1)[1]
        accu_num += torch.eq(pred_classes, labels.to(device)).sum()

        loss = loss_function(pred, labels.to(device))
        loss.backward()
        accu_loss += loss.detach()

        data_loader.desc = "[train epoch {}] loss: {:.3f}, acc: {:.3f}, lr: {:.5f}".format(
            epoch,
            accu_loss.item() / (step + 1),
            accu_num.item() / sample_num,
            optimizer.param_groups[0]["lr"]
        )

        if not torch.isfinite(loss):
            print('WARNING: non-finite loss, ending training ', loss)
            sys.exit(1)

        optimizer.step()
        optimizer.zero_grad()
        # update lr
        lr_scheduler.step()

    return accu_loss.item() / (step + 1), accu_num.item() / sample_num


@torch.no_grad()
def evaluate(model, data_loader, device, epoch):
    loss_function = torch.nn.CrossEntropyLoss()

    model.eval()

    accu_num = torch.zeros(1).to(device)  # accumulated number of correctly predicted samples
    accu_loss = torch.zeros(1).to(device)  # accumulated loss

    sample_num = 0
    data_loader = tqdm(data_loader, file=sys.stdout)
    for step, data in enumerate(data_loader):
        images, labels = data
        sample_num += images.shape[0]

        pred = model(images.to(device))
        pred_classes = torch.max(pred, dim=1)[1]
        accu_num += torch.eq(pred_classes, labels.to(device)).sum()

        loss = loss_function(pred, labels.to(device))
        accu_loss += loss

        data_loader.desc = "[valid epoch {}] loss: {:.3f}, acc: {:.3f}".format(
            epoch,
            accu_loss.item() / (step + 1),
            accu_num.item() / sample_num
        )

    return accu_loss.item() / (step + 1), accu_num.item() / sample_num


def create_lr_scheduler(optimizer,
                        num_step: int,
                        epochs: int,
                        warmup=True,
                        warmup_epochs=1,
                        warmup_factor=1e-3,
                        end_factor=1e-6):
    assert num_step > 0 and epochs > 0
    if warmup is False:
        warmup_epochs = 0

    def f(x):
        """
        Return a learning-rate multiplier for the given step number.
        Note that PyTorch calls lr_scheduler.step() once before training starts.
        """
        if warmup is True and x <= (warmup_epochs * num_step):
            alpha = float(x) / (warmup_epochs * num_step)
            # During warmup the lr factor goes from warmup_factor -> 1
            return warmup_factor * (1 - alpha) + alpha
        else:
            current_step = (x - warmup_epochs * num_step)
            cosine_steps = (epochs - warmup_epochs) * num_step
            # After warmup the lr factor goes from 1 -> end_factor
            return ((1 + math.cos(current_step * math.pi / cosine_steps)) / 2) * (1 - end_factor) + end_factor

    return torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=f)


def get_params_groups(model: torch.nn.Module, weight_decay: float = 1e-5):
    # Parameters the optimizer will train
    parameter_group_vars = {"decay": {"params": [], "weight_decay": weight_decay},
                            "no_decay": {"params": [], "weight_decay": 0.}}

    # Names of the corresponding parameters
    parameter_group_names = {"decay": {"params": [], "weight_decay": weight_decay},
                             "no_decay": {"params": [], "weight_decay": 0.}}

    for name, param in model.named_parameters():
        if not param.requires_grad:
            continue  # frozen weights

        if len(param.shape) == 1 or name.endswith(".bias"):
            group_name = "no_decay"
        else:
            group_name = "decay"

        parameter_group_vars[group_name]["params"].append(param)
        parameter_group_names[group_name]["params"].append(name)

    print("Param groups = %s" % json.dumps(parameter_group_names, indent=2))
    return list(parameter_group_vars.values())
```
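The rule in `get_params_groups` only looks at each parameter's name and shape. It can therefore be sketched on hypothetical `(name, shape)` pairs that mimic `model.named_parameters()` for this ConvNeXt. Biases and 1-D parameters, such as LayerNorm weights and the layer-scale `gamma`, go to the group with `weight_decay=0`; everything else is decayed.

```python
# Hypothetical (name, shape) pairs standing in for model.named_parameters()
params = [
    ("downsample_layers.0.0.weight", (96, 3, 4, 4)),  # stem conv weight
    ("downsample_layers.0.0.bias", (96,)),
    ("stages.0.0.gamma", (96,)),                      # layer scale
    ("stages.0.0.pwconv1.weight", (384, 96)),         # nn.Linear(96, 384) weight
    ("norm.weight", (768,)),                          # final LayerNorm weight
    ("head.weight", (5, 768)),
]

groups = {"decay": [], "no_decay": []}
for name, shape in params:
    # Same test as get_params_groups: 1-D tensors and biases get no weight decay
    group = "no_decay" if len(shape) == 1 or name.endswith(".bias") else "decay"
    groups[group].append(name)

print(groups["decay"])
print(groups["no_decay"])
```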

utils contains 8 functions. The first one, `read_split_data`, splits the dataset:

```python
def read_split_data(root: str, val_rate: float = 0.2):
    random.seed(0)
    assert os.path.exists(root), "dataset root: {} does not exist.".format(root)

    # Walk the folders: each folder corresponds to one class
    flower_class = [cla for cla in os.listdir(root) if os.path.isdir(os.path.join(root, cla))]
    flower_class.sort()

    # Map class names to numeric indices
    class_indices = dict((k, v) for v, k in enumerate(flower_class))
    json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    # Lists for the training image paths and labels, and the validation image paths and labels
    train_images_path = []
    train_images_label = []  # class index of each training image
    val_images_path = []  # paths of all validation images
    val_images_label = []  # class index of each validation image
    every_class_num = []  # total number of samples per class
    supported = [".jpg", ".JPG", ".png", ".PNG"]  # supported file extensions

    for cla in flower_class:  # iterate over each class folder
        cla_path = os.path.join(root, cla)
        images = [os.path.join(cla_path, i) for i in os.listdir(cla_path)
                  if os.path.splitext(i)[-1] in supported]
        image_class = class_indices[cla]
        every_class_num.append(len(images))

        # Split the samples by ratio
        val_path = random.sample(images, k=int(len(images) * val_rate))
        for img_path in images:
            if img_path in val_path:  # the path was sampled into the validation set
                val_images_path.append(img_path)
                val_images_label.append(image_class)
            else:
                train_images_path.append(img_path)
                train_images_label.append(image_class)

    return train_images_path, train_images_label, val_images_path, val_images_label
```
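Here is a sketch of how `read_split_data` builds `class_indices.json`, with a hypothetical `os.listdir` order. Sorting first makes the mapping independent of the order the file system returns. The JSON file stores the inverse mapping (index to name), which is what `predict.py` reads.

```python
import json

flower_class = ["tulips", "daisy", "roses", "dandelion", "sunflowers"]  # hypothetical listdir order
flower_class.sort()  # same mapping on every machine

class_indices = dict((k, v) for v, k in enumerate(flower_class))  # name -> index
json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)  # index -> name

index_to_name = json.loads(json_str)
print(class_indices)
print(index_to_name)  # JSON object keys come back as strings
```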

The `train_one_epoch` function is the key part:

```python
def train_one_epoch(model, optimizer, data_loader, device, epoch, lr_scheduler):
    model.train()
    loss_function = torch.nn.CrossEntropyLoss()
    accu_loss = torch.zeros(1).to(device)  # accumulated loss
    accu_num = torch.zeros(1).to(device)  # accumulated number of correctly predicted samples
    optimizer.zero_grad()

    sample_num = 0
    data_loader = tqdm(data_loader, file=sys.stdout)  # show a progress bar
    for step, data in enumerate(data_loader):
        images, labels = data
        sample_num += images.shape[0]

        pred = model(images.to(device))
        pred_classes = torch.max(pred, dim=1)[1]
        accu_num += torch.eq(pred_classes, labels.to(device)).sum()

        loss = loss_function(pred, labels.to(device))
        loss.backward()
        accu_loss += loss.detach()

        data_loader.desc = "[train epoch {}] loss: {:.3f}, acc: {:.3f}, lr: {:.5f}".format(
            epoch,
            accu_loss.item() / (step + 1),
            accu_num.item() / sample_num,
            optimizer.param_groups[0]["lr"]
        )

        optimizer.step()
        optimizer.zero_grad()
        lr_scheduler.step()

    return accu_loss.item() / (step + 1), accu_num.item() / sample_num
```

The `evaluate` function:

```python
@torch.no_grad()
def evaluate(model, data_loader, device, epoch):
    loss_function = torch.nn.CrossEntropyLoss()
    model.eval()
    accu_num = torch.zeros(1).to(device)  # accumulated number of correctly predicted samples
    accu_loss = torch.zeros(1).to(device)  # accumulated loss

    sample_num = 0
    data_loader = tqdm(data_loader, file=sys.stdout)
    for step, data in enumerate(data_loader):
        images, labels = data
        sample_num += images.shape[0]

        pred = model(images.to(device))
        pred_classes = torch.max(pred, dim=1)[1]
        accu_num += torch.eq(pred_classes, labels.to(device)).sum()  # count the matches

        loss = loss_function(pred, labels.to(device))
        accu_loss += loss

        data_loader.desc = "[valid epoch {}] loss: {:.3f}, acc: {:.3f}".format(
            epoch,
            accu_loss.item() / (step + 1),
            accu_num.item() / sample_num
        )

    return accu_loss.item() / (step + 1), accu_num.item() / sample_num
```
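The accuracy bookkeeping shared by `train_one_epoch` and `evaluate` can be sketched without PyTorch. The logits and labels are hypothetical. `max(range(...), key=...)` plays the role of `torch.max(pred, dim=1)[1]`, and counting matches plays the role of `torch.eq(...).sum()`.

```python
# Hypothetical logits for a batch of 4 samples and 3 classes
pred = [
    [2.0, 0.1, -1.0],
    [0.3, 0.2, 1.5],
    [0.0, 1.0, 0.5],
    [1.2, 1.1, 0.9],
]
labels = [0, 2, 0, 0]

# Index of the largest logit in each row (the predicted class)
pred_classes = [max(range(len(row)), key=lambda j: row[j]) for row in pred]
# Number of correct predictions in this batch
correct = sum(int(p == t) for p, t in zip(pred_classes, labels))

accu_num, sample_num = 0, 0
accu_num += correct
sample_num += len(labels)
print(pred_classes, accu_num / sample_num)
```

Accuracy divides by `sample_num`, so it stays exact even when the last batch is smaller. The reported loss divides the sum of per-batch mean losses by `step + 1`. It is therefore an average over batches, which can differ slightly from the per-sample average when the last batch is smaller.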

That is roughly the whole workflow, but it still feels complicated. So hard (crying).
