文章目录

Pandas案例1: 对于一组电影数据, 希望统计电影分类(genre)的情况.

重新构造一个全为0的数组,列名为分类,如果某一条数据中分类出现过,让0变为1

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

file_path='IMDB-Movie-Data.csv'

df = pd.read_csv(file_path)

print(df.head(1))

print(df['Genre'])

print('*'*60)

#统计分类的列表

temp_list= df['Genre'].str.split(',').tolist()

genre_list = list(set([i for j in temp_list for i in j]))

#构造全为0的数组

zeros_df = pd.DataFrame(np.zeros((df.shape[0],len(genre_list))), columns = genre_list)

print(zeros_df)

print('*'*80)

#给每个电影出现分类的位置赋值1:

for i in range(df.shape[0]):

zeros_df.loc[i,temp_list[i]] = 1

print(zeros_df.head(3))

print('*'*80)

#统计每个分类电影的和

genre_count = zeros_df.sum(axis = 0)

print(genre_count)

#排序

genre_count = genre_count.sort_values()

_x=genre_count.index

_y=genre_count.values

#画图

plt.figure(figsize=(20,8),dpi = 80)

plt.bar(range(len(_x)),_y,width=0.3,color='orange')

plt.xticks(range(len(_x)),_x)

plt.savefig('day6-1.png')

plt.show()

Rank Title ... Revenue (Millions) Metascore

0 1 Guardians of the Galaxy ... 333.13 76.0

[1 rows x 12 columns]

0 Action,Adventure,Sci-Fi

1 Adventure,Mystery,Sci-Fi

2 Horror,Thriller

3 Animation,Comedy,Family

4 Action,Adventure,Fantasy

...

995 Crime,Drama,Mystery

996 Horror

997 Drama,Music,Romance

998 Adventure,Comedy

999 Comedy,Family,Fantasy

Name: Genre, Length: 1000, dtype: object

************************************************************

Mystery Sci-Fi Family Fantasy ... History Animation Western War

0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

1 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

2 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

3 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

4 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

.. ... ... ... ... ... ... ... ... ...

995 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

996 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

997 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

998 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

999 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

[1000 rows x 20 columns]

********************************************************************************

Mystery Sci-Fi Family Fantasy ... History Animation Western War

0 0.0 1.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

1 1.0 1.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

2 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

[3 rows x 20 columns]

********************************************************************************

Mystery 106.0

Sci-Fi 120.0

Family 51.0

Fantasy 101.0

Horror 119.0

Comedy 279.0

Musical 5.0

Adventure 259.0

Thriller 195.0

Crime 150.0

Action 303.0

Biography 81.0

Music 16.0

Sport 18.0

Romance 141.0

Drama 513.0

History 29.0

Animation 49.0

Western 7.0

War 13.0

dtype: float64

数据合并之join

join: 默认情况下把行索引相同的数据合并到一起

import pandas as pd

import numpy as np

df1 = pd.DataFrame(np.ones((2,4)),index = ['A', 'B'], columns = list('abcd'))

print(df1)

print('*'*80)

df2 = pd.DataFrame(np.zeros((3,3)),index=['A','B','C'],columns=list('xyz'))

print(df2)

print('*'*80)

print(df1.join(df2))

print('*'*80)

print(df2.join(df1))

print('*'*80)

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

********************************************************************************

x y z

A 0.0 0.0 0.0

B 0.0 0.0 0.0

C 0.0 0.0 0.0

********************************************************************************

a b c d x y z

A 1.0 1.0 1.0 1.0 0.0 0.0 0.0

B 1.0 1.0 1.0 1.0 0.0 0.0 0.0

********************************************************************************

x y z a b c d

A 0.0 0.0 0.0 1.0 1.0 1.0 1.0

B 0.0 0.0 0.0 1.0 1.0 1.0 1.0

C 0.0 0.0 0.0 NaN NaN NaN NaN

********************************************************************************

数据合并之Merge

默认合并方式 inner合集

merge outer 交集, NaN补全

merge left 左边为准,NaN补全

merge right 右边为准, Nan补全

print(df1.merge(df3,on='a'))

print('*'*80)

df3.loc[1,'a'] =1

print(df1.merge(df3,on='a'))

print('*'*80)

df4= pd.DataFrame(np.arange(9).reshape((3,3)),columns=list('fax'))

print(df4)

print('*'*80)

print(df1.merge(df4, on='a'))

print('*'*80)

df1.loc['A','a'] = 100

print(df1.merge(df4, on='a'))

print('*'*80)

df1.loc['A','a'] = 100

print(df1.merge(df4, how='inner'))

print('*'*80)

df1.loc['A','a'] = 100

print(df1.merge(df4, how='outer'))

print('*'*80)

df1.loc['A','a'] = 100

print(df1.merge(df4, how='left'))

print('*'*80)

df1.loc['A','a'] = 100

print(df1.merge(df4, how='right'))

print('*'*80)

Empty DataFrame

Columns: [a, b, c, d, f, x]

Index: []

********************************************************************************

a b c d f x

0 1.0 1.0 1.0 1.0 0.0 0.0

1 1.0 1.0 1.0 1.0 0.0 0.0

********************************************************************************

f a x

0 0 1 2

1 3 4 5

2 6 7 8

********************************************************************************

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 1.0 1.0 1.0 1.0 0 2

********************************************************************************

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

********************************************************************************

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

********************************************************************************

a b c d f x

0 100.0 1.0 1.0 1.0 NaN NaN

1 1.0 1.0 1.0 1.0 0.0 2.0

2 4.0 NaN NaN NaN 3.0 5.0

3 7.0 NaN NaN NaN 6.0 8.0

********************************************************************************

a b c d f x

0 100.0 1.0 1.0 1.0 NaN NaN

1 1.0 1.0 1.0 1.0 0.0 2.0

********************************************************************************

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 4.0 NaN NaN NaN 3 5

2 7.0 NaN NaN NaN 6 8

********************************************************************************

分组和聚合

分组 groupby

聚合

count 分组中非NA值的数量

sum 非NA值的和

mean 非NA值的平均值

median 非NA值的算数中位数

std,var 无偏(分母为n-1)标准差和方差

min,max 非NA值的最小值和最大值

Pandas案例2: 美国和中国哪个星巴克数量多,以及中国每个省份星巴克数量

import pandas as pd

import numpy as np

file_path = 'directory.csv'

df= pd.read_csv(file_path)

print(df.head(1))

print(df.info())

grouped = df.groupby(by='Country')

print(grouped)

#DataFrameGroupBy

#可以进行遍历#

# for i,j in grouped:

# print(i)

# print('-' * 100)

# print(j)

# print('*'*100)

#调用聚合方法

country_count = grouped['Brand'].count()

print(country_count['US'])

print(country_count['CN'])

#统计中国每个省份店铺的数量

china_data = df[df['Country']=='CN']

grouped = china_data.groupby(by='State/Province').count()['Brand']

print(grouped)

Brand Store Number ... Longitude Latitude

0 Starbucks 47370-257954 ... 1.53 42.51

[1 rows x 13 columns]

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 25600 entries, 0 to 25599

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Brand 25600 non-null object

1 Store Number 25600 non-null object

2 Store Name 25600 non-null object

3 Ownership Type 25600 non-null object

4 Street Address 25598 non-null object

5 City 25585 non-null object

6 State/Province 25600 non-null object

7 Country 25600 non-null object

8 Postcode 24078 non-null object

9 Phone Number 18739 non-null object

10 Timezone 25600 non-null object

11 Longitude 25599 non-null float64

12 Latitude 25599 non-null float64

dtypes: float64(2), object(11)

memory usage: 2.5+ MB

None

<pandas.core.groupby.generic.DataFrameGroupBy object at 0x000001C3151248B0>

13608

2734

State/Province

11 236

12 58

13 24

14 8

15 8

21 57

22 13

23 16

31 551

32 354

33 315

34 26

35 75

36 13

37 75

41 21

42 76

43 35

44 333

45 21

46 16

50 41

51 104

52 9

53 24

61 42

62 3

63 3

64 2

91 162

92 13

Name: Brand, dtype: int64

按照多个条件分组

#数据按照多个条件进行分组, 返回Series

grouped = df['Brand'].groupby(by=[df['Country'],df['State/Province']]).count()

print(grouped)

print(type(grouped))

#数据按照多个条件进行分组, 返回DataFrame

grouped1 = df[['Brand']].groupby(by=[df['Country'],df['State/Province']]).count()

grouped2 = df.groupby(by=[df['Country'],df['State/Province']])[['Brand']].count()

grouped3 = df.groupby(by=[df['Country'],df['State/Province']]).count()[['Brand']]

print(type(grouped1))

print(type(grouped2))

print(type(grouped3))

Country State/Province

AD 7 1

AE AJ 2

AZ 48

DU 82

FU 2

..

US WV 25

WY 23

VN HN 6

SG 19

ZA GT 3

Name: Brand, Length: 545, dtype: int64

<class 'pandas.core.series.Series'>

<class 'pandas.core.frame.DataFrame'>

<class 'pandas.core.frame.DataFrame'>

<class 'pandas.core.frame.DataFrame'>

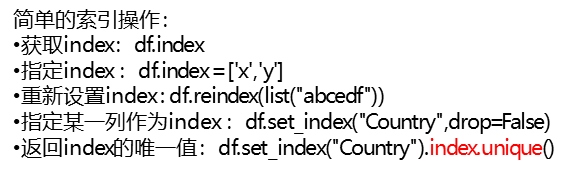

索引和复合索引

Pandas案例3: 利用Matplotlib呈现出店铺总数排名前十的国家,呈现出中国每个城市的店铺数量

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

file_path = 'directory.csv'

df = pd.read_csv(file_path)

#使用matplotlib呈现出店铺总数前10的国家

data1 = df.groupby(by='Country').count()['Brand'].sort_values(ascending=False)[:10]

_x = data1.index

_y = data1.values

#画图

plt.figure(figsize=(20,8), dpi = 80)

plt.bar(range(len(_x)),_y)

plt.xticks(range(len(_x)),_x)

plt.savefig('day6-1.png')

plt.show()

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

from matplotlib import font_manager

my_font = font_manager.FontProperties(fname='C:\Windows\Fonts\simsun.ttc')

file_path = 'directory.csv'

df = pd.read_csv(file_path)

df = df[df["Country"]=='CN']

#使用matplotlib呈现中国每个城市的店铺数量

data1 = df.groupby(by='City').count()['Brand'].sort_values(ascending=False)[:25]

_x = data1.index

_y = data1.values

#画图

plt.figure(figsize=(20,12), dpi = 80)

#plt.bar(range(len(_x)),_y,width=0.3, color='orange')

plt.barh(range(len(_x)),_y,height=0.3, color='orange')

plt.yticks(range(len(_x)),_x,fontproperties=my_font)

plt.savefig('day6-3.png')

plt.show()

Pandas案例4: 有全球排名前10000本书的数据,1, 不同年份书的数量, 2, 不同年份书的平均评分情况

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

from matplotlib import font_manager

my_font = font_manager.FontProperties(fname='C:\Windows\Fonts\simsun.ttc')

file_path = 'books.csv'

df = pd.read_csv(file_path)

print(df.head(2))

print(df.info())

#不同年份书的数量

# data1 = df[pd.notnull(df['original_publication_year'])]

# grouped = data1.groupby(by='original_publication_year').count()['title']

#不同年份书的平均评分情况

#去除original_publication_year列中nan的行

data1 = df[pd.notnull(df['original_publication_year'])]

grouped = data1['average_rating'].groupby(by=data1['original_publication_year']).mean()

print(grouped)

_x = grouped.index

_y = grouped.values

plt.figure(figsize=(20,8),dpi=80)

plt.plot(range(len(_x)),_y)

plt.xticks(list(range(len(_x)))[::10],_x[::10], rotation=45)

plt.savefig('day6-4.png')

plt.show()

26

26

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?