Description

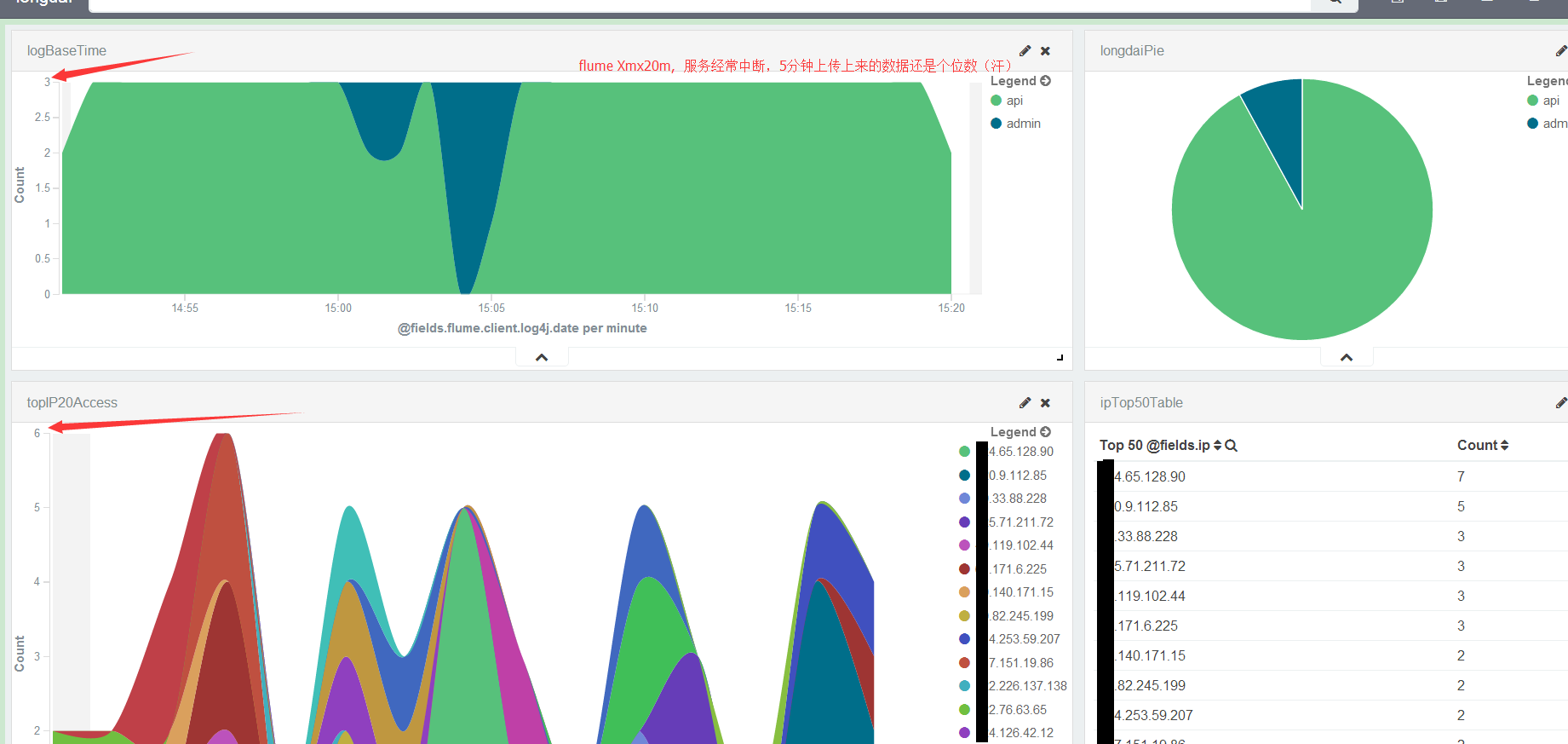

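I recently started using log4jFlumeAppender, and the connection kept dropping after a short period of traffic. I spent two weeks reworking log4jFlumeAppender and rereading the NettyAvroRpcClient and Netty source code, but the exceptions still occurred regularly. Each one forced a reconnect to the server, so worker threads were constantly being shut down and recreated. In the end I decided to read the server code, and then increased the channel capacity in the configuration.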

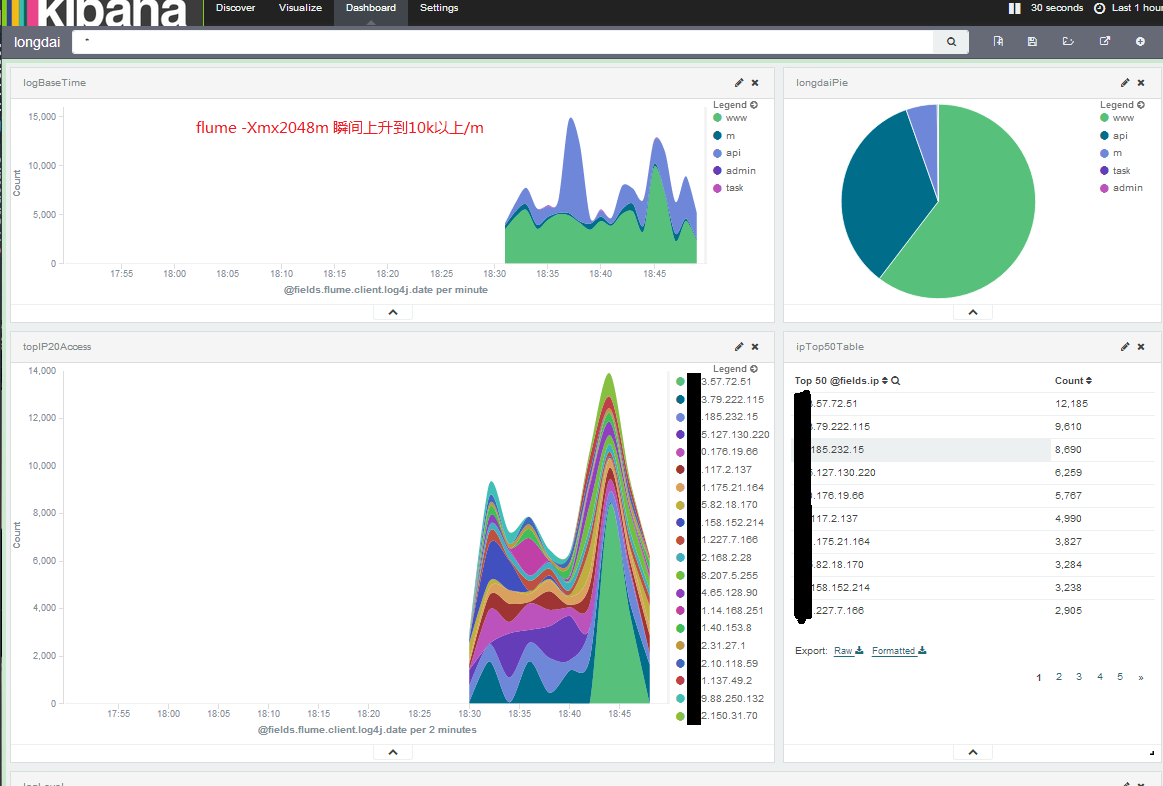

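After that it was mostly stable, but an exception still occurred every few minutes. Raising capacity much further led to channel-full errors and memory problems, which is how I finally found the real cause: the default maximum heap of the Flume server turned out to be only 20m. I immediately changed it to 2048, and Kibana showed the number of log entries uploaded in a burst jump to 10K (it had been in the single digits before).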

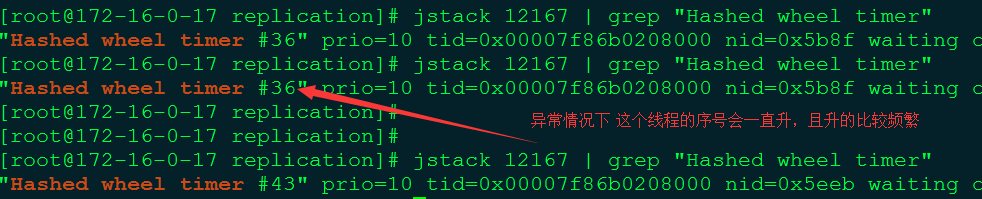
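Before trusting a heap change like this, it helps to confirm what the JVM actually received. The following is a minimal sketch, not part of Flume: the class name `HeapCheck` is made up for illustration, and it only reports the heap of the JVM it runs in. Note that `Runtime.maxMemory()` can report slightly less than the `-Xmx` value, depending on the garbage collector.

```java
public class HeapCheck {
    /** Converts a byte count to whole mebibytes. */
    static long toMiB(long bytes) {
        return bytes / (1024L * 1024L);
    }

    public static void main(String[] args) {
        // maxMemory() is the upper bound the JVM will try to use for the heap.
        long maxHeapBytes = Runtime.getRuntime().maxMemory();
        System.out.println("max heap: " + toMiB(maxHeapBytes) + " MiB");
    }
}
```

Running it with the same JVM options as the agent (for example `java -Xmx20m HeapCheck`) shows whether the setting took effect.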

I then watched the Flume client for two hours using the command below, and this time no exception appeared during the whole two hours.

# jstack $clientPID | grep "Hashed wheel timer"
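The same check can be done from inside the client JVM. This is a rough sketch under my own assumptions: the class `TimerThreadCount` is hypothetical, and it counts threads by name prefix in the current process, whereas `jstack` inspects another process from outside. If the timer number in the thread name keeps growing between runs, that suggests new timers (and connections) are being created.

```java
import java.util.concurrent.CountDownLatch;

public class TimerThreadCount {
    /** Counts live threads whose name starts with the given prefix. */
    static long countThreads(String prefix) {
        return Thread.getAllStackTraces().keySet().stream()
                .filter(t -> t.getName().startsWith(prefix))
                .count();
    }

    public static void main(String[] args) throws InterruptedException {
        // Stand-in thread so the demo has something to find.
        CountDownLatch done = new CountDownLatch(1);
        Thread demo = new Thread(() -> {
            try {
                done.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "Hashed wheel timer #1");
        demo.start();

        System.out.println("timer threads: " + countThreads("Hashed wheel timer"));

        done.countDown();
        demo.join();
    }
}
```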

Stack trace errors seen on `Hashed wheel timer #82`

FlumeAppender already modified

This problem occurs frequently:

log4j:ERROR rpcClient.append EventDeliveryException

org.apache.flume.EventDeliveryException: NettyAvroRpcClient { host: 172.16.0.19, port: 1234 }: Failed to send event

at org.apache.flume.api.NettyAvroRpcClient.append(NettyAvroRpcClient.java:250)

at org.apache.log4j.client.FlumeAppender.append(FlumeAppender.java:144)

at org.apache.log4j.AppenderSkeleton.doAppend(AppenderSkeleton.java:251)

at org.apache.log4j.helpers.AppenderAttachableImpl.appendLoopOnAppenders(AppenderAttachableImpl.java:66)

at org.apache.log4j.AsyncAppender$Dispatcher.run(AsyncAppender.java:586)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.flume.EventDeliveryException: NettyAvroRpcClient { host: 172.16.0.19, port: 1234 }: RPC request timed out

at org.apache.flume.api.NettyAvroRpcClient.waitForStatusOK(NettyAvroRpcClient.java:400)

at org.apache.flume.api.NettyAvroRpcClient.append(NettyAvroRpcClient.java:297)

at org.apache.flume.api.NettyAvroRpcClient.append(NettyAvroRpcClient.java:238)

... 5 more

Caused by: java.util.concurrent.TimeoutException

at org.apache.avro.ipc.CallFuture.get(CallFuture.java:132)

at org.apache.flume.api.NettyAvroRpcClient.waitForStatusOK(NettyAvroRpcClient.java:389)

... 7 more

flume-agent:avro

After increasing capacity by several orders of magnitude, the following exceptions appeared:

org.apache.flume.ChannelException: Take list for MemoryTransaction, capacity 100 full, consider committing more frequently, increasing capacity, or increasing thread count

at org.apache.flume.channel.MemoryChannel$MemoryTransaction.doTake(MemoryChannel.java:96)

at org.apache.flume.channel.BasicTransactionSemantics.take(BasicTransactionSemantics.java:113)

at org.apache.flume.channel.BasicChannelSemantics.take(BasicChannelSemantics.java:95)

at org.apache.flume.sink.elasticsearch.ElasticSearchSink.process(ElasticSearchSink.java:183)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

2016-01-06 17:22:40,783 (New I/O worker #1) [INFO - org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.handleUpstream(NettyServer.java:171)]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:304)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

... 3 more

2016-01-06 17:22:46,123 (New I/O worker #2) [WARN - org.apache.avro.ipc.Responder.respond(Responder.java:174)] system error

org.apache.avro.AvroRuntimeException: Unknown datum type: java.lang.IllegalStateException: Channel closed [channel=fileCh]. Due to java.lang.NullPointerException: null

at org.apache.avro.generic.GenericData.getSchemaName(GenericData.java:593)

at org.apache.avro.generic.GenericData.resolveUnion(GenericData.java:558)

at org.apache.avro.generic.GenericDatumWriter.resolveUnion(GenericDatumWriter.java:144)

at org.apache.avro.generic.GenericDatumWriter.write(GenericDatumWriter.java:71)

at org.apache.avro.generic.GenericDatumWriter.write(GenericDatumWriter.java:58)

at org.apache.avro.ipc.specific.SpecificResponder.writeError(SpecificResponder.java:74)

at org.apache.avro.ipc.Responder.respond(Responder.java:169)

at org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.messageReceived(NettyServer.java:188)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.handleUpstream(NettyServer.java:173)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:558)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:786)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:458)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:439)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:558)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:553)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

MemoryTransaction, capacity 500 full, consider committing more frequently, increasing capacity,

Exception in thread "Avro NettyTransceiver I/O Worker-1" java.lang.OutOfMemoryError: GC overhead limit exceeded

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.concurrent.LinkedBlockingDeque.offerFirst(LinkedBlockingDeque.java:340)

at java.util.concurrent.LinkedBlockingDeque.addFirst(LinkedBlockingDeque.java:322)

at org.apache.flume.channel.MemoryChannel$MemoryTransaction.doRollback(MemoryChannel.java:172)

at org.apache.flume.channel.BasicTransactionSemantics.rollback(BasicTransactionSemantics.java:168)

at org.apache.flume.sink.elasticsearch.ElasticSearchSink.process(ElasticSearchSink.java:212)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

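at java.lang.Thread.run(Thread.java:745)

The default memory channel capacity (100) is not enough. After raising it to 2000, with several services sending to the same agent, the memory channel runs out of memory. The memory channel can be replaced with a file channel (on an SSD it is plenty fast).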
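The "Take list ... capacity 100 full" message in these traces can be pictured with a small model. This is only my simplified reading of the error text, not Flume's actual code: the class `TakeListModel` and its method are invented for illustration, and I am assuming the number in the message is the per-transaction limit (`transactionCapacity`) rather than the overall channel `capacity`.

```java
import java.util.concurrent.LinkedBlockingDeque;

public class TakeListModel {
    /**
     * Tries to take batchSize events inside one transaction whose take list
     * holds at most transactionCapacity entries. Returns how many events
     * were taken before the list filled up.
     */
    static int takeBatch(int transactionCapacity, int batchSize) {
        LinkedBlockingDeque<String> takeList = new LinkedBlockingDeque<>(transactionCapacity);
        int taken = 0;
        for (int i = 0; i < batchSize; i++) {
            if (takeList.remainingCapacity() == 0) {
                // In this model, this is the point where the channel would
                // report "Take list ... full" instead of taking another event.
                break;
            }
            takeList.addLast("event-" + i);
            taken++;
        }
        return taken;
    }

    public static void main(String[] args) {
        System.out.println(takeBatch(100, 500));  // stops early at 100
        System.out.println(takeBatch(2000, 500)); // whole batch of 500 fits
    }
}
```

Under this model, a sink batch larger than the transaction limit can never complete in one transaction, which is why the message suggests committing more often or raising the limit.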

org.apache.flume.ChannelException: Take list for MemoryTransaction, capacity 100 full, consider committing more frequently, increasing capacity, or increasing thread count

at org.apache.flume.channel.MemoryChannel$MemoryTransaction.doTake(MemoryChannel.java:96)

at org.apache.flume.channel.BasicTransactionSemantics.take(BasicTransactionSemantics.java:113)

at org.apache.flume.channel.BasicChannelSemantics.take(BasicChannelSemantics.java:95)

at org.apache.flume.sink.elasticsearch.ElasticSearchSink.process(ElasticSearchSink.java:183)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

Solution:

Edit bin/flume-ng and set a new Xmx, e.g. 4096m, and increase capacity accordingly.

Replace the memory channel with a file channel (on an SSD).
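To get a feel for how heap size and capacity relate, here is a back-of-the-envelope sketch. Everything in it is an assumption on my part: the class `ChannelBudget` is hypothetical, the 2048-byte average event size and the 80% heap share are illustrative numbers, and real per-event overhead (headers, object bookkeeping) is ignored.

```java
public class ChannelBudget {
    /**
     * Estimates how many events of avgEventBytes fit into the given
     * percentage of the heap. Integer arithmetic avoids rounding surprises.
     */
    static long maxEvents(long maxHeapBytes, int heapPercent, long avgEventBytes) {
        if (avgEventBytes <= 0 || heapPercent < 0 || heapPercent > 100) {
            throw new IllegalArgumentException("invalid budget parameters");
        }
        return maxHeapBytes * heapPercent / 100 / avgEventBytes;
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        // Assumed: 2 KiB average event, 80% of the heap given to the channel.
        System.out.println("20m heap:   " + maxEvents(20 * mib, 80, 2048) + " events");
        System.out.println("4096m heap: " + maxEvents(4096 * mib, 80, 2048) + " events");
    }
}
```

With these assumed numbers a 20m heap leaves room for only a few thousand buffered events, so a capacity raised by several orders of magnitude cannot fit without raising Xmx as well.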

avro.conf

avroAgent.sources = avro

avroAgent.channels = memoryChannel fileCh

avroAgent.sinks = elasticSearch logfile

# For each one of the sources, the type is defined

avroAgent.sources.avro.type = avro

avroAgent.sources.avro.bind = 0.0.0.0

avroAgent.sources.avro.port = 1234

avroAgent.sources.avro.threads = 20

avroAgent.sources.avro.channels = memoryChannel

# Each sink's type must be defined

avroAgent.sinks.elasticSearch.type = org.apache.flume.sink.elasticsearch.ElasticSearchSink

#Specify the channel the sink should use

avroAgent.sinks.elasticSearch.channel = memoryChannel

avroAgent.sinks.elasticSearch.batchSize = 100

avroAgent.sinks.elasticSearch.hostNames=172.16.0.18:9300

avroAgent.sinks.elasticSearch.indexName=longdai

avroAgent.sinks.elasticSearch.indexType=longdai

avroAgent.sinks.elasticSearch.clusterName=longdai

avroAgent.sinks.elasticSearch.client = transport

avroAgent.sinks.elasticSearch.serializer=org.apache.flume.sink.elasticsearch.ElasticSearchLogStashEventSerializer

avroAgent.sinks.logfile.type = org.apache.flume.sink.log4j.Log4jSink

avroAgent.sinks.logfile.channel = memoryChannel

avroAgent.sinks.logfile.configFile = /home/apache-flume-1.6.0-bin/conf/log4j.xml

avroAgent.channels.fileCh.type = file

avroAgent.channels.fileCh.keep-alive = 3

avroAgent.channels.fileCh.overflowCapacity = 1000000

avroAgent.channels.fileCh.dataDirs = /data/flume/ch/data

# Each channel's type is defined.

avroAgent.channels.memoryChannel.type = memory

avroAgent.channels.memoryChannel.capacity = 2000

avroAgent.channels.memoryChannel.transactionCapacity = 2000

avroAgent.channels.memoryChannel.keep-alive = 30

agent.channels.fch.type = file

agent.channels.fch.checkpointDir = /data/flume/data/checkpointDir

agent.channels.fch.dataDirs = /data/flume/data/dataDirs

#-- END --

With the problem:

After the rework
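Returning to the avro.conf listing above: when switching between memory and file channels, it is easy to declare a channel that nothing is wired to. The sketch below is a hypothetical helper of mine (the class `UnusedChannels` is not part of Flume) that lists channels declared for an agent but not referenced by any source or sink. It relies only on the key layout visible in the listing: `<agent>.channels`, `<agent>.sources.<name>.channels` and `<agent>.sinks.<name>.channel`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;

public class UnusedChannels {
    /** Returns channels declared for the agent that no source or sink references. */
    static Set<String> unusedChannels(Properties props, String agent) {
        Set<String> declared = new LinkedHashSet<>(split(props.getProperty(agent + ".channels", "")));
        Set<String> used = new LinkedHashSet<>();
        for (String key : props.stringPropertyNames()) {
            boolean sourceRef = key.startsWith(agent + ".sources.") && key.endsWith(".channels");
            boolean sinkRef = key.startsWith(agent + ".sinks.") && key.endsWith(".channel");
            if (sourceRef || sinkRef) {
                used.addAll(split(props.getProperty(key)));
            }
        }
        declared.removeAll(used);
        return declared;
    }

    /** Splits a whitespace-separated list of names, tolerating empty values. */
    private static List<String> split(String value) {
        String trimmed = value.trim();
        return trimmed.isEmpty() ? Collections.<String>emptyList() : Arrays.asList(trimmed.split("\\s+"));
    }

    public static void main(String[] args) throws IOException {
        // Wiring lines taken from the avro.conf listing.
        String conf = String.join("\n",
                "avroAgent.channels = memoryChannel fileCh",
                "avroAgent.sources.avro.channels = memoryChannel",
                "avroAgent.sinks.elasticSearch.channel = memoryChannel",
                "avroAgent.sinks.logfile.channel = memoryChannel");
        Properties props = new Properties();
        props.load(new StringReader(conf));
        System.out.println(unusedChannels(props, "avroAgent")); // prints [fileCh]
    }
}
```

For the listing as posted, the helper reports `fileCh`: the file channel is declared, but the source and both sinks still point at `memoryChannel`.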
