Preparation 1: Downloading and installing ZooKeeper

1. Download ZooKeeper, extract it, and add it to the system environment variables in ~/.bash_profile:

export ZK_HOME=/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0

export PATH=$ZK_HOME/bin:$PATH

Run source ~/.bash_profile to make the changes take effect.
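If you re-run this setup, the export lines can easily end up in ~/.bash_profile twice. Below is a minimal sketch of an idempotent way to add them. It assumes bash, and the helper name append_line_once is hypothetical. The lines are single-quoted so that $ZK_HOME and $PATH are expanded only when the profile is sourced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not
# already there, so running the setup twice does not duplicate exports.
append_line_once() {
  local line="$1" file="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), --: end of options
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage with the paths from this article:
# append_line_once 'export ZK_HOME=/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0' ~/.bash_profile
# append_line_once 'export PATH=$ZK_HOME/bin:$PATH' ~/.bash_profile
# source ~/.bash_profile
```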

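2. In /home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/conf, copy zoo_sample.cfg to zoo.cfg and change the data storage location. The /tmp directory is cleared on every reboot, so create a new directory app/tmp/zk and point dataDir at it: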

dataDir=/home/hadoop/app/tmp/zk
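The copy-and-edit step can be scripted. Below is a minimal sketch that assumes bash. The function name setup_zoo_cfg is hypothetical. It writes zoo.cfg from zoo_sample.cfg in one pass and rewrites the sample's dataDir line (which points at /tmp/zookeeper) to the persistent directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create zoo.cfg from zoo_sample.cfg with a new dataDir.
setup_zoo_cfg() {
  local conf_dir="$1" data_dir="$2"
  # Create the persistent data directory outside /tmp
  mkdir -p "$data_dir"
  # Copy the sample while replacing its dataDir line
  sed "s|^dataDir=.*|dataDir=$data_dir|" "$conf_dir/zoo_sample.cfg" > "$conf_dir/zoo.cfg"
}

# Usage with the paths from this article:
# setup_zoo_cfg /home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/conf /home/hadoop/app/tmp/zk
```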

3. Start ZooKeeper

zkServer.sh start

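The jps command makes it easy to see a Java process's main class, the arguments passed to it, and its JVM arguments. Without options, jps shows only the process ID and the main class name. For details, see: https://blog.csdn.net/qq_27361945/article/details/85691123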

jps

2930 Jps

2892 QuorumPeerMain
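To check the jps output in a script rather than by eye, match on the second column, which holds the main class. Below is a minimal sketch that assumes bash and awk. The helper name jps_has is hypothetical. QuorumPeerMain is the ZooKeeper server class shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if jps output on stdin lists the given main class.
# Column 1 is the PID and column 2 the main class name.
jps_has() {
  awk -v cls="$1" '$2 == cls { found = 1 } END { exit !found }'
}

# Usage:
# jps | jps_has QuorumPeerMain && echo "ZooKeeper is running"
```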

Connect to the local ZooKeeper server:

zkCli.sh

[hadoop@hadoop000 Desktop]$ zkCli.sh

Connecting to localhost:2181

2021-04-18 16:04:49,336 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.5-cdh5.7.0--1, built on 03/23/2016 18:31 GMT

2021-04-18 16:04:49,338 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hadoop000

2021-04-18 16:04:49,338 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_144

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/home/hadoop/app/jdk1.8.0_144/jre

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../build/classes:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../build/lib/*.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/zookeeper-3.4.5-cdh5.7.0.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/slf4j-log4j12-1.7.5.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/slf4j-api-1.7.5.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/netty-3.2.2.Final.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/log4j-1.2.16.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../share/zookeeper/jline-2.11.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../src/java/lib/*.jar:/home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../conf:

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2021-04-18 16:04:49,340 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-358.el6.x86_64

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:user.name=hadoop

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/home/hadoop

2021-04-18 16:04:49,341 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/home/hadoop/Desktop

2021-04-18 16:04:49,342 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@1a86f2f1

Welcome to ZooKeeper!

2021-04-18 16:04:49,383 [myid:] - INFO [main-SendThread(hadoop000:2181):ClientCnxn$SendThread@975] - Opening socket connection to server hadoop000/192.168.107.128:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2021-04-18 16:04:49,514 [myid:] - INFO [main-SendThread(hadoop000:2181):ClientCnxn$SendThread@852] - Socket connection established, initiating session, client: /192.168.107.128:58088, server: hadoop000/192.168.107.128:2181

2021-04-18 16:04:49,528 [myid:] - INFO [main-SendThread(hadoop000:2181):ClientCnxn$SendThread@1235] - Session establishment complete on server hadoop000/192.168.107.128:2181, sessionid = 0x178e3f3d28f0000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] help

ZooKeeper -server host:port cmd args

stat path [watch]

set path data [version]

ls path [watch]

delquota [-n|-b] path

ls2 path [watch]

setAcl path acl

setquota -n|-b val path

history

redo cmdno

printwatches on|off

delete path [version]

sync path

listquota path

rmr path

get path [watch]

create [-s] [-e] path data acl

addauth scheme auth

quit

getAcl path

close

connect host:port

[zk: localhost:2181(CONNECTED) 1]

To connect to a remote ZooKeeper server, pass the -server option:

zkCli.sh -server ip:port

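I. Single-node, single-broker deployment and usage

Preparation 2: Downloading and installing Kafka

Because the Scala version in use is 2.11, choose kafka_2.11-0.9.0.0.tgz.

1. Download and extract kafka_2.11-0.9.0.0.tgz into ~/app/, add the system environment variables, and apply them: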

vi ~/.bash_profile

export KAFKA_HOME=/home/hadoop/app/kafka_2.11-0.9.0.0

export PATH=$KAFKA_HOME/bin:$PATH

source ~/.bash_profile

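2. In /home/hadoop/app/kafka_2.11-0.9.0.0/config, edit the Kafka configuration file server.properties. Create a kafka-logs directory under /home/hadoop/app/tmp/ to store Kafka's logs. Do not use the system tmp directory, whose contents are lost on reboot. Pay attention to the following settings: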

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

listeners=PLAINTEXT://:9092

# Hostname the broker will bind to. If not set, the server will bind to all interfaces

host.name=hadoop000

# A comma separated list of directories under which to store log files

log.dirs=/home/hadoop/app/tmp/kafka-logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=hadoop000:2181
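As the comments above describe, zookeeper.connect can be a comma-separated list of host:port pairs followed by an optional chroot path. Below is a minimal sketch that builds such a value. It assumes bash. The helper name zk_connect and the hosts h1/h2 are hypothetical, and the chroot is appended once, after the last pair.

```shell
#!/usr/bin/env bash
# Hypothetical helper: zk_connect CHROOT HOST:PORT...
# Pass an empty string as CHROOT when no chroot is wanted.
zk_connect() {
  local chroot="$1"
  shift
  local IFS=,
  # "$*" joins the remaining arguments with the first character of IFS (a comma)
  printf '%s%s\n' "$*" "$chroot"
}

# Usage:
# zk_connect "" hadoop000:2181        -> hadoop000:2181
# zk_connect /kafka h1:2181 h2:2181   -> h1:2181,h2:2181/kafka
```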

3. Start Kafka (prerequisite: ZooKeeper must already be running)

kafka-server-start.sh $KAFKA_HOME/config/server.properties

Open another terminal session to check the processes. The -m option prints the arguments passed to the Java process's main method.

[hadoop@hadoop000 config]$ jps -m

6002 Kafka /home/hadoop/app/kafka_2.11-0.9.0.0/config/server.properties

6151 Jps -m

2892 QuorumPeerMain /home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../conf/zoo.cfg
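Because jps -m shows the config file each broker was started with, a script can list the running brokers' configs. Below is a minimal sketch that assumes bash and awk. The helper name kafka_configs is hypothetical. It relies on the output layout shown above: PID, then the main class Kafka, then the properties path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the config file of each Kafka process found in
# `jps -m` output on stdin. Column 3 is the first argument passed to main().
kafka_configs() {
  awk '$2 == "Kafka" { print $3 }'
}

# Usage:
# jps -m | kafka_configs
```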

4. Create a topic, specifying ZooKeeper:

kafka-topics.sh --create --zookeeper hadoop000:2181 --replication-factor 1 --partitions 1 --topic hellp_topic

List all topics:

kafka-topics.sh --list --zookeeper hadoop000:2181

Show details for all topics. Isr lists the in-sync replicas, i.e. the live replicas that are caught up with the leader:

kafka-topics.sh --describe --zookeeper hadoop000:2181

Show details for a specific topic:

kafka-topics.sh --describe --zookeeper hadoop000:2181 --topic hellp_topic

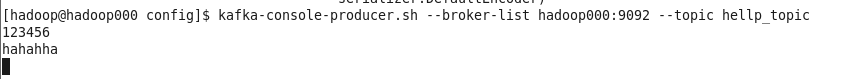

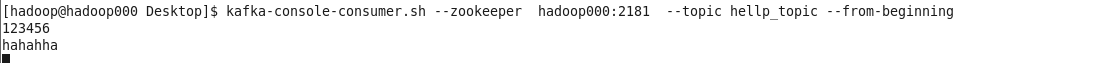
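Running --create for a topic that already exists fails. A script can check the --list output first. Below is a minimal sketch that assumes bash. The helper name topic_exists is hypothetical. Whole-line, fixed-string matching keeps a hypothetical topic such as hellp_topic_2 from counting as hellp_topic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the topic name appears as a whole line
# in `kafka-topics.sh --list` output on stdin.
topic_exists() {
  grep -qxF -- "$1"
}

# Usage: create the topic only if it is not listed yet
# kafka-topics.sh --list --zookeeper hadoop000:2181 | topic_exists hellp_topic ||
#   kafka-topics.sh --create --zookeeper hadoop000:2181 \
#     --replication-factor 1 --partitions 1 --topic hellp_topic
```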

5. Produce (send) messages, specifying the broker:

The 9092 in --broker-list is the port set by the listeners property in server.properties.

kafka-console-producer.sh --broker-list hadoop000:9092 --topic hellp_topic

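6. Consume messages, specifying ZooKeeper:

--from-beginning: when no offset has been set, start reading from the earliest message in the log instead of the latest one.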

kafka-console-consumer.sh --zookeeper hadoop000:2181 --topic hellp_topic --from-beginning

Now any data sent from the producer is received by the consumer.
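The console producer reads stdin and sends one message per line, so messages can also be piped in rather than typed. Below is a minimal sketch that assumes bash. The function send_messages and the override variable PRODUCER_CMD are hypothetical. PRODUCER_CMD exists only so the function can be dry-run without a broker.

```shell
#!/usr/bin/env bash
# Hypothetical override hook; defaults to the real console producer.
PRODUCER_CMD="${PRODUCER_CMD:-kafka-console-producer.sh}"

# Hypothetical helper: send_messages BROKERS TOPIC MESSAGE...
# Each remaining argument becomes one line, i.e. one message.
send_messages() {
  local brokers="$1" topic="$2"
  shift 2
  printf '%s\n' "$@" | "$PRODUCER_CMD" --broker-list "$brokers" --topic "$topic"
}

# Usage:
# send_messages hadoop000:9092 hellp_topic "hello" "kafka"
```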

II. Single-node, multi-broker deployment and usage (multiple Kafka brokers on one machine)

1. Create a configuration file for each broker

In /home/hadoop/app/kafka_2.11-0.9.0.0/config, copy server.properties to server-1.properties, server-2.properties, server-3.properties, …

Edit server-1.properties:

broker.id=1

listeners=PLAINTEXT://:9093

log.dirs=/home/hadoop/app/tmp/kafka-logs-1

Edit server-2.properties:

broker.id=2

listeners=PLAINTEXT://:9094

log.dirs=/home/hadoop/app/tmp/kafka-logs-2

Edit server-3.properties:

broker.id=3

listeners=PLAINTEXT://:9095

log.dirs=/home/hadoop/app/tmp/kafka-logs-3
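The three files differ only in broker.id, the listener port, and the log directory, so they can be generated from server.properties. Below is a minimal sketch that assumes bash. The function name make_broker_configs is hypothetical. It also assumes that the broker.id, listeners, and log.dirs lines are present and uncommented in server.properties, as in the edited file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make_broker_configs CONF_DIR LOG_BASE
# Writes server-N.properties for N = 1..3 with broker.id=N,
# port 9092+N and log directory LOG_BASE-N.
make_broker_configs() {
  local conf_dir="$1" log_base="$2" n
  for n in 1 2 3; do
    sed -e "s|^broker.id=.*|broker.id=$n|" \
        -e "s|^listeners=.*|listeners=PLAINTEXT://:$((9092 + n))|" \
        -e "s|^log.dirs=.*|log.dirs=$log_base-$n|" \
        "$conf_dir/server.properties" > "$conf_dir/server-$n.properties"
  done
}

# Usage:
# make_broker_configs "$KAFKA_HOME/config" /home/hadoop/app/tmp/kafka-logs
```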

2. Start multiple Kafka brokers (prerequisite: ZooKeeper must already be running)

-daemon runs the broker in the background:

kafka-server-start.sh -daemon $KAFKA_HOME/config/server-1.properties

kafka-server-start.sh -daemon $KAFKA_HOME/config/server-2.properties

kafka-server-start.sh -daemon $KAFKA_HOME/config/server-3.properties

Check the processes:

[hadoop@hadoop000 kafka-logs]$ jps -m

7875 Kafka /home/hadoop/app/kafka_2.11-0.9.0.0/config/server-3.properties

7747 Kafka /home/hadoop/app/kafka_2.11-0.9.0.0/config/server-1.properties

7751 Kafka /home/hadoop/app/kafka_2.11-0.9.0.0/config/server-2.properties

7976 Jps -m

2892 QuorumPeerMain /home/hadoop/app/zookeeper-3.4.5-cdh5.7.0/bin/../conf/zoo.cfg
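The three start commands follow one pattern, so a loop can run them. Below is a minimal sketch that assumes bash. The function start_brokers and the override variable KAFKA_START_CMD are hypothetical. By default the function calls the real start script. Setting KAFKA_START_CMD=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical override hook; defaults to the real start script.
KAFKA_START_CMD="${KAFKA_START_CMD:-kafka-server-start.sh}"

# Hypothetical helper: start brokers 1..3 in the background.
start_brokers() {
  local conf_dir="$1" n
  for n in 1 2 3; do
    "$KAFKA_START_CMD" -daemon "$conf_dir/server-$n.properties"
  done
}

# Usage:
# start_brokers "$KAFKA_HOME/config"
# Dry run: KAFKA_START_CMD=echo start_brokers "$KAFKA_HOME/config"
```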

3. Create a topic

Create one partition with three replicas:

kafka-topics.sh --create --zookeeper hadoop000:2181 --replication-factor 3 --partitions 1 --topic hellp-replicated-topic

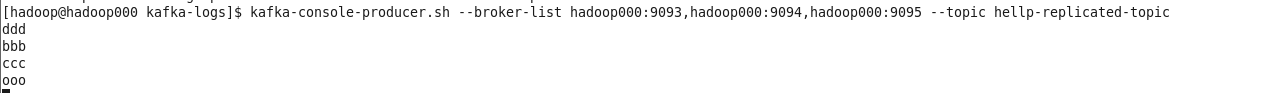
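With three replicas, the Isr field shows whether all of them are in sync. Below is a minimal sketch that counts them per partition from --describe output. It assumes bash and awk. The helper name isr_counts is hypothetical. It also assumes the partition lines contain whitespace-separated fields such as "Partition: 0" and "Isr: 1,2,3". Check this against your version's actual output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each partition line of `kafka-topics.sh --describe`
# output on stdin, print "<partition> <number of in-sync replicas>".
isr_counts() {
  awk '{
    part = ""; isr = ""
    for (i = 1; i < NF; i++) {
      if ($i == "Partition:") part = $(i + 1)
      if ($i == "Isr:") isr = $(i + 1)
    }
    # split() returns the number of comma-separated broker ids
    if (part != "") print part, split(isr, ids, ",")
  }'
}

# Usage:
# kafka-topics.sh --describe --zookeeper hadoop000:2181 --topic hellp-replicated-topic | isr_counts
```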

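4. Produce (send) messages, specifying the brokers:

The ports 9093, 9094 and 9095 in --broker-list are the ones set by the listeners property in server-1.properties, server-2.properties and server-3.properties.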

kafka-console-producer.sh --broker-list hadoop000:9093,hadoop000:9094,hadoop000:9095 --topic hellp-replicated-topic

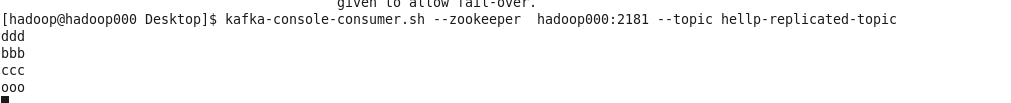
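The --broker-list value repeats the same host for each listener port. Below is a minimal sketch that builds it. It assumes bash. The helper name broker_list is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: broker_list HOST PORT... -> HOST:PORT1,HOST:PORT2,...
broker_list() {
  local host="$1" out="" port
  shift
  for port in "$@"; do
    # ${out:+$out,} adds the previous entries plus a comma only when out is non-empty
    out="${out:+$out,}$host:$port"
  done
  printf '%s\n' "$out"
}

# Usage:
# kafka-console-producer.sh --broker-list "$(broker_list hadoop000 9093 9094 9095)" \
#   --topic hellp-replicated-topic
```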

5. Consume messages, specifying ZooKeeper:

kafka-console-consumer.sh --zookeeper hadoop000:2181 --topic hellp-replicated-topic

Now any data sent from the producer is received by the consumer.