I. Deploying kube-prometheus

0. System information

[root@test-centos ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

1. Install git

yum install git -y

2. Official download location

https://github.com/prometheus-operator/kube-prometheus/

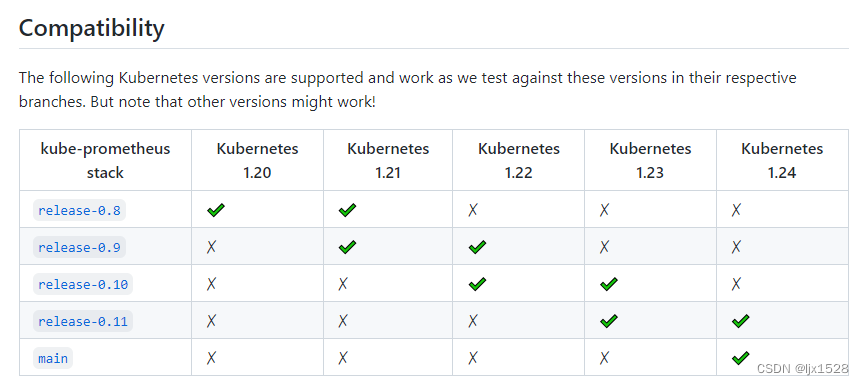

Note: download the kube-prometheus release that matches your Kubernetes version.

3. Clone kube-prometheus

git clone https://github.com/prometheus-operator/kube-prometheus.git
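The note in step 2 says to pick the kube-prometheus version that matches the cluster. A minimal sketch of cloning a specific release branch instead of the default branch follows. The branch `release-0.7` is only an assumed example, so check the compatibility table in the repository README for the branch that fits your Kubernetes version. The helper name `clone_cmd` is hypothetical, and it only prints the command so it can be reviewed before running.

```shell
# Assumed example branch; replace it with the one that matches your cluster.
BRANCH="${BRANCH:-release-0.7}"
REPO="https://github.com/prometheus-operator/kube-prometheus.git"

# Print (do not run) the clone command for a given release branch.
clone_cmd() {
  printf 'git clone --depth 1 --branch %s %s\n' "$1" "$REPO"
}

clone_cmd "$BRANCH"
# To actually clone, run the printed command yourself.
```

Printing first keeps the choice of branch explicit, which matters because the manifests differ between releases.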

4. List the files in the manifests directory

[root@test-centos manifests]# pwd

/root/kube-prometheus/manifests

[root@test-centos manifests]# ll

total 1736

-rw-r--r-- 1 root root 782 Jan 20 22:29 alertmanager-alertmanager.yaml

-rw-r--r-- 1 root root 5075 Jan 20 22:29 alertmanager-prometheusRule.yaml

-rw-r--r-- 1 root root 1169 Jan 20 22:29 alertmanager-secret.yaml

-rw-r--r-- 1 root root 301 Jan 20 22:29 alertmanager-serviceAccount.yaml

-rw-r--r-- 1 root root 540 Jan 20 22:29 alertmanager-serviceMonitor.yaml

-rw-r--r-- 1 root root 577 Jan 20 22:29 alertmanager-service.yaml

-rw-r--r-- 1 root root 278 Jan 20 22:29 blackbox-exporter-clusterRoleBinding.yaml

-rw-r--r-- 1 root root 287 Jan 20 22:29 blackbox-exporter-clusterRole.yaml

-rw-r--r-- 1 root root 1392 Jan 20 22:29 blackbox-exporter-configuration.yaml

-rw-r--r-- 1 root root 2994 Jan 20 22:29 blackbox-exporter-deployment.yaml

-rw-r--r-- 1 root root 96 Jan 20 22:29 blackbox-exporter-serviceAccount.yaml

-rw-r--r-- 1 root root 680 Jan 20 22:29 blackbox-exporter-serviceMonitor.yaml

-rw-r--r-- 1 root root 540 Jan 20 22:29 blackbox-exporter-service.yaml

-rw-r--r-- 1 root root 550 Jan 20 22:29 grafana-dashboardDatasources.yaml

-rw-r--r-- 1 root root 1403543 Jan 20 22:29 grafana-dashboardDefinitions.yaml

-rw-r--r-- 1 root root 454 Jan 20 22:29 grafana-dashboardSources.yaml

-rw-r--r-- 1 root root 7722 Jan 20 22:29 grafana-deployment.yaml

-rw-r--r-- 1 root root 86 Jan 20 22:29 grafana-serviceAccount.yaml

-rw-r--r-- 1 root root 379 Jan 20 22:29 grafana-serviceMonitor.yaml

-rw-r--r-- 1 root root 218 Jan 20 22:29 grafana-service.yaml

-rw-r--r-- 1 root root 2861 Jan 20 22:29 kube-prometheus-prometheusRule.yaml

-rw-r--r-- 1 root root 62711 Jan 20 22:29 kubernetes-prometheusRule.yaml

-rw-r--r-- 1 root root 464 Jan 20 22:29 kube-state-metrics-clusterRoleBinding.yaml

-rw-r--r-- 1 root root 1739 Jan 20 22:29 kube-state-metrics-clusterRole.yaml

-rw-r--r-- 1 root root 2803 Jan 20 22:29 kube-state-metrics-deployment.yaml

-rw-r--r-- 1 root root 1647 Jan 20 22:29 kube-state-metrics-prometheusRule.yaml

-rw-r--r-- 1 root root 280 Jan 20 22:29 kube-state-metrics-serviceAccount.yaml

-rw-r--r-- 1 root root 1011 Jan 20 22:29 kube-state-metrics-serviceMonitor.yaml

-rw-r--r-- 1 root root 580 Jan 20 22:29 kube-state-metrics-service.yaml

-rw-r--r-- 1 root root 444 Jan 20 22:29 node-exporter-clusterRoleBinding.yaml

-rw-r--r-- 1 root root 461 Jan 20 22:29 node-exporter-clusterRole.yaml

-rw-r--r-- 1 root root 2907 Jan 20 22:29 node-exporter-daemonset.yaml

-rw-r--r-- 1 root root 11229 Jan 20 22:29 node-exporter-prometheusRule.yaml

-rw-r--r-- 1 root root 270 Jan 20 22:29 node-exporter-serviceAccount.yaml

-rw-r--r-- 1 root root 850 Jan 20 22:29 node-exporter-serviceMonitor.yaml

-rw-r--r-- 1 root root 492 Jan 20 22:29 node-exporter-service.yaml

-rw-r--r-- 1 root root 482 Jan 20 22:29 prometheus-adapter-apiService.yaml

-rw-r--r-- 1 root root 576 Jan 20 22:29 prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml

-rw-r--r-- 1 root root 494 Jan 20 22:29 prometheus-adapter-clusterRoleBindingDelegator.yaml

-rw-r--r-- 1 root root 471 Jan 20 22:29 prometheus-adapter-clusterRoleBinding.yaml

-rw-r--r-- 1 root root 378 Jan 20 22:29 prometheus-adapter-clusterRoleServerResources.yaml

-rw-r--r-- 1 root root 409 Jan 20 22:29 prometheus-adapter-clusterRole.yaml

-rw-r--r-- 1 root root 1568 Jan 20 22:29 prometheus-adapter-configMap.yaml

-rw-r--r-- 1 root root 1804 Jan 20 22:29 prometheus-adapter-deployment.yaml

-rw-r--r-- 1 root root 515 Jan 20 22:29 prometheus-adapter-roleBindingAuthReader.yaml

-rw-r--r-- 1 root root 287 Jan 20 22:29 prometheus-adapter-serviceAccount.yaml

-rw-r--r-- 1 root root 677 Jan 20 22:29 prometheus-adapter-serviceMonitor.yaml

-rw-r--r-- 1 root root 501 Jan 20 22:29 prometheus-adapter-service.yaml

-rw-r--r-- 1 root root 447 Jan 20 22:29 prometheus-clusterRoleBinding.yaml

-rw-r--r-- 1 root root 394 Jan 20 22:29 prometheus-clusterRole.yaml

-rw-r--r-- 1 root root 4143 Jan 20 22:29 prometheus-operator-prometheusRule.yaml

-rw-r--r-- 1 root root 715 Jan 20 22:29 prometheus-operator-serviceMonitor.yaml

-rw-r--r-- 1 root root 10546 Jan 20 22:29 prometheus-prometheusRule.yaml

-rw-r--r-- 1 root root 1153 Jan 21 10:38 prometheus-prometheus.yaml

-rw-r--r-- 1 root root 471 Jan 20 22:29 prometheus-roleBindingConfig.yaml

-rw-r--r-- 1 root root 1547 Jan 20 22:29 prometheus-roleBindingSpecificNamespaces.yaml

-rw-r--r-- 1 root root 366 Jan 20 22:29 prometheus-roleConfig.yaml

-rw-r--r-- 1 root root 1705 Jan 20 22:29 prometheus-roleSpecificNamespaces.yaml

-rw-r--r-- 1 root root 271 Jan 20 22:29 prometheus-serviceAccount.yaml

-rw-r--r-- 1 root root 6836 Jan 20 22:29 prometheus-serviceMonitorApiserver.yaml

-rw-r--r-- 1 root root 440 Jan 20 22:29 prometheus-serviceMonitorCoreDNS.yaml

-rw-r--r-- 1 root root 6355 Jan 20 22:29 prometheus-serviceMonitorKubeControllerManager.yaml

-rw-r--r-- 1 root root 7141 Jan 20 22:29 prometheus-serviceMonitorKubelet.yaml

-rw-r--r-- 1 root root 530 Jan 20 22:29 prometheus-serviceMonitorKubeScheduler.yaml

-rw-r--r-- 1 root root 527 Jan 20 22:29 prometheus-serviceMonitor.yaml

-rw-r--r-- 1 root root 558 Jan 20 22:29 prometheus-service.yaml

drwxr-xr-x 2 root root 4096 Jan 20 22:29 setup

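5. Change the image registry

Some images cannot be pulled from the overseas registry, so here we point the images for prometheus-operator, prometheus, alertmanager, kube-state-metrics, node-exporter, and prometheus-adapter at a mirror inside China. I use the USTC mirror here.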

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' setup/prometheus-operator-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-prometheus.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' alertmanager-alertmanager.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' kube-state-metrics-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' node-exporter-daemonset.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-adapter-deployment.yaml
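The six sed commands above can also be written as one loop. The sketch below assumes GNU sed (as shipped with CentOS) and that it is run from the manifests directory. The function name `rewrite_registry` is hypothetical. It escapes the dot in `quay.io` and matches the trailing slash so that only registry prefixes are rewritten.

```shell
# Rewrite the quay.io registry prefix to the USTC mirror in the given files.
rewrite_registry() {
  local mirror="quay.mirrors.ustc.edu.cn"
  local f
  for f in "$@"; do
    # Skip files that do not exist instead of aborting the whole loop.
    [ -f "$f" ] || { echo "skip: $f not found" >&2; continue; }
    sed -i "s#quay\.io/#${mirror}/#g" "$f"
  done
}

# Usage, from the manifests directory:
#   rewrite_registry setup/prometheus-operator-deployment.yaml \
#     prometheus-prometheus.yaml alertmanager-alertmanager.yaml \
#     kube-state-metrics-deployment.yaml node-exporter-daemonset.yaml \
#     prometheus-adapter-deployment.yaml
#   grep -rn 'quay' .   # review which registry each image now uses
```

Running the function twice is harmless, because the mirror host name no longer contains the string `quay.io/`.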

6. Change the Service type of prometheus, alertmanager, and grafana to NodePort

6.1 Edit the prometheus Service

[root@test-centos manifests]# cat prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.24.0
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
  selector:
    app: prometheus
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    prometheus: k8s
  sessionAffinity: ClientIP

6.2 Edit the alertmanager Service

[root@test-centos manifests]# cat alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.21.0
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9093
    targetPort: web
  selector:
    alertmanager: main
    app: alertmanager
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

6.3 Edit the grafana Service

[root@test-centos manifests]# cat grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  ports:
  - name: http
    port: 3000
    targetPort: http
  selector:
    app: grafana
  type: NodePort
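Before applying the manifests, it may be worth confirming that all three Service files really carry `type: NodePort`. The sketch below is a simple text check, not a YAML parser, and the function name `is_nodeport` is hypothetical. It assumes it is run from the manifests directory.

```shell
# Return success if the file contains a "type: NodePort" line.
is_nodeport() {
  grep -Eq '^[[:space:]]*type:[[:space:]]*NodePort[[:space:]]*$' "$1"
}

# Report the state of the three Service manifests edited in step 6.
for f in prometheus-service.yaml alertmanager-service.yaml grafana-service.yaml; do
  [ -f "$f" ] || continue
  if is_nodeport "$f"; then
    echo "$f: NodePort"
  else
    echo "$f: type NodePort not set"
  fi
done
```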

7. Install kube-prometheus

7.1 Install the CRDs and prometheus-operator

[root@test-centos manifests]# kubectl apply -f setup/

namespace/monitoring created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

service/prometheus-operator created

7.2 Check the status of prometheus-operator

[root@test-centos manifests]# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

prometheus-operator-c5c9679cd-4wwf7 2/2 Running 0 10m
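Instead of polling `kubectl get pod` by hand, the wait can be scripted. The sketch below assumes `kubectl` is already configured for the cluster. The function name `wait_for_operator` and the timeout values are arbitrary choices for illustration.

```shell
# Block until the CRDs are registered and the operator Deployment is rolled out.
wait_for_operator() {
  kubectl wait --for=condition=Established crd --all --timeout=120s &&
    kubectl -n monitoring rollout status deployment/prometheus-operator --timeout=300s
}

# Usage, after "kubectl apply -f setup/":
#   wait_for_operator && kubectl apply -f .
```

Waiting here avoids applying the remaining manifests before the custom resource types they depend on exist.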

7.3 Install prometheus, alertmanager, grafana, kube-state-metrics, and node-exporter

[root@test-centos manifests]# kubectl apply -f .

alertmanager.monitoring.coreos.com/main created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager created

secret/grafana-datasources created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-statefulset created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-operator created

prometheus.monitoring.coreos.com/k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-rules created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

7.4 Check the status of each service

[root@test-centos manifests1]# kubectl get pod -n monitoring

NAME                                  READY   STATUS    RESTARTS   AGE
alertmanager-main-0                   2/2     Running   0          4h39m
alertmanager-main-1                   2/2     Running   0          4h39m
alertmanager-main-2                   2/2     Running   0          4h39m
blackbox-exporter-85b45b544b-vvpzp    3/3     Running   0          4h39m
grafana-6645c76f4-npzqm               1/1     Running   0          4h39m
kube-state-metrics-598b6577d9-qvtbn   3/3     Running   0          4h39m
node-exporter-gqh2l                   2/2     Running   0          4h39m
node-exporter-ndq72                   2/2     Running   0          4h39m
prometheus-adapter-7c8d9fb446-flxck   1/1     Running   0          4h39m
prometheus-k8s-0                      2/2     Running   3          4h39m
prometheus-k8s-1                      2/2     Running   3          4h39m
prometheus-operator-cb98796f8-h8r9t   2/2     Running   0          7h

8. Verify that prometheus, alertmanager, and grafana are working

8.1 Access prometheus

8.2 Access alertmanager

8.3 Access grafana

The default username and password are admin/admin.
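Because the Services were switched to NodePort without a fixed port, Kubernetes assigns the port numbers. A minimal sketch for looking them up and building the URLs to open in a browser follows. The function names `node_port` and `service_url` are hypothetical, and the node IP is a placeholder you have to supply.

```shell
NAMESPACE="monitoring"

# Print the NodePort assigned to the first port of a Service.
node_port() {
  kubectl -n "$NAMESPACE" get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build an http URL from a node address and a port.
service_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# Usage (NODE_IP is the address of any node in your cluster):
#   service_url "$NODE_IP" "$(node_port prometheus-k8s)"
#   service_url "$NODE_IP" "$(node_port alertmanager-main)"
#   service_url "$NODE_IP" "$(node_port grafana)"
```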

II. Custom monitoring (using the etcd service as an example)

1. Monitor the etcd service

1.1 Install etcd (single node)

yum -y install etcd

1.2 Start the etcd service

systemctl start etcd

1.3 Write the etcd_svc_ep file

[root@test-centos manifests1]# cat etcd_svc_ep.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: etcd
  name: etcd-k8s
  namespace: kube-system
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: port
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: etcd
  name: etcd-k8s
  namespace: kube-system
subsets:
- addresses:
  - ip: 10.0.2.95 # etcd service address
  - ip: 10.0.0.4 # etcd service address
    nodeName: etcd-master
  ports:
  - name: port
    port: 2379 # etcd service port
    protocol: TCP

1.4 Apply the etcd_svc_ep file

kubectl apply -f etcd_svc_ep.yaml

1.5 Create the Prometheus scrape configuration file

[root@test-centos manifests1]# cat prometheus-additional.yaml

- job_name: "etcd"
  scheme: http
  static_configs:
  - targets:
    - 10.0.2.95:2379
    - 10.0.0.4:2379
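Before handing these targets to Prometheus, it can help to confirm that each one actually serves metrics over plain HTTP. The sketch below assumes `curl` is installed and that etcd exposes `/metrics` on its client port. The function name `check_etcd_metrics` is hypothetical. An etcd installed from the distribution package may listen only on localhost until its client URL settings are changed, in which case the check reports the target as unreachable.

```shell
# Report whether host:port answers on /metrics within a few seconds.
check_etcd_metrics() {
  if curl -fsS --max-time 3 "http://$1/metrics" >/dev/null 2>&1; then
    echo "$1 ok"
  else
    echo "$1 unreachable"
  fi
}

# Usage with the targets from prometheus-additional.yaml:
#   check_etcd_metrics 10.0.2.95:2379
#   check_etcd_metrics 10.0.0.4:2379
```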

1.6 Load the prometheus-additional file

Note: this file is stored in the cluster as a Secret.

[root@test-centos manifests1]# kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

[root@test-centos manifests1]# kubectl get secret -n monitoring

NAME TYPE DATA AGE

additional-configs Opaque 1 7h52m
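`kubectl create secret` fails if the Secret already exists, which gets in the way when the scrape configuration is edited later. A hedged sketch of an update-friendly variant follows. It assumes a kubectl version that supports `--dry-run=client` (1.18 or newer), and the function names are hypothetical.

```shell
# Create or update the Secret from the local file.
apply_additional_configs() {
  kubectl -n monitoring create secret generic additional-configs \
    --from-file=prometheus-additional.yaml \
    --dry-run=client -o yaml | kubectl apply -f -
}

# Print the scrape configuration currently stored in the Secret.
show_additional_configs() {
  kubectl -n monitoring get secret additional-configs \
    -o jsonpath='{.data.prometheus-additional\.yaml}' | base64 -d
}

# Usage, from the directory that contains prometheus-additional.yaml:
#   apply_additional_configs && show_additional_configs
```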

1.7 Edit the main Prometheus manifest so that it references the prometheus-additional file

[root@test-centos manifests1]# cat prometheus-prometheus.yaml

apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.24.0
    prometheus: k8s
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  image: quay.io/prometheus/prometheus:v2.24.0
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.24.0
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  additionalScrapeConfigs:
    name: additional-configs # newly added
    key: prometheus-additional.yaml # newly added
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.24.0

1.8 Apply the main Prometheus manifest

kubectl apply -f prometheus-prometheus.yaml

1.9 Wait a moment, then check whether the change has taken effect
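One way to check from the command line is to ask the Prometheus HTTP API for the `up` series of the new job. The sketch below assumes `curl` is installed and that Prometheus is reachable at the base URL you pass in, for example the NodePort address from step 8. The function name `etcd_up` is hypothetical.

```shell
# Query Prometheus for up{job="etcd"}; prints the raw JSON response.
etcd_up() {
  curl -fsS --max-time 5 --get \
    --data-urlencode 'query=up{job="etcd"}' \
    "$1/api/v1/query"
}

# Usage (replace the placeholders with your node address and NodePort):
#   etcd_up "http://<node-ip>:<node-port>"
```

A value of 1 for a target in the response means the last scrape of that etcd endpoint succeeded. The same information is shown on the Targets page of the Prometheus web UI.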
