Multivariate Linear Regression

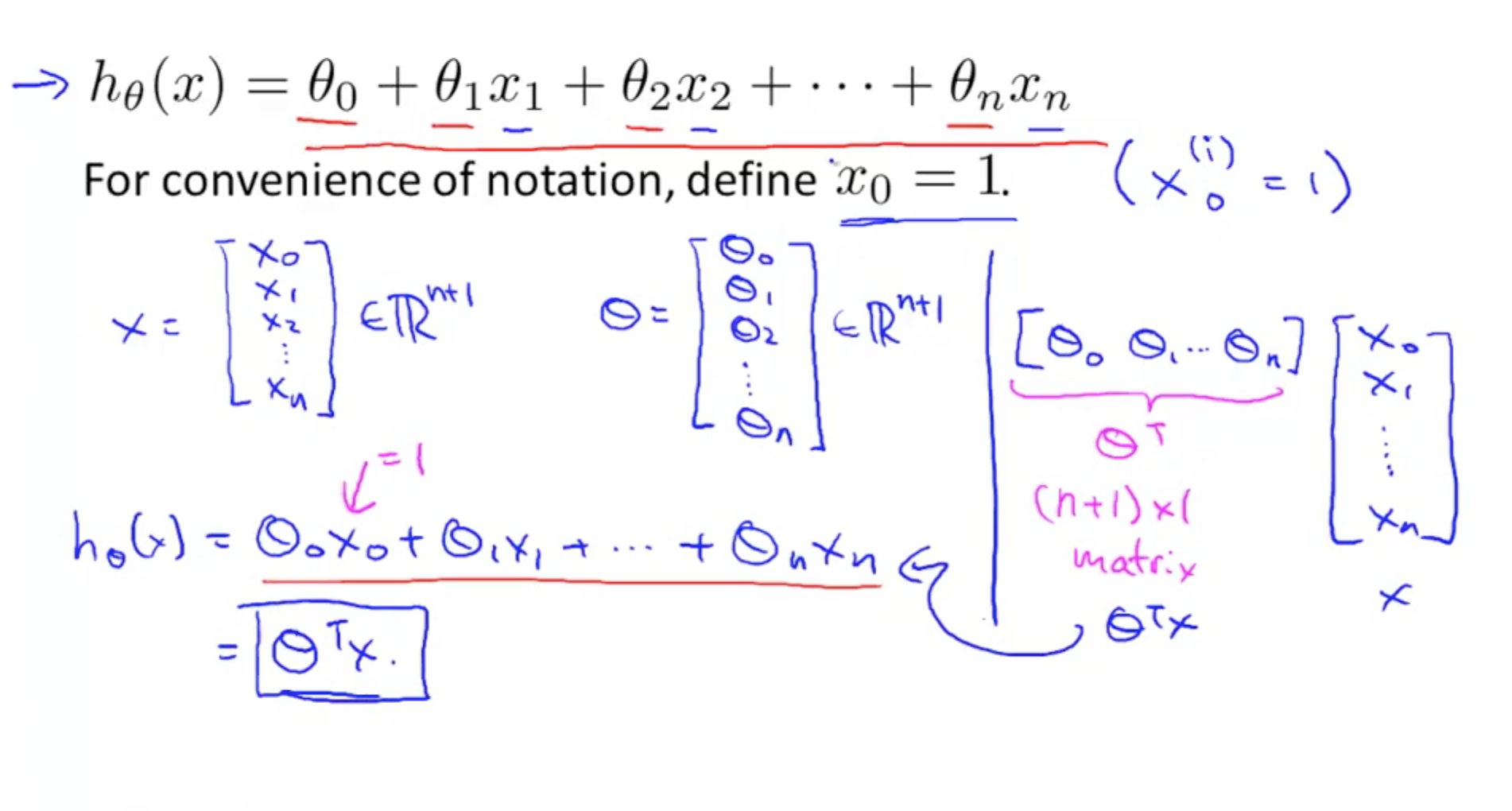

Build up the model (matrix notation)

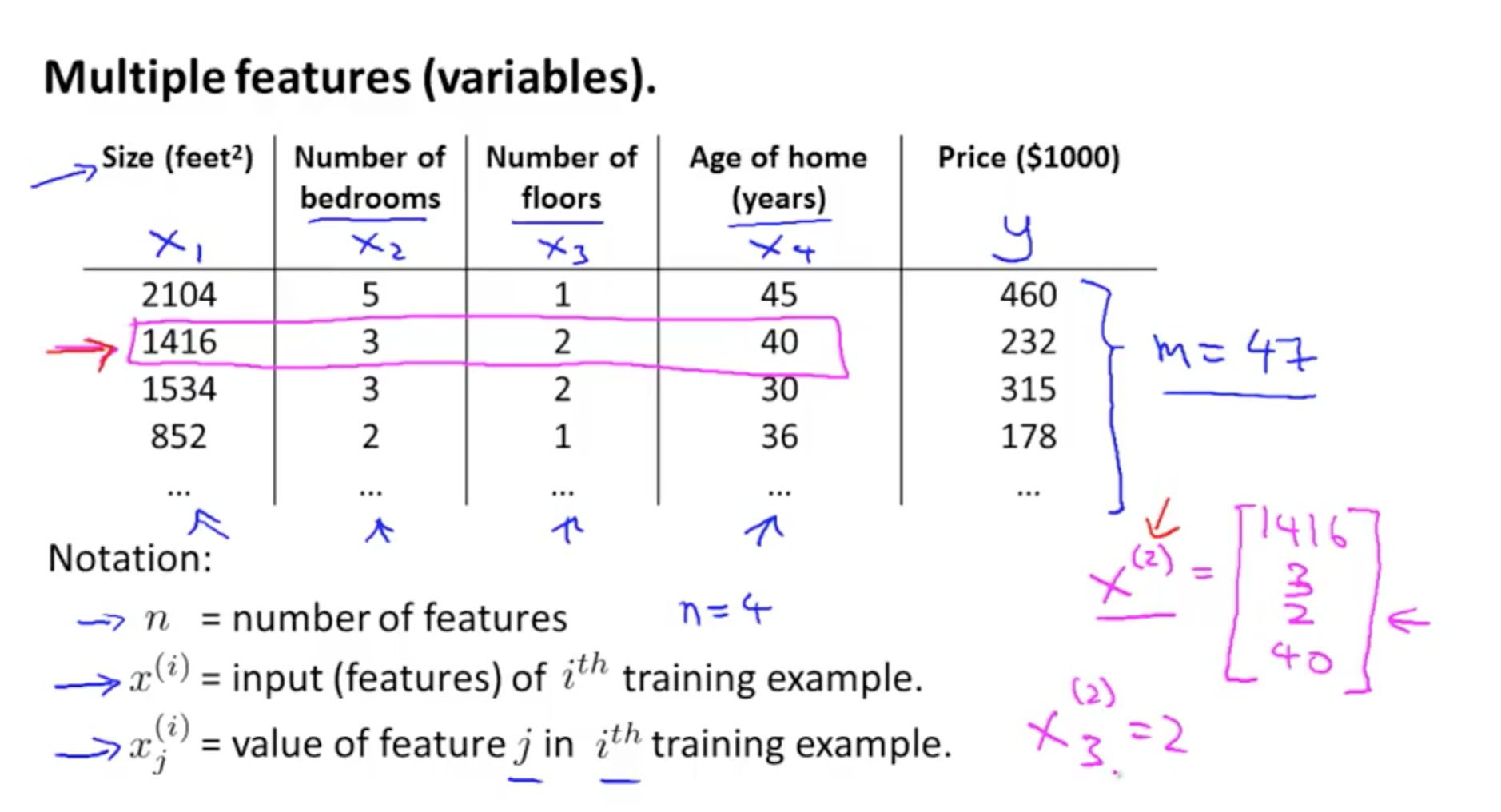

Notation used to describe the model:

n = number of features (variables), m = number of training examples

$x^{(i)}_j$

where i indexes the i-th training example and j indexes the j-th feature.

To vectorize the model, we change it slightly: we add a constant feature $x_0 = 1$, write the parameters as $\theta^T$ (a $1\times(n+1)$ matrix), and write the features as $x$ (an $(n+1)\times 1$ matrix), where n is the number of features. The hypothesis then becomes a single product, $h_\theta(x) = \theta^T x = \theta_0 x_0 + \theta_1 x_1 + \dots + \theta_n x_n$.

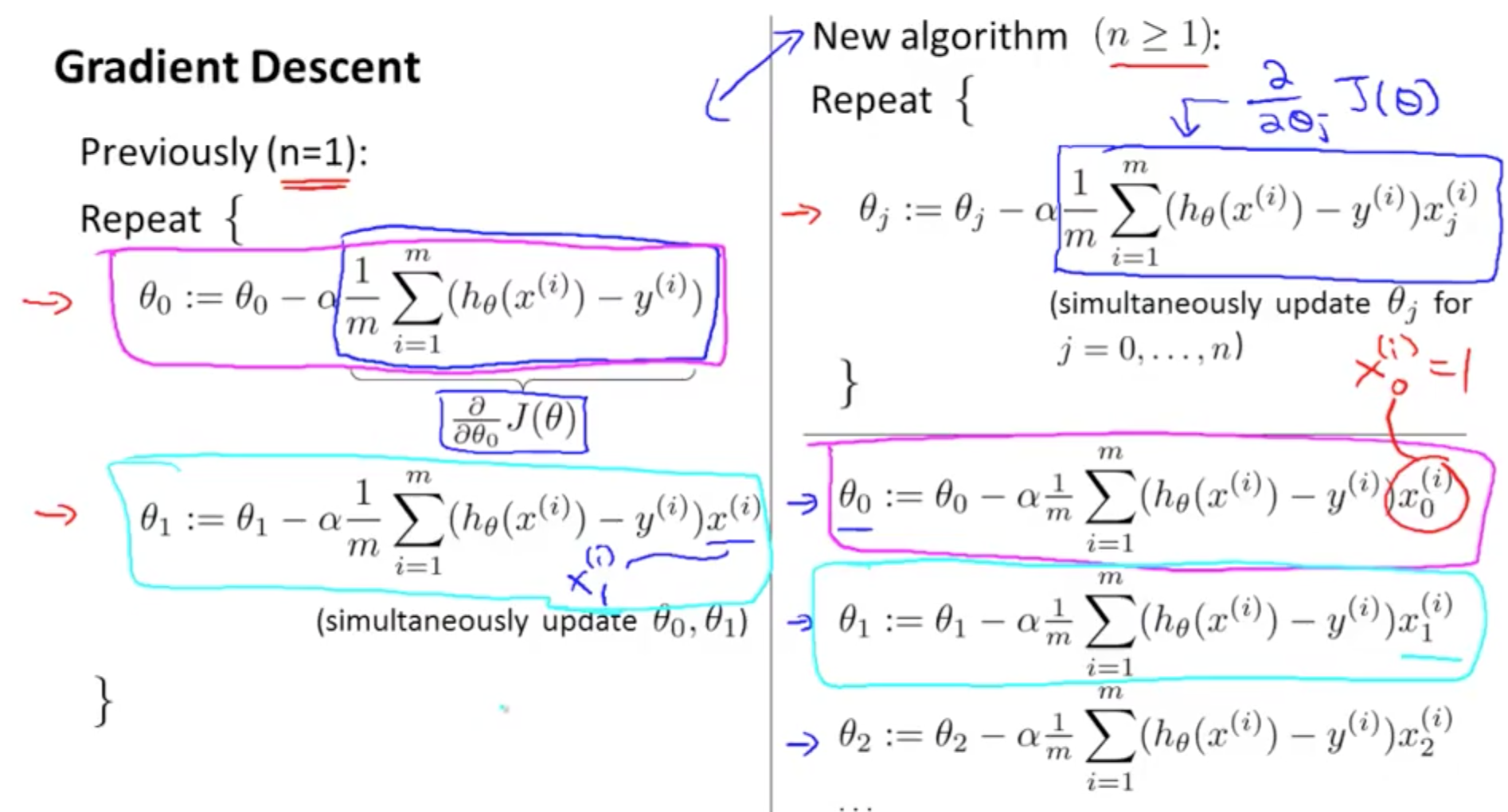
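A minimal Octave/MATLAB sketch of the vectorized hypothesis, assuming a made-up data set with two features (house size and number of bedrooms) and made-up parameter values. Stacking the examples as the rows of a design matrix `X` (with the $x_0 = 1$ column prepended) lets one product compute $\theta^T x^{(i)}$ for every example at once.

```matlab
% Hypothetical toy data: m = 3 examples, n = 2 features (size, bedrooms)
features = [2104 3; 1416 2; 1534 3];
m = size(features, 1);
X = [ones(m, 1), features];   % prepend x0 = 1 -> m x (n+1) design matrix
theta = [80; 0.1; 50];        % (n+1) x 1 parameter vector, values made up
h = X * theta;                % h(i) = theta' * x(i) for every example at once
disp(h)
```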

Skills for gradient descent

Method used on the training data:

gradient descent
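A minimal sketch of batch gradient descent in Octave/MATLAB, assuming the standard update $\theta_j := \theta_j - \alpha \frac{1}{m}\sum_{i=1}^{m}(h_\theta(x^{(i)}) - y^{(i)})x^{(i)}_j$ written in vectorized form so that all $\theta_j$ are updated simultaneously. The data (generated from $y = 1 + 2x$), the learning rate and the iteration count are made-up values for illustration.

```matlab
% Hypothetical toy data generated from y = 1 + 2x, so the optimum is theta = [1; 2]
X = [ones(4, 1), (1:4)'];   % design matrix with the x0 = 1 column
y = [3; 5; 7; 9];
m = length(y);
alpha = 0.1;                % learning rate (assumed value)
num_iters = 5000;           % number of iterations (assumed value)
theta = zeros(2, 1);
for iter = 1:num_iters
  % vectorized form of: theta_j := theta_j - alpha/m * sum_i (h(x(i)) - y(i)) * x(i)_j
  theta = theta - (alpha / m) * X' * (X * theta - y);
end
disp(theta)
```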

Important skills when running gradient descent:

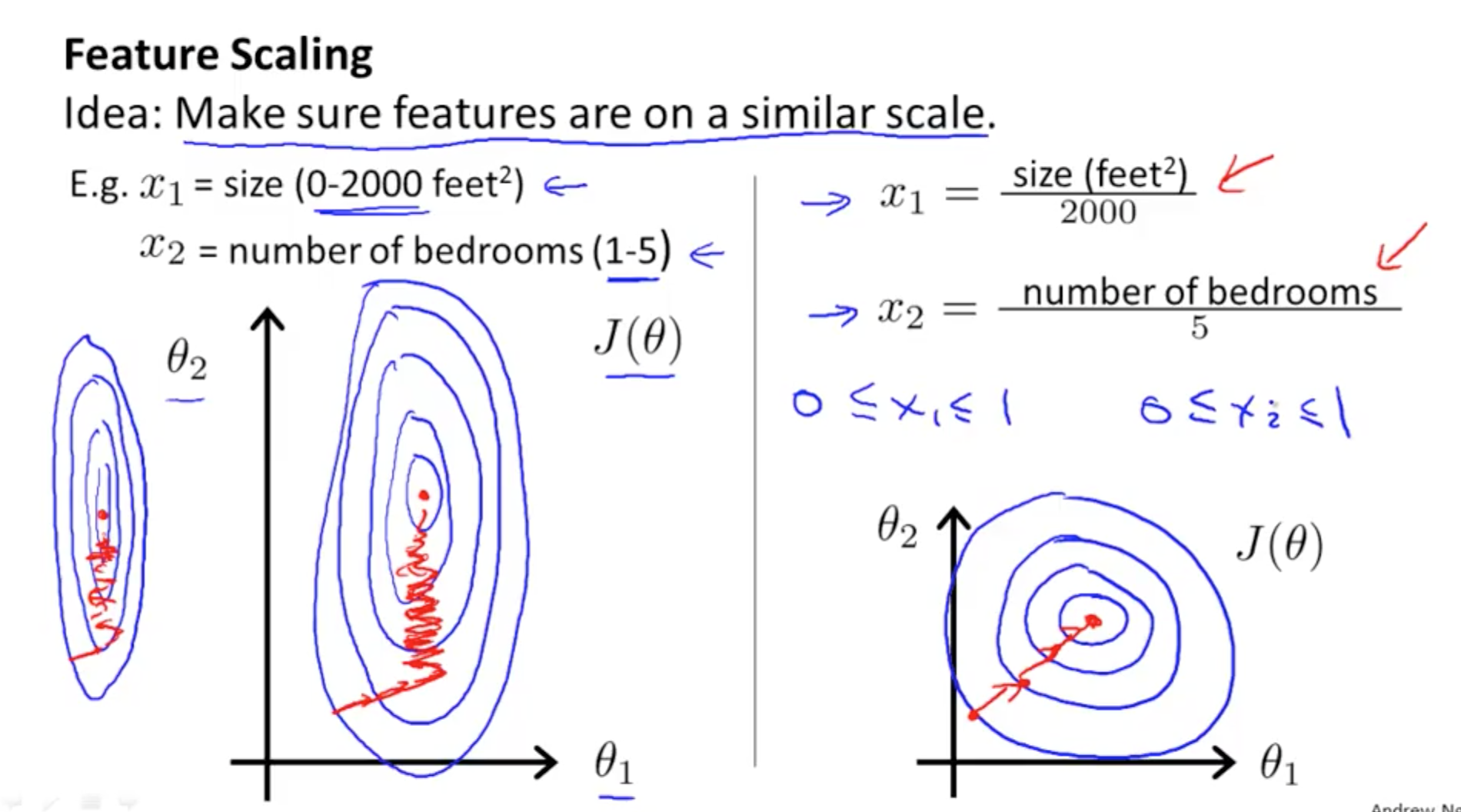

Feature scaling: put all features on a similar scale (roughly within [-1, 1]).

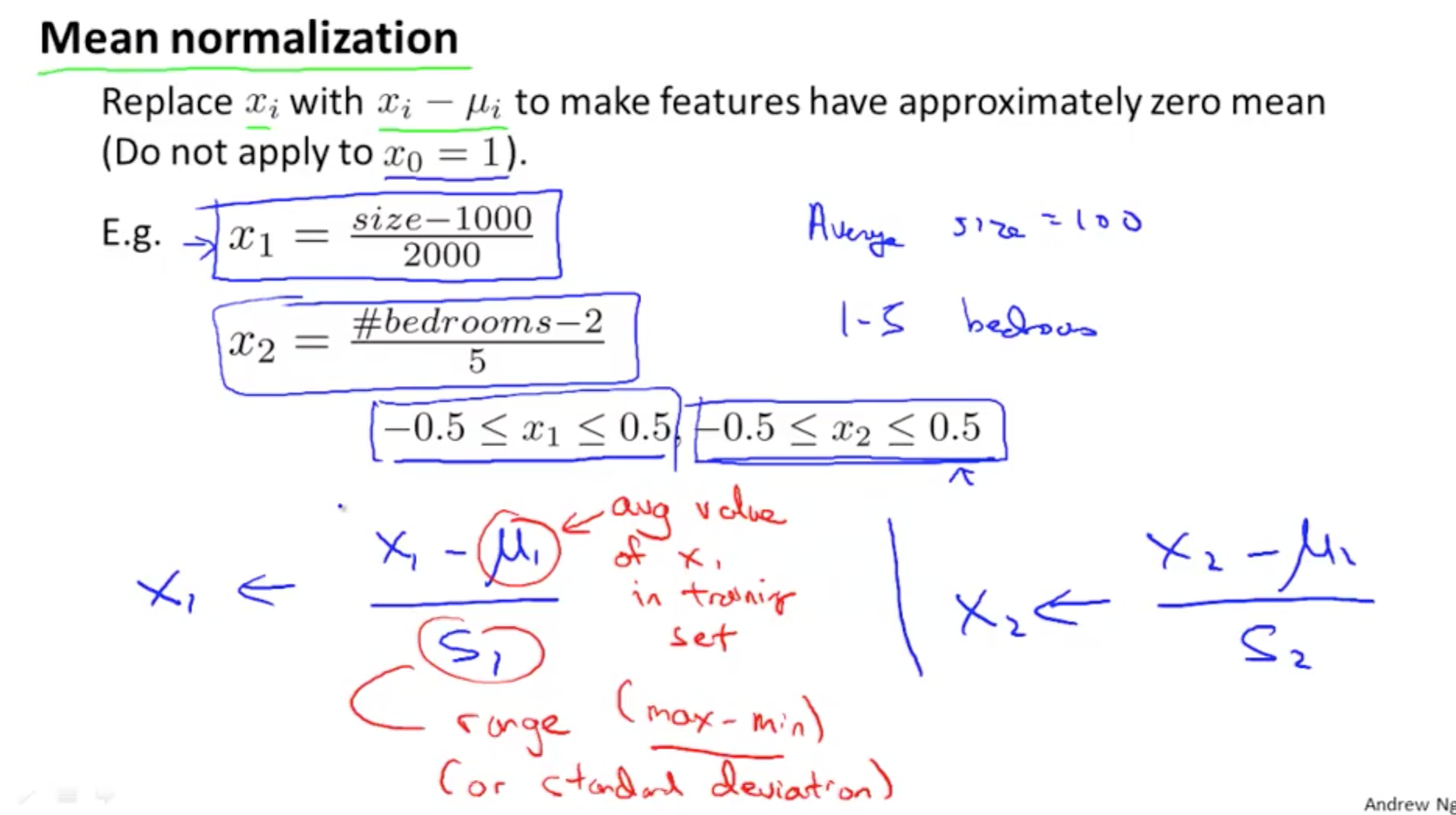

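Mean normalization: replace each feature $x_j$ with $(x_j - \mu_j)/s_j$, where $\mu_j$ is its mean over the training set and $s_j$ is its range (max - min) or standard deviation, so that the features have approximately zero mean.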

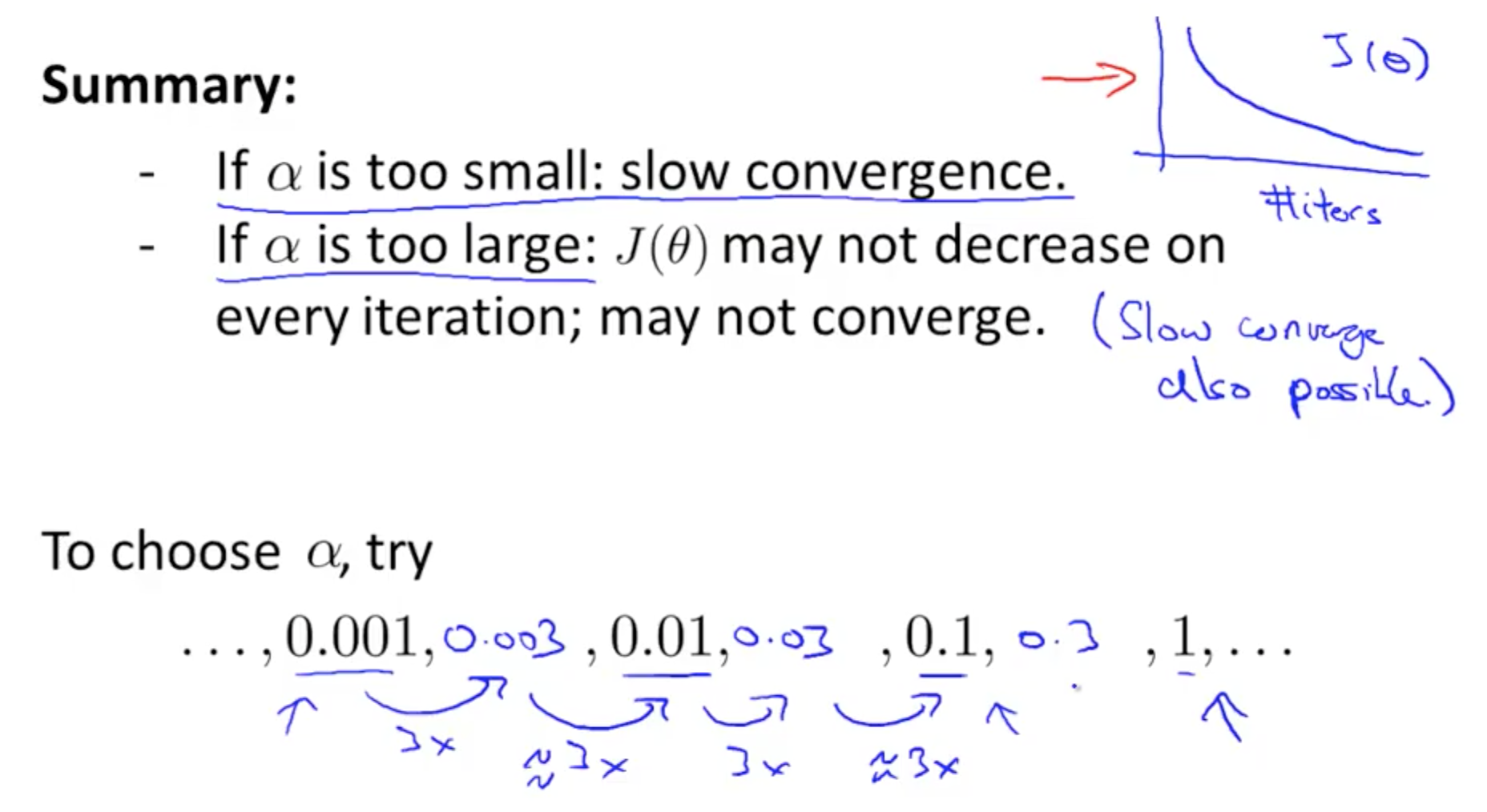
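A sketch of mean normalization on hypothetical house data, using the range as $s_j$ and `repmat` so it also runs on older Matlab versions without implicit expansion. The same `mu` and `s` have to be reused when normalizing new examples before making predictions.

```matlab
% Hypothetical raw features: house size (feet^2) and number of bedrooms
X_raw = [2104 3; 1416 2; 1534 3; 852 2];
k = size(X_raw, 1);
mu = mean(X_raw);               % 1 x n row of feature means
s  = max(X_raw) - min(X_raw);   % range of each feature (std(X_raw) is another option)
% subtract the mean and divide by the range, column by column
X_norm = (X_raw - repmat(mu, k, 1)) ./ repmat(s, k, 1);
disp(X_norm)
```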

Choose the right learning rate:

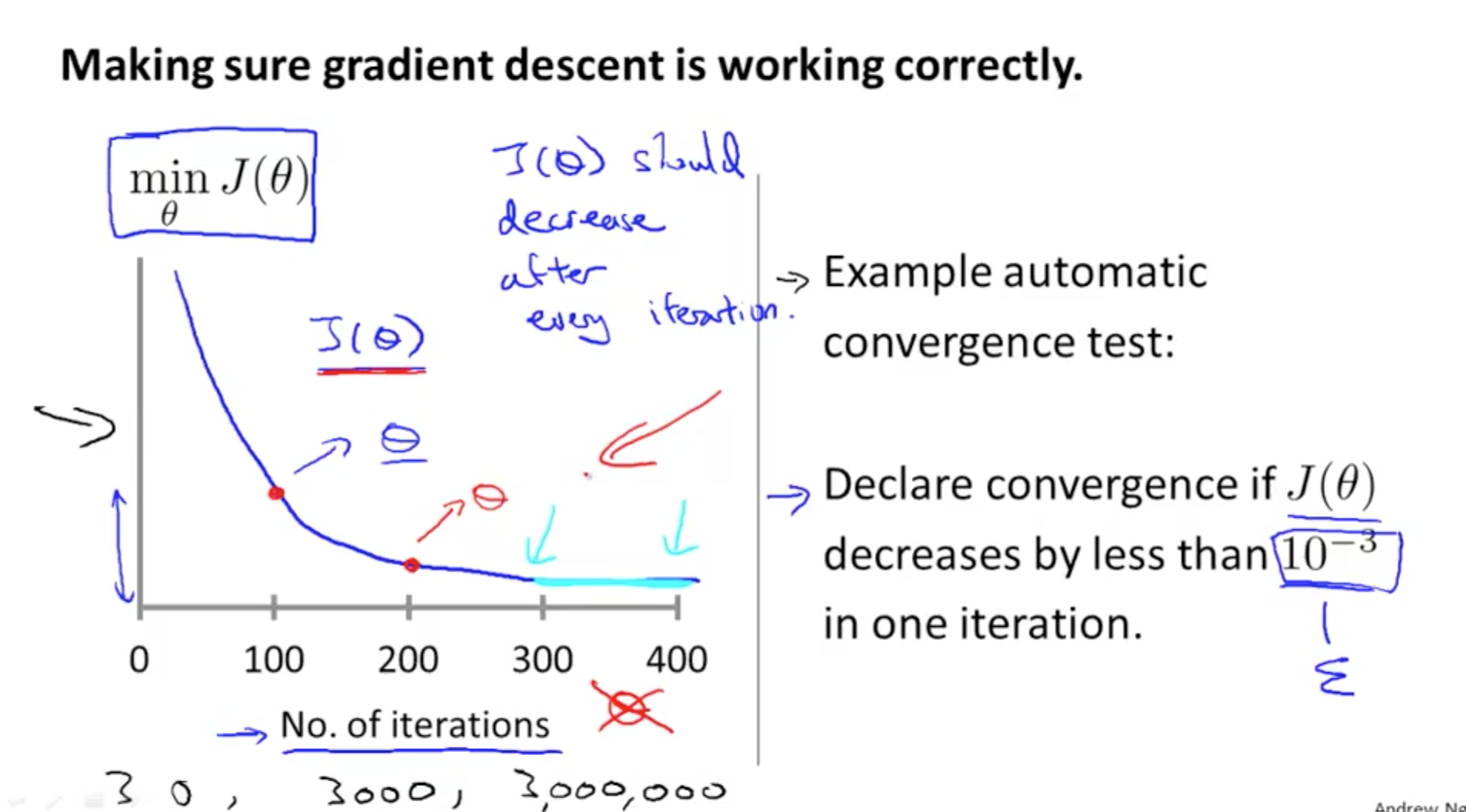

Make sure gradient descent is working correctly: plot the cost $J(\theta)$ against the number of iterations; it should decrease after every iteration.

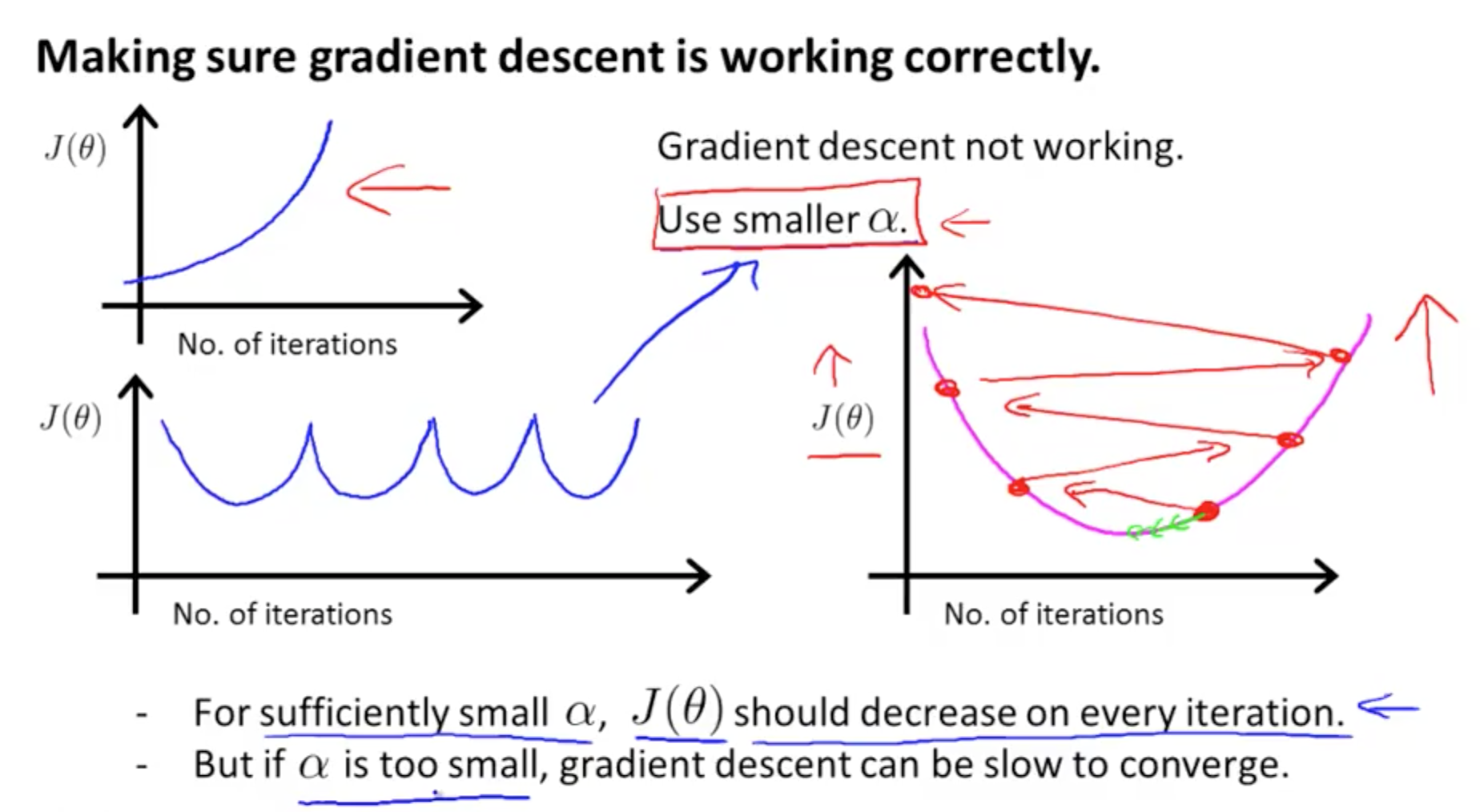

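Choose a learning rate $\alpha$ that is neither too large nor too small: if $\alpha$ is too small, gradient descent converges slowly; if $\alpha$ is too large, $J(\theta)$ may not decrease on every iteration and may not converge at all.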

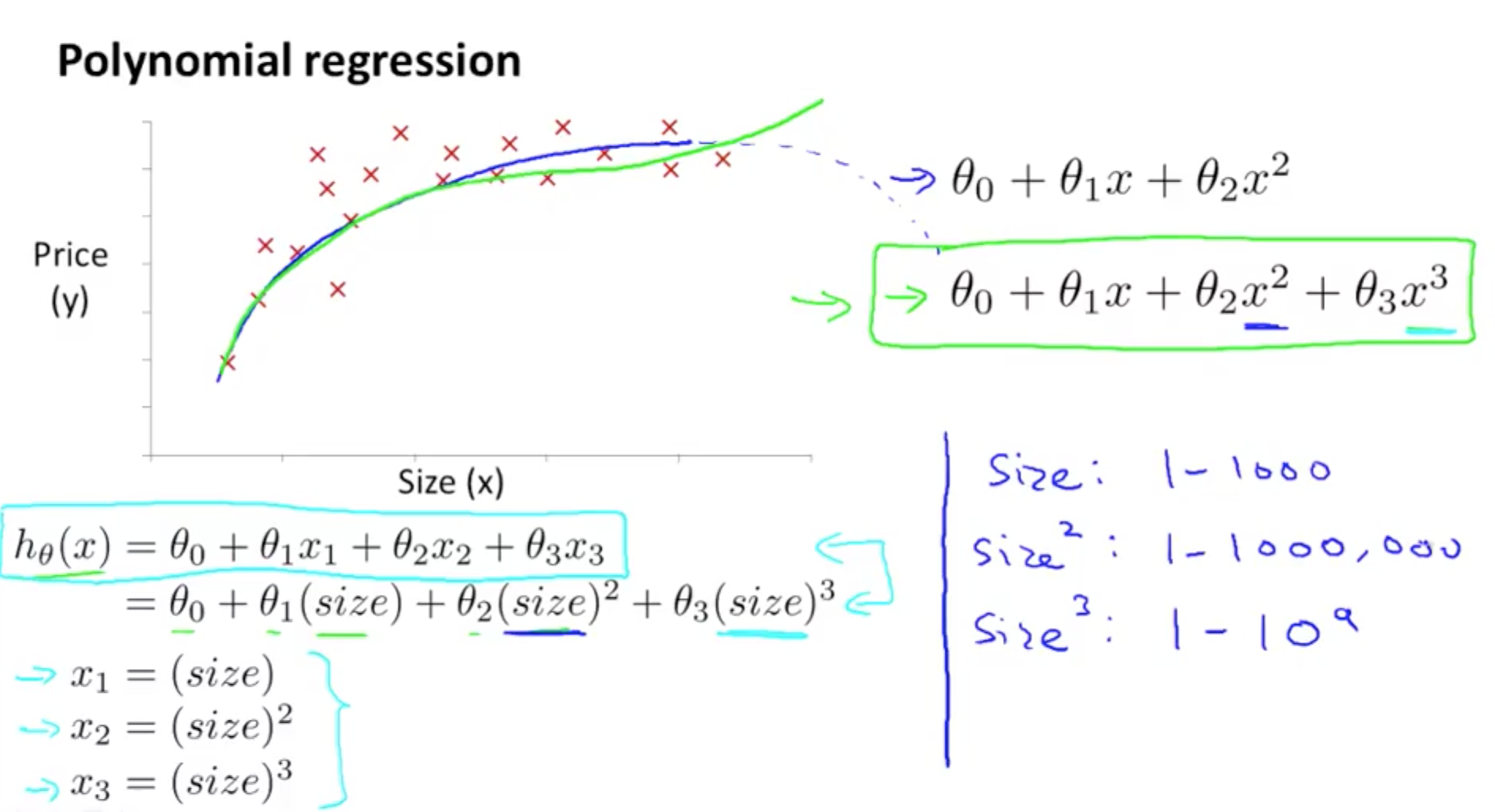
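One way to check convergence, sketched on made-up data: record $J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}(h_\theta(x^{(i)}) - y^{(i)})^2$ after every iteration for a few candidate learning rates. The three values in `alphas` are assumptions chosen so that, on this data, one converges slowly, one converges well and one diverges.

```matlab
% Hypothetical toy data generated from y = 1 + 2x
X = [ones(4, 1), (1:4)'];
y = [3; 5; 7; 9];
m = length(y);
alphas = [0.01, 0.1, 0.3];      % too small, reasonable, too large (for this data)
num_iters = 100;
J_history = zeros(num_iters, numel(alphas));
for k = 1:numel(alphas)
  theta = zeros(2, 1);
  for iter = 1:num_iters
    theta = theta - (alphas(k) / m) * X' * (X * theta - y);
    J_history(iter, k) = sum((X * theta - y) .^ 2) / (2 * m);   % cost after this step
  end
end
% plot(1:num_iters, J_history) would show one curve per learning rate
```

With `alpha = 0.1` the cost falls on every iteration, `alpha = 0.01` is still falling slowly after 100 iterations, and `alpha = 0.3` makes the cost grow.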

Polynomial Regression

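Sometimes a straight line does not fit the data well, and a quadratic or higher-order polynomial works better. Polynomial regression reuses the linear model by treating the powers of a feature (e.g. $x$, $x^2$, $x^3$) as separate features.

Tip: feature scaling matters a lot here, because $x$, $x^2$ and $x^3$ have very different ranges. Feature scaling without mean normalization simply divides each feature by its range.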

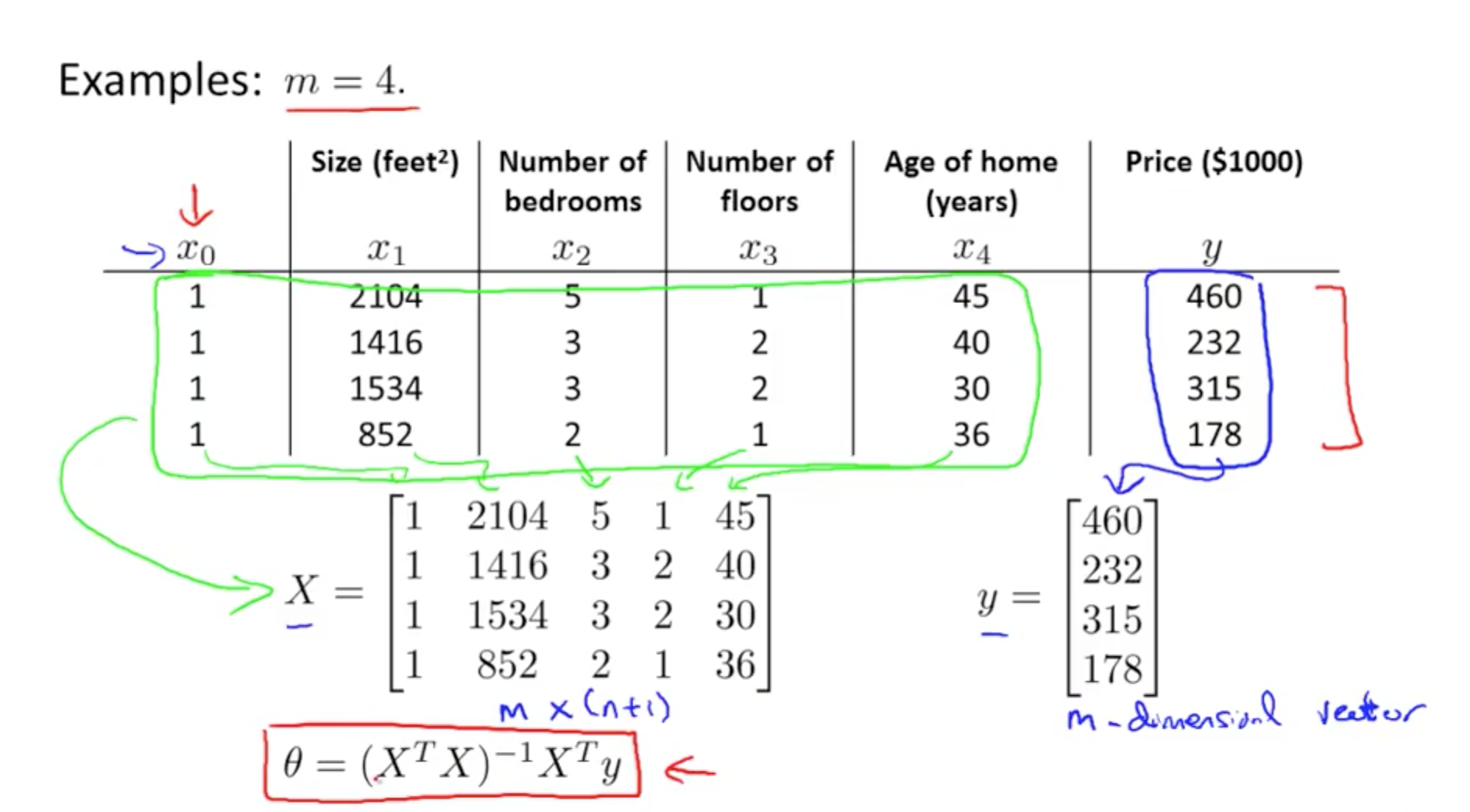

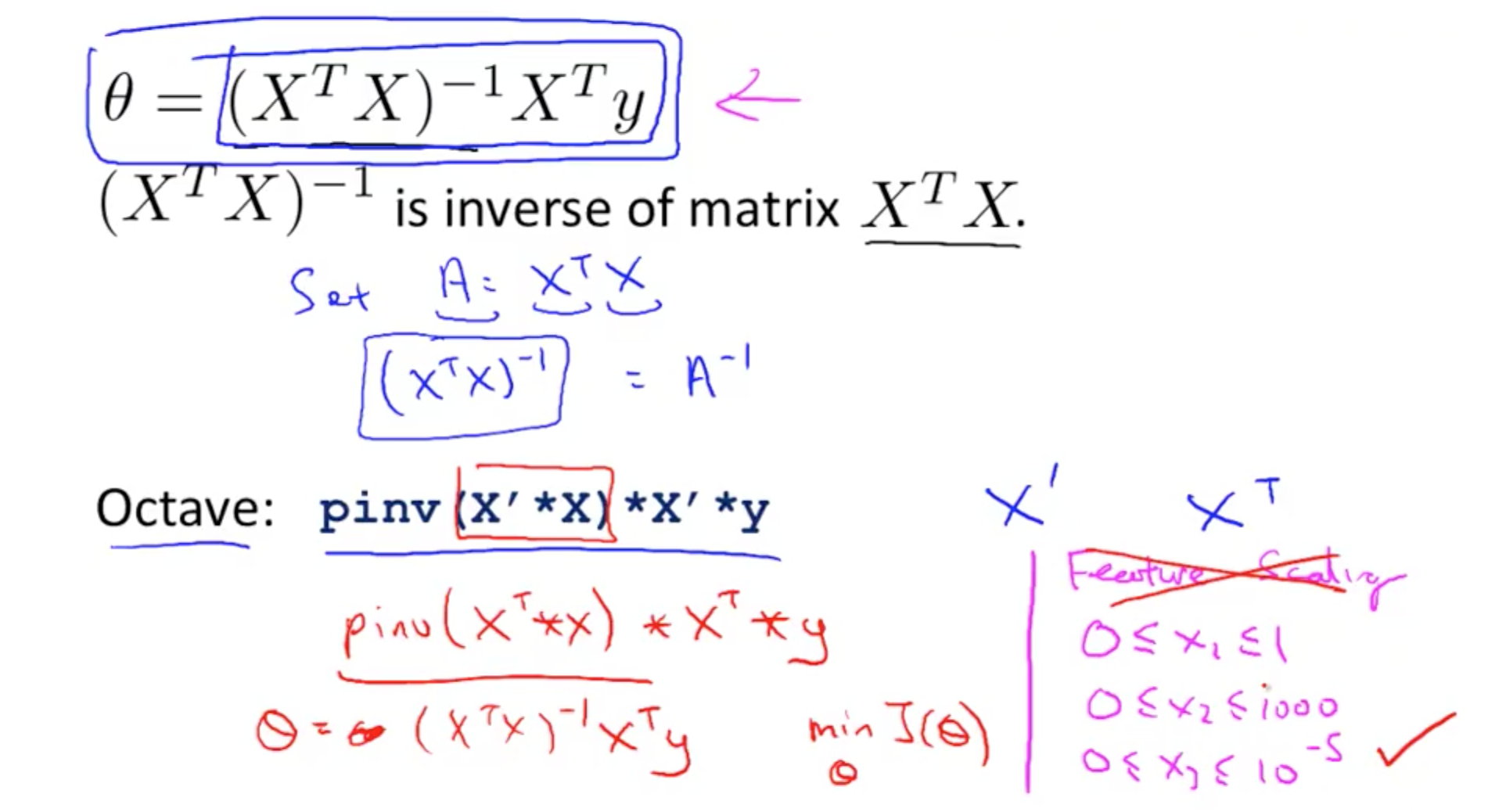
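A sketch of building polynomial features from one hypothetical feature (house size) and scaling them by their ranges, as in the tip above. Without the scaling, the $x^3$ column would be on the order of $10^{10}$ while $x$ is on the order of $10^3$.

```matlab
% Hypothetical single feature: house size in feet^2
size_ft2 = [1000; 1500; 2000; 2500; 3000];
% Treat x, x^2 and x^3 as three separate features
X_poly = [size_ft2, size_ft2 .^ 2, size_ft2 .^ 3];
% Feature scaling without mean normalization: divide each column by its range
feat_range = max(X_poly) - min(X_poly);
X_scaled = X_poly ./ repmat(feat_range, size(X_poly, 1), 1);
% Add the x0 = 1 column to get the design matrix for linear regression
X = [ones(size(X_scaled, 1), 1), X_scaled];
```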

Normal Equation

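Using the same notation as for gradient descent, the normal equation computes the parameter vector analytically in one step: $\theta = (X^T X)^{-1} X^T y$, where $X$ is the $m\times(n+1)$ design matrix whose i-th row is $(x^{(i)})^T$ and $y$ is the $m\times 1$ vector of targets.

With the normal equation there is no need for feature scaling or mean normalization. The corresponding Matlab syntax is shown in the figure.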

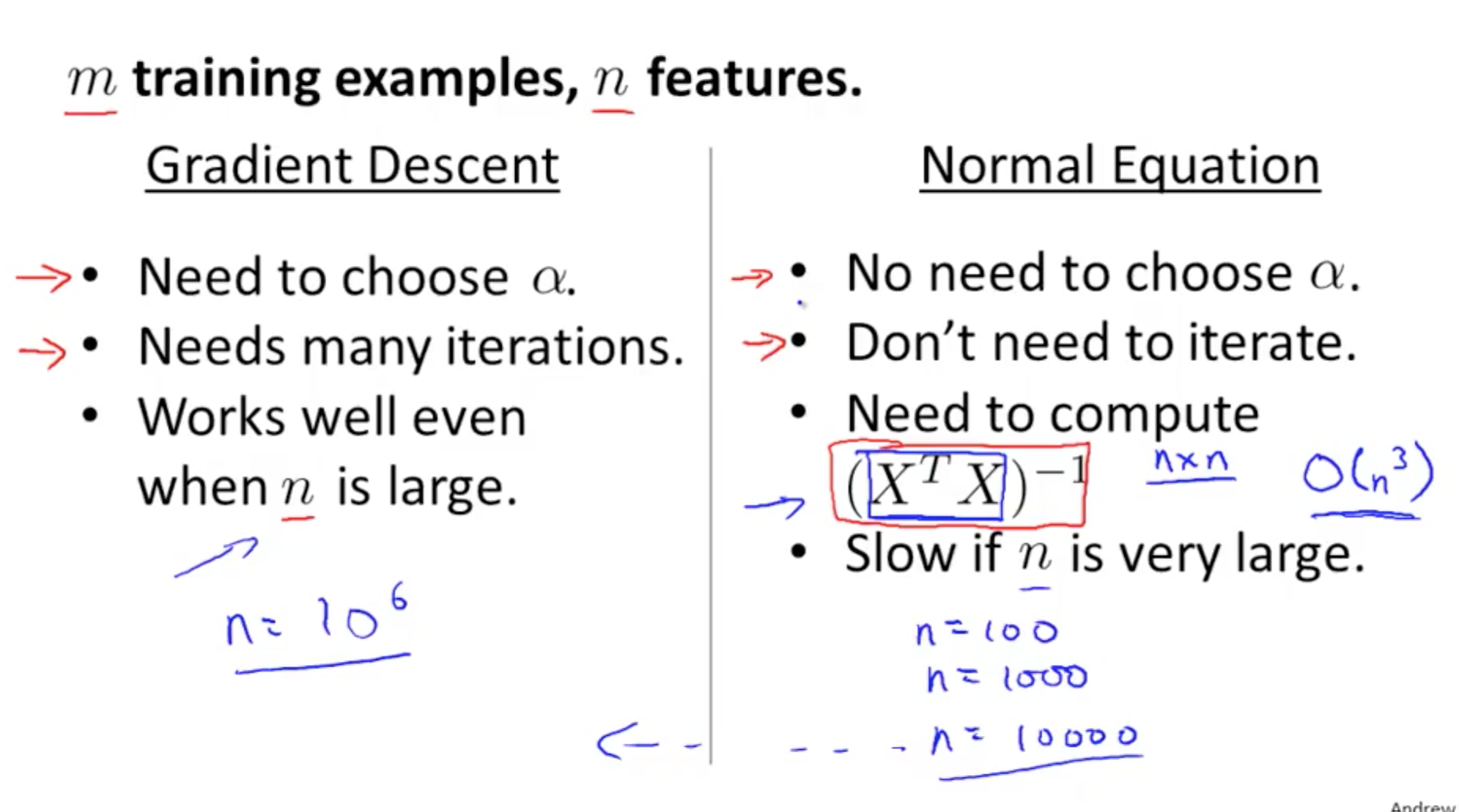
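The normal equation on made-up data generated from $y = 1 + 2x$, as a minimal Octave/MATLAB sketch. It uses `pinv` rather than `inv`, so it still returns an answer when $X^T X$ is singular (for example, with redundant features).

```matlab
% Hypothetical toy data generated from y = 1 + 2x
X = [ones(4, 1), (1:4)'];          % design matrix with the x0 = 1 column
y = [3; 5; 7; 9];
% theta = (X'X)^(-1) X'y: no learning rate, no iterations, no feature scaling
theta = pinv(X' * X) * X' * y;
disp(theta)
```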

Comparing gradient descent and the normal equation

The normal equation does not require choosing a learning rate or iterating, but it has to compute $(X^T X)^{-1}$, which becomes slow when n is large. Gradient descent still works well when the number of features is very large, e.g. $n = 10^6$.
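A rough cost comparison that shows why large $n$ favours gradient descent, assuming dense matrices and textbook matrix algorithms:

```latex
\begin{aligned}
\text{gradient descent (one iteration):}\quad & \theta := \theta - \tfrac{\alpha}{m} X^T (X\theta - y) && O(mn) \\
\text{normal equation:}\quad & \theta = (X^T X)^{-1} X^T y && O(mn^2) + O(n^3)
\end{aligned}
```

With $n = 10^6$, the $n^3$ term alone is on the order of $10^{18}$ operations.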

Matlab Syntax

Input/Output/Change Directory

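>>cd '/Users/lyking/Desktop' %change the current folder

>>ls %list the files in the current folder

>>who %list the variables in the workspace

>>whos %list the variables with their sizes and types

>>clear %delete all variables from the workspace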

>>save hello.mat variable %binary MAT-file (Matlab's default format)

>>save hello.txt variable -ascii %human-readable text format
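A minimal save-then-load round trip for both formats, assuming the current user can write to `tempdir`; the variable `v` and the file names are only examples. The `-mat` flag is given explicitly because Octave's default `save` format is text rather than a MAT-file.

```matlab
v = [1 2 3];
txt_file = fullfile(tempdir, 'hello.txt');
mat_file = fullfile(tempdir, 'hello.mat');
save(txt_file, 'v', '-ascii');   % human-readable text: only the numbers are written
w = load(txt_file);              % an ASCII file loads back as a plain numeric array
save(mat_file, 'v', '-mat');     % binary MAT-file: the variable name is stored too
S = load(mat_file);              % with an output argument, a MAT-file loads into a struct
```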

>>load hello.txt %load hello.dat and the function form load('hello.dat') also work

Build Up Matrix

>>A=[1 2;3 4;5 6]

>>A(3,2)

ans=

6

>>A(:,2)

ans=

2

4

6

>>A([1 3],:)%take the rows listed in the index vector [1 3]

ans=

1 2

5 6

>>A(:,2)=[10;11;12]%replace the second column

A=

1 10

3 11

5 12

>>A=[A,[101;102;103]]%append another column

A=

1 10 101

3 11 102

5 12 103

>>A(:)%put all elements into a single column, column by column

ans=

1

3

5

10

11

12

101

102

103

>>A=[1;2]

>>B=[3;4]

>>C=[A B]

C=

1 3

2 4

>>C=[A;B]

C=

1

2

3

4

Matrix Operation

>>A=[1 2;3 4;5 6]

>>B=[11 12;13 14;15 16]

>>C=[1 1;2 2]

>>A*C

ans=

5 5

11 11

17 17

>>A.*B%element-wise product

ans=

11 24

39 56

75 96

>>A.^2

ans=

1 4

9 16

25 36

>>V=[1;2;3]

>>1./V

>>log(V)

>>exp(V)

>>abs(V)

>>-V

>>V+ones(length(V),1)

>>V+1
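A quick check of the element-wise operations above on the same `V`: adding `ones(length(V),1)` and adding the scalar `1` give the same result, because a scalar is applied to every element.

```matlab
V = [1; 2; 3];
recip       = 1 ./ V;                   % element-wise reciprocal
plus_ones   = V + ones(length(V), 1);   % add an explicit column of ones
plus_scalar = V + 1;                    % a scalar is added to every element
round_trip  = log(exp(V));              % log undoes exp element by element
```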

>>A'%transpose

>>max(V)

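>>[val,ind]=max(V)%maximum value and its index

>>A=magic(3)%magic square: every row, column and diagonal has the same sum

>>[r,c]=find(A>=7)%row and column indices of the elements >= 7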

>>a=[1 2 3 4]

>>sum(a)

>>prod(a)%multiply all the elements together

>>floor(a)%round each element down

>>ceil(a)%round each element up

>>rand(3)%3x3 matrix of random numbers uniformly distributed in (0,1)

>>max(A,[],1)%largest element in each column

>>max(A,[],2)%largest element in each row

>>max(A(:))%largest element of the whole matrix

>>sum(A,1)%column sum

>>sum(A,2)%row sum

>>eye(9)%identity matrix

>>flipud(eye(9))%flip up down

>>A.*eye(3)%keep only the diagonal elements (eye must match the size of A)
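The dimension arguments are easy to mix up, so here they are applied to `magic(3)` with the results checked. Because it is a magic square, every row, column and diagonal sums to 15, which also exercises `eye` and `flipud`; `eye(3)` is used so the sizes match `A`. The vector `V` is a made-up example.

```matlab
A = magic(3);                              % [8 1 6; 3 5 7; 4 9 2]
col_max = max(A, [], 1);                   % largest element of each column (row vector)
row_max = max(A, [], 2);                   % largest element of each row (column vector)
all_max = max(A(:));                       % A(:) stacks every element, so this is the global max
col_sum = sum(A, 1);
row_sum = sum(A, 2);
diag_sum = sum(sum(A .* eye(3)));          % main diagonal
anti_sum = sum(sum(A .* flipud(eye(3))));  % anti-diagonal
[r, c] = find(A >= 7);                     % indices are returned in column-major order
V = [4; 9; 2];
[val, ind] = max(V);                       % value 9 found at index 2
```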

>>pinv(a)%pseudo-inverse of a (equal to inv(a) when a is square and invertible)
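A small sketch of what `pinv` returns for a non-square and for a singular matrix; the matrix `M` is a made-up example. For a $1\times 4$ row vector the pseudo-inverse is $4\times 1$, and for a singular matrix it still satisfies $M M^{+} M = M$, which is why `pinv(X'*X)` in the normal equation keeps working when features are redundant.

```matlab
a = [1 2 3 4];
pa = pinv(a);          % 4 x 1 pseudo-inverse of a 1 x 4 row vector
M = [1 2; 2 4];        % singular: the second row is twice the first
pM = pinv(M);          % inv(M) would warn that M is singular; pinv still returns a result
```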
