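0. Prerequisites

Prepare a working k8s cluster with at least one master node and one worker node. The master node must already be initialized, and the worker nodes must already have joined it.

k8s version: 1.18.0

KubeSphere version: v3.1.1

master node: 2 cores, 5 GB RAM, 40 GB disk

node1: 2 cores, 3 GB RAM, 25 GB disk

node2: 2 cores, 3 GB RAM, 25 GB disk

master, node1, and node2 are the hostnames:

master 192.168.177.132
node1 192.168.177.133
node2 192.168.177.134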

1. Configure NFS as the default storage type for the k8s cluster

1. Run on all nodes

# Install on every machine
yum install -y nfs-utils

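2. Run on the master node

# The master node acts as the NFS server
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
mkdir -p /nfs/data

# Enable at boot and start now: the RPC bind service
systemctl enable rpcbind --now

# The NFS service
systemctl enable nfs-server --now

# Apply the export configuration
exportfs -r

# List the current exports
exportfs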
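The `echo ... > /etc/exports` command above overwrites any entries already in that file. On a server that may already export other directories, an append-if-missing helper is one alternative. This is only a sketch: `ensure_export` is a hypothetical function name, not part of nfs-utils.

```shell
#!/bin/sh
# Append an NFS export entry to an exports file only if the exact line is
# not already present. ensure_export is a hypothetical helper name.
ensure_export() {
    exports_file=$1   # e.g. /etc/exports
    entry=$2          # e.g. "/nfs/data/ *(insecure,rw,sync,no_root_squash)"
    touch "$exports_file"
    # -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -Fxq -- "$entry" "$exports_file"; then
        printf '%s\n' "$entry" >> "$exports_file"
    fi
}

# Usage on the NFS server (as root), followed by a reload of the export table:
#   ensure_export /etc/exports "/nfs/data/ *(insecure,rw,sync,no_root_squash)"
#   exportfs -r
```

Running the helper twice leaves a single entry, so it is safe to rerun.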

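3. Run on the worker nodes

Replace 192.168.177.132 with the address of your master node.

# List the directories exported by the remote machine (use the master's IP address)
showmount -e 192.168.177.132

# Create the local path on which the NFS server's shared directory will be mounted
mkdir -p /nfs/data

# Mount the remote directory
mount -t nfs 192.168.177.132:/nfs/data /nfs/data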
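A mount made with `mount` does not survive a reboot. If the worker nodes should remount the share automatically, one common approach is an `/etc/fstab` entry. The sketch below only prints such a line; `nfs_fstab_entry` is a hypothetical helper, and the `defaults,_netdev` options are an assumption that may need adjusting for your environment.

```shell
#!/bin/sh
# Print an /etc/fstab line for an NFS mount.
# nfs_fstab_entry is a hypothetical helper; the mount options are an assumption.
nfs_fstab_entry() {
    server=$1        # NFS server address, e.g. 192.168.177.132
    remote_path=$2   # exported directory on the server, e.g. /nfs/data
    mount_point=$3   # local mount point, e.g. /nfs/data
    # _netdev asks the system to wait for the network before mounting.
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$remote_path" "$mount_point"
}

# Usage on a worker node (as root):
#   nfs_fstab_entry 192.168.177.132 /nfs/data /nfs/data >> /etc/fstab
#   mount -a
```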

4. Test

# Write a test file on any machine
echo "hello nfs" > /nfs/data/test.txt

# Read it back on the other machines
cat /nfs/data/test.txt

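2. Configure a default storage class with dynamic provisioning

1. Create a file named storageclass.yaml

Its content is shown below and needs one change:

Replace 192.168.177.132 with the address of your master node and leave everything else as it is.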

## Creates a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive a PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.177.132  ## your own NFS server address
            - name: NFS_PATH
              value: /nfs/data  ## directory shared by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.177.132  ## your own NFS server address
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
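The sample address appears twice in the manifest (the `NFS_SERVER` variable and the `nfs.server` volume field), so it is easy to change one and miss the other. As a sketch, the substitution can be done with `sed`; `render_manifest` is a hypothetical helper name and `10.0.0.5` is a made-up address used only for illustration.

```shell
#!/bin/sh
# Print a copy of a manifest with the sample NFS server address replaced.
# render_manifest is a hypothetical helper name.
render_manifest() {
    src=$1       # path to the manifest, e.g. storageclass.yaml
    new_ip=$2    # address of your own NFS server
    # The dots are escaped so that they match literally.
    sed "s/192\.168\.177\.132/${new_ip}/g" "$src"
}

# Usage (10.0.0.5 is a placeholder address):
#   render_manifest storageclass.yaml 10.0.0.5 > storageclass.local.yaml
#   grep -n '10.0.0.5' storageclass.local.yaml   # expect two matching lines
```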

2. Apply storageclass.yaml

kubectl apply -f storageclass.yaml

3. View the storage class

kubectl get sc

4. Check that the Pod has started normally

kubectl get pods -A

[root@master k8s]# kubectl get pods -A
NAMESPACE   NAME                                      READY   STATUS    RESTARTS   AGE
default     nfs-client-provisioner-6679cc7756-klqzl   1/1     Running   0          65s

5. Create a PVC to test dynamic provisioning

Create a file named pvc.yaml with the following content (no changes needed):

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs-storage

6. Apply pvc.yaml

kubectl apply -f pvc.yaml

7. View the PVC and the PV

kubectl get pvc
kubectl get pv

[root@master k8s]# kubectl get pvc
NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
test-pvc   Bound    pvc-ec053c94-f8b1-4e99-9a3d-fc1d3d7cc932   200Mi      RWX            nfs-storage    8s

[root@master k8s]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM              STORAGECLASS   REASON   AGE
pvc-ec053c94-f8b1-4e99-9a3d-fc1d3d7cc932   200Mi      RWX            Delete           Bound    default/test-pvc   nfs-storage             13s
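The PVC may stay in `Pending` for a few seconds before the provisioner binds it. Instead of rerunning `kubectl get pvc` by hand, a small polling loop can wait for the `Bound` phase. This is a sketch: `wait_pvc_bound` is a hypothetical helper, and the default of 30 attempts at 2-second intervals is an arbitrary assumption.

```shell
#!/bin/sh
# Poll a PVC until its phase is Bound, or give up after a number of attempts.
# wait_pvc_bound is a hypothetical helper; the defaults are assumptions.
wait_pvc_bound() {
    pvc=$1
    attempts=${2:-30}   # how many times to check
    delay=${3:-2}       # seconds to sleep between checks
    i=0
    phase=""
    while [ "$i" -lt "$attempts" ]; do
        phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null)
        if [ "$phase" = "Bound" ]; then
            echo "$pvc is Bound"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "$pvc is not Bound (last phase: ${phase:-unknown})" >&2
    return 1
}

# Usage:
#   wait_pvc_bound test-pvc && kubectl get pv
```

If the claim never binds, `kubectl describe pvc test-pvc` and the provisioner Pod's logs are the usual places to look.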

3. Install the cluster metrics component metrics-server

Create a file named metrics-server.yaml with the following content (no changes needed):

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

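Metrics-Server is the cluster metrics component. It works with the API Server to collect resource metrics from the Kubernetes cluster. With it in place, you can view the CPU and memory usage of Pods, Nodes, and other resources.

KubeSphere can act as a dashboard (visual console) for Kubernetes. To display Kubernetes metrics, KubeSphere needs a component that supplies the data, and Metrics-Server provides this collection function.

1. Modify kube-apiserver.yaml

Edit the kube-apiserver.yaml of every API Server to enable Aggregator Routing.

Without Aggregator Routing, the following commands cannot be used:

kubectl top nodes
kubectl top pods
kubectl top pods -A

Start editing:

vi /etc/kubernetes/manifests/kube-apiserver.yaml

Add only this one line:

- --enable-aggregator-routing=true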

spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.177.131
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --enable-aggregator-routing=true  # added: enables Aggregator Routing
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
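When there are several API Servers, the same edit has to be repeated on each of them. The sketch below adds the flag only if it is missing, placing it directly after the `- kube-apiserver` entry (the order of the flags does not matter). `enable_aggregator_routing` is a hypothetical helper name; consider keeping a backup copy of the manifest outside the manifests directory before running it.

```shell
#!/bin/sh
# Add --enable-aggregator-routing=true to a kube-apiserver manifest if the
# flag is not already set. enable_aggregator_routing is a hypothetical helper.
enable_aggregator_routing() {
    manifest=$1
    if grep -q -- '--enable-aggregator-routing=' "$manifest"; then
        return 0   # flag already present; leave the file untouched
    fi
    tmp=$(mktemp)
    # Copy every line; right after the "- kube-apiserver" entry, emit the new
    # flag with the same leading indentation.
    awk '
        { print }
        /^[ ]*- kube-apiserver[ ]*$/ {
            indent = $0
            sub(/- kube-apiserver[ ]*$/, "", indent)
            print indent "- --enable-aggregator-routing=true"
        }
    ' "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
    rm -f "$tmp"
}

# Usage on each master node (as root):
#   enable_aggregator_routing /etc/kubernetes/manifests/kube-apiserver.yaml
```

Writing the result back with `cat` rather than `mv` keeps the original file's ownership and permissions.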

2. Restart kubelet

systemctl daemon-reload
systemctl restart kubelet

3. Pull the image

# Pull the same image that metrics-server.yaml references
docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3

4. Apply metrics-server.yaml

kubectl apply -f metrics-server.yaml

5. Check the status of the Metrics Server Pod

kubectl get pods -n kube-system

metrics-server-7457bfc9f4-mj6zh 1/1 Running 0 109s

6. View the metrics

kubectl top nodes
kubectl top pods
kubectl top pods -A
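If `kubectl top` returns an error right after the deployment, the metrics API may simply not be registered as available yet. A quick check is to read the `Available` condition of the `v1beta1.metrics.k8s.io` APIService defined in metrics-server.yaml. The helper below is a sketch; `metrics_api_available` is a hypothetical name.

```shell
#!/bin/sh
# Succeed only when the metrics APIService reports Available=True.
# metrics_api_available is a hypothetical helper name.
metrics_api_available() {
    status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
        -o jsonpath='{.status.conditions[?(@.type=="Available")].status}' 2>/dev/null)
    [ "$status" = "True" ]
}

# Usage:
#   if metrics_api_available; then
#       kubectl top nodes
#   else
#       kubectl describe apiservice v1beta1.metrics.k8s.io
#   fi
```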

4.安装KubeSphere

1.下载kubesphere-installer.yaml和cluster-configuration.yaml

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

2.安装

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

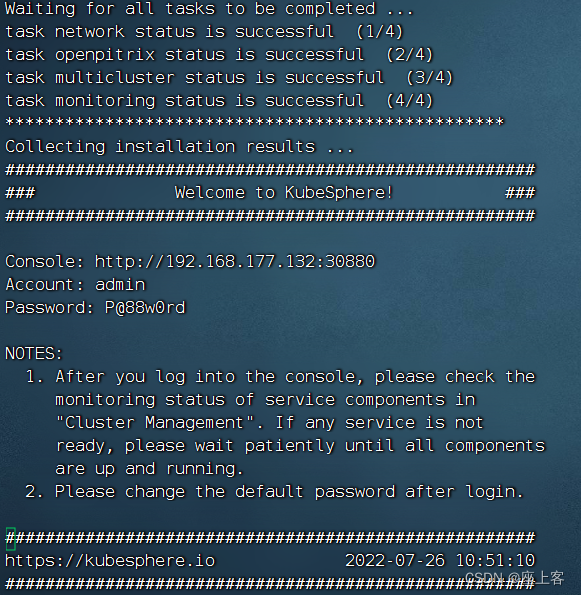

3.检查安装日志

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

当出现如下界面时表示成功

查看所有 Pod 是否在 KubeSphere 的相关命名空间中正常运行

[root@master ~]# kubectl get pod --all-namespaces
NAMESPACE                      NAME                                               READY   STATUS    RESTARTS   AGE
default                        nfs-client-provisioner-6679cc7756-klqzl            1/1     Running   0          29m
kube-system                    coredns-7ff77c879f-t5mqq                           1/1     Running   0          66m
kube-system                    coredns-7ff77c879f-vnpvw                           1/1     Running   0          66m
kube-system                    etcd-master                                        1/1     Running   0          66m
kube-system                    kube-apiserver-master                              1/1     Running   0          16m
kube-system                    kube-controller-manager-master                     1/1     Running   0          66m
kube-system                    kube-flannel-ds-amd64-58gkg                        1/1     Running   0          31m
kube-system                    kube-flannel-ds-amd64-7cxlw                        1/1     Running   0          31m
kube-system                    kube-flannel-ds-amd64-hklcq                        1/1     Running   0          31m
kube-system                    kube-proxy-8gsjz                                   1/1     Running   0          66m
kube-system                    kube-proxy-bzfw2                                   1/1     Running   0          64m
kube-system                    kube-proxy-r645l                                   1/1     Running   0          63m
kube-system                    kube-scheduler-master                              1/1     Running   0          66m
kube-system                    metrics-server-7457bfc9f4-h54t5                    1/1     Running   0          13m
kube-system                    snapshot-controller-0                              1/1     Running   0          7m30s
kubesphere-controls-system     default-http-backend-857d7b6856-tjjrw              1/1     Running   0          7m13s
kubesphere-controls-system     kubectl-admin-db9fc54f5-cbwqr                      1/1     Running   0          3m10s
kubesphere-monitoring-system   alertmanager-main-0                                2/2     Running   0          5m4s
kubesphere-monitoring-system   alertmanager-main-1                                2/2     Running   0          5m4s
kubesphere-monitoring-system   alertmanager-main-2                                2/2     Running   0          5m4s
kubesphere-monitoring-system   kube-state-metrics-d6645c6b-qltbz                  3/3     Running   0          5m20s
kubesphere-monitoring-system   node-exporter-2x4k8                                2/2     Running   0          5m22s
kubesphere-monitoring-system   node-exporter-5zpqz                                2/2     Running   0          5m22s
kubesphere-monitoring-system   node-exporter-qx8l7                                2/2     Running   0          5m22s
kubesphere-monitoring-system   notification-manager-deployment-674dddcbd9-hpr4j   1/1     Running   0          37s
kubesphere-monitoring-system   notification-manager-deployment-674dddcbd9-trzs8   1/1     Running   0          37s
kubesphere-monitoring-system   notification-manager-operator-7877c6574f-kff2v     2/2     Running   0          4m50s
kubesphere-monitoring-system   prometheus-k8s-0                                   3/3     Running   1          5m3s
kubesphere-monitoring-system   prometheus-k8s-1                                   3/3     Running   1          5m3s
kubesphere-monitoring-system   prometheus-operator-7d7684fc68-tpdhj               2/2     Running   0          5m25s
kubesphere-system              ks-apiserver-75ffb669c4-grm6t                      1/1     Running   0          3m55s
kubesphere-system              ks-console-74cf8b9487-7gbxr                        1/1     Running   0          7m3s
kubesphere-system              ks-controller-manager-6dd469d8dd-ttr9x             1/1     Running   0          3m55s
kubesphere-system              ks-installer-7bd6b699df-9sg72                      1/1     Running   0          9m11s
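With this many Pods, scanning the list by eye is error-prone. As a sketch, the output of `kubectl get pods -A` can be piped through a small `awk` filter that prints only the Pods whose STATUS column is neither `Running` nor `Completed`. `pods_not_running` is a hypothetical helper; it looks only at the STATUS column and does not compare the READY counts.

```shell
#!/bin/sh
# Read `kubectl get pods -A` output on stdin and print "namespace/name status"
# for every Pod whose STATUS column is neither Running nor Completed.
# pods_not_running is a hypothetical helper name.
pods_not_running() {
    # NR > 1 skips the header; with -A the columns are
    # NAMESPACE NAME READY STATUS RESTARTS AGE, so STATUS is field 4.
    awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Usage:
#   kubectl get pods -A | pods_not_running
```

No output means every Pod reports `Running` or `Completed`.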

Check the console port:

[root@master ~]# kubectl get svc/ks-console -n kubesphere-system
NAME         TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
ks-console   NodePort   10.102.163.40   <none>        80:30880/TCP   5m39s

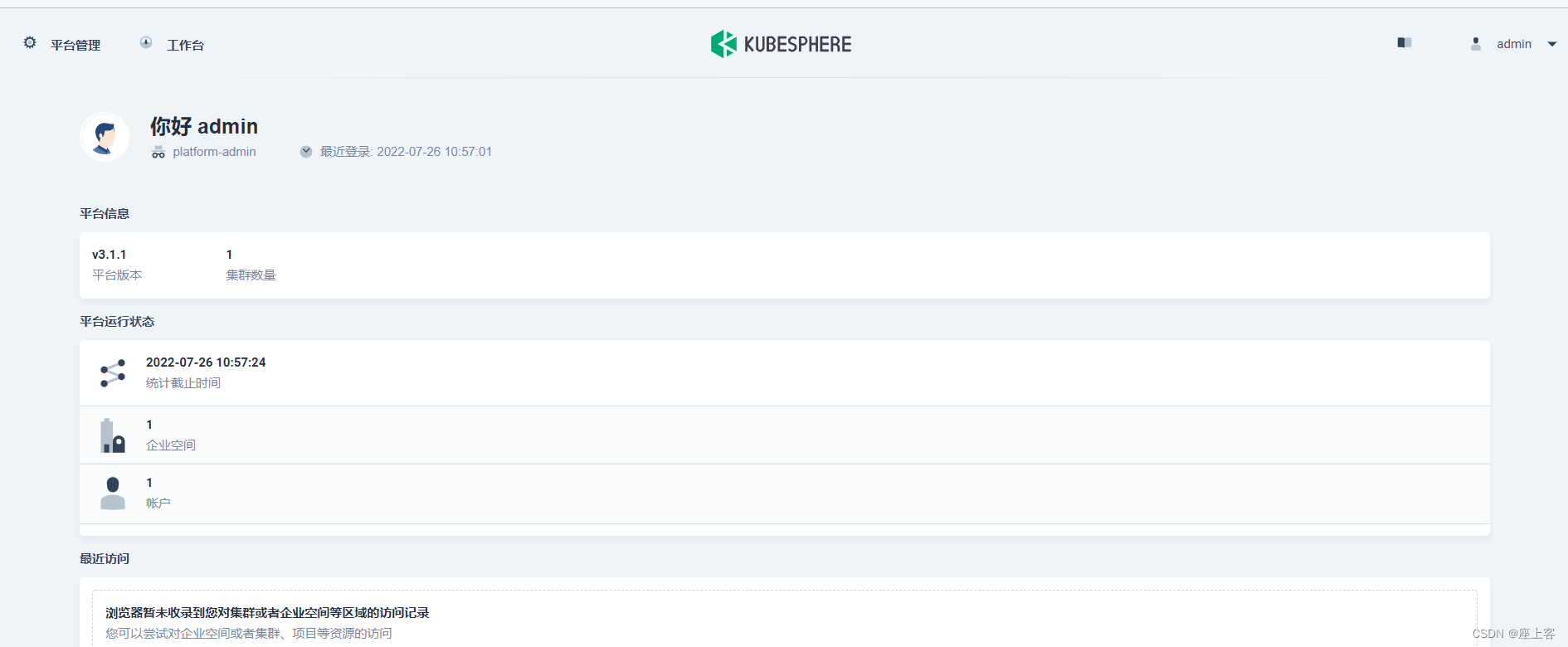

Open port 30880 on the IP of any machine in the cluster in a browser:

192.168.177.132:30880
192.168.177.133:30880
192.168.177.134:30880
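The NodePort can also be read from the Service instead of being copied from the table, which helps if the port has been changed from 30880. The sketch below builds the console URL for a given node IP; `console_url` is a hypothetical helper name, and the `http` scheme is an assumption based on the Service exposing port 80.

```shell
#!/bin/sh
# Print the KubeSphere console URL for a node, using the NodePort of the
# ks-console Service. console_url is a hypothetical helper name.
console_url() {
    node_ip=$1
    port=$(kubectl get svc ks-console -n kubesphere-system \
        -o jsonpath='{.spec.ports[0].nodePort}')
    printf 'http://%s:%s\n' "$node_ip" "$port"
}

# Usage:
#   console_url 192.168.177.132
```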

Initial account and password: admin/P@88w0rd


Reference article:

https://blog.csdn.net/m0_57776598/article/details/124025230
