Integrating ELK (Elasticsearch + Kibana + Logstash) with Spring Cloud

Setting up on Windows

Downloads

Elasticsearch: https://www.elastic.co/cn/downloads/elasticsearch

Kibana: https://www.elastic.co/cn/downloads/kibana

Logstash: https://www.elastic.co/cn/downloads/beats/logstash

Installation guides

Elasticsearch: https://blog.csdn.net/m0_46267097/article/details/105556002

Kibana: https://blog.csdn.net/m0_46267097/article/details/105554128

Logstash: https://blog.csdn.net/m0_46267097/article/details/105651217

Configuration files

Elasticsearch configuration

Edit the configuration file \elasticsearch-7.6.2\config\elasticsearch.yml:

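    # Cluster name, node name and log directory
    cluster.name: testNode1
    node.name: 192.168.2.100
    path.logs: D:\efk\logs\elasticsearch
    # Bind address; 9200 serves the REST API, 9300 carries node-to-node traffic
    network.host: 192.168.2.100
    http.port: 9200
    transport.port: 9300
    # Nodes contacted when discovering the cluster
    discovery.seed_hosts: ["192.168.2.100","192.168.111.129"]
    # Only used when a brand-new cluster starts for the first time; lists node.name values
    cluster.initial_master_nodes: ["192.168.2.100"]
    # Allow cross-origin requests to the REST API (e.g. from browser-based tools)
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    # This node is master-eligible and stores data
    node.master: true
    node.data: true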
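Once both nodes are running, the cluster health API shows whether they actually formed one cluster: `number_of_nodes` should be 2, and `status` is green when every shard is allocated and yellow when some replicas are not. Below is a minimal Java 11 sketch that calls `GET /_cluster/health`, assuming the node address from `network.host` above; the class name is hypothetical, and the regex parsing only stands in for a proper JSON library.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch: ask an Elasticsearch node for GET /_cluster/health (Java 11+, no JSON library).
final class ClusterHealthCheck {

    private static final Pattern STATUS =
            Pattern.compile("\"status\"\\s*:\\s*\"(green|yellow|red)\"");
    private static final Pattern NODES =
            Pattern.compile("\"number_of_nodes\"\\s*:\\s*(\\d+)");

    // Returns green, yellow or red, or "unknown" if the field is missing.
    static String parseStatus(String json) {
        Matcher m = STATUS.matcher(json);
        return m.find() ? m.group(1) : "unknown";
    }

    // Returns how many nodes have joined the cluster, or -1 if the field is missing.
    static int parseNodeCount(String json) {
        Matcher m = NODES.matcher(json);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    // Performs the HTTP request and returns the raw JSON body.
    static String fetchHealth(String baseUrl) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/_cluster/health"))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        // Defaults to the network.host/http.port configured above.
        String node = args.length > 0 ? args[0] : "http://192.168.2.100:9200";
        String body = fetchHealth(node);
        System.out.println("status=" + parseStatus(body) + ", nodes=" + parseNodeCount(body));
    }
}
```

If `nodes` stays at 1, the second machine (192.168.111.129) has not joined, which usually points at `discovery.seed_hosts` or the transport port 9300 being blocked.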

Kibana configuration

Edit the configuration file \kibana-7.6.2\config\kibana.yml:

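    # Port and bind address of the Kibana web UI
    server.port: 5601
    server.host: "192.168.2.100"
    # Display name of this Kibana instance
    server.name: "192.168.2.100"
    # Elasticsearch nodes that Kibana queries
    elasticsearch.hosts: ["http://192.168.2.100:9200","http://192.168.111.129:9200"]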
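Kibana talks to Elasticsearch through the URLs in `elasticsearch.hosts`, so it is worth confirming from the Kibana machine that each of them accepts connections (for example, that a Windows firewall is not blocking 9200 on 192.168.111.129). A small Java sketch of such a check; the class name is hypothetical, and it only verifies that the TCP port is open, not that Elasticsearch responds correctly.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;
import java.util.List;

// Sketch: TCP-connect to each entry of Kibana's elasticsearch.hosts.
final class HostsCheck {

    // Port taken from the URL; this sketch assumes 9200 when the URL has none.
    static int portOf(String url) {
        int port = URI.create(url).getPort();
        return port == -1 ? 9200 : port;
    }

    // True if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out or unknown host
        }
    }

    public static void main(String[] args) {
        // Same list as elasticsearch.hosts in kibana.yml above.
        List<String> hosts = List.of("http://192.168.2.100:9200", "http://192.168.111.129:9200");
        for (String url : hosts) {
            boolean ok = isReachable(URI.create(url).getHost(), portOf(url), 2000);
            System.out.println(url + (ok ? " reachable" : " NOT reachable"));
        }
    }
}
```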

Logstash configuration

Create the configuration file \logstash-7.6.2\bin\logstash.conf:

    # Pipeline: TCP (JSON lines sent by logback) -> Logstash -> Elasticsearch
    input {
      tcp {
        # Port the Spring Boot LogstashTcpSocketAppender connects to
        port => 5045
        # One JSON object per line
        codec => json_lines
      }
    }

    output {
      elasticsearch {
        hosts => ["http://192.168.2.100:9200","http://192.168.111.129:9200"]
        # One index per day, e.g. logstash-2020.04.21
        index => "logstash-%{+YYYY.MM.dd}"
        #user => "elastic"
        #password => "changeme"
      }
    }
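To check this pipeline before involving Spring Boot, you can push a single event by hand. With the `json_lines` codec, each newline-terminated line on the TCP connection is decoded as one JSON event. A Java 11 sketch, assuming Logstash was started on this file (for example `logstash.bat -f logstash.conf` from the `bin` directory) and listens on localhost:5045; the class name and field values are hypothetical, and the hand-rolled JSON writer (string values only) stands in for a library such as Jackson.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: send one newline-terminated JSON object to the Logstash tcp input.
final class JsonLineSender {

    // Escapes the characters JSON does not allow raw inside a string literal.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // other control chars
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Builds {"k":"v",...} plus a trailing newline, which json_lines uses as the event boundary.
    static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (sb.length() > 1) {
                sb.append(',');
            }
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append("}\n").toString();
    }

    // Opens a TCP connection, writes the line as UTF-8 and closes the socket.
    static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("logLevel", "INFO");
        event.put("serviceName", "manual-test"); // hypothetical value
        event.put("rest", "hello from JsonLineSender");
        send("localhost", 5045, toJsonLine(event));
    }
}
```

If the pipeline works, the event should appear in that day's `logstash-YYYY.MM.dd` index.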

Spring Boot configuration

Add the following dependency to pom.xml:

    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>6.3</version>
    </dependency>

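Open the logback.xml configuration file under the resources directory and add the following inside its configuration element. The snippet uses a `springAppName` property and a `console` appender without defining them: `console` must already be declared in the file, and `springAppName` is typically declared with `<springProperty scope="context" name="springAppName" source="spring.application.name"/>`, which Spring Boot only honors when the file is named logback-spring.xml. If the property is missing, `${springAppName:-}` simply resolves to an empty string.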

    <appender name="logStash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Logstash tcp input; localhost assumes Logstash runs on the same machine -->
        <destination>localhost:5045</destination>
        <encoder charset="UTF-8"
                 class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <!-- Event time, written as @timestamp -->
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <!-- Custom JSON fields built from logback conversion words -->
                <pattern>
                    <pattern>
                        {
                        "logLevel": "%level",
                        "serviceName": "${springAppName:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger{40}",
                        "rest": "%message"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>

    <root level="info">
        <appender-ref ref="logStash" />
        <appender-ref ref="console" />
    </root>
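If nothing reaches Elasticsearch, it helps to see exactly what the appender sends. One way, sketched below under the assumption that Logstash is stopped so port 5045 is free, is to run a throwaway listener in its place and print every line it receives; each line should be one JSON object with the fields from the pattern above. The class name is hypothetical, and this is only a debugging aid, not a replacement for Logstash.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.function.Consumer;

// Debug stand-in for Logstash: accepts connections and prints each received JSON line.
final class EventLineDump {

    // Passes each non-blank line to the sink as soon as it is read (lazy stream).
    static void dump(InputStream in, Consumer<String> sink) {
        BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        reader.lines().filter(line -> !line.isBlank()).forEach(sink);
    }

    public static void main(String[] args) throws IOException {
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 5045;
        try (ServerSocket server = new ServerSocket(port)) {
            System.out.println("Listening on port " + port);
            while (true) {
                // One connection at a time is enough for a single application instance.
                try (Socket client = server.accept()) {
                    dump(client.getInputStream(), System.out::println);
                } catch (UncheckedIOException e) {
                    System.out.println("Connection dropped: " + e.getMessage());
                }
            }
        }
    }
}
```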

After adding it, put a few log statements in the application's startup (main) class and start the project:

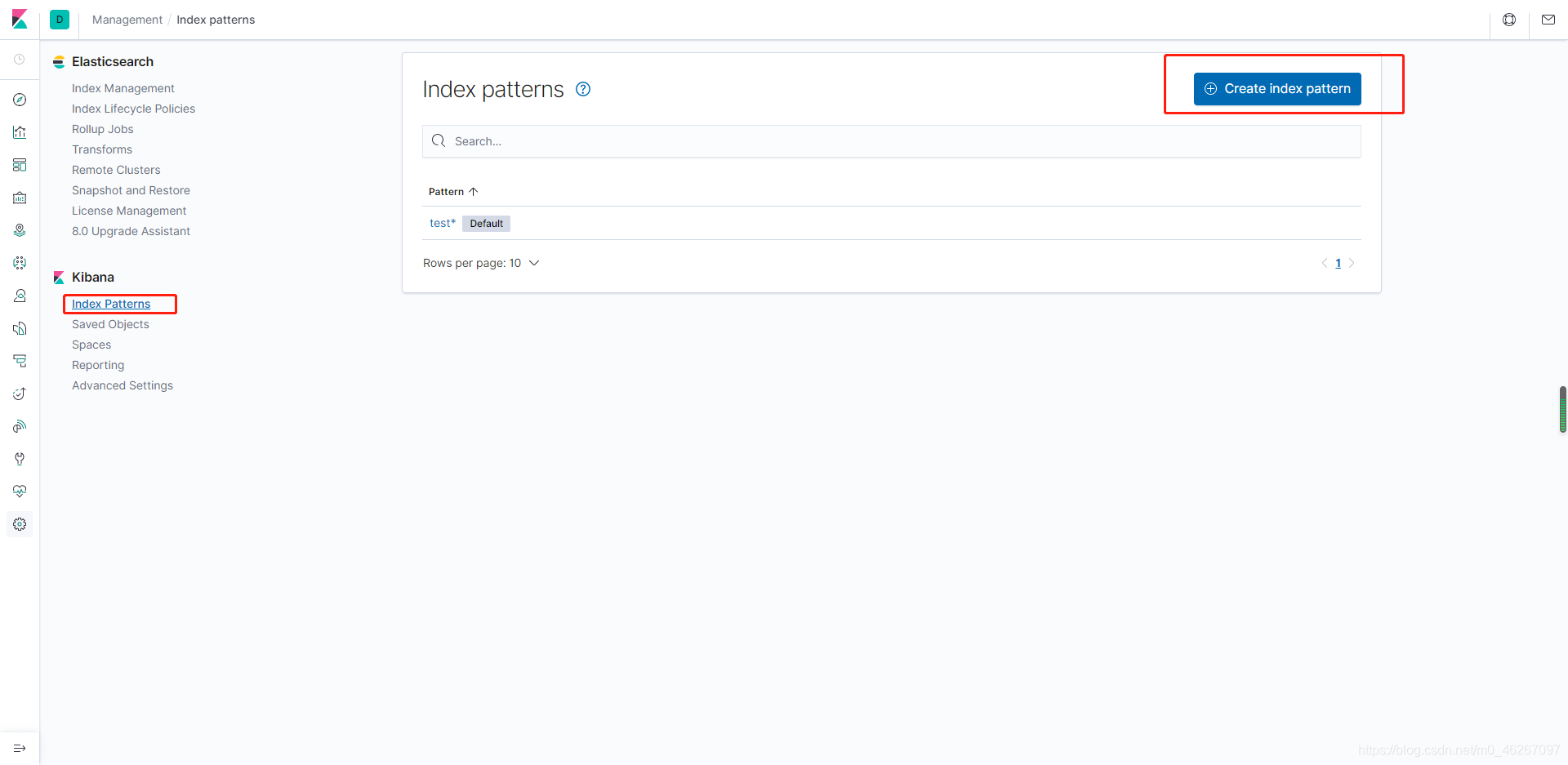

Open Kibana, go to the Management page, click Index Patterns, then click the Create index pattern button.

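Enter the following index pattern in the input box:

    logstash*

Click Next step, choose the Time Filter field name (normally @timestamp for these indices), then click Create index pattern.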

The index pattern is created.
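The `logstash*` pattern works because the Logstash output writes one index per day, named from the event's `@timestamp`, which Logstash formats in UTC. The sketch below reproduces that naming in Java and applies a simple `*` wildcard match, so you can see which index a given log line ends up in. For a machine in UTC+8, for instance, an event logged at 07:00 local time lands in the previous day's index. Class and method names are hypothetical, and the matcher only handles `*`, not every Kibana pattern feature.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.regex.Pattern;

// Sketch: which daily index an event goes to, and whether a wildcard pattern covers it.
final class DailyIndex {

    // Logstash formats %{+YYYY.MM.dd} from @timestamp in UTC. In java.time, 'YYYY' is the
    // week-based year (it differs from the calendar year around New Year), so use 'yyyy'.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    // Index name the output section of logstash.conf would write this event to.
    static String indexFor(Instant timestamp) {
        return "logstash-" + DAY.format(timestamp);
    }

    // '*' matches any run of characters; everything else is matched literally.
    static boolean matches(String pattern, String index) {
        String[] parts = pattern.split("\\*", -1);
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                regex.append(".*");
            }
            regex.append(Pattern.quote(parts[i]));
        }
        return Pattern.matches(regex.toString(), index);
    }

    public static void main(String[] args) {
        String today = indexFor(Instant.now());
        System.out.println(today + " matched by logstash*: " + matches("logstash*", today));
    }
}
```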

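Now you can open Discover and browse all the logs. The entries are long, so the layout gets stretched, but that is only cosmetic: the ELK deployment is complete.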
