Reference: "Deploying k8s with juju + MAAS on Ubuntu 20.04 (8): Basic operations (1): Accessing the Kubernetes dashboard"

After deploying Charmed Kubernetes on OpenStack, I followed the method in that article to sync the k8s configuration:

juju scp kubernetes-master/1:config ~/.kube/config

The output was something like:

can't connect 192.168.0.43
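Because juju scp goes over SSH, one way to tell whether this is a plain reachability problem rather than a juju problem is to probe port 22 on the unit's address from the same host. The sketch below uses bash's built-in /dev/tcp redirection plus coreutils timeout, so it needs neither nc nor ssh; the function name tcp_reachable is hypothetical.

```bash
#!/usr/bin/env bash
# tcp_reachable HOST [PORT] [SECONDS]
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens within
# SECONDS (defaults: port 22, 3 s). It relies on bash's /dev/tcp redirection,
# so it must run under bash, not sh/dash.
tcp_reachable() {
  local host="$1" port="${2:-22}" secs="${3:-3}"
  # The child bash opens fd 3 to host:port; timeout bounds the wait when
  # packets are silently dropped instead of refused.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

For example, `tcp_reachable 192.168.0.43 && echo reachable || echo "192.168.0.43:22 not reachable"`; in the failing state described here one would expect the second message.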

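First, look at the OpenStack network topology:

Judging from the topology, the routing looks fine.

At first I assumed that the local host 10.0.0.3 already had a static route to the 192.168.0.0/24 subnet, because it could ping the gateway 192.168.0.1, so I didn't bother to display the local routing table.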

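Ping test from a VM inside OpenStack

Log in to the test VM that was created first in OpenStack.

From there, the internal addresses of all VMs are reachable, so connectivity within the internal network's VLAN is fine.

Check the security groups

The security groups did not allow ICMP or SSH.

So I added the default security group, which I had configured earlier during testing, to the VMs.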
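Doing this server by server is tedious with this many VMs. A minimal sketch of doing it from the CLI for every server in the project follows; it assumes, as in this setup, that the existing group named default already allows ICMP and SSH. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# add_group_to_all_servers [GROUP]
# Hypothetical helper: attach an existing security group (default: "default")
# to every server returned by `openstack server list`.
add_group_to_all_servers() {
  local group="${1:-default}" name
  openstack server list -f value -c Name |
    while read -r name; do
      # </dev/null stops the inner openstack call from reading the
      # remaining server names off the loop's stdin.
      openstack server add security group "$name" "$group" </dev/null
      echo "added $group to $name"
    done
}
```

The sketch does not check whether a server already has the group. If you would rather open the juju-created groups themselves, `openstack security group rule create --protocol icmp <group>` and `openstack security group rule create --protocol tcp --dst-port 22 <group>` add the two missing rules.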

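Add floating IPs

I also found that the VMs created by juju had no floating IPs.

So I added a floating IP to every VM. I started in the web dashboard, but that was far too slow, so I switched to the command line: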

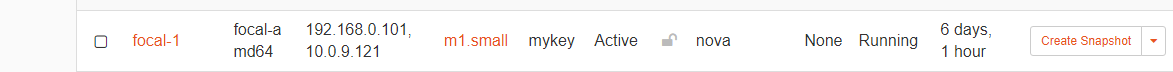

List the instances:

openstack server list

+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+
| ID                                   | Name                     | Status | Networks                          | Image       | Flavor   |
+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+
| 069f8ee3-2eb1-4e10-babc-0de25faedec0 | juju-d3562c-k8s-13       | ACTIVE | int_net=10.0.9.97, 192.168.0.145  | focal-amd64 | r16G     |
| bd591201-506e-4078-bd37-cc728538e199 | juju-d3562c-k8s-12       | ACTIVE | int_net=10.0.9.55, 192.168.0.85   | focal-amd64 | r16G     |
| 52233035-1c94-4c06-89f0-f84cf5451319 | juju-d3562c-k8s-11       | ACTIVE | int_net=10.0.9.65, 192.168.0.40   | focal-amd64 | v2M4R16  |
| c2fa2e24-b21a-40f6-8c80-f01dca4043fd | juju-d3562c-k8s-7        | ACTIVE | int_net=10.0.9.100, 192.168.0.166 | focal-amd64 | v4m4r16  |
| dabf4ed4-6a1e-4976-9559-bbad8d110cdc | juju-d3562c-k8s-10       | ACTIVE | int_net=10.0.9.64, 192.168.0.161  | focal-amd64 | r16G     |
| 173b815a-6682-4dd5-83fb-77d62d7a5f5f | juju-d3562c-k8s-5        | ACTIVE | int_net=192.168.0.47              | focal-amd64 | v2M4R16  |
| f2c57ad7-6d46-4943-832f-9da343a7c211 | juju-d3562c-k8s-6        | ACTIVE | int_net=192.168.0.43              | focal-amd64 | v2M4R16  |
| 13ac8f35-9a8d-48e7-83b5-0aea3971ba4a | juju-d3562c-k8s-8        | ACTIVE | int_net=192.168.0.49              | focal-amd64 | v4m4r16  |
| f7840404-2b1e-47cc-84bb-8c3181a8253d | juju-d3562c-k8s-4        | ACTIVE | int_net=192.168.0.157             | focal-amd64 | m4r16    |
| baca1e8b-fdd4-4818-94b1-3c3ba9dea7d3 | juju-d3562c-k8s-9        | ACTIVE | int_net=10.0.9.110, 192.168.0.136 | focal-amd64 | v4m4r16  |
| fe9c5a0c-e36d-4cd7-a829-2e07967ec55e | juju-d3562c-k8s-3        | ACTIVE | int_net=192.168.0.110             | focal-amd64 | r16G     |
| 306fc000-cfcd-4440-b475-e51bb017e0a2 | juju-d3562c-k8s-2        | ACTIVE | int_net=192.168.0.175             | focal-amd64 | r16G     |
| f64b471d-76f7-4bd5-9eaf-417b27e19401 | juju-d3562c-k8s-1        | ACTIVE | int_net=192.168.0.15              | focal-amd64 | r16G     |
| 93ae9def-432a-4f26-89ad-dba737cfb567 | juju-d3562c-k8s-0        | ACTIVE | int_net=10.0.9.102, 192.168.0.107 | focal-amd64 | r16G     |
| 47b81b26-b5d0-4759-b4da-06aaaddf76ba | juju-5a7fd0-controller-0 | ACTIVE | int_net=10.0.9.175, 192.168.0.52  | focal-amd64 | v3584M   |
| 2c803a1a-19a4-4e58-b90f-3138629b39ba | focal-1                  | ACTIVE | int_net=10.0.9.121, 192.168.0.101 | focal-amd64 | m1.small |
+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+

Attach a floating IP to a single instance:

openstack floating ip create -f value -c floating_ip_address ext_net

10.0.9.162

openstack server add floating ip juju-d3562c-k8s-1 10.0.9.162

Alternatively, attach one with these two commands:

FIP=$(openstack floating ip create -f value -c floating_ip_address ext_net)

root@vivodo-3:~# openstack server add floating ip juju-d3562c-k8s-4 $FIP
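To avoid repeating those two commands for each VM, they can be wrapped in a loop over the servers that still have only one address. This is a sketch under two assumptions: the external network is ext_net, as above, and a server whose Networks column contains a single IPv4 address has no floating IP yet. The function name and its optional name filter are hypothetical.

```bash
#!/usr/bin/env bash
# attach_missing_fips [EXT_NET] [NAME_REGEX]
# Hypothetical helper: for each server whose Networks column holds exactly one
# IPv4 address (assumed to mean "no floating IP yet"), allocate a floating IP
# on EXT_NET and attach it. NAME_REGEX optionally limits which servers are touched.
attach_missing_fips() {
  local ext_net="${1:-ext_net}" pattern="${2:-.}" name nets count fip
  openstack server list -f value -c Name -c Networks |
    while read -r name nets; do
      [[ $name =~ $pattern ]] || continue
      # Count dotted-quad addresses in the Networks field.
      count=$(grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' <<<"$nets" | wc -l)
      if [ "$count" -eq 1 ]; then
        # </dev/null keeps these calls from eating the loop's stdin.
        fip=$(openstack floating ip create -f value -c floating_ip_address "$ext_net" </dev/null)
        openstack server add floating ip "$name" "$fip" </dev/null
        echo "$name -> $fip"
      fi
    done
}
```

Since not every VM necessarily needs a floating IP, the second argument can restrict the loop, e.g. `attach_missing_fips ext_net '^juju-d3562c-k8s-'`.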

The final result:

openstack server list

+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+
| ID                                   | Name                     | Status | Networks                          | Image       | Flavor   |
+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+
| 069f8ee3-2eb1-4e10-babc-0de25faedec0 | juju-d3562c-k8s-13       | ACTIVE | int_net=10.0.9.97, 192.168.0.145  | focal-amd64 | r16G     |
| bd591201-506e-4078-bd37-cc728538e199 | juju-d3562c-k8s-12       | ACTIVE | int_net=10.0.9.55, 192.168.0.85   | focal-amd64 | r16G     |
| 52233035-1c94-4c06-89f0-f84cf5451319 | juju-d3562c-k8s-11       | ACTIVE | int_net=10.0.9.65, 192.168.0.40   | focal-amd64 | v2M4R16  |
| c2fa2e24-b21a-40f6-8c80-f01dca4043fd | juju-d3562c-k8s-7        | ACTIVE | int_net=10.0.9.100, 192.168.0.166 | focal-amd64 | v4m4r16  |
| dabf4ed4-6a1e-4976-9559-bbad8d110cdc | juju-d3562c-k8s-10       | ACTIVE | int_net=10.0.9.64, 192.168.0.161  | focal-amd64 | r16G     |
| 173b815a-6682-4dd5-83fb-77d62d7a5f5f | juju-d3562c-k8s-5        | ACTIVE | int_net=10.0.9.146, 192.168.0.47  | focal-amd64 | v2M4R16  |
| f2c57ad7-6d46-4943-832f-9da343a7c211 | juju-d3562c-k8s-6        | ACTIVE | int_net=10.0.9.26, 192.168.0.43   | focal-amd64 | v2M4R16  |
| 13ac8f35-9a8d-48e7-83b5-0aea3971ba4a | juju-d3562c-k8s-8        | ACTIVE | int_net=10.0.9.174, 192.168.0.49  | focal-amd64 | v4m4r16  |
| f7840404-2b1e-47cc-84bb-8c3181a8253d | juju-d3562c-k8s-4        | ACTIVE | int_net=10.0.9.156, 192.168.0.157 | focal-amd64 | m4r16    |
| baca1e8b-fdd4-4818-94b1-3c3ba9dea7d3 | juju-d3562c-k8s-9        | ACTIVE | int_net=10.0.9.110, 192.168.0.136 | focal-amd64 | v4m4r16  |
| fe9c5a0c-e36d-4cd7-a829-2e07967ec55e | juju-d3562c-k8s-3        | ACTIVE | int_net=10.0.9.33, 192.168.0.110  | focal-amd64 | r16G     |
| 306fc000-cfcd-4440-b475-e51bb017e0a2 | juju-d3562c-k8s-2        | ACTIVE | int_net=10.0.9.168, 192.168.0.175 | focal-amd64 | r16G     |
| f64b471d-76f7-4bd5-9eaf-417b27e19401 | juju-d3562c-k8s-1        | ACTIVE | int_net=10.0.9.162, 192.168.0.15  | focal-amd64 | r16G     |
| 93ae9def-432a-4f26-89ad-dba737cfb567 | juju-d3562c-k8s-0        | ACTIVE | int_net=10.0.9.102, 192.168.0.107 | focal-amd64 | r16G     |
| 47b81b26-b5d0-4759-b4da-06aaaddf76ba | juju-5a7fd0-controller-0 | ACTIVE | int_net=10.0.9.175, 192.168.0.52  | focal-amd64 | v3584M   |
| 2c803a1a-19a4-4e58-b90f-3138629b39ba | focal-1                  | ACTIVE | int_net=10.0.9.121, 192.168.0.101 | focal-amd64 | m1.small |
+--------------------------------------+--------------------------+--------+-----------------------------------+-------------+----------+

juju status

Model  Controller                 Cloud/Region               Version  SLA          Timestamp
k8s    openstack-cloud-regionone  openstack-cloud/RegionOne  2.9.18   unsupported  22:23:12+08:00

App                    Version   Status  Scale  Charm                  Store       Channel  Rev   OS      Message
containerd             go1.13.8  active  5      containerd             charmstore  stable   178   ubuntu  Container runtime available
easyrsa                3.0.1     active  1      easyrsa                charmstore  stable   420   ubuntu  Certificate Authority connected.
etcd                   3.4.5     active  3      etcd                   charmstore  stable   634   ubuntu  Healthy with 3 known peers
flannel                0.11.0    active  5      flannel                charmstore  stable   597   ubuntu  Flannel subnet 10.1.31.1/24
kubeapi-load-balancer  1.18.0    active  1      kubeapi-load-balancer  charmstore  stable   844   ubuntu  Loadbalancer ready.
kubernetes-master      1.22.4    active  2      kubernetes-master      charmstore  stable   1078  ubuntu  Kubernetes master running.
kubernetes-worker      1.22.4    active  3      kubernetes-worker      charmstore  stable   816   ubuntu  Kubernetes worker running.
openstack-integrator   xena      active  1      openstack-integrator   charmstore  stable   182   ubuntu  Ready

Unit                      Workload  Agent  Machine  Public address  Ports             Message
easyrsa/0*                active    idle   0        10.0.9.102                        Certificate Authority connected.
etcd/0*                   active    idle   1        10.0.9.162      2379/tcp          Healthy with 3 known peers
etcd/1                    active    idle   2        10.0.9.168      2379/tcp          Healthy with 3 known peers
etcd/2                    active    idle   3        10.0.9.33       2379/tcp          Healthy with 3 known peers
kubeapi-load-balancer/0*  active    idle   4        10.0.9.156      443/tcp,6443/tcp  Loadbalancer ready.
kubernetes-master/1*      active    idle   6        10.0.9.26       6443/tcp          Kubernetes master running.
  containerd/3            active    idle            10.0.9.26                         Container runtime available
  flannel/3               active    idle            10.0.9.26                         Flannel subnet 10.1.69.1/24
kubernetes-master/2       active    idle   11       10.0.9.65       6443/tcp          Kubernetes master running.
  containerd/4            active    idle            10.0.9.65                         Container runtime available
  flannel/4               active    idle            10.0.9.65                         Flannel subnet 10.1.4.1/24
kubernetes-worker/0       active    idle   7        10.0.9.100      80/tcp,443/tcp    Kubernetes worker running.
  containerd/2            active    idle            10.0.9.100                        Container runtime available
  flannel/2               active    idle            10.0.9.100                        Flannel subnet 10.1.16.1/24
kubernetes-worker/1*      active    idle   8        10.0.9.174      80/tcp,443/tcp    Kubernetes worker running.
  containerd/0*           active    idle            10.0.9.174                        Container runtime available
  flannel/0*              active    idle            10.0.9.174                        Flannel subnet 10.1.31.1/24
kubernetes-worker/2       active    idle   9        10.0.9.110      80/tcp,443/tcp    Kubernetes worker running.
  containerd/1            active    idle            10.0.9.110                        Container runtime available
  flannel/1               active    idle            10.0.9.110                        Flannel subnet 10.1.20.1/24
openstack-integrator/2*   active    idle   13       10.0.9.97                         Ready

Machine  State    DNS         Inst id                               Series  AZ    Message
0        started  10.0.9.102  93ae9def-432a-4f26-89ad-dba737cfb567  focal   nova  ACTIVE
1        started  10.0.9.162  f64b471d-76f7-4bd5-9eaf-417b27e19401  focal   nova  ACTIVE
2        started  10.0.9.168  306fc000-cfcd-4440-b475-e51bb017e0a2  focal   nova  ACTIVE
3        started  10.0.9.33   fe9c5a0c-e36d-4cd7-a829-2e07967ec55e  focal   nova  ACTIVE
4        started  10.0.9.156  f7840404-2b1e-47cc-84bb-8c3181a8253d  focal   nova  ACTIVE
6        started  10.0.9.26   f2c57ad7-6d46-4943-832f-9da343a7c211  focal   nova  ACTIVE
7        started  10.0.9.100  c2fa2e24-b21a-40f6-8c80-f01dca4043fd  focal   nova  ACTIVE
8        started  10.0.9.174  13ac8f35-9a8d-48e7-83b5-0aea3971ba4a  focal   nova  ACTIVE
9        started  10.0.9.110  baca1e8b-fdd4-4818-94b1-3c3ba9dea7d3  focal   nova  ACTIVE
11       started  10.0.9.65   52233035-1c94-4c06-89f0-f84cf5451319  focal   nova  ACTIVE
13       started  10.0.9.97   069f8ee3-2eb1-4e10-babc-0de25faedec0  focal   nova  ACTIVE

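The machines' addresses are now their floating IPs.

Adding a local route on 10.0.0.3 to 192.168.0.0/24

But this still did not explain why, from the local host 10.0.0.3, none of the VMs' 192.168.0.x addresses could be pinged, even though the gateway 192.168.0.1 could.

Then it occurred to me that the host running MAAS is not part of OpenStack. It could ping the gateway 192.168.0.1 only because 192.168.0.1/10.0.9.193 is a router; far from ruling out a routing problem, this showed that 10.0.0.3 was missing a static route to the 192.168.0.0/24 subnet.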

Display the local routing table:

route -v

Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
default         _gateway        0.0.0.0         UG    0      0        0 eno1
10.0.0.0        0.0.0.0         255.255.240.0   U     0      0        0 eno1
10.20.153.0     0.0.0.0         255.255.255.0   U     0      0        0 lxdbr0
192.168.122.0   0.0.0.0         255.255.255.0   U     0      0        0 virbr0

Sure enough, there is no static route to 192.168.0.0/24.
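Why the VMs were unreachable can be checked by hand: a destination uses a connected route only if (destination AND netmask) equals (network AND netmask). The sketch below applies that test to the three connected routes in the table above; the route data is copied from that output and the helper names are hypothetical. It takes the first match, which is enough here only because these prefixes do not overlap; the kernel itself uses longest-prefix match.

```bash
#!/usr/bin/env bash
# Connected (on-link) routes copied from the `route` output above:
# "network netmask interface". The default route is handled separately.
CONNECTED_ROUTES=(
  "10.0.0.0 255.255.240.0 eno1"
  "10.20.153.0 255.255.255.0 lxdbr0"
  "192.168.122.0 255.255.255.0 virbr0"
)

# ip2int A.B.C.D -> 32-bit integer (assumes plain decimal octets, no leading zeros).
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_subnet IP NETWORK NETMASK -> exit 0 when (IP & MASK) == (NETWORK & MASK).
in_subnet() {
  local ip net mask
  ip=$(ip2int "$1"); net=$(ip2int "$2"); mask=$(ip2int "$3")
  (( (ip & mask) == (net & mask) ))
}

# match_route IP -> prints the interface of the first connected route that
# covers IP, or "default" if none does.
match_route() {
  local r net mask dev
  for r in "${CONNECTED_ROUTES[@]}"; do
    read -r net mask dev <<<"$r"
    if in_subnet "$1" "$net" "$mask"; then
      echo "$dev"
      return 0
    fi
  done
  echo default
}
```

`match_route 10.0.9.193` prints eno1, because 10.0.9.193 AND 255.255.240.0 is 10.0.0.0, so the router is on-link; `match_route 192.168.0.43` prints default, so traffic to the VMs went to the upstream _gateway, which presumably has no route to the tenant network. On the live host, `ip route get 192.168.0.43` answers the same question directly.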

Add a static route to 192.168.0.0/24:

route add -net 192.168.0.0/24 gw 10.0.9.193

Note: 10.0.9.193 is the address of the virtual router.
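The route command comes from the older net-tools package. With iproute2 the same route can be added idempotently, and the kernel can then be asked which path it picks for a VM address. A sketch, to be run as root like the route add above; the defaults are the addresses from this article and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# ensure_tenant_route [NETWORK] [GATEWAY] [PROBE_IP]
# Hypothetical helper; the defaults are the addresses used in this article.
ensure_tenant_route() {
  local net="${1:-192.168.0.0/24}" gw="${2:-10.0.9.193}" probe="${3:-192.168.0.43}"
  # "replace" adds the route if it is missing and updates it otherwise,
  # so re-running does not fail the way a second "add" would.
  ip route replace "$net" via "$gw"
  # Ask the kernel which route and next hop it now picks for one VM address.
  ip route get "$probe"
}
```

Either way the route lives only in the running kernel and is lost on reboot; on Ubuntu 20.04 it would normally be made persistent with a `routes:` entry (`to: 192.168.0.0/24`, `via: 10.0.9.193`) for eno1 in the netplan configuration, assuming netplan manages that interface.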

Display the routes again:

route

Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
default         _gateway        0.0.0.0         UG    0      0        0 eno1
10.0.0.0        0.0.0.0         255.255.240.0   U     0      0        0 eno1
10.20.153.0     0.0.0.0         255.255.255.0   U     0      0        0 lxdbr0
192.168.0.0     10.0.9.193      255.255.255.0   UG    0      0        0 eno1
192.168.122.0   0.0.0.0         255.255.255.0   U     0      0        0 virbr0

Ping test:

ping 192.168.0.145
PING 192.168.0.145 (192.168.0.145) 56(84) bytes of data.
64 bytes from 192.168.0.145: icmp_seq=1 ttl=63 time=4.10 ms
64 bytes from 192.168.0.145: icmp_seq=2 ttl=63 time=1.88 ms
^C
--- 192.168.0.145 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.876/2.990/4.104/1.114 ms
root@vivodo-3:~# ping 192.168.0.85
PING 192.168.0.85 (192.168.0.85) 56(84) bytes of data.
64 bytes from 192.168.0.85: icmp_seq=1 ttl=63 time=4.57 ms
64 bytes from 192.168.0.85: icmp_seq=2 ttl=63 time=2.01 ms
^C
--- 192.168.0.85 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 2.006/3.287/4.568/1.281 ms
root@vivodo-3:~# ping 192.168.0.40
PING 192.168.0.40 (192.168.0.40) 56(84) bytes of data.
64 bytes from 192.168.0.40: icmp_seq=1 ttl=63 time=2.83 ms
64 bytes from 192.168.0.40: icmp_seq=2 ttl=63 time=1.39 ms
^C
--- 192.168.0.40 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.391/2.112/2.833/0.721 ms
root@vivodo-3:~# ping 192.168.0.166
PING 192.168.0.166 (192.168.0.166) 56(84) bytes of data.
64 bytes from 192.168.0.166: icmp_seq=1 ttl=63 time=3.59 ms
64 bytes from 192.168.0.166: icmp_seq=2 ttl=63 time=1.81 ms
^C
--- 192.168.0.166 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.814/2.700/3.587/0.886 ms
root@vivodo-3:~# ping 192.168.0.161
PING 192.168.0.161 (192.168.0.161) 56(84) bytes of data.
64 bytes from 192.168.0.161: icmp_seq=1 ttl=63 time=4.54 ms
64 bytes from 192.168.0.161: icmp_seq=2 ttl=63 time=1.85 ms
64 bytes from 192.168.0.161: icmp_seq=3 ttl=63 time=1.19 ms
^C
--- 192.168.0.161 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2003ms
rtt min/avg/max/mdev = 1.185/2.523/4.539/1.450 ms
root@vivodo-3:~# ping 192.168.0.47
PING 192.168.0.47 (192.168.0.47) 56(84) bytes of data.
64 bytes from 192.168.0.47: icmp_seq=1 ttl=63 time=4.44 ms
64 bytes from 192.168.0.47: icmp_seq=2 ttl=63 time=1.82 ms
^C
--- 192.168.0.47 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.824/3.131/4.438/1.307 ms
root@vivodo-3:~# ping 192.168.0.43
PING 192.168.0.43 (192.168.0.43) 56(84) bytes of data.
64 bytes from 192.168.0.43: icmp_seq=1 ttl=63 time=2.81 ms
^C
--- 192.168.0.43 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 2.808/2.808/2.808/0.000 ms
root@vivodo-3:~# ping 192.168.0.49
PING 192.168.0.49 (192.168.0.49) 56(84) bytes of data.
64 bytes from 192.168.0.49: icmp_seq=1 ttl=63 time=4.73 ms
64 bytes from 192.168.0.49: icmp_seq=2 ttl=63 time=1.37 ms
^C
--- 192.168.0.49 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.371/3.050/4.730/1.679 ms
root@vivodo-3:~# ping 192.168.0.157
PING 192.168.0.157 (192.168.0.157) 56(84) bytes of data.
64 bytes from 192.168.0.157: icmp_seq=1 ttl=63 time=3.91 ms
^C
--- 192.168.0.157 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 3.906/3.906/3.906/0.000 ms
root@vivodo-3:~# ping 192.168.0.136
PING 192.168.0.136 (192.168.0.136) 56(84) bytes of data.
64 bytes from 192.168.0.136: icmp_seq=1 ttl=63 time=3.88 ms
64 bytes from 192.168.0.136: icmp_seq=2 ttl=63 time=1.87 ms
^C
--- 192.168.0.136 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.871/2.875/3.880/1.004 ms
root@vivodo-3:~# ping 192.168.0.110
PING 192.168.0.110 (192.168.0.110) 56(84) bytes of data.
64 bytes from 192.168.0.110: icmp_seq=1 ttl=63 time=2.55 ms
^C
--- 192.168.0.110 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 2.545/2.545/2.545/0.000 ms
root@vivodo-3:~# ping 192.168.0.175
PING 192.168.0.175 (192.168.0.175) 56(84) bytes of data.
64 bytes from 192.168.0.175: icmp_seq=1 ttl=63 time=3.66 ms
^C
--- 192.168.0.175 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 3.657/3.657/3.657/0.000 ms
root@vivodo-3:~# ping 192.168.0.15
PING 192.168.0.15 (192.168.0.15) 56(84) bytes of data.
64 bytes from 192.168.0.15: icmp_seq=1 ttl=63 time=2.60 ms
64 bytes from 192.168.0.15: icmp_seq=2 ttl=63 time=1.41 ms
^C
--- 192.168.0.15 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.413/2.006/2.599/0.593 ms
root@vivodo-3:~# ping 192.168.0.107
PING 192.168.0.107 (192.168.0.107) 56(84) bytes of data.
64 bytes from 192.168.0.107: icmp_seq=1 ttl=63 time=4.26 ms
64 bytes from 192.168.0.107: icmp_seq=2 ttl=63 time=1.96 ms
^C
--- 192.168.0.107 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.960/3.109/4.258/1.149 ms
root@vivodo-3:~# ping 192.168.0.52
PING 192.168.0.52 (192.168.0.52) 56(84) bytes of data.
64 bytes from 192.168.0.52: icmp_seq=1 ttl=63 time=2.88 ms
64 bytes from 192.168.0.52: icmp_seq=2 ttl=63 time=1.54 ms
^C
--- 192.168.0.52 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 1.542/2.210/2.878/0.668 ms
root@vivodo-3:~# ping 192.168.0.101
PING 192.168.0.101 (192.168.0.101) 56(84) bytes of data.
64 bytes from 192.168.0.101: icmp_seq=1 ttl=63 time=4.64 ms
64 bytes from 192.168.0.101: icmp_seq=2 ttl=63 time=2.02 ms
^C
--- 192.168.0.101 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 2.017/3.328/4.640/1.311 ms

Every address responds.
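Checking the addresses one at a time and stopping each ping with Ctrl-C is tedious. A small loop that sends one echo request per address and prints ok or FAIL gives the same answer; this is a sketch, the function name is hypothetical, and the `-W 1` (1-second reply timeout) follows the Linux iputils ping.

```bash
#!/usr/bin/env bash
# ping_sweep IP...
# Hypothetical helper: one echo request per address, 1 s timeout each.
# Prints "<ip> ok" or "<ip> FAIL"; returns non-zero if any address failed.
ping_sweep() {
  local ip failed=0
  for ip in "$@"; do
    if ping -c 1 -W 1 "$ip" >/dev/null 2>&1; then
      echo "$ip ok"
    else
      echo "$ip FAIL"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `ping_sweep $(openstack server list -f value -c Networks | grep -oE '192\.168\.0\.[0-9]+')` would test every VM's internal address in one go.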

Sync the Kubernetes configuration again:

juju scp kubernetes-master/1:config ~/.kube/config

Verify the configuration:

kubectl cluster-info

Kubernetes control plane is running at https://10.0.9.156:443
CoreDNS is running at https://10.0.9.156:443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
Metrics-server is running at https://10.0.9.156:443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

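Summary:

1 First make sure the VMs' security groups allow ICMP and SSH.

2 Floating IPs probably don't need to be added to every VM, only to the VMs that must be reachable from outside.

3 Unlike an OpenStack deployment set up without juju and MAAS, here the command line runs on the MAAS server, so a static route from the MAAS host to the internal network has to be added.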
