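Big Data Project (Spark-Based): COVID-19 Epidemic Prevention and Control Command Platform

Chapter 1 Project Overview

1.1 Project Background

The requirements for the COVID-19 Epidemic Prevention and Control Command Platform were proposed by itcast (传智播客). The project was planned by 北京大数据研究院博雅智慧公司, and the two parties developed it jointly. It implements several thematic sections, including epidemic situation, grassroots prevention and control, supply assurance, and resumption of work and production. It consists of two subsystems: the command dashboard (large-screen) subsystem and the back-office management subsystem.

Building and deploying the platform links local operations to central command, so that commanders have the relevant data at hand, can make evidence-based decisions, and can coordinate prevention, treatment, and the resumption of work and production as a single whole. The platform helps the epidemic prevention headquarters plan, coordinate, and decide more efficiently, with the goal of bringing the epidemic under control as quickly as possible.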

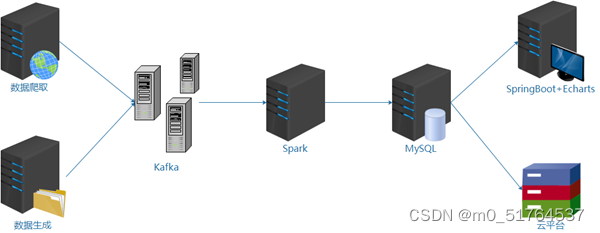

1.2 Project Architecture

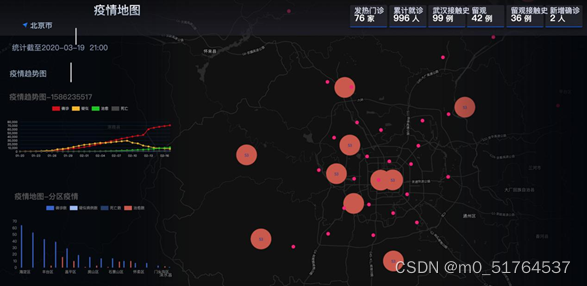

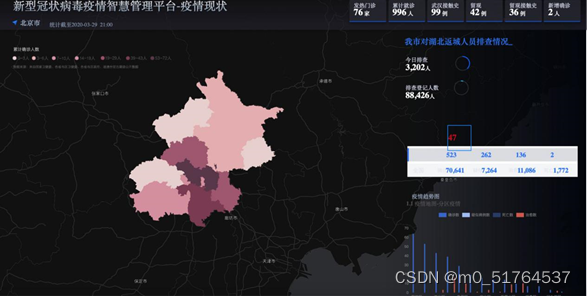

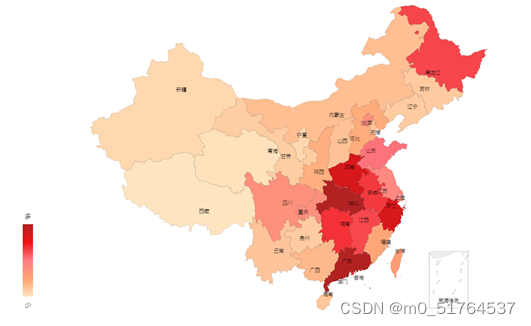

1.3 Project Screenshots

1.4 Functional Modules

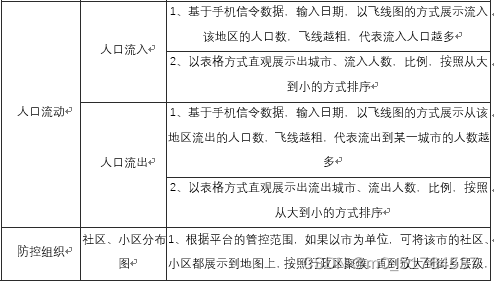

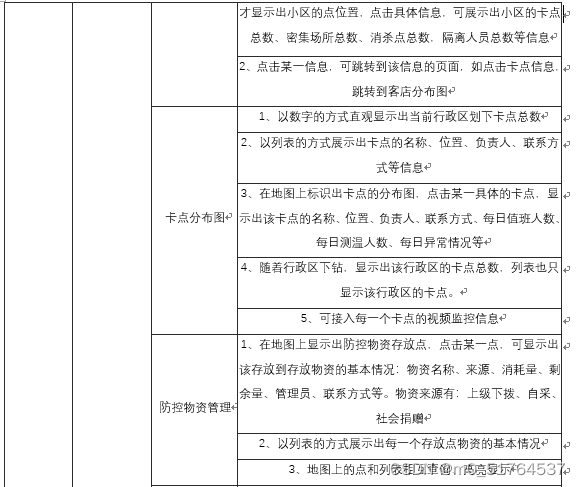

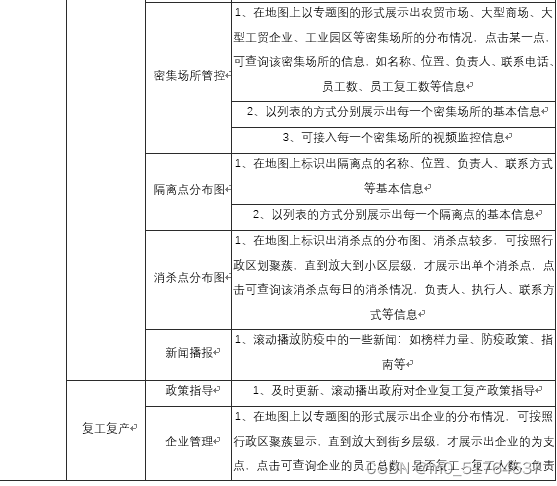

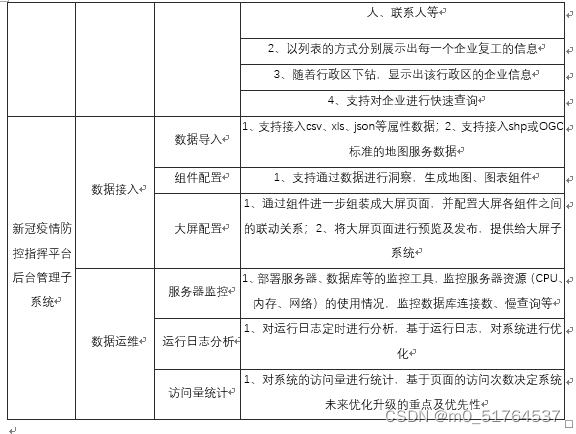

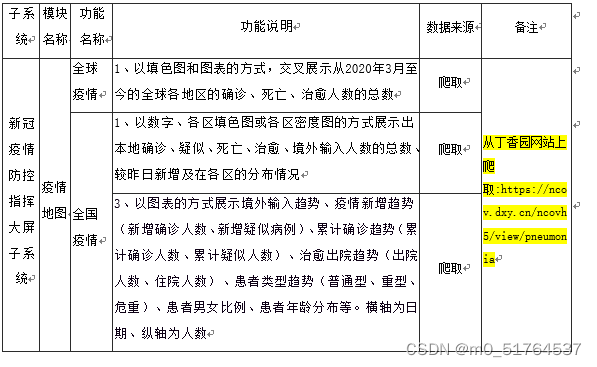

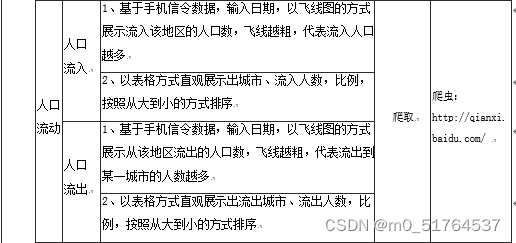

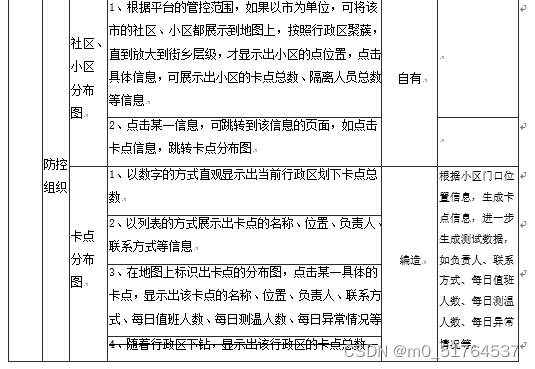

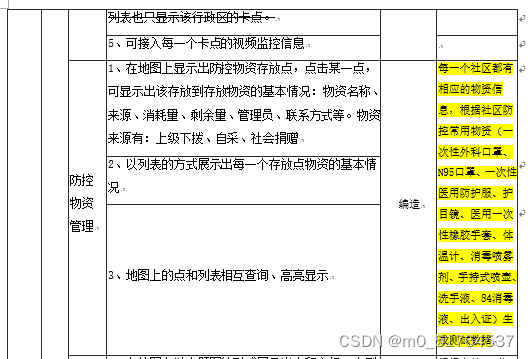

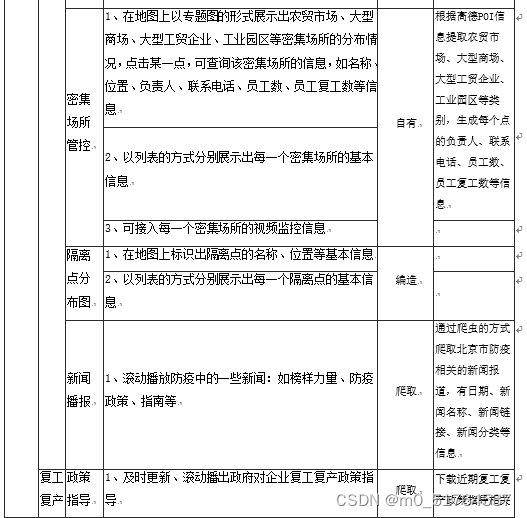

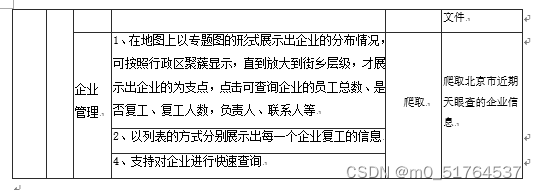

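The platform comprises the command dashboard subsystem and the back-office management subsystem. The dashboard subsystem is intended for end users, while the back-office management subsystem is intended for administrators and operations staff. The table below lists the module-level functions of each subsystem together with their descriptions.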

| 子系统 | 模块名称 | 功能名称 | 功能名说明 |

|---|---|---|---|

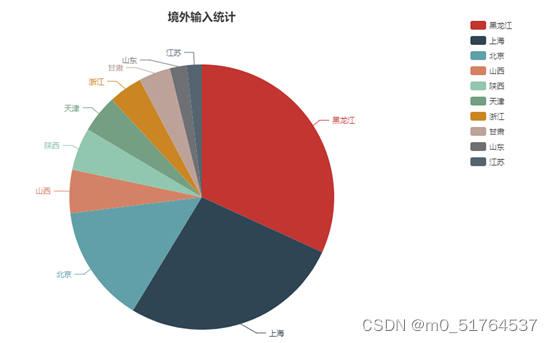

| 新冠疫情防控指挥大屏子系统 | 疫情地图 | 各区疫情 | 1、以数字、各区填色图或各区密度图的方式展示出本地确诊、疑似、死亡、治愈、境外输入人数的总数、较昨日新增及在各区的分布情况 |

| 2、专题地图可按照行政区逐级下钻,如市、区县、街乡,下钻后展示的数字是当前行政区层级的汇总 | |||

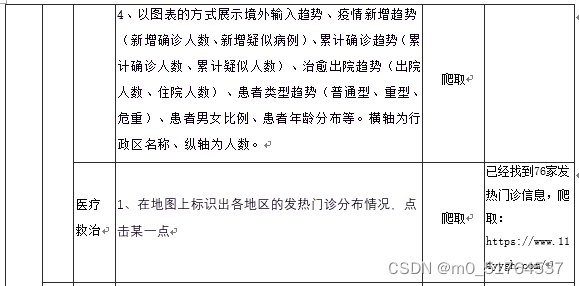

| 3、以图表的方式展示境外输入趋势、疫情新增趋势(新增确诊人数、新增疑似病例)、累计确诊趋势(累计确诊人数、累计疑似人数)、治愈出院趋势(出院人数、住院人数)、患者类型趋势(普通型、重型、危重)、患者男女比例、患者年龄分布等。横轴为日期、纵轴为人数 | |||

| 4、以图表的方式展示境外输入趋势、疫情新增趋势(新增确诊人数、新增疑似病例)、累计确诊趋势(累计确诊人数、累计疑似人数)、治愈出院趋势(出院人数、住院人数)、患者类型趋势(普通型、重型、危重)、患者男女比例、患者年龄分布等。横轴为行政区名称、纵轴为人数,并且随着行政区下钻,横轴所示的行政区会自动下钻到下一级的行政区 | |||

| 患者轨迹 | 1、对确诊患者在地图上以连续的OD连线的方式展示患者的轨迹;2、以列表的方式直观展示出患者的行程 | ||

| 医疗救治 | 1、在地图上标识出各地区的发热门诊分布情况,点击某一点,可展示出该门诊的病人数、剩余床位数等信息 | ||

| 疫情小区 | 在地图上标出确诊患者所在小区的分布图,点击某一点,可展示出小区的位置、楼数、患者所在的楼编号等信息 | ||

| 传染关系 | 传染关系 | 1、根据确诊患者、疑似患者上报的接触人及接触地点,生成患者传染关系图;每一个患者就是一个节点,点击各节点显示患者基本信息及密切接触者个数,点击各节点间联系显示患者间相互关系。节点大小反应其密切接触者人数;2.可通过行政区快速过滤该行政区内的节点 |
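The drill-down behaviour described in the table (figures are always totals for the current administrative level) can be illustrated with a small roll-up. This is only a sketch under stated assumptions: the `Row` type, its field names, and the sample values are hypothetical and do not come from the project.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

final class DrillDownSketch {

    /** Hypothetical lowest-level record: confirmed cases for one subdistrict. */
    static final class Row {
        final String city;
        final String district;
        final String subdistrict;
        final int confirmed;

        Row(String city, String district, String subdistrict, int confirmed) {
            this.city = city;
            this.district = district;
            this.subdistrict = subdistrict;
            this.confirmed = confirmed;
        }
    }

    /** Sums confirmed counts per value of the chosen administrative level. */
    static Map<String, Integer> rollUp(List<Row> rows, Function<Row, String> level) {
        return rows.stream().collect(
                Collectors.groupingBy(level, TreeMap::new, Collectors.summingInt(r -> r.confirmed)));
    }

    public static void main(String[] args) {
        List<Row> rows = Arrays.asList(
                new Row("CityA", "District1", "Sub1", 3),
                new Row("CityA", "District1", "Sub2", 2),
                new Row("CityA", "District2", "Sub3", 4));
        System.out.println(rollUp(rows, r -> r.city));     // totals at city level
        System.out.println(rollUp(rows, r -> r.district)); // totals after drilling down to districts
    }
}
```

The same function serves every level because only the grouping key changes; the underlying rows stay at the finest granularity.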

Chapter 2 Data Crawling

2.1 Data Inventory

2.2 Crawling Epidemic Data

2.2.1 Environment Setup

2.2.1.1 pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <artifactId>crawler</artifactId>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.7.RELEASE</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>cn.itcast</groupId>
    <version>0.0.1-SNAPSHOT</version>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-devtools</artifactId>
            <scope>runtime</scope>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
            <exclusions>
                <exclusion>
                    <groupId>org.junit.vintage</groupId>
                    <artifactId>junit-vintage-engine</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.22</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.5.3</version>
        </dependency>
        <dependency>
            <groupId>org.jsoup</groupId>
            <artifactId>jsoup</artifactId>
            <version>1.10.3</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-lang3</artifactId>
            <version>3.7</version>
        </dependency>
        <dependency>
            <groupId>commons-io</groupId>
            <artifactId>commons-io</artifactId>
            <version>2.6</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.25</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

2.2.1.2 application.properties

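server.port=9999

# Kafka
# Broker addresses
kafka.bootstrap.servers=node01:9092,node02:9092,node03:9092
# Number of times a failed send is retried
kafka.retries_config=0
# Batch size in bytes (Kafka's default is 16384 bytes, i.e. 16 KB)
kafka.batch_size_config=4096
# Upper bound, in milliseconds, on how long a batch waits before being sent
kafka.linger_ms_config=100
# Producer buffer memory in bytes
kafka.buffer_memory_config=40960
# Topic
kafka.topic=covid19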
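To make the relationship between these values concrete, the following sketch reads the same keys with the JDK's `java.util.Properties` and computes how many full batches fit into the buffer. It is an illustration only: the class and method names are hypothetical, and the project itself injects these values through Spring's `@Value`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class ProducerSettingsSketch {

    /** Parses properties text; the keys mirror those in application.properties. */
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    static int intValue(Properties props, String key) {
        return Integer.parseInt(props.getProperty(key).trim());
    }

    /** How many full batches fit into the configured producer buffer. */
    static int batchesThatFit(Properties props) {
        return intValue(props, "kafka.buffer_memory_config")
                / intValue(props, "kafka.batch_size_config");
    }

    public static void main(String[] args) {
        Properties props = parse(String.join("\n",
                "# sample values copied from application.properties",
                "kafka.batch_size_config=4096",
                "kafka.linger_ms_config=100",
                "kafka.buffer_memory_config=40960"));
        System.out.println("batch size (bytes): " + intValue(props, "kafka.batch_size_config"));
        System.out.println("linger (ms): " + intValue(props, "kafka.linger_ms_config"));
        System.out.println("full batches in buffer: " + batchesThatFit(props)); // 10
    }
}
```

With these settings a batch is sent when it reaches 4096 bytes or has waited 100 ms, whichever comes first, and the buffer holds room for about ten full batches.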

2.2.2 Utility Classes

2.2.2.1 HttpUtils

package cn.itcast.util;

import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
import org.apache.http.util.EntityUtils;

import java.util.ArrayList;
import java.util.List;
import java.util.Random;

public abstract class HttpUtils {

    private static PoolingHttpClientConnectionManager cm;
    private static List<String> userAgentList = null;

    static {
        cm = new PoolingHttpClientConnectionManager();
        // Maximum total number of connections in the pool
        cm.setMaxTotal(200);
        // Maximum number of concurrent connections per host (route)
        cm.setDefaultMaxPerRoute(20);
        // User-Agent values; one is picked at random for each request
        userAgentList = new ArrayList<>();
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36");
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:73.0) Gecko/20100101 Firefox/73.0");
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.5 Safari/605.1.15");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Edge/16.16299");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:63.0) Gecko/20100101 Firefox/63.0");
    }

    // Fetches the content at the given URL; returns null on a non-200 status or on error
    public static String getHtml(String url) {
        CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();
        HttpGet httpGet = new HttpGet(url);
        int index = new Random().nextInt(userAgentList.size());
        httpGet.setHeader("User-Agent", userAgentList.get(index));
        httpGet.setConfig(getConfig());
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response.getStatusLine().getStatusCode() == 200) {
                String html = "";
                if (response.getEntity() != null) {
                    html = EntityUtils.toString(response.getEntity(), "UTF-8");
                }
                return html;
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                if (response != null) {
                    response.close();
                }
                // Do not call httpClient.close() here: the client uses the shared connection manager
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
        return null;
    }

    // Builds the request configuration (all timeouts in milliseconds)
    private static RequestConfig getConfig() {
        RequestConfig config = RequestConfig.custom().setConnectTimeout(1000)
                .setConnectionRequestTimeout(500)
                .setSocketTimeout(10000)
                .build();
        return config;
    }
}
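The User-Agent rotation used by `getHtml` can be looked at in isolation. The sketch below uses only the JDK and takes the `Random` as a constructor argument so the choice is reproducible in tests; the class name and the shortened agent strings are placeholders, not part of the project.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;

final class UserAgentRotationSketch {

    private final List<String> agents;
    private final Random random;

    UserAgentRotationSketch(List<String> agents, Random random) {
        if (agents.isEmpty()) {
            throw new IllegalArgumentException("at least one User-Agent is required");
        }
        this.agents = agents;
        this.random = random;
    }

    /** Picks one User-Agent uniformly at random for the next request. */
    String next() {
        return agents.get(random.nextInt(agents.size()));
    }

    public static void main(String[] args) {
        // Placeholder values; a real crawler would list full browser User-Agent strings
        List<String> agents = Arrays.asList("ExampleAgent/1.0", "ExampleAgent/2.0", "ExampleAgent/3.0");
        UserAgentRotationSketch rotation = new UserAgentRotationSketch(agents, new Random());
        for (int i = 0; i < 3; i++) {
            System.out.println(rotation.next());
        }
    }
}
```

Reusing a single `Random` instance, as here, avoids allocating a new generator on every request.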

2.2.2.2 TimeUtils

package cn.itcast.util;

import org.apache.commons.lang3.time.FastDateFormat;

/**
 * Author itcast
 * Date 2020/5/11 14:00
 * Desc
 */
public abstract class TimeUtils {

    public static String format(Long timestamp, String pattern) {
        return FastDateFormat.getInstance(pattern).format(timestamp);
    }

    public static void main(String[] args) {
        String format = TimeUtils.format(System.currentTimeMillis(), "yyyy-MM-dd");
        System.out.println(format);
    }
}
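For comparison, the same formatting can be done with the JDK's `java.time` API, with the time zone passed explicitly. `FastDateFormat.getInstance(pattern)` uses the JVM's default zone, so the date it produces depends on where the crawler runs. This is a sketch of an alternative, not the project's code; the class name is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

final class TimeFormatSketch {

    /** Formats an epoch-millisecond timestamp with the given pattern in the given zone. */
    static String format(long epochMillis, String pattern, ZoneId zone) {
        return DateTimeFormatter.ofPattern(pattern)
                .withZone(zone)
                .format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        System.out.println(format(0L, "yyyy-MM-dd", ZoneOffset.UTC)); // 1970-01-01
        System.out.println(format(System.currentTimeMillis(), "yyyy-MM-dd", ZoneId.systemDefault()));
    }
}
```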

2.2.2.3 KafkaProducerConfig

package cn.itcast.util;

import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.IntegerSerializer;
import org.apache.kafka.common.serialization.StringSerializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;

import java.util.HashMap;
import java.util.Map;

@Configuration // Marks this class as a Spring configuration class
public class KafkaProducerConfig {

    @Value("${kafka.bootstrap.servers}")
    private String bootstrap_servers;

    @Value("${kafka.retries_config}")
    private String retries_config;

    @Value("${kafka.batch_size_config}")
    private String batch_size_config;

    @Value("${kafka.linger_ms_config}")
    private String linger_ms_config;

    @Value("${kafka.buffer_memory_config}")
    private String buffer_memory_config;

    @Value("${kafka.topic}")
    private String topic;

    @Bean // The returned object is registered as a Spring-managed bean
    public KafkaTemplate<Integer, String> kafkaTemplate() {
        // Configuration required by the producer factory
        Map<String, Object> configs = new HashMap<>();
        configs.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrap_servers);
        configs.put(ProducerConfig.RETRIES_CONFIG, retries_config);
        configs.put(ProducerConfig.BATCH_SIZE_CONFIG, batch_size_config);
        configs.put(ProducerConfig.LINGER_MS_CONFIG, linger_ms_config);
        configs.put(ProducerConfig.BUFFER_MEMORY_CONFIG, buffer_memory_config);
        // Keys are Integer (see IntegerSerializer), values are JSON strings
        configs.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, IntegerSerializer.class);
        configs.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
        // Use the custom partitioner
        configs.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, RoundRobinPartitioner.class);
        // Create the producer factory; the type parameters match the serializers above
        ProducerFactory<Integer, String> producerFactory = new DefaultKafkaProducerFactory<>(configs);
        // Create and return the KafkaTemplate
        KafkaTemplate<Integer, String> kafkaTemplate = new KafkaTemplate<>(producerFactory);
        //System.out.println("kafkaTemplate"+kafkaTemplate);
        return kafkaTemplate;
    }
}

2.2.2.4 RoundRobinPartitioner

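package cn.itcast.util;

import org.apache.kafka.clients.producer.Partitioner;
import org.apache.kafka.common.Cluster;

import java.util.Map;

// Despite its name, this partitioner maps each record by key: partition = key mod partition count.
// Keys must be non-null Integers (the producer is configured with IntegerSerializer).
public class RoundRobinPartitioner implements Partitioner {

    @Override
    public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {
        Integer k = (Integer) key;
        Integer partitions = cluster.partitionCountForTopic(topic); // number of partitions of the topic
        // floorMod keeps the result in [0, partitions) even for a negative key
        int curpartition = Math.floorMod(k, partitions);
        //System.out.println("partition: " + curpartition);
        return curpartition;
    }

    @Override
    public void close() {
    }

    @Override
    public void configure(Map<String, ?> configs) {
    }
}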
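The effect of mapping records by key can be checked without a Kafka cluster. The sketch below assumes integer keys and a topic with 3 partitions (the partition count used in the topic-creation command later in this chapter); the class name and sample keys are hypothetical. It uses `Math.floorMod` rather than `%`, because `%` returns a negative result for a negative key.

```java
final class KeyModuloSketch {

    /** Maps an integer key to a partition index in [0, partitionCount). */
    static int partitionFor(int key, int partitionCount) {
        return Math.floorMod(key, partitionCount);
    }

    public static void main(String[] args) {
        int partitionCount = 3; // assumed partition count of the topic
        int[] sampleKeys = {420000, 110000, 310000, -1}; // hypothetical keys
        for (int key : sampleKeys) {
            System.out.println(key + " -> partition " + partitionFor(key, partitionCount));
        }
    }
}
```

Because the crawler uses the province's `locationId` as the key for both a province record and its city records, every record of one province lands in the same partition and keeps its relative order there.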

2.2.3 Entity Classes

2.2.3.1 CovidBean

package cn.itcast.bean;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class CovidBean {
    private String provinceName;
    private String provinceShortName;
    private String cityName;
    private Integer currentConfirmedCount;
    private Integer confirmedCount;
    private Integer suspectedCount;
    private Integer curedCount;
    private Integer deadCount;
    private Integer locationId;
    private Integer pid;
    private String cities;
    private String statisticsData;
    private String datetime;
}

2.2.3.2 MaterialBean

package cn.itcast.bean;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class MaterialBean {
    private String name;
    private String from;
    private Integer count;
}

2.2.4 Application Entry Point

package cn.itcast;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.scheduling.annotation.EnableScheduling;

@SpringBootApplication
@EnableScheduling // Enables scheduled tasks (@Scheduled)
public class Covid19ProjectApplication {

    public static void main(String[] args) {
        SpringApplication.run(Covid19ProjectApplication.class, args);
    }
}
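The scheduled tasks in this chapter use `initialDelay` and `fixedDelay`, where the delay is measured from the end of one run to the start of the next. The JDK's `ScheduledExecutorService.scheduleWithFixedDelay` follows the same rule, so it can serve as a plain-Java analogy. The sketch below is not how Spring is wired internally; the class and method names are hypothetical.

```java
import java.time.Duration;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class FixedDelaySketch {

    /** Runs a counting task with a fixed delay until it has executed `runs` times. */
    static int runWithFixedDelay(long initialDelayMs, long delayMs, int runs) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger executed = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(runs);
        scheduler.scheduleWithFixedDelay(() -> {
            if (done.getCount() == 0) {
                return; // requested number of runs already reached
            }
            executed.incrementAndGet();
            done.countDown();
        }, initialDelayMs, delayMs, TimeUnit.MILLISECONDS);
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            scheduler.shutdownNow();
        }
        return executed.get();
    }

    public static void main(String[] args) {
        // The crawler's fixedDelay expression, 1000 * 60 * 60 * 12 ms, expressed in hours
        System.out.println(Duration.ofMillis(1000L * 60 * 60 * 12).toHours()); // 12
        System.out.println("runs: " + runWithFixedDelay(10, 20, 3));
    }
}
```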

2.2.5 Data Crawling

package cn.itcast.crawler;

import cn.itcast.bean.CovidBean;
import cn.itcast.util.HttpUtils;
import cn.itcast.util.TimeUtils;
import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Author itcast
 * Date 2020/5/11 10:35
 * Desc
 * List topics:
 * /export/servers/kafka/bin/kafka-topics.sh --list --zookeeper node01:2181
 * Delete the topic:
 * /export/servers/kafka/bin/kafka-topics.sh --delete --zookeeper node01:2181 --topic covid19
 * Create the topic:
 * /export/servers/kafka/bin/kafka-topics.sh --create --zookeeper node01:2181 --replication-factor 2 --partitions 3 --topic covid19
 * List topics again:
 * /export/servers/kafka/bin/kafka-topics.sh --list --zookeeper node01:2181
 * Start a console consumer:
 * /export/servers/kafka/bin/kafka-console-consumer.sh --bootstrap-server node01:9092 --from-beginning --topic covid19
 * Start a console producer:
 * /export/servers/kafka/bin/kafka-console-producer.sh --topic covid19 --broker-list node01:9092
 */
@Component
public class Covid19DataCrawler {

    @Autowired
    KafkaTemplate kafkaTemplate;

    // First run 1 s after startup, then 12 hours after each run finishes
    @Scheduled(initialDelay = 1000, fixedDelay = 1000 * 60 * 60 * 12)
    //@Scheduled(cron = "0 0 8 * * ?") // run every day at 08:00
    public void crawling() throws Exception {
        System.out.println("Crawl started (runs every 12 hours)");
        String datetime = TimeUtils.format(System.currentTimeMillis(), "yyyy-MM-dd");
        String html = HttpUtils.getHtml("https://ncov.dxy.cn/ncovh5/view/pneumonia");
        //System.out.println(html);
        if (html == null) {
            // getHtml returns null on a non-200 status or on error
            System.out.println("Failed to fetch the page");
            return;
        }
        Document document = Jsoup.parse(html);
        System.out.println(document);
        // The province data is embedded as a JSON array in the script element with id getAreaStat
        String text = document.select("script[id=getAreaStat]").toString();
        System.out.println(text);
        // Greedy match from the first '[' to the last ']'
        String pattern = "\\[(.*)\\]";
        Pattern reg = Pattern.compile(pattern);
        Matcher matcher = reg.matcher(text);
        String jsonStr = "";
        if (matcher.find()) {
            jsonStr = matcher.group(0);
            System.out.println(jsonStr);
        } else {
            System.out.println("NO MATCH");
            return; // nothing to parse
        }
        List<CovidBean> pCovidBeans = JSON.parseArray(jsonStr, CovidBean.class);
        for (CovidBean pBean : pCovidBeans) {
            //System.out.println(pBean);
            pBean.setDatetime(datetime);
            List<CovidBean> covidBeans = JSON.parseArray(pBean.getCities(), CovidBean.class);
            for (CovidBean bean : covidBeans) {
                bean.setDatetime(datetime);
                bean.setPid(pBean.getLocationId());
                bean.setProvinceShortName(pBean.getProvinceShortName());
                //System.out.println(bean);
                String json = JSON.toJSONString(bean);
                System.out.println(json);
                kafkaTemplate.send("covid19", bean.getPid(), json); // send city-level data
            }
            // Fetch the province's time-series data from the URL held in statisticsData
            String statisticsDataUrl = pBean.getStatisticsData();
            String statisticsData = HttpUtils.getHtml(statisticsDataUrl);
            JSONObject jsb = JSON.parseObject(statisticsData);
            JSONArray datas = JSON.parseArray(jsb.getString("data"));
            pBean.setStatisticsData(datas.toString());
            pBean.setCities(null);
            //System.out.println(pBean);
            String pjson = JSON.toJSONString(pBean);
            System.out.println(pjson);
            kafkaTemplate.send("covid19", pBean.getLocationId(), pjson); // send province-level data, including the time series
        }
        System.out.println("Sent to Kafka");
    }
}
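The extraction step of the crawler (pull the JSON array out of the script element's text with a greedy regular expression) can be tried on its own with the JDK. In the sketch below the sample script text is a hypothetical shape made up for illustration, not a capture of the real page, and the class name is a placeholder. An empty `Optional` signals "no match" so the caller has to handle that case explicitly.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class AreaStatExtractSketch {

    // Same pattern as the crawler: greedy match from the first '[' to the last ']'
    private static final Pattern JSON_ARRAY = Pattern.compile("\\[(.*)\\]");

    /** Returns the bracketed JSON array found in the text, or empty if there is none. */
    static Optional<String> extractArray(String scriptText) {
        if (scriptText == null) {
            return Optional.empty();
        }
        Matcher matcher = JSON_ARRAY.matcher(scriptText);
        return matcher.find() ? Optional.of(matcher.group(0)) : Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical script content, for illustration only
        String sample = "<script id=\"getAreaStat\">try { window.getAreaStat = "
                + "[{\"provinceName\":\"X\",\"cities\":[]}]}catch(e){}</script>";
        System.out.println(extractArray(sample).orElse("NO MATCH"));
        System.out.println(extractArray("no array here").orElse("NO MATCH"));
    }
}
```

The greedy `.*` is what lets nested arrays such as `cities` survive: the match ends at the last `]` on the line, not the first.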

2.3 Generating Prevention Supplies Data

package cn.itcast.generator;

import cn.itcast.bean.MaterialBean;
import com.alibaba.fastjson.JSON;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

import java.util.Random;

/**
 * Material                      Stock  Demand  Consumed  Donated
 * N95 masks                     4293   9395    3254      15000
 * Surgical masks                9032   7425    8382      55000
 * Medical protective suits      1938   2552    1396      3500
 * Inner work clothes            2270   3189    1028      2800
 * Disposable surgical gowns     3387   1000    1413      5000
 * 84 disinfectant (liters)      9073   3746    3627      10000
 * 75% alcohol (liters)          3753   1705    1574      8000
 * Protective goggles (pieces)   2721   3299    1286      4500
 * Face shields (pieces)         2000   1500    1567      3500
 */
@Component
public class Covid19DataGenerator {

    @Autowired
    KafkaTemplate kafkaTemplate;

    // First run 1 s after startup, then 10 s after each run finishes
    @Scheduled(initialDelay = 1000, fixedDelay = 1000 * 10)
    public void generate() {
        System.out.println("Generating 10 records every 10 s");
        Random random = new Random();
        for (int i = 0; i < 10; i++) {
            // Random material name, random record type, random quantity in [0, 1000)
            MaterialBean materialBean = new MaterialBean(wzmc[random.nextInt(wzmc.length)], wzlx[random.nextInt(wzlx.length)], random.nextInt(1000));
            String jsonString = JSON.toJSONString(materialBean);
            System.out.println(materialBean);
            // The random key in [0, 4) spreads records across partitions
            kafkaTemplate.send("covid19_wz", random.nextInt(4), jsonString);
        }
    }

    // Material names with units: N95 masks (each), surgical masks (each), 84 disinfectant (bottle),
    // digital thermometers (each), disposable rubber gloves (pair), protective goggles (pair),
    // medical protective suits (set)
    private static String[] wzmc = new String[]{
            "N95口罩/个", "医用外科口罩/个", "84消毒液/瓶", "电子体温计/个", "一次性橡胶手套/副", "防护目镜/副", "医用防护服/套"};

    // Record types: purchased, allocated, donated, consumed, demanded
    private static String[] wzlx = new String[]{
            "采购", "下拨", "捐赠", "消耗", "需求"};
}
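The generation logic (pick a random material, a random record type, and a random quantity, then serialize to JSON) can be reproduced with the JDK alone. In this sketch the English names, the hand-built JSON string, and the class name are illustrative assumptions; the project serializes a `MaterialBean` with fastjson. Passing the `Random` in makes the output reproducible for a fixed seed.

```java
import java.util.Random;

final class MaterialRecordSketch {

    // Illustrative values; the generator above uses its own name and type arrays
    static final String[] NAMES = {"N95 mask", "surgical mask", "disinfectant"};
    static final String[] TYPES = {"purchase", "allocation", "donation", "consumption", "demand"};

    /** Builds one random record as a JSON string with the fields name, from, and count. */
    static String randomRecordJson(Random random) {
        String name = NAMES[random.nextInt(NAMES.length)];
        String from = TYPES[random.nextInt(TYPES.length)];
        int count = random.nextInt(1000); // quantity in [0, 1000)
        return String.format("{\"name\":\"%s\",\"from\":\"%s\",\"count\":%d}", name, from, count);
    }

    public static void main(String[] args) {
        Random random = new Random();
        for (int i = 0; i < 10; i++) {
            System.out.println(randomRecordJson(random));
        }
    }
}
```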

Chapter 3 Real-Time Data Processing and Analysis

3.1. Environment Setup

3.1.1. pom.xml

<properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <encoding>UTF-8</encoding>
    <scala.version>2.11.8</scala.version>
    <spark.version>2.2.0</spark.version>
</properties>
<dependencies>
    <dependency>
        <groupId>org.scala-lang</groupId>
        <artifactId>scala-library</artifactId>
        <version>${scala.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql-kafka-0-10_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>com.typesafe</groupId>
        <artifactId>config</artifactId>
        <version>1.3.3</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.38</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.44</version>
    </dependency>
</dependencies>
<build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <plugins>
        <!-- Plugin that compiles Java sources -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.5.1</version>
        </plugin>
        <!-- Plugin that compiles Scala sources -->
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.2</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
