
<!-- Windows OS vulnerabilities -->

<provider name="msu">

<enabled>yes</enabled>

<url>http://172.31.101.57/msu-updates.json.gz</url>

<update_interval>1h</update_interval>

</provider>

<!-- Aggregate vulnerabilities -->

<provider name="nvd">

<enabled>yes</enabled>

<url start="2010" end="2021">http://172.31.101.57/nvd/nvd-feed[-].json.gz</url>

<update_interval>1h</update_interval>

</provider>
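In the NVD provider above, `[-]` in the URL is a placeholder. As we understand the Wazuh offline-update documentation, the vulnerability detector replaces it with each year from `start` to `end`. The sketch below lists the files the local mirror is therefore expected to serve. `expand_nvd_urls` is a hypothetical helper, not part of Wazuh.

```shell
#!/usr/bin/env bash
# List the per-year NVD feed URLs implied by <url start=".." end="..">.
# Assumption: "[-]" in the template is replaced by the four-digit year.
expand_nvd_urls() {
  local template=$1 start=$2 end=$3
  local placeholder='[-]' year url
  for ((year = start; year <= end; year++)); do
    # Quoting "$placeholder" makes the brackets match literally.
    url=${template/"$placeholder"/$year}
    printf '%s\n' "$url"
  done
}

# Usage with the values from the provider block above (prints 12 URLs)
expand_nvd_urls 'http://172.31.101.57/nvd/nvd-feed[-].json.gz' 2010 2021
```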

#!/bin/bash

cd /var/www/wazuh/ || exit 1

# Download the Ubuntu 20.04 (Focal) OVAL feed

wget -N https://people.canonical.com/~ubuntu-security/oval/com.ubuntu.focal.cve.oval.xml.bz2

# Download the RHEL 6/7/8 OVAL feeds

wget -N https://www.redhat.com/security/data/oval/v2/RHEL6/rhel-6-including-unpatched.oval.xml.bz2

wget -N https://www.redhat.com/security/data/oval/v2/RHEL7/rhel-7-including-unpatched.oval.xml.bz2

wget -N https://www.redhat.com/security/data/oval/v2/RHEL8/rhel-8-including-unpatched.oval.xml.bz2

# Download the Microsoft (MSU) vulnerability feed

wget -N https://feed.wazuh.com/vulnerability-detector/windows/msu-updates.json.gz

# Download Red Hat's security data JSON files

/bin/bash /var/www/wazuh/rh-generator.sh /var/www/wazuh/redhat

# Download the NVD (CVE) database, starting from 2010

/bin/bash /var/www/wazuh/nvd-generator.sh 2010 /var/www/wazuh/nvd

# Fix file ownership so Nginx can serve the files

chown -R nginx:nginx /var/www/wazuh

# Restart the manager so it reloads the vulnerability databases

systemctl restart wazuh-manager.service
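`wget -N` keeps the server's timestamps, so file modification times say little about when the mirror last ran. A simpler check after the script is that every expected feed exists and is non-empty. The sketch below takes its file list from the `wget` calls above. It does not check the JSON outputs of the two generator scripts, because their file names are not shown here. `check_feed_mirror` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Verify that the offline mirror holds every feed downloaded above.
# Returns non-zero and names each file that is missing or empty.
FEED_FILES=(
  com.ubuntu.focal.cve.oval.xml.bz2
  rhel-6-including-unpatched.oval.xml.bz2
  rhel-7-including-unpatched.oval.xml.bz2
  rhel-8-including-unpatched.oval.xml.bz2
  msu-updates.json.gz
)

check_feed_mirror() {
  local dir=$1 status=0 f
  for f in "${FEED_FILES[@]}"; do
    # -s: the file exists and its size is greater than zero
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage, e.g. at the end of the update script:
#   check_feed_mirror /var/www/wazuh || echo "feed update incomplete" >&2
```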

- Check the vulnerability scan results

- Email alerts

SHELL

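# Enable global email notification

# Wazuh cannot authenticate to an SMTP server itself; it hands mail to the system's mail delivery (the local Postfix relay configured below), so smtp_server points at localhost

<global>

<email_notification>yes</email_notification>

<email_to>sujx@live.cn</email_to>

<smtp_server>localhost</smtp_server>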

<email_from>i@sujx.net</email_from>

<email_maxperhour>12</email_maxperhour>

</global>

# Alert thresholds: record alerts of level >= 3, and send email for alerts of level >= 12

<alerts>

<log_alert_level>3</log_alert_level>

<email_alert_level>12</email_alert_level>

</alerts>

# Daily report of high-severity alerts (level >= 13)

<reports>

<level>13</level>

<title>Daily report: Alerts with level higher than 13</title>

<email_to>sujx@live.cn</email_to>

</reports>

# Force an email for a specific rule regardless of the settings above; this is set on the rule itself, not in the global ossec.conf

<rule id="502" level="3">

<if_sid>500</if_sid>

<options>alert_by_email</options>

<match>Ossec started</match>

<description>Ossec server started.</description>

</rule>
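The two thresholds combine as in the small classifier below. It mirrors the values above. For simplicity it assumes away per-rule overrides such as `alert_by_email` and the `email_maxperhour` cap. `alert_action` is a hypothetical helper, not a Wazuh tool.

```shell
#!/usr/bin/env bash
# Classify an alert level against the thresholds configured above.
# Mirrors <log_alert_level>3 and <email_alert_level>12; per-rule options
# such as alert_by_email and the email_maxperhour cap are not modeled.
LOG_LEVEL=3
EMAIL_LEVEL=12

alert_action() {
  local level=$1
  if (( level >= EMAIL_LEVEL )); then
    echo "log+email"
  elif (( level >= LOG_LEVEL )); then
    echo "log"
  else
    echo "ignore"
  fi
}

alert_action 7    # prints: log
alert_action 13   # prints: log+email
```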

- Mail delivery

SHELL

yum install -y mailx

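# Option 1: send through an internal mail server with mailx

cat >> /etc/mail.rc<<EOF

# Internal SMTP server that accepts anonymous mail

set smtp=smtp.example.com

EOF

# Option 2: send through a public mail provider via an authenticated Postfix relay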

yum install -y postfix mailx cyrus-sasl cyrus-sasl-plain

# Configure Postfix as an SMTP relay

cat >> /etc/postfix/main.cf<<EOF

relayhost = [smtp.exmail.qq.com]:587

smtp_sasl_auth_enable = yes

smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd

smtp_sasl_security_options = noanonymous

smtp_tls_CAfile = /etc/ssl/certs/ca-bundle.crt

smtp_use_tls = yes

EOF

# Credentials for sending as i@sujx.net (replace PASSWORD)

echo '[smtp.exmail.qq.com]:587 i@sujx.net:PASSWORD' > /etc/postfix/sasl_passwd

postmap /etc/postfix/sasl_passwd

# Restrict the credential files to root

chown root:root /etc/postfix/sasl_passwd /etc/postfix/sasl_passwd.db

chmod 0600 /etc/postfix/sasl_passwd /etc/postfix/sasl_passwd.db

# Enable Postfix and restart it so the relay settings take effect

systemctl enable postfix

systemctl restart postfix

# Send a test message

echo "Test mail from postfix" | mail -s "Test Postfix" -r "i@sujx.net" sujx@live.cn

# Sample alert email

Wazuh Notification.

2021 Jul 03 23:21:09

Received From: (server002.sujx.net) any->syscheck

Rule: 550 fired (level 7) -> "Integrity checksum changed."

Portion of the log(s):

File '/etc/sysconfig/iptables.save' modified

Mode: scheduled

Changed attributes: mtime,md5,sha1,sha256

…………

--END OF NOTIFICATION

# Sample daily report

Report 'Daily report: Alerts with level higher than 13.' completed.

------------------------------------------------

->Processed alerts: 481384

->Post-filtering alerts: 1953

->First alert: 2021 Jun 29 00:06:08

->Last alert: 2021 Jun 29 23:59:17

Top entries for 'Level':

------------------------------------------------

Severity 13 |1953 |

Top entries for 'Group':

------------------------------------------------

gdpr_IV_35.7.d |1953 |

pci_dss_11.2.1 |1953 |

pci_dss_11.2.3 |1953 |

tsc_CC7.1 |1953 |

tsc_CC7.2 |1953 |

vulnerability-detector |1953 |

Top entries for 'Location':

……

- Generate a PDF vulnerability report with Kibana

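Production recommendations

- Wazuh's vulnerability detector can easily saturate the CPU, so deploy Wazuh in cluster mode with enough worker nodes to carry the scanning load.

- A 4-core/4 GB worker is a reasonable size: memory usage stays around 2 GB, but CPU usage is high and the detector does not scale well across cores. Wazuh's developers have said this will improve in later releases.

- If a full vulnerability report of the whole network is required every 24 hours, plan about one worker per 200 agents (200:1).

- Wazuh's multithreading and its worker management in cluster mode both still have problems, so for environments with more than 1,000 agents use high-spec physical machines.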
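The 200:1 rule of thumb translates into a ceiling division. The sketch below uses a hypothetical helper. The ratio is the field observation above, not an official Wazuh sizing figure.

```shell
#!/usr/bin/env bash
# Worker count for a daily full-network scan, using the 200:1 rule of
# thumb from the list above (an observation, not an official figure).
AGENTS_PER_WORKER=200

workers_needed() {
  local agents=$1
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (agents + AGENTS_PER_WORKER - 1) / AGENTS_PER_WORKER ))
}

workers_needed 1000   # prints 5
workers_needed 1001   # prints 6
```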

Use case

Using the operating system's official repository

- Install the database

SHELL

yum makecache

# The version shipped with the OS is 10

yum install -y postgresql postgresql-server

- Start the database

SHELL

# Initialize the database and start the service

postgresql-setup initdb

systemctl enable postgresql.service --now

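- Run vulnerability detection

Using the software vendor's repository

- Install the database

SHELL

# Install the official PostgreSQL (PGDG) repository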

yum install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm

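# Refresh the metadata; PostgreSQL 12 is used as the example

yum makecache

# On EL8, disable the built-in postgresql module so it does not hide the PGDG packages

yum -qy module disable postgresql

# Install the database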

yum install -y postgresql12 postgresql12-server

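- Start the database

SHELL

# Create the data directory

mkdir -p /var/lib/pgsql/12/data/

chown postgres:postgres /var/lib/pgsql/12/ -R

# Initialize the database and start the service (the PGDG setup script lives in /usr/pgsql-12/bin, which is not on PATH)

/usr/pgsql-12/bin/postgresql-12-setup initdb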

systemctl enable postgresql-12.service --now

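- Run vulnerability detection

- No vulnerabilities are reported

What goes wrong

Wazuh matches vulnerabilities by package name (or by KB number on Windows). On RPM-based distributions it reads the installed packages from the RPM database and compares their names with the names in the OVAL feed.

Take PostgreSQL as an example:

1. Red Hat's official OVAL feed

BASH

# Red Hat's OVAL feed does cover PostgreSQL 12, but under Red Hat's own package names (postgresql, postgresql-server, ...) in the postgresql:12 module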

<criterion comment="Module postgresql:12 is enabled" test_ref="oval:com.redhat.cve:tst:202120229037"/>

<criterion comment="postgresql-plperl is installed" test_ref="oval:com.redhat.cve:tst:202120229001"/>

<criterion comment="postgresql-plperl is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229002"/>

<criterion comment="postgresql-server-devel is installed" test_ref="oval:com.redhat.cve:tst:202120229007"/>

<criterion comment="postgresql-server-devel is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229008"/>

<criterion comment="postgresql-plpython3 is installed" test_ref="oval:com.redhat.cve:tst:202120229009"/>

<criterion comment="postgresql-plpython3 is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229010"/>

<criterion comment="postgresql is installed" test_ref="oval:com.redhat.cve:tst:202120229011"/>

<criterion comment="postgresql is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229012"/>

<criterion comment="postgresql-static is installed" test_ref="oval:com.redhat.cve:tst:202120229013"/>

<criterion comment="postgresql-static is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229014"/>

<criterion comment="postgresql-upgrade is installed" test_ref="oval:com.redhat.cve:tst:202120229015"/>

<criterion comment="postgresql-upgrade is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229016"/>

<criterion comment="postgresql-docs is installed" test_ref="oval:com.redhat.cve:tst:202120229017"/>

<criterion comment="postgresql-docs is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229018"/>

<criterion comment="postgresql-contrib is installed" test_ref="oval:com.redhat.cve:tst:202120229019"/>

<criterion comment="postgresql-contrib is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229020"/>

<criterion comment="postgresql-pltcl is installed" test_ref="oval:com.redhat.cve:tst:202120229023"/>

<criterion comment="postgresql-pltcl is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229024"/>

<criterion comment="postgresql-test-rpm-macros is installed" test_ref="oval:com.redhat.cve:tst:202120229025"/>

<criterion comment="postgresql-test-rpm-macros is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229026"/>

<criterion comment="postgresql-debugsource is installed" test_ref="oval:com.redhat.cve:tst:202120229029"/>

<criterion comment="postgresql-debugsource is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229030"/>

<criterion comment="postgresql-server is installed" test_ref="oval:com.redhat.cve:tst:202120229031"/>

<criterion comment="postgresql-server is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229032"/>

<criterion comment="postgresql-upgrade-devel is installed" test_ref="oval:com.redhat.cve:tst:202120229033"/>

<criterion comment="postgresql-upgrade-devel is signed with Red Hat redhatrelease2 key" test_ref="oval:com.redhat.cve:tst:202120229034"/>

2. Package names of the PGDG (upstream PostgreSQL) build

BASH

[sujx@postgresql ~]$ rpm -qa |grep postgresql

postgresql12-12.7-2PGDG.rhel8.x86_64

postgresql12-libs-12.7-2PGDG.rhel8.x86_64

postgresql12-server-12.7-2PGDG.rhel8.x86_64

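Conclusion

postgresql12 ≠ postgresql. With Red Hat's OVAL file, Wazuh therefore finds vulnerabilities in PostgreSQL packaged by Red Hat, but not in the same software installed from packages that use other names.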
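The mismatch can be reproduced without Wazuh. Reduce each installed RPM to its bare name and look it up among the names the OVAL criteria test. The name list in the sketch below is a subset copied from the criteria above. On a live host, `rpm -qa --qf '%{NAME}\n'` would give the bare names directly. `rpm_name` and `in_oval` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Show why Wazuh finds nothing: PGDG package names are not the names
# that Red Hat's OVAL feed tests for.
OVAL_NAMES=(postgresql postgresql-server postgresql-contrib postgresql-docs)

# Strip "-<version>-<release>.<arch>" from an NVRA string. RPM forbids
# "-" inside version and release, so the last two "-" fields can go.
rpm_name() {
  local nvra=${1%-*}   # drop "-<release>.<arch>"
  echo "${nvra%-*}"    # drop "-<version>"
}

in_oval() {
  local n
  for n in "${OVAL_NAMES[@]}"; do
    [ "$n" = "$1" ] && return 0
  done
  return 1
}

for pkg in postgresql12-12.7-2PGDG.rhel8.x86_64 \
           postgresql12-libs-12.7-2PGDG.rhel8.x86_64 \
           postgresql12-server-12.7-2PGDG.rhel8.x86_64; do
  name=$(rpm_name "$pkg")
  if in_oval "$name"; then
    echo "$name: tested by the OVAL feed"
  else
    echo "$name: not in the OVAL feed"
  fi
done
```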

Maintenance

Removing an agent

SHELL

cd /var/ossec/bin/

# Run the agent management tool

[root@vlnx101057 bin]# ./manage_agents

****************************************

* Wazuh v4.1.5 Agent manager. *

* The following options are available: *

****************************************

(A)dd an agent (A).   <- add an agent manually

(E)xtract key for an agent (E).   <- export an agent's key

(L)ist already added agents (L).   <- list registered agents

(R)emove an agent (R).   <- remove an agent

(Q)uit.   <- quit

Choose your action: A,E,L,R or Q:R   <- enter R to remove an agent

Provide the ID of the agent to be removed (or '\q' to quit): 180   <- enter the agent ID

Confirm deleting it?(y/n):   <- answer y to confirm

Agent '180' removed.   <- removal complete

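Offline agent registration

Wazuh-Manager and Wazuh-Agent authenticate over port 1515/tcp, somewhat like a CA issuing certificates. The manager holds its own key, and an agent registers by submitting its hostname and IP address, receiving an ID and a key in return. After registration the two no longer use port 1515 for authentication; all encrypted communication goes over port 1514/tcp.

When port 1515/tcp cannot be opened, or in other special cases, the agent must be registered offline. The general steps are:

- On the manager, register the agent's hostname and IP address and note the ID it is assigned;

- Export the agent's registration key;

- Import that key on the agent;

- Restart the agent service.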

SHELL

# On the manager

/var/ossec/bin/manage_agents -a <agent_IP> -n <agent_name>

/var/ossec/bin/manage_agents -l | grep <agent_name>

ID: 001, Name: agent_1, IP: any

/var/ossec/bin/manage_agents -e <agent_id>

Agent key information for '001' is:

MDAxIDE4NWVlNjE1Y2YzYiBhbnkgMGNmMDFiYTM3NmMxY2JjNjU0NDAwYmFhZDY1ZWU1YjcyMGI2NDY3ODhkNGQzMjM5ZTdlNGVmNzQzMGFjMDA4Nw==

# On the agent

/var/ossec/bin/manage_agents -i <key>

# Set the manager's IP address on the agent

vim /var/ossec/etc/ossec.conf

<client>

<server>

<address>MANAGER_IP</address>

...

</server>

</client>

systemctl restart wazuh-agent
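The key exported with `manage_agents -e` is not opaque. Decoding the sample above shows it is the base64 text of one line of the form `<ID> <name> <IP> <secret>`, which as far as we can tell is the same layout as the manager's `client.keys`. The sketch below inspects a key before importing it. It assumes GNU coreutils `base64`, and `decode_agent_key` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Decode an agent key exported by "manage_agents -e" (GNU base64 assumed).
decode_agent_key() {
  printf '%s' "$1" | base64 -d
  echo   # end the decoded line with a newline for readable output
}

key='MDAxIDE4NWVlNjE1Y2YzYiBhbnkgMGNmMDFiYTM3NmMxY2JjNjU0NDAwYmFhZDY1ZWU1YjcyMGI2NDY3ODhkNGQzMjM5ZTdlNGVmNzQzMGFjMDA4Nw=='

# Print only the ID and IP fields; the secret is left out on purpose
decode_agent_key "$key" | awk '{print "id=" $1, "ip=" $3}'   # prints: id=001 ip=any
```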

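Other notes

By default, the Wazuh manager allows open registration: any host that knows the manager's IP can register. It also supports authentication by password, by SSL certificate, through the API, and by host environment (restricting registration by hostname and host group).

Only password authentication is covered below: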

SHELL

# Enable password authentication on the manager

# vim /var/ossec/etc/ossec.conf

<auth>

...

<use_password>yes</use_password>

...

</auth>

# Option 1: use a random password; after the restart, read it from the log

systemctl restart wazuh-manager

grep "Random password" /var/ossec/logs/ossec.log

# Option 2: set your own password

# Without this file, Wazuh generates a random password instead

echo "<custom_password>" > /var/ossec/etc/authd.pass

systemctl restart wazuh-manager

# On the agent, register using the password

/var/ossec/bin/agent-auth -m <manager_IP> -P "<custom_password>"

systemctl restart wazuh-agent

# Alternatively, put the password in the agent's authd.pass file, which agent-auth reads by default

echo "<custom_password>" > /var/ossec/etc/authd.pass

/var/ossec/bin/agent-auth -m <manager_IP>

systemctl restart wazuh-agent

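System tuning

Wazuh itself needs few resources, but over long periods of operation it still runs into various resource shortages, so some optimization and tuning is needed.

Tuning Elasticsearch

Enable memory locking

SHELL

# Lock the Elasticsearch process memory in RAM so it is never swapped out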

cat >> /etc/elasticsearch/elasticsearch.yml <<EOF

bootstrap.memory_lock: true

EOF

Set system resource limits

SHELL

# systemd drop-in for the Elasticsearch service

mkdir -p /etc/systemd/system/elasticsearch.service.d/

cat > /etc/systemd/system/elasticsearch.service.d/elasticsearch.conf << EOF

[Service]

LimitMEMLOCK=infinity

EOF

Adjust user limits (limits.conf)

SHELL

cat >> /etc/security/limits.conf <<EOF

elasticsearch soft memlock unlimited

elasticsearch hard memlock unlimited

EOF

Tune JVM options

SHELL

# Set Xms and Xmx to half of total RAM, keeping the heap at no more than 50% of available memory and under 32 GB

# This host has 8 GB of RAM in total

cat >> /etc/elasticsearch/jvm.options <<EOF

-Xms4g

-Xmx4g

EOF
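The sizing rule in the comment above (half of RAM, below 32 GB) can be written as a helper. In the sketch below, the 30 GB cap is a conservative assumption chosen to stay clearly under the 32 GB limit, not an official figure. `heap_gb` is hypothetical.

```shell
#!/usr/bin/env bash
# Heap size (GB) for -Xms/-Xmx: half of total RAM, capped below 32 GB.
# HEAP_CAP_GB=30 is a conservative assumption, not an official figure.
HEAP_CAP_GB=30

heap_gb() {
  local total_gb=$1
  local half=$(( total_gb / 2 ))
  if (( half > HEAP_CAP_GB )); then half=$HEAP_CAP_GB; fi
  if (( half < 1 )); then half=1; fi   # never go below 1 GB
  echo "$half"
}

# The 8 GB host above gets a 4 GB heap
heap=$(heap_gb 8)
printf -- '-Xms%sg\n-Xmx%sg\n' "$heap" "$heap"   # prints -Xms4g and -Xmx4g
```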

Restart the service

SHELL

systemctl daemon-reload

systemctl restart elasticsearch

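Set shard and replica counts

Single-node Elasticsearch for Wazuh

SHELL

# Download the Wazuh Elasticsearch template

curl https://raw.githubusercontent.com/wazuh/wazuh/v4.1.5/extensions/elasticsearch/7.x/wazuh-template.json -o w-elastic-template.json

# Edit it for this environment: 1 primary shard and 0 replicas (a single node cannot host replicas)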

{

"order": 1,

"index_patterns": ["wazuh-alerts-4.x-*"],

"settings": {

"index.refresh_interval": "5s",

"index.number_of_shards": "1",

"index.number_of_replicas": "0",

"index.auto_expand_replicas": "0-1",

"index.mapping.total_fields.limit": 2000

},

"mappings": {

"...": "..."

}

}

# Apply the template

curl -X PUT "http://localhost:9200/_template/wazuh-custom" -H 'Content-Type: application/json' -d @w-elastic-template.json

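Raise the per-node shard limit

SHELL

# Persistently raise cluster.max_shards_per_node to 20000 (the Elasticsearch 7.x default is 1000)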

curl -XPUT "127.0.0.1:9200/_cluster/settings" -H 'Content-Type: application/json' -d '{"persistent":{"cluster":{"max_shards_per_node":20000}}}'
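Wazuh creates one `wazuh-alerts-4.x-*` index per day, so the shard count grows with retention until it reaches the per-node limit. The back-of-the-envelope sketch below estimates shards per node. It counts only the alerts indices and assumes shards are spread evenly across data nodes. `shards_per_node` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Estimate shards per data node for daily alert indices:
#   days * primaries * (1 + replicas) / nodes, rounded up.
shards_per_node() {
  local days=$1 primaries=$2 replicas=$3 nodes=$4
  local total=$(( days * primaries * (1 + replicas) ))
  echo $(( (total + nodes - 1) / nodes ))
}

# One year, 3 primaries, 1 replica, 3 data nodes
shards_per_node 365 3 1 3   # prints 730
# One year on the single-node template above (1 primary, 0 replicas)
shards_per_node 365 1 0 1   # prints 365
```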

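Delete expired indices

SHELL

# Delete all daily alert indices from June 2021 (the URL is quoted so the shell does not expand the *)

curl -XDELETE 'http://127.0.0.1:9200/wazuh-alerts-4.x-2021.06.*'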
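Instead of deleting a whole month by hand, a retention job can compute the name of the daily index that has just aged out. The sketch below assumes GNU `date` and the `wazuh-alerts-4.x-YYYY.MM.DD` naming shown above. The 90-day retention in the usage line is only an example, and `index_days_ago` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Name of the daily alerts index from DAYS days before REF (YYYY-MM-DD).
# -u avoids daylight-saving surprises when subtracting whole days.
index_days_ago() {
  local ref=$1 days=$2
  echo "wazuh-alerts-4.x-$(date -u -d "$ref $days days ago" +%Y.%m.%d)"
}

# Usage: delete the index that is exactly 90 days old today
#   curl -XDELETE "http://127.0.0.1:9200/$(index_days_ago "$(date -u +%F)" 90)"
```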

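Hardware tuning

Use SSDs

Add more CPU and memory

Cluster deployment

In medium and large networks, neither an all-in-one Wazuh server nor a distributed deployment with a single node per role can keep up with log analysis and vulnerability scanning. Use a highly available, multi-node distributed deployment instead to meet Wazuh's CPU and storage requirements.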

| No. | Host | Spec (vCPU/RAM) | IP address | Role |
|---|---|---|---|---|
| 1 | Lvsnode1 | 1c/1g | 192.168.79.51 | LVS + Keepalived, providing the VIP and load balancing |
| 2 | Lvsnode2 | 1c/1g | 192.168.79.52 | LVS + Keepalived, providing the VIP and load balancing |
| 3 | Wazuhnode0 | 2c/2g | 192.168.79.60 | Wazuh master node: agent registration and CVE databases |
| 4 | Wazuhnode1 | 1c/1g | 192.168.79.61 | Wazuh worker: event log analysis and vulnerability scanning |
| 5 | Wazuhnode2 | 1c/1g | 192.168.79.62 | Wazuh worker: event log analysis and vulnerability scanning |
| 6 | KibanaNode | 2c/4g | 192.168.79.80 | Kibana dashboard node |
| 7 | ElasticNode1 | 4c/4g | 192.168.79.81 | Elasticsearch cluster node |
| 8 | ElasticNode2 | 4c/4g | 192.168.79.82 | Elasticsearch cluster node |
| 9 | ElasticNode3 | 4c/4g | 192.168.79.83 | Elasticsearch cluster node |
| 10 | UbuntuNode | 1c/1g | 192.168.79.127 | Ubuntu 20.04 LTS test host + WordPress |
| 11 | CentOSNode | 1c/1g | 192.168.79.128 | CentOS 8.4 test host + PostgreSQL |
| 12 | WindowsNode | 2c/2g | 192.168.79.129 | Windows Server 2012 R2 test host + SQL Server |
| 13 | VIP | - | 192.168.79.50 | Front-end access IP |
| 14 | Gateway | 1c/1g | 192.168.79.254 | iKuai router providing the gateway and external DNS |

Back-end storage cluster

- Three-node Elasticsearch deployment

CODE

# Install prerequisites

yum install -y zip unzip curl

# Import the signing key

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# Add the official repository

cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

# Install the packages

yum makecache

yum upgrade -y

yum install -y elasticsearch-7.11.2

# Back up the default configuration

cp -a /etc/elasticsearch/elasticsearch.yml{,_$(date +%F)}

# Write the configuration on each node in turn (values shown are for elasticnode1)

cat > /etc/elasticsearch/elasticsearch.yml << EOF

network.host: 192.168.79.81

node.name: elasticnode1

cluster.name: elastic

cluster.initial_master_nodes:

- elasticnode1

- elasticnode2

- elasticnode3

discovery.seed_hosts:

- 192.168.79.81

- 192.168.79.82

- 192.168.79.83

EOF

# Open the firewall

firewall-cmd --permanent --add-service=elasticsearch

firewall-cmd --reload

# Start the service

systemctl daemon-reload

systemctl enable elasticsearch

systemctl start elasticsearch

# Disable the repository to prevent uncontrolled upgrades

sed -i "s/^enabled=1/enabled=0/" /etc/yum.repos.d/elastic.repo

# Repeat on every node, changing the node name and IP address

- Verify the Elasticsearch cluster

CODE

sujx@LEGION:~$ curl http://192.168.79.81:9200/_cluster/health?pretty

{

"cluster_name" : "elastic",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

sujx@LEGION:~$ curl http://192.168.79.81:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.79.83 10 86 0 0.08 0.08 0.03 cdhilmrstw - elasticnode3

192.168.79.82 18 97 0 0.01 0.12 0.08 cdhilmrstw * elasticnode2

192.168.79.81 16 95 0 0.06 0.08 0.08 cdhilmrstw - elasticnode1

Processing cluster (Wazuh managers)

1. Deploying the Wazuh master

CODE

```

# Install prerequisites

yum install -y zip unzip curl

# Import the signing keys

rpm --import https://packages.wazuh.com/key/GPG-KEY-WAZUH

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# Configure the official repositories (EOF is quoted so $releasever is written literally for yum)

cat > /etc/yum.repos.d/wazuh.repo << 'EOF'

[wazuh]

gpgcheck=1

gpgkey=https://packages.wazuh.com/key/GPG-KEY-WAZUH

enabled=1

name=EL-$releasever - Wazuh

baseurl=https://packages.wazuh.com/4.x/yum/

protect=1

EOF

cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

# Install the packages

yum makecache

yum upgrade -y

yum install -y wazuh-manager

yum install -y filebeat-7.11.2

# Configure Filebeat

cp -a /etc/filebeat/filebeat.yml{,_$(date +%F)}

cat > /etc/filebeat/filebeat.yml<<EOF
filebeat.modules:
  - module: wazuh
    alerts:
      enabled: true
    archives:
      enabled: false

setup.template.json.enabled: true
setup.template.json.path: '/etc/filebeat/wazuh-template.json'
setup.template.json.name: 'wazuh'
setup.template.overwrite: true
setup.ilm.enabled: false

output.elasticsearch.hosts: ['http://192.168.79.81:9200','http://192.168.79.82:9200','http://192.168.79.83:9200']
EOF

# Download the Wazuh index template for Filebeat

curl -so /etc/filebeat/wazuh-template.json https://raw.githubusercontent.com/wazuh/wazuh/4.1/extensions/elasticsearch/7.x/wazuh-template.json

chmod go+r /etc/filebeat/wazuh-template.json

# Install the Wazuh module for Filebeat

curl -s https://packages.wazuh.com/4.x/filebeat/wazuh-filebeat-0.1.tar.gz | tar -xvz -C /usr/share/filebeat/module

# Firewall rules: 1514 agent traffic, 1515 agent registration, 1516 cluster, 55000 API

firewall-cmd --permanent --add-port={1514/tcp,1515/tcp,1516/tcp,55000/tcp}

firewall-cmd --reload

# Disable the repositories to prevent uncontrolled upgrades

sed -i "s/^enabled=1/enabled=0/" /etc/yum.repos.d/elastic.repo

sed -i "s/^enabled=1/enabled=0/" /etc/yum.repos.d/wazuh.repo

# Start the services

systemctl daemon-reload

systemctl enable --now wazuh-manager

systemctl enable --now filebeat

# Test Filebeat's connection to Elasticsearch

[root@WazuhNode0 wazuh]# filebeat test output

elasticsearch: http://192.168.79.81:9200...

parse url... OK

connection...

parse host... OK

dns lookup... OK

addresses: 192.168.79.81

dial up... OK

TLS... WARN secure connection disabled

talk to server... OK

version: 7.11.2

elasticsearch: http://192.168.79.82:9200...

parse url... OK

connection...

parse host... OK

dns lookup... OK

addresses: 192.168.79.82

dial up... OK

TLS... WARN secure connection disabled

talk to server... OK

version: 7.11.2

elasticsearch: http://192.168.79.83:9200...

parse url... OK

connection...

parse host... OK

dns lookup... OK

addresses: 192.168.79.83

dial up... OK

TLS... WARN secure connection disabled

talk to server... OK

version: 7.11.2

```

2. Wazuh worker的部署

CODE

```

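# Same as the master: install prerequisites, import the keys and configure the repositories first

# Install the packages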

yum install -y wazuh-manager

yum install -y filebeat-7.11.2

# Configure Filebeat

cp -a /etc/filebeat/filebeat.yml{,_$(date +%F)}

cat > /etc/filebeat/filebeat.yml<<EOF
filebeat.modules:
  - module: wazuh
    alerts:
      enabled: true
    archives:
      enabled: false

setup.template.json.enabled: true
setup.template.json.path: '/etc/filebeat/wazuh-template.json'
setup.template.json.name: 'wazuh'
setup.template.overwrite: true
setup.ilm.enabled: false

output.elasticsearch.hosts: ['http://192.168.79.81:9200','http://192.168.79.82:9200','http://192.168.79.83:9200']
EOF

# Download the Wazuh index template for Filebeat

curl -so /etc/filebeat/wazuh-template.json https://raw.githubusercontent.com/wazuh/wazuh/4.1/extensions/elasticsearch/7.x/wazuh-template.json

chmod go+r /etc/filebeat/wazuh-template.json

# Install the Wazuh module for Filebeat

curl -s https://packages.wazuh.com/4.x/filebeat/wazuh-filebeat-0.1.tar.gz | tar -xvz -C /usr/share/filebeat/module

# Firewall rules: 1514 agent traffic, 1516 cluster

firewall-cmd --permanent --add-port={1514/tcp,1516/tcp}

firewall-cmd --reload

# Start the services

systemctl daemon-reload

systemctl enable --now wazuh-manager

systemctl enable --now filebeat

```

3. 实现wazuh群集

CODE

```

# Cluster authentication

# Master node

# Generate a random key (32 hex characters)

openssl rand -hex 16

d84691d111f86e70e8ed7eff80cde39e

# Edit the <cluster> section of ossec.conf

<cluster>

<name>wazuh</name>

<node_name>wazuhnode0</node_name>

<node_type>master</node_type>

<key>d84691d111f86e70e8ed7eff80cde39e</key>

<port>1516</port>

<bind_addr>0.0.0.0</bind_addr>

<nodes>

<node>192.168.79.60</node>

</nodes>

<hidden>no</hidden>

<disabled>no</disabled>

</cluster>

# Worker nodes (give each worker its own node_name)

# Edit the <cluster> section of ossec.conf

<cluster>

<name>wazuh</name>

<node_name>wazuhnode1</node_name>

<node_type>worker</node_type>

<key>d84691d111f86e70e8ed7eff80cde39e</key>

<port>1516</port>

<bind_addr>0.0.0.0</bind_addr>

<nodes>

<node>192.168.79.60</node>

</nodes>

<hidden>no</hidden>

<disabled>no</disabled>

</cluster>

# Verify

[root@WazuhNode0 bin]# ./cluster_control -l

NAME TYPE VERSION ADDRESS

wazuhnode0 master 4.1.5 192.168.79.60

wazuhnode1 worker 4.1.5 192.168.79.61

wauzhnode2 worker 4.1.5 192.168.79.62

```
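Wazuh requires the cluster `<key>` to be 32 characters long and identical on every node. `openssl rand -hex 16` meets that requirement, because 16 random bytes print as 32 hex digits. The sketch below generates and checks a key before it is copied to the workers. Its hex-only check is an assumption, stricter than Wazuh itself requires, that suits keys produced this way. Both helpers are hypothetical.

```shell
#!/usr/bin/env bash
# Generate and validate a Wazuh cluster key: 32 characters, same on all
# nodes. The lowercase-hex check matches keys from "openssl rand -hex".
new_cluster_key() {
  openssl rand -hex 16
}

valid_cluster_key() {
  [[ $1 =~ ^[0-9a-f]{32}$ ]]
}

key=$(new_cluster_key)
if valid_cluster_key "$key"; then
  echo "cluster key: $key"
else
  echo "could not generate a valid key" >&2
fi
```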

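Front-end cluster

- The front end uses Keepalived with Nginx as a TCP proxy, exposing a single VIP that Wazuh agents use for registration and reporting.

- Deploy the Nginx TCP proxy nodes

BASH

# Deploy the first node (node 1)

# Open the firewall ports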

firewall-cmd --permanent --add-port={1514/tcp,1515/tcp}

firewall-cmd --reload

# Add the official Nginx repository

cat > /etc/yum.repos.d/nginx.repo <<\EOF

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=0

enabled=1

EOF

# Install Nginx

yum makecache

yum install -y nginx

systemctl daemon-reload

systemctl enable nginx.service --now

# Configure the stream (TCP proxy) module

cd /etc/nginx

cp -a nginx.conf{,_$(date +%F)}

cat >> /etc/nginx/nginx.conf <<EOF

include /etc/nginx/stream.d/*.conf;

EOF

mkdir ./stream.d

touch /etc/nginx/stream.d/wazuh.conf

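# Quote EOF so the shell leaves $remote_addr for Nginx instead of expanding it to an empty string

cat > /etc/nginx/stream.d/wazuh.conf <<'EOF'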

stream {

upstream cluster {

hash $remote_addr consistent;

server 192.168.79.61:1514;

server 192.168.79.62:1514;

}

upstream master {

server 192.168.79.60:1515;

}

server {

listen 1514;

proxy_pass cluster;

}

server {

listen 1515;

proxy_pass master;

}

}

EOF

# Restart Nginx

systemctl restart nginx

# Check the listening ports

[root@lvsnode1 nginx]# netstat -tlnp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:1514 0.0.0.0:* LISTEN 1897/nginx: master

tcp 0 0 0.0.0.0:1515 0.0.0.0:* LISTEN 1897/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1897/nginx: master

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1022/sshd

tcp6 0 0 :::80 :::* LISTEN 1897/nginx: master

tcp6 0 0 :::22 :::* LISTEN 1022/sshd

# Install Keepalived

yum install -y keepalived

cd /etc/keepalived/

cp -a keepalived.conf{,_$(date +%F)}

# Configure it (this is node 1; use a different router_id on node 2)

cat > keepalived.conf<<EOF

# Configuration File for keepalived

#

global_defs {

router_id nginxnode1

vrrp_mcast_group4 224.0.0.18

lvs_timeouts tcp 900 tcpfin 30 udp 300

lvs_sync_daemon ens160 route_lvs

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance route_lvs {

state BACKUP

priority 100

virtual_router_id 18

interface ens160

track_interface {

ens160

}

advert_int 3

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.79.50/24 dev ens160 label ens160:0

}

}

EOF

systemctl enable keepalived.service --now

- Verify the service

SHELL

sujx@LEGION:~$ ping 192.168.79.50

PING 192.168.79.50 (192.168.79.50) 56(84) bytes of data.

64 bytes from 192.168.79.50: icmp_seq=1 ttl=64 time=0.330 ms

64 bytes from 192.168.79.50: icmp_seq=2 ttl=64 time=0.306 ms

--- 192.168.79.50 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2002ms

rtt min/avg/max/mdev = 0.306/0.430/0.655/0.159 ms

sujx@LEGION:~$ telnet 192.168.79.50 1515

Trying 192.168.79.140...

Connected to 192.168.79.140.

Escape character is '^]'.

sujx@LEGION:~$ telnet 192.168.79.50 1514

Trying 192.168.79.140...

Connected to 192.168.79.140.

Escape character is '^]'.
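Where telnet is not installed, the same reachability check can be scripted with bash's built-in `/dev/tcp/<host>/<port>` redirection (a bash feature, not a real device) plus coreutils `timeout`. In the sketch below, `port_open` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
port_open() {
  local host=$1 port=$2
  timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Usage against the VIP:
#   for port in 1514 1515; do
#     if port_open 192.168.79.50 "$port"; then echo "$port open"; else echo "$port closed"; fi
#   done
```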

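Dashboard access

- Deploy an Elasticsearch coordinating-only node

SHELL

# When the Elasticsearch cluster has several nodes, the simplest way to spread Kibana's requests across them is to run a coordinating-only Elasticsearch node on the Kibana host. A coordinating node is essentially a smart load balancer that is also a member of the cluster: it accepts incoming HTTP requests, forwards operations to other nodes as needed, and gathers and returns the results

# Install Elasticsearch on the Kibana node

# Install prerequisites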

yum install -y zip unzip curl

# Import the repository signing key

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# Add the official repository

cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

# Install the packages

yum makecache

yum upgrade -y

yum install -y elasticsearch-7.11.2

# Open the firewall

firewall-cmd --permanent --add-service=http

firewall-cmd --permanent --add-service=elasticsearch

firewall-cmd --reload

# Configure the node

# Also add this node's IP to discovery.seed_hosts on the other ES nodes and restart them

cat >> /etc/elasticsearch/elasticsearch.yml<<EOF

node.name: kibananode0

cluster.name: elastic

node.master: false

node.data: false

node.ingest: false

network.host: localhost

http.port: 9200

transport.host: 192.168.79.80

transport.tcp.port: 9300

discovery.seed_hosts:

- 192.168.79.81

- 192.168.79.82

- 192.168.79.83

- 192.168.79.80

EOF

# Start the coordinating node

systemctl daemon-reload

systemctl enable --now elasticsearch

# Check cluster membership; HTTP listens on localhost only, so only the local Kibana can reach it

[root@kibana wazuh]# curl http://localhost:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.79.81 18 96 0 0.04 0.06 0.02 cdhilmrstw - elasticnode1

192.168.79.80 12 97 3 0.01 0.08 0.07 lr - kibananode0

192.168.79.82 23 96 0 0.04 0.09 0.04 cdhilmrstw * elasticnode2

192.168.79.83 23 87 0 0.09 0.11 0.05 cdhilmrstw - elasticnode3

- Configure Kibana

SHELL

yum install -y kibana-7.11.2

# Edit the configuration file

cp -a /etc/kibana/kibana.yml{,_$(date +%F)}

cat >> /etc/kibana/kibana.yml << EOF

server.port: 5601

server.host: "localhost"

server.name: "kibana"

i18n.locale: "zh-CN"

elasticsearch.hosts: ["http://localhost:9200"]

kibana.index: ".kibana"

kibana.defaultAppId: "home"

server.defaultRoute : "/app/wazuh"

EOF

# Create the data directory

mkdir /usr/share/kibana/data

chown -R kibana:kibana /usr/share/kibana

# Install the Wazuh plugin offline from a local file

wget https://packages.wazuh.com/4.x/ui/kibana/wazuh_kibana-4.1.5_7.11.2-1.zip

cp ./wazuh_kibana-4.1.5_7.11.2-1.zip /tmp

cd /usr/share/kibana

sudo -u kibana /usr/share/kibana/bin/kibana-plugin install file:///tmp/wazuh_kibana-4.1.5_7.11.2-1.zip

# Enable and start the service

systemctl daemon-reload

systemctl enable kibana

systemctl start kibana

# Disable the repository to prevent uncontrolled upgrades

sed -i "s/^enabled=1/enabled=0/" /etc/yum.repos.d/elastic.repo

# Set up Nginx as a reverse proxy

yum install -y nginx

systemctl enable --now nginx

vim /etc/nginx/nginx.conf

# Add the following inside the server {} block

```

proxy_redirect off;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

location / {

proxy_pass http://localhost:5601/;

}

```

nginx -s reload

# Log in to Kibana and open the Wazuh plugin

# Back on the console, point the plugin's API host at the Wazuh master (192.168.79.60)

sed -i "s/localhost/192.168.79.60/g" /usr/share/kibana/data/wazuh/config/wazuh.yml

Agent verification

- Deploy the Wazuh agent

SHELL

# CentOS host

sudo WAZUH_MANAGER='192.168.79.50' WAZUH_AGENT_GROUP='default' yum install https://packages.wazuh.com/4.x/yum/wazuh-agent-4.1.5-1.x86_64.rpm -y

# Ubuntu host

还有兄弟不知道网络安全面试可以提前刷题吗?费时一周整理的160+网络安全面试题,金九银十,做网络安全面试里的显眼包!

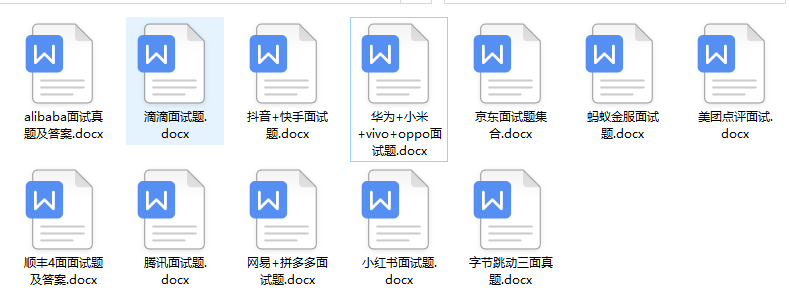

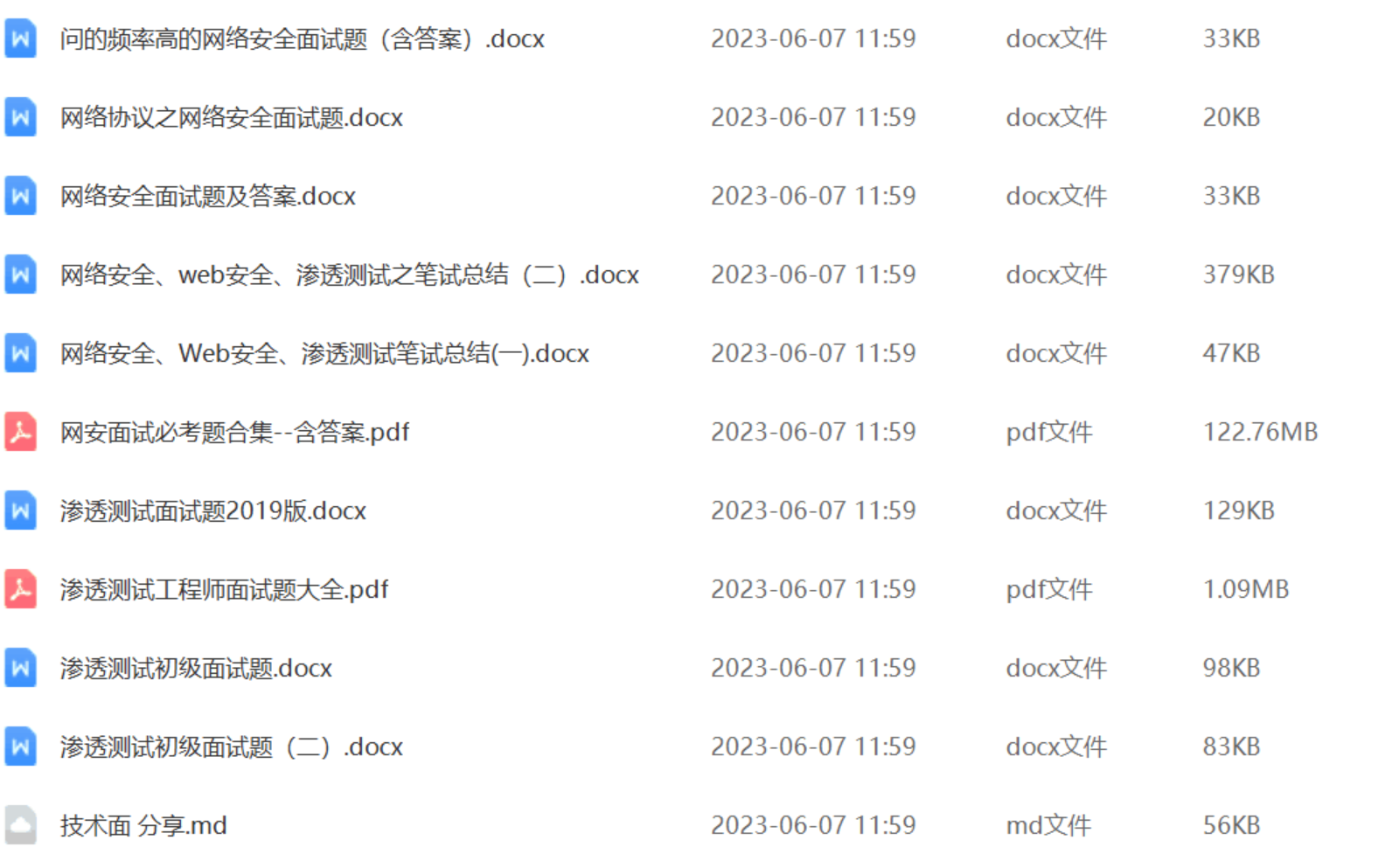

王岚嵚工程师面试题(附答案),只能帮兄弟们到这儿了!如果你能答对70%,找一个安全工作,问题不大。

对于有1-3年工作经验,想要跳槽的朋友来说,也是很好的温习资料!

【完整版领取方式在文末!!】

***93道网络安全面试题***

内容实在太多,不一一截图了

### 黑客学习资源推荐

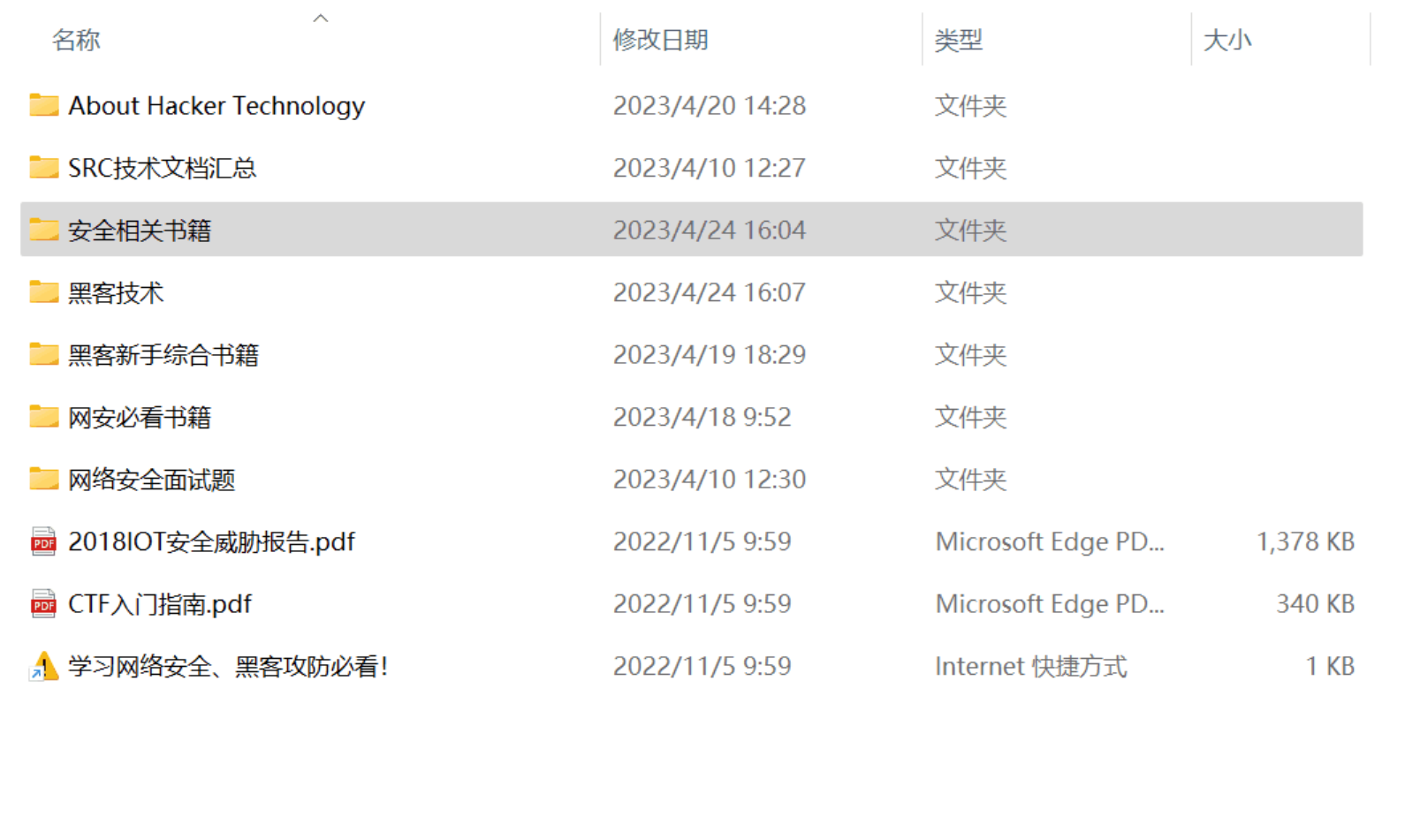

最后给大家分享一份全套的网络安全学习资料,给那些想学习 网络安全的小伙伴们一点帮助!

对于从来没有接触过网络安全的同学,我们帮你准备了详细的学习成长路线图。可以说是最科学最系统的学习路线,大家跟着这个大的方向学习准没问题。

😝朋友们如果有需要的话,可以联系领取~

#### 1️⃣零基础入门

##### ① 学习路线

对于从来没有接触过网络安全的同学,我们帮你准备了详细的**学习成长路线图**。可以说是**最科学最系统的学习路线**,大家跟着这个大的方向学习准没问题。

##### ② 路线对应学习视频

同时每个成长路线对应的板块都有配套的视频提供:

#### 2️⃣视频配套工具&国内外网安书籍、文档

##### ① 工具

##### ② 视频

##### ③ 书籍

资源较为敏感,未展示全面,需要的最下面获取

##### ② 简历模板

**因篇幅有限,资料较为敏感仅展示部分资料,添加上方即可获取👆**

**网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。**

**需要这份系统化的资料的朋友,可以添加V获取:vip204888 (备注网络安全)**

**一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**

准备了详细的**学习成长路线图**。可以说是**最科学最系统的学习路线**,大家跟着这个大的方向学习准没问题。

##### ② 路线对应学习视频

同时每个成长路线对应的板块都有配套的视频提供:

#### 2️⃣视频配套工具&国内外网安书籍、文档

##### ① 工具

##### ② 视频

##### ③ 书籍

资源较为敏感,未展示全面,需要的最下面获取

##### ② 简历模板

**因篇幅有限,资料较为敏感仅展示部分资料,添加上方即可获取👆**

**网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。**

**需要这份系统化的资料的朋友,可以添加V获取:vip204888 (备注网络安全)**

[外链图片转存中...(img-gzDvt2s9-1713184631984)]

**一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?