Content is still being added......


Preparation:

1. Prepare one host as the Harbor image registry

2. Create three new hosts that connect to this registry

Lab environment:

| Hostname | IP |
| --- | --- |
| k8s-master | 172.25.254.100 |
| k8s-node1 | 172.25.254.10 |
| k8s-node2 | 172.25.254.20 |
| docker-harbor (reg.gaoyingjie.org) | 172.25.254.250 |

Steps:

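(1) Configure name resolution on all three hosts

vim /etc/hosts

Content:

172.25.254.100 k8s-master

172.25.254.10 k8s-node1

172.25.254.20 k8s-node2

172.25.254.250 reg.gaoyingjie.org

(2) Check the software repositories on all three hosts

(3) Install Docker on all three hosts

(4) Copy the registry's certificate from the harbor host to all three hosts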

[root@k8s-master docker]# mkdir -p /etc/docker/certs.d/reg.gaoyingjie.org

[root@docker-harbor ~]# scp /data/certs/gaoyingjie.org.crt root@172.25.254.100:/etc/docker/certs.d/reg.gaoyingjie.org/ca.crt

(5) Configure the registry mirror

[root@k8s-master reg.gaoyingjie.org]# vim /etc/docker/daemon.json

Content:

{ "registry-mirrors":["https://reg.gaoyingjie.org"] }

(6) Copy files to the other nodes

Copy the RPM packages and the /etc/docker directory from host 172.25.254.100 to hosts 172.25.254.10 and 172.25.254.20, and install the packages there:

[root@k8s-master ~]# scp *.rpm root@172.25.254.10:/root

[root@k8s-master ~]# scp *.rpm root@172.25.254.20:/root

[root@k8s-node1 ~]# dnf install *.rpm -y

[root@k8s-node2 ~]# dnf install *.rpm -y

[root@k8s-master ~]# scp -r /etc/docker/ root@172.25.254.10:/etc/

[root@k8s-master ~]# scp -r /etc/docker/ root@172.25.254.20:/etc/

(7) Log in

All three hosts can now log in to the registry reg.gaoyingjie.org:

docker login reg.gaoyingjie.org

I. Deploying k8s

1.1. Cluster environment initialization

1.1.1. Disable swap on all hosts

[root@k8s- ~]# systemctl mask dev-nvme0n1p3.swap

[root@k8s- ~]# swapoff -a

[root@k8s- ~]# systemctl status dev-nvme0n1p3.swap

[root@k8s- ~]# vim /etc/fstab

Content:

Comment out the swap entry

1.1.2. Install the k8s deployment tools

[root@k8s-master ~]# dnf install kubelet-1.30.0 kubeadm-1.30.0 kubectl-1.30.0 -y

#Enable kubectl command auto-completion

[root@k8s-master ~]# dnf install bash-completion -y

[root@k8s-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@k8s-master ~]# source ~/.bashrc

1.1.2. Install cri-docker on all nodes

[root@k8s-master ~]# dnf install libcgroup-0.41-19.el8.x86_64.rpm \

> cri-dockerd-0.3.14-3.el8.x86_64.rpm -y

[root@k8s-master ~]# vim /lib/systemd/system/cri-docker.service

Edit the following:

#Specify the network plugin and the base (pause) container image

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=reg.gaoyingjie.org/k8s/pause:3.9

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl start cri-docker

[root@k8s-master ~]# ll /var/run/cri-dockerd.sock

Copy this unit file to the other two hosts:

[root@k8s-master ~]# scp /lib/systemd/system/cri-docker.service root@172.25.254.10:/lib/systemd/system/cri-docker.service

[root@k8s-master ~]# scp /lib/systemd/system/cri-docker.service root@172.25.254.20:/lib/systemd/system/cri-docker.service

1.1.3. Pull the images required by K8S on the master node

#Pull the images the k8s cluster needs

[root@k8s-master ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.30.0 --cri-socket=unix:///var/run/cri-dockerd.sock

#Tag the images and push them to the harbor registry

[root@k8s-master ~]# docker images | awk '/google/{ print $1":"$2}' | awk -F "/" '{system("docker tag "$0" reg.gaoyingjie.org/k8s/"$3)}'

[root@k8s-master ~]# docker images | awk '/k8s/{system("docker push "$1":"$2)}'

1.1.4. Cluster initialization

[root@k8s-master ~]# systemctl enable --now kubelet.service

[root@k8s-master ~]# systemctl status kubelet.service

#Run the initialization command

[root@k8s-master ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 \

--image-repository reg.gaoyingjie.org/k8s \

--kubernetes-version v1.30.0 \

--cri-socket=unix:///var/run/cri-dockerd.sock

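#Set the variable that points kubectl at the cluster config file, and load it into the current shell

[root@k8s-master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@k8s-master ~]# source ~/.bash_profile

#The node is not Ready yet because no network plugin is installed, so some containers are not running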

[root@k8s-master ~]# kubectl get node

[root@k8s-master ~]# kubectl get pod -A

1.1.5. Join the other two hosts to the cluster

If you lose the join token generated at this stage, you can generate a new join command:

[root@k8s-master ~]# kubeadm token create --print-join-command

kubeadm join 172.25.254.100:6443 --token 5hwptm.zwn7epa6pvatbpwf --discovery-token-ca-cert-hash

[root@k8s-node1 ~]# kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

[root@k8s-node1 ~]# kubeadm join 172.25.254.100:6443 --token baujlw.w4xhwevafprh8uk9 --discovery-token-ca-cert-hash sha256:f05eb014ffdee15265806a1bc7a54270d8b28cccf90b88cb2b2910fe3aaab05f --cri-socket=unix:///var/run/cri-dockerd.sock

[root@k8s-node2 ~]# kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

[root@k8s-node2 ~]# kubeadm join 172.25.254.100:6443 --token baujlw.w4xhwevafprh8uk9 --discovery-token-ca-cert-hash sha256:f05eb014ffdee15265806a1bc7a54270d8b28cccf90b88cb2b2910fe3aaab05f --cri-socket=unix:///var/run/cri-dockerd.sock

1.1.6. Install the flannel network plugin

#Note: the firewall must be stopped and SELinux must be disabled on all hosts

#Download the images:

[root@k8s-master ~]# docker pull docker.io/flannel/flannel:v0.25.5

[root@k8s-master ~]# docker pull docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1

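#Tag and push the images to the registry

[root@k8s-master ~]# docker tag flannel/flannel:v0.25.5 reg.gaoyingjie.org/flannel/flannel:v0.25.5

[root@k8s-master ~]# docker push reg.gaoyingjie.org/flannel/flannel:v0.25.5

[root@k8s-master ~]# docker tag flannel/flannel-cni-plugin:v1.5.1-flannel1 reg.gaoyingjie.org/flannel/flannel-cni-plugin:v1.5.1-flannel1

[root@k8s-master ~]# docker push reg.gaoyingjie.org/flannel/flannel-cni-plugin:v1.5.1-flannel1

#Edit kube-flannel.yml so the images are pulled from the harbor registry

[root@k8s-master ~]# vim kube-flannel.yml

#The following lines need to be changed

[root@k8s-master ~]# grep -n image kube-flannel.yml

146: image: reg.gaoyingjie.org/flannel/flannel:v0.25.5

173: image: reg.gaoyingjie.org/flannel/flannel-cni-plugin:v1.5.1-flannel1

184: image: reg.gaoyingjie.org/flannel/flannel:v0.25.5

#Install the flannel network plugin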

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

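1.1.7. Check the status of all nodes

If all nodes are Ready, the k8s cluster has been set up successfully.

III. Pod management and optimization

3. Pod lifecycle

(1) Init containers

Init containers run separately from, and before, a Pod's application containers, and each init container must run to completion before the application containers start. An init container can check whether a custom precondition has been met: it keeps checking until the condition holds and then exits successfully. Only after all init containers have completed do the application containers start, in parallel. Init containers therefore provide a mechanism to block or delay application container startup until a set of preconditions is satisfied.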
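The contents of pod.yml are not shown. A minimal sketch that fits the names in the output below (Pod initpod, init container init-myservice) could look like this; the busyboxplus:latest image for the init container and the file /testdfile it waits for are assumptions, chosen to match the later touch testdfile step.

```yaml
# pod.yml (sketch): the init image and the checked file path are assumptions
apiVersion: v1
kind: Pod
metadata:
  name: initpod
  labels:
    name: initpod
spec:
  initContainers:
    - name: init-myservice
      image: busyboxplus:latest
      # Poll every 2s; exit 0 once /testdfile exists, which lets the app container start
      command: ["sh", "-c", "until test -e /testdfile; do echo waiting for myservice; sleep 2; done"]
  containers:
    - name: myapp
      image: myapp:v1
```

While the loop is running, kubectl get pods reports Init:0/1; once the init container exits successfully, myapp is started.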

[root@k8s-master ~]# vim pod.yml

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/initpod created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

initpod 0/1 Init:0/1 0 43s #STATUS is not Running

nginx1 1/1 Running 1 (40h ago) 2d

[root@k8s-master ~]# kubectl logs pod/initpod init-myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

[root@k8s-master ~]# kubectl exec -it pods/initpod -c init-myservice -- /bin/sh

/ #

/ # touch testdfile

/ # ls

bin etc lib proc sys tmp var

dev home lib64 root testdfile usr

/ # exit

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

initpod 0/1 Init:0/1 0 2m47s #after the file is created, the init container is running

nginx1 1/1 Running 1 (40h ago) 2d

2. Probes

(1) Liveness probe

Configuration file:

apiVersion: v1

kind: Pod

metadata:

labels:

name: initpod

name: initpod

spec:

containers:

- image: myapp:v1

name: myapp

livenessProbe:

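tcpSocket: #check that the TCP port accepts connections

port: 8080

initialDelaySeconds: 3 #seconds to wait after the container starts before probing begins, default 0

periodSeconds: 1 #interval between probes, default 10s; here the check runs every 1s

timeoutSeconds: 1 #how long to wait for a response before the probe counts as failed, default 1s

(2) Readiness probe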
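No manifest is given for the readiness probe. As a hedged sketch: a readinessProbe is declared like the liveness probe above, but when it fails the container is not restarted; the Pod is marked not Ready and is removed from Service endpoints. The Pod name, label, and the /test.html path are hypothetical, and myapp:v1 is assumed to serve HTTP on port 80, as the curl output later in this post suggests.

```yaml
# readiness sketch: Pod name, label and /test.html path are hypothetical
apiVersion: v1
kind: Pod
metadata:
  name: readiness-demo
  labels:
    app: readiness-demo
spec:
  containers:
    - name: myapp
      image: myapp:v1
      readinessProbe:
        httpGet:                 # Ready only while GET /test.html on port 80 succeeds
          path: /test.html
          port: 80
        initialDelaySeconds: 1   # wait 1s after start before the first check
        periodSeconds: 3         # check every 3s
        timeoutSeconds: 1        # no answer within 1s counts as a failed check
```

Assuming the image does not ship /test.html, the Pod should stay Running with READY 0/1 until that file is created inside the container.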

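IV. k8s controllers

1. ReplicaSet

- A ReplicaSet ensures that a specified number of Pod replicas are running at any given time

- Although ReplicaSets can be used on their own, today they are mainly used by Deployments as the mechanism for orchestrating Pod creation, deletion, and updates

#Generate a yml file

[root@k8s-master ~]# kubectl create deployment replicaset --image myapp:v1 --dry-run=client -o yaml > replicaset.yml

Configuration file (the generated kind: Deployment is changed to ReplicaSet):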

apiVersion: apps/v1

kind: ReplicaSet

metadata:

name: replicaset #name of the ReplicaSet; it must be lowercase, uppercase letters cause an error

spec:

replicas: 2 #keep 2 Pods running

selector: #how Pods are selected

matchLabels: #select by labels

app: myapp #select Pods labeled app=myapp

template: #template used to create new Pod replicas when there are too few

metadata:

labels:

app: myapp

spec:

containers:

- image: myapp:v1

name: myapp

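2. Deployment controller

- The Deployment controller does not manage Pods directly; it manages them indirectly through ReplicaSets

- A Deployment manages ReplicaSets, and a ReplicaSet manages Pods

- Main uses: creating Pods and ReplicaSets, rolling updates and rollbacks, scaling out and in, pausing and resuming

#Generate a yaml file

[root@k8s-master ~]# kubectl create deployment deployment --image myapp:v1 --dry-run=client -o yaml > deployment.yml

Configuration file: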

apiVersion: apps/v1

kind: Deployment

metadata:

name: deployment

spec:

replicas: 4

selector:

matchLabels:

app: myapp

template:

metadata:

labels:

app: myapp

spec:

containers:

- image: myapp:v1

name: myapp

Check:

Version upgrade:

Version rollback:

Rolling update strategy:
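The rolling update strategy is set in the Deployment spec. A sketch based on deployment.yml above; the values of minReadySeconds, maxSurge, and maxUnavailable are chosen only for illustration. Changing the image to myapp:v2 (used later in this post) and re-applying the file is what triggers the rolling update.

```yaml
# deployment.yml with an explicit rolling update strategy (illustrative values)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  minReadySeconds: 5        # a new Pod must stay Ready 5s before it counts as available
  replicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1           # at most 1 Pod above the desired 4 during the update
      maxUnavailable: 0     # never drop below 4 available Pods
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2   # changed from myapp:v1 to start the rollout
```

With maxUnavailable: 0 the old Pods are only removed after a replacement is available, which trades update speed for capacity.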

Pausing version updates:

[root@k8s2 pod]# kubectl rollout pause deployment deployment-example

Check: the Pods still run the previous version

Resuming version updates:


[root@k8s2 pod]# kubectl rollout resume deployment deployment-example

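3. DaemonSet controller

A DaemonSet ensures that all (or some) nodes run a copy of a Pod. When nodes join the cluster, a Pod is added on them; when nodes are removed from the cluster, those Pods are garbage-collected. Deleting a DaemonSet deletes all the Pods it created.

The master has a taint, so by default no DaemonSet Pod is scheduled on it:

After a toleration for the taint is added, the controller also places a Pod on the master: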
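A sketch of a DaemonSet with such a toleration, assuming the default taint kubeadm puts on the control-plane node (node-role.kubernetes.io/control-plane:NoSchedule; check it with kubectl describe node k8s-master). The DaemonSet name and label are hypothetical.

```yaml
# daemonset sketch: name and label are hypothetical
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-demo
spec:
  selector:
    matchLabels:
      app: daemonset-demo
  template:
    metadata:
      labels:
        app: daemonset-demo
    spec:
      tolerations:
        # tolerate kubeadm's default control-plane taint so a Pod also lands on k8s-master
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
      containers:
        - name: myapp
          image: myapp:v1
```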

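4. Job controller

A Job is mainly used for batch processing (a specified number of tasks in one run) of short-lived, one-off tasks (each task runs only once and then ends).

Job characteristics:

- When a Pod created by the Job finishes successfully, the Job records the number of successfully completed Pods

- When the number of successfully completed Pods reaches the specified count, the Job is complete

Prerequisite: push the perl image to harbor; it is used for the computation.

[root@k8s-master ~]# kubectl create job testjob --image perl:5.34.0 --dry-run=client -o yaml > job.yml

Configuration file: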

apiVersion: batch/v1

kind: Job

metadata:

creationTimestamp: null

name: testjob

spec:

completions: 6 #6 successful completions in total

parallelism: 2 #run 2 Pods in parallel

backoffLimit: 4 #retry up to 4 times before the Job is marked as failed

template:

spec:

containers:

- image: perl:5.34.0

name: testjob

command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]

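restartPolicy: Never #do not restart the container after it exits

Two tasks run in parallel, and 6 Pods in total are started for the computation:

Check the logs after the tasks complete:

5. CronJob controller

- A CronJob creates Jobs on a time-based schedule.

- The CronJob controller manages Job resources and uses them to manage Pods.

- A CronJob controls when and how often it runs in the same way as a periodic task schedule (cron) on Linux.

- A CronJob can run Jobs at specific points in time, repeatedly.

Generate the cronjob yml file

[root@k8s-master ~]# kubectl create cronjob testcronjob --image busyboxplus:latest --schedule="* * * * *" --restart Never --dry-run=client -o yaml > cronjob.yml

Configuration file: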

apiVersion: batch/v1

kind: CronJob

metadata:

creationTimestamp: null

name: testcronjob

spec:

schedule: '* * * * *'

jobTemplate:

spec:

template:

spec:

containers:

- image: busyboxplus:latest

name: testcronjob

command: ["/bin/sh","-c","date;echo Hello from the Kubernetes cluster"]

restartPolicy: Never

Check whether a Pod is created every minute and prints a line:

[root@k8s-master ~]# watch -n 1 kubectl get pods

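V. k8s microservices (Services)

- A Service is the externally exposed interface of a group of Pods that provide the same service.

- With a Service, applications get service discovery and load balancing.

- By default a Service only provides layer-4 load balancing, with no layer-7 features (those can be provided by Ingress).

5.1 IPVS mode

- By default, a Service is implemented by the kube-proxy component together with iptables

- When kube-proxy handles Services through iptables, it has to set up a large number of iptables rules on the host; with many Pods, constantly refreshing these rules consumes a lot of CPU

- Services in IPVS mode let a K8s cluster support a much larger number of Pods

In short: Services use iptables for scheduling by default, so the rules must be refreshed whenever Pods are added or removed, which consumes a lot of resources; that is why IPVS mode is used.

(1) Install ipvsadm

#On all nodes:

[root@k8s- ~]# yum install ipvsadm -y

(2) Modify the kube-proxy configuration on the master node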

[root@k8s-master ~]# kubectl -n kube-system edit cm kube-proxy

metricsBindAddress: ""

mode: "ipvs" #make kube-proxy use IPVS mode

nftables:
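For orientation, the mode field lives inside the config.conf key of the kube-proxy ConfigMap. The excerpt below is a sketch showing only the relevant fields of a kubeadm-created ConfigMap (the real one has many more); edit it in place with the command above rather than applying this fragment.

```yaml
# kube-proxy ConfigMap excerpt (namespace kube-system); other fields omitted
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-proxy
  namespace: kube-system
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    ipvs:
      scheduler: ""    # empty selects the default round-robin (rr) scheduler
    mode: "ipvs"       # empty means iptables mode on Linux
```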

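(3) Restart the Pods

The running kube-proxy Pods loaded their configuration when they started, and changing the ConfigMap does not affect Pods that are already running, so the kube-proxy Pods must be restarted (deleted so that they are recreated with the new configuration).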

[root@k8s-master ~]# kubectl -n kube-system get pods | awk '/kube-proxy/{system("kubectl -n kube-system delete pods "$1)}'

[root@k8s-master ~]# ipvsadm -Ln

[root@k8s-master services]# kubectl get svc gaoyingjie

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gaoyingjie ClusterIP 10.108.100.16 <none> 80/TCP 43m

Note:

After switching to IPVS mode, kube-proxy adds a virtual interface, kube-ipvs0, on the host and assigns all Service IPs to it:

[root@k8s-master services]# ip a | tail

9: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether b6:f0:7a:ce:64:02 brd ff:ff:ff:ff:ff:ff

inet 10.96.0.10/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.108.100.16/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.96.0.1/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.98.254.47/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

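5.2 Creating Services

(1) ClusterIP

- A ClusterIP Service is only reachable from inside the cluster, and provides health checking and automatic discovery for the Pods in the cluster

- Pod IPs are added to the Service's Endpoints based on labels, and clients inside the cluster reach them through the Service IP; if a Pod's labels no longer match, the Pod is removed from the Endpoints.

- In other words, traffic sent to the Service's virtual IP is forwarded to the Pod IPs. The Service IP is allocated by the cluster, and in IPVS mode kube-proxy binds it to the kube-ipvs0 interface.

#ClusterIP type

[root@k8s-master services]# kubectl create deployment gaoyingjie --image myapp:v1 --replicas 2 --dry-run=client -o yaml > services.yaml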

[root@k8s-master services]# kubectl expose deployment timinglee --port 80 --target-port 80 --dry-run=client -o yaml >> services.yaml

[root@k8s-master services]# vim services.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: gaoyingjie

name: gaoyingjie

spec:

replicas: 2

selector:

matchLabels:

app: gaoyingjie

template:

metadata:

labels:

app: gaoyingjie

spec:

containers:

- image: myapp:v1

name: myapp

---

apiVersion: v1

kind: Service

metadata:

labels:

app: gaoyingjie

name: gaoyingjie

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: gaoyingjie #label selector

type: ClusterIP

[root@k8s-master services]# kubectl apply -f services.yaml

deployment.apps/gaoyingjie created

service/gaoyingjie created

[root@k8s-master services]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gaoyingjie ClusterIP 10.108.100.16 <none> 80/TCP 9s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 59d

nginx-svc ClusterIP None <none> 80/TCP 3d18h

[root@k8s-master services]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

gaoyingjie-74f5f4bf55-56t47 1/1 Running 0 53m app=gaoyingjie,pod-template-hash=74f5f4bf55

gaoyingjie-74f5f4bf55-vrh5w 1/1 Running 0 53m app=gaoyingjie,pod-template-hash=74f5f4bf55

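Automatic discovery means that when a new Pod is created, its labels are checked automatically; if they match the Service's selector, the Pod IP is added to the Endpoints and included in IPVS scheduling. Example:

#Create a new Pod named testpod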

[root@k8s-master services]# kubectl run testpod --image myapp:v1

pod/testpod created

#The new Pod's IP is 10.244.36.121

[root@k8s-master services]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gaoyingjie-74f5f4bf55-56t47 1/1 Running 0 64m 10.244.36.120 k8s-node1 <none> <none>

gaoyingjie-74f5f4bf55-vrh5w 1/1 Running 0 64m 10.244.169.150 k8s-node2 <none> <none>

testpod 1/1 Running 0 105s 10.244.36.121 k8s-node1 <none> <none>

#Check the Pod labels: testpod's labels differ from those of the other two

[root@k8s-master services]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

gaoyingjie-74f5f4bf55-56t47 1/1 Running 0 63m app=gaoyingjie,pod-template-hash=74f5f4bf55

gaoyingjie-74f5f4bf55-vrh5w 1/1 Running 0 63m app=gaoyingjie,pod-template-hash=74f5f4bf55

testpod 1/1 Running 0 21s run=testpod

#Change testpod's label

[root@k8s-master services]# kubectl label pod testpod app=gaoyingjie --overwrite

pod/testpod labeled

#Check testpod's labels

[root@k8s-master services]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

gaoyingjie-74f5f4bf55-56t47 1/1 Running 0 64m app=gaoyingjie,pod-template-hash=74f5f4bf55

gaoyingjie-74f5f4bf55-vrh5w 1/1 Running 0 64m app=gaoyingjie,pod-template-hash=74f5f4bf55

testpod 1/1 Running 0 79s app=gaoyingjie,run=testpod

#Check the Service's Endpoints: testpod's IP 10.244.36.121 has been added

[root@k8s-master services]# kubectl describe svc gaoyingjie

Name: gaoyingjie

Namespace: default

Labels: app=gaoyingjie

Annotations: <none>

Selector: app=gaoyingjie

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.108.100.16

IPs: 10.108.100.16

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.169.150:80,10.244.36.120:80,10.244.36.121:80

Session Affinity: None

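Events: <none>

Services are resolved by the cluster's built-in DNS. Because a Service's IP changes when the Service is recreated, communication inside the cluster is done by domain name, as shown below:

#Check the DNS Service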

[root@k8s-master services]# kubectl -n kube-system get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-typha ClusterIP 10.98.254.47 <none> 5473/TCP 52d

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 59d

#Once the Service is created, the cluster DNS resolves its name

[root@k8s-master services]# dig gaoyingjie.default.svc.cluster.local @10.96.0.10

......

;; ANSWER SECTION: #resolves to the ClusterIP

gaoyingjie.default.svc.cluster.local. 30 IN A 10.108.100.16 #gaoyingjie.default.svc.cluster.local. resolves to 10.108.100.16

......

#Enter the cluster

[root@k8s-master services]# kubectl run busybox --image busyboxplus:latest

[root@k8s-master services]# kubectl exec -it pods/busybox -- /bin/sh

/ # curl gaoyingjie.default.svc.cluster.local.

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

/ # nslookup gaoyingjie.default.svc.cluster.local.

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: gaoyingjie

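Address 1: 10.108.100.16 gaoyingjie.default.svc.cluster.local #DNS resolves to the ClusterIP here as well

#The Service is reachable through the domain name gaoyingjie.default.svc.cluster.local.

- A special ClusterIP mode: headless

In the normal mode, DNS first resolves the Service name to the Service's cluster IP, and IPVS then routes and load-balances the traffic to the Pods behind the Service.

A headless Service is not allocated a Cluster IP (the Service's own IP), and kube-proxy does not load-balance or route traffic for it. Inside the cluster, DNS resolves the name directly to the IPs of the backend Pods, so any distribution of requests is done by DNS alone. This effectively exposes the backend Pod IPs directly.

If the backend Pods are recreated and their names and IPs change, how does DNS resolve to each Pod's IP? See the StatefulSet controller later.

Example: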
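headless.yml is not shown. A minimal sketch of its Service part, assuming the Deployment part is the same as in services.yaml; the only essential change from a normal ClusterIP Service is clusterIP: None.

```yaml
# Service part of headless.yml (sketch); the Deployment part is assumed unchanged
apiVersion: v1
kind: Service
metadata:
  name: gaoyingjie
  labels:
    app: gaoyingjie
spec:
  type: ClusterIP
  clusterIP: None      # headless: no virtual IP, DNS returns the Pod IPs
  selector:
    app: gaoyingjie
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
```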

[root@k8s-master services]# vim headless.yml

[root@k8s-master services]# kubectl apply -f headless.yml

#CLUSTER-IP is None

[root@k8s-master services]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

gaoyingjie ClusterIP None <none> 80/TCP 20s app=gaoyingjie

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 59d <none>

[root@k8s-master services]# kubectl describe svc gaoyingjie

Name: gaoyingjie

Namespace: default

Labels: app=gaoyingjie

Annotations: <none>

Selector: app=gaoyingjie

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None #no cluster IP

IPs: None

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.169.153:80,10.244.36.122:80

Session Affinity: None

Events: <none>

#Check the Pod IPs

[root@k8s-master services]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 141m 10.244.169.152 k8s-node2 <none> <none>

gaoyingjie-74f5f4bf55-g2nb2 1/1 Running 0 7s 10.244.36.122 k8s-node1 <none> <none>

gaoyingjie-74f5f4bf55-hlqxv 1/1 Running 0 7s 10.244.169.153 k8s-node2 <none> <none>

#Query the DNS

[root@k8s-master services]# dig gaoyingjie.default.svc.cluster.local @10.96.0.10

......

;; ANSWER SECTION:

gaoyingjie.default.svc.cluster.local. 30 IN A 10.244.169.153 #resolves directly to the Pods

gaoyingjie.default.svc.cluster.local. 30 IN A 10.244.36.122

......

#From a container inside the cluster, the Service name also resolves directly to the Pod IPs

/ # nslookup gaoyingjie

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: gaoyingjie

Address 1: 10.244.36.122 10-244-36-122.gaoyingjie.default.svc.cluster.local #resolves to a Pod IP

Address 2: 10.244.169.153 10-244-169-153.gaoyingjie.default.svc.cluster.local

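(2) NodePort

A NodePort Service opens a port through IPVS so that external hosts can reach the Pods' service at <node IP>:<port> (the master's external IP in this example). In other words, the ClusterIP Service is additionally exposed on a port that other hosts can use.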
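A sketch of the Service part of nodeport.yml, assuming the Deployment part is unchanged from services.yaml. If nodePort is omitted, a free port from the allowed range is assigned automatically, which may be how 31998 below was chosen.

```yaml
# Service part of nodeport.yml (sketch)
apiVersion: v1
kind: Service
metadata:
  name: gaoyingjie
  labels:
    app: gaoyingjie
spec:
  type: NodePort
  selector:
    app: gaoyingjie
  ports:
    - port: 80           # port on the Service's cluster IP
      targetPort: 80     # container port
      protocol: TCP
      # nodePort: 31998  # optional; must lie in the allowed range (30000-32767 by default)
```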

#NodePort type

[root@k8s-master services]# vim nodeport.yml

[root@k8s-master services]# kubectl apply -f nodeport.yml

#The exposed port is 31998

[root@k8s-master services]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

gaoyingjie NodePort 10.111.32.111 <none> 80:31998/TCP 24s app=gaoyingjie

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60d <none>

#Access the Service from another host

[root@docker-harbor harbor]# curl 172.25.254.100:31998

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Note:

In NodePort mode, the exposed port must be in the range 30000-32767 by default; a port outside this range causes an error.

[root@k8s-master services]# vim nodeport.yml

[root@k8s-master services]# kubectl delete -f nodeport.yml

deployment.apps "gaoyingjie" deleted

service "gaoyingjie" deleted

#The port set in the file is out of range, so an error occurs

[root@k8s-master services]# kubectl apply -f nodeport.yml

deployment.apps/gaoyingjie created

The Service "gaoyingjie" is invalid: spec.ports[0].nodePort: Invalid value: 33333: provided port is not in the valid range. The range of valid ports is 30000-32767

How to fix it:

[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

- --service-node-port-range=30000-40000

After the change the API server restarts automatically; wait until it is running normally again before operating the cluster.

The restart happens automatically once the parameter is changed; no manual intervention is needed.
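The flag goes into the command list of the kube-apiserver static Pod manifest. An excerpt showing where it sits (a sketch; all existing flags stay unchanged):

```yaml
# /etc/kubernetes/manifests/kube-apiserver.yaml (excerpt)
spec:
  containers:
    - command:
        - kube-apiserver
        - --service-node-port-range=30000-40000   # widen the allowed NodePort range
        # ... all other existing flags stay as they are ...
```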

(3) LoadBalancer

On a cloud platform, the provider allocates a VIP and makes the Service reachable; on bare-metal hosts, MetalLB is needed to allocate the IPs.
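loadbalancer.yml is copied from nodeport.yml and edited. A sketch of its Service part, assuming the only change is the type:

```yaml
# Service part of loadbalancer.yml (sketch)
apiVersion: v1
kind: Service
metadata:
  name: gaoyingjie
  labels:
    app: gaoyingjie
spec:
  type: LoadBalancer   # on bare metal EXTERNAL-IP stays <pending> until e.g. MetalLB assigns one
  selector:
    app: gaoyingjie
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
```

A LoadBalancer Service still gets a cluster IP and a node port (80:31853 below), so it keeps working as a NodePort Service while the external IP is pending.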

#LoadBalancer mode

[root@k8s-master services]# cat nodeport.yml > loadbalancer.yml

[root@k8s-master services]# vim loadbalancer.yml

[root@k8s-master services]# kubectl apply -f loadbalancer.yml

deployment.apps/gaoyingjie created

service/gaoyingjie created

#EXTERNAL-IP is <pending>, so MetalLB is needed to assign an external IP

[root@k8s-master services]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

gaoyingjie LoadBalancer 10.99.176.213 <pending> 80:31853/TCP 14s app=gaoyingjie

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60d <none>

Deploy MetalLB:

MetalLB official site: Installation :: MetalLB, bare metal load-balancer for Kubernetes

What MetalLB does: it allocates VIPs for LoadBalancer Services

Steps:

#1. Set IPVS mode and enable strictARP

[root@k8s-master ~]# kubectl edit cm -n kube-system kube-proxy

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: "ipvs"

ipvs:

strictARP: true

[root@k8s-master ~]# kubectl -n kube-system get pods | awk '/kube-proxy/{system("kubectl -n kube-system delete pods "$1)}'

#2. Deploy MetalLB

[root@k8s-master metalLB]# ls

configmap.yml metallb-native.yaml metalLB.tag.gz

[root@k8s-master metalLB]# docker load -i metalLB.tag.gz

[root@k8s-master metalLB]# vim metallb-native.yaml

...

image: metallb/controller:v0.14.8

image: metallb/speaker:v0.14.8

...

#3. Configure the address range to allocate from

[root@k8s-master metalLB]# vim configmap.yml

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool #name of the address pool

namespace: metallb-system

spec:

addresses:

- 172.25.254.50-172.25.254.99 #change to an address range in your own network

--- #two different kinds must be separated by this line

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

namespace: metallb-system

spec:

ipAddressPools:

- first-pool #use the address pool defined above

[root@k8s-master metalLB]# kubectl apply -f metallb-native.yaml

[root@k8s-master metalLB]# kubectl apply -f configmap.yml

ipaddresspool.metallb.io/first-pool created

l2advertisement.metallb.io/example created

#The Service's EXTERNAL-IP is now 172.25.254.50

[root@k8s-master metalLB]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

gaoyingjie LoadBalancer 10.99.176.213 172.25.254.50 80:31853/TCP 3m53s app=gaoyingjie

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60d <none>

#Access the Service's external IP from another host

[root@docker-harbor harbor]# curl 172.25.254.50

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

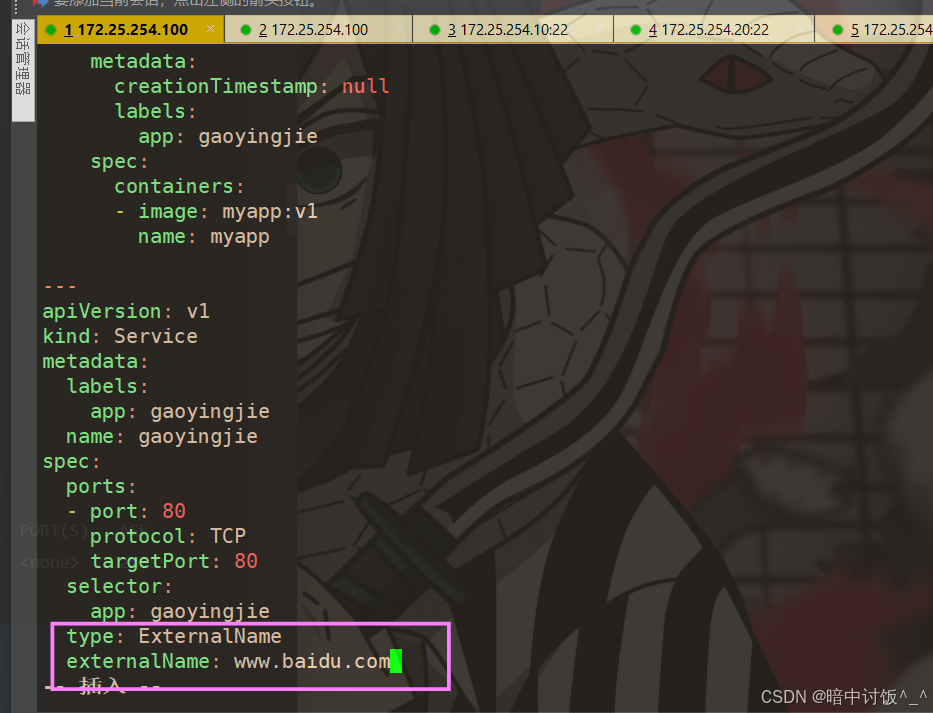

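(4) ExternalName

- An ExternalName Service is not allocated an IP; instead, DNS returns a CNAME record for a fixed domain name, which solves the problem of changing IPs

- It is typically used when Pods communicate with an external service, or when an external service is being migrated into Pods

- While an application is being migrated into the cluster, ExternalName is useful during the transition phase

- When resources outside the cluster are migrated into it, their IPs may change during the migration, but a domain name plus DNS resolution handles this cleanly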
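A sketch of the Service part of externalname.yml, assuming it points at www.baidu.com as in the output below. The output shows that the ports and selector were kept from the earlier file; an ExternalName Service does not proxy traffic, so they are omitted here.

```yaml
# Service part of externalname.yml (sketch)
apiVersion: v1
kind: Service
metadata:
  name: gaoyingjie
  labels:
    app: gaoyingjie
spec:
  type: ExternalName
  externalName: www.baidu.com   # cluster DNS answers with a CNAME to this name
```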

#ExternalName type

[root@k8s-master services]# cat loadbalancer.yml > externalname.yml

[root@k8s-master services]# vim externalname.yml

[root@k8s-master services]# kubectl apply -f externalname.yml

deployment.apps/gaoyingjie created

service/gaoyingjie created

#There is no cluster IP, and EXTERNAL-IP is a domain name

[root@k8s-master services]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

gaoyingjie ExternalName <none> www.baidu.com 80/TCP 9s app=gaoyingjie

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60d <none>

nginx-svc ClusterIP None <none> 80/TCP 4d3h app=nginx

[root@k8s-master services]# kubectl -n kube-system get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-typha ClusterIP 10.98.254.47 <none> 5473/TCP 53d

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 60d

#DNS binds the Service name to the external domain name

[root@k8s-master services]# dig gaoyingjie.default.svc.cluster.local. @10.96.0.10

...

;; ANSWER SECTION:

gaoyingjie.default.svc.cluster.local. 30 IN CNAME www.baidu.com. #the Service name is a CNAME for an external domain

www.baidu.com. 30 IN CNAME www.a.shifen.com.

www.a.shifen.com. 30 IN A 183.2.172.185

www.a.shifen.com. 30 IN A 183.2.172.42

...

#Inside the cluster, pinging the Service name resolves directly to www.baidu.com

[root@k8s-master services]# kubectl exec -it pods/busybox -- /bin/sh

/ # ping gaoyingjie

PING gaoyingjie (183.2.172.185): 56 data bytes

64 bytes from 183.2.172.185: seq=0 ttl=127 time=72.971 ms

64 bytes from 183.2.172.185: seq=1 ttl=127 time=110.614 ms

64 bytes from 183.2.172.185: seq=2 ttl=127 time=56.570 ms

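5.3 Ingress-nginx

Background: with the LoadBalancer type, MetalLB allocates an external IP to every Service on bare-metal hosts. In real deployments external IPs cost money, so giving every Service its own external IP is expensive; this is why the ingress-nginx controller is used.

A Service only supports layer-4 load balancing, that is, it only handles IPs and ports; it cannot act on the content of requests, for example to separate static and dynamic content.

ingress-nginx supports layer-7 load balancing, including static/dynamic content separation.

- A global load-balancing service set up to proxy different backend Services, with layer-7 support

- Ingress consists of two parts: the Ingress controller and the Ingress resources

- The Ingress controller provides the corresponding proxying based on the Ingress objects you define.

- Commonly used reverse proxy projects such as Nginx, HAProxy, Envoy, and Traefik all maintain dedicated Ingress controllers for Kubernetes.

(1) Deploy Ingress-nginx

Official site: Installation Guide - Ingress-Nginx Controller

#Load the images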

[root@k8s-master ingress]# ls

deploy.yaml ingress-nginx-1.11.2.tar.gz

[root@k8s-master ingress]# docker load -i ingress-nginx-1.11.2.tar.gz

...

Loaded image: reg.harbor.org/ingress-nginx/controller:v1.11.2

4fa1bbc4cda4: Loading layer [==================================================>] 52.76MB/52.76MB

Loaded image: reg.harbor.org/ingress-nginx/kube-webhook-certgen:v1.4.3

#Push the images to the registry

The steps are: docker tag, then docker push.

See https://blog.csdn.net/m0_58140853/article/details/141759444?spm=1001.2014.3001.5501

#Edit the ingress deployment manifest

[root@k8s-master ingress]# vim deploy.yaml

445 image: ingress-nginx/controller:v1.11.2

546 image: ingress-nginx/kube-webhook-certgen:v1.4.3

599 image: ingress-nginx/kube-webhook-certgen:v1.4.3

#部署

[root@k8s-master ingress]# kubectl apply -f deploy.yaml

#Create the test resources

#Create a Deployment and expose a port to create a Service

[root@k8s-master ingress]# kubectl create deployment myapp-v1 --image myapp:v1 --replicas 2 --dry-run=client -o yaml > myapp:v1.yml

[root@k8s-master ingress]# kubectl apply -f myapp\:v1.yml

[root@k8s-master ingress]# kubectl expose deployment myapp-v1 --port 80 --target-port 80 --dry-run=client -o yaml >> myapp\:v1.yml

[root@k8s-master ingress]# vim myapp\:v1.yml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: myapp-v1

name: myapp-v1

spec:

replicas: 2

selector:

matchLabels:

app: myapp-v1

template:

metadata:

creationTimestamp: null

labels:

app: myapp-v1

spec:

containers:

- image: myapp:v1

name: myapp-v1

---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp-v1

name: myapp-v1

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp-v1

[root@k8s-master ingress]# kubectl apply -f myapp\:v1.yml

deployment.apps/myapp unchanged

service/myapp created

[root@k8s-master ingress]# cp myapp\:v1.yml myapp\:v2.yml

#In myapp:v2.yml change name: myapp-v1 to myapp-v2 (and the labels and image tag to their v2 equivalents), then apply it

[root@k8s-master ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 62d

myapp-v1 ClusterIP 10.104.22.5 <none> 80/TCP 65s

myapp-v2 ClusterIP 10.104.144.174 <none> 80/TCP 17s

#Check whether ingress was deployed successfully

[root@k8s-master ingress]# kubectl -n ingress-nginx get all

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-fhfzk 0/1 Completed 0 11m

pod/ingress-nginx-admission-patch-mzd4t 0/1 Completed 1 11m

pod/ingress-nginx-controller-bb7d8f97c-v45gl 1/1 Running 0 11m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)

service/ingress-nginx-controller NodePort 10.108.205.63 <none> 80:30559/TCP,

service/ingress-nginx-controller-admission ClusterIP 10.96.226.154 <none> 443/TCP

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 11m

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-bb7d8f97c 1 1 1 11m

NAME STATUS COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create Complete 1/1 5s 11m

job.batch/ingress-nginx-admission-patch Complete 1/1 5s 11m

#Change the type of the ingress-nginx-controller Service to LoadBalancer (type: LoadBalancer), as shown in the figure

[root@k8s-master ingress]# kubectl edit -n ingress-nginx service/ingress-nginx-controller

#The type is now LoadBalancer and MetalLB has assigned an external IP

[root@k8s-master ingress]# kubectl -n ingress-nginx get all

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-fhfzk 0/1 Completed 0 15m

pod/ingress-nginx-admission-patch-mzd4t 0/1 Completed 1 15m

pod/ingress-nginx-controller-bb7d8f97c-v45gl 1/1 Running 0 15m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)

service/ingress-nginx-controller LoadBalancer 10.108.205.63 172.25.254.50 80:30559

service/ingress-nginx-controller-admission ClusterIP 10.96.226.154 <none> 443/TCP

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 15m

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-bb7d8f97c 1 1 1 15m

NAME STATUS COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create Complete 1/1 5s 15m

job.batch/ingress-nginx-admission-patch Complete 1/1 5s 15m

#Test ingress

[root@k8s-master ingress]# kubectl create ingress webcluster --class nginx --rule '/=myapp-v1:80' --dry-run=client -o yaml > ingress1.yml

[root@k8s-master ingress]# vim ingress1.yml

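# pathType in the file can be Exact (exact match), Prefix (prefix match), or ImplementationSpecific (matching is up to the controller; in ingress-nginx, regular-expression paths are enabled with an annotation and normally use this type)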
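A sketch of ingress1.yml after editing. As far as I know, kubectl create ingress generates pathType Exact for a rule path of / (a path ending in * would give Prefix), so the Prefix value below is an assumption about the edit; with Exact, only the exact path / would match.

```yaml
# ingress1.yml (sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webcluster
spec:
  ingressClassName: nginx
  rules:
    - http:                  # no host: matches requests for any host name
        paths:
          - path: /
            pathType: Prefix # assumed edit; / as a Prefix matches every path
            backend:
              service:
                name: myapp-v1
                port:
                  number: 80
```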

[root@k8s-master ingress]# kubectl apply -f ingress1.yml

ingress.networking.k8s.io/webcluster configured

[root@k8s-master ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

webcluster nginx * 80 2m6s

#The Ingress resolves each backend Service to the Pod IPs behind it

[root@k8s-master ingress]# kubectl describe ingress webcluster

Name: webcluster

Labels: <none>

Namespace: default

Address:

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ myapp-v1:80 (10.244.169.170:80,10.244.169.171:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 8s nginx-ingress-controller Scheduled for sync

#An external host accesses the ingress controller's external IP directly

[root@docker-harbor harbor]# for i in {1..5}; do curl 172.25.254.50; done

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Ingress usage

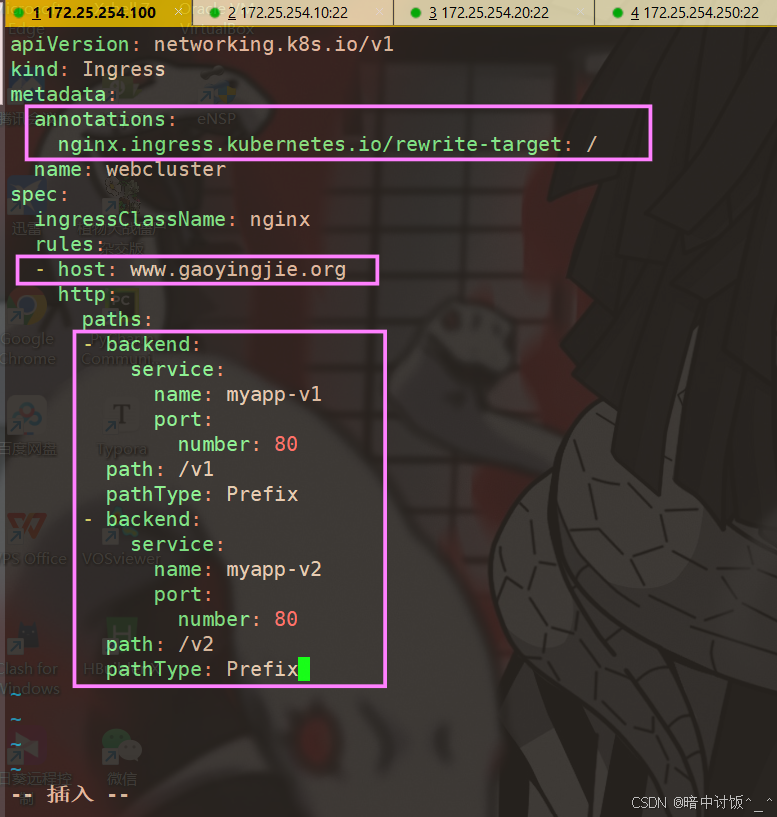

(2) Path-based Ingress routing

Requests are routed to different Services depending on the request path.
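A sketch of a complete ingress2.yml, using the host and Service names from the output below; pathType Prefix for /v1 and /v2 is an assumption.

```yaml
# ingress2.yml (sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webcluster
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /   # send every matched request to the backend as /
spec:
  ingressClassName: nginx
  rules:
    - host: www.gaoyingjie.org
      http:
        paths:
          - path: /v1
            pathType: Prefix
            backend:
              service:
                name: myapp-v1
                port:
                  number: 80
          - path: /v2
            pathType: Prefix
            backend:
              service:
                name: myapp-v2
                port:
                  number: 80
```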

[root@k8s-master ingress]# vim ingress2.yml

...

metadata:

annotations:

nginx.ingress.kubernetes.io/rewrite-target: / #whatever follows the matched path, the request is rewritten to /

name: webcluster

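#Without the rewrite, a request for www.gaoyingjie.org/v1 reaches the backend with the path /v1, i.e. /usr/share/nginx/html/v1 under the web root, which does not exist and returns 404; the path therefore has to be rewritten to the root directory.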

...

[root@k8s-master ingress]# kubectl apply -f ingress2.yml

ingress.networking.k8s.io/webcluster created

[root@k8s-master ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

webcluster nginx www.gaoyingjie.org 80 6s

[root@k8s-master ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 62d

myapp-v1 ClusterIP 10.104.22.5 <none> 80/TCP 3h4m

myapp-v2 ClusterIP 10.104.144.174 <none> 80/TCP 3h4m

#Test

[root@docker-harbor ~]# echo 172.25.254.50 www.gaoyingjie.org >> /etc/hosts

#Requests for /v1 are routed to myapp-v1 (the name of a Service); likewise, /v2 is routed to myapp-v2

[root@docker-harbor ~]# curl www.gaoyingjie.org/v1

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@docker-harbor ~]# curl www.gaoyingjie.org/v2

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

#Effect of nginx.ingress.kubernetes.io/rewrite-target: /

[root@docker-harbor ~]# curl www.gaoyingjie.org/v2/bbb

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@docker-harbor ~]# curl www.gaoyingjie.org/v1/bbb

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@docker-harbor ~]# curl www.gaoyingjie.org/v1/adavc

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

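(3) Host-based Ingress routing

Requests are routed to different Services depending on the requested domain name.

Requests for myapp1.gaoyingjie.org go to the Service myapp-v1, and requests for myapp2.gaoyingjie.org go to the Service myapp-v2.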
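A sketch of ingress3.yml consistent with the kubectl describe output below (two hosts, path /, rewrite-target annotation); pathType Prefix is an assumption.

```yaml
# ingress3.yml (sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webcluster
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
    - host: myapp1.gaoyingjie.org     # requests with this Host header go to myapp-v1
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myapp-v1
                port:
                  number: 80
    - host: myapp2.gaoyingjie.org     # requests with this Host header go to myapp-v2
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myapp-v2
                port:
                  number: 80
```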

[root@k8s-master ingress]# vim ingress3.yml

[root@k8s-master ingress]# kubectl apply -f ingress3.yml

ingress.networking.k8s.io/webcluster unchanged

[root@k8s-master ingress]# kubectl describe ingress webcluster

Name: webcluster

Labels: <none>

Namespace: default

Address: 172.25.254.10

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

myapp1.gaoyingjie.org

/ myapp-v1:80 (10.244.169.173:80,10.244.36.80:80)

myapp2.gaoyingjie.org

/ myapp-v2:80 (10.244.169.181:80,10.244.36.81:80)

Annotations: nginx.ingress.kubernetes.io/rewrite-target: /

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 3m26s (x2 over 3m55s) nginx-ingress-controller Scheduled for sync

#Test

[root@docker-harbor ~]# echo 172.25.254.50 myapp1.gaoyingjie.org myapp2.gaoyingjie.org >> /etc/hosts

[root@docker-harbor ~]# curl myapp1.gaoyingjie.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@docker-harbor ~]# curl myapp2.gaoyingjie.org

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

(4) TLS-encrypted access through Ingress

Goal: access https://myapp-tls.gaoyingjie.org.

#Generate a self-signed certificate and key

[root@k8s-master ingress]# openssl req -newkey rsa:2048 -nodes -keyout tls.key -x509 -days 365 -subj "/CN=nginxsvc/O=nginxsvc" -out tls.crt

[root@k8s-master ingress]# ls

deploy.yaml ingress2.yml ingress-nginx-1.11.2.tar.gz myapp:v2.yml tls.key

ingress1.yml ingress3.yml myapp:v1.yml tls.crt

# Store the certificate and key in the cluster as a Secret (a Secret is the resource Kubernetes uses to hold sensitive data)

[root@k8s-master ingress]# kubectl create secret tls web-tls-secret --key tls.key --cert tls.crt

secret/web-tls-secret created

[root@k8s-master ingress]# kubectl get secrets

NAME TYPE DATA AGE

docker-auth kubernetes.io/dockerconfigjson 1 49d

docker-login kubernetes.io/dockerconfigjson 1 3d22h

web-tls-secret kubernetes.io/tls 2 7s

[root@k8s-master ingress]# vim ingress4.yml

...
spec:
  tls:                               # HTTPS
  - hosts:
    - myapp-tls.gaoyingjie.org       # this host is served over HTTPS
    secretName: web-tls-secret       # Secret holding the certificate and key
  ingressClassName: nginx
...

[root@k8s-master ingress]# kubectl apply -f ingress4.yml

ingress.networking.k8s.io/webcluster created

[root@k8s-master ingress]# kubectl get ingress webcluster

NAME CLASS HOSTS ADDRESS PORTS AGE

webcluster nginx myapp-tls.gaoyingjie.org 80, 443 12s

# Test

[root@docker-harbor ~]# echo 172.25.254.50 myapp-tls.gaoyingjie.org >> /etc/hosts

[root@docker-harbor ~]# curl -k https://myapp-tls.gaoyingjie.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

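Test from the local Windows machine:

Edit the hosts file in C:\Windows\System32\drivers\etc and add the entry 172.25.254.50 myapp-tls.gaoyingjie.org. Then open https://myapp-tls.gaoyingjie.org in a browser. The certificate is self-signed, so the browser warns about it, just as curl needed -k.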

(5) Basic authentication with Ingress

[root@k8s-master app]# dnf install httpd-tools -y

# Create the user credentials file

[root@k8s-master ingress]# htpasswd -cm auth gyj

New password:

Re-type new password:

Adding password for user gyj

[root@k8s-master ingress]# cat auth

gyj:$apr1$4.AjY0jO$hNjMaPOFgiePmBdFIeUc91

# Store the credentials file in the cluster as a Secret

[root@k8s-master ingress]# kubectl create secret generic auth-web --from-file auth

secret/auth-web created

[root@k8s-master ingress]# kubectl get secrets

NAME TYPE DATA AGE

auth-web Opaque 1 11s

docker-auth kubernetes.io/dockerconfigjson 1 49d

docker-login kubernetes.io/dockerconfigjson 1 4d

[root@k8s-master ingress]# vim ingress5.yml

...
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web     # Secret that holds the htpasswd file
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
...

# Test

# Without credentials the server answers 401 Authorization Required

[root@docker-harbor ~]# curl www.gaoyingjie.org

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx</center>

</body>

</html>

# With a username and password the page is served normally

[root@docker-harbor ~]# curl www.gaoyingjie.org -u gyj:gyj

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
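The only thing `-u gyj:gyj` adds to the request is an HTTP Basic `Authorization` header that carries the base64 encoding of `user:password`. ingress-nginx then checks it against the htpasswd entry in the auth-web Secret. Below is a minimal sketch that builds the header by hand. It assumes POSIX sh and coreutils `base64`; `basic_auth_header` is a hypothetical helper, and the commented curl line assumes the same /etc/hosts entry used above.

```shell
# Build the HTTP Basic Authorization header that `curl -u USER:PASS` sends.
# basic_auth_header is a hypothetical helper used only for this illustration.
basic_auth_header() {
    # printf '%s' avoids encoding a trailing newline; tr drops base64 line wrapping
    printf 'Authorization: Basic %s' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

basic_auth_header gyj gyj; echo   # prints: Authorization: Basic Z3lqOmd5ag==

# Equivalent to `curl www.gaoyingjie.org -u gyj:gyj`:
# curl -H "$(basic_auth_header gyj gyj)" www.gaoyingjie.org
```

The header is only encoded, not encrypted, so basic auth should be combined with the TLS setup from section (4) on anything but a lab network.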

(6) Rewrites and redirects with Ingress

# Redirect requests for the site root to hostname.html

[root@k8s-master ingress]# vim ingress6.yml

...
  annotations:
    nginx.ingress.kubernetes.io/app-root: /hostname.html    # requests for / are redirected to /hostname.html
...

[root@k8s-master ingress]# kubectl apply -f ingress6.yml

ingress.networking.k8s.io/webcluster created

# Test

[root@docker-harbor ~]# curl -L www.gaoyingjie.org

myapp-v1-7757fb5f56-47t55

When the request path changes:

# A path that does not exist on the backend returns 404

[root@docker-harbor ~]# curl -L www.gaoyingjie.org/gyj/hostname.html

<html>

<head><title>404 Not Found</title></head>

<body bgcolor="white">

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.12.2</center>

</body>

</html>

# Fix the path problem with a regex rewrite

[root@k8s-master ingress]# vim ingress6.yml

...
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2     # $2 is the second capture group, e.g. bbb in /gyj/bbb
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/auth-type: basic
... ... ...
            port:
              number: 80
        path: /gyj(/|$)(.*)     # (/|$) matches either "/" or the end of the path right after /gyj;
                                # (.*) captures whatever follows /gyj/,
                                # so /gyj, /gyj/, /gyj/abc, /gyj/aaa, /gyj/bbb all match
        pathType: ImplementationSpecific
...

[root@k8s-master ingress]# kubectl apply -f ingress6.yml

ingress.networking.k8s.io/webcluster created

# Test

# /$2 is the path forwarded to the backend: requests under /gyj go to myapp-v2 with the /gyj prefix stripped, and any other path goes to myapp-v1

[root@docker-harbor ~]# curl -L www.gaoyingjie.org/gyj

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@docker-harbor ~]# curl -L www.gaoyingjie.org/gyj/hostname.html

myapp-v2-67cdcc899f-4npb6

[root@docker-harbor ~]# curl -L www.gaoyingjie.org/aaa

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
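ingress-nginx rewrites the request by matching the path against the `path` regex and substituting the capture groups into `rewrite-target`. The effect of `path: /gyj(/|$)(.*)` with `rewrite-target: /$2` can be approximated offline with `sed -E`. This is only a local model of the substitution: nginx uses PCRE inside its generated location blocks, and `rewrite_path` is a hypothetical helper.

```shell
# Local approximation of ingress-nginx's regex rewrite for
#   path: /gyj(/|$)(.*)   with   rewrite-target: /$2
# rewrite_path is hypothetical; sed -E stands in for nginx's PCRE matching.
rewrite_path() {
    # \2 is the second capture group, i.e. whatever follows "/gyj/"
    printf '%s\n' "$1" | sed -E 's#^/gyj(/|$)(.*)#/\2#'
}

for p in /gyj /gyj/ /gyj/hostname.html /gyj/bbb /gyjx; do
    printf '%-20s -> %s\n' "$p" "$(rewrite_path "$p")"
done
```

Here /gyj/hostname.html becomes /hostname.html, which is why the myapp-v2 Pod can serve it. /gyjx does not match the pattern at all and is left unchanged. In the cluster such a path would not use this rule and would fall through to another rule, such as the one sending other paths to myapp-v1.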

Canary releases

Based on header

Based on weight

6. k8s storage

6.1 ConfigMap

(1) Ways to create a ConfigMap

From literal values

[root@k8s-master storage]# kubectl create configmap userlist --from-literal name=gyj --from-literal age=23

configmap/userlist created

[root@k8s-master storage]# kubectl describe cm userlist

Name: userlist

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

age:

----

23

name:

----

gyj

BinaryData

====

Events: <none>

From a file

[root@k8s-master storage]# kubectl create cm configmap2 --from-file /etc/resolv.conf

configmap/configmap2 created

[root@k8s-master storage]# kubectl describe cm configmap2

Name: configmap2

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

resolv.conf:

----

# Generated by NetworkManager

nameserver 114.114.114.114

BinaryData

====

Events: <none>

From a directory

[root@k8s-master storage]# mkdir test

[root@k8s-master storage]# cp /etc/hosts /etc/rc.d/rc.local /root/storage/test/

[root@k8s-master storage]# kubectl create cm configmap2 --from-file test/

configmap/configmap2 created

[root@k8s-master storage]# kubectl describe cm configmap2

Name: configmap2

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

hosts:

----

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.254.100 k8s-master

172.25.254.10 k8s-node1

172.25.254.20 k8s-node2

172.25.254.250 reg.gaoyingjie.org

rc.local:

----

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

mount /dev/cdrom /rhel9/

BinaryData

====

Events: <none>

From a YAML file

[root@k8s-master storage]# kubectl create cm configmap3 --from-literal db_host=172.25.254.100 --from-literal db_port=3306 --dry-run=client -o yaml > configmap3.yaml

[root@k8s-master storage]# vim configmap3.yaml

[root@k8s-master storage]# kubectl apply -f configmap3.yaml

configmap/configmap3 created

[root@k8s-master storage]# kubectl describe configmaps configmap3

Name: configmap3

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

db_port:

----

3306

db_host:

----

172.25.254.100

BinaryData

====

Events: <none>

(2) Ways to use a ConfigMap

- Populate environment variables from a ConfigMap

[root@k8s-master storage]# vim testpod1.yml

[root@k8s-master storage]# kubectl apply -f testpod1.yml

pod/configmap-pod created

[root@k8s-master storage]# kubectl get pods

NAME READY STATUS RESTARTS AGE

configmap-pod 0/1 Completed 0 7s

test 1/1 Running 0 5m51s

[root@k8s-master storage]# kubectl logs pods/configmap-pod

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT=tcp://10.96.0.1:443

HOSTNAME=configmap-pod

SHLVL=1

HOME=/

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

key1=172.25.254.100

key2=3306

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT_HTTPS=443

PWD=/

KUBERNETES_SERVICE_HOST=10.96.0.1

[root@k8s-master storage]# vim testpod1.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: configmap-pod
  name: configmap-pod
spec:
  containers:
  - image: busyboxplus:latest
    name: configmap-pod
    command:
    - /bin/sh
    - -c
    - env
    envFrom:
    - configMapRef:
        name: configmap3
  restartPolicy: Never

[root@k8s-master storage]# kubectl apply -f testpod1.yml

pod/configmap-pod created

[root@k8s-master storage]# kubectl logs pods/configmap-pod

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT=443

HOSTNAME=configmap-pod

SHLVL=1

HOME=/

db_port=3306

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT_HTTPS=443

PWD=/

KUBERNETES_SERVICE_HOST=10.96.0.1

db_host=172.25.254.100

# Use the variables on the Pod's command line

[root@k8s-master ~]# vim testpod3.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: testpod
  name: testpod
spec:
  containers:
  - image: busyboxplus:latest
    name: testpod
    command:
    - /bin/sh
    - -c
    - echo ${db_host} ${db_port}    # ${...} is expanded by the container's /bin/sh
    envFrom:
    - configMapRef:
        name: configmap3
  restartPolicy: Never

# Check the log

[root@k8s-master ~]# kubectl logs pods/testpod

172.25.254.100 3306
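With `envFrom`, every key of the ConfigMap becomes an environment variable in the container. `${db_host}` inside the `sh -c` argument is then expanded by the container's shell, not by Kubernetes. The same expansion can be reproduced on any Linux host with `env`, using the values stored in configmap3. This is a local simulation, not the command the kubelet runs.

```shell
# Simulate envFrom: inject the ConfigMap keys as environment variables,
# then let /bin/sh expand ${db_host} and ${db_port}, as in testpod3.yml.
# The single quotes stop the outer shell from expanding the variables first.
env db_host=172.25.254.100 db_port=3306 /bin/sh -c 'echo ${db_host} ${db_port}'
# prints: 172.25.254.100 3306
```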

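- Mount a ConfigMap as a volume

Declare a volume and mount it. Each key in the ConfigMap becomes a file name under the mount directory, and each value becomes that file's content.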

[root@k8s-master storage]# vim testpod2.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: testpod
  name: testpod
spec:
  containers:
  - image: busyboxplus:latest
    name: testpod
    command:
    - /bin/sh
    - -c
    - sleep 1000000
    volumeMounts:
    - name: config-volume
      mountPath: /config
  volumes:
  - name: config-volume
    configMap:
      name: configmap3      # the ConfigMap to mount
  restartPolicy: Never
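The key-to-file mapping can be modelled locally: each key becomes a file under the mount directory, and its value becomes the file content. `materialize_keys` is a hypothetical helper that never talks to Kubernetes. The real kubelet also keeps hidden `..data` symlinks so it can swap in updated files atomically, and this sketch ignores that.

```shell
# Local model of a configMap volume: each KEY=VALUE pair becomes the file
# DIR/KEY containing VALUE. materialize_keys is hypothetical.
materialize_keys() {
    dir=$1
    shift
    mkdir -p "$dir"
    for kv in "$@"; do
        key=${kv%%=*}    # text before the first '='
        value=${kv#*=}   # text after the first '='
        printf '%s' "$value" > "$dir/$key"
    done
}

demo=$(mktemp -d)
materialize_keys "$demo/config" db_host=172.25.254.100 db_port=3306
ls "$demo/config"                 # lists db_host and db_port
cat "$demo/config/db_port"; echo  # 3306
rm -r "$demo"
```

In the Pod above, `kubectl exec testpod -- cat /config/db_host` should correspondingly show the db_host value.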

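- Provide a Pod's configuration file from a ConfigMap

Example: when a container runs nginx, the nginx configuration file can be kept in a ConfigMap created from that file. To change the configuration you edit the ConfigMap instead of editing the nginx configuration inside every Pod.

# Create the configuration file template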

[root@k8s-master ~]# vim nginx.conf

server {
    listen 8000;
    server_name _;
    root /usr/share/nginx/html;
    index index.html;
}

# Create a ConfigMap from the nginx template file

[root@k8s-master ~]# kubectl create cm nginx-conf --from-file nginx.conf    # creates a ConfigMap named nginx-conf from the file nginx.conf

configmap/nginx-conf created

[root@k8s-master ~]# kubectl describe cm nginx-conf

Name: nginx-conf

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

nginx.conf:    # key of the ConfigMap

----

server {    # value of the key (the file content)

listen 8000;

server_name _;

root /usr/share/nginx/html;

index index.html;

}

BinaryData

====

Events: <none>

# Create the nginx Deployment manifest

[root@k8s-master ~]# kubectl create deployment nginx --image nginx:latest --replicas 1 --dry-run=client -o yaml > nginx.yml

# Add the volume to nginx.yml

[root@k8s-master ~]# vim nginx.yml

[root@k8s-master ~]# cat nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:latest
        name: nginx
        volumeMounts:
        - name: config-volume
          mountPath: /etc/nginx/conf.d    # each ConfigMap key becomes a file name here, its value the file content
      volumes:
      - name: config-volume
        configMap:
          name: nginx-conf                # ConfigMap name

Test: the Pod can be reached on port 8000.

The mounted configuration file is also present in the Pod's nginx configuration directory, /etc/nginx/conf.d:

Changing the configuration by updating the ConfigMap:

To change the nginx configuration, for example the listen port, you only edit the ConfigMap instead of touching every Pod.

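[root@k8s-master storage]# kubectl edit cm nginx-conf    # edit the ConfigMap

# ConfigMap content after the edit (listen port changed to 80)

apiVersion: v1
data:
  nginx.conf: "server{\n\tlisten 80;\n\tserver_name _;\n\troot /usr/share/nginx/html;\n\tindex
    index.html;\n}\n"
kind: ConfigMap
metadata:
  creationTimestamp: "2024-09-15T08:21:02Z"
  name: nginx-conf
  namespace: default
  resourceVersion: "188837"
  uid: 15396d74-0151-4d02-8509-3f3234fb8a9e

# The file mounted in the running Pod is only refreshed after a short delay, and nginx does not re-read its configuration on its own, so the running Pod keeps its old settings. Deleting the Pod makes the Deployment start a new one, which loads the updated configuration.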

[root@k8s-master storage]# kubectl delete pods nginx-8487c65cfc-9st6s

pod "nginx-8487c65cfc-9st6s" deleted

[root@k8s-master storage]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-8487c65cfc-d8kqd 1/1 Running 0 3s

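Test: the port is now 80, nginx's default, so the Pod can be reached without specifying a port.

6.2 Secrets

- A Secret object holds sensitive information such as passwords, OAuth tokens and ssh keys.
- Keeping sensitive information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.
- A Pod can use a Secret in these ways:
  - as files in a volume mounted into one or more of its containers;
  - as container environment variables;
  - through the kubelet, when it pulls images for the Pod.
- Secret types:
  - Service Account: Kubernetes creates Secrets holding API credentials and modifies Pods to use them. Since Kubernetes 1.24 these token Secrets are no longer generated automatically; Pods receive a short-lived projected token instead.
  - Opaque: values are only base64-encoded and can be recovered with base64 --decode, so the protection is weak.
  - kubernetes.io/dockerconfigjson: stores docker registry credentials.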
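To see why Opaque Secrets offer little protection, decode the stored values directly. The helper below works offline on any base64 string, such as the values of the userlist Secret created in this section. `decode_value` is a hypothetical name, and the commented kubectl line assumes cluster access and an existing userlist Secret.

```shell
# base64 is an encoding, not encryption: anyone who can read the Secret can decode it.
# decode_value is a hypothetical helper for this illustration.
decode_value() {
    printf '%s' "$1" | base64 --decode
}

decode_value Z2FveWluZ2ppZQ==; echo    # gaoyingjie
decode_value Z3lq; echo                # gyj

# Against the cluster (needs kubectl and the userlist Secret):
# kubectl get secret userlist -o jsonpath='{.data.username}' | base64 --decode
```

Access to Secrets should therefore be limited with RBAC, because read access is effectively plain-text access.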

(1) Creating Secrets

From files

[root@k8s-master secrets]# echo -n gaoyingjie > username.txt

[root@k8s-master secrets]# echo -n gaoyingjie > password.txt

[root@k8s-master secrets]# kubectl create secret generic userlist --from-file username.txt --from-file password.txt

secret/userlist created

[root@k8s-master secrets]# kubectl get secrets userlist -o yaml

apiVersion: v1
data:
  password.txt: Z2FveWluZ2ppZQ==    # base64-encoded (not encrypted) content of password.txt
  username.txt: Z2FveWluZ2ppZQ==    # base64-encoded (not encrypted) content of username.txt
kind: Secret
metadata:
  creationTimestamp: "2024-09-15T11:54:24Z"
  name: userlist
  namespace: default
  resourceVersion: "200519"
  uid: 4a144911-0b46-4e1e-95b1-13b403cac262
type: Opaque

From a YAML file

# Base64-encode the username and password to be stored

[root@k8s-master secrets]# echo -n gaoyingjie | base64

Z2FveWluZ2ppZQ==

[root@k8s-master secrets]# echo -n gyj | base64

Z3lq

# Generate a Secret manifest skeleton

[root@k8s-master secrets]# kubectl create secret generic userlist --dry-run=client -o yaml > userlist.yml

[root@k8s-master secrets]# vim userlist.yml

# Manifest content

apiVersion: v1
kind: Secret
metadata:
  creationTimestamp: null
  name: userlist
type: Opaque
data:
  username: Z2FveWluZ2ppZQ==
  password: Z3lq
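The manual steps above (encode each value, then paste it under `data:`) can be scripted. Below is a sketch that assumes POSIX sh and coreutils `base64`. `secret_manifest` is a hypothetical helper that prints an Opaque Secret to stdout, and the output could be piped to `kubectl apply -f -`. Kubernetes also accepts plain-text values under `stringData:`, which it encodes itself.

```shell
# Print an Opaque Secret manifest; each KEY=VALUE is base64-encoded under data:.
# secret_manifest is a hypothetical helper; pipe its output to `kubectl apply -f -`.
secret_manifest() {
    name=$1
    shift
    printf 'apiVersion: v1\nkind: Secret\nmetadata:\n  name: %s\ntype: Opaque\ndata:\n' "$name"
    for kv in "$@"; do
        key=${kv%%=*}
        value=${kv#*=}
        # printf '%s' avoids encoding a trailing newline (same effect as echo -n)
        printf '  %s: %s\n' "$key" "$(printf '%s' "$value" | base64 | tr -d '\n')"
    done
}

secret_manifest userlist username=gaoyingjie password=gyj
```

The `data:` section it prints matches the hand-edited userlist.yml above.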

[root@k8s-master secrets]# kubectl apply -f userlist.yml

secret/userlist created

[root@k8s-master secrets]# kubectl get secrets

NAME TYPE DATA AGE

docker-login kubernetes.io/dockerconfigjson 1 4d9h

userlist Opaque 2 10s

# Inspect

[root@k8s-master secrets]# kubectl describe secrets userlist

Name: userlist

Namespace: default

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

username: 10 bytes

password: 3 bytes

[root@k8s-master secrets]# kubectl get secrets userlist -o yaml

apiVersion: v1
data:
  password: Z3lq
  username: Z2FveWluZ2ppZQ==
kind: Secret
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"password":"Z3lq","username":"Z2FveWluZ2ppZQ=="},"kind":"Secret","metadata":{"annotations":{},"creationTimestamp":null,"name":"userlist","namespace":"default"},"type":"Opaque"}
  creationTimestamp: "2024-09-15T12:02:45Z"
  name: userlist
  namespace: default
  resourceVersion: "201277"
  uid: 3ef997b4-aed7-4b2a-b05d-15064df95bae
type: Opaque

(2) Using Secrets

@ Mounting as a volume

[root@k8s-master secrets]# vim pod1.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
    volumeMounts:
    - name: secrets              # volume name
      mountPath: /secret         # mount path
      readOnly: true             # mount the volume read-only
  # declare the volume
  volumes:
  - name: secrets                # the volume is called secrets
    secret:
      secretName: userlist       # the volume's data comes from the Secret userlist, which stores key/value pairs;
      # userlist has two keys, so /secret contains two files named after the keys, each holding the corresponding value

[root@k8s-master secrets]# kubectl apply -f userlist.yml

secret/userlist created

[root@k8s-master secrets]# kubectl apply -f pod1.yml

pod/nginx created

[root@k8s-master secrets]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 4s

[root@k8s-master secrets]# kubectl describe pod nginx

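[root@k8s-master secrets]# kubectl exec pods/nginx -it -- /bin/bash    # inside the container, /secret holds the two keys of userlist as the files username and password:

Note: the only credential for accessing cluster resources is created automatically when the k8s cluster is set up. If it is deleted, Pods can no longer use cluster resources.

@ Mapping Secret keys to specific paths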

[root@k8s-master secrets]# vim pod2.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx1
  name: nginx1
spec:
  containers:
  - image: nginx
    name: nginx1
    volumeMounts:
    - name: secrets
      mountPath: /secret
      readOnly: true
  volumes:
  - name: secrets
    secret:
      secretName: userlist
      items:                       # project only selected keys of userlist, at chosen paths
      - key: username              # the key username is written to my-users/username
        path: my-users/username    # other keys or paths work the same way, e.g. key: password, path: auth/password

[root@k8s-master secrets]# kubectl apply -f pod2.yml

pod/nginx1 created

[root@k8s-master secrets]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx1 1/1 Running 0 6s

[root@k8s-master secrets]# kubectl exec -it pods/nginx1 -- /bin/bash

Only username is present, at /secret/my-users/username:

@ Exposing Secrets as environment variables

[root@k8s-master secrets]# vim pod3.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: busybox
  name: busybox
spec:
  containers:
  - image: busybox
    name: busybox
    command:
    - /bin/sh
    - -c
    - env
    env:
    - name: USERNAME          # value of the key username in userlist
      valueFrom:
        secretKeyRef:
          name: userlist
          key: username
    - name: PASS              # value of the key password in userlist
      valueFrom:
        secretKeyRef:
          name: userlist
          key: password
  restartPolicy: Never

[root@k8s-master secrets]# kubectl apply -f pod3.yml

pod/busybox created

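@ Storing docker registry credentials

In the docker registry, a private project requires authentication for both push and pull. A public project requires authentication for push only; pulls are anonymous.

When a node of the k8s cluster needs to pull an image from a private project it must log in, so the credentials have to be made available to the cluster.

Log in to 172.25.254.250 (reg.gaoyingjie.org) and create a private project named gyj.

# Log in to the registry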

[root@k8s-master secrets]# docker login reg.gaoyingjie.org

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credential-stores

Login Succeeded

# Push the image

[root@k8s-master secrets]# docker tag nginx:latest reg.gaoyingjie.org/gyj/nginx:latest

[root@k8s-master secrets]# docker push reg.gaoyingjie.org/gyj/nginx:latest

latest: digest: sha256:8a34fb9cb168c420604b6e5d32ca6d412cb0d533a826b313b190535c03fe9390 size: 1364

# Create a Secret for docker registry authentication

[root@k8s-master secrets]# kubectl create secret docker-registry docker-auth --docker-server reg.gaoyingjie.org --docker-username admin --docker-password gyj --docker-email gaoyingjie@gaoyingjie.org

secret/docker-auth created
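A `kubernetes.io/dockerconfigjson` Secret stores a single key, `.dockerconfigjson`. Its value is a Docker `config.json` whose `auth` field is base64(`username:password`). The sketch below rebuilds that JSON for the values used above. `dockerconfig_json` is a hypothetical helper, and kubectl's own output may include extra fields such as `email` or order them differently. A commented command shows how to decode the real Secret.

```shell
# Rebuild the .dockerconfigjson payload for a registry login.
# dockerconfig_json is hypothetical; "auth" is base64("username:password").
dockerconfig_json() {
    server=$1 user=$2 pass=$3
    auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
    printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
        "$server" "$user" "$pass" "$auth"
}

dockerconfig_json reg.gaoyingjie.org admin gyj

# Inspect the real Secret (needs kubectl):
# kubectl get secret docker-auth -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode
```

This also shows why the kubelet can pull with it: the payload has the same format that `docker login` writes to /root/.docker/config.json.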

# List the Secrets

[root@k8s-master secrets]# kubectl get secrets

NAME TYPE DATA AGE

docker-auth kubernetes.io/dockerconfigjson 1 4m53s    # Secret the cluster uses to authenticate image pulls

docker-login kubernetes.io/dockerconfigjson 1 4d11h

userlist Opaque 2 102m

[root@k8s-master secrets]# vim pod4.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx
spec:
  containers:
  - image: reg.gaoyingjie.org/gyj/nginx:latest   # the full image path is required for the private registry
    name: nginx
  imagePullSecrets:          # the registry-auth Secret created above; without it the image cannot be pulled
  - name: docker-auth

# Without imagePullSecrets (i.e. without the docker-auth Secret), the image pull fails:

[root@k8s-master secrets]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ErrImagePull 0 35s    # the pull fails with ErrImagePull

# After adding imagePullSecrets

[root@k8s-master secrets]# kubectl apply -f pod4.yml

pod/nginx created

[root@k8s-master secrets]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 7s

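6.3 Volumes

- Files in a container are stored on disk only temporarily, which causes problems for some applications running in containers.
- When a container crashes, the kubelet restarts it, and the files in the container are lost because the container is recreated in a clean state.
- When several containers run in one Pod, they often need to share files.
- An ephemeral Kubernetes volume (such as emptyDir) has an explicit lifetime, the same as the Pod that uses it.
- Such a volume outlives every container running in the Pod, so its data is preserved across container restarts.
- When the Pod ceases to exist, the volume ceases to exist too.
- Kubernetes supports many volume types, and a Pod can use any number of volumes at the same time.
- Volumes cannot be mounted onto other volumes and cannot have hard links to other volumes. Each container in the Pod must specify the mount location of each volume independently.

Static volumes: emptyDir, hostPath and nfs volumes. All of them are specified manually by the administrator and cannot be provisioned automatically.

(1) emptyDir volumes

When a Pod is assigned to a node, an emptyDir volume is created first, and it exists as long as the Pod runs on that node. The volume starts out empty. The containers in the Pod may mount the emptyDir volume at the same path or at different paths, but all of them can read and write the same files in it. When the Pod is removed from the node for any reason, the data in the emptyDir volume is deleted permanently. In other words, an emptyDir volume has the same lifetime as its Pod.

Use cases for emptyDir:

- Mainly sharing: files shared among the containers of one Pod (an emptyDir is not visible to other Pods).
- Scratch space, for example for a disk-based merge sort.
- Checkpoints for a long computation, so it can resume from the state it had before a crash.
- Holding files that a content-manager container fetches while a web server container serves the data.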

[root@k8s-master ~]# mkdir volumes

[root@k8s-master volumes]# vim pod1.yml

apiVersion: v1
kind: Pod
metadata:
  name: vol1                    # Pod name
spec:
  containers:
  - image: busyboxplus:latest   # image to run
    name: vm1                   # container name
    command:
    - /bin/sh
    - -c
    - sleep 30000000
    volumeMounts:
    - mountPath: /cache         # mount point
      name: cache-vol           # name of the volume to mount
  - image: nginx:latest
    name: vm2
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      # files written here are stored in cache-vol, so they also appear under /cache in the busyboxplus container, and vice versa
      name: cache-vol
  volumes:                      # declare the volumes
  - name: cache-vol             # volume name
    emptyDir:                   # volume type
      medium: Memory            # back the volume with memory
      sizeLimit: 100Mi          # size limit

[root@k8s-master volumes]# kubectl describe pod vol1

# Enter the container and check

[root@k8s-master volumes]# kubectl exec -it pods/vol1 -c vm1 -- /bin/sh

/ # ls

bin dev home lib64 media opt root sbin tmp var

cache etc lib linuxrc mnt proc run sys usr

/ # cd cache/

/cache # echo gaoyingjie > index.html

/cache # ls

index.html

/cache # curl localhost    # busybox and nginx run in the same Pod and share its network stack, so localhost reaches nginx

gaoyingjie

/cache #

/cache # dd if=/dev/zero of=bigfile bs=1M count=99    # the volume is limited to 100Mi; test the limit

99+0 records in

99+0 records out

/cache # dd if=/dev/zero of=bigfile bs=1M count=100

100+0 records in

99+1 records out

/cache # dd if=/dev/zero of=bigfile bs=1M count=101    # more than 100 MiB: no space left

dd: writing 'bigfile': No space left on device

101+0 records in

99+1 records out

/cache #

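(2) hostPath volumes

Purpose:

A hostPath volume mounts a file or directory from the node's filesystem into the Pod, and it is not deleted when the Pod is removed. In other words, some of the Pod's data is kept on the node itself.

Some uses of hostPath:

- Running a container that needs access to Docker engine internals: mount /var/lib/docker.
- Running cAdvisor (monitoring) in a container: mount /sys with hostPath.
- Letting a Pod specify whether a given hostPath must exist before the Pod runs, whether it should be created, and what kind of object it should be.

Risks of hostPath:

- Pods with identical configuration (for example created from a podTemplate) can behave differently on different nodes because the files on the nodes differ.
- When Kubernetes adds resource-aware scheduling as planned, that scheduling will not be able to account for resources used by hostPath.
- Files or directories created on the underlying host are writable only by root. You must either run the process as root in a privileged container or change the file permissions on the host so the container can write to the hostPath volume.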

[root@k8s-master volumes]# vim pod2.yml

apiVersion: v1
kind: Pod
metadata:
  name: vol1
spec:
  containers:
  - image: nginx:latest
    name: vm1
    volumeMounts:
    - mountPath: /usr/share/nginx/html   # mount point: the host's /data appears here
      name: cache-vol
  volumes:
  - name: cache-vol             # declare the volume
    hostPath:                   # volume type
      path: /data               # path on the host
      type: DirectoryOrCreate   # create /data if it does not exist

[root@k8s-master volumes]# kubectl apply -f pod2.yml

pod/vol1 created

[root@k8s-master volumes]# kubectl get -o wide pods

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

vol1 1/1 Running 0 21s 10.244.36.85 k8s-node1 <none> <none>

# The Pod runs on k8s-node1, so the /data directory is on k8s-node1

[root@k8s-node1 ~]# ll /data

total 0

[root@k8s-node1 ~]# cd /data

[root@k8s-node1 data]# echo gaoyingjie > index.html    # write some content

[root@k8s-node1 data]# ls

index.html

[root@k8s-master volumes]# curl 10.244.36.85    # the Pod serves the node's /data through /usr/share/nginx/html

gaoyingjie

# After the Pod is deleted

[root@k8s-master volumes]# kubectl delete -f pod2.yml --force

# the hostPath directory on the node is not deleted

[root@k8s-node1 data]# ls

index.html

[root@k8s-node1 data]# cat index.html

gaoyingjie

[root@k8s-node1 data]#

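(3) nfs volumes

An nfs volume mounts a directory from an existing NFS server into a Pod. This is very useful for sharing data among several Pods or for storing data persistently.

For example, when several containers need access to the same data set, or container data must be persisted to external storage, an NFS volume is a convenient solution.

Why it matters: another host provides the storage, so the k8s cluster and the cluster's data live on different hosts, which separates storage from the cluster.

Preparation:

Install nfs-utils on all 4 hosts. 172.25.254.250 is the NFS server, and the other hosts need the client tools to access it.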

[root@docker-harbor ~]# dnf install nfs-utils -y

[root@docker-harbor rhel9]# systemctl enable --now nfs-server.service

[root@docker-harbor rhel9]# systemctl status nfs-server.service

# Edit the exports file

[root@docker-harbor ~]# vim /etc/exports

/nfsdata *(rw,sync,no_root_squash)

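# rw: read-write; sync: reply only after data is committed to stable storage (async replies earlier, which is faster but can lose data on a crash); no_root_squash: root on the client keeps root privileges on the share, needed here because /nfsdata requires root access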

[root@docker-harbor ~]# exportfs -rv

exporting *:/nfsdata

# With the firewall disabled, every host can see the export

[root@docker-harbor ~]# showmount -e 172.25.254.250

Export list for 172.25.254.250:

/nfsdata *

[root@k8s-master ~]# showmount -e 172.25.254.250

Export list for 172.25.254.250:

/nfsdata *

Deploy an nfs volume:

[root@k8s-master volumes]# vim pod3.yml

apiVersion: v1
kind: Pod
metadata:
  name: vol1
spec:
  containers:
  - image: nginx:latest
    name: vm1
    volumeMounts:
    - mountPath: /usr/share/nginx/html   # mount point of the volume
      name: cache-vol
  volumes:                    # declare the volumes
  - name: cache-vol           # volume name
    nfs:                      # an nfs volume
      server: 172.25.254.250  # IP of the NFS server
      path: /nfsdata          # directory exported by the NFS server

# When the Pod starts, the NFS server's /nfsdata is mounted at /usr/share/nginx/html in the container

[root@k8s-master volumes]# kubectl apply -f pod3.yml

pod/vol1 created

[root@k8s-master volumes]# kubectl describe pod vol1

# /nfsdata is still empty, so requesting nginx's document root returns 403

[root@k8s-master volumes]# curl 10.244.36.87

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx/1.27.1</center>

</body>

</html>

# Write content into /nfsdata on the NFS server

[root@docker-harbor ~]# cd /nfsdata/

[root@docker-harbor nfsdata]# echo gaoyingjie > index.html

[root@docker-harbor nfsdata]# ls

index.html

# Request again: the page content is now served

[root@k8s-master volumes]# curl 10.244.36.87

gaoyingjie

Dynamic volumes: persistent volumes, where the volume can be created automatically when it is requested.

(4) Persistent volumes

Static PVs:

The administrator creates the PVs manually. Only then can PVCs be created, and a Pod uses a PVC to claim a PV.

[root@k8s-master pvc]# vim pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1                          # PV name
spec:
  capacity:
    storage: 5Gi                     # PV size
  volumeMode: Filesystem             # filesystem volume
  accessModes:
  - ReadWriteOnce                    # access mode: read-write by a single node
  persistentVolumeReclaimPolicy: Retain   # reclaim policy: keep the data
  storageClassName: nfs              # storage class nfs
  nfs:
    path: /nfsdata/pv1               # directory on the NFS server that backs pv1
    server: 172.25.254.250
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
spec:
  capacity:
    storage: 15Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteMany                    # read-write by many nodes
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/pv2
    server: 172.25.254.250
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv3
spec:
  capacity:
    storage: 25Gi
  volumeMode: Filesystem
  accessModes:
  - ReadOnlyMany                     # read-only by many nodes
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/pv3
    server: 172.25.254.250

[root@k8s-master pvc]# kubectl apply -f pv.yml

persistentvolume/pv1 unchanged

persistentvolume/pv2 unchanged

persistentvolume/pv3 created

[root@k8s-master pvc]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

pv1 5Gi RWO Retain Available nfs <unset> 37s

pv2 15Gi RWX Retain Available nfs <unset> 37s

pv3 25Gi ROX Retain Available nfs <unset>

[root@k8s-master pvc]# vim pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1          # any name; binding only depends on whether a PV matches the claim's class, access mode and requested size
spec:
  storageClassName: nfs
  accessModes:
  - ReadWriteOnce     # the PV must offer this access mode
  resources:
    requests:
      storage: 1Gi    # must not exceed the PV's capacity (1Gi fits in pv1's 5Gi)
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
spec:
  storageClassName: nfs
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
spec:
  storageClassName: nfs
  accessModes:
  - ReadOnlyMany
  resources:
    requests:
      storage: 15Gi

[root@k8s-master pvc]# kubectl apply -f pvc.yml

persistentvolumeclaim/pvc1 created

persistentvolumeclaim/pvc2 created

persistentvolumeclaim/pvc3 created

[root@k8s-master pvc]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

pvc1 Bound pv1 5Gi RWO nfs <unset> 10s

pvc2 Bound pv2 15Gi RWX nfs <unset> 10s

pvc3 Bound pv3 25Gi ROX nfs <unset> 10s
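The bindings above follow a simple rule. A PV is a candidate if it has the same storageClassName, offers the requested access mode and has at least the requested capacity, and the controller prefers the smallest candidate. The sketch below is a simplified awk model of that rule, using the three PVs from pv.yml. `match_pv` is hypothetical, sizes are whole Gi, and label selectors, volumeMode and already-bound PVs are ignored.

```shell
# Simplified model of PVC -> PV matching. Input lines: NAME CLASS MODES SIZE_GI
# (MODES is a comma-separated list such as RWO or RWO,ROX).
# match_pv CLASS MODE REQUEST_GI prints the smallest matching PV, or fails,
# which corresponds to a PVC that stays Pending. match_pv is hypothetical.
match_pv() {
    awk -v c="$1" -v m="$2" -v s="$3" '
        $2 == c && index("," $3 ",", "," m ",") && $4 + 0 >= s + 0 {
            if (best == "" || $4 + 0 < size) { best = $1; size = $4 + 0 }
        }
        END { if (best == "") exit 1; print best }'
}

pvs='pv1 nfs RWO 5
pv2 nfs RWX 15
pv3 nfs ROX 25'

printf '%s\n' "$pvs" | match_pv nfs RWO 1     # pv1
printf '%s\n' "$pvs" | match_pv nfs RWX 10    # pv2
printf '%s\n' "$pvs" | match_pv nfs ROX 15    # pv3
printf '%s\n' "$pvs" | match_pv nfs RWX 20 || echo "no matching PV: the claim stays Pending"
```

The first three calls reproduce the bindings shown above. The last one asks for more than any RWX volume offers, which is the case where `kubectl get pvc` would report Pending.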

[root@k8s-master pvc]# vim pod1.yml

apiVersion: v1
kind: Pod
metadata:
  name: gaoyingjie
spec:
  containers:
  - image: nginx
    name: nginx
    volumeMounts:
    - mountPath: /usr/share/nginx/html   # pv1 is mounted here, so this directory serves pv1's content
      name: vol1
  volumes:                     # declare the volumes
  - name: vol1
    persistentVolumeClaim:     # claim a persistent volume; pvc1 is bound to pv1
      claimName: pvc1

[root@k8s-master pvc]# kubectl apply -f pod1.yml

pod/gaoyingjie created

[root@k8s-master pvc]# kubectl get pods

NAME READY STATUS RESTARTS AGE

gaoyingjie 1/1 Running 0 10s

[root@k8s-master pvc]# kubectl describe pods gaoyingjie    # while pv1 is empty, the page cannot be served

[root@k8s-master pvc]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gaoyingjie 1/1 Running 0 6m8s 10.244.36.88 k8s-node1 <none> <none>

[root@k8s-master pvc]# curl 10.244.36.88

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx/1.27.1</center>

</body>

</html>

# Write content into pv1 on the NFS server

[root@docker-harbor nfsdata]# echo gaoyingjie > pv1/index.html

# The written content is now served

[root@k8s-master pvc]# curl 10.244.36.88

gaoyingjie

# Inside the container, pv1's content is mounted at /usr/share/nginx/html/

[root@k8s-master pvc]# kubectl exec -it pods/gaoyingjie -- /bin/bash

root@gaoyingjie:/# cd /usr/share/nginx/html/

root@gaoyingjie:/usr/share/nginx/html# ls

index.html

root@gaoyingjie:/usr/share/nginx/html# cat index.html

gaoyingjie

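6.4 Storage classes

- A StorageClass provides a way to describe "classes" of storage. Different classes may map to different quality-of-service levels, backup policies or other policies.
- Each StorageClass contains the fields provisioner, parameters and reclaimPolicy, which are used when a PersistentVolume of that class has to be provisioned dynamically.

Dynamic PVs:

When a PVC is created, the storage class is called to create the volume directory automatically. If the PVC names no storage class, the default storage class is used, provided one exists; otherwise the PVC stays Pending. In short, the storage class generates the PV for the PVC automatically.

(1) Cluster authorization (RBAC)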

[root@k8s-master storageclass]# vim rbac.yml

apiVersion: v1
kind: Namespace
metadata:
  name: nfs-client-provisioner    # namespace
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: nfs-client-provisioner
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: nfs-client-provisioner
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master storageclass]# kubectl apply -f rbac.yml
namespace/nfs-client-provisioner created
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

# Check the ServiceAccounts
[root@k8s-master storageclass]# kubectl -n nfs-client-provisioner get sa
NAME                     SECRETS   AGE
default                  0         18s
nfs-client-provisioner   0         19s

Volumes are created automatically by a provisioner, which itself runs as a Pod.

(2) Deployment for the provisioner

[root@k8s-master storageclass]# vim deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: nfs-client-provisioner      # namespace
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner   # provisioner name, referenced by the StorageClass
            - name: NFS_SERVER
              value: 172.25.254.250
            - name: NFS_PATH             # NFS_SERVER and NFS_PATH tell the provisioner which export to use
              value: /nfsdata
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.25.254.250       # IP of the NFS server
            path: /nfsdata               # exported NFS directory

# Push the image to the registry
[root@docker-harbor ~]# docker load -i nfs-subdir-external-provisioner-4.0.2.tar
1a5ede0c966b: Loading layer 3.052MB/3.052MB
ad321585b8f5: Loading layer 42.02MB/42.02MB
Loaded image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
[root@docker-harbor ~]# docker tag registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 reg.gaoyingjie.org/sig-storage/nfs-subdir-external-provisioner:v4.0.2
[root@docker-harbor ~]# docker push reg.gaoyingjie.org/sig-storage/nfs-subdir-external-provisioner:v4.0.2
The push refers to repository [reg.gaoyingjie.org/sig-storage/nfs-subdir-external-provisioner]
ad321585b8f5: Pushed
1a5ede0c966b: Pushed
v4.0.2: digest: sha256:f741e403b3ca161e784163de3ebde9190905fdbf7dfaa463620ab8f16c0f6423 size: 739

[root@k8s-master storageclass]# kubectl apply -f deployment.yml
[root@k8s-master storageclass]# kubectl get -n nfs-client-provisioner deployments.apps

(3) Create the StorageClass

[root@k8s-master storageclass]# vim class.yml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "false"   #when a provisioned volume is deleted: "true" keeps its NFS directory as an archived- copy, "false" deletes it
[root@k8s-master storageclass]# kubectl apply -f class.yml
[root@k8s-master storageclass]# kubectl get -n nfs-client-provisioner storageclasses.storage.k8s.io
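The `archiveOnDelete` parameter takes effect when a dynamically provisioned PV is deleted, for example after its PVC is removed under the default Delete reclaim policy. It does not take effect when the StorageClass itself is deleted. The Python sketch below models the behavior described for nfs-subdir-external-provisioner under these assumptions:

- `"false"` removes the directory.
- A missing value or `"true"` keeps the directory and renames it with an `archived-` prefix.
- Values are parsed like Go's `strconv.ParseBool`.

The function `on_delete` is hypothetical. It works on a local temporary directory instead of the `/persistentvolumes` mount. Rejecting any other value is a simplification made by this sketch.

```python
import os
import shutil
import tempfile

# Spellings accepted by Go's strconv.ParseBool (assumed parsing rule).
TRUE_VALUES = {"1", "t", "T", "TRUE", "true", "True"}
FALSE_VALUES = {"0", "f", "F", "FALSE", "false", "False"}


def on_delete(mount_path, dir_name, parameters):
    """Hypothetical model of what happens to a PV's directory on PV deletion.

    Returns the archive path, or None when the directory was removed.
    """
    old_path = os.path.join(mount_path, dir_name)
    value = parameters.get("archiveOnDelete")
    if value is not None and value not in TRUE_VALUES | FALSE_VALUES:
        raise ValueError(f"unsupported archiveOnDelete value: {value!r}")
    if value in FALSE_VALUES:
        shutil.rmtree(old_path)  # "false": the data is deleted
        return None
    # Missing or "true": the data is kept under an archived- name.
    archive_path = os.path.join(mount_path, "archived-" + dir_name)
    os.rename(old_path, archive_path)
    return archive_path


with tempfile.TemporaryDirectory() as root:
    name = "default-test-claim-pvc-0000"  # hypothetical directory name
    os.mkdir(os.path.join(root, name))
    on_delete(root, name, {"archiveOnDelete": "false"})
    print(os.listdir(root))  # []
    os.mkdir(os.path.join(root, name))
    on_delete(root, name, {"archiveOnDelete": "true"})
    print(os.listdir(root))  # ['archived-default-test-claim-pvc-0000']
```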

(4) Create a PVC

[root@k8s-master storageclass]# vim pvc.yml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G
[root@k8s-master storageclass]# kubectl apply -f pvc.yml
persistentvolumeclaim/test-claim created
[root@k8s-master storageclass]# kubectl get pvc

On the NFS server you can see the directory created automatically for the PV:

(5) Create a test pod

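The pod volume named nfs-pvc is mounted at /mnt. It is backed by the PVC test-claim, which requests its storage from the StorageClass. The StorageClass provisions storage dynamically. The provisioner, which runs in the Deployment above, creates a directory on the NFS server for the claim, for example default-test-claim-pvc-10b58d9a-c212-4f01-b5c3-b6428d42ba1b.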
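The example directory name can be broken into its parts. This assumes the provisioner's default layout, with no `pathPattern` parameter set. In that layout the directory is named `<namespace>-<PVC name>-<PV name>`, and a dynamically provisioned PV is named `pvc-<PVC UID>`. A small Python sketch of that naming follows; the helper names are hypothetical.

```python
def pv_name(claim_uid: str) -> str:
    """Dynamically provisioned PVs are named "pvc-" + the PVC's UID."""
    return "pvc-" + claim_uid


def nfs_dir_name(namespace: str, claim_name: str, claim_uid: str) -> str:
    """Default directory layout: <namespace>-<PVC name>-<PV name>."""
    return "-".join([namespace, claim_name, pv_name(claim_uid)])


print(nfs_dir_name("default", "test-claim", "10b58d9a-c212-4f01-b5c3-b6428d42ba1b"))
# default-test-claim-pvc-10b58d9a-c212-4f01-b5c3-b6428d42ba1b
```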

[root@k8s-master storageclass]# vim pod.yml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox                 #run a busybox container
      command:
        - "/bin/sh"                  #command
      args:                          #arguments
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"   #writes into the directory created for the PVC
      volumeMounts:
        - name: nfs-pvc              #name of the pod volume to mount
          mountPath: "/mnt"
  restartPolicy: "Never"             #run once and exit; the pod is not restarted
  volumes:
    - name: nfs-pvc                  #pod volume backed by the PVC below
      persistentVolumeClaim:
        claimName: test-claim        #use the PVC named test-claim
[root@k8s-master storageclass]# kubectl apply -f pod.yml
pod/test-pod created
[root@k8s-master storageclass]# kubectl get pods

On the NFS server, the SUCCESS file appears in the directory created for the PVC:

(6) Default StorageClass

The StorageClass defined above is named nfs-client. Suppose the PVC does not specify nfs-client:

[root@k8s-master storageclass]# vim pvc.yml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  #storageClassName: nfs-client   #removed
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G
[root@k8s-master storageclass]# kubectl apply -f pvc.yml
persistentvolumeclaim/test-claim created
[root@k8s-master storageclass]# kubectl get pvc

The PVC stays Pending because no default StorageClass exists. A default StorageClass must therefore be set, so that a PVC that names no class uses it.
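The effect of a default StorageClass can be modeled in a few lines. Roughly, when a PVC omits `storageClassName`, the DefaultStorageClass admission plugin fills in the class annotated `storageclass.kubernetes.io/is-default-class: "true"`. Recent Kubernetes versions pick the most recently created one if several classes are marked. With no default, the field stays empty and the claim above remains Pending. The Python sketch below is a simplification with hypothetical helper names and made-up timestamps. It is not the admission plugin's code.

```python
from datetime import datetime

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def pick_default_class(classes):
    """Return the default StorageClass name, or None if none is marked default."""
    defaults = [c for c in classes if c["annotations"].get(DEFAULT_ANNOTATION) == "true"]
    if not defaults:
        return None
    # Several defaults: the most recently created one wins.
    return max(defaults, key=lambda c: c["created"])["name"]


def admit_claim(claim, classes):
    """Fill in storageClassName for a claim that omits it (simplified)."""
    if claim.get("storageClassName") is None:
        default = pick_default_class(classes)
        if default is not None:
            return {**claim, "storageClassName": default}
    return claim


nfs_client = {"name": "nfs-client", "annotations": {}, "created": datetime(2024, 1, 1)}
claim = {"name": "test-claim"}
print(admit_claim(claim, [nfs_client]))  # no default: storageClassName stays unset
nfs_client["annotations"][DEFAULT_ANNOTATION] = "true"
print(admit_claim(claim, [nfs_client]))  # now storageClassName is nfs-client
```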

Make nfs-client the default StorageClass:

[root@k8s-master storageclass]# kubectl edit sc nfs-client
#add the annotation under metadata:
metadata:
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
[root@k8s-master storageclass]# kubectl get sc
[root@k8s-master storageclass]# kubectl apply -f pvc.yml
persistentvolumeclaim/test-claim created
[root@k8s-master storageclass]# kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECL
test-claim   Bound    pvc-3b3c6e40-7b8b-4c41-811a-3e081af9866b   1G         RWX            nfs-clien

The StorageClass nfs-client is now marked as the default, shown as (default):

The PVC that names no StorageClass is now Bound, because it uses the default StorageClass:

6.5. The StatefulSet controller

(1) Features

-

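StatefulSet is designed to manage stateful services.

-

A StatefulSet abstracts application state into two kinds:

-

Topology state: application instances must start in a particular order, and a re-created Pod must keep the same network identity as the Pod it replaces.

-

Storage state: each instance of the application is bound to its own storage data.

-

A StatefulSet numbers all of its Pods as $(statefulset name)-$(ordinal), starting from 0.

-

When a Pod is deleted and re-created, its network identity does not change. The Pod's topology state is pinned by its "name + ordinal", and each Pod gets a fixed, unique entry point: its own DNS record.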
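The naming rule and the per-Pod DNS record can be written out explicitly. The Python sketch below uses the StatefulSet `web`, the headless Service `nginx-svc` and the namespace `default` from later in this section. It also assumes the default cluster domain `cluster.local`, which a cluster can configure differently.

```python
def statefulset_pod_names(sts_name: str, replicas: int) -> list[str]:
    """Pods are named $(statefulset name)-$(ordinal), ordinals starting at 0."""
    return [f"{sts_name}-{ordinal}" for ordinal in range(replicas)]


def pod_dns_name(pod: str, service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Stable per-Pod record published through the governing headless Service."""
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


for pod in statefulset_pod_names("web", 3):
    print(pod, "->", pod_dns_name(pod, "nginx-svc"))
# web-0 -> web-0.nginx-svc.default.svc.cluster.local
# web-1 -> web-1.nginx-svc.default.svc.cluster.local
# web-2 -> web-2.nginx-svc.default.svc.cluster.local
```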

(2) Components of a StatefulSet

-

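Headless Service: defines the Pods' network identities and generates resolvable DNS records.

-

volumeClaimTemplates: a template (PVC name and size) from which a PVC is created automatically for each Pod; the PVCs are provisioned by a StorageClass.

-

StatefulSet: manages the Pods.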
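The PVC names produced from `volumeClaimTemplates` follow the pattern `<template name>-<StatefulSet name>-<ordinal>`. The controller only creates a claim that does not already exist, so a re-created Pod picks up its old claim. Below is a Python sketch of that reconciliation step; `missing_claims` is a hypothetical helper, not controller code.

```python
def claim_name(template: str, sts_name: str, ordinal: int) -> str:
    """PVC name for one volumeClaimTemplate of one Pod: <template>-<sts>-<ordinal>."""
    return f"{template}-{sts_name}-{ordinal}"


def missing_claims(existing: set[str], templates: list[str], sts_name: str,
                   replicas: int) -> list[str]:
    """Claims that would still have to be created; existing ones are reused."""
    wanted = [claim_name(t, sts_name, i) for i in range(replicas) for t in templates]
    return [name for name in wanted if name not in existing]


# First start: nothing exists yet, so all three claims are created.
print(missing_claims(set(), ["www"], "web", 3))  # ['www-web-0', 'www-web-1', 'www-web-2']
# After scaling to 0 and back to 3, the claims still exist, so none are created.
print(missing_claims({"www-web-0", "www-web-1", "www-web-2"}, ["www"], "web", 3))  # []
```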

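Communication inside the cluster goes through the cluster's built-in DNS. Pods created by a Deployment are stateless, and their names are random. For example, in test-db99b9599-zwmdg, db99b9599 is the pod-template-hash that also names the ReplicaSet, and zwmdg is a random suffix for the Pod. When a Pod is re-created, both its name and its IP address change. If the Pods are deleted and started again, there is no stable mapping between a Pod and the directory created through the StorageClass for its PVC. A StatefulSet is therefore needed to ensure that a restarted Pod mounts the same PV as before.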
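The difference in naming can be illustrated with a short sketch. It assumes the usual `generateName` behavior, in which a Deployment's Pod is named `<deployment>-<pod-template-hash>-<5 random characters>`. The character set below is assumed to match the one in Kubernetes' `k8s.io/apimachinery/pkg/util/rand` package. The deployment name `test` and the hash `db99b9599` come from the example above.

```python
import random

# Assumed to match the alphabet of k8s.io/apimachinery/pkg/util/rand
# (no vowels and no easily confused characters).
ALPHANUMS = "bcdfghjklmnpqrstvwxz2456789"


def deployment_pod_name(deployment: str, template_hash: str, rng: random.Random) -> str:
    """<deployment>-<pod-template-hash>-<5 random chars>: changes on every re-creation."""
    suffix = "".join(rng.choice(ALPHANUMS) for _ in range(5))
    return f"{deployment}-{template_hash}-{suffix}"


def statefulset_pod_name(sts_name: str, ordinal: int) -> str:
    """$(statefulset name)-$(ordinal): identical every time the Pod is re-created."""
    return f"{sts_name}-{ordinal}"


rng = random.Random()
print(deployment_pod_name("test", "db99b9599", rng))  # e.g. test-db99b9599-<random>
print(statefulset_pod_name("web", 0))                 # web-0
```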

(3) Build the StatefulSet

#Create the headless Service
[root@k8s-master statefulset]# vim headless.yml
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None      #no cluster IP is allocated (headless Service)
  selector:
    app: nginx
[root@k8s-master storageclass]# kubectl apply -f headless.yml

#Create the StatefulSet
[root@k8s-master statefulset]# vim statefulset.yml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx-svc"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html   #the volume is nginx's document root;
                                                 #it ends up on the directory the StorageClass creates for the PVC
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        storageClassName: nfs-client   #StorageClass used by the PVCs
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 1Gi
[root@k8s-master storageclass]# kubectl apply -f statefulset.yml

The Pod names follow a predictable pattern:

On the NFS server, three directories have been created automatically under the export directory:

(4) Test

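On the NFS server, create an index.html file in each of the three directories under /nfsdata. Each Pod's nginx document root is mounted from one of these directories.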

[root@docker-harbor nfsdata]# echo web0 > default-test-claim-pvc-8ea9fa4f-77e6-4bc1-bcf8-c461b010cb39/index.html
[root@docker-harbor nfsdata]# echo web0 > default-www-web-0-pvc-95becd2f-752c-4dda-b577-98142eb503a2/index.html
[root@docker-harbor nfsdata]# echo web1 > default-www-web-1-pvc-ce2cc172-747c-494d-8df8-1390555d52a1/index.html
[root@docker-harbor nfsdata]# echo web2 > default-www-web-2-pvc-b701c3f5-6f6b-475f-b8dd-55e6f57e40a0/index.html

Accessing the service from inside the cluster shows the content written above:

(5) Scaling a StatefulSet

After the StatefulSet is deleted and re-created, the Pod names do not change, and the service returns the same content as before.

Scaling Pods down or up does not change the Pod names either, which simplifies communication inside the cluster.

[root@k8s-master storageclass]# kubectl scale statefulset web --replicas 0
[root@k8s-master storageclass]# kubectl scale statefulset web --replicas 3
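With the default `podManagementPolicy: OrderedReady`, scaling happens in order. Pods are created from the lowest ordinal up, each one only after the previous Pod is Running and Ready, and they are removed from the highest ordinal down. Below is a Python sketch of the resulting order for the two commands above. Waiting for readiness is not modeled, and `scale_plan` is hypothetical.

```python
def scale_plan(sts_name: str, current: int, desired: int) -> list[tuple[str, str]]:
    """Order of Pod operations when scaling from `current` to `desired` replicas."""
    if desired >= current:
        # Scale up: create the missing ordinals in ascending order.
        return [("create", f"{sts_name}-{i}") for i in range(current, desired)]
    # Scale down: delete from the highest ordinal down to `desired`.
    return [("delete", f"{sts_name}-{i}") for i in range(current - 1, desired - 1, -1)]


print(scale_plan("web", 3, 0))
# [('delete', 'web-2'), ('delete', 'web-1'), ('delete', 'web-0')]
print(scale_plan("web", 0, 3))
# [('create', 'web-0'), ('create', 'web-1'), ('create', 'web-2')]
```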

The directories still exist. When the Pods start again, each Pod mounts its own one of these three directories again.

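The StatefulSet is only a controller: after it is scaled down or deleted, the directories remain. As long as the PVCs exist, the data in the directories is kept. Removing it requires deleting the PVCs manually.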
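Whether the PVCs survive is governed by the StatefulSet's `persistentVolumeClaimRetentionPolicy`. Its fields `whenDeleted` and `whenScaled` both default to `Retain`, which gives the behavior seen here. The field exists in recent Kubernetes releases and has been beta and enabled by default since 1.27. The Python sketch below models which claims would be removed. The helper and the data layout are hypothetical, and the real controller does more bookkeeping.

```python
def claims_to_delete(policy, event, claims_by_ordinal, new_replicas=0):
    """Which PVCs a retention policy would remove (simplified model).

    policy: {"whenDeleted": "Retain"|"Delete", "whenScaled": "Retain"|"Delete"}
    event:  "deleted" (StatefulSet deleted) or "scaled" (scaled down to new_replicas)
    claims_by_ordinal: {ordinal: [PVC names of that Pod]}
    """
    when_deleted = policy.get("whenDeleted", "Retain")  # default: keep claims
    when_scaled = policy.get("whenScaled", "Retain")    # default: keep claims
    doomed = []
    for ordinal in sorted(claims_by_ordinal):
        if event == "deleted" and when_deleted == "Delete":
            doomed.extend(claims_by_ordinal[ordinal])
        elif event == "scaled" and when_scaled == "Delete" and ordinal >= new_replicas:
            doomed.extend(claims_by_ordinal[ordinal])
    return doomed


claims = {0: ["www-web-0"], 1: ["www-web-1"], 2: ["www-web-2"]}
print(claims_to_delete({}, "scaled", claims, new_replicas=0))  # [] (default Retain)
print(claims_to_delete({"whenScaled": "Delete"}, "scaled", claims, new_replicas=1))
# ['www-web-1', 'www-web-2']
```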

Delete the PVCs:

7. Network communication in k8s

7.1. flannel

-

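When a container sends an IP packet, the packet travels through the veth pair to the cni bridge and is then routed to the local flannel.1 device for processing.

-

VTEP devices communicate with each other using layer-2 frames. After the source VTEP receives the original IP packet, it adds a destination MAC address, encapsulating the packet into an inner frame that is sent to the destination VTEP.

-

The inner frame cannot travel over the hosts' layer-2 network as is. The Linux kernel must further encapsulate it into an ordinary frame of the host, which carries the inner frame out through the host's eth0.

-

Linux prepends a VXLAN header to the inner frame. The VXLAN header carries an important field called the VNI, which a VTEP uses to decide whether a frame is meant for it.

-

A flannel.1 device only knows the MAC address of the flannel.1 device on the other end, not the address of the host that device lives on. In the Linux kernel, network devices forward according to the FDB (forwarding database). The FDB entries for the flannel.1 device are maintained by the flanneld process.

-

The Linux kernel then prepends a layer-2 frame header to the outer IP packet and fills in the destination node's MAC address, which it obtains from the host's ARP table.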

-