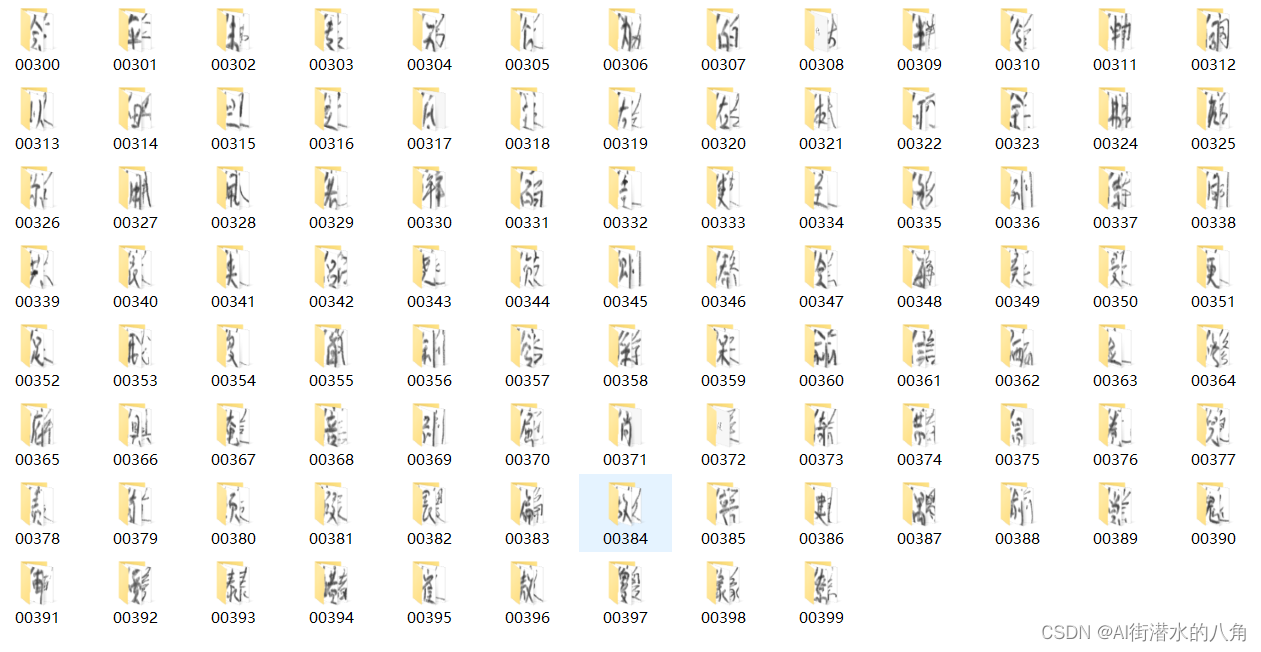

Step 1: Prepare the data

The dataset contains 100 handwritten Chinese characters: 会伞伟传伤伦伪伯估伴伶伸伺似佃但位低住佐佑体何余佛作你佣佩佬佯佰佳使侄侈例侍侗供依侠侣侥侦侧侨侩侮侯侵便促俄俊俏俐俗俘保俞信俩俭修俯俱俺倍倒倔倘候倚借倡倦倪债值倾假偎偏做停健偶偷偿傀傅傍傣储催傲傻像僚
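These 100 characters are the classes behind the model's `num_classes=100`. The post does not show how labels are assigned to class indices. The sketch below simply assumes that index *i* is the *i*-th character in the list above. The names `CLASSES`, `CHAR_TO_IDX` and `decode_prediction` are hypothetical and not taken from the project. A GUI needs a mapping like this to turn the network's 100 output scores back into a character.

```python
# Hypothetical label map: ASSUMES class index i is the i-th character listed in the post.
CLASSES = list(
    "会伞伟传伤伦伪伯估伴伶伸伺似佃但位低住佐佑体何余佛作你佣佩佬佯佰佳使侄侈例侍侗供依侠侣侥侦侧侨侩侮侯侵便促俄俊俏俐俗俘保俞信俩俭修俯俱俺倍倒倔倘候倚借倡倦倪债值倾假偎偏做停健偶偷偿傀傅傍傣储催傲傻像僚"
)
CHAR_TO_IDX = {ch: i for i, ch in enumerate(CLASSES)}


def decode_prediction(scores):
    """Return the character with the highest score (argmax over the 100 outputs)."""
    if len(scores) != len(CLASSES):
        raise ValueError(f"expected {len(CLASSES)} scores, got {len(scores)}")
    best = max(range(len(scores)), key=scores.__getitem__)
    return CLASSES[best]


assert len(CLASSES) == 100 and len(set(CLASSES)) == 100
print(CHAR_TO_IDX["你"])                        # → 26
print(decode_prediction([0.0] * 99 + [1.0]))   # → 僚
```

In the GUI, `scores` would presumably be the model's output for a single image, for example `net(x)[0].tolist()`. If the project actually builds its labels differently (for instance from sorted folder names), the index order must follow that scheme instead.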

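Step 2: Build the model

Here we build a MobileNetV2 network that takes single-channel (grayscale) images and outputs 100 classes.

The reference code is as follows: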

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Block(nn.Module):
    '''expand + depthwise + pointwise'''

    def __init__(self, in_planes, out_planes, expansion, stride):
        super(Block, self).__init__()
        self.stride = stride

        planes = expansion * in_planes
        # 1x1 "expand" convolution: widen the channels by `expansion`
        self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # 3x3 depthwise convolution (groups=planes): one filter per channel
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride, padding=1, groups=planes, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        # 1x1 pointwise projection down to out_planes (no ReLU afterwards)
        self.conv3 = nn.Conv2d(planes, out_planes, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn3 = nn.BatchNorm2d(out_planes)

        # Residual connection (used only when stride == 1);
        # a 1x1 conv matches the channel count when in_planes != out_planes
        self.shortcut = nn.Sequential()
        if stride == 1 and in_planes != out_planes:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=1, padding=0, bias=False),
                nn.BatchNorm2d(out_planes),
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        out = out + self.shortcut(x) if self.stride == 1 else out
        return out


class MobileNetV2(nn.Module):
    # (expansion, out_planes, num_blocks, stride)
    cfg = [(1,  16, 1, 1),
           (6,  24, 2, 1),  # NOTE: change stride 2 -> 1 for CIFAR10
           (6,  32, 3, 2),
           (6,  64, 4, 2),
           (6,  96, 3, 1),
           (6, 160, 3, 2),
           (6, 320, 1, 1)]

    def __init__(self, num_classes=100):
        super(MobileNetV2, self).__init__()
        # NOTE: change conv1 stride 2 -> 1 for CIFAR10
        # in_channels=1: the network expects single-channel (grayscale) images
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.layers = self._make_layers(in_planes=32)
        self.conv2 = nn.Conv2d(320, 1280, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn2 = nn.BatchNorm2d(1280)
        # 1280 inputs assume a 1x1 map after pooling (e.g. a 32x32 input);
        # a 64x64 input would give a 2x2 map, i.e. 1280 * 2 * 2 = 5120 features
        self.linear = nn.Linear(1280, num_classes)

    def _make_layers(self, in_planes):
        layers = []
        for expansion, out_planes, num_blocks, stride in self.cfg:
            # only the first block of each stage uses the stage's stride
            strides = [stride] + [1] * (num_blocks - 1)
            for stride in strides:
                layers.append(Block(in_planes, out_planes, expansion, stride))
                in_planes = out_planes
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.layers(out)
        out = F.relu(self.bn2(self.conv2(out)))
        # NOTE: change pooling kernel_size 7 -> 4 for CIFAR10
        out = F.avg_pool2d(out, 4)
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out
```
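The pooling kernel of 4 and the 1280-wide input of `self.linear` only fit together for certain input resolutions, and the post does not state which one is used. The small pure-Python sketch below traces the feature-map size through the network. It assumes the strides in `cfg` above and 3x3 depthwise convolutions with `padding=1`, and it shows which input sizes give 1280 features. The function names `conv_out` and `trace` are illustrative only.

```python
# Stage strides copied from MobileNetV2.cfg above; conv1 also uses stride 1.
CFG = [(1, 16, 1, 1), (6, 24, 2, 1), (6, 32, 3, 2), (6, 64, 4, 2),
       (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]


def conv_out(size, kernel, stride, padding):
    """Output size along one axis for a conv/pool layer (PyTorch formula, dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(input_size, pool=4, channels=1280):
    """Return (size before pooling, size after pooling, flattened feature count)."""
    size = conv_out(input_size, 3, 1, 1)          # conv1: 3x3, stride 1, padding 1
    for _, _, num_blocks, stride in CFG:
        for s in [stride] + [1] * (num_blocks - 1):
            size = conv_out(size, 3, s, 1)        # depthwise 3x3 conv in each Block
    # the 1x1 convs (Block.conv1/conv3 and conv2) use stride 1 and keep the size
    pooled = conv_out(size, pool, pool, 0)        # F.avg_pool2d(out, 4): stride = kernel
    return size, pooled, channels * pooled * pooled


for n in (32, 64):
    print(n, trace(n))
# 32 (4, 1, 1280)
# 64 (8, 2, 5120)
```

So a 32x32 input matches `nn.Linear(1280, num_classes)`, while a 64x64 input would need 5120 inputs. A common way to make the head independent of input size is `F.adaptive_avg_pool2d(out, 1)`, but that would be a change to the original model.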

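Step 3: Track the training process

For each epoch the script prints a progress bar ending with a loss value (apparently the loss of the last training batch). It then prints a bare number that appears to be the epoch's training time in seconds. Finally it prints a summary line with the epoch's training loss and test accuracy. The log covers 42 epochs. Test accuracy rises from 0.399 after epoch 1 to 0.930 after epoch 42, the best value recorded.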
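To compare epochs or plot curves, you can pull out the per-epoch summary lines of the form `[epoch N] train_loss: X test_accuracy: Y` with a regular expression. This is a minimal sketch that assumes exactly that line format. `parse_log` is a hypothetical helper, not part of the project.

```python
import re

# Matches summary lines such as "[epoch 42] train_loss: 0.019 test_accuracy: 0.930"
EPOCH_RE = re.compile(r"\[epoch (\d+)\] train_loss: ([\d.]+) test_accuracy: ([\d.]+)")


def parse_log(text):
    """Return (epoch, train_loss, test_accuracy) tuples; other lines are ignored."""
    return [(int(e), float(loss), float(acc)) for e, loss, acc in EPOCH_RE.findall(text)]


sample = """
1777.385651155
[epoch 1] train_loss: 3.637 test_accuracy: 0.399
[epoch 34] train_loss: 0.025 test_accuracy: 0.928
[epoch 42] train_loss: 0.019 test_accuracy: 0.930
"""
records = parse_log(sample)
best = max(records, key=lambda r: r[2])
print(best)  # → (42, 0.019, 0.93)
```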

train loss: 100%[**************************************************->]4.095

1777.385651155

[epoch 1] train_loss: 3.637 test_accuracy: 0.399

train loss: 100%[**************************************************->]2.623

788.3096601800003

[epoch 2] train_loss: 1.477 test_accuracy: 0.784

train loss: 100%[**************************************************->]3.320

793.739339316

[epoch 3] train_loss: 0.652 test_accuracy: 0.850

train loss: 100%[**************************************************->]0.815

795.3651353329997

[epoch 4] train_loss: 0.374 test_accuracy: 0.879

train loss: 100%[**************************************************->]2.095

796.7138991949996

[epoch 5] train_loss: 0.237 test_accuracy: 0.883

train loss: 100%[**************************************************->]0.804

796.542634204

[epoch 6] train_loss: 0.155 test_accuracy: 0.879

train loss: 100%[**************************************************->]1.909

790.9162681600001

[epoch 7] train_loss: 0.112 test_accuracy: 0.888

train loss: 100%[**************************************************->]1.926

791.8052450380001

[epoch 8] train_loss: 0.101 test_accuracy: 0.886

train loss: 100%[**************************************************->]0.741

792.4581895470001

[epoch 9] train_loss: 0.076 test_accuracy: 0.894

train loss: 100%[**************************************************->]0.093

795.3900452489997

[epoch 10] train_loss: 0.062 test_accuracy: 0.893

train loss: 100%[**************************************************->]0.968

789.8505018160013

[epoch 11] train_loss: 0.055 test_accuracy: 0.899

train loss: 100%[**************************************************->]0.099

789.6454528190006

[epoch 12] train_loss: 0.061 test_accuracy: 0.892

train loss: 100%[**************************************************->]1.044

789.4033566689995

[epoch 13] train_loss: 0.049 test_accuracy: 0.897

train loss: 100%[**************************************************->]2.218

789.2340884759997

[epoch 14] train_loss: 0.055 test_accuracy: 0.906

train loss: 100%[**************************************************->]0.276

788.954189692

[epoch 15] train_loss: 0.046 test_accuracy: 0.912

train loss: 100%[**************************************************->]0.479

788.7449144220009

[epoch 16] train_loss: 0.035 test_accuracy: 0.905

train loss: 100%[**************************************************->]0.008

789.1742101270011

[epoch 17] train_loss: 0.045 test_accuracy: 0.911

train loss: 100%[**************************************************->]0.214

789.122726865

[epoch 18] train_loss: 0.033 test_accuracy: 0.895

train loss: 100%[**************************************************->]0.008

789.101337797998

[epoch 19] train_loss: 0.043 test_accuracy: 0.908

train loss: 100%[**************************************************->]0.788

789.0350987080019

[epoch 20] train_loss: 0.033 test_accuracy: 0.910

train loss: 100%[**************************************************->]0.431

789.0857053139989

[epoch 21] train_loss: 0.039 test_accuracy: 0.910

train loss: 100%[**************************************************->]0.464

789.2551583959976

[epoch 22] train_loss: 0.037 test_accuracy: 0.911

train loss: 100%[**************************************************->]0.072

789.2582286859979

[epoch 23] train_loss: 0.031 test_accuracy: 0.918

train loss: 100%[**************************************************->]0.007

789.3223936100003

[epoch 24] train_loss: 0.027 test_accuracy: 0.922

train loss: 100%[**************************************************->]0.583

789.4160900979987

[epoch 25] train_loss: 0.027 test_accuracy: 0.917

train loss: 100%[**************************************************->]0.015

789.7534170279978

[epoch 26] train_loss: 0.039 test_accuracy: 0.916

train loss: 100%[**************************************************->]0.013

789.6604947960004

[epoch 27] train_loss: 0.022 test_accuracy: 0.913

train loss: 100%[**************************************************->]1.365

789.5844685500015

[epoch 28] train_loss: 0.026 test_accuracy: 0.913

train loss: 100%[**************************************************->]0.003

789.4390777410008

[epoch 29] train_loss: 0.034 test_accuracy: 0.918

train loss: 100%[**************************************************->]0.015

789.6530912829985

[epoch 30] train_loss: 0.019 test_accuracy: 0.920

train loss: 100%[**************************************************->]0.727

789.4056595289985

[epoch 31] train_loss: 0.024 test_accuracy: 0.913

train loss: 100%[**************************************************->]0.070

789.2102742380011

[epoch 32] train_loss: 0.029 test_accuracy: 0.921

train loss: 100%[**************************************************->]0.093

789.298916153999

[epoch 33] train_loss: 0.022 test_accuracy: 0.927

train loss: 100%[**************************************************->]0.086

789.1393924500007

[epoch 34] train_loss: 0.025 test_accuracy: 0.928

train loss: 100%[**************************************************->]0.172

789.0974727700013

[epoch 35] train_loss: 0.021 test_accuracy: 0.915

train loss: 100%[**************************************************->]0.634

789.2115189659999

[epoch 36] train_loss: 0.021 test_accuracy: 0.924

train loss: 100%[**************************************************->]1.205

789.1811647879986

[epoch 37] train_loss: 0.025 test_accuracy: 0.927

train loss: 100%[**************************************************->]0.034

789.324051919004

[epoch 38] train_loss: 0.022 test_accuracy: 0.926

train loss: 100%[**************************************************->]0.239

789.0316131279978

[epoch 39] train_loss: 0.017 test_accuracy: 0.921

train loss: 100%[**************************************************->]0.543

789.2653705120028

[epoch 40] train_loss: 0.026 test_accuracy: 0.923

train loss: 100%[**************************************************->]0.755

789.288287043004

[epoch 41] train_loss: 0.019 test_accuracy: 0.924

train loss: 100%[**************************************************->]0.012

789.2477201790025

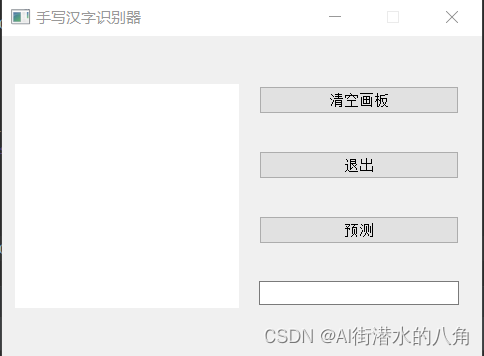

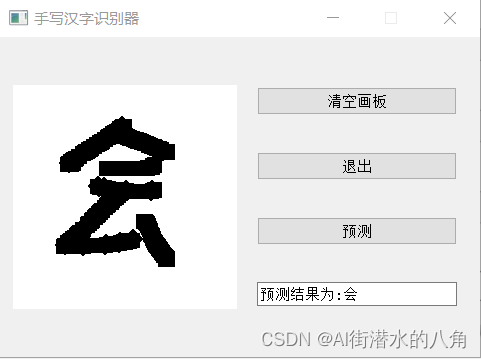

[epoch 42] train_loss: 0.019 test_accuracy: 0.930第四步:搭建GUI界面

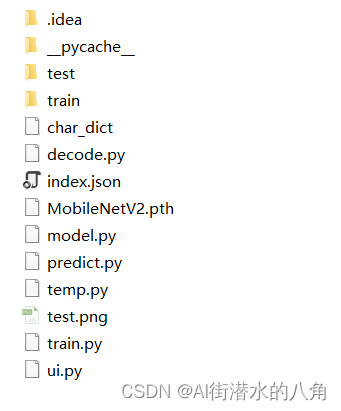

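Step 5: Contents of the full project

The project includes the training code, the trained model and the training log. The data and the GUI code are also provided.

Code download: PyTorch deep neural network handwritten Chinese character recognition system, source code (with GUI and handwriting canvas)

If you have questions, send a private message or leave a comment. Every question gets an answer.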
