先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

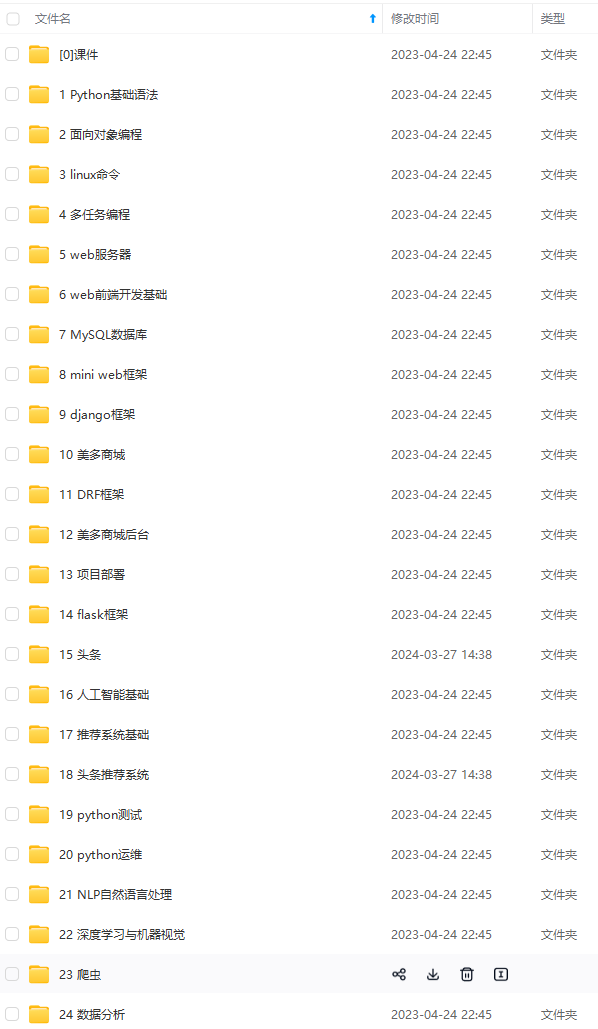

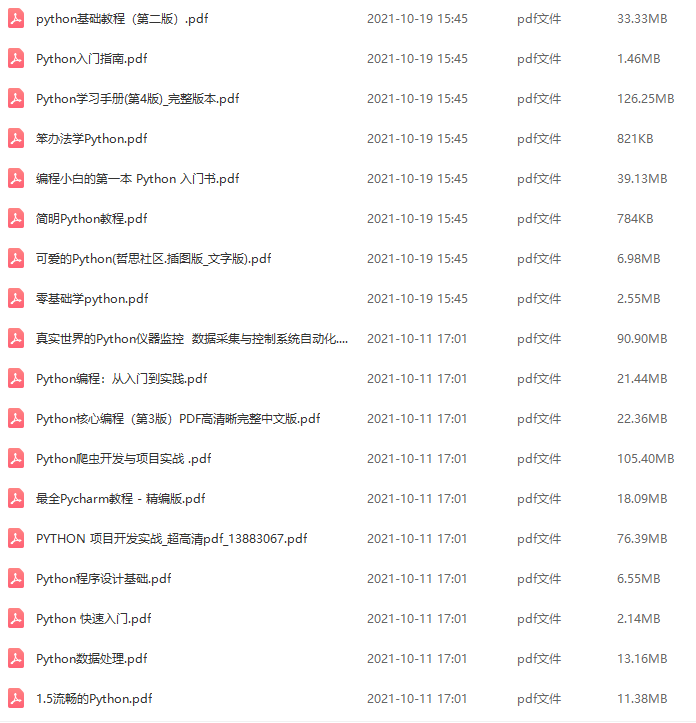

因此收集整理了一份《2024年最新Python全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Python知识点,真正体系化!

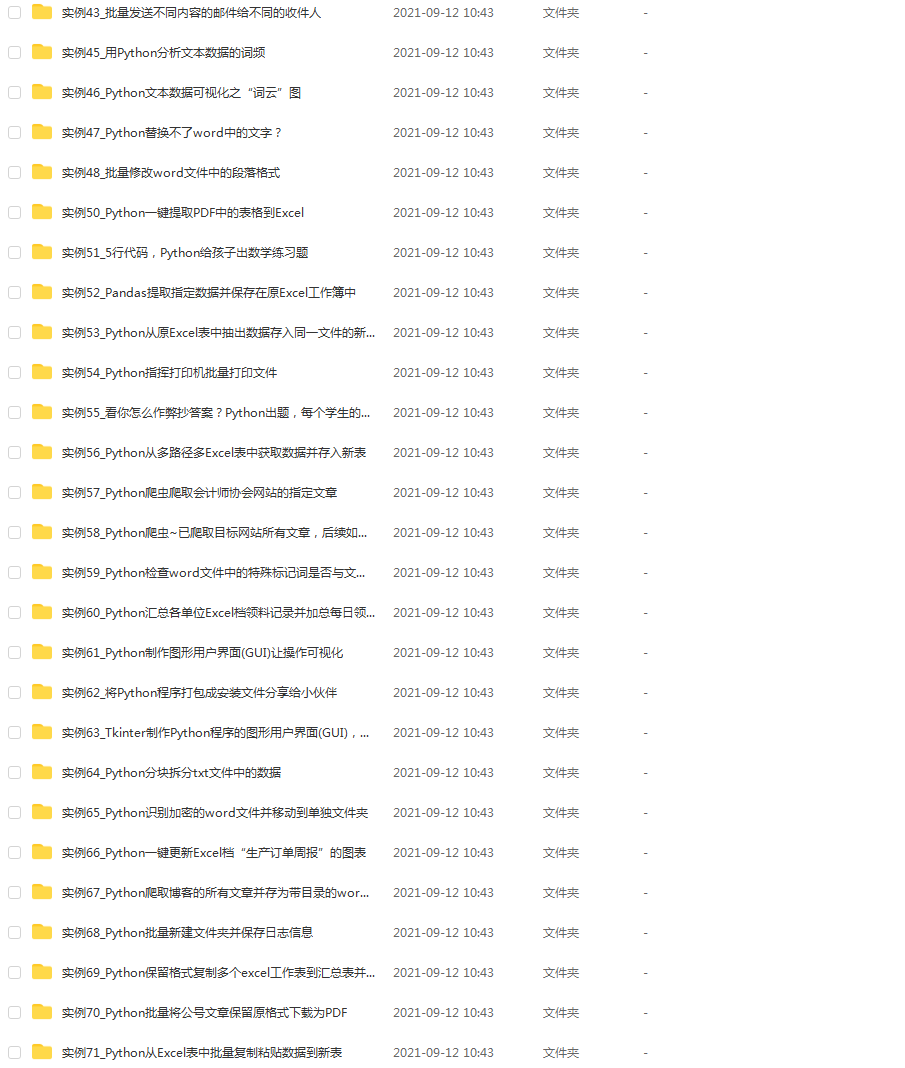

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024c (备注Python)

正文

def preprocess(features):

image = tf.image.resize(features[‘image’], image_shape[:2])

image = tf.cast(image, tf.float32)

image = (image-127.5)/127.5

return image

ds_train = ds_train.map(preprocess)

ds_train = ds_train.shuffle(ds_info.splits[‘train’].num_examples)

ds_train = ds_train.batch(batch_size, drop_remainder=True).repeat()

train_num = ds_info.splits[‘train’].num_examples

train_steps_per_epoch = round(train_num/batch_size)

print(train_steps_per_epoch)

“”"

WGAN

“”"

class WGAN():

def init(self, input_shape):

self.z_dim = 128

self.input_shape = input_shape

losses

self.loss_critic_real = {}

self.loss_critic_fake = {}

self.loss_critic = {}

self.loss_generator = {}

critic

self.n_critic = 5

self.critic = self.build_critic()

self.critic.trainable = False

self.optimizer_critic = RMSprop(5e-5)

build generator pipeline with frozen critic

self.generator = self.build_generator()

critic_output = self.critic(self.generator.output)

self.model = Model(self.generator.input, critic_output)

self.model.compile(loss = self.wasserstein_loss,

optimizer = RMSprop(5e-5))

self.critic.trainable = True

def wasserstein_loss(self, y_true, y_pred):

w_loss = -tf.reduce_mean(y_true*y_pred)

return w_loss

def build_generator(self):

DIM = 128

model = tf.keras.Sequential(name=‘Generator’)

model.add(layers.Input(shape=[self.z_dim]))

model.add(layers.Dense(444*DIM))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.Reshape((4,4,4*DIM)))

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(2*DIM, 5, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(DIM, 5, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(image_shape[-1], 5, padding=‘same’, activation=‘tanh’))

return model

def build_critic(self):

DIM = 128

model = tf.keras.Sequential(name=‘critics’)

model.add(layers.Input(shape=self.input_shape))

model.add(layers.Conv2D(1*DIM, 5, strides=2, padding=‘same’))

model.add(layers.LeakyReLU(0.2))

model.add(layers.Conv2D(2*DIM, 5, strides=2, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU(0.2))

model.add(layers.Conv2D(4*DIM, 5, strides=2, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU(0.2))

model.add(layers.Flatten())

model.add(layers.Dense(1))

return model

def train_critic(self, real_images, batch_size):

real_labels = tf.ones(batch_size)

fake_labels = -tf.ones(batch_size)

g_input = tf.random.normal((batch_size, self.z_dim))

fake_images = self.generator.predict(g_input)

with tf.GradientTape() as total_tape:

forward pass

pred_fake = self.critic(fake_images)

pred_real = self.critic(real_images)

calculate losses

loss_fake = self.wasserstein_loss(fake_labels, pred_fake)

loss_real = self.wasserstein_loss(real_labels, pred_real)

total loss

total_loss = loss_fake + loss_real

apply gradients

gradients = total_tape.gradient(total_loss, self.critic.trainable_variables)

self.optimizer_critic.apply_gradients(zip(gradients, self.critic.trainable_variables))

for layer in self.critic.layers:

weights = layer.get_weights()

weights = [tf.clip_by_value(w, -0.01, 0.01) for w in weights]

layer.set_weights(weights)

return loss_fake, loss_real

def train(self, data_generator, batch_size, steps, interval=200):

val_g_input = tf.random.normal((batch_size, self.z_dim))

real_labels = tf.ones(batch_size)

for i in range(steps):

for _ in range(self.n_critic):

real_images = next(data_generator)

loss_fake, loss_real = self.train_critic(real_images, batch_size)

critic_loss = loss_fake + loss_real

train generator

g_input = tf.random.normal((batch_size, self.z_dim))

g_loss = self.model.train_on_batch(g_input, real_labels)

self.loss_critic_real[i] = loss_real.numpy()

self.loss_critic_fake[i] = loss_fake.numpy()

self.loss_critic[i] = critic_loss.numpy()

self.loss_generator[i] = g_loss

if i%interval == 0:

msg = “Step {}: g_loss {:.4f} critic_loss {:.4f} critic fake {:.4f} critic_real {:.4f}”\

.format(i, g_loss, critic_loss, loss_fake, loss_real)

print(msg)

fake_images = self.generator.predict(val_g_input)

self.plot_images(fake_images)

self.plot_losses()

def plot_images(self, images):

grid_row = 1

grid_col = 8

f, axarr = plt.subplots(grid_row, grid_col, figsize=(grid_col2.5, grid_row2.5))

for row in range(grid_row):

for col in range(grid_col):

if self.input_shape[-1]==1:

axarr[col].imshow(images[col,:,:,0]*0.5+0.5, cmap=‘gray’)

else:

axarr[col].imshow(images[col]*0.5+0.5)

axarr[col].axis(‘off’)

plt.show()

def plot_losses(self):

fig, (ax1, ax2) = plt.subplots(2, sharex=True)

fig.set_figwidth(10)

fig.set_figheight(6)

ax1.plot(list(self.loss_critic.values()), label=‘Critic loss’, alpha=0.7)

ax1.set_title(“Critic loss”)

ax2.plot(list(self.loss_generator.values()), label=‘Generator loss’, alpha=0.7)

ax2.set_title(“Generator loss”)

plt.xlabel(‘Steps’)

plt.show()

wgan = WGAN(image_shape)

wgan.generator.summary()

wgan.critic.summary()

wgan.train(iter(ds_train), batch_size, 2000, 100)

z = tf.random.normal((8, 128))

generated_images = wgan.generator.predict(z)

wgan.plot_images(generated_images)

wgan.generator.save_weights(‘./wgan_models/wgan_fashion_minist.weights’)

“”"

WGAN_GP

“”"

class WGAN_GP():

def init(self, input_shape):

self.z_dim = 128

self.input_shape = input_shape

critic

self.n_critic = 5

self.penalty_const = 10

self.critic = self.build_critic()

self.critic.trainable = False

self.optimizer_critic = Adam(1e-4, 0.5, 0.9)

build generator pipeline with frozen critic

self.generator = self.build_generator()

critic_output = self.critic(self.generator.output)

self.model = Model(self.generator.input, critic_output)

self.model.compile(loss=self.wasserstein_loss, optimizer=Adam(1e-4, 0.5, 0.9))

def wasserstein_loss(self, y_true, y_pred):

w_loss = -tf.reduce_mean(y_true*y_pred)

return w_loss

def build_generator(self):

DIM = 128

model = Sequential([

layers.Input(shape=[self.z_dim]),

layers.Dense(444*DIM),

layers.BatchNormalization(),

layers.ReLU(),

layers.Reshape((4,4,4*DIM)),

layers.UpSampling2D((2,2), interpolation=‘bilinear’),

layers.Conv2D(2*DIM, 5, padding=‘same’),

layers.BatchNormalization(),

layers.ReLU(),

layers.UpSampling2D((2,2), interpolation=‘bilinear’),

layers.Conv2D(2*DIM, 5, padding=‘same’),

layers.BatchNormalization(),

layers.ReLU(),

layers.UpSampling2D((2,2), interpolation=‘bilinear’),

layers.Conv2D(image_shape[-1], 5, padding=‘same’, activation=‘tanh’)

],name=‘Generator’)

return model

def build_critic(self):

DIM = 128

model = Sequential([

layers.Input(shape=self.input_shape),

layers.Conv2D(1*DIM, 5, strides=2, padding=‘same’, use_bias=False),

layers.LeakyReLU(0.2),

layers.Conv2D(2*DIM, 5, strides=2, padding=‘same’, use_bias=False),

layers.LeakyReLU(0.2),

layers.Conv2D(4*DIM, 5, strides=2, padding=‘same’, use_bias=False),

layers.LeakyReLU(0.2),

layers.Flatten(),

layers.Dense(1)

], name=‘critics’)

return model

def gradient_loss(self, grad):

loss = tf.square(grad)

loss = tf.reduce_sum(loss, axis=np.arange(1, len(loss.shape)))

loss = tf.sqrt(loss)

loss = tf.reduce_mean(tf.square(loss - 1))

loss = self.penalty_const * loss

return loss

def train_critic(self, real_images, batch_size):

real_labels = tf.ones(batch_size)

fake_labels = -tf.ones(batch_size)

g_input = tf.random.normal((batch_size, self.z_dim))

fake_images = self.generator.predict(g_input)

with tf.GradientTape() as gradient_tape, tf.GradientTape() as total_tape:

forward pass

pred_fake = self.critic(fake_images)

pred_real = self.critic(real_images)

calculate losses

loss_fake = self.wasserstein_loss(fake_labels, pred_fake)

loss_real = self.wasserstein_loss(real_labels, pred_real)

gradient penalty

epsilon = tf.random.uniform((batch_size, 1, 1, 1))

interpolates = epsilon * real_images + (1-epsilon) * fake_images

gradient_tape.watch(interpolates)

critic_interpolates = self.critic(interpolates)

gradients_interpolates = gradient_tape.gradient(critic_interpolates, [interpolates])

gradient_penalty = self.gradient_loss(gradients_interpolates)

total loss

total_loss = loss_fake + loss_real + gradient_penalty

apply gradients

gradients = total_tape.gradient(total_loss, self.critic.variables)

self.optimizer_critic.apply_gradients(zip(gradients, self.critic.variables))

return loss_fake, loss_real, gradient_penalty

def train(self, data_generator, batch_size, steps, interval=100):

val_g_input = tf.random.normal((batch_size, self.z_dim))

real_labels = tf.ones(batch_size)

for i in range(steps):

for _ in range(self.n_critic):

real_images = next(data_generator)

loss_fake, loss_real, gradient_penalty = self.train_critic(real_images, batch_size)

critic_loss = loss_fake + loss_real + gradient_penalty

train generator

g_input = tf.random.normal((batch_size, self.z_dim))

g_loss = self.model.train_on_batch(g_input, real_labels)

if i%interval == 0:

msg = “Step {}: g_loss {:.4f} critic_loss {:.4f} critic fake {:.4f} critic_real {:.4f} penalty {:.4f}”.format(i, g_loss, critic_loss, loss_fake, loss_real, gradient_penalty)

print(msg)

fake_images = self.generator.predict(val_g_input)

self.plot_images(fake_images)

def plot_images(self, images):

grid_row = 1

grid_col = 8

f, axarr = plt.subplots(grid_row, grid_col, figsize=(grid_col2.5, grid_row2.5))

for row in range(grid_row):

for col in range(grid_col):

if self.input_shape[-1]==1:

最后

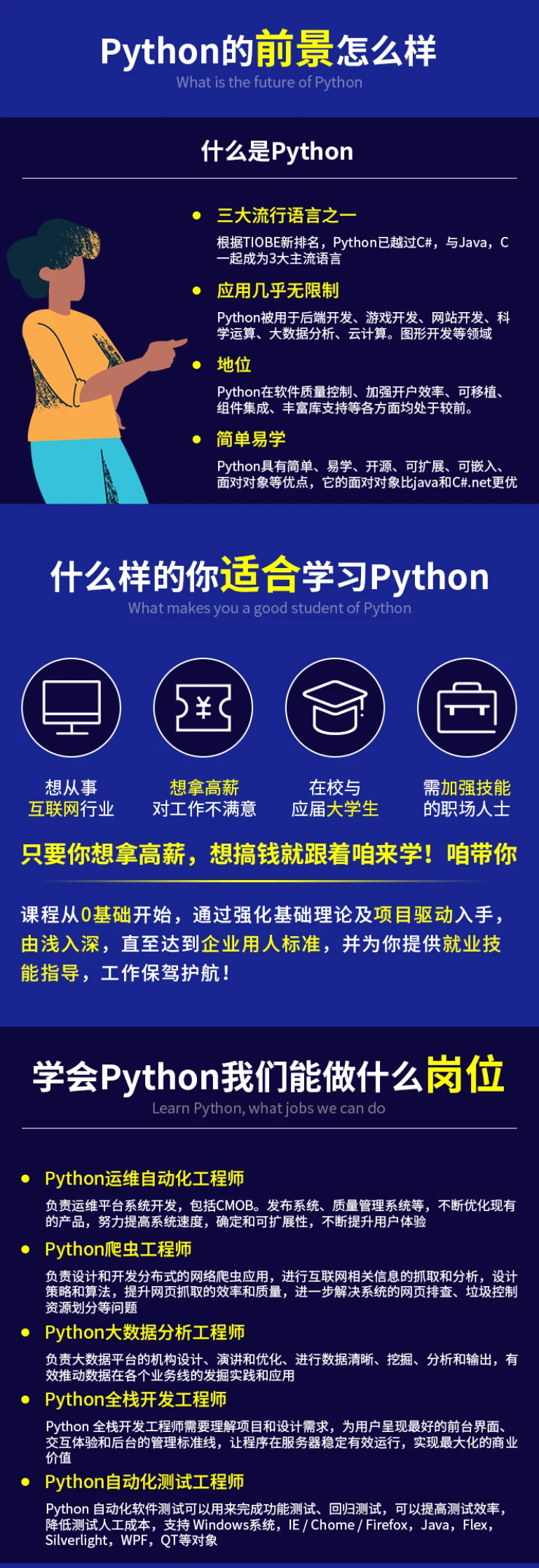

Python崛起并且风靡,因为优点多、应用领域广、被大牛们认可。学习 Python 门槛很低,但它的晋级路线很多,通过它你能进入机器学习、数据挖掘、大数据,CS等更加高级的领域。Python可以做网络应用,可以做科学计算,数据分析,可以做网络爬虫,可以做机器学习、自然语言处理、可以写游戏、可以做桌面应用…Python可以做的很多,你需要学好基础,再选择明确的方向。这里给大家分享一份全套的 Python 学习资料,给那些想学习 Python 的小伙伴们一点帮助!

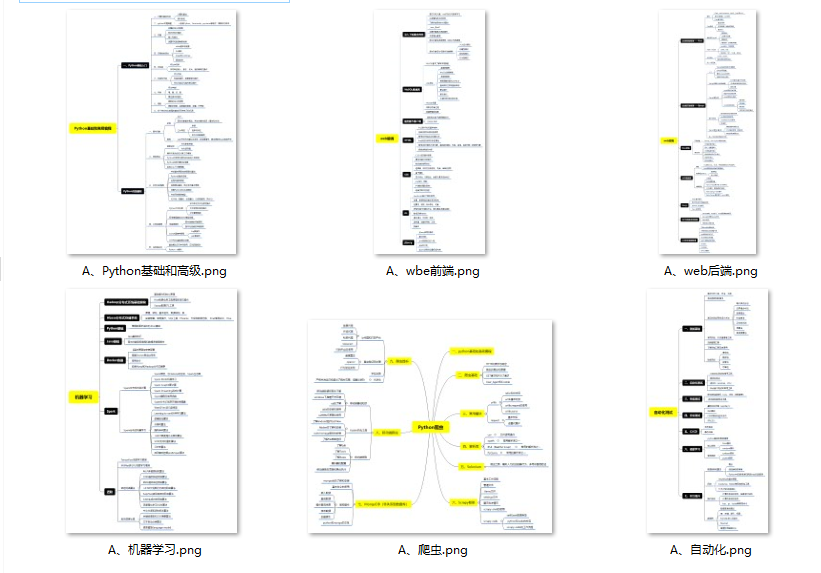

👉Python所有方向的学习路线👈

Python所有方向的技术点做的整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

👉Python必备开发工具👈

工欲善其事必先利其器。学习Python常用的开发软件都在这里了,给大家节省了很多时间。

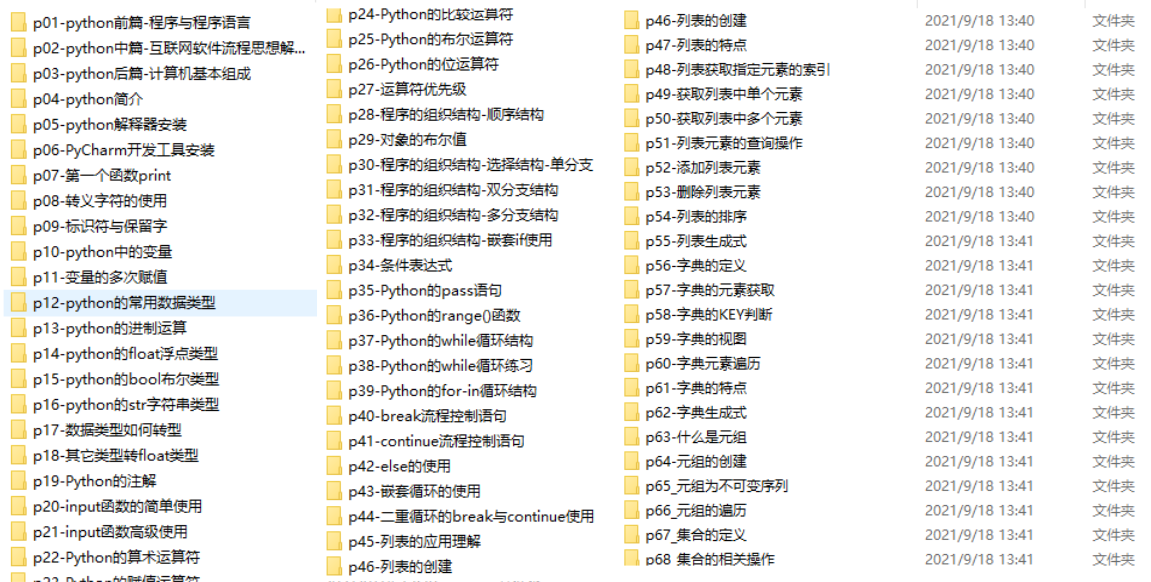

👉Python全套学习视频👈

我们在看视频学习的时候,不能光动眼动脑不动手,比较科学的学习方法是在理解之后运用它们,这时候练手项目就很适合了。

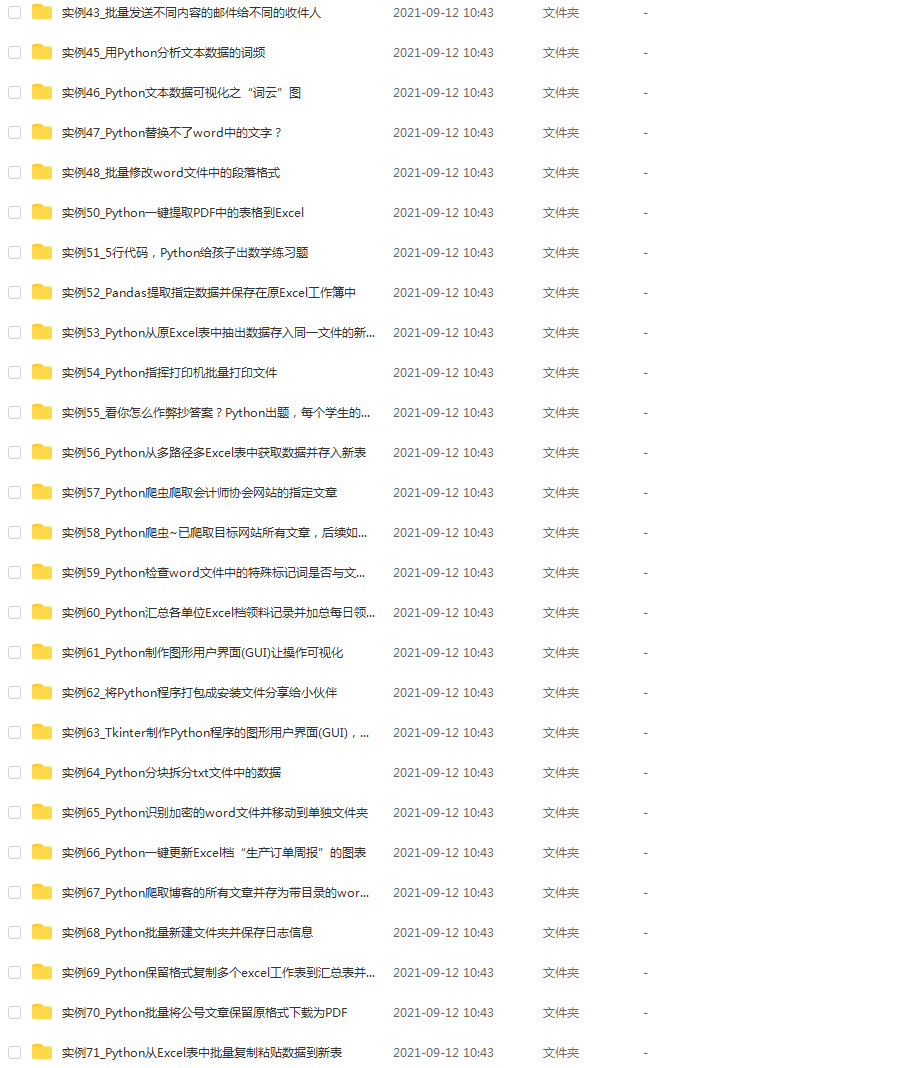

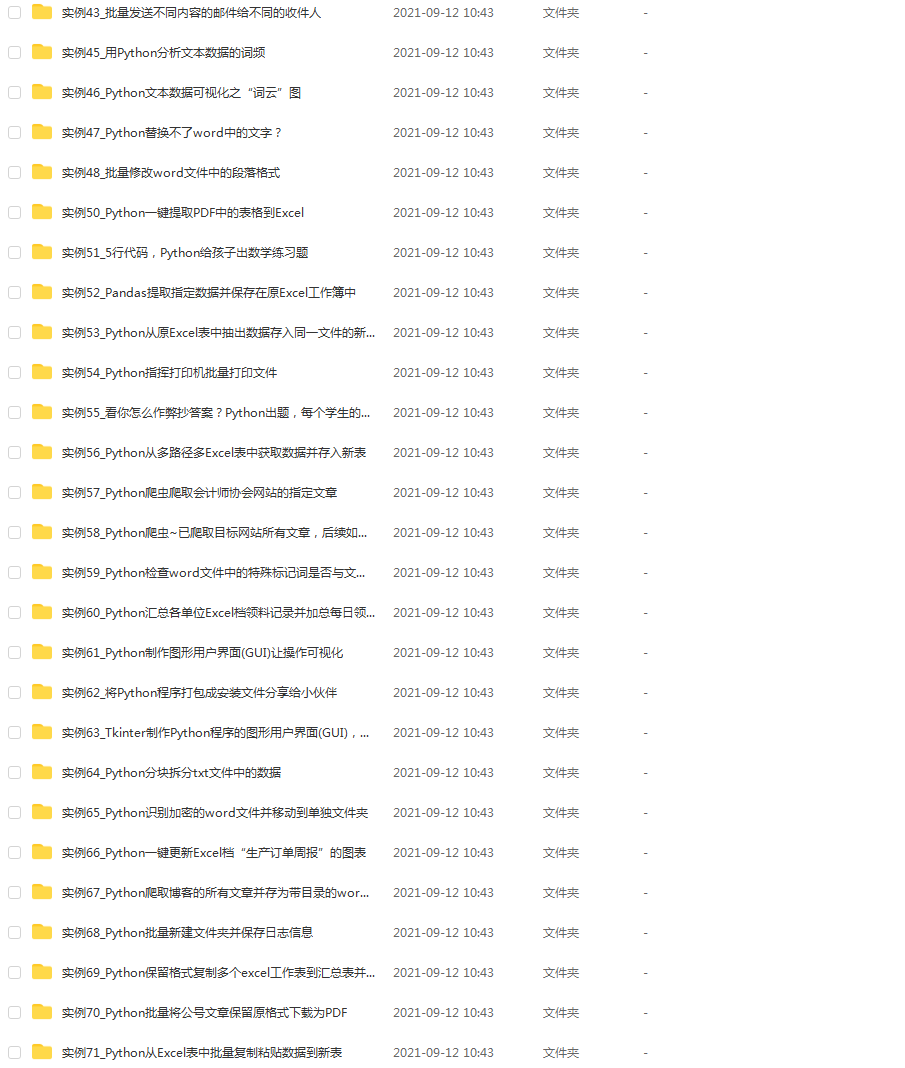

👉实战案例👈

学python就与学数学一样,是不能只看书不做题的,直接看步骤和答案会让人误以为自己全都掌握了,但是碰到生题的时候还是会一筹莫展。

因此在学习python的过程中一定要记得多动手写代码,教程只需要看一两遍即可。

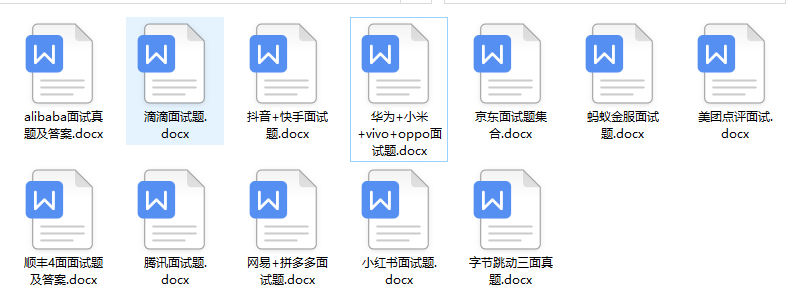

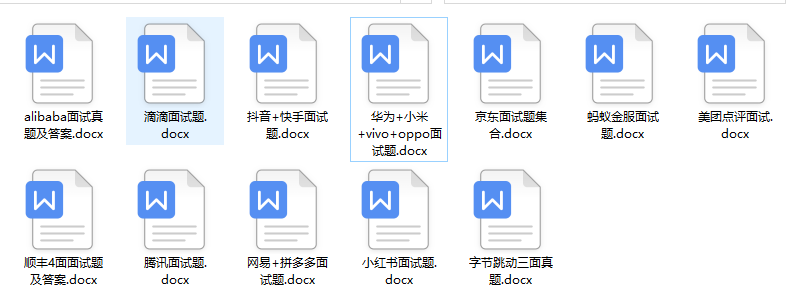

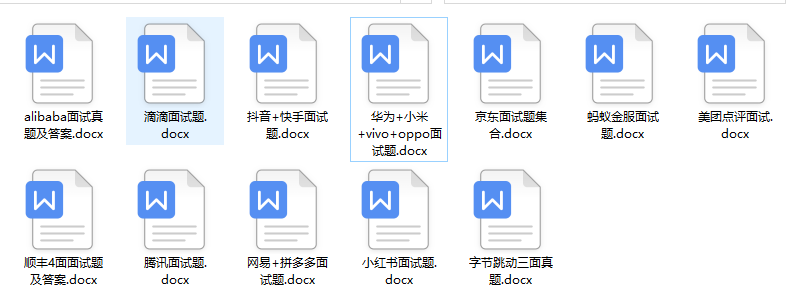

👉大厂面试真题👈

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

需要这份系统化的资料的朋友,可以添加V获取:vip1024c (备注python)

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

/img-blog.csdnimg.cn/img_convert/16ac689cb023166b2ffa9c677ac40fc0.png)

👉实战案例👈

学python就与学数学一样,是不能只看书不做题的,直接看步骤和答案会让人误以为自己全都掌握了,但是碰到生题的时候还是会一筹莫展。

因此在学习python的过程中一定要记得多动手写代码,教程只需要看一两遍即可。

👉大厂面试真题👈

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

需要这份系统化的资料的朋友,可以添加V获取:vip1024c (备注python)

[外链图片转存中…(img-AMRjiBND-1713240903388)]

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

2905

2905

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?