★★★ This article originally comes from a featured project in the AI Studio community.

1 Competition Overview

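PlayerUnknown's Battlegrounds (PUBG) is a tactical battle-royale shooter set in a sandbox world. Players collect resources across the map and fight other players inside a steadily shrinking safe zone, aiming to be the last one standing. When a player finishes first in a match, the line "Winner winner, chicken dinner!" appears on screen.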

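For this competition, we collected player behavior data from PUBG matches. Participants are asked to build a model that predicts each player's final placement in a match.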

2 Competition Task

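Build a placement prediction model: given each player's statistics, their teammates' statistics, and the statistics of the other players in the same match, predict the final placement. Placement is assigned per team: if several players form a team in a PUBG match, they all receive the same final placement.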
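Because placement is assigned per team, every row of the same team in the same match should carry the same `team_placement`. A minimal pandas check is sketched below; only the column names come from the field list further down, and the tiny frame's values are invented for illustration.

```python
import pandas as pd

# Tiny hypothetical sample; only the column names come from the competition data.
df = pd.DataFrame({
    "match_id": [1, 1, 1, 1, 2, 2],
    "team_id": [10, 10, 11, 11, 20, 21],
    "team_placement": [3, 3, 1, 1, 2, 1],
})

# Count distinct placements per (match, team); a consistent dataset has exactly 1 everywhere.
placements_per_team = df.groupby(["match_id", "team_id"])["team_placement"].nunique()
all_consistent = bool((placements_per_team == 1).all())
print(all_consistent)  # True for this sample
```

On the real data, the same `groupby` can be run on `pubg_train` directly, since it keeps `match_id` and `team_id`.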

The training and test sets are as follows:

Training set: 50,000 matches, 1.5 million rows in total.

Test set: 5,000 matches, 500,000 rows in total.

The data files total 150 MB. All files are CSV with comma-separated columns. To read them with pandas, you can use the following code:

import pandas as pd
import numpy as np

# pandas infers the zip compression from the file extension
pubg_train = pd.read_csv('pubg_train.csv.zip')

In the test set the label column team_placement is empty; it is what participants must predict. The full list of fields:

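- match_id: ID of the match
- team_id: ID of the team within the match, identifying which players belong to which team
- game_size: number of teams in the match
- party_size: number of players per team in the match
- player_assists: number of assists
- player_dbno: number of opponents knocked down (DBNO, "down but not out")
- player_dist_ride: distance traveled in vehicles
- player_dist_walk: distance traveled on foot
- player_dmg: damage dealt
- player_kills: number of kills
- player_name: player name, globally unique across the training and test sets
- kill_distance_x_min: minimum x-coordinate gap to the victim when killing another player
- kill_distance_x_max: maximum x-coordinate gap to the victim when killing another player
- kill_distance_y_min: minimum y-coordinate gap to the victim when killing another player
- kill_distance_y_max: maximum y-coordinate gap to the victim when killing another player
- team_placement: team placement (the label)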

Participants submit predicted team placements for the test set in the following format:

team_placement
19
19
37
37
49
49
13
13
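The baseline below writes the file with pandas and packs it with the `!zip` shell command, which needs the `zip` utility. A pure-Python sketch of the same step follows; `write_submission` is a hypothetical helper name, and it assumes the predictions are already integers in test-set row order.

```python
import os
import zipfile

import numpy as np
import pandas as pd


def write_submission(placements, csv_path="submission.csv", zip_path="submission.zip"):
    """Write one integer placement per test row (in test-set order) and zip the CSV."""
    frame = pd.DataFrame({"team_placement": np.asarray(placements, dtype=int)})
    frame.to_csv(csv_path, index=False)
    # Store the CSV at the root of the archive under its plain file name
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.write(csv_path, arcname=os.path.basename(csv_path))
```

Calling `write_submission([19, 19, 37, 37, 49, 49, 13, 13])` reproduces the sample above as `submission.csv` inside `submission.zip`.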

3 Evaluation Metric

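Submissions are scored with the mean absolute error (MAE): the lower the MAE, the more accurate the predictions. The reference evaluation code below reports 100 - MAE as the score, so a higher score is better: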

from sklearn.metrics import mean_absolute_error

y_pred = [0, 2, 1, 3]
y_true = [0, 1, 2, 3]

# MAE = (0 + 1 + 1 + 0) / 4 = 0.5, so the score is 100 - 0.5 = 99.5
100 - mean_absolute_error(y_true, y_pred)
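MAE is the average absolute difference between true and predicted values, MAE = (1/n) Σ |y_i - ŷ_i|. A NumPy-only sketch of the reference snippet, with the arithmetic spelled out (`mae` is an illustrative name, not part of the competition kit):

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error: the average of |y_true - y_pred|."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


y_true = [0, 1, 2, 3]
y_pred = [0, 2, 1, 3]
error = mae(y_true, y_pred)   # (0 + 1 + 1 + 0) / 4 = 0.5
score = 100 - error           # 99.5, the same value as the reference snippet
```

Since every team member shares one placement, an error on a team is counted once per player row.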

4 Data Analysis

Building on the competition information above, we now look for patterns inside the data: what kinds of teams achieve better placements?

- Field analysis

- Label analysis

- Feature correlation analysis
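The heatmap in the baseline shows every pairwise correlation at once. For the label analysis, a compact alternative is to rank features by their Pearson correlation with `team_placement`. The sketch below uses a hypothetical helper `rank_feature_correlations` and a four-row frame whose values are invented; on the real data you would pass `train_df`.

```python
import pandas as pd


def rank_feature_correlations(df, label="team_placement"):
    """Pearson correlation of each numeric feature with the label, strongest first."""
    features = df.drop(columns=[label]).select_dtypes("number")
    corr = features.corrwith(df[label])
    # Sort by absolute strength but keep the sign, since a lower placement number is better
    return corr.reindex(corr.abs().sort_values(ascending=False).index)


sample = pd.DataFrame({
    "player_kills": [0, 1, 3, 5],
    "player_dist_walk": [100.0, 900.0, 400.0, 2500.0],
    "team_placement": [40, 20, 10, 1],
})
ranked = rank_feature_correlations(sample)
print(ranked)
```

A negative correlation means that larger values go with better (numerically smaller) placements.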

How to use the baseline

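1. Click the "Fork" button; a "Fork project" dialog appears.

2. Click "Create"; a "Run project" dialog appears.

3. Click "Run project"; you are redirected to a new page.

4. Click "Start environment"; a "Select runtime environment" dialog appears.

5. Select a runtime environment (startup takes a while, please be patient). When the "Environment started successfully" dialog appears, click OK.

6. Click "Enter environment" to open the notebook.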

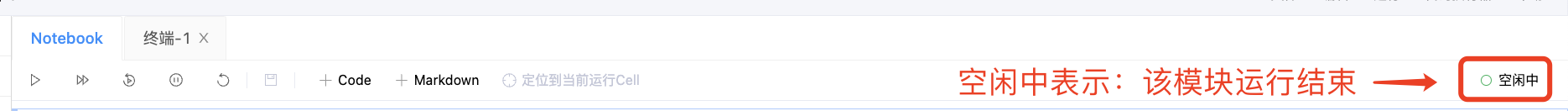

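7. Hover over each code cell below (its left border turns light blue), then click the triangular "Run" button at the top-left of each cell in order. Wait for each cell to finish before running the next, until all cells have run.

8. Download submission.zip from the panel on the left.

9. Submit submission.zip on the competition page; once evaluation finishes, your result appears on the leaderboard.

10. In the left panel, click "Version > Create new version".

11. Enter a version name and click "Create version"; the project then appears on your profile page (you can choose to make it public).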

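import pandas as pd
import paddle
import numpy as np
import seaborn as sns

# Notebook magic: enables inline plotting and imports NumPy/Matplotlib names
# (such as sqrt) into the namespace
%pylab inline

# Load the training and test sets (pandas reads the zipped CSVs directly)
train_df = pd.read_csv('data/data137263/pubg_train.csv.zip')
test_df = pd.read_csv('data/data137263/pubg_test.csv.zip')

train_df.shape, test_df.shape

# Peek at the label
train_df["team_placement"].head()

# Pairwise correlation heatmap of all columns
sns.heatmap(train_df.corr())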

5 Model Training and Validation

Data processing

# Drop the ID columns
train_df = train_df.drop(['match_id', 'team_id'], axis=1)
test_df = test_df.drop(['match_id', 'team_id'], axis=1)

# Fill missing values with 0
train_df = train_df.fillna(0)
test_df = test_df.fillna(0)

# The 13 feature columns fed to the model, in a fixed order
FEATURE_COLS = ["game_size", "party_size", "player_assists", "player_dbno",
                "player_dist_ride", "player_dist_walk", "player_dmg", "player_kills",
                "player_name", "kill_distance_min", "kill_distance_max",
                "kill_distance_minmax", "player_dist_all"]

def add_features(df):
    """New features: min/max kill-distance radius from the x/y gaps, their difference, and total distance."""
    df["kill_distance_min"] = np.sqrt(df["kill_distance_x_min"]**2 + df["kill_distance_y_min"]**2)
    df["kill_distance_max"] = np.sqrt(df["kill_distance_x_max"]**2 + df["kill_distance_y_max"]**2)
    df["kill_distance_minmax"] = df["kill_distance_max"] - df["kill_distance_min"]
    df["player_dist_all"] = df["player_dist_ride"] + df["player_dist_walk"]
    return df

train_df = add_features(train_df).reindex(columns=FEATURE_COLS + ["team_placement"])
test_df = add_features(test_df).reindex(columns=FEATURE_COLS)

sns.heatmap(train_df.corr())

# Normalize the label by the number of teams in the match, so it lies roughly in (0, 1]
train_df['team_placement'] /= train_df['game_size']

# Scale each feature by its maximum in the training set, and reuse the same
# maxima for the test set so both sets are on the same scale
for col in FEATURE_COLS:
    col_max = train_df[col].max()
    train_df[col] /= col_max
    test_df[col] /= col_max

train_df.shape, test_df.shape
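The label normalization divides `team_placement` by `game_size` during training, and prediction multiplies it back. The round trip is sketched below with hypothetical helper names; clipping the restored placement to `1..game_size` is an added safeguard, assuming placements are integers between 1 and the number of teams.

```python
import numpy as np


def normalize_placement(placement, game_size):
    """Map a placement in 1..game_size to (0, 1] by dividing by the number of teams."""
    return np.asarray(placement, dtype=float) / np.asarray(game_size, dtype=float)


def denormalize_placement(relative, game_size):
    """Map a relative prediction back to an integer placement within 1..game_size."""
    game_size = np.asarray(game_size)
    placement = np.rint(np.asarray(relative, dtype=float) * game_size).astype(int)
    return np.clip(placement, 1, game_size)


game_size = np.array([25, 25, 50])
placement = np.array([5, 25, 1])
relative = normalize_placement(placement, game_size)           # [0.2, 1.0, 0.02]
restored = denormalize_placement(relative, game_size)          # [5, 25, 1]
out_of_range = denormalize_placement([0.0, 1.3], [25, 25])     # [1, 25] after clipping
```

Normalizing this way lets one network handle matches of different sizes, because the target no longer depends on how many teams took part.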

Model construction

class Regressor(paddle.nn.Layer):
    # Fully connected network: 13 input features -> 1 output (normalized placement)
    def __init__(self):
        # Initialize the parent paddle.nn.Layer
        super(Regressor, self).__init__()
        net_num = 100  # width of the hidden layers
        self.fc1 = paddle.nn.Linear(in_features=13, out_features=net_num)
        self.fc2 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc3 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc4 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc5 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc6 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc7 = paddle.nn.Linear(in_features=net_num, out_features=net_num)
        self.fc8 = paddle.nn.Linear(in_features=net_num, out_features=20)
        self.fc9 = paddle.nn.Linear(in_features=20, out_features=1)
        self.relu = paddle.nn.ReLU()

    # Forward pass: every linear layer is followed by ReLU, including the last one
    def forward(self, inputs):
        x = self.fc1(inputs)
        x = self.relu(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.fc3(x)
        x = self.relu(x)
        x = self.fc4(x)
        x = self.relu(x)
        x = self.fc5(x)
        x = self.relu(x)
        x = self.fc6(x)
        x = self.relu(x)
        x = self.fc7(x)
        x = self.relu(x)
        x = self.fc8(x)
        x = self.relu(x)
        x = self.fc9(x)
        x = self.relu(x)
        return x
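To make the layer sizes concrete, here is a NumPy mirror of the forward pass with random weights; it is an illustration, not the Paddle model itself, and `LAYER_SIZES`, `count_parameters`, and `mlp_forward` are hypothetical names. It also counts the trainable parameters (weights plus biases) of the nine `Linear` layers.

```python
import numpy as np

# Layer widths of the Regressor: 13 inputs -> fc1..fc7 (100 units each) -> fc8 (20) -> fc9 (1)
LAYER_SIZES = [13, 100, 100, 100, 100, 100, 100, 100, 20, 1]


def count_parameters(sizes):
    """Weights plus biases of a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


def mlp_forward(x, weights, biases):
    """Linear layer followed by ReLU at every layer, including the last, as in forward()."""
    for w, b in zip(weights, biases):
        x = np.maximum(x @ w + b, 0.0)
    return x


rng = np.random.default_rng(0)
weights = [rng.normal(0.0, 0.1, (n_in, n_out)) for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]
biases = [np.zeros(n_out) for n_out in LAYER_SIZES[1:]]
out = mlp_forward(rng.random((4, 13)), weights, biases)
print(count_parameters(LAYER_SIZES), out.shape)  # 64041 (4, 1)
```

Because a ReLU follows `fc9` as well, the network can never output a negative value, which matches a label that lies in (0, 1].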

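# Instantiate the network defined above
model = Regressor()

# Switch the model to training mode
model.train()

# Optimizer: stochastic gradient descent (SGD)
opt = paddle.optimizer.SGD(learning_rate=0.01, parameters=model.parameters())
# This line overrides the one above. The author lowered the learning rate in stages while resuming
# training: 0.0001 once the loss fell below 0.15, then 0.00001 once it fell below 0.11.
# When training from scratch, keep only the line above (learning rate 0.01).
opt = paddle.optimizer.SGD(learning_rate=0.000001, parameters=model.parameters())

# Load previously saved parameters to resume training. The checkpoint is the author's own
# file; skip these three lines when training from scratch.
params_file_path = "work/model/100-2net.pdparams"
model_state_dict = paddle.load(params_file_path)
model.load_dict(model_state_dict)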
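The staged learning rate in the comment above can be written as a small step schedule keyed on the current loss. The sketch below uses the thresholds from that comment; `learning_rate_for_loss` is a hypothetical helper, and the starting rate of 0.01 is taken from the first `SGD` line.

```python
def learning_rate_for_loss(loss, base_lr=0.01):
    """Step schedule: shrink the learning rate as the L1 loss crosses fixed thresholds."""
    if loss < 0.11:
        return 1e-5
    if loss < 0.15:
        return 1e-4
    return base_lr


for loss in (0.20, 0.13, 0.10):
    print(loss, learning_rate_for_loss(loss))
```

Instead of re-creating the optimizer, one option is to call `opt.set_lr(learning_rate_for_loss(val_mae))` at the end of each epoch, where `val_mae` stands for the epoch's validation loss.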

Model training

EPOCH_NUM = 10000         # number of epochs (outer loop iterations)
TRAIN_BATCH_SIZE = 5000   # batch size for training
BATCH_SIZE = 1000         # batch size for validation

# Hold out the last 10,000 rows for validation
training_data = train_df.iloc[:-10000].values.astype(np.float32)
val_data = train_df.iloc[-10000:].values.astype(np.float32)

# learning_rate1 = 0.0007

# Outer loop over epochs
for epoch_id in range(EPOCH_NUM):
    # learning_rate1 -= 0.001/400
    # print(f"learning_rate={learning_rate1}")
    # opt = paddle.optimizer.SGD(learning_rate=learning_rate1, parameters=model.parameters())

    # Shuffle the training rows at the start of every epoch
    np.random.shuffle(training_data)
    # Split into mini-batches of TRAIN_BATCH_SIZE rows (the last one may be smaller)
    mini_batches = [training_data[k:k + TRAIN_BATCH_SIZE] for k in range(0, len(training_data), TRAIN_BATCH_SIZE)]

    train_loss = []
    for iter_id, mini_batch in enumerate(mini_batches):
        # Clear the gradients from the previous step
        opt.clear_grad()
        # Features are all columns except the last; the label is the last column
        x = np.array(mini_batch[:, :-1])
        y = np.array(mini_batch[:, -1:])
        # Convert the NumPy arrays to Paddle dynamic-graph tensors
        features = paddle.to_tensor(x)
        y = paddle.to_tensor(y)
        # Forward pass
        predicts = model(features)
        # L1 loss (MAE) on the normalized placement
        loss = paddle.nn.functional.l1_loss(predicts, label=y)
        avg_loss = paddle.mean(loss)
        train_loss.append(avg_loss.numpy())
        # Backward pass: compute the gradient of every layer's parameters
        avg_loss.backward()
        # Update the parameters with the configured learning rate
        opt.step()

    # Validation: no gradients are needed
    mini_batches = [val_data[k:k + BATCH_SIZE] for k in range(0, len(val_data), BATCH_SIZE)]
    val_loss = []
    with paddle.no_grad():
        for iter_id, mini_batch in enumerate(mini_batches):
            features = paddle.to_tensor(np.array(mini_batch[:, :-1]))
            y = paddle.to_tensor(np.array(mini_batch[:, -1:]))
            predicts = model(features)
            loss = paddle.nn.functional.l1_loss(predicts, label=y)
            val_loss.append(paddle.mean(loss).numpy())

    print(f'Epoch {epoch_id}, train MAE {np.mean(train_loss)}, val MAE {np.mean(val_loss)}')
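The loop above builds a full list of mini-batches every epoch and takes the last column as the label. The same batching can be sketched as a generator; `iterate_minibatches` is a hypothetical name, and unlike the in-place `np.random.shuffle`, it draws a permuted copy so the input array is left unchanged.

```python
import numpy as np


def iterate_minibatches(data, batch_size, shuffle=True, rng=None):
    """Yield (features, labels) batches; the label is the last column, as in the training loop."""
    if shuffle:
        rng = rng if rng is not None else np.random.default_rng()
        data = data[rng.permutation(len(data))]  # permuted copy; the caller's array is untouched
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        yield batch[:, :-1], batch[:, -1:]


data = np.arange(36, dtype=np.float32).reshape(12, 3)
batches = list(iterate_minibatches(data, 5, rng=np.random.default_rng(0)))
print([len(x) for x, _ in batches])  # [5, 5, 2]
```

With 12 rows and a batch size of 5, the final batch holds the 2 leftover rows, just as the last `TRAIN_BATCH_SIZE` slice does in the baseline.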

Model prediction

# Switch to evaluation mode and predict the whole test set without tracking gradients
model.eval()
with paddle.no_grad():
    test_data = paddle.to_tensor(test_df.values.astype(np.float32))
    test_predict = model(test_data)
test_predict = test_predict.numpy().flatten()  # normalized predictions

# test_df["game_size"] was scaled above, so reload the raw test set to recover
# the original number of teams in each match
raw_test = pd.read_csv('data/data137263/pubg_test.csv.zip').fillna(0)
game_size = raw_test['game_size'].values

# Undo the label normalization, round to an integer placement,
# and keep the result within 1..game_size
test_predict = np.clip(np.rint(game_size * test_predict).astype(int), 1, game_size)

test_predict

pd.DataFrame({
    'team_placement': test_predict
}).to_csv('submission.csv', index=None)

!zip submission.zip submission.csv

import os

model_name = '120-9-net'
os.makedirs('work/model', exist_ok=True)

# Save the model parameters
paddle.save(model.state_dict(), 'work/model/{}.pdparams'.format(model_name))

# Save the optimizer state and related parameters so training can be resumed later
paddle.save(opt.state_dict(), 'work/model/{}.pdopt'.format(model_name))

6 Summary and Outlook

This project trains a fully connected network and uses it for prediction.

Possible improvements:

- Aggregate statistics per team to build new features.

- Normalize the label to the 0-1 range for training.
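Per-team aggregation could look like the pandas sketch below: each player row receives its team's mean, max, and sum of selected columns. `add_team_features` is a hypothetical helper and the tiny frame is invented. Note that the baseline drops `match_id` and `team_id` early in data processing, so this step would have to run before that drop.

```python
import pandas as pd


def add_team_features(df, cols, keys=("match_id", "team_id")):
    """Attach per-team mean/max/sum of the given columns to every player row."""
    keys = list(keys)
    agg = df.groupby(keys)[cols].agg(["mean", "max", "sum"])
    # Flatten the (column, statistic) MultiIndex into names like team_player_kills_mean
    agg.columns = [f"team_{col}_{stat}" for col, stat in agg.columns]
    return df.merge(agg.reset_index(), on=keys, how="left")


players = pd.DataFrame({
    "match_id": [1, 1, 1],
    "team_id": [10, 10, 11],
    "player_kills": [2, 4, 1],
})
out = add_team_features(players, ["player_kills"])
print(out)
```

A left merge keeps the original row order, which matters because the submission must list placements in test-set order.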

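This article builds a placement prediction model from PUBG match data. Using in-game player statistics, it trains a fully connected network to predict each team's final placement. Preprocessing covers feature engineering and normalization, and the model is evaluated with MAE. The article also outlines directions for improvement, including per-team aggregation and label normalization.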
