Heap overflow (java.lang.OutOfMemoryError: Java heap space)

Exception in thread "Spark Context Cleaner" java.lang.OutOfMemoryError: Java heap space

at org.apache.spark.ContextCleaner$$Lambda$701/14932333.get$Lambda(Unknown Source)

at java.lang.invoke.LambdaForm$DMH/29163445.invokeStatic_L_L(LambdaForm$DMH)

at java.lang.invoke.LambdaForm$MH/16692218.linkToTargetMethod(LambdaForm$MH)

at org.apache.spark.ContextCleaner.$anonfun$keepCleaning$1(ContextCleaner.scala:186)

at org.apache.spark.ContextCleaner$$Lambda$606/32594565.apply$mcV$sp(Unknown Source)

at org.apache.spark.util.Utils$.tryOrStopSparkContext(Utils.scala:1381)

at org.apache.spark.ContextCleaner.org$apache$spark$ContextCleaner$$keepCleaning(ContextCleaner.scala:180)

at org.apache.spark.ContextCleaner$$anon$1.run(ContextCleaner.scala:77)

Exception in thread "main" org.apache.spark.SparkException: Job 0 cancelled because SparkContext was shut down

at org.apache.spark.scheduler.DAGScheduler.$anonfun$cleanUpAfterSchedulerStop$1(DAGScheduler.scala:1084)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$cleanUpAfterSchedulerStop$1$adapted(DAGScheduler.scala:1082)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:79)

at org.apache.spark.scheduler.DAGScheduler.cleanUpAfterSchedulerStop(DAGScheduler.scala:1082)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onStop(DAGScheduler.scala:2458)

at org.apache.spark.util.EventLoop.stop(EventLoop.scala:84)

at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:2364)

at org.apache.spark.SparkContext.$anonfun$stop$12(SparkContext.scala:2075)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1419)

at org.apache.spark.SparkContext.stop(SparkContext.scala:2075)

at org.apache.spark.SparkContext$$anon$3.run(SparkContext.scala:2024)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:868)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2202)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2223)

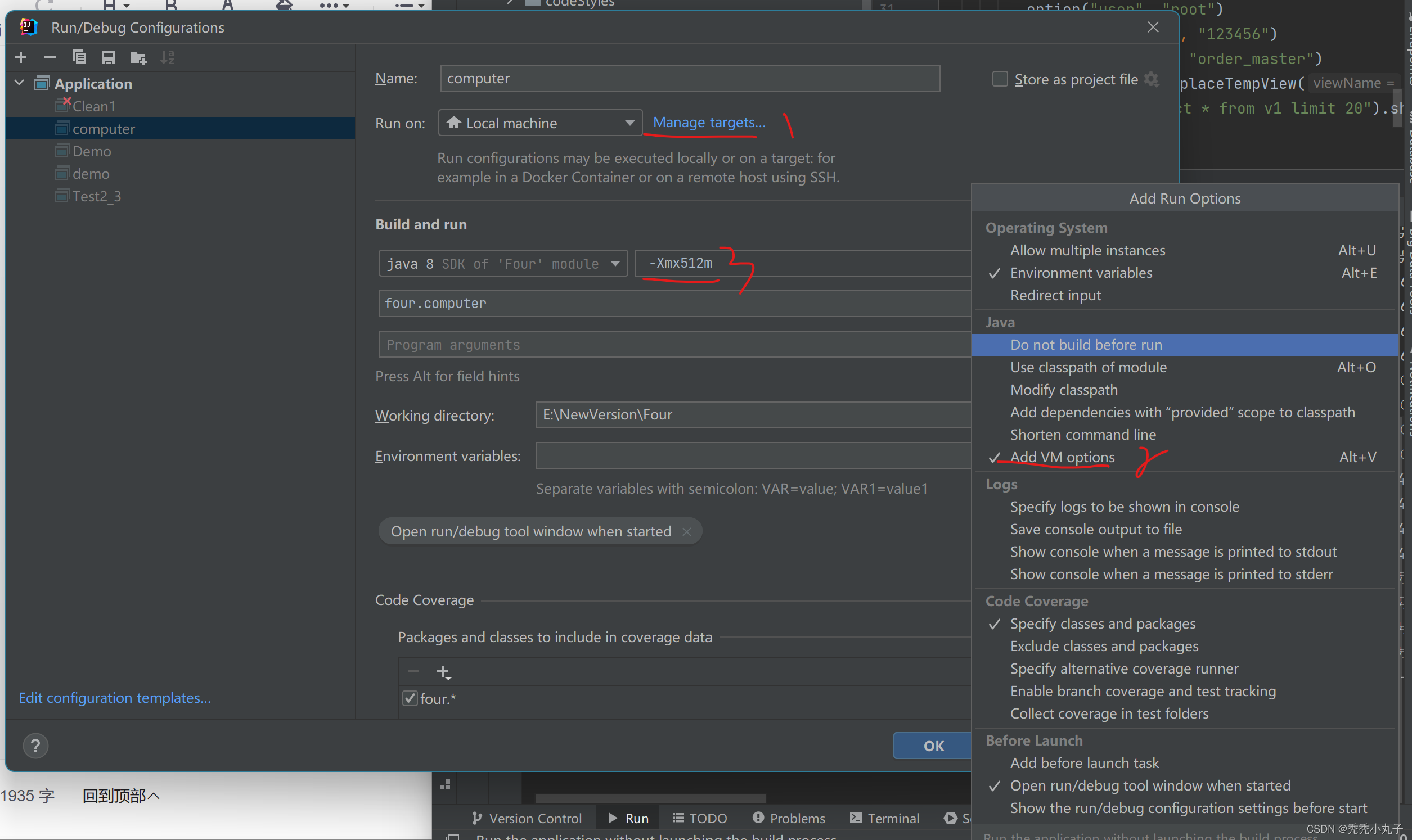

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2242)按截图修改

Note: there must be a space before -Xmx512, so that it is separated from whatever precedes it.
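To illustrate why the space matters, here is a minimal sketch, assuming the options field is split on whitespace before being passed to the JVM. The class `VmOptionsCheck`, the helper `findXmx`, the `-Dspark.master=local` option and the `m` unit suffix on `-Xmx512m` are all hypothetical and used only for illustration. The last line prints the maximum heap the running JVM actually got, which is one way to check that the setting took effect.

```java
import java.util.Arrays;
import java.util.Optional;

public class VmOptionsCheck {

    /** Returns the first whitespace-separated token that starts with -Xmx, if any. */
    public static Optional<String> findXmx(String vmOptions) {
        return Arrays.stream(vmOptions.trim().split("\\s+"))
                .filter(token -> token.startsWith("-Xmx"))
                .findFirst();
    }

    public static void main(String[] args) {
        // With a space, -Xmx512m is its own token and can be recognized.
        System.out.println(findXmx("-Dspark.master=local -Xmx512m").isPresent()); // true

        // Without the space, it is glued to the previous option and is not found.
        System.out.println(findXmx("-Dspark.master=local-Xmx512m").isPresent()); // false

        // Report the maximum heap size of the current JVM, in MiB.
        long maxMiB = Runtime.getRuntime().maxMemory() / (1024 * 1024);
        System.out.println("Max heap (MiB): " + maxMiB);
    }
}
```

If the reported maximum heap does not change after editing the options, the `-Xmx` value was probably not parsed as a separate option.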

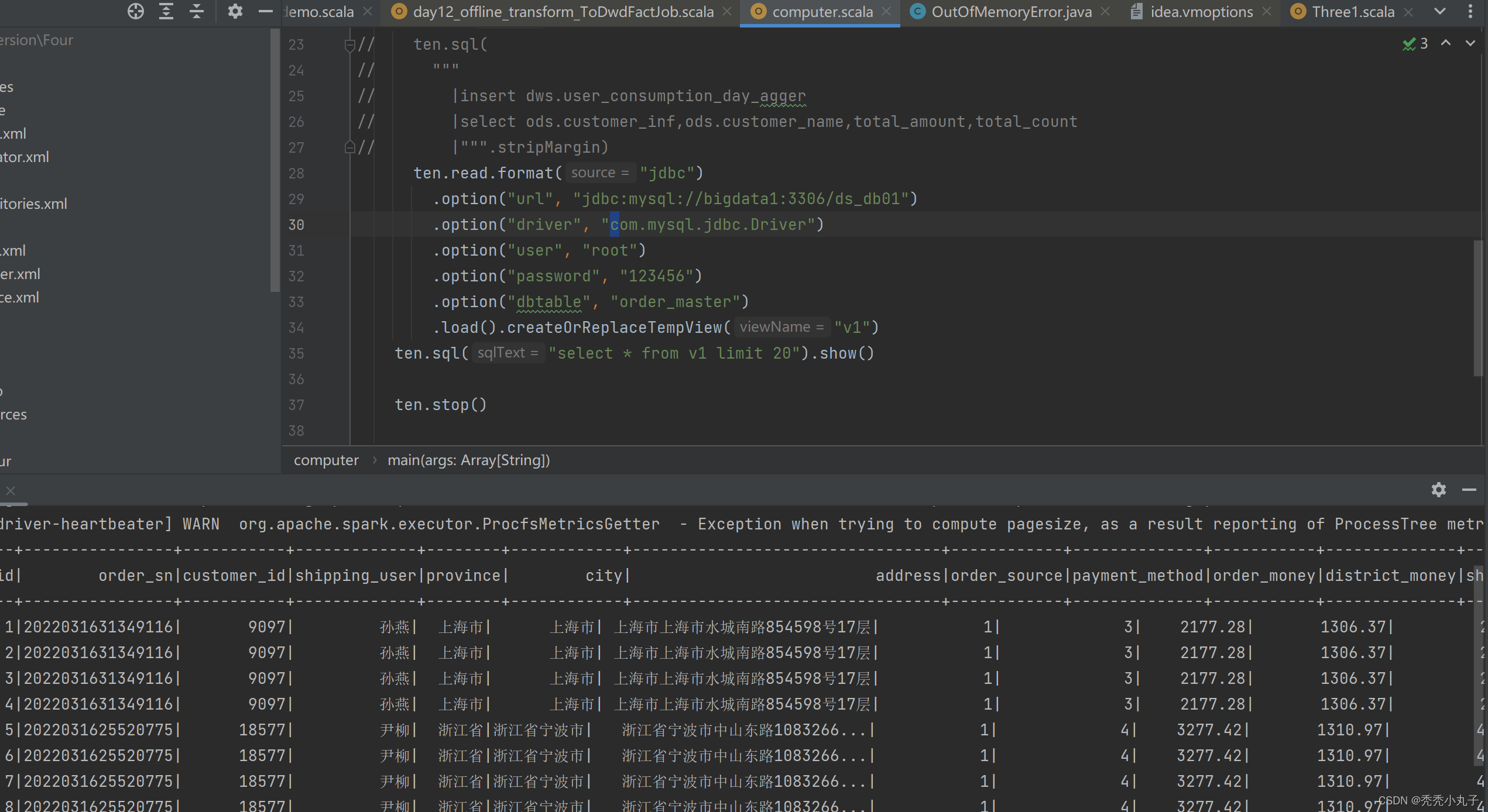

Success.
