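Memory Mapping

A memory mapping is a mapping created in a process's virtual address space. There are two kinds:

- File mapping: a file-backed memory mapping that maps a range of a file into the process's virtual address space. The data source is a file on a storage device.

- Anonymous mapping: a memory mapping with no file behind it. It maps physical memory into the process's virtual address space and has no data source.

The physical pages of a file mapping are usually called file pages, and the physical pages of an anonymous mapping are called anonymous pages.

How It Works

When a memory mapping is created, a virtual memory area is allocated in the process's user virtual address space. The Linux kernel allocates physical memory lazily: the first time the process accesses a virtual page, a page fault occurs. For a file mapping, the kernel allocates a physical page, reads the data of the specified file range into it, and then maps the virtual page to the physical page in the page table. For an anonymous mapping, the kernel allocates a physical page and then maps the virtual page to it in the page table.

There is a second way to classify mappings. Depending on whether modifications to the mapped region are visible to other processes and whether they are propagated to the underlying file, memory mappings are either shared or private:

- Shared mapping: modifications are visible to other processes that map the same region, and for a file-backed mapping they are propagated to the underlying file.

- Private mapping: the first modification copies the data from the data source (copy-on-write) and then changes the copy. Other processes cannot see the change, and the data source is unaffected.

Two processes can implement shared memory with a shared file mapping. Anonymous mappings are usually private; shared anonymous mappings typically appear between parent and child processes. In a process's virtual address space, the code segment and the data segment are private file mappings, while the uninitialized data segment (BSS), the heap, and the stack are private anonymous mappings.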
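The difference between shared and private anonymous mappings across a fork can be sketched in user space. This is a minimal illustration, not kernel code; the helper name value_seen_by_parent is hypothetical, and the sketch assumes a Linux system with glibc.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1 /* exposes MAP_ANONYMOUS on glibc */
#endif
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Hypothetical helper: maps one int anonymously with the given sharing
 * flag (MAP_SHARED or MAP_PRIVATE), stores 7 in it, lets a child process
 * overwrite it with 42, and returns the value the parent sees afterwards.
 * Returns -1 if a system call fails.
 */
int value_seen_by_parent(int sharing_flag)
{
    assert(sharing_flag == MAP_SHARED || sharing_flag == MAP_PRIVATE);

    int *p = mmap(NULL, sizeof *p, PROT_READ | PROT_WRITE,
                  sharing_flag | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    *p = 7;

    int seen = -1;
    pid_t pid = fork();
    if (pid == 0) {
        /* Child: with MAP_PRIVATE this write triggers copy-on-write. */
        *p = 42;
        _exit(0);
    }
    if (pid > 0 && waitpid(pid, NULL, 0) == pid)
        seen = *p; /* parent reads after the child has exited */

    munmap(p, sizeof *p);
    return seen;
}
```

With MAP_SHARED the parent should observe the child's store; with MAP_PRIVATE the child modifies its own copy and the parent keeps the original value.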

Data Structures

A virtual memory area is a range of virtual addresses assigned to a process. The kernel describes it with struct vm_area_struct:

/*

* This struct defines a memory VMM memory area. There is one of these

* per VM-area/task. A VM area is any part of the process virtual memory

* space that has a special rule for the page-fault handlers (ie a shared

* library, the executable area etc).

*/

// The virtual memory area structure

struct vm_area_struct {

/* The first cache line has the info for VMA tree walking. */

// Start address of the virtual memory area

unsigned long vm_start; /* Our start address within vm_mm. */

// End address; the range is [vm_start, vm_end), closed on the left and open on the right

unsigned long vm_end; /* The first byte after our end address

within vm_mm. */

/* linked list of VM areas per task, sorted by address */

// List of virtual memory areas, sorted by start address

struct vm_area_struct *vm_next, *vm_prev;

struct rb_node vm_rb; // red-black tree node

/*

* Largest free memory gap in bytes to the left of this VMA.

* Either between this VMA and vma->vm_prev, or between one of the

* VMAs below us in the VMA rbtree and its ->vm_prev. This helps

* get_unmapped_area find a free area of the right size.

*/

unsigned long rb_subtree_gap;

/* Second cache line starts here. */

// Points to the memory descriptor, i.e. the user virtual address space this area belongs to

struct mm_struct *vm_mm; /* The address space we belong to. */

// Protection bits, i.e. access permissions

pgprot_t vm_page_prot; /* Access permissions of this VMA. */

// Flags

unsigned long vm_flags; /* Flags, see mm.h. */

/*

* For areas with an address space and backing store,

* linkage into the address_space->i_mmap interval tree.

*/

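// Supports looking up which virtual memory areas a given file range is mapped into:

// every virtual memory area that maps the file is added to the interval tree rooted at member i_mmap of the file's address_space structure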

struct {

struct rb_node rb;

unsigned long rb_subtree_last;

} shared;

/*

* A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma

* list, after a COW of one of the file pages. A MAP_SHARED vma

* can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack

* or brk vma (with NULL file) can only be in an anon_vma list.

*/

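// Links together all anon_vma instances associated with this virtual memory area

// A virtual memory area is associated with the parent process's anon_vma instance and with its own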

struct list_head anon_vma_chain; /* Serialized by mmap_sem &

* page_table_lock */

// Points to an anon_vma instance; struct anon_vma organizes all virtual address spaces that the anonymous pages are mapped into

struct anon_vma *anon_vma; /* Serialized by page_table_lock */

/* Function pointers to deal with this struct. */

// Set of virtual memory operations

const struct vm_operations_struct *vm_ops;

/* Information about our backing store: */

// File offset, in units of pages

unsigned long vm_pgoff; /* Offset (within vm_file) in PAGE_SIZE

units */

// The mapped file; NULL for a private anonymous mapping

struct file * vm_file; /* File we map to (can be NULL). */

void * vm_private_data; /* was vm_pte (shared mem) */

#ifndef CONFIG_MMU

struct vm_region *vm_region; /* NOMMU mapping region */

#endif

#ifdef CONFIG_NUMA

struct mempolicy *vm_policy; /* NUMA policy for the VMA */

#endif

struct vm_userfaultfd_ctx vm_userfaultfd_ctx;

};

Next, the flags: member vm_flags holds the flags of the virtual memory area. Only part of the list is shown.

/*

* vm_flags in vm_area_struct, see mm_types.h.

* When changing, update also include/trace/events/mmflags.h

*/

#define VM_NONE 0x00000000

// Readable, writable, executable, and shareable by multiple processes

#define VM_READ 0x00000001 /* currently active flags */

#define VM_WRITE 0x00000002

#define VM_EXEC 0x00000004

#define VM_SHARED 0x00000008

/* mprotect() hardcodes VM_MAYREAD >> 4 == VM_READ, and so for r/w/x bits. */

// Whether the readable, writable, executable, and shared attributes are allowed to be set

#define VM_MAYREAD 0x00000010 /* limits for mprotect() etc */

#define VM_MAYWRITE 0x00000020

#define VM_MAYEXEC 0x00000040

#define VM_MAYSHARE 0x00000080

// The virtual memory area can grow downward

#define VM_GROWSDOWN 0x00000100 /* general info on the segment */

#define VM_UFFD_MISSING 0x00000200 /* missing pages tracking */

#define VM_PFNMAP 0x00000400 /* Page-ranges managed without "struct page", just pure PFN */

#define VM_DENYWRITE 0x00000800 /* ETXTBSY on write attempts.. */

#define VM_UFFD_WP 0x00001000 /* wrprotect pages tracking */

#define VM_LOCKED 0x00002000

#define VM_IO 0x00004000 /* Memory mapped I/O or similar */

/* Used by sys_madvise() */

#define VM_SEQ_READ 0x00008000 /* App will access data sequentially */

#define VM_RAND_READ 0x00010000 /* App will not benefit from clustered reads */

#define VM_DONTCOPY 0x00020000 /* Do not copy this vma on fork */

#define VM_DONTEXPAND 0x00040000 /* Cannot expand with mremap() */

#define VM_LOCKONFAULT 0x00080000 /* Lock the pages covered when they are faulted in */

#define VM_ACCOUNT 0x00100000 /* Is a VM accounted object */

#define VM_NORESERVE 0x00200000 /* should the VM suppress accounting */

#define VM_HUGETLB 0x00400000 /* Huge TLB Page VM */

#define VM_ARCH_1 0x01000000 /* Architecture-specific flag */

#define VM_ARCH_2 0x02000000

#define VM_DONTDUMP 0x04000000 /* Do not include in the core dump */

#ifdef CONFIG_MEM_SOFT_DIRTY

# define VM_SOFTDIRTY 0x08000000 /* Not soft dirty clean area */

#else

# define VM_SOFTDIRTY 0

#endif

#define VM_MIXEDMAP 0x10000000 /* Can contain "struct page" and pure PFN pages */

#define VM_HUGEPAGE 0x20000000 /* MADV_HUGEPAGE marked this vma */

#define VM_NOHUGEPAGE 0x40000000 /* MADV_NOHUGEPAGE marked this vma */

#define VM_MERGEABLE 0x80000000 /* KSM may merge identical pages */
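The comment in the listing notes that each VM_MAY* bit sits exactly four bits above its VM_READ/VM_WRITE/VM_EXEC counterpart. The following user-space sketch models how that layout lets a permission request be checked with a single shift. The helper request_allowed is hypothetical and only approximates the kind of check mprotect() performs; the flag values are copied from the listing.

```c
#include <assert.h>
#include <stdbool.h>

/* Flag values copied from the listing above. */
#define VM_READ     0x00000001UL
#define VM_WRITE    0x00000002UL
#define VM_EXEC     0x00000004UL
#define VM_MAYREAD  0x00000010UL
#define VM_MAYWRITE 0x00000020UL
#define VM_MAYEXEC  0x00000040UL

/*
 * Hypothetical model: a request for VM_READ/VM_WRITE/VM_EXEC bits is
 * allowed only if the matching VM_MAY* bit is set in vm_flags.
 * Shifting vm_flags right by 4 lines the VM_MAY* bits up with the
 * permission bits they guard.
 */
bool request_allowed(unsigned long vm_flags, unsigned long requested)
{
    const unsigned long rwx = VM_READ | VM_WRITE | VM_EXEC;

    assert((requested & ~rwx) == 0); /* only r/w/x may be requested */

    unsigned long may = (vm_flags >> 4) & rwx;
    return (requested & ~may) == 0;
}
```

For example, an area created with VM_MAYREAD but without VM_MAYWRITE can never be made writable later under this model.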

Next, the operations:

/*

* These are the virtual MM functions - opening of an area, closing and

* unmapping it (needed to keep files on disk up-to-date etc), pointer

* to the functions called when a no-page or a wp-page exception occurs.

*/

// Set of virtual memory operations

struct vm_operations_struct {

void (*open)(struct vm_area_struct * area);

void (*close)(struct vm_area_struct * area);

int (*mremap)(struct vm_area_struct * area);

int (*fault)(struct vm_fault *vmf);

int (*huge_fault)(struct vm_fault *vmf, enum page_entry_size pe_size);

void (*map_pages)(struct vm_fault *vmf,

pgoff_t start_pgoff, pgoff_t end_pgoff);

/* notification that a previously read-only page is about to become

* writable, if an error is returned it will cause a SIGBUS */

// Notifies that a previously read-only page is about to become writable; if an error is returned, SIGBUS is sent to the process

int (*page_mkwrite)(struct vm_fault *vmf);

/* same as page_mkwrite when using VM_PFNMAP|VM_MIXEDMAP */

// Called when VM_PFNMAP|VM_MIXEDMAP is used; same purpose as page_mkwrite

int (*pfn_mkwrite)(struct vm_fault *vmf);

/* called by access_process_vm when get_user_pages() fails, typically

* for use by special VMAs that can switch between memory and hardware

*/

int (*access)(struct vm_area_struct *vma, unsigned long addr,

void *buf, int len, int write);

/* Called by the /proc/PID/maps code to ask the vma whether it

* has a special name. Returning non-NULL will also cause this

* vma to be dumped unconditionally. */

const char *(*name)(struct vm_area_struct *vma);

#ifdef CONFIG_NUMA

/*

* set_policy() op must add a reference to any non-NULL @new mempolicy

* to hold the policy upon return. Caller should pass NULL @new to

* remove a policy and fall back to surrounding context--i.e. do not

* install a MPOL_DEFAULT policy, nor the task or system default

* mempolicy.

*/

int (*set_policy)(struct vm_area_struct *vma, struct mempolicy *new);

/*

* get_policy() op must add reference [mpol_get()] to any policy at

* (vma,addr) marked as MPOL_SHARED. The shared policy infrastructure

* in mm/mempolicy.c will do this automatically.

* get_policy() must NOT add a ref if the policy at (vma,addr) is not

* marked as MPOL_SHARED. vma policies are protected by the mmap_sem.

* If no [shared/vma] mempolicy exists at the addr, get_policy() op

* must return NULL--i.e., do not "fallback" to task or system default

* policy.

*/

struct mempolicy *(*get_policy)(struct vm_area_struct *vma,

unsigned long addr);

#endif

/*

* Called by vm_normal_page() for special PTEs to find the

* page for @addr. This is useful if the default behavior

* (using pte_page()) would not find the correct page.

*/

struct page *(*find_special_page)(struct vm_area_struct *vma,

unsigned long addr);

};

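Relationships among these memory-related structures

Creating a Memory Mapping

The C standard library wrapper function mmap creates a memory mapping.

When a process creates an anonymous memory mapping, physical pages of memory are mapped into its virtual address space. When a process maps a file into its virtual address space, it can access the file as if it were memory, without calling read()/write(); this avoids switches between user mode and kernel mode and speeds up file reads and writes. Two processes that create shared memory mappings of the same file obtain shared memory. On success the system call returns the virtual address, and on failure it returns a negative error number; the C library wrapper reports failure by returning MAP_FAILED and setting errno.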

#include <sys/mman.h>

void *mmap(void *addr, size_t length, int prot, int flags,int fd, off_t offset);

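Parameters

- addr: the starting virtual address. If addr is 0 (NULL), the kernel chooses the virtual address; otherwise the kernel treats it as a hint and picks a virtual address nearby.

- length: length of the mapping, in bytes.

- prot: protection bits, for example PROT_EXEC (pages may be executed), PROT_READ (pages may be read), PROT_WRITE (pages may be written), and PROT_NONE (pages may not be accessed).

- flags: flags such as MAP_SHARED (shared mapping), MAP_PRIVATE (private mapping), MAP_ANONYMOUS (anonymous mapping), MAP_FIXED (map at exactly addr), MAP_LOCKED (lock the pages in memory), and MAP_POPULATE (populate the page tables, i.e. allocate physical pages and map them; for a file mapping this flag triggers read-ahead of the file).

- fd: file descriptor; only meaningful when creating a file mapping.

- offset: offset into the file, in bytes; must be a multiple of the page size.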
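The parameters can be exercised with a small user-space sketch. It assumes a Linux system with a writable /tmp; the helper names make_demo_file, first_byte_after_shared_write and errno_for_unaligned_offset are hypothetical and exist only for this illustration.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1
#endif
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Creates an unlinked temporary file holding "hello\0"; returns fd or -1. */
static int make_demo_file(void)
{
    char path[] = "/tmp/mmap-demo-XXXXXX";
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path); /* the file disappears once fd is closed */
    if (write(fd, "hello", 6) != 6) {
        close(fd);
        return -1;
    }
    return fd;
}

/*
 * Changes the first byte of the file through a MAP_SHARED mapping and
 * reads it back with pread(). Returns that byte, or -1 on error.
 */
int first_byte_after_shared_write(void)
{
    int fd = make_demo_file();
    if (fd < 0)
        return -1;

    int result = -1;
    char *p = mmap(NULL, 6, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (p != MAP_FAILED) {
        p[0] = 'J';           /* plain store, no write() call */
        msync(p, 6, MS_SYNC); /* flush the change to the file */
        munmap(p, 6);

        char c;
        if (pread(fd, &c, 1, 0) == 1)
            result = (unsigned char)c;
    }
    close(fd);
    return result;
}

/* Returns the errno that mmap() reports for an offset of 1 byte. */
int errno_for_unaligned_offset(void)
{
    assert(sysconf(_SC_PAGESIZE) > 1); /* so offset 1 is unaligned */

    int fd = make_demo_file();
    if (fd < 0)
        return -1;

    errno = 0;
    void *p = mmap(NULL, 6, PROT_READ, MAP_SHARED, fd, 1);
    int err = (p == MAP_FAILED) ? errno : 0;
    if (p != MAP_FAILED)
        munmap(p, 6);
    close(fd);
    return err;
}
```

The first helper shows a store through a shared file mapping reaching the file; the second shows the wrapper rejecting an offset that is not a multiple of the page size.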

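The kernel provides the mmap system call defined by POSIX. The system call proceeds as follows:

- Check whether the offset is a multiple of the page size; if it is not, return -EINVAL.

- If it is, convert the offset to units of pages and call the function sys_mmap_pgoff.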
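The two steps can be modelled in a few lines. This is a user-space sketch only: the names offset_to_pgoff, DEMO_PAGE_SHIFT and DEMO_PAGE_SIZE are hypothetical, and a 4 KiB page size is assumed.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define DEMO_PAGE_SHIFT 12 /* assumption: 4 KiB pages */
#define DEMO_PAGE_SIZE  (1UL << DEMO_PAGE_SHIFT)

/*
 * Hypothetical model of the entry check: reject a byte offset that is
 * not page-aligned, otherwise convert it to an offset in pages.
 * Returns 0 and stores the result in *pgoff, or returns -EINVAL.
 */
int offset_to_pgoff(unsigned long offset, unsigned long *pgoff)
{
    assert(pgoff != NULL);

    if (offset & (DEMO_PAGE_SIZE - 1))
        return -EINVAL; /* low bits set: not a multiple of the page size */

    *pgoff = offset >> DEMO_PAGE_SHIFT;
    return 0;
}
```

Under these assumptions a byte offset of 8192 becomes page offset 2, while an offset of 100 is rejected.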

The code:

asmlinkage long sys_mmap_pgoff(unsigned long addr, unsigned long len,

unsigned long prot, unsigned long flags,unsigned long fd, unsigned long pgoff);

SYSCALL_DEFINE6(mmap_pgoff, unsigned long, addr, unsigned long, len,

unsigned long, prot, unsigned long, flags,

unsigned long, fd, unsigned long, pgoff)

{

struct file *file = NULL;

unsigned long retval;

if (!(flags & MAP_ANONYMOUS)) {

audit_mmap_fd(fd, flags);

file = fget(fd);

if (!file)

return -EBADF;

if (is_file_hugepages(file))

len = ALIGN(len, huge_page_size(hstate_file(file)));

retval = -EINVAL;

if (unlikely(flags & MAP_HUGETLB && !is_file_hugepages(file)))

goto out_fput;

} else if (flags & MAP_HUGETLB) {

struct user_struct *user = NULL;

struct hstate *hs;

hs = hstate_sizelog((flags >> MAP_HUGE_SHIFT) & MAP_HUGE_MASK);

if (!hs)

return -EINVAL;

len = ALIGN(len, huge_page_size(hs));

/*

* VM_NORESERVE is used because the reservations will be

* taken when vm_ops->mmap() is called

* A dummy user value is used because we are not locking

* memory so no accounting is necessary

*/

file = hugetlb_file_setup(HUGETLB_ANON_FILE, len,

VM_NORESERVE,

&user, HUGETLB_ANONHUGE_INODE,

(flags >> MAP_HUGE_SHIFT) & MAP_HUGE_MASK);

if (IS_ERR(file))

return PTR_ERR(file);

}

flags &= ~(MAP_EXECUTABLE | MAP_DENYWRITE);

retval = vm_mmap_pgoff(file, addr, len, prot, flags, pgoff);

out_fput:

if (file)

fput(file);

return retval;

}

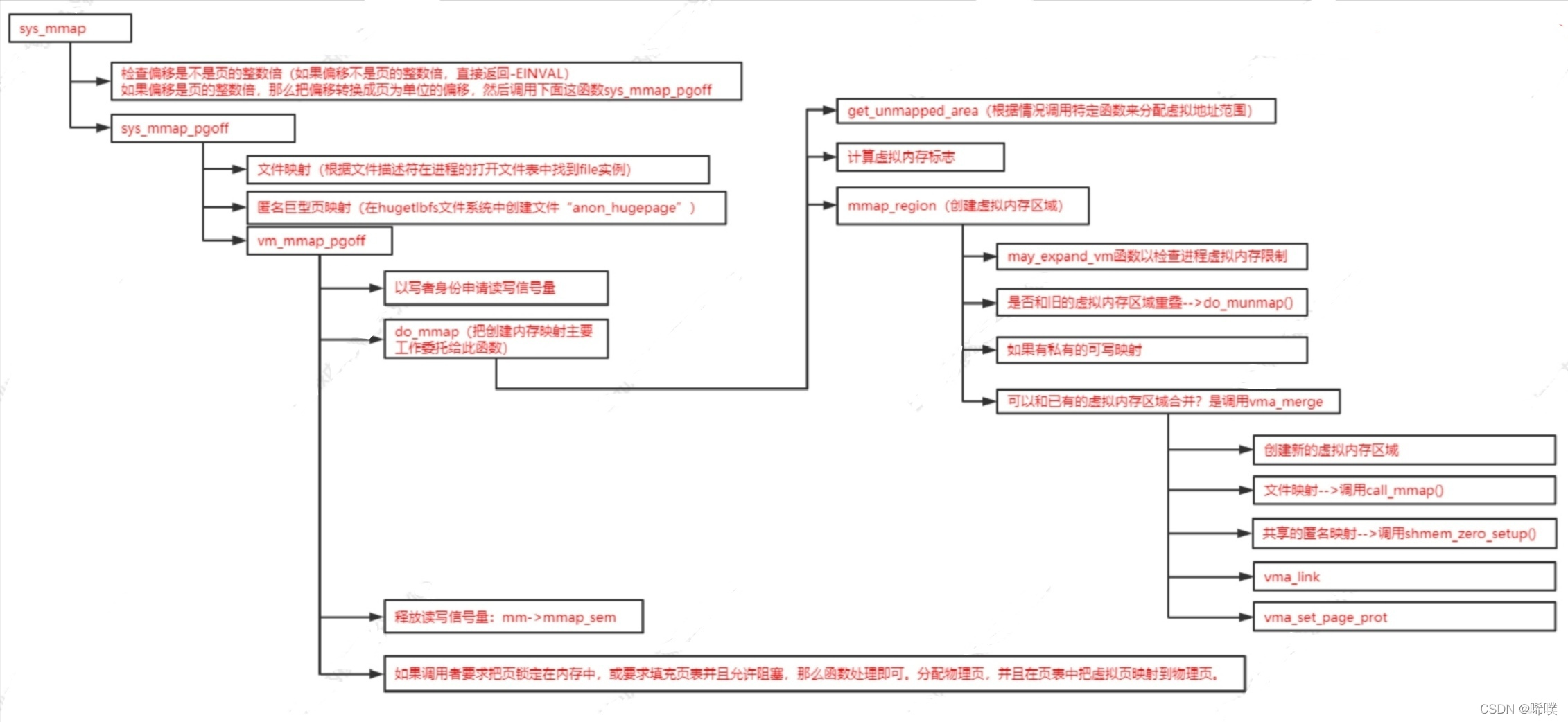

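Flowchart of the function's execution (figure above).

Two functions in the flowchart deserve a short description:

- vma_link: adds the virtual memory area to the list and the red-black tree. If the area is associated with a file, it is also added to the file's interval tree, which tracks where the file is mapped so that those mappings are easy to find.

- vma_set_page_prot: computes the page protection bits from the flags of the virtual memory area. For a shared writable mapping, the writable bit is removed from the page protection bits so that pages are mapped read-only at first; the purpose is to track write events.

The glibc memory allocator, ptmalloc, requests memory from the kernel in units of pages with brk() or mmap(), then splits the pages into small blocks that it hands out to the application.

There is a threshold (the mmap threshold, 128 KB by default). If the application requests less than this size, ptmalloc obtains virtual memory from the kernel with brk(); otherwise it uses mmap(). An application can also call mmap() directly to request memory from the kernel.

Removing a Memory Mapping

The munmap system call removes a memory mapping. It takes two parameters: a start address and a length. It delegates most of the work to the function do_munmap in the source file mm/mmap.c.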

/* Munmap is split into 2 main parts -- this part which finds

* what needs doing, and the areas themselves, which do the

* work. This now handles partial unmappings.

* Jeremy Fitzhardinge <jeremy@goop.org>

*/

int do_munmap(struct mm_struct *mm, unsigned long start, size_t len,

struct list_head *uf)

{

unsigned long end;

struct vm_area_struct *vma, *prev, *last;

if ((offset_in_page(start)) || start > TASK_SIZE || len > TASK_SIZE-start)

return -EINVAL;

len = PAGE_ALIGN(len);

if (len == 0)

return -EINVAL;

/* Find the first overlapping VMA */

// Use the start address to find the first virtual memory area to remove

vma = find_vma(mm, start);

if (!vma)

return 0;

prev = vma->vm_prev;

/* we have start < vma->vm_end */

/* if it doesn't overlap, we have nothing.. */

end = start + len;

if (vma->vm_start >= end)

return 0;

if (uf) {

int error = userfaultfd_unmap_prep(vma, start, end, uf);

if (error)

return error;

}

/*

* If we need to split any vma, do it now to save pain later.

*

* Note: mremap's move_vma VM_ACCOUNT handling assumes a partially

* unmapped vm_area_struct will remain in use: so lower split_vma

* places tmp vma above, and higher split_vma places tmp vma below.

*/

if (start > vma->vm_start) {

int error;

/*

* Make sure that map_count on return from munmap() will

* not exceed its limit; but let map_count go just above

* its limit temporarily, to help free resources as expected.

*/

if (end < vma->vm_end && mm->map_count >= sysctl_max_map_count)

return -ENOMEM;

// If only part of this virtual memory area is being removed, split the area at start

error = __split_vma(mm, vma, start, 0);

if (error)

return error;

prev = vma;

}

/* Does it split the last one? */

// Use the end address to find the last virtual memory area to remove

last = find_vma(mm, end);

if (last && end > last->vm_start) {

// If only part of last is being removed, split it at end

int error = __split_vma(mm, last, end, 1);

if (error)

return error;

}

vma = prev ? prev->vm_next : mm->mmap;

/*

* unlock any mlock()ed ranges before detaching vmas

*/

if (mm->locked_vm) {

struct vm_area_struct *tmp = vma;

while (tmp && tmp->vm_start < end) {

if (tmp->vm_flags & VM_LOCKED) {

mm->locked_vm -= vma_pages(tmp);

// For each area to be removed that is locked in memory, call this function to unlock it

munlock_vma_pages_all(tmp);

}

tmp = tmp->vm_next;

}

}

/*

* Remove the vma's, and unmap the actual pages

*/

// Remove all target areas from the process's list and tree of virtual memory areas and collect them in a temporary list

detach_vmas_to_be_unmapped(mm, vma, prev, end);

// Unmap the pages in the range: remove their page table entries

unmap_region(mm, vma, prev, start, end);

// Let architecture-specific code handle its part of the unmapping

arch_unmap(mm, vma, start, end);

/* Fix up all other VM information */

remove_vma_list(mm, vma);

return 0;

}

int vm_munmap(unsigned long start, size_t len)

{

int ret;

struct mm_struct *mm = current->mm;

LIST_HEAD(uf);

if (down_write_killable(&mm->mmap_sem))

return -EINTR;

ret = do_munmap(mm, start, len, &uf);

up_write(&mm->mmap_sem);

userfaultfd_unmap_complete(mm, &uf);

return ret;

}

EXPORT_SYMBOL(vm_munmap);
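From user space, the splitting behaviour and the alignment check at the top of do_munmap can be observed with a short sketch. It assumes Linux; the helper name unmap_middle_page is hypothetical.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1 /* exposes MAP_ANONYMOUS on glibc */
#endif
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: maps three pages, unmaps only the middle one
 * (which makes the kernel split the area), and checks that the outer
 * pages are still usable. Also checks that an unaligned start address
 * is rejected with EINVAL. Returns 0 if everything behaved as expected.
 */
int unmap_middle_page(void)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);
    size_t ps = (size_t)page;

    char *base = mmap(NULL, 3 * ps, PROT_READ | PROT_WRITE,
                      MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (base == MAP_FAILED)
        return -1;
    base[0] = 'a';
    base[2 * ps] = 'c';

    /* Partial unmapping: only the second page goes away. */
    if (munmap(base + ps, ps) != 0) {
        munmap(base, 3 * ps);
        return -1;
    }
    int outer_pages_intact = (base[0] == 'a' && base[2 * ps] == 'c');

    /* A start address that is not page-aligned is an error. */
    errno = 0;
    int unaligned_rejected =
        (munmap(base + 1, ps) == -1 && errno == EINVAL);

    /* Unmapping a range that contains a hole is not an error. */
    munmap(base, 3 * ps);
    return (outer_pages_intact && unaligned_rejected) ? 0 : -1;
}
```

Touching the middle page after the partial munmap would raise SIGSEGV, so the sketch deliberately does not do that.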

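Flowchart of the munmap path.

Other Interfaces

The memory management subsystem also provides the following system calls:

- mmap(): creates a memory mapping;

- mremap(): grows or shrinks an existing memory mapping, possibly moving it at the same time;

- munmap(): removes a memory mapping;

- brk(): sets the upper bound of the heap;

- remap_file_pages(): creates a nonlinear file mapping, i.e. one where the relationship between file ranges and virtual addresses is not linear; it is deprecated, and newer kernels have removed this functionality;

- mprotect(): sets the access permissions of a virtual memory area;

- madvise(): gives the kernel advice about memory usage. The application tells the kernel how it expects to use a given virtual memory area so that the kernel can choose suitable read-ahead and caching strategies.

One of them, mprotect, in more detail: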

#include <sys/mman.h>

int mprotect(void *addr, size_t len, int prot);

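Parameter addr: the starting virtual address; must be a multiple of the page size.

len: length of the virtual memory area, in bytes.

prot: protection bits, such as PROT_NONE (pages may not be accessed), PROT_READ (pages may be read), PROT_WRITE (pages may be written), and PROT_EXEC (pages may be executed). On success the function returns 0. On failure the system call returns a negative error number, which the C library wrapper reports as -1 with errno set.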
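A minimal user-space sketch of mprotect follows, assuming Linux. The helper name mprotect_demo is hypothetical.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1 /* exposes MAP_ANONYMOUS on glibc */
#endif
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: writes to a page, downgrades it to read-only,
 * confirms it is still readable, and confirms that an address which is
 * not page-aligned is rejected with EINVAL.
 * Returns 0 if everything behaved as expected, -1 otherwise.
 */
int mprotect_demo(void)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);
    size_t ps = (size_t)page;

    char *p = mmap(NULL, ps, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    p[0] = 'x';

    int ok = 0;
    if (mprotect(p, ps, PROT_READ) == 0) {
        /* Reads still work; a write here would raise SIGSEGV. */
        int still_readable = (p[0] == 'x');

        errno = 0;
        int unaligned_rejected =
            (mprotect(p + 1, ps, PROT_READ) == -1 && errno == EINVAL);

        ok = still_readable && unaligned_rejected;
    }
    munmap(p, ps);
    return ok ? 0 : -1;
}
```

The sketch only exercises the success path and the alignment error; other failures, such as requesting access the mapping does not permit, are reported through errno in the same way.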

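Two functions are available in kernel space:

- remap_pfn_range(): maps physical pages of memory into a process's virtual address space. It is used to share memory between a process and the kernel.

- io_remap_pfn_range(): maps the physical addresses of peripheral registers into a process's virtual address space so that the process can access the registers directly.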

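Physical Memory Organization

Architecture

Physical memory architectures are divided into NUMA and UMA. They were covered earlier, so this is only a brief recap.

Non-Uniform Memory Access (NUMA): a multiprocessor system whose memory is divided into multiple memory nodes. The time needed to access a memory node depends on the distance between the processor and that node. NUMA is the mainstream architecture of mid-range and high-end servers because each processor has a local memory node that it can access faster than other nodes.

Symmetric Multi-Processor (SMP), i.e. Uniform Memory Access (UMA): every processor takes the same time to access memory. Real systems can use a hybrid architecture, with SMP inside each NUMA node.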

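Memory Models

A memory model is the layout of physical memory as seen by the processor; the kernel manages different memory models differently. The memory management subsystem supports three memory models:

- Flat Memory: the physical address space of memory is contiguous, with no holes.

- Discontiguous Memory: the physical address space of memory contains holes; this model handles holes efficiently.

- Sparse Memory: the physical address space of memory contains holes. If memory hot-plug must be supported, the sparse memory model is the only choice.

Two questions help clarify the differences among the three.

When does the physical address space of memory contain holes? When a system has several blocks of physical memory, there can be a hole between the physical addresses of two blocks, and there can also be holes inside the address range of a single block. The processor's reference manual lists the physical address ranges assigned to memory. If the physical address space is contiguous, the discontiguous memory model only adds overhead and reduces performance, so the flat memory model is the better choice.

Which memory model should be chosen if the physical address space contains holes? The flat memory model allocates page structures for the holes as well, which wastes memory, whereas the discontiguous memory model is optimized for holes and does not allocate page structures for them. In this case the discontiguous memory model is the better choice.

The sparse memory model is treated as experimental in the kernel version discussed here. Avoid it unless the physical address space is very sparse or memory hot-plug must be supported.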

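The Three-Level Data Structure for Memory

The memory management subsystem describes physical memory with a three-level structure: node, zone, and page.

Memory Nodes

There are two kinds of memory node.

On a NUMA system, memory nodes are divided according to the distance between processors and memory.

On a UMA system with discontiguous memory, a memory node is a memory region one level above the zone. Nodes are divided by physical address contiguity: each block of memory with contiguous physical addresses is one memory node. The layout of each memory node is described by a pglist_data structure. With the flat memory model there is only one pglist_data instance.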

// pglist_data instance of a memory node

typedef struct pglist_data {

struct zone node_zones[MAX_NR_ZONES]; // array of memory zones

struct zonelist node_zonelists[MAX_ZONELISTS]; // fallback zone lists

int nr_zones; // number of memory zones

#ifdef CONFIG_FLAT_NODE_MEM_MAP /* means !SPARSEMEM */

struct page *node_mem_map; // array of page descriptors

#ifdef CONFIG_PAGE_EXTENSION

struct page_ext *node_page_ext; // extended page attributes

#endif

#endif

#ifndef CONFIG_NO_BOOTMEM

struct bootmem_data *bdata;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/*

* Must be held any time you expect node_start_pfn, node_present_pages

* or node_spanned_pages stay constant. Holding this will also

* guarantee that any pfn_valid() stays that way.

*

* pgdat_resize_lock() and pgdat_resize_unlock() are provided to

* manipulate node_size_lock without checking for CONFIG_MEMORY_HOTPLUG.

*

* Nests above zone->lock and zone->span_seqlock

*/

spinlock_t node_size_lock;

#endif

unsigned long node_start_pfn; // first physical page frame number

// Total number of physical pages (excluding holes)

unsigned long node_present_pages; /* total number of physical pages */

// Total span in physical pages (including holes)

unsigned long node_spanned_pages; /* total size of physical page

range, including holes */

int node_id; // node identifier

wait_queue_head_t kswapd_wait;

wait_queue_head_t pfmemalloc_wait;

struct task_struct *kswapd; /* Protected by

mem_hotplug_begin/end() */

int kswapd_order;

enum zone_type kswapd_classzone_idx;

int kswapd_failures; /* Number of 'reclaimed == 0' runs */

#ifdef CONFIG_COMPACTION

int kcompactd_max_order;

enum zone_type kcompactd_classzone_idx;

wait_queue_head_t kcompactd_wait;

struct task_struct *kcompactd;

#endif

#ifdef CONFIG_NUMA_BALANCING

/* Lock serializing the migrate rate limiting window */

spinlock_t numabalancing_migrate_lock;

/* Rate limiting time interval */

unsigned long numabalancing_migrate_next_window;

/* Number of pages migrated during the rate limiting time interval */

unsigned long numabalancing_migrate_nr_pages;

#endif

/*

* This is a per-node reserve of pages that are not available

* to userspace allocations.

*/

unsigned long totalreserve_pages;

#ifdef CONFIG_NUMA

/*

* zone reclaim becomes active if more unmapped pages exist.

*/

unsigned long min_unmapped_pages;

unsigned long min_slab_pages;

#endif /* CONFIG_NUMA */

/* Write-intensive fields used by page reclaim */

ZONE_PADDING(_pad1_)

spinlock_t lru_lock;

#ifdef CONFIG_DEFERRED_STRUCT_PAGE_INIT

/*

* If memory initialisation on large machines is deferred then this

* is the first PFN that needs to be initialised.

*/

unsigned long first_deferred_pfn;

unsigned long static_init_size;

#endif /* CONFIG_DEFERRED_STRUCT_PAGE_INIT */

#ifdef CONFIG_TRANSPARENT_HUGEPAGE

spinlock_t split_queue_lock;

struct list_head split_queue;

unsigned long split_queue_len;

#endif

/* Fields commonly accessed by the page reclaim scanner */

struct lruvec lruvec;

/*

* The target ratio of ACTIVE_ANON to INACTIVE_ANON pages on

* this node's LRU. Maintained by the pageout code.

*/

unsigned int inactive_ratio;

unsigned long flags;

ZONE_PADDING(_pad2_)

/* Per-node vmstats */

struct per_cpu_nodestat __percpu *per_cpu_nodestats;

atomic_long_t vm_stat[NR_VM_NODE_STAT_ITEMS];

} pg_data_t;

Memory Zones

A memory node is divided into memory zones. The kernel defines the following zone types:

enum zone_type {

#ifdef CONFIG_ZONE_DMA

/*

* ZONE_DMA is used when there are devices that are not able

* to do DMA to all of addressable memory (ZONE_NORMAL). Then we

* carve out the portion of memory that is needed for these devices.

* The range is arch specific.

*

* Some examples

*

* Architecture Limit

* ---------------------------

* parisc, ia64, sparc <4G

* s390 <2G

* arm Various

* alpha Unlimited or 0-16MB.

*

* i386, x86_64 and multiple other arches

* <16M.

*/

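// Direct memory access (DMA) zone. It is needed when some devices cannot directly access all of memory;

// for example, devices on the ISA bus can only access memory below 16 MB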

ZONE_DMA,

#endif

#ifdef CONFIG_ZONE_DMA32

/*

* x86_64 needs two ZONE_DMAs because it supports devices that are

* only able to do DMA to the lower 16M but also 32 bit devices that

* can only do DMA areas below 4G.

*/

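// On 64-bit systems, the DMA32 zone is required to support both devices that can only directly access memory below 16 MB

// and 32-bit devices that can only directly access memory below 4 GB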

ZONE_DMA32,

#endif

/*

* Normal addressable memory is in ZONE_NORMAL. DMA operations can be

* performed on pages in ZONE_NORMAL if the DMA devices support

* transfers to all addressable memory.

*/

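// Memory mapped directly into the kernel virtual address space, also called the direct-mapped or linear-mapped region

// ARM processors need page tables for this mapping; MIPS processors do not.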

ZONE_NORMAL,

#ifdef CONFIG_HIGHMEM

/*

* A memory area that is only addressable by the kernel through

* mapping portions into its own address space. This is for example

* used by i386 to allow the kernel to address the memory beyond

* 900MB. The kernel will set up special mappings (page

* table entries on i386) for each page that the kernel needs to

* access.

*/

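/* High memory zone, a legacy of the 32-bit era. The kernel and user address spaces are split 1:3,

* so the kernel address space is only 1 GB and memory beyond 1 GB cannot be mapped directly into it.

* Memory that cannot be mapped directly is placed in the high memory zone.

* On 64-bit systems the kernel virtual address space is very large, so no high memory zone is needed.

*/

// ZONE_DMA, ZONE_DMA32, and ZONE_NORMAL are collectively called low memory.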

ZONE_HIGHMEM,

#endif

// A pseudo zone used to prevent memory fragmentation.

ZONE_MOVABLE,

#ifdef CONFIG_ZONE_DEVICE

// Zone added to support hot-plug of persistent memory

ZONE_DEVICE,

#endif

__MAX_NR_ZONES

};

Each memory zone is described by a zone structure. The code was shown before; here it is again with a few more comments, written as block comments.

struct zone {

/* Read-mostly fields */

/* zone watermarks, access with *_wmark_pages(zone) macros */

// Zone watermarks; access them with the *_wmark_pages(zone) macros

unsigned long watermark[NR_WMARK]; /* watermarks used by the page allocator */

unsigned long nr_reserved_highatomic;

/*

* We don't know if the memory that we're going to allocate will be

* freeable or/and it will be released eventually, so to avoid totally

* wasting several GB of ram we must reserve some of the lower zone

* memory (otherwise we risk to run OOM on the lower zones despite

* there being tons of freeable ram on the higher zones). This array is

* recalculated at runtime if the sysctl_lowmem_reserve_ratio sysctl

* changes.

*/

/* Used by the page allocator: how many pages this zone keeps in reserve and will not lend to higher zone types */

long lowmem_reserve[MAX_NR_ZONES];

#ifdef CONFIG_NUMA

int node;

#endif

/* Points to the pglist_data instance of the memory node */

struct pglist_data *zone_pgdat;

/* Per-CPU page set */

struct per_cpu_pageset __percpu *pageset;

#ifndef CONFIG_SPARSEMEM

/*

* Flags for a pageblock_nr_pages block. See pageblock-flags.h.

* In SPARSEMEM, this map is stored in struct mem_section

*/

unsigned long *pageblock_flags;

#endif /* CONFIG_SPARSEMEM */

/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

unsigned long zone_start_pfn; /* first physical page frame number of this zone */

/*

* spanned_pages is the total pages spanned by the zone, including

* holes, which is calculated as:

* spanned_pages = zone_end_pfn - zone_start_pfn;

*

* present_pages is physical pages existing within the zone, which

* is calculated as:

* present_pages = spanned_pages - absent_pages(pages in holes);

*

* managed_pages is present pages managed by the buddy system, which

* is calculated as (reserved_pages includes pages allocated by the

* bootmem allocator):

* managed_pages = present_pages - reserved_pages;

*

* So present_pages may be used by memory hotplug or memory power

* management logic to figure out unmanaged pages by checking

* (present_pages - managed_pages). And managed_pages should be used

* by page allocator and vm scanner to calculate all kinds of watermarks

* and thresholds.

*

* Locking rules:

*

* zone_start_pfn and spanned_pages are protected by span_seqlock.

* It is a seqlock because it has to be read outside of zone->lock,

* and it is done in the main allocator path. But, it is written

* quite infrequently.

*

* The span_seq lock is declared along with zone->lock because it is

* frequently read in proximity to zone->lock. It's good to

* give them a chance of being in the same cacheline.

*

* Write access to present_pages at runtime should be protected by

* mem_hotplug_begin/end(). Any reader who can't tolerant drift of

* present_pages should get_online_mems() to get a stable value.

*

* Read access to managed_pages should be safe because it's unsigned

* long. Write access to zone->managed_pages and totalram_pages are

* protected by managed_page_count_lock at runtime. Idealy only

* adjust_managed_page_count() should be used instead of directly

* touching zone->managed_pages and totalram_pages.

*/

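unsigned long managed_pages; /* number of physical pages managed by the buddy allocator */

unsigned long spanned_pages; /* total pages spanned by this zone, including holes */

unsigned long present_pages; /* physical pages present in this zone, excluding holes */

const char *name; /* zone name */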

#ifdef CONFIG_MEMORY_ISOLATION

/*

* Number of isolated pageblock. It is used to solve incorrect

* freepage counting problem due to racy retrieving migratetype

* of pageblock. Protected by zone->lock.

*/

unsigned long nr_isolate_pageblock;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/* see spanned/present_pages for more description */

seqlock_t span_seqlock;

#endif

int initialized;

/* Write-intensive fields used from the page allocator */

ZONE_PADDING(_pad1_)

/* free areas of different sizes */

// MAX_ORDER is the largest allocatable order plus 1.

// The default is 11, so the buddy allocator can allocate at most 2^10 pages at once

struct free_area free_area[MAX_ORDER]; /* free areas of different sizes */

/* zone flags, see below */

unsigned long flags;

/* Primarily protects free_area */

spinlock_t lock;

/* Write-intensive fields used by compaction and vmstats. */

ZONE_PADDING(_pad2_)

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* pfn where compaction free scanner should start */

unsigned long compact_cached_free_pfn;

/* pfn where async and sync compaction migration scanner should start */

unsigned long compact_cached_migrate_pfn[2];

#endif

#ifdef CONFIG_COMPACTION

/*

* On compaction failure, 1<<compact_defer_shift compactions

* are skipped before trying again. The number attempted since

* last failure is tracked with compact_considered.

*/

unsigned int compact_considered;

unsigned int compact_defer_shift;

int compact_order_failed;

#endif

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* Set to true when the PG_migrate_skip bits should be cleared */

bool compact_blockskip_flush;

#endif

bool contiguous;

ZONE_PADDING(_pad3_)

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

} ____cacheline_internodealigned_in_smp;
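The kernel comment in the listing gives three formulas that relate spanned_pages, present_pages and managed_pages. A small worked example makes them concrete. The struct demo_zone_counts and the helper zone_counts are hypothetical names for this sketch, and the input numbers are made up for illustration.

```c
#include <assert.h>

/* Hypothetical container for the three page counts of a zone. */
struct demo_zone_counts {
    unsigned long spanned; /* zone_end_pfn - zone_start_pfn */
    unsigned long present; /* spanned - pages in holes */
    unsigned long managed; /* present - reserved pages */
};

/*
 * Applies the formulas from the struct zone comment:
 *   spanned_pages = zone_end_pfn - zone_start_pfn
 *   present_pages = spanned_pages - absent_pages
 *   managed_pages = present_pages - reserved_pages
 */
struct demo_zone_counts zone_counts(unsigned long start_pfn,
                                    unsigned long end_pfn,
                                    unsigned long absent_pages,
                                    unsigned long reserved_pages)
{
    assert(end_pfn >= start_pfn);

    struct demo_zone_counts c;
    c.spanned = end_pfn - start_pfn;
    assert(absent_pages <= c.spanned);
    c.present = c.spanned - absent_pages;
    assert(reserved_pages <= c.present);
    c.managed = c.present - reserved_pages;
    return c;
}
```

For a zone spanning page frames 0x100 to 0x500 with 0x80 pages in holes and 0x20 reserved pages, the helper yields 0x400 spanned, 0x380 present, and 0x360 managed pages.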

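Physical Pages

Each physical page has a corresponding page structure, called a page descriptor. Member node_mem_map of a memory node's pglist_data instance points to the array of page descriptors for all physical pages in that node. The code once more: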

/*

* Each physical page in the system has a struct page associated with

* it to keep track of whatever it is we are using the page for at the

* moment. Note that we have no way to track which tasks are using

* a page, though if it is a pagecache page, rmap structures can tell us

* who is mapping it.

*

* The objects in struct page are organized in double word blocks in

* order to allows us to use atomic double word operations on portions

* of struct page. That is currently only used by slub but the arrangement

* allows the use of atomic double word operations on the flags/mapping

* and lru list pointers also.

*/

struct page {

/* First double word block */

/* Flags; each bit has a different meaning */

unsigned long flags; /* Atomic flags, some possibly updated asynchronously */

union {

/*

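* If mapping == 0, the page belongs to the swap cache; when an address space is needed, the swap area's address space swapper_space is used

* If mapping != 0 and bit[0] == 0, the page belongs to the page cache or a file mapping, and mapping points to the file's address_space

* If mapping != 0 and bit[0] != 0, the page is an anonymous mapping, and mapping points to a struct anon_vma object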

*/

struct address_space *mapping;

void *s_mem; /* slab first object */

};

/* Second double word */

struct {

union {

pgoff_t index; /* Our offset within mapping. */

void *freelist; /* sl[aou]b first free object */

bool pfmemalloc;

};

union {

#if defined(CONFIG_HAVE_CMPXCHG_DOUBLE) && \

defined(CONFIG_HAVE_ALIGNED_STRUCT_PAGE)

/* Used for cmpxchg_double in slub */

unsigned long counters;

#else

/*

* Keep _count separate from slub cmpxchg_double data.

* As the rest of the double word is protected by

* slab_lock but _count is not.

*/

unsigned counters;

#endif

struct {

union {

/*

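* Number of times the page is mapped by page tables, i.e. how many processes share it. The initial value is -1; if the page is mapped by only one process's page table, the value is 0.

* If the page is in the buddy system, the value is PAGE_BUDDY_MAPCOUNT_VALUE (-128);

* the kernel checks for PAGE_BUDDY_MAPCOUNT_VALUE to decide whether the page belongs to the buddy system.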

*/

atomic_t _mapcount;

struct { /* SLUB */

unsigned inuse:16;/* number of objects in this page that are in use */

unsigned objects:15;

unsigned frozen:1;/* frozen: the slab is held as a per-CPU slab (cpu_slab); unfrozen: it is on the partial or full list */

};

int units; /* SLOB */

};

/*

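* Reference count: how many references the kernel holds to this page. It is incremented before operating on the page and decremented afterwards.

* When it reaches 0, nothing references the page any more, so the page can be unmapped; this is typically useful during memory reclaim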

*/

atomic_t _count; /* Usage count, see below. */

};

unsigned int active; /* SLAB */

};

};

/* Third double word block */

union {

/*

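* When the page is in the buddy system, links buddies of the same order (only the lru of the first page of a buddy block is used)

* If PG_slab is set, the page belongs to a slab: page->lru.next points to the management structure of the cache the page resides in, and page->lru.prev points to the management structure of the slab holding the page

* When the page is used by user space or as page cache, links the page into the corresponding LRU list of the zone for use by memory reclaim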

*/

struct list_head lru; /* Pageout list, eg. active_list

* protected by zone->lru_lock !

* Can be used as a generic list

* by the page owner.

*/

/* Used as the per-CPU partial list */

struct { /* slub per cpu partial pages */

struct page *next; /* Next partial slab */

#ifdef CONFIG_64BIT

int pages; /* Nr of partial slabs left */

int pobjects; /* Approximate # of objects */

#else

/* */

short int pages;

short int pobjects;

#endif

};

struct slab *slab_page; /* slab fields */

struct rcu_head rcu_head; /* Used by SLAB

* when destroying via RCU

*/

/* First tail page of compound page */

struct {

compound_page_dtor *compound_dtor;

unsigned long compound_order;

};

};

/* Remainder is not double word aligned */

union {

/*

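* If PG_private is set, private points to a struct buffer_head

* If PG_compound is set, it points to a struct page

* If PG_swapcache is set, private holds the page's location in the swap area as a swp_entry_t

* If _mapcount == PAGE_BUDDY_MAPCOUNT_VALUE, the page is in the buddy system and private holds the order of the buddy block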

*/

unsigned long private;

struct kmem_cache *slab_cache; /* SL[AU]B: Pointer to slab */

struct page *first_page; /* Compound tail pages */

};

#ifdef CONFIG_MEMCG

struct mem_cgroup *mem_cgroup;

#endif

/*

* On machines where all RAM is mapped into kernel address space,

* we can simply calculate the virtual address. On machines with

* highmem some memory is mapped into kernel virtual memory

* dynamically, so we need a place to store that address.

* Note that this field could be 16 bits on x86 ... ;)

*

* Architectures with slow multiplication can define

* WANT_PAGE_VIRTUAL in asm/page.h

*/

#if defined(WANT_PAGE_VIRTUAL)

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

#endif /* WANT_PAGE_VIRTUAL */

#ifdef CONFIG_KMEMCHECK

/*

* kmemcheck wants to track the status of each byte in a page; this

* is a pointer to such a status block. NULL if not tracked.

*/

void *shadow;

#endif

#ifdef LAST_CPUPID_NOT_IN_PAGE_FLAGS

int _last_cpupid;

#endif

}

/*

* The struct page can be forced to be double word aligned so that atomic ops

* on double words work. The SLUB allocator can make use of such a feature.

*/

#ifdef CONFIG_HAVE_ALIGNED_STRUCT_PAGE

__aligned(2 * sizeof(unsigned long))

#endif

;

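Because the number of physical pages is very large, every member added to the page structure can greatly increase the memory consumed by all page instances. To save memory, the kernel keeps the page structure as small as possible and uses unions for members that are never in use at the same time. The downside is that the page structure is hard to read.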
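A quick calculation shows why the size of the page structure matters. The sketch below is plain arithmetic; the helper name page_array_bytes is hypothetical, and the 64-byte descriptor size used in the example is an assumption, since the real size depends on architecture and kernel configuration.

```c
#include <assert.h>

/*
 * Hypothetical helper: total bytes needed for the page descriptors
 * that cover ram_bytes of memory, given the page size and the size
 * of one descriptor.
 */
unsigned long long page_array_bytes(unsigned long long ram_bytes,
                                    unsigned long page_size,
                                    unsigned long descriptor_size)
{
    assert(page_size > 0);

    unsigned long long nr_pages = ram_bytes / page_size;
    return nr_pages * descriptor_size;
}
```

With 4 GiB of RAM and 4 KiB pages there are 1,048,576 pages, so an assumed 64-byte descriptor costs 64 MiB in total, and growing the descriptor by a single 8-byte member would cost another 8 MiB.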

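Memory Allocation Functions

Memory allocation functions fall into two groups: kmalloc/vmalloc allocate kernel memory, and malloc allocates user-space memory. Only the declarations are shown here.

// Allocates small regions of physically contiguous memory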

void *kmalloc (size_t size, gfp_t flags);

// Allocates larger regions; the virtual addresses are contiguous, but the physical addresses are not necessarily contiguous

void *vmalloc(unsigned long size);

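kmalloc guarantees that the allocated memory is physically contiguous, so its virtual addresses are contiguous as well, but the size it can allocate is limited. Older kernels capped a single kmalloc allocation at 128 KB minus 16 bytes of bookkeeping overhead; the actual ceiling depends on the kernel version and configuration. vmalloc guarantees contiguity only in the virtual address space. malloc makes no guarantee about physical contiguity.

Physical contiguity is required only for memory that will be accessed by DMA. Note that vmalloc is slower than kmalloc.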
