
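This article introduces the latest generation of MobileNets, MobileNetV4 (MNv4), which provides universally efficient architecture designs for mobile devices. At its core is the Universal Inverted Bottleneck (UIB) search block. UIB is a unified, flexible structure that merges the Inverted Bottleneck (IB), ConvNext, the Feed-Forward Network (FFN) and a novel Extra Depthwise (ExtraDW) variant. Alongside UIB, the paper presents Mobile MQA, an attention block tailored for mobile accelerators.

After explaining the main principles, this article walks step by step through adding and modifying the module code. The complete modified code is shared at the end so you can run it directly, and beginners should be able to follow along easily. The goal is to help you learn and experiment with the YOLO series for deep-learning object detection.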



1. Principles

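Paper: MobileNetV4 - Universal Models for the Mobile Ecosystem (arXiv:2404.10518)

Official code: MobileNetV4 code repository

MobileNetV4 (MNv4) is the latest generation of MobileNets, designed to run efficiently across a wide range of mobile devices. The key principles and components behind its architecture are as follows.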

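Universal Inverted Bottleneck (UIB)

- Inverted bottleneck blocks: MobileNetV4 builds on the success of the inverted bottleneck (IB) block, which was the core building block of earlier MobileNets.
- Flexibility and unification: UIB unifies several micro-architectures in one searchable block: the inverted bottleneck, ConvNext, the feed-forward network (FFN) and a new Extra Depthwise (ExtraDW) variant.
- Optional depthwise convolutions: UIB contains two optional depthwise convolutions. The first sits before the 1x1 expansion, and the second sits between the expansion and the 1x1 projection. Enabling or disabling each one selects the variant, which trades off spatial mixing, channel mixing and compute.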
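The four UIB instantiations differ only in which of the two optional depthwise convolutions are present. The small pure-Python sketch below spells out that mapping. It follows the convention used by the MobileNetV4.py code in section 2.1, where a kernel size of 0 means "disabled". The helpers `uib_variant` and `uib_layers` are hypothetical names for illustration and are not part of the official code.

```python
def uib_variant(start_dw_kernel_size: int, middle_dw_kernel_size: int) -> str:
    """Name the UIB variant selected by the two optional depthwise kernels (0 = disabled)."""
    has_start = start_dw_kernel_size > 0    # depthwise conv before the 1x1 expansion
    has_middle = middle_dw_kernel_size > 0  # depthwise conv between expansion and projection
    if has_start and has_middle:
        return "ExtraDW"
    if has_middle:
        return "IB"
    if has_start:
        return "ConvNext"
    return "FFN"


def uib_layers(start_dw_kernel_size: int, middle_dw_kernel_size: int) -> list:
    """List the layers a UIB instance contains, in execution order."""
    layers = []
    if start_dw_kernel_size:
        layers.append(f"dw{start_dw_kernel_size}x{start_dw_kernel_size}")
    layers.append("pw1x1 (expand)")
    if middle_dw_kernel_size:
        layers.append(f"dw{middle_dw_kernel_size}x{middle_dw_kernel_size}")
    layers.append("pw1x1 (project)")
    return layers


for cfg in [(5, 5), (0, 3), (3, 0), (0, 0)]:
    print(cfg, uib_variant(*cfg), uib_layers(*cfg))
```

The same (start, middle) pairs appear in the `block_specs` lists of the model code, where comments such as `# ExtraDW` and `# IB` label them.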

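Mobile MQA (Mobile Multi-Query Attention)

- Efficiency: this attention block is optimized specifically for mobile accelerators. The paper reports a 39% inference speedup over conventional multi-head self-attention (MHSA).
- Performance on mobile devices: the Mobile MQA block is tailored to make full use of mobile hardware, which gives faster processing and lower latency.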
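Here is a rough illustration of why multi-query attention (MQA) is cheaper than MHSA on memory-bound accelerators. MQA keeps several query heads but shares a single key head and a single value head across all of them.

The pure-Python sketch below counts projection weights and K/V activation elements for a hypothetical layer with dim 256, 8 heads and a 14x14 feature map. It is a generic MQA calculation, not the paper's full Mobile MQA block. It does not reproduce the paper's 39% measurement.

```python
def projection_params(dim: int, num_heads: int, multi_query: bool) -> int:
    """Weights in the Q, K, V and output projections (biases ignored)."""
    head_dim = dim // num_heads
    kv_width = head_dim if multi_query else dim  # MQA: one K head and one V head shared by all query heads
    q_proj = dim * dim                           # queries keep all heads in both cases
    k_proj = dim * kv_width
    v_proj = dim * kv_width
    out_proj = dim * dim
    return q_proj + k_proj + v_proj + out_proj


def kv_elements(tokens: int, dim: int, num_heads: int, multi_query: bool) -> int:
    """Number of K and V activation elements that must be written to and read from memory."""
    kv_width = dim // num_heads if multi_query else dim
    return 2 * tokens * kv_width


dim, heads, tokens = 256, 8, 14 * 14  # hypothetical layer size
print("projection weights MHSA vs MQA:", projection_params(dim, heads, False), projection_params(dim, heads, True))
print("K/V elements       MHSA vs MQA:", kv_elements(tokens, dim, heads, False), kv_elements(tokens, dim, heads, True))
```

The K/V tensors shrink by a factor equal to the number of heads. This is the kind of memory-traffic saving that matters most on accelerators whose throughput is limited by bandwidth rather than arithmetic.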

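Neural architecture search (NAS)

- Refined search recipe: MobileNetV4 introduces an improved two-stage NAS procedure, consisting of a coarse-grained search followed by a fine-grained search. This makes the search more efficient and effective and yields high-performing models.
- Hardware awareness: the NAS accounts for the constraints and capabilities of different mobile hardware platforms. As a result, the models are optimized for a wide range of devices, from CPUs to dedicated accelerators such as the Apple Neural Engine and the Google Pixel EdgeTPU.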

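Distillation

- Accuracy improvement: MobileNetV4 uses a novel distillation technique that trains on a mix of datasets with different augmentations, plus class-balanced data. This improves the model's generalization and accuracy.
- Results: with this distillation, the MNv4-Hybrid-Large model reaches 87% accuracy on ImageNet-1K with a runtime of only 3.8 ms on the Pixel 8 EdgeTPU.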

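Pareto optimality

- Balanced performance: MobileNetV4 models are designed to be (mostly) Pareto-optimal across hardware platforms. This means no compared model is both faster and more accurate, so they offer the best trade-off between computational efficiency and accuracy.
- Hardware-independent efficiency: the models are evaluated on many types of hardware, from CPUs and GPUs to DSPs and dedicated accelerators, and perform well on all of them, which makes them broadly applicable.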

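Design considerations

- Operational intensity: MobileNetV4's design takes the operational intensity of different hardware into account, balancing compute load against memory bandwidth to maximize performance. In the roofline model, the ridge point (RP) is the operational intensity (FLOPs per byte of memory traffic) at which a device switches from being memory-bound to compute-bound.
- Layer placement: the initial layers of MobileNetV4 are designed to be compute-heavy to increase model capacity and accuracy. The final layers are designed to preserve accuracy even on high-RP (ridge point) hardware.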
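The roofline model makes operational intensity and ridge point concrete. The sketch below applies it to two layer types that MobileNetV4 uses heavily: a 1x1 pointwise conv and a 3x3 depthwise conv, both at 28x28 with 128 channels and int8 tensors. The two "devices" are made-up numbers for illustration, not measurements of any real CPU or accelerator, and `conv_cost` and `classify` are hypothetical helpers.

```python
def conv_cost(c_in, c_out, k, h, w, groups=1, bytes_per_elem=1):
    """FLOPs and bytes moved for a stride-1 'same' conv (int8 tensors by default)."""
    flops = 2 * (c_in // groups) * k * k * c_out * h * w  # one multiply-accumulate = 2 FLOPs
    weights = (c_in // groups) * k * k * c_out
    activations = c_in * h * w + c_out * h * w            # read the input, write the output
    return flops, (weights + activations) * bytes_per_elem


def classify(flops, bytes_moved, ridge_point):
    """Operational intensity (FLOPs/byte) and which roof limits the layer."""
    oi = flops / bytes_moved
    return oi, ("compute-bound" if oi >= ridge_point else "memory-bound")


# Hypothetical hardware: ridge point = peak FLOP/s divided by peak memory bytes/s
cpu_rp = 100e9 / 50e9          # 2 FLOPs/byte (low-RP device)
accelerator_rp = 4e12 / 20e9   # 200 FLOPs/byte (high-RP device)

pointwise = conv_cost(128, 128, 1, 28, 28)
depthwise = conv_cost(128, 128, 3, 28, 28, groups=128)
for name, (flops, nbytes) in [("1x1 conv", pointwise), ("3x3 depthwise", depthwise)]:
    for hw, rp in [("CPU", cpu_rp), ("accelerator", accelerator_rp)]:
        oi, kind = classify(flops, nbytes, rp)
        print(f"{name:14s} on {hw:11s}: OI = {oi:6.1f} FLOPs/byte -> {kind}")
```

Under these assumptions, both layers are compute-bound on the low-RP device and memory-bound on the high-RP one. This is why the same layer can be cheap on one chip and a bottleneck on another.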

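In short, MobileNetV4 combines these techniques into a family of models that are both efficient and accurate across a wide range of mobile devices. Its design emphasizes flexibility, hardware-aware optimization, and a balance between computational efficiency and model performance.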

2. Adding MobileNetV4 to YOLOv8

2.1 MobileNetV4 code implementation

Key step 1: create MobileNetV4.py under /ultralytics/ultralytics/nn/modules/ and paste the following code

"""
Creates a MobileNetV4 Model as defined in:
Danfeng Qin, Chas Leichner, Manolis Delakis, Marco Fornoni, Shixin Luo, Fan Yang, Weijun Wang, Colby Banbury, Chengxi Ye, Berkin Akin, Vaibhav Aggarwal, Tenghui Zhu, Daniele Moro, Andrew Howard. (2024).
MobileNetV4 - Universal Models for the Mobile Ecosystem
arXiv preprint arXiv:2404.10518.
"""

import math

import torch
import torch.nn as nn

__all__ = ['mobilenetv4_conv_small', 'mobilenetv4_conv_medium', 'mobilenetv4_conv_large']


def make_divisible(value, divisor, min_value=None, round_down_protect=True):
    """Round value to the nearest multiple of divisor (used for the expanded channel count)."""
    if min_value is None:
        min_value = divisor
    new_value = max(min_value, int(value + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if round_down_protect and new_value < 0.9 * value:
        new_value += divisor
    return new_value


class ConvBN(nn.Module):
    """Conv2d -> BatchNorm2d -> ReLU; padding (k - 1) // 2 keeps the spatial size at stride 1."""

    def __init__(self, in_channels, out_channels, kernel_size, stride=1):
        super(ConvBN, self).__init__()
        self.block = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size, stride, (kernel_size - 1) // 2, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.block(x)


class UniversalInvertedBottleneck(nn.Module):
    """UIB block. A kernel size of 0 disables the corresponding depthwise conv:
    (start, middle) = (k, k) ExtraDW, (0, k) IB, (k, 0) ConvNext, (0, 0) FFN."""

    def __init__(self,
                 in_channels,
                 out_channels,
                 expand_ratio,
                 start_dw_kernel_size,
                 middle_dw_kernel_size,
                 stride,
                 middle_dw_downsample: bool = True,
                 use_layer_scale: bool = False,
                 layer_scale_init_value: float = 1e-5):
        super(UniversalInvertedBottleneck, self).__init__()
        self.start_dw_kernel_size = start_dw_kernel_size
        self.middle_dw_kernel_size = middle_dw_kernel_size

        # Optional depthwise conv before the expansion (strided only if middle_dw_downsample is False)
        if start_dw_kernel_size:
            self.start_dw_conv = nn.Conv2d(in_channels, in_channels, start_dw_kernel_size,
                                           stride if not middle_dw_downsample else 1,
                                           (start_dw_kernel_size - 1) // 2,
                                           groups=in_channels, bias=False)
            self.start_dw_norm = nn.BatchNorm2d(in_channels)

        # 1x1 expansion
        expand_channels = make_divisible(in_channels * expand_ratio, 8)
        self.expand_conv = nn.Conv2d(in_channels, expand_channels, 1, 1, bias=False)
        self.expand_norm = nn.BatchNorm2d(expand_channels)
        self.expand_act = nn.ReLU(inplace=True)

        # Optional depthwise conv after the expansion (strided by default)
        if middle_dw_kernel_size:
            self.middle_dw_conv = nn.Conv2d(expand_channels, expand_channels, middle_dw_kernel_size,
                                            stride if middle_dw_downsample else 1,
                                            (middle_dw_kernel_size - 1) // 2,
                                            groups=expand_channels, bias=False)
            self.middle_dw_norm = nn.BatchNorm2d(expand_channels)
            self.middle_dw_act = nn.ReLU(inplace=True)

        # 1x1 linear projection (no activation)
        self.proj_conv = nn.Conv2d(expand_channels, out_channels, 1, 1, bias=False)
        self.proj_norm = nn.BatchNorm2d(out_channels)

        if use_layer_scale:
            # Shape (C, 1, 1) so the per-channel scale broadcasts over (N, C, H, W)
            self.gamma = nn.Parameter(layer_scale_init_value * torch.ones(out_channels, 1, 1), requires_grad=True)
        self.use_layer_scale = use_layer_scale
        # Residual connection only when input and output shapes match
        self.identity = stride == 1 and in_channels == out_channels

    def forward(self, x):
        shortcut = x
        if self.start_dw_kernel_size:
            x = self.start_dw_conv(x)
            x = self.start_dw_norm(x)
        x = self.expand_conv(x)
        x = self.expand_norm(x)
        x = self.expand_act(x)
        if self.middle_dw_kernel_size:
            x = self.middle_dw_conv(x)
            x = self.middle_dw_norm(x)
            x = self.middle_dw_act(x)
        x = self.proj_conv(x)
        x = self.proj_norm(x)
        if self.use_layer_scale:
            x = self.gamma * x
        return x + shortcut if self.identity else x


class MobileNetV4(nn.Module):
    def __init__(self, block_specs, out_indices, num_classes=1000):
        super(MobileNetV4, self).__init__()
        c = 3
        layers, layer_channels = [], []
        for block_type, *block_cfg in block_specs:
            if block_type == 'conv_bn':
                k, s, f = block_cfg
                layers.append(ConvBN(c, f, k, s))
            elif block_type == 'uib':
                start_k, middle_k, s, f, e = block_cfg
                layers.append(UniversalInvertedBottleneck(c, f, e, start_k, middle_k, s))
            else:
                raise NotImplementedError(f'Unknown block type: {block_type}')
            c = f
            layer_channels.append(f)
        self.features = nn.Sequential(*layers)
        # Layers whose outputs are returned as the stride 4/8/16/32 (P2-P5) feature maps
        self.indexs = list(out_indices)
        # Channel counts of those feature maps; the modified parse_model reads this attribute
        self.channels = [layer_channels[i] for i in self.indexs]
        self._initialize_weights()

    def forward(self, x):
        out = []
        for i, layer in enumerate(self.features):
            x = layer(x)
            if i in self.indexs:
                out.append(x)
        return out

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
                if m.bias is not None:
                    m.bias.data.zero_()
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()


def mobilenetv4_conv_small(**kwargs):
    """
    Constructs a MobileNetV4-Conv-Small model
    """
    block_specs = [
        # conv_bn, kernel_size, stride, out_channels
        # uib, start_dw_kernel_size, middle_dw_kernel_size, stride, out_channels, expand_ratio
        # 112px
        ('conv_bn', 3, 2, 32),
        # 56px
        ('conv_bn', 3, 2, 32),
        ('conv_bn', 1, 1, 32),
        # 28px
        ('conv_bn', 3, 2, 96),
        ('conv_bn', 1, 1, 64),
        # 14px
        ('uib', 5, 5, 2, 96, 3.0),  # ExtraDW
        ('uib', 0, 3, 1, 96, 2.0),  # IB
        ('uib', 0, 3, 1, 96, 2.0),  # IB
        ('uib', 0, 3, 1, 96, 2.0),  # IB
        ('uib', 0, 3, 1, 96, 2.0),  # IB
        ('uib', 3, 0, 1, 96, 4.0),  # ConvNext
        # 7px
        ('uib', 3, 3, 2, 128, 6.0),  # ExtraDW
        ('uib', 5, 5, 1, 128, 4.0),  # ExtraDW
        ('uib', 0, 5, 1, 128, 4.0),  # IB
        ('uib', 0, 5, 1, 128, 3.0),  # IB
        ('uib', 0, 3, 1, 128, 4.0),  # IB
        ('uib', 0, 3, 1, 128, 4.0),  # IB
        ('conv_bn', 1, 1, 960),  # Conv
    ]
    # Strides 4/8/16/32 -> channels [32, 64, 96, 960]
    return MobileNetV4(block_specs, out_indices=[2, 4, 10, 17], **kwargs)


def mobilenetv4_conv_medium(**kwargs):
    """
    Constructs a MobileNetV4-Conv-Medium model
    """
    block_specs = [
        ('conv_bn', 3, 2, 32),
        ('conv_bn', 3, 2, 128),
        ('conv_bn', 1, 1, 48),
        # 3rd stage
        ('uib', 3, 5, 2, 80, 4.0),
        ('uib', 3, 3, 1, 80, 2.0),
        # 4th stage
        ('uib', 3, 5, 2, 160, 6.0),
        ('uib', 3, 3, 1, 160, 4.0),
        ('uib', 3, 3, 1, 160, 4.0),
        ('uib', 3, 5, 1, 160, 4.0),
        ('uib', 3, 3, 1, 160, 4.0),
        ('uib', 3, 0, 1, 160, 4.0),
        ('uib', 0, 0, 1, 160, 2.0),
        ('uib', 3, 0, 1, 160, 4.0),
        # 5th stage
        ('uib', 5, 5, 2, 256, 6.0),
        ('uib', 5, 5, 1, 256, 4.0),
        ('uib', 3, 5, 1, 256, 4.0),
        ('uib', 3, 5, 1, 256, 4.0),
        ('uib', 0, 0, 1, 256, 4.0),
        ('uib', 3, 0, 1, 256, 4.0),
        ('uib', 3, 5, 1, 256, 2.0),
        ('uib', 5, 5, 1, 256, 4.0),
        ('uib', 0, 0, 1, 256, 4.0),
        ('uib', 0, 0, 1, 256, 4.0),
        ('uib', 5, 0, 1, 256, 2.0),
    ]
    # Strides 4/8/16/32 -> channels [48, 80, 160, 256]
    return MobileNetV4(block_specs, out_indices=[2, 4, 12, 23], **kwargs)


def mobilenetv4_conv_large(**kwargs):
    """
    Constructs a MobileNetV4-Conv-Large model
    """
    block_specs = [
        ('conv_bn', 3, 2, 24),
        ('conv_bn', 3, 2, 96),
        ('conv_bn', 1, 1, 64),
        ('uib', 3, 5, 2, 128, 4.0),
        ('uib', 3, 3, 1, 128, 4.0),
        ('uib', 3, 5, 2, 256, 4.0),
        ('uib', 3, 3, 1, 256, 4.0),
        ('uib', 3, 3, 1, 256, 4.0),
        ('uib', 3, 3, 1, 256, 4.0),
        ('uib', 3, 5, 1, 256, 4.0),
        ('uib', 5, 3, 1, 256, 4.0),
        ('uib', 5, 3, 1, 256, 4.0),
        ('uib', 5, 3, 1, 256, 4.0),
        ('uib', 5, 3, 1, 256, 4.0),
        ('uib', 5, 3, 1, 256, 4.0),
        ('uib', 3, 0, 1, 256, 4.0),
        ('uib', 5, 5, 2, 512, 4.0),
        ('uib', 5, 5, 1, 512, 4.0),
        ('uib', 5, 5, 1, 512, 4.0),
        ('uib', 5, 5, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
        ('uib', 5, 3, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
        ('uib', 5, 3, 1, 512, 4.0),
        ('uib', 5, 5, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
        ('uib', 5, 0, 1, 512, 4.0),
    ]
    # Strides 4/8/16/32 -> channels [64, 128, 256, 512]
    return MobileNetV4(block_specs, out_indices=[2, 4, 15, 28], **kwargs)
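Choosing which layer indices to return as feature maps means knowing the cumulative stride and channel count after every block. The pure-Python sketch below walks a `block_specs` list and tracks those values. It reads each `conv_bn` tuple as (kernel_size, stride, out_channels) and each `uib` tuple as (start_k, middle_k, stride, out_channels, expand_ratio). It uses the small variant's specs as input. `feature_info` is a hypothetical helper, not part of the module.

```python
def feature_info(block_specs, out_indices):
    """Return (layer_index, cumulative_stride, out_channels) for each requested layer."""
    stride, per_layer = 1, []
    for block_type, *cfg in block_specs:
        if block_type == 'conv_bn':
            _kernel, s, out_ch = cfg
        elif block_type == 'uib':
            _start_k, _middle_k, s, out_ch, _expand = cfg
        else:
            raise ValueError(f'unknown block type: {block_type}')
        stride *= s
        per_layer.append((stride, out_ch))
    return [(i, *per_layer[i]) for i in out_indices]


SMALL_SPECS = [
    ('conv_bn', 3, 2, 32), ('conv_bn', 3, 2, 32), ('conv_bn', 1, 1, 32),
    ('conv_bn', 3, 2, 96), ('conv_bn', 1, 1, 64),
    ('uib', 5, 5, 2, 96, 3.0), ('uib', 0, 3, 1, 96, 2.0), ('uib', 0, 3, 1, 96, 2.0),
    ('uib', 0, 3, 1, 96, 2.0), ('uib', 0, 3, 1, 96, 2.0), ('uib', 3, 0, 1, 96, 4.0),
    ('uib', 3, 3, 2, 128, 6.0), ('uib', 5, 5, 1, 128, 4.0), ('uib', 0, 5, 1, 128, 4.0),
    ('uib', 0, 5, 1, 128, 3.0), ('uib', 0, 3, 1, 128, 4.0), ('uib', 0, 3, 1, 128, 4.0),
    ('conv_bn', 1, 1, 960),
]

for idx, stride, ch in feature_info(SMALL_SPECS, [2, 4, 10, 17]):
    print(f'layer {idx:2d}: stride {stride:2d}, {ch} channels')
```

A tuple whose fields are in the wrong order shows up immediately here. For example, `('conv_bn', 96, 3, 2)` would be read as a stride-3 layer with 2 output channels.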

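MobileNetV4 processes an image in the following main stages:

1. Image preprocessing

Before an image is fed to the model, it typically goes through a few preprocessing steps:

- Resizing: the image is resized to the required input size (e.g. 224x224).
- Normalization: pixel values are scaled into a fixed range (e.g. 0 to 1 or -1 to 1).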
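Here is a minimal sketch of these two steps on a tiny single-channel image, in pure Python. `resize_nearest` and `normalize` are hypothetical helpers. Real pipelines operate on three-channel arrays and usually use bilinear interpolation. YOLOv8 itself letterboxes images to `imgsz` (640 by default) and divides pixel values by 255.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a single-channel image given as a list of rows."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)] for r in range(out_h)]


def normalize(img, mode="0_1"):
    """Map uint8 pixel values (0-255) to [0, 1] or [-1, 1]."""
    if mode == "0_1":
        return [[p / 255.0 for p in row] for row in img]
    if mode == "-1_1":
        return [[p / 127.5 - 1.0 for p in row] for row in img]
    raise ValueError(f"unknown mode: {mode}")


img = [[0, 64, 128, 255],
       [0, 64, 128, 255],
       [255, 128, 64, 0],
       [255, 128, 64, 0]]
small = resize_nearest(img, 2, 2)
print(small)
print(normalize(small, "0_1"))
print(normalize(small, "-1_1"))
```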

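2. Initial convolution layers

The image first passes through standard convolution layers (3x3 with stride 2 in the code above) that capture low-level features such as edges and textures. These early layers are relatively compute-heavy so that more information is captured early on.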
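The spatial size after each of these convolutions follows the standard formula out = (size + 2 * padding - kernel_size) // stride + 1. `ConvBN` uses padding (kernel_size - 1) // 2.

The sketch below applies the formula to the first three `conv_bn` layers of `mobilenetv4_conv_large` for a 224 and a 640 input. `conv_out_size` and `stem_sizes` are hypothetical helper names.

```python
def conv_out_size(size: int, kernel_size: int, stride: int) -> int:
    """Output side length of a conv with the padding used by ConvBN: (k - 1) // 2."""
    padding = (kernel_size - 1) // 2
    return (size + 2 * padding - kernel_size) // stride + 1


def stem_sizes(size: int, layers) -> list:
    """Feature-map side length after each (kernel_size, stride) layer."""
    sizes = []
    for k, s in layers:
        size = conv_out_size(size, k, s)
        sizes.append(size)
    return sizes


# (kernel_size, stride) of the first three conv_bn layers of mobilenetv4_conv_large
stem = [(3, 2), (3, 2), (1, 1)]
print(stem_sizes(224, stem))  # ImageNet-style input
print(stem_sizes(640, stem))  # default YOLOv8 training size
```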

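3. Inverted bottleneck block

The inverted bottleneck (IB) block is one of MobileNetV4's core components. Each IB block consists of:

- Expansion: a 1x1 convolution first increases the number of channels.
- Depthwise convolution: a kxk depthwise convolution (one filter per channel) then convolves over the spatial dimensions.
- Projection: a final 1x1 convolution projects the channels back down.

Together, the depthwise convolution and the following 1x1 projection form a depthwise separable convolution.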
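The weight counts show why this design is cheap. The sketch below breaks an IB block down layer by layer and compares a regular 3x3 convolution with a depthwise separable one at the same width. BatchNorm and biases are ignored. The expanded width is computed without the `make_divisible` rounding used in the real code, which does not change anything for these round numbers. The function names are hypothetical.

```python
def ib_params(c_in, c_out, expand_ratio, k):
    """Weight counts (expand, depthwise, project) of an inverted bottleneck."""
    c_mid = int(c_in * expand_ratio)  # simplified: the real code rounds this with make_divisible(..., 8)
    expand = c_in * c_mid             # 1x1 expansion
    depthwise = c_mid * k * k         # k x k depthwise: one filter per channel
    project = c_mid * c_out           # 1x1 projection
    return expand, depthwise, project


def regular_conv_params(c_in, c_out, k):
    return c_in * c_out * k * k


def separable_conv_params(c_in, c_out, k):
    return c_in * k * k + c_in * c_out  # depthwise + pointwise


print(ib_params(96, 96, 2.0, 3))
print(regular_conv_params(192, 192, 3), separable_conv_params(192, 192, 3))
```

Almost all of the weights sit in the two 1x1 convolutions. The depthwise layer adds spatial mixing for a small fraction of the cost of a regular convolution.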

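4. Universal Inverted Bottleneck (UIB)

MobileNetV4 introduces UIB, whose two optional depthwise convolutions can be switched on or off to suit different compute budgets:

- Standard inverted bottleneck (IB): only the depthwise convolution after the expansion is used, as in earlier MobileNets.
- ConvNext variant: only the depthwise convolution before the expansion is used, borrowing the layout of modern ConvNeXt-style blocks.
- Feed-forward network (FFN): no depthwise convolution is used, only the two 1x1 convolutions.
- Extra Depthwise (ExtraDW): both depthwise convolutions are used, which increases network depth and receptive field at low cost.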

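5. Mobile MQA attention block

This module is an attention mechanism optimized for mobile devices:

- Attention computation: it re-weights the feature map to strengthen important features and suppress less relevant ones.
- Fast inference: it runs quickly on hardware accelerators, improving overall processing efficiency.

Note that Mobile MQA is used only in the MNv4 hybrid models. The three mobilenetv4_conv_* variants implemented in this article are purely convolutional and do not contain it.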

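6. Global average pooling

After the last convolutional layer, the model applies global average pooling. This averages each channel over its spatial dimensions and turns the feature map into a fixed-length vector with one value per channel.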

7. Fully connected and classification layers

Finally, fully connected layers and a softmax classifier convert the feature vector into the final classification result.
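Steps 6 and 7 together form the classification head. The pure-Python sketch below runs them on a toy 2-channel, 2x2 feature map with made-up weights. The helper names are hypothetical.

In the YOLOv8 integration, this head is not built at all. The `MobileNetV4.forward` method in section 2.1 returns the intermediate feature maps instead, and the YOLO neck and head consume them.

```python
import math


def global_avg_pool(fmap):
    """fmap: C x H x W nested lists -> length-C vector of per-channel means."""
    return [sum(sum(row) for row in channel) / (len(channel) * len(channel[0])) for channel in fmap]


def linear(x, weight, bias):
    """Fully connected layer; weight has shape num_classes x C."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b for w_row, b in zip(weight, bias)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


fmap = [[[1, 3], [5, 7]],   # channel 0, mean 4.0
        [[0, 0], [0, 4]]]   # channel 1, mean 1.0
features = global_avg_pool(fmap)
logits = linear(features, weight=[[1, 0], [0, 1], [1, 1]], bias=[0.0, 0.0, 0.0])
probs = softmax(logits)
print(features, logits, probs.index(max(probs)))
```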

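Through these stages, MobileNetV4 processes and classifies input images efficiently. This makes it especially suitable for resource-constrained mobile devices while still maintaining high accuracy.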

2.2 Modify the __init__.py file

Key step 2: modify the __init__.py file in the modules folder. First import the functions, then declare them in __all__.
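Here is a minimal sketch of the two edits. It assumes the file from step 1 is named MobileNetV4.py and sits in ultralytics/nn/modules/. Keep all existing entries; only the new names are shown.

The modified parse_model looks module names up with globals(), so the three functions must also be importable inside ultralytics/nn/tasks.py. Since tasks.py imports module names explicitly from ultralytics.nn.modules, you will most likely need to add them to that import list as well.

```python
# ultralytics/nn/modules/__init__.py
from .MobileNetV4 import mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large

__all__ = (
    # ... existing entries stay unchanged ...
    "mobilenetv4_conv_small",
    "mobilenetv4_conv_medium",
    "mobilenetv4_conv_large",
)

# ultralytics/nn/tasks.py (next to the other imports from ultralytics.nn.modules)
from ultralytics.nn.modules import mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large
```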

2.3 Add the YAML file

Key step 3: create the file yolov8_MobileNetV4.yaml under /ultralytics/ultralytics/cfg/models/v8 and paste the content below

- OD (object detection)

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, mobilenetv4_conv_large, []] # 0-4 (indices 1-4 = P2/4, P3/8, P4/16, P5/32); can be replaced with any of mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6
  - [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4
  - [-1, 3, C2f, [512]] # 8

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9
  - [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3
  - [-1, 3, C2f, [256]] # 11 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 12
  - [[-1, 8], 1, Concat, [1]] # 13 cat head P4
  - [-1, 3, C2f, [512]] # 14 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 15
  - [[-1, 5], 1, Concat, [1]] # 16 cat head P5
  - [-1, 3, C2f, [1024]] # 17 (P5/32-large)

  - [[11, 14, 17], 1, Detect, [nc]] # Detect(P3, P4, P5)

- Seg (instance segmentation)

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8-seg instance segmentation model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/segment

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, mobilenetv4_conv_large, []] # 0-4 (indices 1-4 = P2/4, P3/8, P4/16, P5/32); can be replaced with any of mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6
  - [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4
  - [-1, 3, C2f, [512]] # 8

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9
  - [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3
  - [-1, 3, C2f, [256]] # 11 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 12
  - [[-1, 8], 1, Concat, [1]] # 13 cat head P4
  - [-1, 3, C2f, [512]] # 14 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 15
  - [[-1, 5], 1, Concat, [1]] # 16 cat head P5
  - [-1, 3, C2f, [1024]] # 17 (P5/32-large)

  - [[11, 14, 17], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

- OBB (oriented bounding box detection)

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 Oriented Bounding Boxes (OBB) model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/obb

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, mobilenetv4_conv_large, []] # 0-4 (indices 1-4 = P2/4, P3/8, P4/16, P5/32); can be replaced with any of mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6
  - [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4
  - [-1, 3, C2f, [512]] # 8

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9
  - [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3
  - [-1, 3, C2f, [256]] # 11 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 12
  - [[-1, 8], 1, Concat, [1]] # 13 cat head P4
  - [-1, 3, C2f, [512]] # 14 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 15
  - [[-1, 5], 1, Concat, [1]] # 16 cat head P5
  - [-1, 3, C2f, [1024]] # 17 (P5/32-large)

  - [[11, 14, 17], 1, OBB, [nc, 1]] # OBB(P3, P4, P5)

Tip: this article only adds a module on top of YOLOv8. To build the n/s/m/l/x variants, you only need to set the corresponding depth_multiple and width_multiple (values below).

Note that parse_model (see step 4) only reads top-level depth_multiple and width_multiple when the YAML has no scales block, and it takes max_channels only from scales. With the scales block shown above, parse_model falls back to the first scale (n) and logs a warning when no scale is specified. If this is unclear, see the article "YOLOv8 YAML file explained".

# YOLOv8n
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.25 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8s
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8m
depth_multiple: 0.67 # model depth multiple
width_multiple: 0.75 # layer channel multiple
max_channels: 768 # max_channels

# YOLOv8l
depth_multiple: 1.0 # model depth multiple
width_multiple: 1.0 # layer channel multiple
max_channels: 512 # max_channels

# YOLOv8x
depth_multiple: 1.0 # model depth multiple
width_multiple: 1.25 # layer channel multiple
max_channels: 512 # max_channels

2.4 Register the module
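Before replacing parse_model, it may help to see the two pieces of bookkeeping the modified version does for a backbone that returns several feature maps:

- It left-pads the backbone's channel list with zeros to five entries. The backbone therefore fills indices 0-4 and SPPF becomes layer 5.
- It scales head channels as make_divisible(min(c2, max_channels) * width, 8).

The plain-Python sketch below reproduces that arithmetic for mobilenetv4_conv_large at scale n. The helper names are hypothetical. make_divisible here copies the round-up-to-a-multiple rule of Ultralytics' own helper, not the MobileNetV4.py version.

```python
import math


def make_divisible(x, divisor):
    # Round up to the nearest multiple of divisor (same rule as the Ultralytics helper)
    return math.ceil(x / divisor) * divisor


def scaled_channels(c2, width, max_channels):
    # Mirrors parse_model: cap at max_channels, multiply by width, round to a multiple of 8
    return make_divisible(min(c2, max_channels) * width, 8)


def padded_backbone_channels(channels, total=5):
    # Mirrors parse_model: prepend zeros so the backbone occupies indices 0..total-1
    ch = list(channels)
    for _ in range(total - len(ch)):
        ch.insert(0, 0)
    return ch


width, max_channels = 0.25, 1024                      # scale 'n' from the YAML
ch = padded_backbone_channels([64, 128, 256, 512])    # mobilenetv4_conv_large outputs
sppf_out = scaled_channels(1024, width, max_channels)  # SPPF [1024, 5]
concat_p4 = sppf_out + ch[3]                           # Upsample keeps channels; Concat [-1, 3]
c2f_out = scaled_channels(512, width, max_channels)    # C2f [512]
print(ch, sppf_out, concat_p4, c2f_out)
```

These values (SPPF 512 to 256, then C2f 512 to 128) match the SPPF and first C2f rows of the training log in section 2.6.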

Key step 4: in tasks.py (ultralytics/nn/tasks.py), replace the parse_model function with the following

def parse_model(d, ch, verbose=True):  # model_dict, input_channels(3)
    """Parse a YOLO model.yaml dictionary into a PyTorch model."""
    import ast

    # Args
    max_channels = float("inf")
    nc, act, scales = (d.get(x) for x in ("nc", "activation", "scales"))
    depth, width, kpt_shape = (d.get(x, 1.0) for x in ("depth_multiple", "width_multiple", "kpt_shape"))
    if scales:
        scale = d.get("scale")
        if not scale:
            scale = tuple(scales.keys())[0]
            LOGGER.warning(f"WARNING ⚠️ no model scale passed. Assuming scale='{scale}'.")
        depth, width, max_channels = scales[scale]

    if act:
        Conv.default_act = eval(act)  # redefine default activation, i.e. Conv.default_act = nn.SiLU()
        if verbose:
            LOGGER.info(f"{colorstr('activation:')} {act}")  # print

    if verbose:
        LOGGER.info(f"\n{'':>3}{'from':>20}{'n':>3}{'params':>10}  {'module':<45}{'arguments':<30}")
    ch = [ch]
    is_backbone = False
    layers, save, c2 = [], [], ch[-1]  # layers, savelist, ch out
    for i, (f, n, m, args) in enumerate(d["backbone"] + d["head"]):  # from, number, module, args
        m = getattr(torch.nn, m[3:]) if "nn." in m else globals()[m]  # get module
        for j, a in enumerate(args):
            if isinstance(a, str):
                with contextlib.suppress(ValueError):
                    args[j] = locals()[a] if a in locals() else ast.literal_eval(a)

        n = n_ = max(round(n * depth), 1) if n > 1 else n  # depth gain
        if m in (
            Classify, Conv, ConvTranspose, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, Focus,
            BottleneckCSP, C1, C2, C2f, C2fAttn, C3, C3TR, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, RepC3,
        ):
            c1, c2 = ch[f], args[0]
            if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
                c2 = make_divisible(min(c2, max_channels) * width, 8)
            if m is C2fAttn:
                args[1] = make_divisible(min(args[1], max_channels // 2) * width, 8)  # embed channels
                args[2] = int(
                    max(round(min(args[2], max_channels // 2 // 32)) * width, 1) if args[2] > 1 else args[2]
                )  # num heads

            args = [c1, c2, *args[1:]]
            if m in (BottleneckCSP, C1, C2, C2f, C2fAttn, C3, C3TR, C3Ghost, C3x, RepC3):
                args.insert(2, n)  # number of repeats
                n = 1
        elif m is AIFI:
            args = [ch[f], *args]
        elif m in (HGStem, HGBlock):
            c1, cm, c2 = ch[f], args[0], args[1]
            args = [c1, cm, c2, *args[2:]]
            if m is HGBlock:
                args.insert(4, n)  # number of repeats
                n = 1
        elif m in (mobilenetv4_conv_small, mobilenetv4_conv_medium, mobilenetv4_conv_large):
            m = m(*args)  # build the backbone instance here
            c2 = m.channels  # list of output channels, one per returned feature map
        elif m is ResNetLayer:
            c2 = args[1] if args[3] else args[1] * 4
        elif m is nn.BatchNorm2d:
            args = [ch[f]]
        elif m is Concat:
            c2 = sum(ch[x] for x in f)
        elif m in (Detect, WorldDetect, Segment, Pose, OBB, ImagePoolingAttn):
            args.append([ch[x] for x in f])
            if m is Segment:
                args[2] = make_divisible(min(args[2], max_channels) * width, 8)
        elif m is RTDETRDecoder:  # special case, channels arg must be passed in index 1
            args.insert(1, [ch[x] for x in f])
        else:
            c2 = ch[f]

        if isinstance(c2, list):  # multi-output backbone
            is_backbone = True
            m_ = m
            m_.backbone = True
        else:
            m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args)  # module
        t = str(m)[8:-2].replace('__main__.', '')  # module type
        m.np = sum(x.numel() for x in m_.parameters())  # number params
        m_.i, m_.f, m_.type, m_.np = i + 4 if is_backbone else i, f, t, m.np  # attach index, 'from' index, type, number params
        if verbose:
            LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f}  {t:<45}{str(args):<30}')  # print
        save.extend(x % (i + 4 if is_backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist
        layers.append(m_)
        if i == 0:
            ch = []
        if isinstance(c2, list):
            ch.extend(c2)
            for _ in range(5 - len(ch)):
                ch.insert(0, 0)  # pad so the backbone occupies indices 0-4
        else:
            ch.append(c2)
    return nn.Sequential(*layers), sorted(save)

2.5 Replace the function

Key step 5: in tasks.py, replace the _predict_once method of the BaseModel class with the following

    def _predict_once(self, x, profile=False, visualize=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model:
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            if hasattr(m, "backbone"):
                x = m(x)  # list of feature maps from the backbone
                for _ in range(5 - len(x)):
                    x.insert(0, None)  # pad to 5 entries so indices match parse_model's channel list
                for i_idx, i in enumerate(x):
                    if i_idx in self.save:
                        y.append(i)
                    else:
                        y.append(None)
                x = x[-1]  # continue with the deepest (P5) feature map
            else:
                x = m(x)  # run
                y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        return x

2.6 Run the program

In train.py, set the model path to the path of yolov8_MobileNetV4.yaml.

An absolute path is recommended to make sure the file is found.

from ultralytics import YOLO

# Load a model

# model = YOLO('yolov8n.yaml') # build a new model from YAML

# model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

model = YOLO(r'/projects/ultralytics/ultralytics/cfg/models/v8/yolov8_MobilenetV4.yaml') # build from YAML and transfer weights

# Train the model

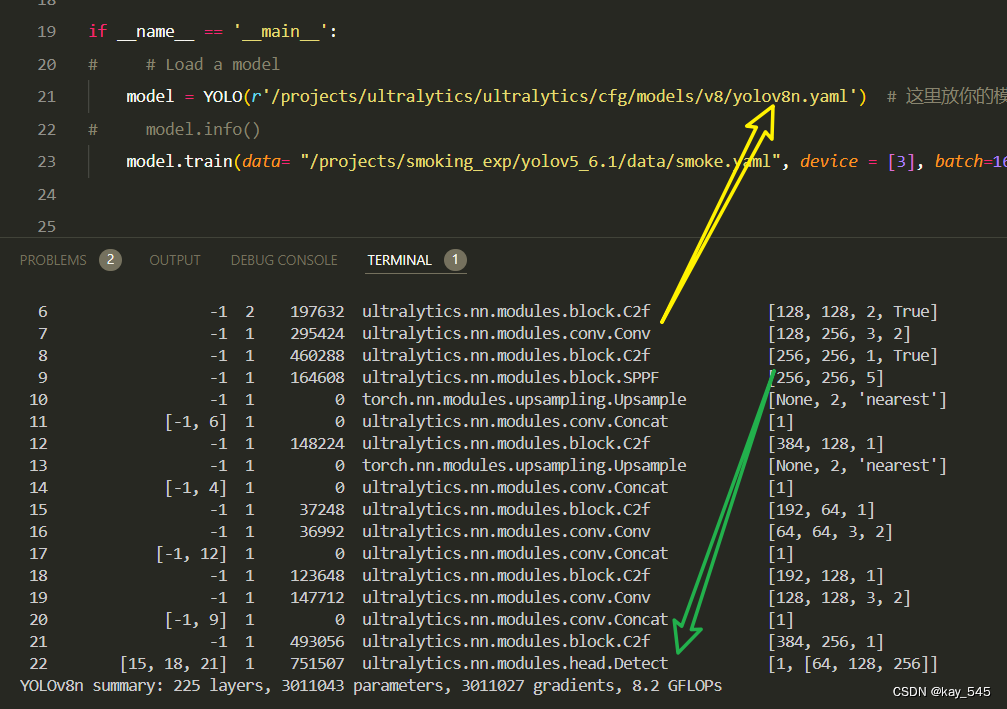

model.train(batch=16)🚀运行程序,如果出现下面的内容则说明添加成功🚀

1 -1 1 394240 ultralytics.nn.modules.block.SPPF [512, 256, 5]

2 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

3 [-1, 3] 1 0 ultralytics.nn.modules.conv.Concat [1]

4 -1 1 164608 ultralytics.nn.modules.block.C2f [512, 128, 1]

5 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

6 [-1, 2] 1 0 ultralytics.nn.modules.conv.Concat [1]

7 -1 1 41344 ultralytics.nn.modules.block.C2f [256, 64, 1]

8 -1 1 36992 ultralytics.nn.modules.conv.Conv [64, 64, 3, 2]

9 [-1, 8] 1 0 ultralytics.nn.modules.conv.Concat [1]

10 -1 1 123648 ultralytics.nn.modules.block.C2f [192, 128, 1]

11 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

12 [-1, 5] 1 0 ultralytics.nn.modules.conv.Concat [1]

13 -1 1 493056 ultralytics.nn.modules.block.C2f [384, 256, 1]

14 [11, 14, 17] 1 897664 ultralytics.nn.modules.head.Detect [80, [64, 128, 256]]

YOLOv8_mobilenetv4 summary: 418 layers, 34655800 parameters, 34655784 gradients

3. Full code

https://pan.baidu.com/s/12_qtwlKNeSiycQY_EKPV-w?pwd=h13b (extraction code: h13b)

4. GFLOPs

For how GFLOPs are calculated, see: Algorithm Engineer Interview Series | Convolution Basics

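GFLOPs of the unmodified YOLOv8n

GFLOPs after the modification

I currently have no GPU available and will fill this in later. If you need these numbers, please measure them yourself.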
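Until measured numbers are available, per-layer cost can at least be estimated by hand. The sketch below uses the common convention of counting one multiply-accumulate as two FLOPs. It ignores BatchNorm and activations, so it is a rough estimate rather than the exact figure a profiler would report. `conv_flops` is a hypothetical helper. If the thop package is installed, Ultralytics can also report GFLOPs in its model summary, for example via model.info().

```python
def conv_flops(c_in, c_out, kernel_size, out_h, out_w, groups=1):
    """FLOPs of one Conv2d layer, counting a multiply-accumulate as 2 FLOPs (BN/ReLU ignored)."""
    macs = (c_in // groups) * kernel_size * kernel_size * c_out * out_h * out_w
    return 2 * macs


# First layer of mobilenetv4_conv_large on a 640x640 input: 3 -> 24 channels, 3x3, stride 2 -> 320x320
stem = conv_flops(3, 24, 3, 320, 320)
# A hypothetical 3x3 depthwise conv on 96 channels at 160x160 (groups == channels)
dw = conv_flops(96, 96, 3, 160, 160, groups=96)
print(f"stem: {stem / 1e9:.4f} GFLOPs, depthwise: {dw / 1e9:.4f} GFLOPs")
```

Summing this over every Conv2d in the network gives a first-order estimate to compare against the baseline YOLOv8n.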

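5. Going further

MobileNetV4 can be combined with other attention mechanisms, loss functions and similar improvements to further improve detection performance.

6. Summary

MobileNetV4 (MNv4) is the latest generation of MobileNets, and its core goal is efficient performance across a wide range of mobile devices. It introduces the Universal Inverted Bottleneck (UIB) search block, a unified and flexible structure that combines the inverted bottleneck, ConvNext, the feed-forward network (FFN) and a new Extra Depthwise (ExtraDW) variant. MobileNetV4 also introduces Mobile MQA, an attention block optimized for mobile accelerators that the paper reports is 39% faster at inference than conventional MHSA.

Its refined neural architecture search (NAS) recipe substantially improves search efficiency. It produces models that perform well on CPUs, DSPs, GPUs and dedicated accelerators such as the Apple Neural Engine and the Google Pixel EdgeTPU. In addition, MobileNetV4 uses a new distillation technique that further improves accuracy.

Overall, by combining UIB, Mobile MQA and the refined NAS approach, MobileNetV4 delivers a family of models that are mostly Pareto-optimal across many hardware platforms, balancing computational efficiency and accuracy.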
