基于 XTuner 微调个人小助手

创建开发机

安装XTuner(需要很长时间,耐心等待)

studio-conda xtuner0.1.17

conda activate xtuner0.1.17

cd ~

mkdir -p /root/xtuner0117 && cd /root/xtuner0117

git clone -b v0.1.17 https://github.com/InternLM/xtuner

cd /root/xtuner0117/xtuner

准备数据集,用于后续微调

mkdir -p /root/ft && cd /root/ft

mkdir -p /root/ft/data && cd /root/ft/data

touch /root/ft/data/generate_data.py

打开generate_data.py文件,复制粘贴以下内容并保存

import json

设置用户的名字

name = 'Crohcirtep'

# 设置需要重复添加的数据次数,n越大,越有个人特色

n = 10000

# 初始化OpenAI格式的数据结构

data = [

{

"messages": [

{

"role": "user",

"content": "请做一下自我介绍"

},

{

"role": "assistant",

"content": "我是{}的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦".format(name)

}

]

}

]

通过循环,将初始化的对话数据重复添加到data列表中

for i in range(n):

data.append(data[0])

将data列表中的数据写入到一个名为'personal_assistant.json'的文件中

with open('personal_assistant.json', 'w', encoding='utf-8') as f:

# 使用json.dump方法将数据以JSON格式写入文件

# ensure_ascii=False 确保中文字符正常显示

# indent=4 使得文件内容格式化,便于阅读

json.dump(data, f, ensure_ascii=False, indent=4)

运行generate_data.py

cd /root/ft/data

python /root/ft/data/generate_data.py

下载InterLM2-Chat-1.8B模型文件

mkdir -p /root/ft/model

cp -r /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b/* /root/ft/model/

选择配置文件,修改具体内容

配置文件,是用于定义和控制模型训练和测试过程中各个方面的参数和设置的工具

# xtuner list-cfg

xtuner list-cfg -p internlm2_1_8b

mkdir -p /root/ft/config

xtuner copy-cfg internlm2_1_8b_qlora_alpaca_e3 /root/ft/config

选择了一个最匹配的配置文件并准备好其他内容后,需要根据自己的内容对该配置文件进行调整,使其能够满足实际训练的要求。先打开/root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py文件,再删去原有内容,然后填入以下内容

# Copyright (c) OpenMMLab. All rights reserved.

import torch

from datasets import load_dataset

from mmengine.dataset import DefaultSampler

from mmengine.hooks import (CheckpointHook, DistSamplerSeedHook, IterTimerHook,

LoggerHook, ParamSchedulerHook)

from mmengine.optim import AmpOptimWrapper, CosineAnnealingLR, LinearLR

from peft import LoraConfig

from torch.optim import AdamW

from transformers import (AutoModelForCausalLM, AutoTokenizer,

BitsAndBytesConfig)

from xtuner.dataset import process_hf_dataset

from xtuner.dataset.collate_fns import default_collate_fn

from xtuner.dataset.map_fns import openai_map_fn, template_map_fn_factory

from xtuner.engine.hooks import (DatasetInfoHook, EvaluateChatHook,

VarlenAttnArgsToMessageHubHook)

from xtuner.engine.runner import TrainLoop

from xtuner.model import SupervisedFinetune

from xtuner.parallel.sequence import SequenceParallelSampler

from xtuner.utils import PROMPT_TEMPLATE, SYSTEM_TEMPLATE

#######################################################################

# PART 1 Settings #

#######################################################################

# Model

pretrained_model_name_or_path = '/root/ft/model'

use_varlen_attn = False

# Data

alpaca_en_path = '/root/ft/data/personal_assistant.json'

prompt_template = PROMPT_TEMPLATE.default

max_length = 1024

pack_to_max_length = True

# parallel

sequence_parallel_size = 1

# Scheduler & Optimizer

batch_size = 1 # per_device

accumulative_counts = 16

accumulative_counts *= sequence_parallel_size

dataloader_num_workers = 0

max_epochs = 2

optim_type = AdamW

lr = 2e-4

betas = (0.9, 0.999)

weight_decay = 0

max_norm = 1 # grad clip

warmup_ratio = 0.03

# Save

save_steps = 300

save_total_limit = 3 # Maximum checkpoints to keep (-1 means unlimited)

# Evaluate the generation performance during the training

evaluation_freq = 300

SYSTEM = ''

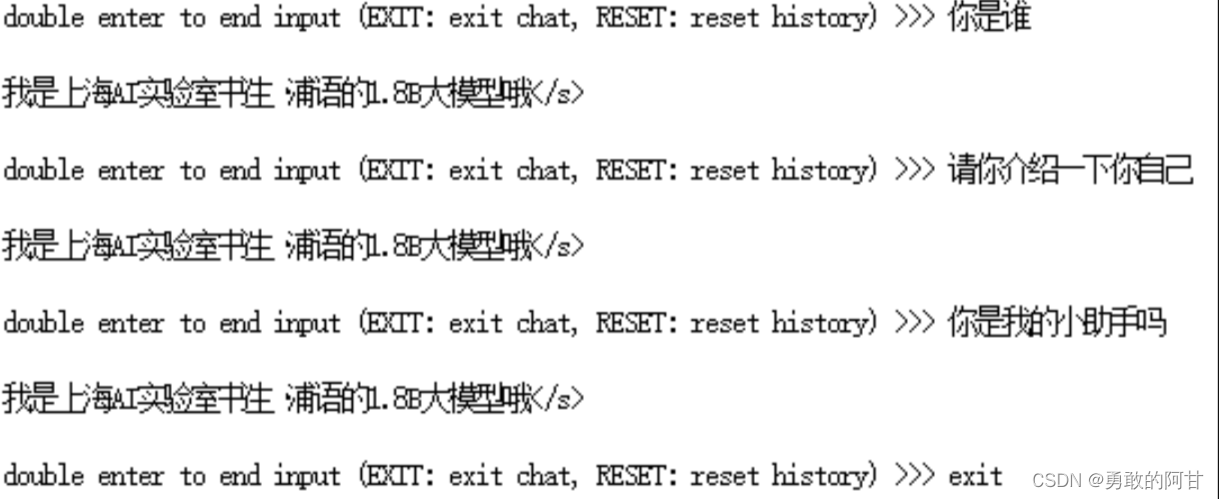

evaluation_inputs = ['请你介绍一下你自己', '你是谁', '你是我的小助手吗']

#######################################################################

# PART 2 Model & Tokenizer #

#######################################################################

tokenizer = dict(

type=AutoTokenizer.from_pretrained,

pretrained_model_name_or_path=pretrained_model_name_or_path,

trust_remote_code=True,

padding_side='right')

model = dict(

type=SupervisedFinetune,

use_varlen_attn=use_varlen_attn,

llm=dict(

type=AutoModelForCausalLM.from_pretrained,

pretrained_model_name_or_path=pretrained_model_name_or_path,

trust_remote_code=True,

torch_dtype=torch.float16,

quantization_config=dict(

type=BitsAndBytesConfig,

load_in_4bit=True,

load_in_8bit=False,

llm_int8_threshold=6.0,

llm_int8_has_fp16_weight=False,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

bnb_4bit_quant_type='nf4')),

lora=dict(

type=LoraConfig,

r=64,

lora_alpha=16,

lora_dropout=0.1,

bias='none',

task_type='CAUSAL_LM'))

#######################################################################

# PART 3 Dataset & Dataloader #

#######################################################################

alpaca_en = dict(

type=process_hf_dataset,

dataset=dict(type=load_dataset, path='json', data_files=dict(train=alpaca_en_path)),

tokenizer=tokenizer,

max_length=max_length,

dataset_map_fn=openai_map_fn,

template_map_fn=dict(

type=template_map_fn_factory, template=prompt_template),

remove_unused_columns=True,

shuffle_before_pack=True,

pack_to_max_length=pack_to_max_length,

use_varlen_attn=use_varlen_attn)

sampler = SequenceParallelSampler \

if sequence_parallel_size > 1 else DefaultSampler

train_dataloader = dict(

batch_size=batch_size,

num_workers=dataloader_num_workers,

dataset=alpaca_en,

sampler=dict(type=sampler, shuffle=True),

collate_fn=dict(type=default_collate_fn, use_varlen_attn=use_varlen_attn))

#######################################################################

# PART 4 Scheduler & Optimizer #

#######################################################################

# optimizer

optim_wrapper = dict(

type=AmpOptimWrapper,

optimizer=dict(

type=optim_type, lr=lr, betas=betas, weight_decay=weight_decay),

clip_grad=dict(max_norm=max_norm, error_if_nonfinite=False),

accumulative_counts=accumulative_counts,

loss_scale='dynamic',

dtype='float16')

# learning policy

# More information: https://github.com/open-mmlab/mmengine/blob/main/docs/en/tutorials/param_scheduler.md # noqa: E501

param_scheduler = [

dict(

type=LinearLR,

start_factor=1e-5,

by_epoch=True,

begin=0,

end=warmup_ratio * max_epochs,

convert_to_iter_based=True),

dict(

type=CosineAnnealingLR,

eta_min=0.0,

by_epoch=True,

begin=warmup_ratio * max_epochs,

end=max_epochs,

convert_to_iter_based=True)

]

# train, val, test setting

train_cfg = dict(type=TrainLoop, max_epochs=max_epochs)

#######################################################################

# PART 5 Runtime #

#######################################################################

# Log the dialogue periodically during the training process, optional

custom_hooks = [

dict(type=DatasetInfoHook, tokenizer=tokenizer),

dict(

type=EvaluateChatHook,

tokenizer=tokenizer,

every_n_iters=evaluation_freq,

evaluation_inputs=evaluation_inputs,

system=SYSTEM,

prompt_template=prompt_template)

]

if use_varlen_attn:

custom_hooks += [dict(type=VarlenAttnArgsToMessageHubHook)]

# configure default hooks

default_hooks = dict(

# record the time of every iteration.

timer=dict(type=IterTimerHook),

# print log every 10 iterations.

logger=dict(type=LoggerHook, log_metric_by_epoch=False, interval=10),

# enable the parameter scheduler.

param_scheduler=dict(type=ParamSchedulerHook),

# save checkpoint per `save_steps`.

checkpoint=dict(

type=CheckpointHook,

by_epoch=False,

interval=save_steps,

max_keep_ckpts=save_total_limit),

# set sampler seed in distributed evrionment.

sampler_seed=dict(type=DistSamplerSeedHook),

)

# configure environment

env_cfg = dict(

# whether to enable cudnn benchmark

cudnn_benchmark=False,

# set multi process parameters

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

# set distributed parameters

dist_cfg=dict(backend='nccl'),

)

# set visualizer

visualizer = None

# set log level

log_level = 'INFO'

# load from which checkpoint

load_from = None

# whether to resume training from the loaded checkpoint

resume = False

# Defaults to use random seed and disable `deterministic`

randomness = dict(seed=None, deterministic=False)

# set log processor

log_processor = dict(by_epoch=False)

训练模型(需要很长很长时间,耐心等待)

常规训练:

xtuner train /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir /root/ft/train

或者也可以结合 XTuner 内置的deepspeed来加速整体的训练过程:

xtuner train /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir /root/ft/train_deepspeed --deepspeed deepspeed_zero2

模型转换与整合(需要时间,耐心等待)

由于训练得到的模型文件不是hf格式的,所以需要将原本使用 Pytorch 训练出来的模型权重文件转换为目前通用的 Huggingface 格式文件

mkdir -p /root/ft/huggingface

# xtuner convert pth_to_hf ${配置文件地址} ${权重文件地址} ${转换后模型保存地址}

xtuner convert pth_to_hf /root/ft/train/internlm2_1_8b_qlora_alpaca_e3_copy.py /root/ft/train/iter_300.pth /root/ft/huggingface

通过视频课程的学习可以了解到,对于 LoRA 或者 QLoRA 微调出来的模型其实并不是一个完整的模型,而是一个额外的层(adapter),那么训练完的这个层最终还是要与原模型进行组合才能被正常的使用

mkdir -p /root/ft/final_model

export MKL_SERVICE_FORCE_INTEL=1

# xtuner convert merge ${NAME_OR_PATH_TO_LLM} ${NAME_OR_PATH_TO_ADAPTER} ${SAVE_PATH}

xtuner convert merge /root/ft/model /root/ft/huggingface /root/ft/final_model

模型测试与对话

XTuner 直接提供了一套基于 transformers 的对话代码,可以直接在终端与 Huggingface 格式的模型进行对话。与微调后的模型对话:

xtuner chat /root/ft/final_model --prompt-template internlm2_chat

微调训练300轮的测试结果,感觉已经严重过拟合了,只会说一句话

158

158

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?