Lecture 3: A Simple Convolutional Neural Network (CNN)


1. Simple CNN

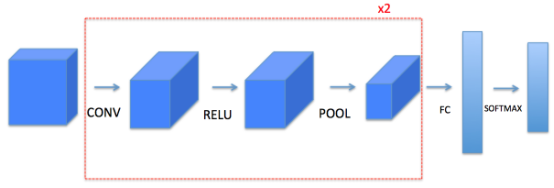

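Network architecture: CONV2D -> RELU -> MAXPOOL -> CONV2D -> RELU -> MAXPOOL -> FLATTEN -> FC (1024 units, ReLU, dropout) -> FC (6 units)

Conv1: stride [1,1], padding='SAME', filter shape [4,4,3,8] ([height, width, in_channels, out_channels])

Pool1: stride [8,8], window [8,8]

Conv2: stride [1,1], padding='SAME', filter shape [2,2,8,16]

Pool2: stride [4,4], window [4,4]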

Tensor shapes (m = number of examples): m*64*64*3 -> m*8*8*8 -> m*2*2*16 -> m*64 -> m*1024 -> m*6
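With padding='SAME', TensorFlow sets the spatial output size of a convolution or pooling op to ceil(input_size / stride), independent of the kernel size. The shape chain above can be checked with a small pure-Python sketch; the helper names `same_out` and `shape_chain` are hypothetical, introduced only for this illustration.

```python
import math

def same_out(size, stride):
    """Spatial output size of a conv/pool op with padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)

def shape_chain(h=64, w=64, c=3):
    """Trace (height, width, channels) through Conv1 -> Pool1 -> Conv2 -> Pool2 -> flatten."""
    layers = [
        ("conv1", 1, 8),     # stride 1, 8 filters
        ("pool1", 8, None),  # stride 8, channel count unchanged
        ("conv2", 1, 16),    # stride 1, 16 filters
        ("pool2", 4, None),  # stride 4, channel count unchanged
    ]
    shapes = [("input", (h, w, c))]
    for name, stride, out_channels in layers:
        h, w = same_out(h, stride), same_out(w, stride)
        if out_channels is not None:
            c = out_channels
        shapes.append((name, (h, w, c)))
    shapes.append(("flatten", (h * w * c,)))
    return shapes

for name, shape in shape_chain():
    print(name, shape)
```

The last line printed is `flatten (64,)`, matching the m*64 input of the first fully connected layer.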

Training: 100 epochs, learning rate 0.01, minibatch_size=64, Adam optimizer.
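Adam keeps exponential moving averages of each parameter's gradient and squared gradient and scales every step by their bias-corrected ratio. Below is a minimal pure-Python sketch of the textbook update for a single scalar parameter, assuming the usual defaults beta1=0.9, beta2=0.999, eps=1e-8 (also the defaults of `tf.train.AdamOptimizer`, although TensorFlow folds the bias correction into the learning rate, so eps enters slightly differently there). The function name `adam_minimize` and the toy objective are illustrative only.

```python
import math

def adam_minimize(grad, x0, lr=0.01, steps=100, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a 1-D function with Adam, given its gradient function `grad`."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # first moment: moving average of gradients
        v = beta2 * v + (1 - beta2) * g * g    # second moment: moving average of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction (m and v start at zero)
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Minimize f(x) = x^2 (gradient 2x) from x = 1 with the learning rate used in this lecture
print(adam_minimize(lambda x: 2 * x, 1.0, lr=0.01, steps=1))    # first step moves x by about lr
print(adam_minimize(lambda x: 2 * x, 1.0, lr=0.01, steps=500))
```

Because the first step divides the gradient by its own magnitude, its size is roughly the learning rate regardless of the gradient's scale, which is one reason Adam is less sensitive to input scaling than plain gradient descent.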

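```python
import math

import h5py
import scipy
import numpy as np
# Requires TensorFlow 1.x: tf.contrib was removed in 2.x, and tf.placeholder / tf.Session
# are only available there under tf.compat.v1
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.python.framework import ops
from PIL import Image

# Course helper module: provides load_dataset, convert_to_one_hot and random_mini_batches
from cnn_utils import *

%matplotlib inline
np.random.seed(1)
```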

```python
# Load the dataset
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

# Display one example image and its label
index = 6
plt.imshow(X_train_orig[index])
plt.show()
print("y = " + str(np.squeeze(Y_train_orig[:, index])))

# Scale pixel values to [0, 1] and one-hot encode the labels (6 classes)
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.
Y_train = convert_to_one_hot(Y_train_orig, 6).T
Y_test = convert_to_one_hot(Y_test_orig, 6).T

print("number of training examples = " + str(X_train.shape[0]))
print("number of test examples = " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))
print("X_test shape: " + str(X_test.shape))
print("Y_test shape: " + str(Y_test.shape))
```

```
y = 2
number of training examples = 1080
number of test examples = 120
X_train shape: (1080, 64, 64, 3)
Y_train shape: (1080, 6)
X_test shape: (120, 64, 64, 3)
Y_test shape: (120, 6)
```
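`convert_to_one_hot` comes from the course helper module `cnn_utils`, whose source is not shown here; judging by the `.T` in the code and the printed shapes, it returns a (classes, examples) array that is then transposed to (1080, 6). A pure-Python sketch of the same idea (the name `one_hot_rows` is hypothetical) that directly returns one row per example, i.e. the (m, 6) layout of `Y_train`:

```python
def one_hot_rows(labels, num_classes):
    """Return one list per example with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} outside [0, {num_classes})")
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows

# The example image displayed above has label y = 2
print(one_hot_rows([2], 6))   # [[0.0, 0.0, 1.0, 0.0, 0.0, 0.0]]
```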

```python
def create_placeholders(n_H0, n_W0, n_C0, n_y):
    """Create placeholders for input images, one-hot labels and the dropout keep probability."""
    X = tf.placeholder(tf.float32, shape=[None, n_H0, n_W0, n_C0])
    Y = tf.placeholder(tf.float32, shape=[None, n_y])
    keep_prob = tf.placeholder(tf.float32)
    return X, Y, keep_prob
```

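```python
def initialize_parameters():
    """Create the two convolution filters with Xavier initialization."""
    tf.set_random_seed(1)
    # Filter shapes: [height, width, in_channels, out_channels]
    W1 = tf.get_variable("W1", [4, 4, 3, 8], initializer=tf.contrib.layers.xavier_initializer(seed=0))
    W2 = tf.get_variable("W2", [2, 2, 8, 16], initializer=tf.contrib.layers.xavier_initializer(seed=0))
    parameters = {"W1": W1, "W2": W2}
    return parameters
```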
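By default `tf.contrib.layers.xavier_initializer` samples uniformly from [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out)); for a convolution filter of shape [height, width, in_channels, out_channels], the receptive field height*width multiplies both fans. A small pure-Python sketch (the helper name `xavier_uniform_limit` is hypothetical) computing the bounds for W1 and W2:

```python
import math

def xavier_uniform_limit(shape):
    """Bound of the Glorot/Xavier uniform distribution for a conv filter [kh, kw, c_in, c_out]."""
    kh, kw, c_in, c_out = shape
    receptive_field = kh * kw
    fan_in = receptive_field * c_in      # inputs feeding one output unit
    fan_out = receptive_field * c_out    # outputs fed by one input unit
    return math.sqrt(6.0 / (fan_in + fan_out))

print(xavier_uniform_limit([4, 4, 3, 8]))    # W1: fan_in 48, fan_out 128 -> sqrt(6/176)
print(xavier_uniform_limit([2, 2, 8, 16]))   # W2: fan_in 32, fan_out 64 -> sqrt(6/96)
```

The bound shrinks as filters get wider or deeper, which keeps the variance of activations roughly constant from layer to layer at initialization.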

```python
def forward_propagation(X, parameters, keep_prob):
    # CONV2D -> RELU -> MAXPOOL -> CONV2D -> RELU -> MAXPOOL -> FLATTEN -> FC -> RELU -> DROPOUT -> FC
    W1 = parameters['W1']
    W2 = parameters['W2']

    # Layer 1: convolution, ReLU, max pooling  m*64*64*3 -> m*64*64*8 -> m*8*8*8
    Z1 = tf.nn.conv2d(X, W1, strides=[1, 1, 1, 1], padding='SAME')
    A1 = tf.nn.relu(Z1)
    P1 = tf.nn.max_pool(A1, ksize=[1, 8, 8, 1], strides=[1, 8, 8, 1], padding='SAME')

    # Layer 2: convolution, ReLU, max pooling  m*8*8*8 -> m*8*8*16 -> m*2*2*16
    Z2 = tf.nn.conv2d(P1, W2, strides=[1, 1, 1, 1], padding='SAME')
    A2 = tf.nn.relu(Z2)
    P2 = tf.nn.max_pool(A2, ksize=[1, 4, 4, 1], strides=[1, 4, 4, 1], padding='SAME')

    # Flatten before the fully connected layers  m*2*2*16 -> m*64
    P2 = tf.reshape(P2, [-1, 2 * 2 * 16])

    # Hidden fully connected layer  m*64 -> m*1024
    W_fc1 = tf.Variable(tf.truncated_normal([2 * 2 * 16, 1024], stddev=0.1))
    b_fc1 = tf.Variable(tf.zeros([1024]))
    h_fc1 = tf.nn.relu(tf.matmul(P2, W_fc1) + b_fc1)

    # Dropout (keep_prob is fed as 0.8 during training and 1 during evaluation)
    h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

    # Output layer  m*1024 -> m*6
    W_fc2 = tf.Variable(tf.truncated_normal([1024, 6], stddev=0.1))
    b_fc2 = tf.Variable(tf.zeros([6]))
    # Return raw logits, not softmax probabilities: softmax_cross_entropy_with_logits in
    # compute_cost applies softmax itself, so calling tf.nn.softmax here would apply it twice.
    # tf.argmax gives the same predicted class for logits and for their softmax.
    y_out = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
    return y_out
```
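`tf.nn.dropout` keeps each activation with probability keep_prob and scales the kept ones by 1/keep_prob ("inverted" dropout), so the expected activation is unchanged and nothing needs rescaling when keep_prob is fed as 1 at evaluation time. A pure-Python sketch of that behaviour (the function name `inverted_dropout` is hypothetical):

```python
import random

def inverted_dropout(activations, keep_prob, rng=random):
    """Zero each value with probability 1 - keep_prob and scale survivors by 1 / keep_prob."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)
h = [1.0] * 10000
dropped = inverted_dropout(h, 0.8, rng)
print(sum(1 for a in dropped if a == 0.0) / len(h))     # fraction dropped, close to 0.2
print(sum(dropped) / len(h))                            # mean activation, close to 1.0
print(inverted_dropout([1.0, 2.0], 1.0) == [1.0, 2.0])  # keep_prob = 1 leaves inputs unchanged
```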

```python
def compute_cost(y_out, Y):
    # y_out must be the raw logits: this op applies softmax internally
    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=y_out, labels=Y))
    return cost
```
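`tf.nn.softmax_cross_entropy_with_logits` applies softmax to its `logits` argument itself, so it must receive the raw output-layer values. If softmax probabilities are passed instead, softmax is applied twice: the inputs to the second softmax lie in [0, 1], so with 6 classes the best achievable probability for the true class is e/(e + 5) ≈ 0.35, and the loss can never fall below log(1 + 5/e) ≈ 1.04. A pure-Python sketch comparing the two cases (helper names hypothetical):

```python
import math

def softmax(z):
    """Numerically stable softmax of a list of floats."""
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_with_logits(logits, label):
    """Cross-entropy of softmax(logits) against an integer class label."""
    return -math.log(softmax(logits)[label])

logits = [12.0, 0.0, 0.0, 0.0, 0.0, 0.0]                  # confident, correct prediction for class 0
once = cross_entropy_with_logits(logits, 0)               # softmax applied once: loss near 0
twice = cross_entropy_with_logits(softmax(logits), 0)     # softmax applied twice
floor = math.log(1 + 5 / math.e)                          # lowest loss reachable with inputs in [0, 1]
print(once, twice, floor)
```

Even for a perfectly confident prediction the double-softmax loss stays near 1.04, and its gradients are correspondingly weak, so training stalls at a high cost.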

```python
def model(X_train, Y_train, X_test, Y_test, learning_rate=0.01,
          num_epochs=100, minibatch_size=64, print_cost=True):
    ops.reset_default_graph()  # lets the model be rerun without overwriting tf variables
    tf.set_random_seed(1)      # reproducible results (TensorFlow seed)
    seed = 3                   # reproducible results (NumPy seed for mini-batch shuffling)
    (m, n_H0, n_W0, n_C0) = X_train.shape
    n_y = Y_train.shape[1]
    costs = []                 # per-epoch cost, for plotting

    # Build the TensorFlow graph
    X, Y, keep_prob = create_placeholders(n_H0, n_W0, n_C0, n_y)
    parameters = initialize_parameters()
    y_out = forward_propagation(X, parameters, keep_prob)
    cost = compute_cost(y_out, Y)
    optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost)
    init = tf.global_variables_initializer()

    # Start the session to run the TensorFlow graph
    with tf.Session() as sess:
        sess.run(init)

        for epoch in range(num_epochs):
            minibatch_cost = 0.
            seed = seed + 1
            minibatches = random_mini_batches(X_train, Y_train, minibatch_size, seed)
            # Average over the batches actually produced, including the smaller last one;
            # int(m / minibatch_size) would drop it from the count
            num_minibatches = len(minibatches)

            for minibatch in minibatches:
                (minibatch_X, minibatch_Y) = minibatch
                _, temp_cost = sess.run([optimizer, cost],
                                        feed_dict={X: minibatch_X, Y: minibatch_Y, keep_prob: 0.8})
                minibatch_cost += temp_cost / num_minibatches

            if print_cost and epoch % 10 == 0:
                print("cost after epoch %i:%f" % (epoch, minibatch_cost))
            costs.append(minibatch_cost)

        # Plot the cost curve
        plt.plot(np.squeeze(costs))
        plt.ylabel('cost')
        plt.xlabel('epoch')
        plt.title("Learning rate = " + str(learning_rate))
        plt.show()

        # Accuracy with dropout disabled (keep_prob = 1)
        predict_op = tf.argmax(y_out, 1)
        correct_prediction = tf.equal(predict_op, tf.argmax(Y, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
        train_accuracy = accuracy.eval({X: X_train, Y: Y_train, keep_prob: 1.0})
        test_accuracy = accuracy.eval({X: X_test, Y: Y_test, keep_prob: 1.0})
        print("Train Accuracy:", train_accuracy)
        print("Test Accuracy:", test_accuracy)

    return train_accuracy, test_accuracy, parameters
```
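`random_mini_batches` is another `cnn_utils` helper whose source is not shown. Assuming it follows the usual approach, it shuffles the examples with the given seed and cuts them into consecutive chunks, keeping a smaller final chunk. The pure-Python sketch below (the name `make_mini_batches` is hypothetical; it works on index lists rather than NumPy arrays and uses Python's `random` instead of NumPy, so the permutation differs) shows why 1080 examples with minibatch_size=64 give 17 batches per epoch: 16 full ones plus one of 56.

```python
import random

def make_mini_batches(num_examples, batch_size, seed):
    """Shuffle example indices with a seeded RNG and split them into mini-batches.

    The last batch holds the remainder when num_examples is not a multiple of batch_size.
    """
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)
    return [indices[start:start + batch_size]
            for start in range(0, num_examples, batch_size)]

batches = make_mini_batches(1080, 64, seed=4)
print(len(batches), len(batches[0]), len(batches[-1]))   # 17 64 56
```

Changing the seed every epoch, as `model` does with `seed = seed + 1`, gives a different but reproducible batch order each epoch.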

```python
train_accuracy, test_accuracy, parameters = model(X_train, Y_train, X_test, Y_test)
```

```
cost after epoch 0:1.922228
cost after epoch 10:1.603383
cost after epoch 20:1.522252
cost after epoch 30:1.462150
cost after epoch 40:1.445070
cost after epoch 50:1.395123
cost after epoch 60:1.340423
cost after epoch 70:1.332700
cost after epoch 80:1.331721
cost after epoch 90:1.315808
```

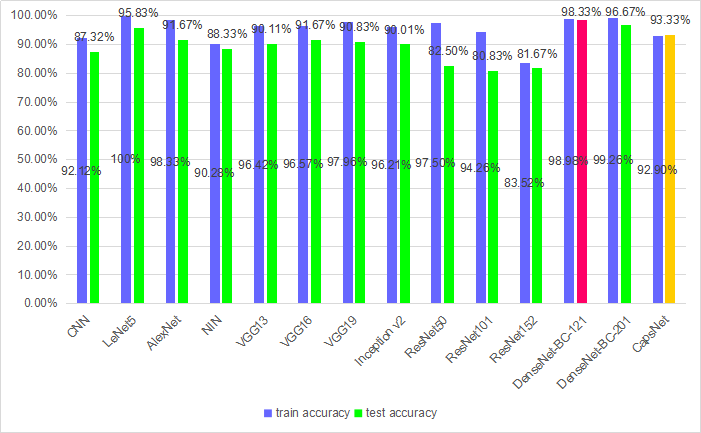

```
Train Accuracy: 0.842593
Test Accuracy: 0.65
```
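These accuracies are the fraction of examples whose predicted class (argmax of the network output) equals the labelled class (argmax of the one-hot label), which is what `predict_op` and `correct_prediction` compute in `model`. On the 120 test images, 0.65 corresponds to 78 correct predictions; on the 1080 training images, 0.842593 corresponds to 910. A pure-Python sketch of the same computation (function names hypothetical):

```python
def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)

def accuracy(outputs, one_hot_labels):
    """Fraction of rows whose argmax prediction matches the argmax of the one-hot label."""
    correct = sum(argmax(o) == argmax(y) for o, y in zip(outputs, one_hot_labels))
    return correct / len(one_hot_labels)

outputs = [[0.1, 2.0, -1.0], [3.0, 0.5, 0.2], [0.0, 0.1, 0.4], [1.0, 0.9, 0.8]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [1, 0, 0]]
print(accuracy(outputs, labels))   # 0.75
```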
