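Some problems I ran into with k8s 1.24.0.

I set up a cluster with one master and one worker for everyday use.

There were a lot of problems, and working through them took a long time.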

Master node initialization failed

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///var/run/cri-docker.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///var/run/cri-docker.sock logs CONTAINERID'

couldn't initialize a Kubernetes cluster

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/init.runWaitControlPlanePhase

cmd/kubeadm/app/cmd/phases/init/waitcontrolplane.go:108

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1

cmd/kubeadm/app/cmd/phases/workflow/runner.go:234

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll

cmd/kubeadm/app/cmd/phases/workflow/runner.go:421

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run

cmd/kubeadm/app/cmd/phases/workflow/runner.go:207

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdInit.func1

cmd/kubeadm/app/cmd/init.go:153

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

vendor/github.com/spf13/cobra/command.go:856

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

vendor/github.com/spf13/cobra/command.go:974

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

vendor/github.com/spf13/cobra/command.go:902

k8s.io/kubernetes/cmd/kubeadm/app.Run

cmd/kubeadm/app/kubeadm.go:50

main.main

cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:250

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1571

error execution phase wait-control-plane

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1

cmd/kubeadm/app/cmd/phases/workflow/runner.go:235

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll

cmd/kubeadm/app/cmd/phases/workflow/runner.go:421

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run

cmd/kubeadm/app/cmd/phases/workflow/runner.go:207

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdInit.func1

cmd/kubeadm/app/cmd/init.go:153

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

vendor/github.com/spf13/cobra/command.go:856

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

vendor/github.com/spf13/cobra/command.go:974

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

vendor/github.com/spf13/cobra/command.go:902

k8s.io/kubernetes/cmd/kubeadm/app.Run

cmd/kubeadm/app/kubeadm.go:50

main.main

cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:250

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1571

Troubleshooting

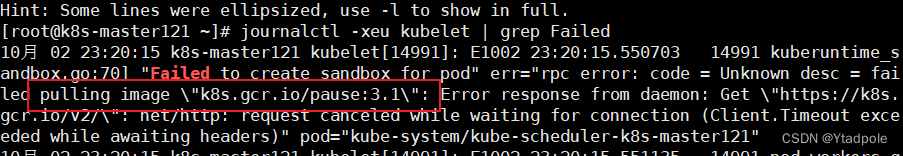

journalctl -xeu kubelet | grep Failed

The kubelet log shows that it was actually trying to use pause:3.1.

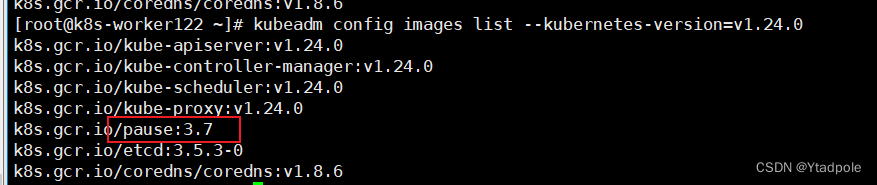

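This was the tricky part. I had pulled the images in advance, and the pause image I pulled was 3.7, which is the version listed by: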

kubeadm config images list --kubernetes-version=v1.24.0
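You can check this kind of mismatch by comparing the two image lists directly instead of reading screenshots. Below is a minimal shell sketch. It assumes GNU grep and sort. `pause_tags` and `unexpected_pause` are hypothetical helper names and are not part of kubeadm or the kubelet.

```shell
#!/usr/bin/env bash
# Print each distinct pause image tag (e.g. "pause:3.7") found in text on stdin.
pause_tags() {
  grep -o 'pause:[0-9][0-9]*\.[0-9][0-9]*' | sort -u
}

# Print pause tags from stdin that differ from the expected tag given as $1.
unexpected_pause() {
  pause_tags | grep -vxF -- "$1"
}

# Usage on the master:
#   want=$(kubeadm config images list --kubernetes-version=v1.24.0 | pause_tags)
#   journalctl -u kubelet --no-pager | unexpected_pause "$want"
```

Empty output from the second command means the kubelet log only mentions the expected pause tag.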

Fix:

https://www.jianshu.com/p/4b20e7ea4883

docker pull registry.aliyuncs.com/google_containers/pause:3.1

docker tag registry.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
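The same pull-and-retag trick can be applied to every image kubeadm needs. This is a minimal sketch under a few assumptions:

- Docker is the image store, as in this setup.
- The Aliyun mirror keeps only the last path component of each upstream name. For example, `k8s.gcr.io/coredns/coredns:v1.8.6` becomes `google_containers/coredns:v1.8.6`.

`mirror_of` and `pull_and_tag` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Mirror used in the fix above.
MIRROR="registry.aliyuncs.com/google_containers"

# Map an upstream image to its mirror copy by keeping only the last path
# component, e.g. k8s.gcr.io/coredns/coredns:v1.8.6 -> $MIRROR/coredns:v1.8.6
mirror_of() {
  printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# Pull each image from the mirror and tag it with its upstream name, so the
# runtime finds it locally instead of trying to reach k8s.gcr.io.
pull_and_tag() {
  local img src
  for img in "$@"; do
    src=$(mirror_of "$img")
    docker pull "$src" && docker tag "$src" "$img"
  done
}

# Usage:
#   pull_and_tag k8s.gcr.io/pause:3.1
#   pull_and_tag $(kubeadm config images list --kubernetes-version=v1.24.0)   # unquoted on purpose: one arg per image
```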

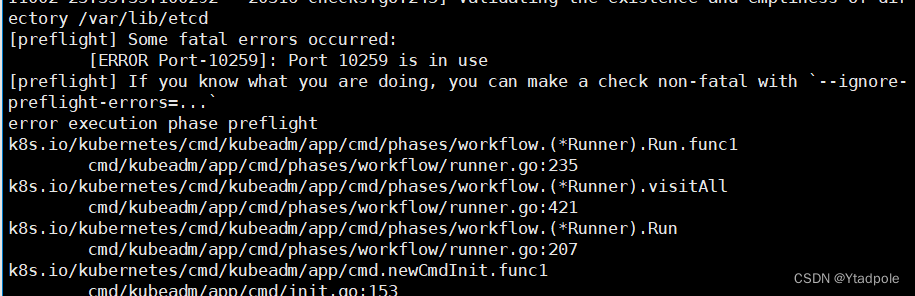
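Retagging works around the symptom. The socket in the error output (unix:///var/run/cri-docker.sock) suggests the runtime is cri-dockerd. As far as I know, cri-dockerd picks the pause (sandbox) image from its own `--pod-infra-container-image` flag rather than from kubeadm's image list. Pointing that flag at the mirror's pause:3.7 should therefore make the retag unnecessary.

This sketch only renders a systemd drop-in. It assumes the stock unit file, whose ExecStart is `/usr/bin/cri-dockerd --container-runtime-endpoint fd://`; check yours with `systemctl cat cri-docker`. `render_dropin` and the drop-in file name are hypothetical.

```shell
#!/usr/bin/env bash
# Render a systemd drop-in that re-declares cri-dockerd's ExecStart with an
# explicit pause image. The empty "ExecStart=" clears the original command
# first; the binary path and endpoint are assumed from the stock unit file.
render_dropin() {
  local pause_image=$1
  cat <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=${pause_image}
EOF
}

# Usage, as root:
#   mkdir -p /etc/systemd/system/cri-docker.service.d
#   render_dropin registry.aliyuncs.com/google_containers/pause:3.7 \
#     > /etc/systemd/system/cri-docker.service.d/10-pause-image.conf
#   systemctl daemon-reload && systemctl restart cri-docker
```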

Port-in-use error

[ERROR Port-10259]: Port 10259 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

error execution phase preflight

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1

cmd/kubeadm/app/cmd/phases/workflow/runner.go:235

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll

cmd/kubeadm/app/cmd/phases/workflow/runner.go:421

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run

cmd/kubeadm/app/cmd/phases/workflow/runner.go:207

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdInit.func1

cmd/kubeadm/app/cmd/init.go:153

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

vendor/github.com/spf13/cobra/command.go:856

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

vendor/github.com/spf13/cobra/command.go:974

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

vendor/github.com/spf13/cobra/command.go:902

k8s.io/kubernetes/cmd/kubeadm/app.Run

cmd/kubeadm/app/kubeadm.go:50

main.main

cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:250

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1571

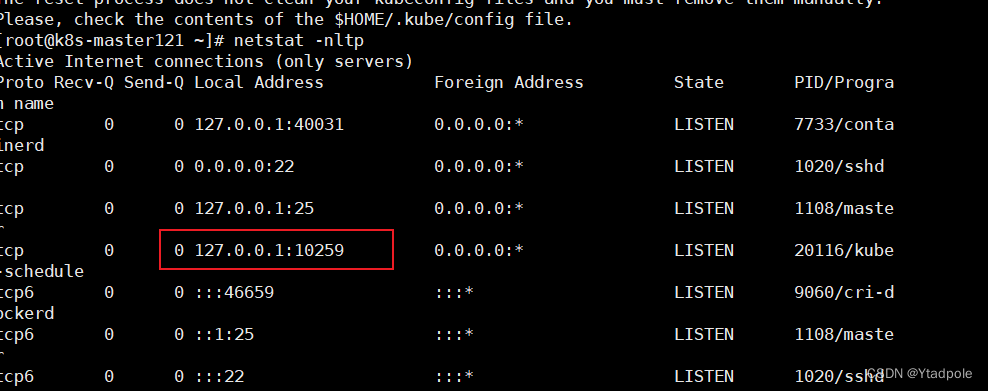

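The first kubeadm init had partly succeeded, so the next init found the ports already in use. I tried kubeadm reset first, which should normally clean this up, but it did not actually reset anything.

In the end I had to kill the processes directly.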

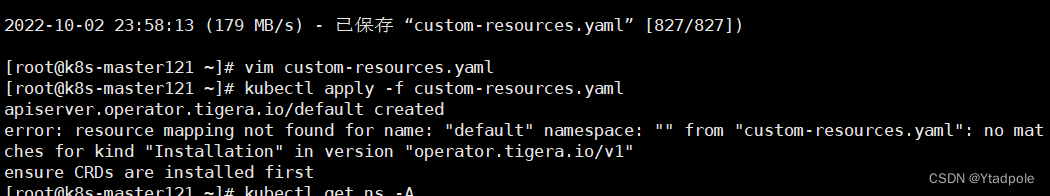
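Before killing anything, it helps to see exactly which processes still hold the control-plane ports left over from the half-finished init. This sketch assumes iproute2's `ss` and root privileges. The port list is the set kubeadm's preflight checks by default on a control-plane node. `pids_from_ss` and `port_owners` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Ports that kubeadm's preflight checks by default on a control-plane node:
# kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, etcd client/peer.
CONTROL_PLANE_PORTS="6443 10259 10257 10250 2379 2380"

# Read `ss -lntp` output on stdin and print each distinct owning PID, sorted numerically.
pids_from_ss() {
  grep -o 'pid=[0-9][0-9]*' | cut -d= -f2 | sort -un
}

# Print "<port> <pid>" for every control-plane port that still has a listener.
# Needs root: without it, ss does not show which process owns a socket.
port_owners() {
  local port
  for port in $CONTROL_PLANE_PORTS; do
    ss -lntp "sport = :$port" | pids_from_ss | sed "s/^/$port /"
  done
}

# Usage, as root (stop the kubelet first, otherwise it may restart the
# static-pod containers from /etc/kubernetes/manifests):
#   systemctl stop kubelet
#   port_owners
#   port_owners | awk '{print $2}' | sort -u | xargs -r kill
```

You may want to give kubeadm reset another try before killing processes. Naming the CRI socket from the error output explicitly (`kubeadm reset -f --cri-socket unix:///var/run/cri-docker.sock`) is worth a try. Whether this explains why reset did nothing here is an untested assumption.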

Calico version mismatch

error: resource mapping not found for name: "default" namespace: "" from "custom-resources.yaml": no matches for kind "Installation" in version "operator.tigera.io/v1"

ensure CRDs are installed first

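Fix: switch to the Calico version that matches this Kubernetes version. The error itself means the `Installation` kind, a CRD installed by the Tigera operator manifest, was not registered when custom-resources.yaml was applied.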

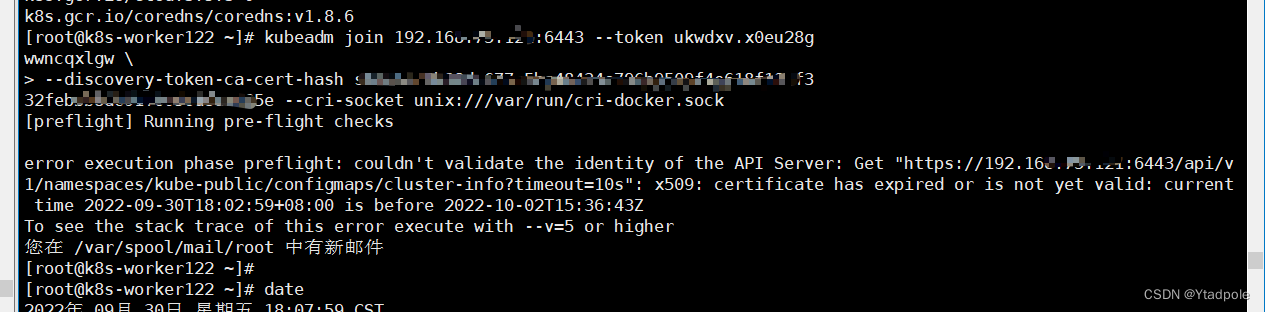
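The error text asks for the CRDs first. tigera-operator.yaml registers the `Installation` kind, so custom-resources.yaml can only be created once that CRD is established.

This is a sketch of installing in that order. It assumes the raw GitHub manifest layout used by the Calico v3.2x install docs. The version is a parameter you must pick from Calico's requirements page for Kubernetes 1.24. `calico_url` and `install_calico` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Manifest URL for a Calico release (assumes the raw.githubusercontent.com
# layout used by the Calico v3.2x install docs).
calico_url() {
  printf 'https://raw.githubusercontent.com/projectcalico/calico/%s/manifests/%s\n' "$1" "$2"
}

# Install the operator, wait for the Installation CRD to be established,
# then create the resources in custom-resources.yaml.
install_calico() {
  local version=$1
  kubectl create -f "$(calico_url "$version" tigera-operator.yaml)" &&
    kubectl wait --for=condition=established --timeout=120s \
      crd/installations.operator.tigera.io &&
    kubectl create -f "$(calico_url "$version" custom-resources.yaml)"
}

# Usage (the version is only an example; confirm it supports Kubernetes 1.24):
#   install_calico v3.24.5
```

The default custom-resources.yaml uses 192.168.0.0/16 as the pod CIDR. If `kubeadm init` was given a different `--pod-network-cidr`, download the file and edit it before creating it.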

Worker node failed to join

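Note the clock: the time was wrong. In my case it was not an expired certificate or token.

After the worker VM had been suspended, its clock was no longer in sync, because the time-sync cron job I had written earlier had not taken effect. Syncing the clock fixed the join.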

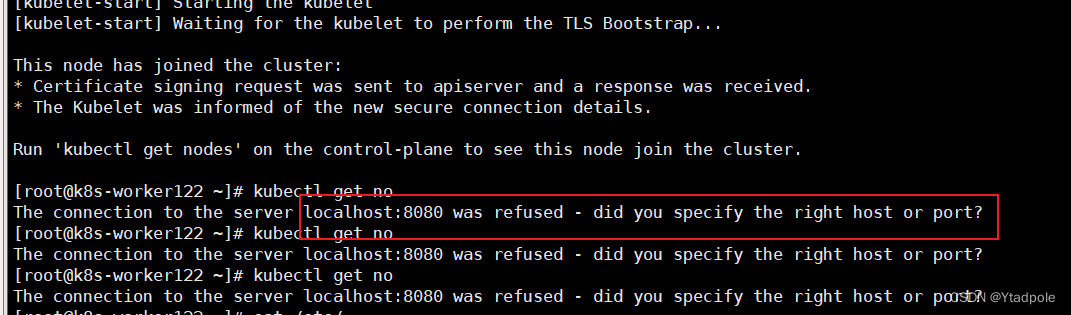
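TLS certificate checks use the local clock. A worker whose clock drifted during a VM suspend can therefore fail to join with errors that look like certificate or token problems.

Below is a small pre-join check, sketched under these assumptions:

- The worker can SSH to the master. `k8s-master` is a placeholder hostname.
- chrony is installed. Running chronyd as a service (`systemctl enable --now chronyd`) is usually more robust than a cron job.

`clock_skew` and `skew_ok` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Absolute difference in seconds between two Unix timestamps.
clock_skew() {
  local d=$(( $1 - $2 ))
  echo $(( d < 0 ? -d : d ))
}

# Succeed when two timestamps differ by at most $3 seconds.
skew_ok() {
  [ "$(clock_skew "$1" "$2")" -le "$3" ]
}

# Usage on the worker before kubeadm join (k8s-master is a placeholder host):
#   skew_ok "$(date +%s)" "$(ssh k8s-master date +%s)" 5 || chronyc makestep
```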

kubectl does not work on the worker node

[root@k8s-worker122 ~]# kubectl get no

The connection to the server localhost:8080 was refused - did you specify the right host or port?

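Fix:

The worker node has no kubeconfig (credentials) under ~/.kube/. This is probably the root cause: without a kubeconfig, kubectl falls back to localhost:8080. After adding one, it did work.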

mkdir -p ~/.kube && cp /etc/kubernetes/kubelet.conf ~/.kube/config
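Copying kubelet.conf works, but kubectl then acts as the node itself (user `system:node:<node-name>`), and the Node authorizer limits what that identity may do. For running kubectl from another machine, the kubeadm documentation instead copies /etc/kubernetes/admin.conf from the control-plane node.

Below is a small sketch covering both options. It assumes root on the worker. `install_kubeconfig` is a hypothetical helper, and `k8s-master` is a placeholder hostname.

```shell
#!/usr/bin/env bash
# Give kubectl a kubeconfig for the current user, so it stops falling back
# to localhost:8080. SRC defaults to the node's kubelet.conf (the fix above);
# pass an admin.conf copied from the master for full cluster-admin access.
install_kubeconfig() {
  local src=${1:-/etc/kubernetes/kubelet.conf}
  mkdir -p "$HOME/.kube" &&
    cp "$src" "$HOME/.kube/config" &&
    chmod 600 "$HOME/.kube/config"
}

# Usage on the worker (k8s-master is a placeholder host):
#   install_kubeconfig
#   scp root@k8s-master:/etc/kubernetes/admin.conf /tmp/admin.conf && install_kubeconfig /tmp/admin.conf
```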
