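Preface

To run code in the browser, JavaScript has long been the only language available, because browsers ship a JavaScript engine that parses and executes it. However, JavaScript is not always a good fit for everything we want to build. If you want to run code written in C, C++, or Rust in the browser, WebAssembly is currently the best option.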

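I. WebAssembly

WebAssembly (abbreviated Wasm) is a new type of code that browsers can execute; its goal is better performance on the web. Wasm is a low-level binary format that is compact, so it loads and executes quickly. You do not write Wasm by hand: it is compiled from high-level languages such as C, C++, or Rust. For example, on the ICP chain, where the DApp Mora Planet (mora星球) runs, smart contracts written in Motoko or Rust are compiled to WebAssembly and then installed into a Canister to run.

On the web, WebAssembly is designed to be versionless, feature-testable, and backward compatible. WebAssembly modules can call into and out of the JavaScript context and access browser functionality through the same Web APIs that JavaScript uses. WebAssembly is not limited to browsers; it can also run in non-web environments. Emscripten compiles C/C++ code to WebAssembly.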
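To make the JavaScript-to-WebAssembly call path concrete, here is a minimal sketch of a C++ function exported for the browser. The function name `invert_rgba` and its behavior are hypothetical and chosen only for illustration; the `EMSCRIPTEN_KEEPALIVE` macro comes from Emscripten's `emscripten.h`, and the fallback definition is an assumption added so the same file also compiles with a native compiler.

```cpp
#include <cstdint>

#ifdef __EMSCRIPTEN__
#include <emscripten.h>
#else
// Native build (for a quick local test): make the export macro a no-op.
#define EMSCRIPTEN_KEEPALIVE
#endif

extern "C" {

// Hypothetical example: invert the RGB channels of an RGBA buffer in place.
// JavaScript would pass a pointer into the wasm heap plus the image size.
EMSCRIPTEN_KEEPALIVE
void invert_rgba(std::uint8_t* rgba, int width, int height)
{
    const int num_pixels = width * height;
    for (int i = 0; i < num_pixels; i++)
    {
        std::uint8_t* px = rgba + i * 4;
        px[0] = 255 - px[0];
        px[1] = 255 - px[1];
        px[2] = 255 - px[2];
        // px[3] (alpha) is left untouched
    }
}

} // extern "C"
```

When built with Emscripten, an exported C symbol is reachable from JavaScript with a leading underscore, which is the same convention the page later in this article relies on when it calls `_yolov5_ncnn`.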

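II. Computer vision

Computer vision brings many conveniences, but it also raises data-security concerns. Take network cameras used for security: the captured frames normally have to be sent back to a server before the state of the current video stream can be determined. For example, a smart-city security camera may need to detect whether someone is smoking in a no-smoking area, whether workers entering a construction site are wearing protective equipment, or whether there is smoke or fire. If every frame has to be streamed to a server in real time for detection, this can create data-security and privacy problems.

Running inference with WebAssembly addresses this: detection happens in the browser and only the results are passed to the processing center, so user data is not uploaded and privacy is protected. Deployment is also simple: all that is needed is a browser, with no client to install.

Let's try deploying an object detection algorithm to the browser. The code is written in C++, the detection algorithm is YOLOv5, image handling uses the OpenCV API (the listings include ncnn's simpleocv.h, an OpenCV-style subset), and the inference library is ncnn.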
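Before inference, the detect code later in this article resizes each frame so that its longer side is 320 pixels and then pads both sides up to a multiple of 64. The sketch below isolates that arithmetic so it can be checked on its own. The `Letterbox` struct and the helper names are hypothetical; the default values 320 and 64 are taken from the listing's `target_size` and `max_stride`.

```cpp
#include <cassert>

// Geometry of fitting an image into the network input.
struct Letterbox
{
    float scale; // resize factor applied to the original image
    int w;       // resized width
    int h;       // resized height
    int wpad;    // total horizontal padding to reach a multiple of max_stride
    int hpad;    // total vertical padding to reach a multiple of max_stride
};

Letterbox compute_letterbox(int width, int height, int target_size = 320, int max_stride = 64)
{
    assert(width > 0 && height > 0);

    Letterbox lb;
    if (width > height)
    {
        lb.scale = (float)target_size / width;
        lb.w = target_size;
        lb.h = (int)(height * lb.scale);
    }
    else
    {
        lb.scale = (float)target_size / height;
        lb.h = target_size;
        lb.w = (int)(width * lb.scale);
    }

    // round each side up to the next multiple of max_stride
    lb.wpad = (lb.w + max_stride - 1) / max_stride * max_stride - lb.w;
    lb.hpad = (lb.h + max_stride - 1) / max_stride * max_stride - lb.h;
    return lb;
}

// Map coordinates in the padded network input back to the original image.
// Half of the padding sits before the image, so it is subtracted first.
float to_original_x(float x, const Letterbox& lb)
{
    return (x - lb.wpad / 2) / lb.scale;
}

float to_original_y(float y, const Letterbox& lb)
{
    return (y - lb.hpad / 2) / lb.scale;
}
```

For a 640x480 camera frame this gives a 320x240 resized image with 16 rows of vertical padding, so a detection at y = 128 in the network input maps back to y = 240 in the original frame.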

III. Build environment setup

The test environment is macOS with Google Chrome. First, install Emscripten, which is used to compile the C++ code.

1. Install Emscripten

git clone https://github.com/emscripten-core/emsdk.git

cd emsdk

./emsdk install 2.0.8

./emsdk activate 2.0.8

source ./emsdk_env.sh

2. Download the ncnn library

wget https://github.com/Tencent/ncnn/releases/download/20220216/ncnn-20220216-webassembly.zip

unzip ncnn-20220216-webassembly.zip

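IV. Project

1. Project resources

The project uses a YOLOv5 model. The original model is in ONNX format and must be converted to an ncnn model with the ncnn tools. For model training and conversion, see the ncnn and YOLOv5 GitHub repositories.

2. Backend code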
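The backend listing below decodes candidate boxes and then removes overlapping ones with non-maximum suppression (NMS) based on intersection over union (IoU). As orientation, here is a minimal standalone sketch of that idea with no ncnn or OpenCV dependency. The `Box` struct and the `iou` and `nms` names are hypothetical, and the sketch sorts with `std::sort` rather than the hand-written quicksort used in the listing.

```cpp
#include <algorithm>
#include <vector>

struct Box
{
    float x, y, w, h; // top-left corner plus size
    float score;      // detection confidence
};

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b)
{
    float ix = std::max(0.f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    float iy = std::max(0.f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    float inter = ix * iy;
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Sorts boxes by descending score, then greedily keeps a box only if it does
// not overlap an already kept box by more than `threshold`.
// Returns indices into the (now sorted) `boxes` vector.
std::vector<int> nms(std::vector<Box>& boxes, float threshold)
{
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });

    std::vector<int> picked;
    for (int i = 0; i < (int)boxes.size(); i++)
    {
        bool keep = true;
        for (int j : picked)
        {
            if (iou(boxes[i], boxes[j]) > threshold)
            {
                keep = false;
                break;
            }
        }
        if (keep)
            picked.push_back(i);
    }
    return picked;
}
```

With a threshold of 0.5, two 10x10 boxes offset by one pixel (IoU of roughly 0.68) collapse to the higher-scoring one, while a distant box survives.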

yolov5.h

```cpp

#ifndef YOLOV5_H

#define YOLOV5_H

#include <vector>

#include <net.h>

#include <simpleocv.h>

struct Object

{

cv::Rect_<float> rect;

int label;

float prob;

};

class YOLOv5

{

public:

YOLOv5();

int load();

int detect(const cv::Mat& rgba, std::vector<Object>& objects, float prob_threshold = 0.4f, float nms_threshold = 0.5f);

int draw(cv::Mat& rgba, const std::vector<Object>& objects);

private:

ncnn::Net yolov5;

};

#endif // YOLOV5_H

```

yolov5.cpp

```cpp

#include "yolov5.h"

#include <float.h>

#include <math.h>

#include <stdio.h>

#include <algorithm>

#include <cpu.h>

#include <simpleocv.h>

static inline float intersection_area(const Object& a, const Object& b)

{

cv::Rect_<float> inter = a.rect & b.rect;

return inter.area();

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects, int left, int right)

{

int i = left;

int j = right;

float p = faceobjects[(left + right) / 2].prob;

while (i <= j)

{

while (faceobjects[i].prob > p)

i++;

while (faceobjects[j].prob < p)

j--;

if (i <= j)

{

// swap

std::swap(faceobjects[i], faceobjects[j]);

i++;

j--;

}

}

// #pragma omp parallel sections

{

// #pragma omp section

{

if (left < j) qsort_descent_inplace(faceobjects, left, j);

}

// #pragma omp section

{

if (i < right) qsort_descent_inplace(faceobjects, i, right);

}

}

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects)

{

if (faceobjects.empty())

return;

qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);

}

static void nms_sorted_bboxes(const std::vector<Object>& faceobjects, std::vector<int>& picked, float nms_threshold)

{

picked.clear();

const int n = faceobjects.size();

std::vector<float> areas(n);

for (int i = 0; i < n; i++)

{

areas[i] = faceobjects[i].rect.width * faceobjects[i].rect.height;

}

for (int i = 0; i < n; i++)

{

const Object& a = faceobjects[i];

int keep = 1;

for (int j = 0; j < (int)picked.size(); j++)

{

const Object& b = faceobjects[picked[j]];

// intersection over union

float inter_area = intersection_area(a, b);

float union_area = areas[i] + areas[picked[j]] - inter_area;

// float IoU = inter_area / union_area

if (inter_area / union_area > nms_threshold)

keep = 0;

}

if (keep)

picked.push_back(i);

}

}

static inline float sigmoid(float x)

{

return static_cast<float>(1.f / (1.f + exp(-x)));

}

static void generate_proposals(const ncnn::Mat& anchors, int stride, const ncnn::Mat& in_pad, const ncnn::Mat& feat_blob, float prob_threshold, std::vector<Object>& objects)

{

const int num_grid_x = feat_blob.w;

const int num_grid_y = feat_blob.h;

const int num_anchors = anchors.w / 2;

const int num_class = 80;

for (int q = 0; q < num_anchors; q++)

{

const float anchor_w = anchors[q * 2];

const float anchor_h = anchors[q * 2 + 1];

for (int i = 0; i < num_grid_y; i++)

{

for (int j = 0; j < num_grid_x; j++)

{

// find class index with max class score

int class_index = 0;

float class_score = -FLT_MAX;

for (int k = 0; k < num_class; k++)

{

float score = feat_blob.channel(q * 85 + 5 + k).row(i)[j];

if (score > class_score)

{

class_index = k;

class_score = score;

}

}

float box_score = feat_blob.channel(q * 85 + 4).row(i)[j];

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold)

{

// yolov5/models/yolo.py Detect forward

// y = x[i].sigmoid()

// y[..., 0:2] = (y[..., 0:2] * 2. - 0.5 + self.grid[i].to(x[i].device)) * self.stride[i] # xy

// y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh

float dx = sigmoid(feat_blob.channel(q * 85 + 0).row(i)[j]);

float dy = sigmoid(feat_blob.channel(q * 85 + 1).row(i)[j]);

float dw = sigmoid(feat_blob.channel(q * 85 + 2).row(i)[j]);

float dh = sigmoid(feat_blob.channel(q * 85 + 3).row(i)[j]);

float pb_cx = (dx * 2.f - 0.5f + j) * stride;

float pb_cy = (dy * 2.f - 0.5f + i) * stride;

float pb_w = pow(dw * 2.f, 2) * anchor_w;

float pb_h = pow(dh * 2.f, 2) * anchor_h;

float x0 = pb_cx - pb_w * 0.5f;

float y0 = pb_cy - pb_h * 0.5f;

float x1 = pb_cx + pb_w * 0.5f;

float y1 = pb_cy + pb_h * 0.5f;

Object obj;

obj.rect.x = x0;

obj.rect.y = y0;

obj.rect.width = x1 - x0;

obj.rect.height = y1 - y0;

obj.label = class_index;

obj.prob = confidence;

objects.push_back(obj);

}

}

}

}

}

YOLOv5::YOLOv5()

{

}

int YOLOv5::load()

{

yolov5.clear();

ncnn::set_cpu_powersave(2);

ncnn::set_omp_num_threads(ncnn::get_big_cpu_count());

yolov5.opt.num_threads = ncnn::get_big_cpu_count();

// both loaders return 0 on success

if (yolov5.load_param("yolov5n.ncnn.param") != 0)

return -1;

if (yolov5.load_model("yolov5n.ncnn.bin") != 0)

return -1;

return 0;

}

int YOLOv5::detect(const cv::Mat& rgba, std::vector<Object>& objects, float prob_threshold, float nms_threshold)

{

int width = rgba.cols;

int height = rgba.rows;

const int target_size = 320;

const int max_stride = 64;

// letterbox: scale the longer side to target_size, keeping the aspect ratio

int w = width;

int h = height;

float scale = 1.f;

if (w > h)

{

scale = (float)target_size / w;

w = target_size;

h = h * scale;

}

else

{

scale = (float)target_size / h;

h = target_size;

w = w * scale;

}

ncnn::Mat in = ncnn::Mat::from_pixels_resize(rgba.data, ncnn::Mat::PIXEL_RGBA2RGB, width, height, w, h);

// pad width and height up to a multiple of max_stride

int wpad = (w + max_stride - 1) / max_stride * max_stride - w;

int hpad = (h + max_stride - 1) / max_stride * max_stride - h;

ncnn::Mat in_pad;

ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);

const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};

in_pad.substract_mean_normalize(0, norm_vals);

ncnn::Extractor ex = yolov5.create_extractor();

ex.input("in0", in_pad);

std::vector<Object> proposals;

// anchor setting from yolov5/models/yolov5s.yaml

// stride 8

{

ncnn::Mat out;

ex.extract("out0", out);

ncnn::Mat anchors(6);

anchors[0] = 10.f;

anchors[1] = 13.f;

anchors[2] = 16.f;

anchors[3] = 30.f;

anchors[4] = 33.f;

anchors[5] = 23.f;

std::vector<Object> objects8;

generate_proposals(anchors, 8, in_pad, out, prob_threshold, objects8);

proposals.insert(proposals.end(), objects8.begin(), objects8.end());

}

// stride 16

{

ncnn::Mat out;

ex.extract("out1", out);

ncnn::Mat anchors(6);

anchors[0] = 30.f;

anchors[1] = 61.f;

anchors[2] = 62.f;

anchors[3] = 45.f;

anchors[4] = 59.f;

anchors[5] = 119.f;

std::vector<Object> objects16;

generate_proposals(anchors, 16, in_pad, out, prob_threshold, objects16);

proposals.insert(proposals.end(), objects16.begin(), objects16.end());

}

// stride 32

{

ncnn::Mat out;

ex.extract("out2", out);

ncnn::Mat anchors(6);

anchors[0] = 116.f;

anchors[1] = 90.f;

anchors[2] = 156.f;

anchors[3] = 198.f;

anchors[4] = 373.f;

anchors[5] = 326.f;

std::vector<Object> objects32;

generate_proposals(anchors, 32, in_pad, out, prob_threshold, objects32);

proposals.insert(proposals.end(), objects32.begin(), objects32.end());

}

// sort all proposals by score from highest to lowest

qsort_descent_inplace(proposals);

// apply nms with nms_threshold

std::vector<int> picked;

nms_sorted_bboxes(proposals, picked, nms_threshold);

int count = picked.size();

objects.resize(count);

for (int i = 0; i < count; i++)

{

objects[i] = proposals[picked[i]];

// adjust offset to original unpadded

float x0 = (objects[i].rect.x - (wpad / 2)) / scale;

float y0 = (objects[i].rect.y - (hpad / 2)) / scale;

float x1 = (objects[i].rect.x + objects[i].rect.width - (wpad / 2)) / scale;

float y1 = (objects[i].rect.y + objects[i].rect.height - (hpad / 2)) / scale;

// clip

x0 = std::max(std::min(x0, (float)(width - 1)), 0.f);

y0 = std::max(std::min(y0, (float)(height - 1)), 0.f);

x1 = std::max(std::min(x1, (float)(width - 1)), 0.f);

y1 = std::max(std::min(y1, (float)(height - 1)), 0.f);

objects[i].rect.x = x0;

objects[i].rect.y = y0;

objects[i].rect.width = x1 - x0;

objects[i].rect.height = y1 - y0;

}

// sort objects by area

struct

{

bool operator()(const Object& a, const Object& b) const

{

return a.rect.area() > b.rect.area();

}

} objects_area_greater;

std::sort(objects.begin(), objects.end(), objects_area_greater);

return 0;

}

int YOLOv5::draw(cv::Mat& rgba, const std::vector<Object>& objects)

{

static const char* class_names[] = {

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush"

};

static const unsigned char colors[19][3] = {

{ 54, 67, 244},

{ 99, 30, 233},

{176, 39, 156},

{183, 58, 103},

{181, 81, 63},

{243, 150, 33},

{244, 169, 3},

{212, 188, 0},

{136, 150, 0},

{ 80, 175, 76},

{ 74, 195, 139},

{ 57, 220, 205},

{ 59, 235, 255},

{ 7, 193, 255},

{ 0, 152, 255},

{ 34, 87, 255},

{ 72, 85, 121},

{158, 158, 158},

{139, 125, 96}

};

int color_index = 0;

for (size_t i = 0; i < objects.size(); i++)

{

const Object& obj = objects[i];

// fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

// obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

const unsigned char* color = colors[color_index % 19];

color_index++;

cv::Scalar cc(color[0], color[1], color[2], 255);

cv::rectangle(rgba, cv::Rect(obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height), cc, 2);

char text[256];

sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > rgba.cols)

x = rgba.cols - label_size.width;

cv::rectangle(rgba, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)), cc, -1);

cv::Scalar textcc = (color[0] + color[1] + color[2] >= 381) ? cv::Scalar(0, 0, 0, 255) : cv::Scalar(255, 255, 255, 255);

cv::putText(rgba, text, cv::Point(x, y + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.5, textcc, 1);

}

return 0;

}

```

Result:

4. Interaction code (yolov5ncnn.cpp)
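The listing below overlays a frame rate that is averaged over the last 10 frames. The sketch here shows the same moving-average idea in isolation. It is not the listing's implementation: it uses a ring buffer instead of shifting an array, and the `MovingAverage` class and `fps_from_ms` helper are hypothetical names introduced for illustration.

```cpp
#include <cstddef>

// Moving average over the most recent N samples, stored in a ring buffer.
template<std::size_t N>
class MovingAverage
{
public:
    // Store a sample, overwriting the oldest one once N samples are held.
    void push(float value)
    {
        history_[next_] = value;
        next_ = (next_ + 1) % N;
        if (count_ < N)
            count_++;
    }

    // True once N samples have been collected.
    bool full() const
    {
        return count_ == N;
    }

    // Average of the samples collected so far (0 if there are none).
    float average() const
    {
        if (count_ == 0)
            return 0.f;

        float sum = 0.f;
        for (std::size_t i = 0; i < count_; i++)
            sum += history_[i];
        return sum / count_;
    }

private:
    float history_[N] = {};
    std::size_t next_ = 0;
    std::size_t count_ = 0;
};

// Instantaneous frame rate from two timestamps in milliseconds.
inline float fps_from_ms(double t0_ms, double t1_ms)
{
    return (float)(1000.0 / (t1_ms - t0_ms));
}
```

A caller would push `fps_from_ms(t0, t1)` once per frame and draw `average()` only after `full()` returns true, which mirrors how the listing shows nothing until its history is filled.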

```cpp

#include <benchmark.h>

#include <simpleocv.h>

#include "yolov5.h"

static int draw_fps(cv::Mat& rgba)

{

// resolve moving average

float avg_fps = 0.f;

{

static double t0 = 0.f;

static float fps_history[10] = {0.f};

double t1 = ncnn::get_current_time();

if (t0 == 0.f)

{

t0 = t1;

return 0;

}

float fps = 1000.f / (t1 - t0);

t0 = t1;

for (int i = 9; i >= 1; i--)

{

fps_history[i] = fps_history[i - 1];

}

fps_history[0] = fps;

if (fps_history[9] == 0.f)

{

return 0;

}

for (int i = 0; i < 10; i++)

{

avg_fps += fps_history[i];

}

avg_fps /= 10.f;

}

char text[32];

sprintf(text, "FPS=%.2f", avg_fps);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int y = 0;

int x = rgba.cols - label_size.width;

cv::rectangle(rgba, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255, 255), -1);

cv::putText(rgba, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0, 255));

return 0;

}

static YOLOv5* g_yolov5 = 0;

static void on_image_render(cv::Mat& rgba)

{

if (!g_yolov5)

{

g_yolov5 = new YOLOv5;

g_yolov5->load();

}

std::vector<Object> objects;

g_yolov5->detect(rgba, objects);

g_yolov5->draw(rgba, objects);

draw_fps(rgba);

}

#ifdef __EMSCRIPTEN_PTHREADS__

static const unsigned char* rgba_data = 0;

static int w = 0;

static int h = 0;

static ncnn::Mutex lock;

static ncnn::ConditionVariable condition;

static ncnn::Mutex finish_lock;

static ncnn::ConditionVariable finish_condition;

static void worker()

{

while (1)

{

lock.lock();

while (rgba_data == 0)

{

condition.wait(lock);

}

cv::Mat rgba(h, w, CV_8UC4, (void*)rgba_data);

on_image_render(rgba);

rgba_data = 0;

lock.unlock();

finish_lock.lock();

finish_condition.signal();

finish_lock.unlock();

}

}

#include <thread>

static std::thread t(worker);

extern "C" {

void yolov5_ncnn(unsigned char* _rgba_data, int _w, int _h)

{

lock.lock();

while (rgba_data != 0)

{

condition.wait(lock);

}

rgba_data = _rgba_data;

w = _w;

h = _h;

lock.unlock();

condition.signal();

// wait for finished

finish_lock.lock();

while (rgba_data != 0)

{

finish_condition.wait(finish_lock);

}

finish_lock.unlock();

}

}

#else // __EMSCRIPTEN_PTHREADS__

extern "C" {

void yolov5_ncnn(unsigned char* rgba_data, int w, int h)

{

cv::Mat rgba(h, w, CV_8UC4, (void*)rgba_data);

on_image_render(rgba);

}

}

#endif // __EMSCRIPTEN_PTHREADS__

```

5. Frontend code

index.html

```html

<html lang="en">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width" />

<title>ncnn webassembly yolov5</title>

<style>

video {

/* position: absolute; */

/* visibility: hidden; */

}

canvas {

border: 1px solid black;

}

</style>

</head>

<body>

<div>

<h1>ncnn webassembly yolov5</h1>

<div>

<button disabled id="switch-camera-btn" style="height:48px">Switch Camera</button>

</div>

<div>

<canvas id="canvas" width="640"></canvas>

</div>

<video id="video" playsinline autoplay></video>

</div>

<script src="wasmFeatureDetect.js"></script>

<script type='text/javascript'>

var Module = {};

var has_simd;

var has_threads;

var yolov5_module_name;

var wasmModuleLoaded = false;

var wasmModuleLoadedCallbacks = [];

Module.onRuntimeInitialized = function() {

wasmModuleLoaded = true;

for (var i = 0; i < wasmModuleLoadedCallbacks.length; i++) {

wasmModuleLoadedCallbacks[i]();

}

}

wasmFeatureDetect.simd().then(simdSupported => {

has_simd = simdSupported;

wasmFeatureDetect.threads().then(threadsSupported => {

has_threads = threadsSupported;

if (has_simd)

{

if (has_threads)

{

yolov5_module_name = 'yolov5-simd-threads';

}

else

{

yolov5_module_name = 'yolov5-simd';

}

}

else

{

if (has_threads)

{

yolov5_module_name = 'yolov5-threads';

}

else

{

yolov5_module_name = 'yolov5-basic';

}

}

console.log('load ' + yolov5_module_name);

var yolov5wasm = yolov5_module_name + '.wasm';

var yolov5js = yolov5_module_name + '.js';

fetch(yolov5wasm)

.then(response => response.arrayBuffer())

.then(buffer => {

Module.wasmBinary = buffer;

var script = document.createElement('script');

script.src = yolov5js;

script.onload = function() {

console.log('Emscripten boilerplate loaded.');

}

document.body.appendChild(script);

});

});

});

var shouldFaceUser = true;

var stream = null;

var w = 640;

var h = 480;

var dst = null;

var resultarray = null;

var resultbuffer = null;

window.addEventListener('DOMContentLoaded', function() {

var isStreaming = false;

switchcamerabtn = document.getElementById('switch-camera-btn');

video = document.getElementById('video');

canvas = document.getElementById('canvas');

ctx = canvas.getContext('2d');

// Wait until the video stream can play

video.addEventListener('canplay', function(e) {

if (!isStreaming) {

// videoWidth isn't always set correctly in all browsers

if (video.videoWidth > 0) h = video.videoHeight / (video.videoWidth / w);

canvas.setAttribute('width', w);

canvas.setAttribute('height', h);

isStreaming = true;

}

}, false);

// Wait for the video to start to play

video.addEventListener('play', function() {

//Setup image memory

var id = ctx.getImageData(0, 0, canvas.width, canvas.height);

var d = id.data;

if (wasmModuleLoaded) {

mallocAndCallSFilter();

} else {

wasmModuleLoadedCallbacks.push(mallocAndCallSFilter);

}

function mallocAndCallSFilter() {

if (dst != null)

{

_free(dst);

dst = null;

}

dst = _malloc(d.length);

//console.log("What " + d.length);

sFilter();

}

});

// check whether we can use facingMode

var supports = navigator.mediaDevices.getSupportedConstraints();

if (supports['facingMode'] === true) {

switchcamerabtn.disabled = false;

}

switchcamerabtn.addEventListener('click', function() {

if (stream == null)

return

stream.getTracks().forEach(t => {

t.stop();

});

shouldFaceUser = !shouldFaceUser;

capture();

});

capture();

});

function capture() {

var constraints = { audio: false, video: { width: 640, height: 480, facingMode: shouldFaceUser ? 'user' : 'environment' } };

navigator.mediaDevices.getUserMedia(constraints)

.then(function(mediaStream) {

var video = document.querySelector('video');

stream = mediaStream;

video.srcObject = mediaStream;

video.onloadedmetadata = function(e) {

video.play();

};

})

.catch(function(err) {

console.log(err.message);

});

}

function ncnn_yolov5() {

var canvas = document.getElementById('canvas');

var ctx = canvas.getContext('2d');

var imageData = ctx.getImageData(0, 0, canvas.width, canvas.height);

var data = imageData.data;

HEAPU8.set(data, dst);

_yolov5_ncnn(dst, canvas.width, canvas.height);

var result = HEAPU8.subarray(dst, dst + data.length);

imageData.data.set(result);

ctx.putImageData(imageData, 0, 0);

}

//Request Animation Frame function

var sFilter = function() {

if (video.paused || video.ended) return;

ctx.fillRect(0, 0, w, h);

ctx.drawImage(video, 0, 0, w, h);

ncnn_yolov5();

window.requestAnimationFrame(sFilter);

}

</script>

</body>

</html>

```

6. CMake file (CMakeLists.txt)

cmake_minimum_required(VERSION 3.10)

project(yolov5)

set(CMAKE_BUILD_TYPE release)

if(NOT WASM_FEATURE)

message(FATAL_ERROR "You must pass cmake option -DWASM_FEATURE and possible values are basic, simd, threads and simd-threads")

endif()

set(ncnn_DIR "${CMAKE_CURRENT_SOURCE_DIR}/ncnn/${WASM_FEATURE}/lib/cmake/ncnn")

find_package(ncnn REQUIRED)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -s FORCE_FILESYSTEM=1 -s INITIAL_MEMORY=256MB -s EXIT_RUNTIME=1")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -s FORCE_FILESYSTEM=1 -s INITIAL_MEMORY=256MB -s EXIT_RUNTIME=1")

set(CMAKE_EXECUTBLE_LINKER_FLAGS "${CMAKE_EXECUTBLE_LINKER_FLAGS} -s FORCE_FILESYSTEM=1 -s INITIAL_MEMORY=256MB -s EXIT_RUNTIME=1")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -sEXPORTED_FUNCTIONS=['_yolov5_ncnn'] --preload-file ${CMAKE_CURRENT_SOURCE_DIR}/assets@.")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -sEXPORTED_FUNCTIONS=['_yolov5_ncnn'] --preload-file ${CMAKE_CURRENT_SOURCE_DIR}/assets@.")

set(CMAKE_EXECUTBLE_LINKER_FLAGS "${CMAKE_EXECUTBLE_LINKER_FLAGS} -sEXPORTED_FUNCTIONS=['_yolov5_ncnn'] --preload-file ${CMAKE_CURRENT_SOURCE_DIR}/assets@.")

if(${WASM_FEATURE} MATCHES "threads")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fopenmp -pthread -s USE_PTHREADS=1 -s PTHREAD_POOL_SIZE=4")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fopenmp -pthread -s USE_PTHREADS=1 -s PTHREAD_POOL_SIZE=4")

set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} -fopenmp -pthread -s USE_PTHREADS=1 -s PTHREAD_POOL_SIZE=4")

endif()

add_executable(yolov5-${WASM_FEATURE} yolov5.cpp yolov5ncnn.cpp)

target_link_libraries(yolov5-${WASM_FEATURE} ncnn)

V. Build the project

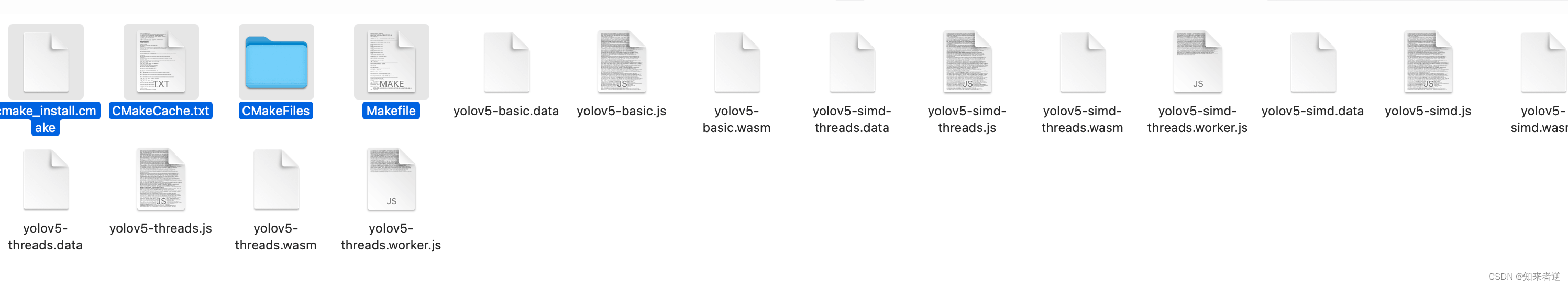

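1. Project files

The assets directory holds the model files, and the ncnn directory contains the ncnn dependency library downloaded earlier.

2. Build

Activate the emsdk environment before building.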

source emsdk/emsdk_env.sh

Create a build directory under the project directory

mkdir build

Build the project once for each WASM_FEATURE value (basic, simd, threads, simd-threads)

cd build

cmake -DCMAKE_TOOLCHAIN_FILE=$EMSDK/upstream/emscripten/cmake/Modules/Platform/Emscripten.cmake -DWASM_FEATURE=basic ..

make -j4

cmake -DCMAKE_TOOLCHAIN_FILE=$EMSDK/upstream/emscripten/cmake/Modules/Platform/Emscripten.cmake -DWASM_FEATURE=simd ..

make -j4

cmake -DCMAKE_TOOLCHAIN_FILE=$EMSDK/upstream/emscripten/cmake/Modules/Platform/Emscripten.cmake -DWASM_FEATURE=threads ..

make -j4

cmake -DCMAKE_TOOLCHAIN_FILE=$EMSDK/upstream/emscripten/cmake/Modules/Platform/Emscripten.cmake -DWASM_FEATURE=simd-threads ..

make -j4

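3. Start the server

Create a server directory and add a small Python web server to it for testing. The page accesses the camera through getUserMedia, which browsers only allow in a secure context, so the server serves HTTPS with a self-signed certificate.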

mkdir server

cd server

vim server.py

server.py

from http.server import HTTPServer, SimpleHTTPRequestHandler

import ssl, os

os.system("openssl req -nodes -x509 -newkey rsa:4096 -keyout key.pem -out cert.pem -days 365 -subj '/CN=mylocalhost'")

port = 8888

httpd = HTTPServer(('0.0.0.0', port), SimpleHTTPRequestHandler)

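# ssl.wrap_socket() was removed in Python 3.12; use an SSLContext instead

context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)

context.load_cert_chain(certfile="cert.pem", keyfile="key.pem")

httpd.socket = context.wrap_socket(httpd.socket, server_side=True)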

print(f"Server running on https://0.0.0.0:{port}")

httpd.serve_forever()

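Move everything in the build directory except the make-related files into the server directory.

Also move index.html and wasmFeatureDetect.js from the project directory into the server directory.

Run server.py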

python3 server.py

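Open the browser and enter the server address (HTTPS, port 8888). If a certificate warning appears, click on a blank area of Chrome's "Your connection is not private" page and type "thisisunsafe" (nothing is shown while you type); the page then continues loading automatically.

If it was typed correctly, the detection page opens.

That completes the demo.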
