Installing CUDA 10

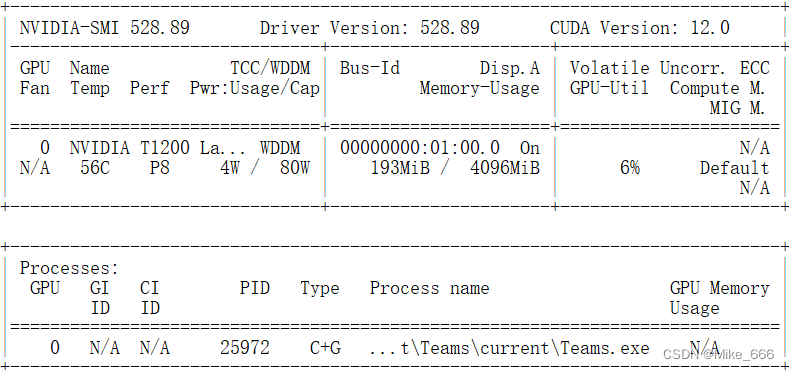

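1. I need the GPU build of tensorflow 1.15.5, so I wanted to install CUDA 10 for acceleration. First, I ran nvidia-smi to see which CUDA version the driver on this machine reports.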

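2. The CUDA 10 installer I downloaded: cuda_10.0.130_411.31_win10.exe

The cuDNN package I downloaded: cudnn-10.0-windows10-x64-v7.6.4.38.zip

I then installed both by following the blog post below.

"win10系统CUDA10.0安装教程(for tensorflow2.0)" (a CUDA 10.0 installation tutorial for Windows 10, CSDN blog)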
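After an install like this, a quick check of whether the runtime DLLs are reachable through PATH can save some guessing. The sketch below is my own addition, not part of the referenced tutorial. The DLL names are what I would expect for CUDA 10.0 with cuDNN 7 on Windows, so treat that list as an assumption and adjust it to your setup.

```python
import os

# Assumed DLL names for CUDA 10.0 + cuDNN 7 on Windows; adjust for your setup.
REQUIRED_DLLS = [
    "cudart64_100.dll",
    "cublas64_100.dll",
    "cufft64_100.dll",
    "curand64_100.dll",
    "cusolver64_100.dll",
    "cusparse64_100.dll",
    "cudnn64_7.dll",
]


def find_dlls(dll_names, path_value, sep=os.pathsep):
    """Map each DLL name to the first PATH directory containing it, or None."""
    dirs = [d for d in path_value.split(sep) if d]
    found = {}
    for name in dll_names:
        found[name] = next(
            (d for d in dirs if os.path.isfile(os.path.join(d, name))), None
        )
    return found


if __name__ == "__main__":
    results = find_dlls(REQUIRED_DLLS, os.environ.get("PATH", ""))
    for name, where in results.items():
        print(f"{name}: {where or 'NOT FOUND on PATH'}")
```

Any DLL reported as not found usually points to a missing environment variable or a cuDNN file that was not copied into the CUDA directory.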

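3. After the installation, nvidia-smi still showed the same CUDA version as before.

When I started the training program, GPU acceleration still did not kick in.

A search turned up the following explanation:

If the CUDA 12.0 driver is already installed on your system, nvidia-smi may still show CUDA 12.0 even after you install the CUDA 10.0 runtime libraries. In that case, you may need to update your NVIDIA driver so that the correct CUDA version is displayed. You can download and install the latest driver for your GPU from the official NVIDIA website.

Following this advice, I set out to uninstall the CUDA 12.0 driver. Uninstalling it manually failed, so I installed Tencent PC Manager (腾讯管家) and used it to remove the driver, which worked.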

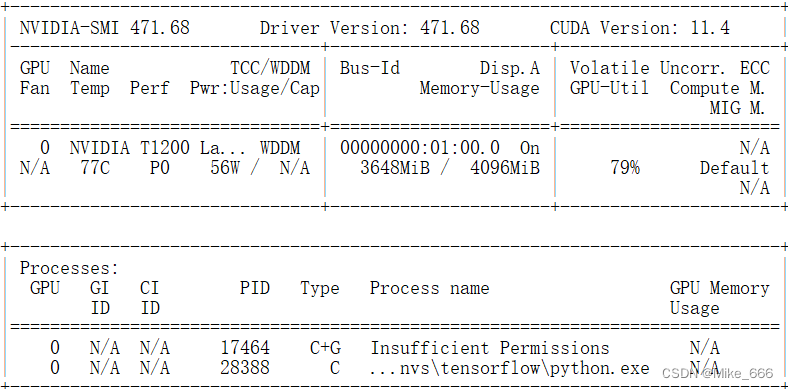

4. Next I downloaded a driver suitable for the NVIDIA T1200 GPU. This is the one I found:

471.68-desktop-win10-win11-64bit-international-dch-whql.exe

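I double-clicked the installer and the installation succeeded. After that, the training program ran with GPU training enabled.

nvidia-smi now shows CUDA Version: 11.4, but the CUDA 10 acceleration libraries still load. That field reports the highest CUDA version the driver supports, not the version of the installed toolkit.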
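To keep the two version numbers apart, here is a small sketch of my own that reads both: the driver-side value from nvidia-smi and the toolkit release from `nvcc --version`. It assumes both tools are on PATH and that their output contains the usual "CUDA Version: X.Y" and "release X.Y" text. The helper names are made up for this example.

```python
import re
import shutil
import subprocess


def driver_cuda_version(smi_output):
    """Extract the 'CUDA Version' field from nvidia-smi output (driver maximum)."""
    match = re.search(r"CUDA Version:\s*([\d.]+)", smi_output)
    return match.group(1) if match else None


def toolkit_cuda_version(nvcc_output):
    """Extract the toolkit release from `nvcc --version` output."""
    match = re.search(r"release\s+([\d.]+)", nvcc_output)
    return match.group(1) if match else None


def run(cmd):
    """Run a command and return its stdout, or None if the tool is not on PATH."""
    if shutil.which(cmd[0]) is None:
        return None
    return subprocess.run(cmd, capture_output=True, text=True).stdout


if __name__ == "__main__":
    smi = run(["nvidia-smi"])
    nvcc = run(["nvcc", "--version"])
    print("driver supports up to CUDA:",
          driver_cuda_version(smi) if smi else "nvidia-smi not found")
    print("installed toolkit (nvcc):",
          toolkit_cuda_version(nvcc) if nvcc else "nvcc not found")
```

If the first number is at least as high as the second, the driver should be new enough for the installed toolkit.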

2024.04.07

--------------------------------------------------------------------------------------------

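Uninstalling CUDA 10

After two days of use, I concluded that CUDA 10.2 is more widely used and more stable. I downloaded CUDA 10.2 and tried to install it alongside the existing CUDA 10, but the installation failed. My guess was that CUDA 10 had to be uninstalled before CUDA 10.2 could be installed.

Steps to uninstall CUDA 10:

1. Reverse the installation steps above: delete the environment variables and remove the cuDNN files.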

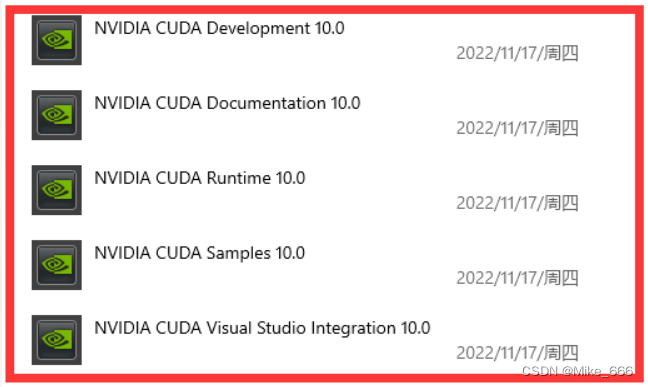

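2. Following the blog post below, uninstall the five components it lists from Control Panel > Programs and Features. If an uninstall fails the first time, restart the computer and try again.

"Win10卸载cuda10.0,重装cuda11.0" (uninstalling CUDA 10.0 and reinstalling CUDA 11.0 on Windows 10, CSDN blog)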

3. Delete the environment variables

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\cudnn\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\extras\CUPTI\lib64

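How to delete the environment variables:

"win11 系统环境变量下方的“新建 编辑 删除”是灰色的,无法点击" (the New/Edit/Delete buttons under System variables are greyed out on Windows 11, CSDN blog)

The fix is to open the dialog with administrator rights by going through Control Panel > System and Security > System > Advanced system settings > Environment Variables.

4. After the uninstall finishes, restart the computer.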
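After the restart, it is worth checking whether any CUDA-related entries are still left in PATH. The sketch below is my own addition: it filters PATH by a few keywords (an assumption about how such directories are usually named) and flags entries whose directory no longer exists.

```python
import os


def cuda_path_entries(path_value, sep=";"):
    """Return PATH entries that look like CUDA/cuDNN/CUPTI directories."""
    keywords = ("cuda", "cudnn", "cupti")
    return [
        entry
        for entry in path_value.split(sep)
        if entry and any(k in entry.lower() for k in keywords)
    ]


if __name__ == "__main__":
    entries = cuda_path_entries(os.environ.get("PATH", ""), sep=os.pathsep)
    if not entries:
        print("No CUDA-related PATH entries found.")
    for entry in entries:
        status = "exists" if os.path.isdir(entry) else "missing (stale entry)"
        print(f"{entry}  [{status}]")
```

Entries marked as missing are leftovers that can be removed in the Environment Variables dialog.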

-------------------------------------------------------------------------

Installing CUDA 10.2

The CUDA 10.2 and cuDNN files I downloaded are:

cuda_10.2.89_441.22_win10.exe

cudnn-10.2-windows10-x64-v7.6.5.32.zip

Install CUDA 10.2 by following the same steps used for CUDA 10.
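To confirm which toolkit versions are registered after the reinstall, the sketch below (my own addition) reads the environment variables that the Windows CUDA installer normally creates, namely CUDA_PATH and one CUDA_PATH_V<major>_<minor> per installed version. I am assuming that naming scheme applies to your install, so an empty result does not prove the toolkit is missing.

```python
import os
import re


def installed_cuda_versions(env):
    """Return {version: path} built from CUDA_PATH_V<major>_<minor> variables."""
    versions = {}
    for key, value in env.items():
        match = re.fullmatch(r"CUDA_PATH_V(\d+)_(\d+)", key.upper())
        if match:
            versions[f"{match.group(1)}.{match.group(2)}"] = value
    return versions


if __name__ == "__main__":
    print("CUDA_PATH =", os.environ.get("CUDA_PATH"))
    for version, path in sorted(installed_cuda_versions(os.environ).items()):
        print(f"CUDA {version}: {path}")
```

After a clean switch I would expect only the 10.2 entry to remain, with CUDA_PATH pointing at the same directory.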

---------------------------------------------------------------------------

Installing MMSEG

References

a. Official installation guide:

Get started: Install and Run MMSeg — MMSegmentation 1.2.2 documentation

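b. Community installation guides:

"【OpenMMLab实践】01MMSegmentation官方教程实现过程记录(mmcv,mmsegmentation,torch)" (notes on working through the official MMSegmentation tutorial, CSDN blog)

"Pytorch 和CUDA版本对应关系" (PyTorch and CUDA version compatibility, Tencent Cloud Developer Community)

Installation steps:

1. Install PyTorch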

# CUDA 10.2

conda install pytorch==1.9.0 torchvision==0.10.0 torchaudio==0.9.0 cudatoolkit=10.2 -c pytorch

2. Test whether torch and the GPU are available

import torch

num_gpu = torch.cuda.device_count()

device = torch.device('cuda:0' if (torch.cuda.is_available() and num_gpu>0) else 'cpu')

print('Number of gpu:{}\nDevice:{}'.format(num_gpu,device))

torch.cuda.get_device_name(0)

Output:

Number of gpu:1

Device:cuda:0

3. Follow the official steps

Check whether mmseg was installed successfully

# Check Pytorch installation

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

# Check MMSegmentation installation

import mmseg

print(mmseg.__version__)

Output:

1.9.0 True

1.2.2

4. Running the inference check produced the following error (problem 1)

(openmmlab) C:\Users\gjmhs4\mmsegmentation>python demo/image_demo.py demo/demo.png configs/pspnet/pspnet_r50-d8_4xb2-40k_cityscapes-512x1024.py pspnet_r50-d8_512x1024_40k_cityscapes_20200605_003338-2966598c.pth --device cuda:0 --out-file result.jpg

Traceback (most recent call last):

File "demo/image_demo.py", line 6, in <module>

from mmseg.apis import inference_model, init_model, show_result_pyplot

File "c:\users\gjmhs4\mmsegmentation\mmseg\__init__.py", line 4, in <module>

import mmcv

File "C:\Users\gjmhs4\AppData\Local\conda\conda\envs\openmmlab\lib\site-packages\mmcv\__init__.py", line 4, in <module>

from .image import *

File "C:\Users\gjmhs4\AppData\Local\conda\conda\envs\openmmlab\lib\site-packages\mmcv\image\__init__.py", line 11, in <module>

from .photometric import (adjust_brightness, adjust_color, adjust_contrast,

File "C:\Users\gjmhs4\AppData\Local\conda\conda\envs\openmmlab\lib\site-packages\mmcv\image\photometric.py", line 8, in <module>

from PIL import Image, ImageEnhance

File "C:\Users\gjmhs4\AppData\Local\conda\conda\envs\openmmlab\lib\site-packages\PIL\Image.py", line 114, in <module>

from . import _imaging as core

ImportError: DLL load failed while importing _imaging: The specified module could not be found.

Solution: run

pip install pillow==9.0.0

This resolved problem 1.

Running the same command again:

python demo/image_demo.py demo/demo.png configs/pspnet/pspnet_r50-d8_4xb2-40k_cityscapes-512x1024.py pspnet_r50-d8_512x1024_40k_cityscapes_20200605_003338-2966598c.pth --device cuda:0 --out-file result.jpg

produced problem 2:

ModuleNotFoundError: No module named 'ftfy'

Solution: run

pip install ftfy

This resolved the problem, and the result.jpg image was produced.
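Both failures were import errors that only surfaced when the demo was launched. A small pre-flight check like the sketch below (my own addition, not part of the MMSegmentation docs) tries the imports up front and prints any that fail. The module list covers only the two that failed for me; extend it as needed.

```python
import importlib


def check_imports(module_names):
    """Try importing each module; map its name to None (ok) or the error text."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except Exception as exc:  # ImportError, including DLL load failures
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # PIL._imaging is Pillow's compiled core, the module that failed to load.
    for name, error in check_imports(["PIL._imaging", "ftfy"]).items():
        print(f"{name}: {error or 'ok'}")
```

Running it inside the openmmlab conda environment before the demo should show whether the Pillow and ftfy fixes took effect.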

20240410
