Building a Highly Available Kubernetes Cluster

1. Preparation

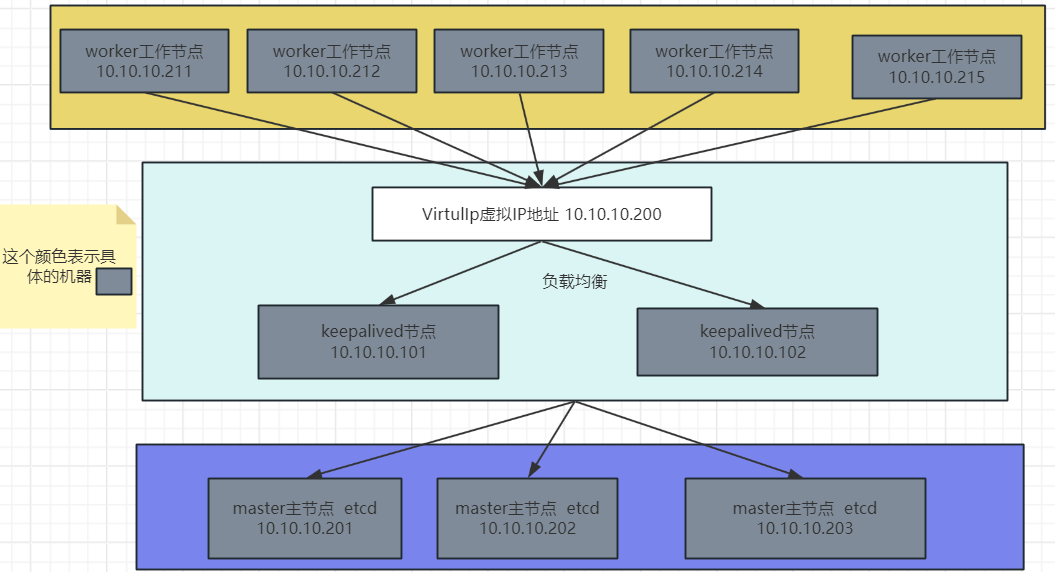

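This post documents how I built a highly available Kubernetes cluster, using KubeSphere to guide the installation. The lab uses 10 machines in total; their internal IP addresses and specs are listed in the table below: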

| IP address | Hostname | Role | Specs |
|---|---|---|---|
| 10.10.10.101 | keepalived1 | Keepalived & HAProxy | 2 cores, 2 GB RAM, 50 GB disk |
| 10.10.10.102 | keepalived2 | Keepalived & HAProxy | 2 cores, 2 GB RAM, 50 GB disk |
| 10.10.10.201 | k8s-master1 | Master node, etcd | 4 cores, 8 GB RAM, 100 GB disk |
| 10.10.10.202 | k8s-master2 | Master node, etcd | 4 cores, 8 GB RAM, 100 GB disk |
| 10.10.10.203 | k8s-master3 | Master node, etcd | 4 cores, 8 GB RAM, 100 GB disk |
| 10.10.10.211 | k8s-node1 | Worker node | 6 cores, 8 GB RAM, 60 GB disk |
| 10.10.10.212 | k8s-node2 | Worker node | 4 cores, 8 GB RAM, 50 GB disk |
| 10.10.10.213 | k8s-node3 | Worker node | 8 cores, 16 GB RAM, 100 GB disk |
| 10.10.10.214 | k8s-node4 | Worker node | 6 cores, 8 GB RAM, 60 GB disk |
| 10.10.10.215 | k8s-node5 | Worker node | 6 cores, 8 GB RAM, 70 GB disk |

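That gives 2 Keepalived nodes, 3 master nodes, and 5 worker nodes. In addition, an unused IP address, 10.10.10.200, is reserved as the virtual IP (VIP). In short: 10 machines running CentOS 7 plus one IP address that nothing else is using.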

Note: before you start building the cluster, stop the firewall on every machine and make sure the machines can reach each other over SSH:

systemctl disable firewalld

systemctl stop firewalld
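As a quick way to confirm the SSH reachability requirement, the sketch below probes TCP port 22 on every machine from the table. It assumes bash (for `/dev/tcp`) and the coreutils `timeout` command; the function names `cluster_ips` and `check_ssh_ports` are made up for this example, and an open port only shows that sshd answers, not that your credentials work.

```shell
# Print the ten machine IPs from the table above, one per line.
cluster_ips() {
  printf '10.10.10.%s\n' 101 102 201 202 203 211 212 213 214 215
}

# Read IPs on stdin and report whether TCP port 22 answers on each one.
# Uses bash's /dev/tcp pseudo-device, so no extra tools are required.
check_ssh_ports() {
  local ip
  while read -r ip; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/${ip}/22" 2>/dev/null; then
      echo "${ip} ssh-port-open"
    else
      echo "${ip} UNREACHABLE"
    fi
  done
}

# Usage (run from any machine in the lab):
#   cluster_ips | check_ssh_ports
```

Running it from each machine in turn gives a rough picture of whether every host can reach every other host.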

Run the following commands on every machine to synchronize the clock and set the time zone:

yum install -y ntpdate

ntpdate ntp.aliyun.com

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

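Use the following command to set the hostname on each machine; give each one an easy-to-remember name. I used the names from the table above:

# Set the hostname on each machine (use that machine's own name)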

hostnamectl set-hostname k8s-master1

Run the following commands on all master and worker nodes to disable swap and put SELinux into permissive mode:

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

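# Let iptables see bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

# modules-load.d only takes effect at boot, so load the module now as well
sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sudo sysctl --system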
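Before moving on, it can help to confirm that the swap and sysctl settings actually took effect on each node. The following is a minimal sketch, not part of the original procedure; the helper names `active_swap_count` and `check_node_prereqs` are hypothetical, and it assumes the standard `/proc/swaps` layout (one header line followed by one line per active swap device).

```shell
# Count active swap devices in a /proc/swaps-style file (the header line is skipped).
active_swap_count() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# Print OK/FAIL for each prerequisite configured above.
check_node_prereqs() {
  local key
  if [ "$(active_swap_count)" -eq 0 ]; then
    echo "swap: OK (disabled)"
  else
    echo "swap: FAIL (still enabled)"
  fi
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    if [ "$(sysctl -n "$key" 2>/dev/null)" = "1" ]; then
      echo "$key: OK"
    else
      echo "$key: FAIL"
    fi
  done
}

# Usage on a master or worker node:
#   check_node_prereqs
```

If a bridge key reports FAIL, the `br_netfilter` module is probably not loaded; the net.bridge.* keys only exist while it is.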

Use the following commands on all master and worker nodes to install Docker (the registry mirror is configured in a later step):

yum install -y yum-utils device-mapper-persistent-data lvm2 wget vim --skip-broken

yum install -y conntrack socat

# Add the Docker CE yum repository (Aliyun mirror)

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's/download.docker.com/mirrors.aliyun.com\/docker-ce/g' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

yum install -y docker-ce-20.10.10 docker-ce-cli-20.10.10 containerd.io-1.4.9

Start Docker on all master and worker nodes:

systemctl enable docker --now

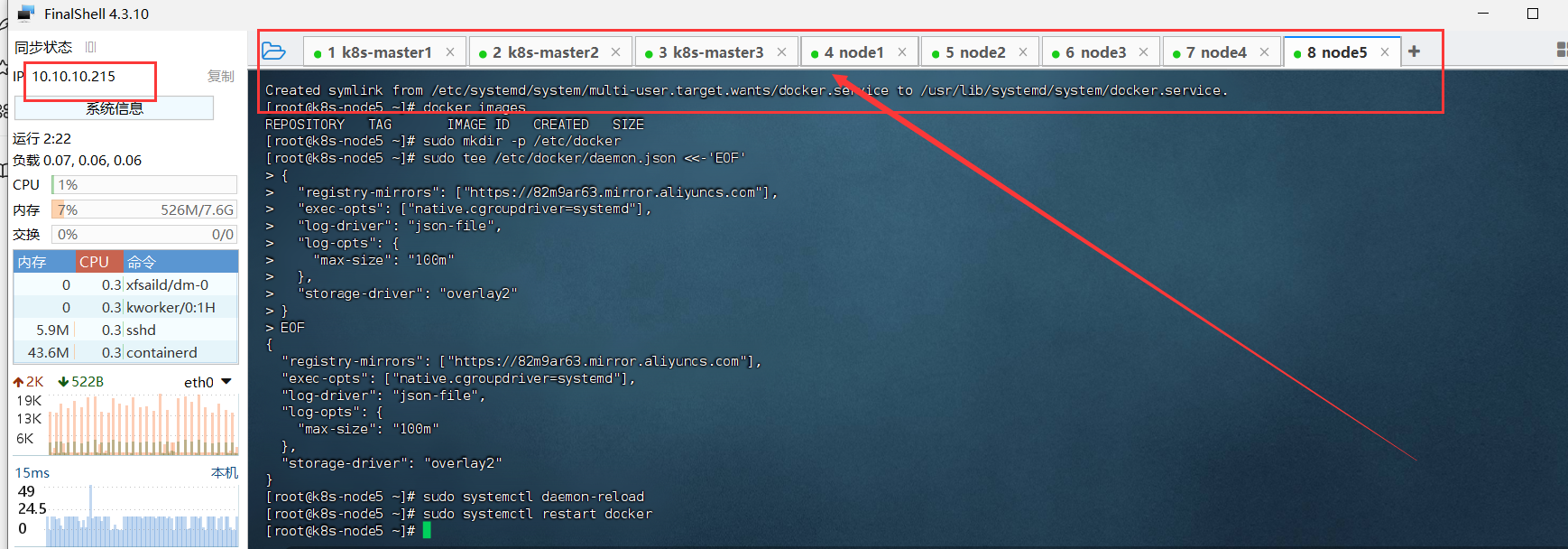

On all master and worker nodes, configure the Docker registry mirror. This configuration also sets the cgroup driver to systemd, a core setting for production use:

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
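After the restart, a quick check that Docker picked up the systemd cgroup driver can save debugging later. This is a small sketch; the helper name `cgroup_driver_ok` is invented for illustration, and the check relies on `docker info --format '{{.CgroupDriver}}'` printing the active driver.

```shell
# Succeed only if the reported cgroup driver matches the one set in daemon.json.
cgroup_driver_ok() {
  [ "$1" = "systemd" ]
}

# Usage on a node where Docker is running:
#   if cgroup_driver_ok "$(docker info --format '{{.CgroupDriver}}')"; then
#     echo "cgroup driver: OK"
#   else
#     echo "cgroup driver: unexpected value, re-check /etc/docker/daemon.json"
#   fi
```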

Configure the Kubernetes yum repository on all master and worker nodes:

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum makecache fast

2. Run the following command on keepalived1 and keepalived2 to install Keepalived and HAProxy:

yum install keepalived haproxy psmisc -y

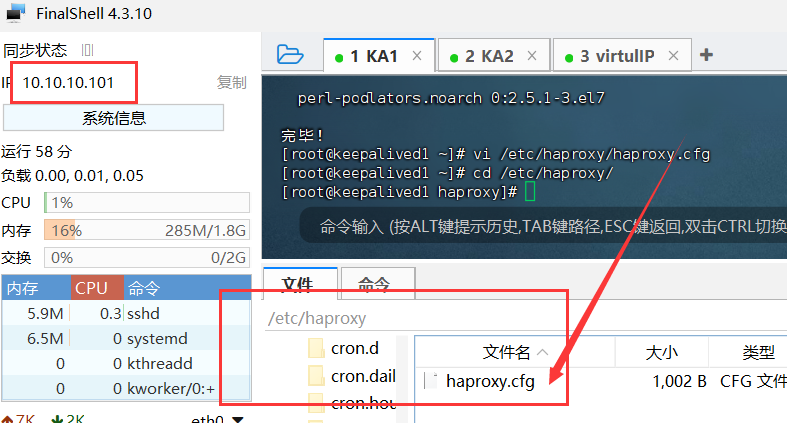

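Once Keepalived and HAProxy are installed, edit the HAProxy configuration.

Go to /etc/haproxy, open haproxy.cfg (the file shown in the screenshot above), and set its contents as follows: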

global
  log /dev/log local0 warning
  chroot /var/lib/haproxy
  pidfile /var/run/haproxy.pid
  maxconn 4000
  user haproxy
  group haproxy
  daemon
  stats socket /var/lib/haproxy/stats

defaults
  log global
  option httplog
  option dontlognull
  timeout connect 5000
  timeout client 50000
  timeout server 50000

frontend kube-apiserver
  bind *:6443 # 6443 is the default kube-apiserver port; it normally does not need to change
  mode tcp
  option tcplog
  default_backend kube-apiserver

backend kube-apiserver
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server kube-apiserver-1 10.10.10.201:6443 check # Replace with your own master IP; I have 3 masters, so add one line per master you have
  server kube-apiserver-2 10.10.10.202:6443 check # Replace with your own master IP
  server kube-apiserver-3 10.10.10.203:6443 check # Replace with your own master IP

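Note that this HAProxy configuration change must be made on both Keepalived nodes, and the procedure is identical on each.

Once both machines are configured, run the following commands on each of them to enable and restart HAProxy: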

systemctl enable haproxy

systemctl restart haproxy
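To confirm that HAProxy accepted the configuration and is listening on the API server port, something like the sketch below can be used. `haproxy -c -f <file>` checks a configuration file without starting the proxy; the helper `has_listener` is a hypothetical name, and it assumes the usual `ss -lnt` column layout in which the fourth column is `Local Address:Port`.

```shell
# Succeed if `ss -lnt`-style input on stdin shows a listener on the given port.
has_listener() {
  awk -v port="$1" '
    NR > 1 { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
    END    { exit !found }
  '
}

# Usage on keepalived1 and keepalived2:
#   sudo haproxy -c -f /etc/haproxy/haproxy.cfg     # syntax check only
#   ss -lnt | has_listener 6443 && echo "haproxy is listening on 6443"
```

Until the Kubernetes masters are installed, HAProxy will mark all three backend servers as down; a listener on 6443 is all that can be verified at this stage.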

3. Keepalived Configuration

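On each of the two Keepalived machines, go to /etc/keepalived/ and edit keepalived.conf. The contents differ slightly between the two machines (see the comments on unicast_src_ip and unicast_peer):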

global_defs {
  notification_email {
  }
  router_id LVS_DEVEL
  vrrp_skip_check_adv_addr
  vrrp_garp_interval 0
  vrrp_gna_interval 0
}

vrrp_script chk_haproxy {
  script "killall -0 haproxy"
  interval 2
  weight 2
}

vrrp_instance haproxy-vip {
  state BACKUP
  priority 100
  interface eth0 # The NIC name: the interface that carries this machine's IP address in the output of ip a
  virtual_router_id 60
  advert_int 1
  authentication {
    auth_type PASS
    auth_pass 1111
  }
  unicast_src_ip 10.10.10.101 # The IP address of the Keepalived machine whose file you are editing
  unicast_peer {
    10.10.10.102 # The IP address of the other Keepalived machine
  } # So unicast_src_ip and unicast_peer are swapped between the two machines
  virtual_ipaddress {
    10.10.10.200/24 # The virtual IP (VIP) address
  }
  track_script {
    chk_haproxy
  }
}

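- The IP address given for unicast_src_ip is the IP address of the machine you are currently on.

- For the other machine that also runs HAProxy and Keepalived for load balancing, enter its IP address in the unicast_peer field.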

Save the configuration file, then restart and enable Keepalived on both machines:

systemctl restart keepalived

systemctl enable keepalived
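Because the two keepalived.conf files differ only in which address is the source and which is the peer, the second file can be derived from the first instead of being edited by hand. The sketch below is one way to do that; the function name `swap_keepalived_peers` and the output file name are hypothetical, and it assumes the two IP addresses appear nowhere else in the file.

```shell
# Read keepalived1's config on stdin and swap the two load balancer IPs,
# producing the config for keepalived2 on stdout.
swap_keepalived_peers() {
  local a="${1:-10.10.10.101}" b="${2:-10.10.10.102}" a_re b_re
  # Escape the dots so each address is matched literally by sed.
  a_re=$(printf '%s' "$a" | sed 's/\./\\./g')
  b_re=$(printf '%s' "$b" | sed 's/\./\\./g')
  sed -e "s/${a_re}/__PEER_TMP__/g" \
      -e "s/${b_re}/${a}/g" \
      -e "s/__PEER_TMP__/${b}/g"
}

# Usage on keepalived1 (copy the result to keepalived2 afterwards):
#   swap_keepalived_peers < /etc/keepalived/keepalived.conf > keepalived2.conf
```

The `interface` line is copied unchanged, so check that the NIC name is the same on both machines before using the generated file.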

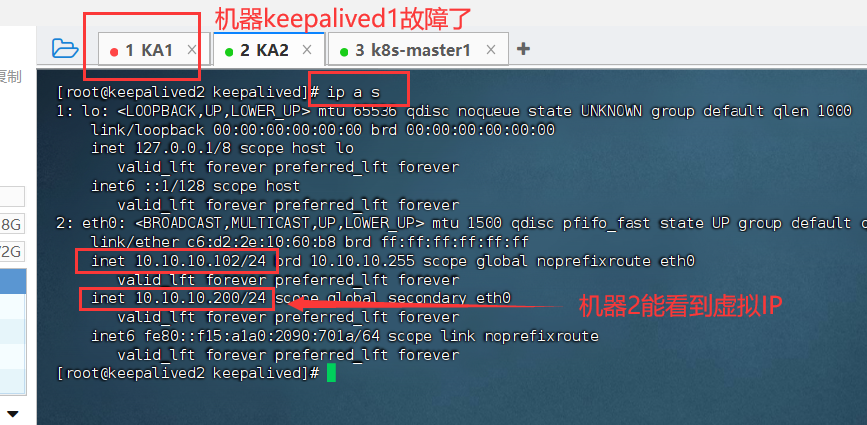

4. Verifying High Availability

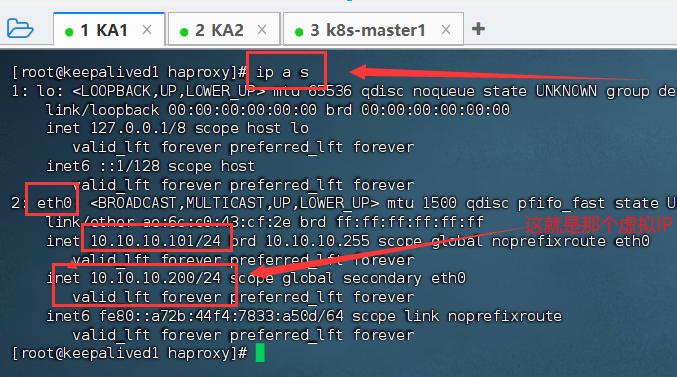

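On keepalived1 (10.10.10.101), run ip a s; the output looks like this:

Now shut down keepalived1 (10.10.10.101) to simulate a machine failure.

On keepalived2 (10.10.10.102) you should then see the following:

As the result shows, high availability is configured correctly: the virtual IP 10.10.10.200 should now appear on keepalived2.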
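The same failover check can be scripted rather than read off the `ip a s` output by eye. This is a minimal sketch; `holds_vip` is a hypothetical helper that looks for the virtual IP in `ip -4 addr show` output supplied on stdin.

```shell
# Succeed if `ip -4 addr show`-style input on stdin contains the virtual IP.
# The default matches the VIP used in this lab; pass another address to override it.
holds_vip() {
  grep -qF "inet ${1:-10.10.10.200}/"
}

# Usage on either keepalived machine:
#   if ip -4 addr show | holds_vip; then
#     echo "this node currently holds the VIP"
#   else
#     echo "this node does not hold the VIP"
#   fi
```

At any moment exactly one of the two machines should report that it holds the VIP; after keepalived1 is shut down, that machine should be keepalived2.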

5. Bootstrapping the Kubernetes Cluster with KubeKey

Run the following commands on the k8s-master1 master node:

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

chmod +x kk

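After downloading KubeKey, use the following command to generate a configuration file for Kubernetes v1.23.10 with KubeSphere v3.4.1; you can substitute other versions: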

./kk create config --with-kubesphere v3.4.1 --with-kubernetes v1.23.10

This command creates the configuration file config-sample.yaml in the current directory. Next, edit that file:

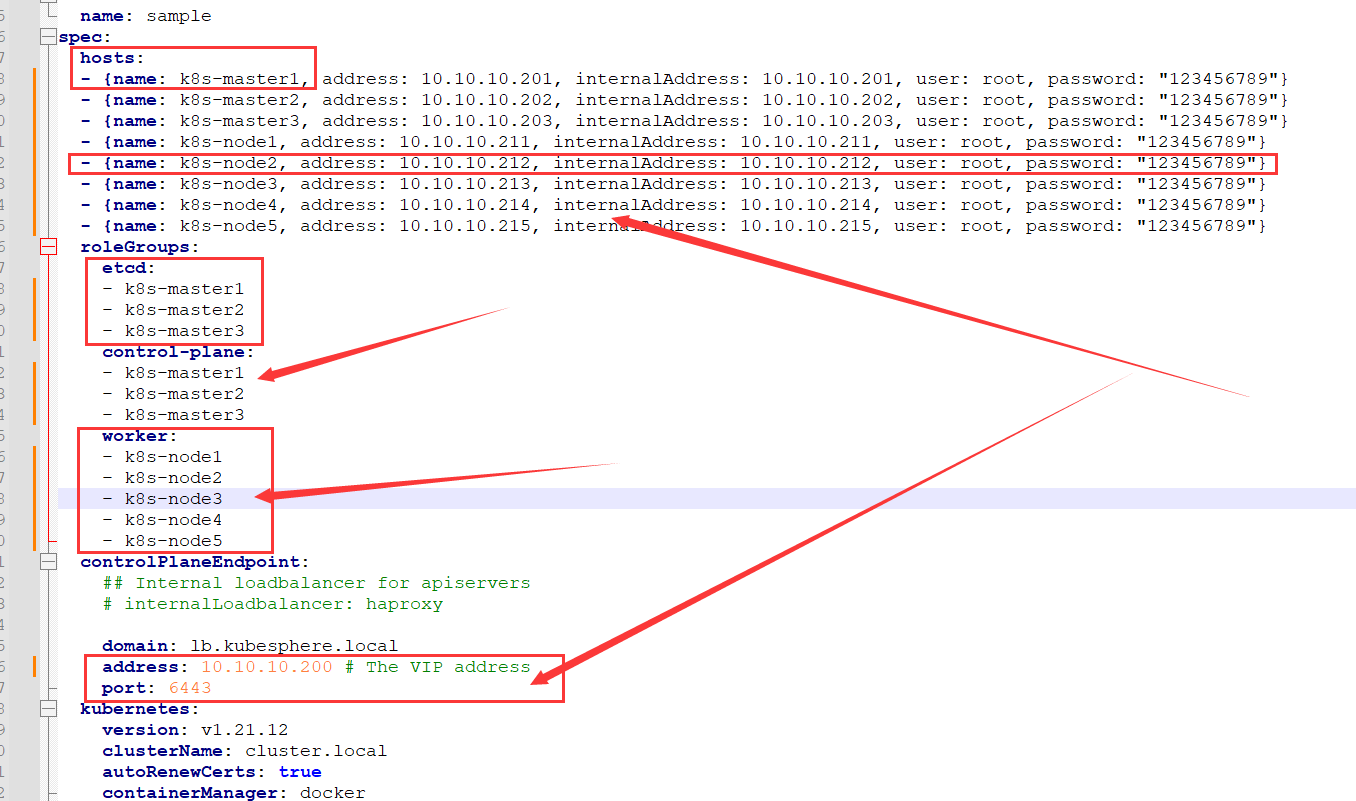

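Pay particular attention to the following parts, marked with red boxes in the screenshot:

Under spec.hosts, each line represents one machine, and every master and worker node must be listed. name is the instance's hostname (the one set during preparation in step 1), address and internalAddress are the machine's IP address, and user and password are the credentials used to log in to the machine remotely.

The controlPlaneEndpoint section supplies the external load balancer information for the high-availability cluster; in the lower red box in the screenshot, enter the virtual IP address.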

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: k8s-master1, address: 10.10.10.201, internalAddress: 10.10.10.201, user: root, password: "123456789"}
  - {name: k8s-master2, address: 10.10.10.202, internalAddress: 10.10.10.202, user: root, password: "123456789"}
  - {name: k8s-master3, address: 10.10.10.203, internalAddress: 10.10.10.203, user: root, password: "123456789"}
  - {name: k8s-node1, address: 10.10.10.211, internalAddress: 10.10.10.211, user: root, password: "123456789"}
  - {name: k8s-node2, address: 10.10.10.212, internalAddress: 10.10.10.212, user: root, password: "123456789"}
  - {name: k8s-node3, address: 10.10.10.213, internalAddress: 10.10.10.213, user: root, password: "123456789"}
  - {name: k8s-node4, address: 10.10.10.214, internalAddress: 10.10.10.214, user: root, password: "123456789"}
  - {name: k8s-node5, address: 10.10.10.215, internalAddress: 10.10.10.215, user: root, password: "123456789"}
  roleGroups:
    etcd:
    - k8s-master1
    - k8s-master2
    - k8s-master3
    control-plane:
    - k8s-master1
    - k8s-master2
    - k8s-master3
    worker:
    - k8s-node1
    - k8s-node2
    - k8s-node3
    - k8s-node4
    - k8s-node5
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: 10.10.10.200 # The VIP address
    port: 6443
  kubernetes:
    version: v1.23.10
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 172.30.0.0/16
    kubeServiceCIDR: 192.168.0.0/16
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

---

apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.4.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  local_registry: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #  resources: {}
    # controllerManager:
    #  resources: {}
    redis:
      enabled: false
      enableHA: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      enabled: false
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
    opensearch:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      enabled: true
      logMaxAge: 7
      opensearchPrefix: whizard
      basicAuth:
        enabled: true
        username: "admin"
        password: "admin"
      externalOpensearchHost: ""
      externalOpensearchPort: ""
      dashboard:
        enabled: false
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    jenkinsCpuReq: 0.5
    jenkinsCpuLim: 1
    jenkinsMemoryReq: 4Gi
    jenkinsMemoryLim: 4Gi
    jenkinsVolumeSize: 16Gi
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    ruler:
      enabled: true
      replicas: 2
      # resources: {}
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
    istio:
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime:
    enabled: false
    kubeedge:
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ""
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  gatekeeper:
    enabled: false
    # controller_manager:
    #   resources: {}
    # audit:
    #   resources: {}
  terminal:
    timeout: 600

Save the file when you have finished editing it.
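The hosts entries in config-sample.yaml all follow one pattern, so with many machines it may be easier to generate them than to type them. The sketch below is one possible approach; the function name `kk_host_lines` and the placeholder password `CHANGE_ME` are assumptions for illustration and are not part of KubeKey.

```shell
# Read "name ip" pairs on stdin and emit one KubeKey hosts entry per pair.
# $1 = SSH user (default root), $2 = SSH password (placeholder by default).
kk_host_lines() {
  local user="${1:-root}" password="${2:-CHANGE_ME}" name ip
  while read -r name ip; do
    [ -n "$name" ] || continue   # skip blank lines
    printf '  - {name: %s, address: %s, internalAddress: %s, user: %s, password: "%s"}\n' \
      "$name" "$ip" "$ip" "$user" "$password"
  done
}

# Usage: paste the output under spec.hosts in config-sample.yaml.
#   printf '%s\n' 'k8s-master1 10.10.10.201' 'k8s-node1 10.10.10.211' | kk_host_lines root 'your-password'
```

The sketch sets address and internalAddress to the same value, which matches this lab, where every machine has a single internal IP.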

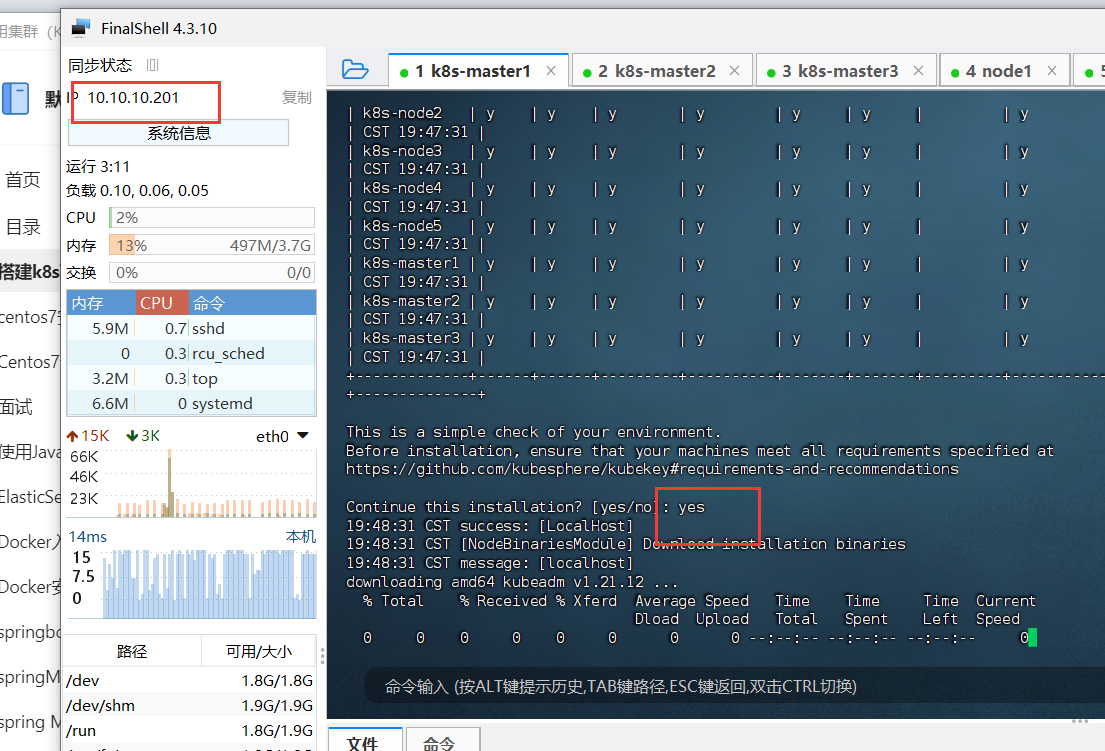

Run the following command to have KubeKey bootstrap the Kubernetes installation:

./kk create cluster -f config-sample.yaml

Type yes to confirm the installation.

The whole installation may take 20 to 40 minutes, depending on your machines and network environment.

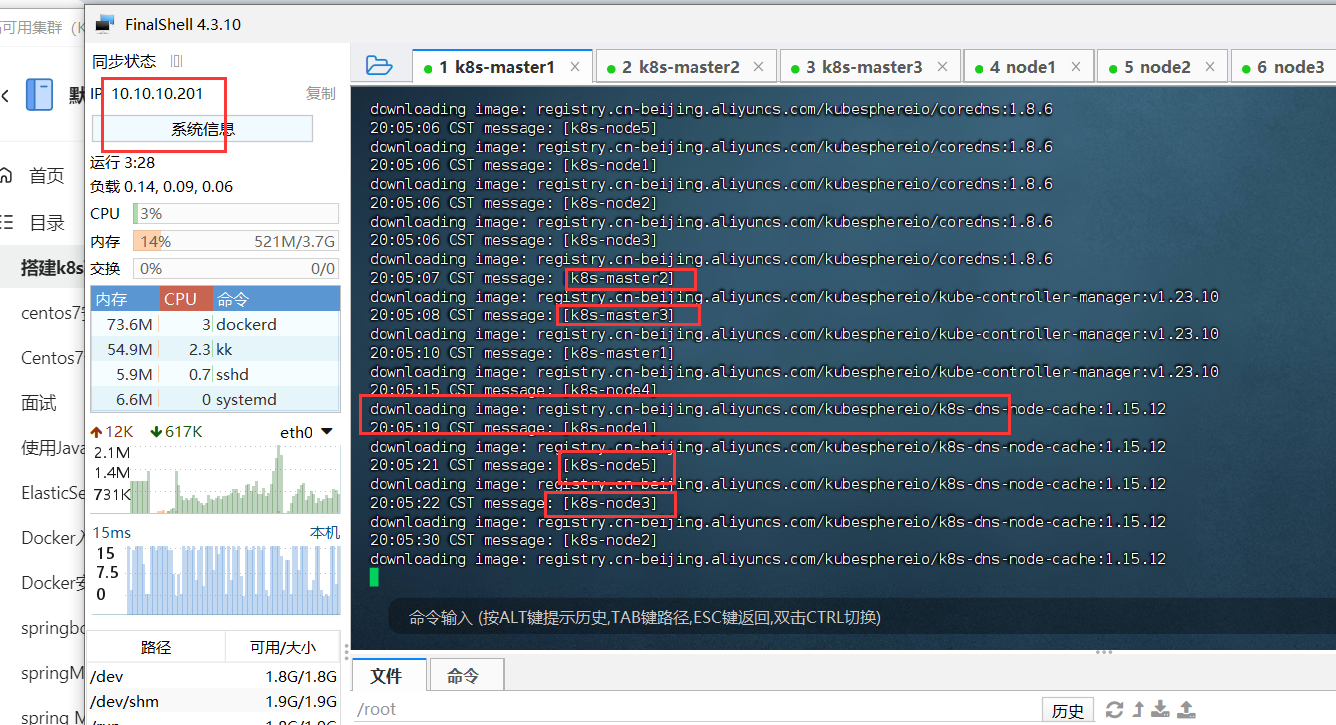

Many images are pulled during the installation:

When the installation finishes, the output looks like this:

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://10.10.10.201:30880

Account: admin

Password: pass@6**

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2024-03-11 11:28:33

#####################################################

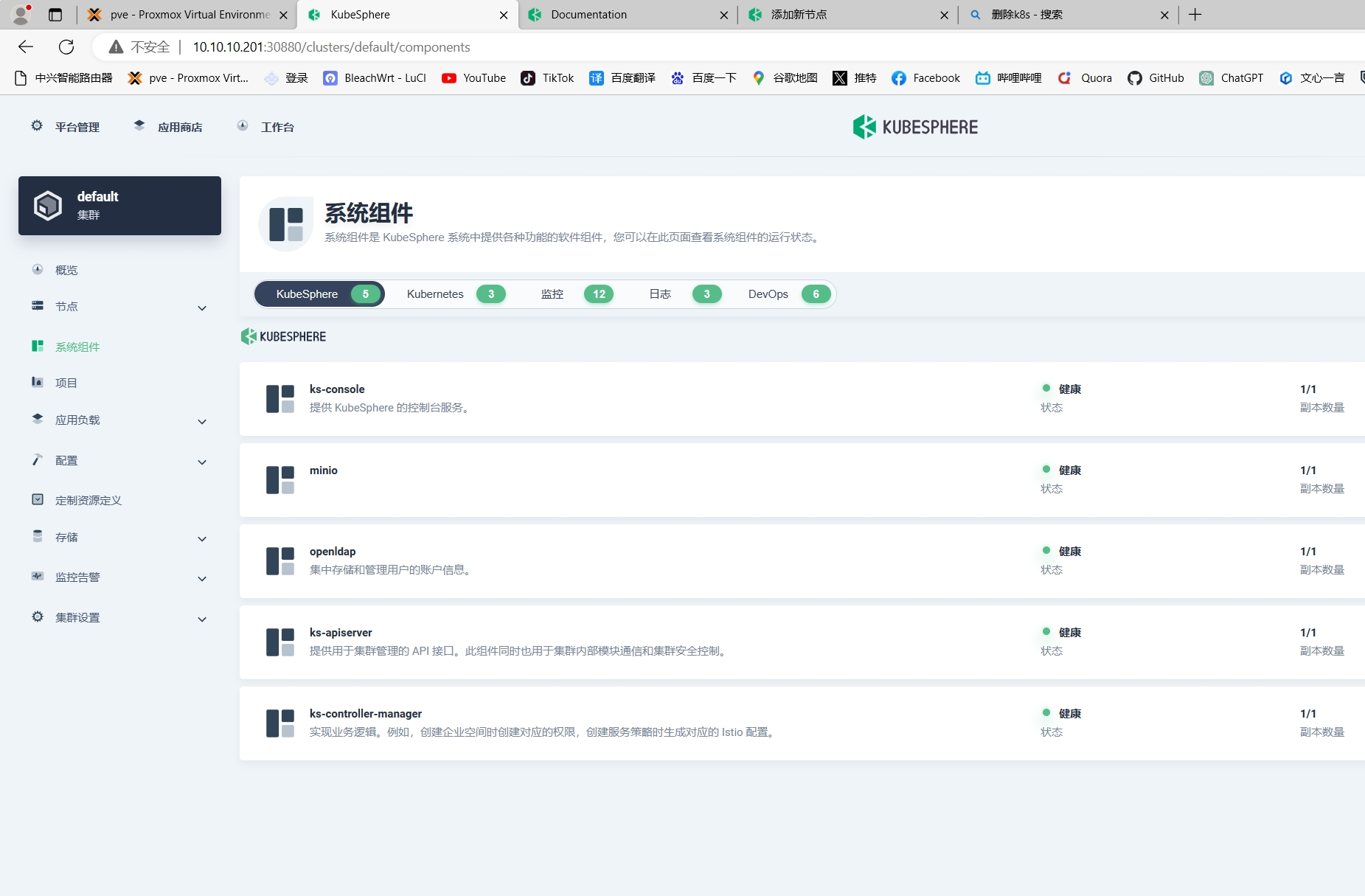

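After a short wait, open http://10.10.10.201:30880 in a browser and log in with the account and password shown above.

If the console loads, Kubernetes has been installed successfully.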
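Besides the web console, the cluster state can be checked from the command line on a master node. The following is a rough sketch; `count_ready_nodes` is a hypothetical helper that counts nodes whose STATUS column is exactly `Ready` in the output of `kubectl get nodes --no-headers`.

```shell
# Count nodes reported as Ready in `kubectl get nodes --no-headers` output on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on k8s-master1 (this lab has 3 masters + 5 workers, so 8 is expected):
#   kubectl get nodes --no-headers | count_ready_nodes
```

A lower count right after installation may simply mean some nodes are still starting; re-run the command after a few minutes before investigating further.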

Reference: https://kubesphere.io/zh/docs/v3.4/

Reference: 尚硅谷 tutorial
