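Learning approach: first reproduce the authors' results, then apply the method to my own task.

Environment: Windows 10, Caffe with its Python interface, Anaconda3 with Python 3.5, CUDA 8.0, cuDNN 5.1

Building Caffe: follow the official procedure; git must be installed beforehand.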

>>git clone https://github.com/BVLC/caffe.git

'''Edit any of the options inside build_win.cmd to suit your needs'''

In the scripts folder, right-click build_win.cmd, open it with Notepad, and change the parameters you need.

>>scripts\build_win.cmd
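Once the build finishes, the scripts later in this post make the compiled interface importable by appending Caffe's python directory to sys.path. The following is a minimal sketch for checking that such a directory really contains the caffe package before appending it. The helper name add_caffe_to_path is hypothetical (it is not part of Caffe), and the candidate paths are simply the two used in this post.

```python
import os
import sys


def add_caffe_to_path(candidates):
    """Append the first candidate directory that contains a `caffe` package.

    Hypothetical helper, not part of Caffe. Returns the directory that was
    added, or None if no candidate looked like Caffe's python folder.
    """
    for path in candidates:
        path = os.path.abspath(path)
        if os.path.isdir(os.path.join(path, 'caffe')):
            if path not in sys.path:
                sys.path.append(path)
            return path
    return None


if __name__ == '__main__':
    # the two locations used by the scripts in this post
    found = add_caffe_to_path(['../../caffe/python',
                               'D:\\caffeForWINDOWS\\caffe\\python'])
    print('Caffe python dir:', found)
```

Relative paths such as ../../caffe/python are resolved against the current working directory, so the result depends on where the script is started from.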

Reproducing the authors' results

1. Download the FCN source code

>>git clone https://github.com/shelhamer/fcn.berkeleyvision.org

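This gives the fcn.berkeleyvision.org folder, which contains the source code for many models. I used fcn8s, trained on SBDD and validated on VOC; the corresponding folder is voc-fcn8s.

2. Run inference with infer.py (the lines I changed were marked in red). The fcn8s-heavy-pascal.caffemodel file it uses is the authors' trained model. The download address is in the caffemodel-url file inside the voc-fcn8s folder: http://dl.caffe.berkeleyvision.org/fcn8s-heavy-pascal.caffemodel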

import numpy as np
from PIL import Image

# add the python directory of the compiled Caffe to the module search path
import sys
sys.path.append('../../caffe/python')
import caffe
import vis

# the demo image is "2007_000129" from PASCAL VOC

# load image, switch to BGR, subtract mean, and make dims C x H x W for Caffe
im = Image.open('demo/image.jpg')
in_ = np.array(im, dtype=np.float32)
in_ = in_[:,:,::-1]
in_ -= np.array((104.00698793,116.66876762,122.67891434))
in_ = in_.transpose((2,0,1))

# load net
net = caffe.Net('voc-fcn8s/deploy.prototxt', 'voc-fcn8s/fcn8s-heavy-pascal.caffemodel', caffe.TEST)

# shape for input (data blob is N x C x H x W), set data
net.blobs['data'].reshape(1, *in_.shape)
net.blobs['data'].data[...] = in_

# run net and take argmax for prediction
net.forward()
out = net.blobs['score'].data[0].argmax(axis=0)

# save the results
# visualize segmentation in PASCAL VOC colors
voc_palette = vis.make_palette(21)
out_im = Image.fromarray(vis.color_seg(out, voc_palette))
out_im.save('demo/output.png')
masked_im = Image.fromarray(vis.vis_seg(im, out, voc_palette))
masked_im.save('demo/visualization.jpg')

3. Train with solve.py (the lines I changed were marked in red)

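1) First download SBDD and VOC2012. SBDD is used for training and VOC for testing.

I got SBDD from someone else and can no longer find the address. The dataset folder is required; without it, VOC can be used as the training set instead. The README points to this site:

http://www.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/resources.html

VOC2012

https://pjreddie.com/projects/pascal-voc-dataset-mirror/

Download the Train/Validation Data and extract it.

2) Download the pretrained VGG16 model.

3) Copy voc_layers.py, surgery.py, and score.py into the voc-fcn8s folder, because training needs to load them. I am on Python 3, so every print statement has to be changed to print().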
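For reference, the Python 2 to Python 3 change in question is purely syntactic: print x, y becomes print(x, y). The sketch below handles only the simplest single-line form and is an illustration, not a general converter: it does not handle print >>f, x, trailing commas, or multi-line statements. The function name convert_print_line is made up for this example.

```python
import re

# A Python 2 print statement on one line: optional indent, the keyword,
# at least one space, then an expression that does not start with "(".
_PRINT_STMT = re.compile(r"^(\s*)print\s+(?!\()(.+?)\s*$")


def convert_print_line(line):
    """Turn `print expr` into `print(expr)`; return other lines unchanged.

    Pass lines without their trailing newline (e.g. from str.splitlines()).
    """
    match = _PRINT_STMT.match(line)
    if match is None:
        return line
    indent, expr = match.groups()
    return "{}print({})".format(indent, expr)


if __name__ == '__main__':
    for src in ["    print '>>>', loss", "print(x)", "x = 1"]:
        print(repr(src), '->', repr(convert_print_line(src)))
```

For only a handful of print statements, editing them by hand as described above is just as quick.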

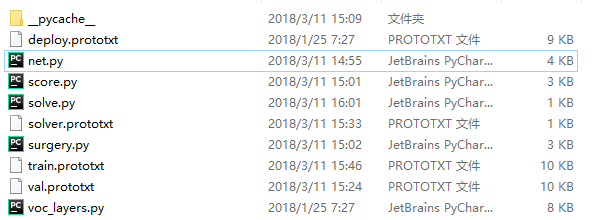

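Inside the voc-fcn8s folder there are the files shown in the screenshot (the first one only appears after training).

4) Modify net.py, then use it to generate train.prototxt and val.prototxt.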

import sys
sys.path.append('D:\\caffeForWINDOWS\\caffe\\python')
import caffe
from caffe import layers as L, params as P
from caffe.coord_map import crop

def conv_relu(bottom, nout, ks=3, stride=1, pad=1):
    conv = L.Convolution(bottom, kernel_size=ks, stride=stride,
        num_output=nout, pad=pad,
        param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
    return conv, L.ReLU(conv, in_place=True)

def max_pool(bottom, ks=2, stride=2):
    return L.Pooling(bottom, pool=P.Pooling.MAX, kernel_size=ks, stride=stride)

def fcn(split):
    n = caffe.NetSpec()
    pydata_params = dict(split=split, mean=(104.00699, 116.66877, 122.67892),
            seed=1337)
    if split == 'train':
        pydata_params['sbdd_dir'] = '../../sbdd/dataset'  # the downloaded training data
        pylayer = 'SBDDSegDataLayer'
    else:
        pydata_params['voc_dir'] = '../../VOC2012'  # the VOC2012 data
        pylayer = 'VOCSegDataLayer'
    n.data, n.label = L.Python(module='voc_layers', layer=pylayer,
            ntop=2, param_str=str(pydata_params))

    # the base net
    n.conv1_1, n.relu1_1 = conv_relu(n.data, 64, pad=100)
    n.conv1_2, n.relu1_2 = conv_relu(n.relu1_1, 64)
    n.pool1 = max_pool(n.relu1_2)

    n.conv2_1, n.relu2_1 = conv_relu(n.pool1, 128)
    n.conv2_2, n.relu2_2 = conv_relu(n.relu2_1, 128)
    n.pool2 = max_pool(n.relu2_2)

    n.conv3_1, n.relu3_1 = conv_relu(n.pool2, 256)
    n.conv3_2, n.relu3_2 = conv_relu(n.relu3_1, 256)
    n.conv3_3, n.relu3_3 = conv_relu(n.relu3_2, 256)
    n.pool3 = max_pool(n.relu3_3)

    n.conv4_1, n.relu4_1 = conv_relu(n.pool3, 512)
    n.conv4_2, n.relu4_2 = conv_relu(n.relu4_1, 512)
    n.conv4_3, n.relu4_3 = conv_relu(n.relu4_2, 512)
    n.pool4 = max_pool(n.relu4_3)

    n.conv5_1, n.relu5_1 = conv_relu(n.pool4, 512)
    n.conv5_2, n.relu5_2 = conv_relu(n.relu5_1, 512)
    n.conv5_3, n.relu5_3 = conv_relu(n.relu5_2, 512)
    n.pool5 = max_pool(n.relu5_3)

    # fully conv
    n.fc6, n.relu6 = conv_relu(n.pool5, 4096, ks=7, pad=0)
    n.drop6 = L.Dropout(n.relu6, dropout_ratio=0.5, in_place=True)
    n.fc7, n.relu7 = conv_relu(n.drop6, 4096, ks=1, pad=0)
    n.drop7 = L.Dropout(n.relu7, dropout_ratio=0.5, in_place=True)

    n.score_fr = L.Convolution(n.drop7, num_output=21, kernel_size=1, pad=0,
        param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
    n.upscore2 = L.Deconvolution(n.score_fr,
        convolution_param=dict(num_output=21, kernel_size=4, stride=2,
            bias_term=False),
        param=[dict(lr_mult=0)])

    n.score_pool4 = L.Convolution(n.pool4, num_output=21, kernel_size=1, pad=0,
        param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
    n.score_pool4c = crop(n.score_pool4, n.upscore2)
    n.fuse_pool4 = L.Eltwise(n.upscore2, n.score_pool4c,
            operation=P.Eltwise.SUM)
    n.upscore_pool4 = L.Deconvolution(n.fuse_pool4,
        convolution_param=dict(num_output=21, kernel_size=4, stride=2,
            bias_term=False),
        param=[dict(lr_mult=0)])

    n.score_pool3 = L.Convolution(n.pool3, num_output=21, kernel_size=1, pad=0,
        param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
    n.score_pool3c = crop(n.score_pool3, n.upscore_pool4)
    n.fuse_pool3 = L.Eltwise(n.upscore_pool4, n.score_pool3c,
            operation=P.Eltwise.SUM)
    n.upscore8 = L.Deconvolution(n.fuse_pool3,
        convolution_param=dict(num_output=21, kernel_size=16, stride=8,
            bias_term=False),
        param=[dict(lr_mult=0)])

    n.score = crop(n.upscore8, n.data)
    n.loss = L.SoftmaxWithLoss(n.score, n.label,
            loss_param=dict(normalize=False, ignore_label=255))

    return n.to_proto()

def make_net():
    with open('train.prototxt', 'w') as f:
        f.write(str(fcn('train')))

    with open('val.prototxt', 'w') as f:
        f.write(str(fcn('seg11valid')))

if __name__ == '__main__':
    make_net()

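This generates train.prototxt and val.prototxt. Open both files and rename fc6 and fc7 to fc6_new and fc7_new (the names are arbitrary, as long as they differ from the originals). If you did not edit the red-marked lines in net.py, you can instead change param_str directly in these two files.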
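The renaming can also be scripted instead of done by hand. This is a minimal sketch, assuming the layer names appear in the generated files as double-quoted strings such as "fc6" in the name, top, and bottom fields. rename_layers and rename_in_file are hypothetical helpers, and the _new suffix simply follows the names chosen above.

```python
def rename_layers(prototxt_text, names=('fc6', 'fc7'), suffix='_new'):
    """Rename layers by replacing their quoted names everywhere in the text.

    Replacing the quoted form ("fc6") leaves other names that merely share
    characters with it, such as "relu6" or "score_fr", untouched.
    """
    for name in names:
        prototxt_text = prototxt_text.replace('"{}"'.format(name),
                                              '"{}{}"'.format(name, suffix))
    return prototxt_text


def rename_in_file(path):
    """Rewrite one prototxt file in place."""
    with open(path) as f:
        text = f.read()
    with open(path, 'w') as f:
        f.write(rename_layers(text))


if __name__ == '__main__':
    sample = 'layer {\n  name: "fc6"\n  bottom: "pool5"\n  top: "fc6"\n}\n'
    print(rename_layers(sample))
```

Calling rename_in_file('train.prototxt') and rename_in_file('val.prototxt') would apply it to both generated files. Keep in mind that Caffe copies pretrained weights by layer name, so the renamed layers are not initialized from the caffemodel.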

5) Modify solve.py

import sys
sys.path.append('../../caffe/python')
import caffe
import surgery, score

import numpy as np
import os

try:
    import setproctitle
    setproctitle.setproctitle(os.path.basename(os.getcwd()))
except:
    pass

weights = '../../vgg16-fcn.caffemodel'  # path to your pretrained weights

# init
# I had to comment this out without knowing why; note that it reads the
# GPU id from sys.argv[1], so it fails when the script gets no argument
#caffe.set_device(int(sys.argv[1]))
# my GPU is not good enough, so I fall back to CPU; alternatively,
# shrink the images when building the dataset
#caffe.set_mode_gpu()

solver = caffe.SGDSolver('solver.prototxt')
solver.net.copy_from(weights)

# surgeries
interp_layers = [k for k in solver.net.params.keys() if 'up' in k]
surgery.interp(solver.net, interp_layers)

# scoring
# This file is val.txt from VOC2012/ImageSets/Segmentation, simply renamed.
# It appears to list the names of the segmentation images used for testing.
val = np.loadtxt('../../segvalid111.txt', dtype=str)

for _ in range(1):  # run only a few iterations to check that it works
    solver.step(5)
    score.seg_tests(solver, False, val, layer='score')  # evaluate
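score.seg_tests reports segmentation metrics, among them mean IU (intersection over union, averaged over classes). The following pure-Python sketch computes that metric on flat lists of labels, to show what the number means. It is written from the standard definition rather than copied from score.py, and the function name mean_iu is an assumption. Pixels labeled 255 are skipped, mirroring ignore_label=255 in the loss layer of net.py.

```python
def mean_iu(labels, preds, num_classes, ignore_label=255):
    """Mean intersection-over-union over the classes that occur."""
    # confusion[t][p] counts pixels with true class t predicted as class p
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(labels, preds):
        if t == ignore_label:
            continue
        confusion[t][p] += 1

    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        gt = sum(confusion[c])                    # pixels whose label is c
        pred = sum(row[c] for row in confusion)   # pixels predicted as c
        union = gt + pred - tp
        if union > 0:                             # skip classes absent from both
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else float('nan')


if __name__ == '__main__':
    labels = [0, 0, 1, 1, 255]
    preds = [0, 1, 1, 1, 0]
    # class 0: 1 / 2, class 1: 2 / 3, so the mean is about 0.583
    print(mean_iu(labels, preds, num_classes=2))
```

With only five iterations of training, as in the loop above, the reported scores mainly confirm that the pipeline runs end to end.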

6) Modify solver.prototxt

Point train_net and test_net at train.prototxt and val.prototxt. snapshot_prefix is where the training snapshots are saved.

train_net: "train.prototxt"

test_net: "val.prototxt"

test_iter: 736

# make test net, but don't invoke it from the solver itself

test_interval: 999999999

display: 1

average_loss: 20

lr_policy: "fixed"

# lr for unnormalized softmax

base_lr: 1e-14

# high momentum

momentum: 0.99

# no gradient accumulation

iter_size: 1

max_iter: 100000

weight_decay: 0.0005

snapshot: 4000

snapshot_prefix: "../../snapshot/"

test_initialization: false

7) score.py: only change print to print()

8) surgery.py: no changes needed
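For context, surgery.interp is the function solve.py calls on the upsampling (Deconvolution) layers, which net.py freezes with lr_mult=0. It initializes them with bilinear interpolation weights. The sketch below builds such a kernel in plain Python. It follows the usual formula for a bilinear upsampling filter and is an illustration, not the code from surgery.py; the name bilinear_kernel is an assumption.

```python
def bilinear_kernel(size):
    """Return a size x size bilinear interpolation kernel as nested lists."""
    factor = (size + 1) // 2
    # the kernel centre sits on a pixel for odd sizes, between pixels for even
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    weights_1d = [1 - abs(i - center) / factor for i in range(size)]
    # the 2-D kernel is the outer product of the 1-D weights
    return [[wy * wx for wx in weights_1d] for wy in weights_1d]


if __name__ == '__main__':
    # kernel_size=4 with stride=2 is the setting of upscore2 in net.py
    for row in bilinear_kernel(4):
        print(row)
```

For size 4 the one-dimensional weights are 0.25, 0.75, 0.75, 0.25, so the deconvolution starts out as plain 2x bilinear upsampling.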

9) voc_layers.py

import caffe  # the training script has already set up the caffe path, nothing to change here

import numpy as np
from PIL import Image

import random

class VOCSegDataLayer(caffe.Layer):
    """
    Load (input image, label image) pairs from PASCAL VOC
    one-at-a-time while reshaping the net to preserve dimensions.

    Use this to feed data to a fully convolutional network.
    """

    def setup(self, bottom, top):
        """
        Setup data layer according to parameters:

        - voc_dir: path to PASCAL VOC year dir
        - split: train / val / test
        - mean: tuple of mean values to subtract
        - randomize: load in random order (default: True)
        - seed: seed for randomization (default: None / current time)

        for PASCAL VOC semantic segmentation.

        example

        params = dict(voc_dir="/path/to/PASCAL/VOC2011",
            mean=(104.00698793, 116.66876762, 122.67891434),
            split="val")
        """
        # config
        params = eval(self.param_str)
        self.voc_dir = params['voc_dir']
        self.split = params['split']
        self.mean = np.array(params['mean'])
        self.random = params.get('randomize', True)
        self.seed = params.get('seed', None)

        # two tops: data and label
        if len(top) != 2:
            raise Exception("Need to define two tops: data and label.")
        # data layers have no bottoms
        if len(bottom) != 0:
            raise Exception("Do not define a bottom.")

        # load indices for images and labels
        # This requires ./VOC2012/ImageSets/Segmentation/seg11valid.txt;
        # as before, it is val.txt renamed.
        split_f = '{}/ImageSets/Segmentation/{}.txt'.format(self.voc_dir,
                self.split)
        self.indices = open(split_f, 'r').read().splitlines()
        self.idx = 0

        # make eval deterministic
        if 'train' not in self.split:
            self.random = False

        # randomization: seed and pick
        if self.random:
            random.seed(self.seed)
            self.idx = random.randint(0, len(self.indices)-1)

    def reshape(self, bottom, top):
        # load image + label image pair
        self.data = self.load_image(self.indices[self.idx])
        self.label = self.load_label(self.indices[self.idx])
        # reshape tops to fit (leading 1 is for batch dimension)
        top[0].reshape(1, *self.data.shape)
        top[1].reshape(1, *self.label.shape)

    def forward(self, bottom, top):
        # assign output
        top[0].data[...] = self.data
        top[1].data[...] = self.label

        # pick next input
        if self.random:
            self.idx = random.randint(0, len(self.indices)-1)
        else:
            self.idx += 1
            if self.idx == len(self.indices):
                self.idx = 0

    def backward(self, top, propagate_down, bottom):
        pass

    def load_image(self, idx):
        """
        Load input image and preprocess for Caffe:
        - cast to float
        - switch channels RGB -> BGR
        - subtract mean
        - transpose to channel x height x width order
        """
        im = Image.open('{}/JPEGImages/{}.jpg'.format(self.voc_dir, idx))
        in_ = np.array(im, dtype=np.float32)
        in_ = in_[:,:,::-1]
        in_ -= self.mean
        in_ = in_.transpose((2,0,1))
        return in_

    def load_label(self, idx):
        """
        Load label image as 1 x height x width integer array of label indices.
        The leading singleton dimension is required by the loss.
        """
        im = Image.open('{}/SegmentationClass/{}.png'.format(self.voc_dir, idx))
        label = np.array(im, dtype=np.uint8)
        label = label[np.newaxis, ...]
        return label

class SBDDSegDataLayer(caffe.Layer):
    """
    Load (input image, label image) pairs from the SBDD extended labeling
    of PASCAL VOC for semantic segmentation
    one-at-a-time while reshaping the net to preserve dimensions.

    Use this to feed data to a fully convolutional network.
    """

    def setup(self, bottom, top):
        """
        Setup data layer according to parameters:

        - sbdd_dir: path to SBDD `dataset` dir
        - split: train / seg11valid
        - mean: tuple of mean values to subtract
        - randomize: load in random order (default: True)
        - seed: seed for randomization (default: None / current time)

        for SBDD semantic segmentation.

        N.B. seg11valid is the set of segval11 that does not intersect with SBDD.
        Find it here: https://gist.github.com/shelhamer/edb330760338892d511e.

        example

        params = dict(sbdd_dir="/path/to/SBDD/dataset",
            mean=(104.00698793, 116.66876762, 122.67891434),
            split="valid")
        """
        # config
        params = eval(self.param_str)
        self.sbdd_dir = params['sbdd_dir']
        self.split = params['split']
        self.mean = np.array(params['mean'])
        self.random = params.get('randomize', True)
        self.seed = params.get('seed', None)

        # two tops: data and label
        if len(top) != 2:
            raise Exception("Need to define two tops: data and label.")
        # data layers have no bottoms
        if len(bottom) != 0:
            raise Exception("Do not define a bottom.")

        # load indices for images and labels
        split_f = '{}/{}.txt'.format(self.sbdd_dir,
                self.split)
        self.indices = open(split_f, 'r').read().splitlines()
        self.idx = 0

        # make eval deterministic
        if 'train' not in self.split:
            self.random = False

        # randomization: seed and pick
        if self.random:
            random.seed(self.seed)
            self.idx = random.randint(0, len(self.indices)-1)

    def reshape(self, bottom, top):
        # load image + label image pair
        self.data = self.load_image(self.indices[self.idx])
        self.label = self.load_label(self.indices[self.idx])
        # reshape tops to fit (leading 1 is for batch dimension)
        top[0].reshape(1, *self.data.shape)
        top[1].reshape(1, *self.label.shape)

    def forward(self, bottom, top):
        # assign output
        top[0].data[...] = self.data
        top[1].data[...] = self.label

        # pick next input
        if self.random:
            self.idx = random.randint(0, len(self.indices)-1)
        else:
            self.idx += 1
            if self.idx == len(self.indices):
                self.idx = 0  # wrap around to the first sample

    def backward(self, top, propagate_down, bottom):
        pass

    def load_image(self, idx):
        """
        Load input image and preprocess for Caffe:
        - cast to float
        - switch channels RGB -> BGR
        - subtract mean
        - transpose to channel x height x width order
        """
        im = Image.open('{}/img/{}.jpg'.format(self.sbdd_dir, idx))
        in_ = np.array(im, dtype=np.float32)
        in_ = in_[:,:,::-1]
        in_ -= self.mean
        in_ = in_.transpose((2,0,1))
        return in_

    def load_label(self, idx):
        """
        Load label image as 1 x height x width integer array of label indices.
        The leading singleton dimension is required by the loss.
        """
        import scipy.io
        mat = scipy.io.loadmat('{}/cls/{}.mat'.format(self.sbdd_dir, idx))
        label = mat['GTcls'][0]['Segmentation'][0].astype(np.uint8)
        label = label[np.newaxis, ...]
        return label

    #def load_label(self, idx):
    #    im = Image.open('{}/cls/{}.png'.format(self.sbdd_dir, idx))
    #    label = np.array(im, dtype=np.uint8)
    #    label = label[np.newaxis, ...]
    #    return label
    # This variant would read the segmentation PNGs directly instead of
    # converting them to .mat files. I have not yet worked out how to
    # produce the label images, so it is untested.
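Both layers receive their settings through param_str, which net.py produces with str(pydata_params) and setup() turns back into a dict with eval. Here is a small sketch of that round trip using ast.literal_eval, a standard-library function that only accepts Python literals. Swapping it in for eval is a suggestion, not something the original code does. The dict values are the ones from net.py.

```python
import ast

pydata_params = dict(split='seg11valid',
                     mean=(104.00699, 116.66877, 122.67892),
                     seed=1337,
                     voc_dir='../../VOC2012')

# net.py side: the dict is written into the prototxt as a string
param_str = str(pydata_params)

# layer side: parse the string back; literal_eval refuses arbitrary code
params = ast.literal_eval(param_str)

# missing keys fall back to their defaults, as in setup()
print(params['voc_dir'], params.get('randomize', True))
```

Because the string is plain Python literal syntax, editing param_str by hand in train.prototxt or val.prototxt works as long as the result is still a valid dict literal.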

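I am currently stuck on building my own dataset: Caffe keeps reporting that the number of labels does not match the number of predictions.

Another problem is that when I color labels made with labelme using the functions in skimage, the colors are always assigned in sequence. I will update this post once it is done...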
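One possible cause of the mismatch (an assumption, since the post does not show the failing labels) is a label image saved as RGB color rather than as a single channel of class indices. load_label expects one integer per pixel, which is what the palette-mode VOC PNGs give when read with PIL. The sketch below shows the VOC color map and how colored pixels can be mapped back to class indices, in plain Python. make_palette is written from the well-known bit-interleaving scheme, and the helper names are illustrative.

```python
def make_palette(num_classes):
    """Build the PASCAL VOC color map: class index -> (R, G, B)."""
    palette = []
    for k in range(num_classes):
        label, r, g, b, shift = k, 0, 0, 0, 7
        while label:
            # spread the bits of the class index over the three channels,
            # starting from the most significant bit of each channel
            r |= ((label >> 0) & 1) << shift
            g |= ((label >> 1) & 1) << shift
            b |= ((label >> 2) & 1) << shift
            label >>= 3
            shift -= 1
        palette.append((r, g, b))
    return palette


def rgb_to_index(rgb_rows, palette, ignore_label=255):
    """Map rows of (R, G, B) pixels to rows of class indices.

    Colors that are not in the palette become ignore_label.
    """
    lookup = {color: idx for idx, color in enumerate(palette)}
    return [[lookup.get(tuple(px), ignore_label) for px in row]
            for row in rgb_rows]


if __name__ == '__main__':
    palette = make_palette(21)
    colored = [[(0, 0, 0), (128, 0, 0)],
               [(192, 128, 128), (224, 224, 192)]]
    print(rgb_to_index(colored, palette))  # [[0, 1], [15, 255]]
```

A fixed palette like this also keeps each class on the same color in every image, regardless of which classes happen to occur in it.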

My abilities are limited...
