Getting device capability information

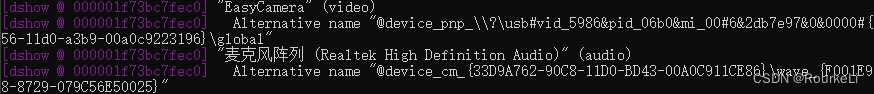

First, list all available devices. This can be done from the command line:

ffmpeg -list_devices true -f dshow -i dummy

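Because this machine is a laptop, the input devices include a camera and a microphone. Note the camera's name, "EasyCamera": the code has to open the device by this name (or ID). Device names differ from machine to machine, so adjust it to match your own hardware.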

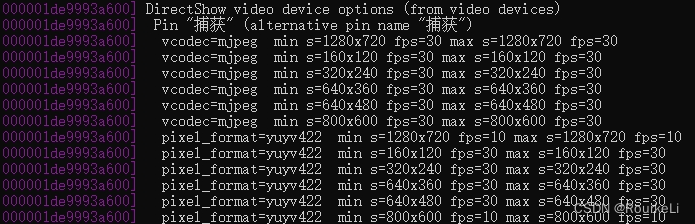

The following command lists the pixel formats, frame rates and resolutions the camera supports. Output capabilities differ from device to device.

ffmpeg -list_options true -f dshow -i video="EasyCamera"

The output shows that the camera delivers frames in the yuyv422 pixel format, and that above 800x600 it can output at most 10 frames per second.
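The device name and the chosen resolution end up as two strings in the code further down: the dshow input URL (`video=<name>`) and the `video_size` option, whose documented form is `WIDTHxHEIGHT`. The following is a minimal sketch of building both; the helper names `makeDshowVideoUrl` and `makeVideoSize` are made up for illustration and are not part of FFmpeg or of the original code.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds the dshow input URL for a video device,
// e.g. "video=EasyCamera".
std::string makeDshowVideoUrl(const std::string& deviceName)
{
    return "video=" + deviceName;
}

// Hypothetical helper: formats a resolution as "WIDTHxHEIGHT",
// the documented form of the video_size option.
std::string makeVideoSize(int width, int height)
{
    assert(width > 0 && height > 0);
    return std::to_string(width) + "x" + std::to_string(height);
}
```

The first result would be passed as the URL to `avformat_open_input`, the second as the value of the `video_size` entry in the options dictionary.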

Code implementation

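An earlier article wrapped yuv420p data directly into an mp4 file; this time the only extra work is converting the data captured from the camera to yuv420p. In code, the camera data arrives as encoded packets (AVPacket) rather than frames, so it must first be decoded to obtain raw video frames, and only then can the pixel format be converted.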
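To make the pixel format conversion step concrete, here is a small standalone sketch of what going from packed yuyv422 to planar yuv420p means. It is not how the article's code does it (that work is delegated to libswscale, which uses its own filters); the struct `Yuv420pImage` and the function `yuyv422ToYuv420p` are illustrative names, and the sketch assumes even dimensions and a tightly packed source buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Planar YUV 4:2:0 image: full-resolution Y plane, quarter-resolution U and V planes.
struct Yuv420pImage {
    int width = 0;
    int height = 0;
    std::vector<uint8_t> y, u, v;
};

// Converts packed YUYV 4:2:2 (bytes Y0 U Y1 V for every pair of pixels) to planar 4:2:0.
// Chroma is averaged over each pair of rows. Assumes even width and height and a
// source stride of exactly width * 2 bytes.
Yuv420pImage yuyv422ToYuv420p(const std::vector<uint8_t>& src, int width, int height)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    assert(src.size() == static_cast<size_t>(width) * height * 2);

    Yuv420pImage dst;
    dst.width = width;
    dst.height = height;
    dst.y.resize(static_cast<size_t>(width) * height);
    dst.u.resize(static_cast<size_t>(width / 2) * (height / 2));
    dst.v.resize(dst.u.size());

    const size_t stride = static_cast<size_t>(width) * 2;

    // Luma: every second byte of the packed data, copied unchanged.
    for (int row = 0; row < height; ++row) {
        const uint8_t* line = src.data() + row * stride;
        for (int col = 0; col < width; ++col)
            dst.y[static_cast<size_t>(row) * width + col] = line[col * 2];
    }

    // Chroma: one U and one V per 2x2 block, averaged from two source rows.
    for (int row = 0; row < height; row += 2) {
        const uint8_t* top = src.data() + row * stride;
        const uint8_t* bottom = top + stride;
        for (int pair = 0; pair < width / 2; ++pair) {
            const size_t out = static_cast<size_t>(row / 2) * (width / 2) + pair;
            dst.u[out] = static_cast<uint8_t>((top[pair * 4 + 1] + bottom[pair * 4 + 1] + 1) / 2);
            dst.v[out] = static_cast<uint8_t>((top[pair * 4 + 3] + bottom[pair * 4 + 3] + 1) / 2);
        }
    }
    return dst;
}
```

The halved vertical chroma resolution is the only information lost in this direction, which is why the conversion is cheap compared with decoding or encoding.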

Worker thread code
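One detail of the worker thread is worth isolating before the full listing: each frame's pts is derived from a running frame counter, by rescaling from units of 1/fps into the stream's time base. The listing uses `av_rescale_q` for this. The sketch below reproduces the arithmetic in plain C++ so it can be checked by hand; `Rational`, `rescale` and `framePts` are illustrative names, the time bases in the usage note are example values, and unlike the FFmpeg function this version has no overflow protection.

```cpp
#include <cassert>
#include <cstdint>

// Minimal stand-in for a rational number such as a time base (num/den seconds).
struct Rational {
    int64_t num;
    int64_t den;
};

// Rescales `value` from time base `from` to time base `to`:
// value * from / to, rounded to the nearest integer.
// Handles only non-negative values and positive rationals.
int64_t rescale(int64_t value, Rational from, Rational to)
{
    assert(value >= 0 && from.num > 0 && from.den > 0 && to.num > 0 && to.den > 0);
    const int64_t numerator = value * from.num * to.den;
    const int64_t denominator = from.den * to.num;
    return (numerator + denominator / 2) / denominator;
}

// pts of the frame with the given index when frames are 1/fps seconds apart.
int64_t framePts(int64_t frameIndex, int fps, Rational streamTimeBase)
{
    return rescale(frameIndex, Rational{1, fps}, streamTimeBase);
}
```

For example, with 30 fps and a time base of 1/90000, consecutive frames are 3000 ticks apart; with a time base of 1/10000000, frame 30 lands exactly on 10000000, that is, one second.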

#include "cworkthread.h"
#include <qdebug.h>
extern "C"{
#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libavutil/avutil.h>
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
}
#include "cscalevideoframe.h"

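// Logs the FFmpeg error text for a negative return code and leaves the calling function.
// Note: the early return does not release anything allocated so far.
#define PRINT_ERROR(ret)\
    if (ret < 0) {\
        char buf[100]={0};\
        av_strerror(ret,buf,sizeof(buf));\
        qDebug()<<buf;\
        return ;\
    }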

CWorkThread::CWorkThread(QObject* parent):QThread(parent)
{
}

CWorkThread::~CWorkThread()
{
    disconnect();
    stop();
    quit();
    wait();
}

void CWorkThread::stop()
{
    if(false == isInterruptionRequested())
        requestInterruption();
}

int createVideoInput(AVFormatContext*& pContext)
{
    // Get the input format object
    const AVInputFormat *fmt = av_find_input_format("dshow");
    // Format context used to open the device
    pContext = avformat_alloc_context();
    // Device name
    //const char * deviceName = "audio=virtual-audio-capturer";
    const char * deviceName ="video=EasyCamera";// "video=ASUS USB2.0 WebCam";
    AVDictionary *options = nullptr; // must start as nullptr before the first av_dict_set
    av_dict_set(&options,"video_size","640x480",0);
    //av_dict_set(&options,"pixel_format","yuyv422",0);
    //av_dict_set(&options,"framerate","30",0);
    //av_dict_set_int(&options,"framerate",30,0);
    int ret = avformat_open_input(&pContext,deviceName,fmt,&options);
    av_dict_free(&options); // release any entries the demuxer did not consume
    return ret;
}

int createVideoDecoder(AVCodecContext*& pDecoderCtx,AVStream* videoStream)
{
    const AVCodec* pDecoder = avcodec_find_decoder(videoStream->codecpar->codec_id);
    if (pDecoder == nullptr)
        return AVERROR_DECODER_NOT_FOUND;
    pDecoderCtx = avcodec_alloc_context3(pDecoder);
    if (pDecoderCtx == nullptr)
        return AVERROR(ENOMEM);
    int ret = avcodec_parameters_to_context(pDecoderCtx,videoStream->codecpar);
    if (ret < 0)
        return ret;
    // Open the decoder
    ret = avcodec_open2(pDecoderCtx,pDecoder,nullptr);
    return ret;
}

int createVideoEncoder(AVCodecContext*& pEncoderCtx,AVStream* videoStream)
{
    AVCodecID codecId = AV_CODEC_ID_H264;
    const AVCodec* pEncoder = avcodec_find_encoder(codecId);
    if (pEncoder == nullptr)
        return AVERROR_ENCODER_NOT_FOUND;
    pEncoderCtx = avcodec_alloc_context3(pEncoder);
    if (pEncoderCtx == nullptr)
        return AVERROR(ENOMEM);
    pEncoderCtx->codec_id = codecId;
    //codecCtx->bit_rate = 400000;
    pEncoderCtx->pix_fmt = *pEncoder->pix_fmts;// first format the encoder lists, typically AV_PIX_FMT_YUV420P
    pEncoderCtx->width = videoStream->codecpar->width;
    pEncoderCtx->height = videoStream->codecpar->height;
    pEncoderCtx->time_base = videoStream->time_base;
    pEncoderCtx->framerate = videoStream->r_frame_rate;
    pEncoderCtx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    int ret = avcodec_open2(pEncoderCtx,pEncoder,nullptr);
    return ret;
}

int createOutputFormat( AVFormatContext *&pOutFormatCtx,const AVCodecContext* pEncoderCtx
                        ,AVStream*videoStream,const char* outFileName)
{
    int ret = avformat_alloc_output_context2(&pOutFormatCtx,NULL,"mp4",outFileName);
    if (ret < 0)
        return ret;
    /* Create the output stream */
    AVStream * outVideoStream = avformat_new_stream(pOutFormatCtx,pEncoderCtx->codec);
    if (outVideoStream == nullptr)
        return AVERROR(ENOMEM);
    ret = avcodec_parameters_from_context(outVideoStream->codecpar,pEncoderCtx);
    if (ret < 0)
        return ret;
    outVideoStream->time_base = av_inv_q(videoStream->r_frame_rate);
    /* Open the output file; stop here on failure instead of overwriting the error */
    ret = avio_open(&pOutFormatCtx->pb, outFileName, AVIO_FLAG_WRITE);
    if (ret < 0)
        return ret;
    /* Write the file header */
    ret = avformat_write_header(pOutFormatCtx, NULL);
    return ret;
}

void CWorkThread::run()
{
    avdevice_register_all();
    // Open the camera device
    AVFormatContext* pContext = nullptr;
    int ret = createVideoInput(pContext);
    PRINT_ERROR(ret);
    // Find the video stream (the last argument is an int flags value, not a pointer)
    int videoStreamId = av_find_best_stream(pContext, AVMEDIA_TYPE_VIDEO, -1, -1, nullptr, 0);
    PRINT_ERROR(videoStreamId);
    AVStream* videoStream = pContext->streams[videoStreamId];
    int fps = av_q2d(videoStream->r_frame_rate);
    AVRational videoFrameRate{1,fps}; // duration of one frame: 1/fps seconds
    /* Initialize the decoder context */
    AVCodecContext* pDecoderCtx = nullptr;
    ret = createVideoDecoder(pDecoderCtx,videoStream);
    PRINT_ERROR(ret);
    /* Find the encoder and initialize the encoder context */
    AVCodecContext* pEncoderCtx = nullptr;
    ret = createVideoEncoder(pEncoderCtx,videoStream);
    PRINT_ERROR(ret);
    /* Initialize the output context */
    AVFormatContext *pOutFormatCtx = nullptr;
    const char* outFileName = "d:/outcamera.mp4";
    ret = createOutputFormat(pOutFormatCtx,pEncoderCtx,videoStream,outFileName);
    PRINT_ERROR(ret);
    AVPacket* reEncodecdPkt = av_packet_alloc(); // holds the re-encoded data
    int videoFrameCount =0; // number of decoded video frames
    AVPacket* pVideoPkt = av_packet_alloc(); // packet read from the camera, before decoding
    AVFrame* pVideoFrame = av_frame_alloc(); // decoded video frame
    CScaleVideoFrame frameScaler;
    frameScaler.setTarget(pEncoderCtx->width,pEncoderCtx->height,pEncoderCtx->pix_fmt);

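    while (!isInterruptionRequested()) {
        int res = av_read_frame(pContext,pVideoPkt);
        if(res == 0)
        {
            // Skip packets that do not belong to the video stream
            if(pVideoPkt->stream_index != videoStreamId)
            {
                av_packet_unref(pVideoPkt);
                continue;
            }
            // Decode
            int ret = avcodec_send_packet(pDecoderCtx, pVideoPkt);
            av_packet_unref(pVideoPkt); // the decoder keeps its own reference
            PRINT_ERROR(ret);
            while(!isInterruptionRequested())
            {
                ret = avcodec_receive_frame(pDecoderCtx, pVideoFrame);
                if (ret < 0) // EAGAIN/EOF: more input needed; anything else: decode error
                {
                    break;
                }
                // Convert the pixel format
                AVFrame* scaled_frame = frameScaler.getScaledFrame(pVideoFrame);
                if(scaled_frame == NULL)
                {
                    break;
                }
                // Compute the pts from the frame rate and the time base
                auto pts = av_rescale_q(videoFrameCount, videoFrameRate, videoStream->time_base);
                scaled_frame->pts = pts;
                ++videoFrameCount;
                // Encode
                ret = avcodec_send_frame(pEncoderCtx, scaled_frame);
                PRINT_ERROR(ret);
                // Drain every packet the encoder has ready
                while (avcodec_receive_packet(pEncoderCtx, reEncodecdPkt) == 0)
                {
                    // Encoder timestamps use the encoder time base; the muxer expects the output stream's
                    av_packet_rescale_ts(reEncodecdPkt, pEncoderCtx->time_base, pOutFormatCtx->streams[0]->time_base);
                    reEncodecdPkt->stream_index = 0;
                    // Write to the file
                    ret = av_interleaved_write_frame(pOutFormatCtx, reEncodecdPkt);
                    PRINT_ERROR(ret);
                }
            }
        }
        else if(res == AVERROR(ERANGE))
        {
            continue;
        }
        else
            break;
    }
    // Flush the encoder so that frames it still buffers reach the file
    avcodec_send_frame(pEncoderCtx, nullptr);
    while (avcodec_receive_packet(pEncoderCtx, reEncodecdPkt) == 0)
    {
        av_packet_rescale_ts(reEncodecdPkt, pEncoderCtx->time_base, pOutFormatCtx->streams[0]->time_base);
        reEncodecdPkt->stream_index = 0;
        av_interleaved_write_frame(pOutFormatCtx, reEncodecdPkt);
    }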

    qDebug()<<"frame count"<<videoFrameCount;
    av_write_trailer(pOutFormatCtx);
    avio_close(pOutFormatCtx->pb);
    av_packet_free(&reEncodecdPkt);
    av_packet_free(&pVideoPkt); // free the packet itself, not just its payload
    av_frame_free(&pVideoFrame);
    avcodec_free_context(&pEncoderCtx);
    avcodec_free_context(&pDecoderCtx);
    avformat_free_context(pOutFormatCtx);
    avformat_close_input(&pContext);
}

Format conversion

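CScaleVideoFrame is a small wrapper class for pixel format conversion. The conversion logic is fairly self-contained and calls quite a few APIs, so keeping it inline in the worker thread would hurt readability. The main code of the class is shown below.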

#ifndef CSCALEVIDEOFRAME_H
#define CSCALEVIDEOFRAME_H
extern "C"{
#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libavutil/avutil.h>
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
#include <libswscale/swscale.h>
#include <libswresample/swresample.h>
#include "libavutil/audio_fifo.h"
}
#include <mutex>

class CScaleVideoFrame
{
public:
    CScaleVideoFrame();
    ~CScaleVideoFrame();
    void setTarget(int dstW,int dstH,AVPixelFormat dstPixFmt=AV_PIX_FMT_RGB24);
    AVFrame* getScaledFrame(AVFrame* srcFrame);
    int dstWidth(){return m_dst_w;}
    int dstHeight(){return m_dst_h;}
private:
    void initSwr();
private:
    // Pointers and sizes start out in a defined state so cleanup is always safe
    uint8_t *m_dst_data = nullptr;
    int m_dst_bufSize = 0;
    int m_dst_linesize[4] = {0};
    int m_src_w = 0,m_src_h = 0;
    enum AVPixelFormat m_src_pix_fmt = AV_PIX_FMT_NONE;
    int m_dst_w = -1, m_dst_h = -1;
    enum AVPixelFormat m_dst_pix_fmt = AV_PIX_FMT_RGB24;
    struct SwsContext *m_sws_ctx=nullptr;
    AVFrame* m_dst_frame = nullptr;
    std::mutex m_contextMtx;
};
#endif // CSCALEVIDEOFRAME_H

#include "cscalevideoframe.h"

CScaleVideoFrame::CScaleVideoFrame():
    m_dst_data(nullptr),
    m_src_w(0),
    m_src_h(0),
    m_dst_w(-1),
    m_dst_h(-1),
    m_dst_pix_fmt(AVPixelFormat::AV_PIX_FMT_RGB24),
    m_dst_frame(nullptr)
{
}

CScaleVideoFrame::~CScaleVideoFrame()
{
    if(m_sws_ctx)
    {
        sws_freeContext(m_sws_ctx);
        //av_freep(&src_data[0]);
        av_freep(&m_dst_data);
        av_frame_free(&m_dst_frame);
    }
}

void CScaleVideoFrame::setTarget(int dstW, int dstH, AVPixelFormat dstPixFmt)
{
    if( m_dst_w != dstW ||
        m_dst_h != dstH ||
        m_dst_pix_fmt != dstPixFmt)
    {
        std::unique_lock<std::mutex> lck(m_contextMtx);
        m_dst_w = dstW;
        m_dst_h = dstH;
        m_dst_pix_fmt = dstPixFmt; // set before initSwr() so the new context uses the new format
        initSwr();
    }
}

AVFrame *CScaleVideoFrame::getScaledFrame(AVFrame *srcFrame)
{
    if(srcFrame == nullptr)
        return nullptr;
    if(srcFrame->width != m_src_w ||
       srcFrame->height != m_src_h ||
       srcFrame->format != m_src_pix_fmt)
    {
        std::unique_lock<std::mutex> lck(m_contextMtx);
        m_src_w = srcFrame->width;
        m_src_h = srcFrame->height;
        m_src_pix_fmt = (AVPixelFormat)srcFrame->format;
        initSwr();
    }
    std::unique_lock<std::mutex> lck(m_contextMtx);
    if(m_sws_ctx != nullptr)
    {
        sws_scale(m_sws_ctx, (const uint8_t **)srcFrame->data,
                  srcFrame->linesize, 0, srcFrame->height, m_dst_frame->data, m_dst_frame->linesize);
        m_dst_frame->width = m_dst_w;
        m_dst_frame->height = m_dst_h;
        m_dst_frame->format = m_dst_pix_fmt;
        return m_dst_frame;
    }
    return nullptr;
}

void CScaleVideoFrame::initSwr()
{
    if(m_sws_ctx != nullptr)
    {
        sws_freeContext(m_sws_ctx);
        m_sws_ctx = nullptr; // avoid a dangling pointer if we return early below
        av_freep(&m_dst_data); // pass the address of the pointer, not of its first byte
        av_frame_free(&m_dst_frame);
    }
    if(m_dst_h == -1 && m_dst_w == -1)
    {
        m_dst_w = m_src_w;
        m_dst_h = m_src_h;
    }
    if(m_src_pix_fmt == AV_PIX_FMT_NONE)
    {
        return;
    }
    m_sws_ctx = sws_getContext(m_src_w,m_src_h,
                               m_src_pix_fmt,
                               m_dst_w, m_dst_h, m_dst_pix_fmt,
                               SWS_BICUBIC, NULL, NULL, NULL);
    if(m_sws_ctx == nullptr)
    {
        return;
    }
    m_dst_frame = av_frame_alloc();
    m_dst_bufSize = av_image_get_buffer_size(m_dst_pix_fmt, m_dst_w,m_dst_h, 1);
    // Allocate the destination buffer
    m_dst_data = (uint8_t*)av_malloc(m_dst_bufSize * sizeof(uint8_t));
    av_image_fill_arrays(m_dst_frame->data, m_dst_frame->linesize, m_dst_data
                         , m_dst_pix_fmt, m_dst_w, m_dst_h, 1);
}
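In `initSwr()`, `av_image_get_buffer_size` and `av_image_fill_arrays` decide how large the destination buffer is and where each plane starts inside it. For the yuv420p target used in this article, with alignment 1, that layout can be worked out by hand. The following sketch does so in plain C++ as an illustration; `Yuv420pLayout` and `computeYuv420pLayout` are hypothetical names, and the code covers only this one pixel format.

```cpp
#include <cassert>
#include <cstddef>

// Where the three planes of a tightly packed (alignment 1) YUV 4:2:0 image live
// inside one contiguous buffer.
struct Yuv420pLayout {
    int linesize[3];      // bytes per row for Y, U, V
    size_t planeSize[3];  // bytes per plane
    size_t offset[3];     // start of each plane within the buffer
    size_t totalSize;     // size of the whole buffer
};

Yuv420pLayout computeYuv420pLayout(int width, int height)
{
    assert(width > 0 && height > 0);
    // Chroma planes are subsampled by 2 in both directions, rounding up for odd sizes.
    const int chromaW = (width + 1) / 2;
    const int chromaH = (height + 1) / 2;

    Yuv420pLayout layout{};
    layout.linesize[0] = width;
    layout.linesize[1] = chromaW;
    layout.linesize[2] = chromaW;
    layout.planeSize[0] = static_cast<size_t>(width) * height;
    layout.planeSize[1] = static_cast<size_t>(chromaW) * chromaH;
    layout.planeSize[2] = layout.planeSize[1];
    layout.offset[0] = 0;
    layout.offset[1] = layout.planeSize[0];
    layout.offset[2] = layout.offset[1] + layout.planeSize[1];
    layout.totalSize = layout.offset[2] + layout.planeSize[2];
    return layout;
}
```

For 640x480 this gives a 460800-byte buffer, that is, width * height * 3 / 2, with the U plane starting at byte 307200 and the V plane at byte 384000.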
