一、逻辑斯谛回归用于解决什么问题?

逻辑斯谛回归是经典分类方法,用于解决分类问题。二项逻辑斯谛回归可以解决二分类问题。逻辑回归假设数据服从伯努利分布,通过极大化似然函数的方法,运用梯度下降来求解参数来解决二分类问题。

二、逻辑斯谛回归为什么可以解决分类问题?

逻辑斯谛分布函数为:

F

(

x

)

=

P

(

X

≤

x

)

=

1

/

1

+

e

−

(

−

x

−

μ

)

/

γ

F(x)=P(X \le x)=1/1+e^{-(-x-\mu)/\gamma}

F(x)=P(X≤x)=1/1+e−(−x−μ)/γ

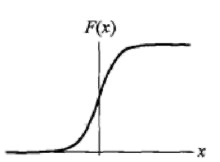

分布函数图形是一条S型曲线,以

(

μ

,

1

/

2

)

(\mu,1/2)

(μ,1/2)为中心对称。

二项逻辑斯谛回归模型的条件概率分布如下:

P

(

Y

=

1

∣

x

)

=

e

x

p

(

w

⋅

x

+

b

)

1

+

e

x

p

(

w

⋅

x

+

b

)

P(Y=1|x)=\frac{exp(w \cdot x+b)}{1+exp(w \cdot x + b)}

P(Y=1∣x)=1+exp(w⋅x+b)exp(w⋅x+b)

P

(

Y

=

0

∣

x

)

=

1

1

+

e

x

p

(

w

⋅

x

+

b

)

P(Y=0|x)=\frac{1}{1+exp(w \cdot x + b)}

P(Y=0∣x)=1+exp(w⋅x+b)1

对于给定的输入实例

x

x

x,按照上述两式子可以求得两个概率,比较两个条件概率值的大小,将

x

x

x分到概率值较大的那一类。

若记

w

=

(

w

(

1

)

,

w

(

2

)

,

.

.

.

,

w

(

n

)

,

b

)

T

w=(w^{(1)},w^{(2)},...,w^{(n)},b)^{T}

w=(w(1),w(2),...,w(n),b)T,

x

=

(

x

(

1

)

,

x

(

2

)

,

.

.

.

,

x

(

n

)

,

1

)

T

x=(x^{(1)},x^{(2)},...,x^{(n)},1)^{T}

x=(x(1),x(2),...,x(n),1)T,逻辑斯谛回归模型化为:

P

(

Y

=

1

∣

x

)

=

e

x

p

(

w

⋅

x

)

1

+

e

x

p

(

w

⋅

x

)

(

1

)

P(Y=1|x)=\frac{exp(w \cdot x)}{1+exp(w \cdot x)}\quad(1)

P(Y=1∣x)=1+exp(w⋅x)exp(w⋅x)(1)

P

(

Y

=

0

∣

x

)

=

1

1

+

e

x

p

(

w

⋅

x

)

(

2

)

P(Y=0|x)=\frac{1}{1+exp(w \cdot x)}\quad(2)

P(Y=0∣x)=1+exp(w⋅x)1(2)

一个事件的几率是指该事件发生的概率与该事件不发生的概率的比值。如果事件发生的概率是

p

p

p,那么该事件的几率是

p

1

−

p

\frac{p}{1-p}

1−pp,逻辑斯谛回归模型的核心是

l

o

g

i

t

logit

logit函数,该函数为:

l

o

g

i

t

(

p

)

=

l

o

g

p

1

−

p

logit(p)=log\frac{p}{1-p}

logit(p)=log1−pp

将函数代入

P

(

Y

=

1

∣

x

)

=

e

x

p

(

w

⋅

x

)

1

+

e

x

p

(

w

⋅

x

)

P(Y=1|x)=\frac{exp(w \cdot x)}{1+exp(w \cdot x)}

P(Y=1∣x)=1+exp(w⋅x)exp(w⋅x)

同时两边取对数得:

l

n

P

(

Y

=

1

∣

x

)

1

−

P

(

Y

=

1

∣

x

)

=

w

⋅

x

ln\frac{P(Y=1|x)}{1-P(Y=1|x)}=w \cdot x

ln1−P(Y=1∣x)P(Y=1∣x)=w⋅x

通过极大似然估计求解对应参数,将分类问题转化为概率问题映射至

(

0

,

1

)

(0,1)

(0,1)区间。线性函数值越接近正无穷,概率值就越接近

1

1

1,线性函数值越接近负无穷,概率值就越接近

0

0

0。

三、如果求解逻辑斯谛模型实现二分类?

1.记

h

θ

(

x

)

=

g

(

θ

T

x

)

=

1

1

+

e

−

θ

T

x

h_\theta(x)=g(\theta^Tx)=\frac{1}{1+e^{-\theta^Tx}}

hθ(x)=g(θTx)=1+e−θTx1,其中

g

(

z

)

=

1

1

+

e

−

z

g(z)=\frac{1}{1+e^{-z}}

g(z)=1+e−z1,

g

′

(

z

)

=

g

(

z

)

(

1

−

g

(

z

)

)

g^{'}(z)=g(z)(1-g(z))

g′(z)=g(z)(1−g(z))则:

P

(

y

=

1

∣

x

;

θ

)

=

h

θ

(

x

)

P

(

y

=

0

∣

x

;

θ

)

=

1

−

h

θ

(

x

)

P(y=1|x;\theta)=h_\theta(x)\\P(y=0|x;\theta)=1-h_\theta(x)

P(y=1∣x;θ)=hθ(x)P(y=0∣x;θ)=1−hθ(x)

2.观察上述两个式子,发现可以将它们合并成一条式子:

P

(

y

∣

x

;

θ

)

=

(

h

θ

(

x

)

)

y

(

1

−

h

θ

(

x

)

)

1

−

y

P(y|x;\theta)=(h_\theta(x))^y(1-h_\theta(x))^{1-y}

P(y∣x;θ)=(hθ(x))y(1−hθ(x))1−y

当

y

=

1

y=1

y=1时

P

(

y

=

1

∣

x

;

θ

)

=

h

θ

(

x

)

P(y=1|x;\theta)=h_\theta(x)

P(y=1∣x;θ)=hθ(x);当

y

=

0

y=0

y=0时

P

(

y

=

0

∣

x

;

θ

)

=

1

−

h

θ

(

x

)

P(y=0|x;\theta)=1-h_\theta(x)

P(y=0∣x;θ)=1−hθ(x)

3.似然函数: L ( θ ) = ∏ i = 0 n ( h θ ( x ( i ) ) ) y ( i ) ( 1 − h θ ( x ( i ) ) ) 1 − y ( i ) L(\theta)=\prod \limits_{i=0}^{n}(h_\theta(x^{(i)}))^{y^{(i)}}(1-h_\theta(x^{(i)}))^{1-y^{(i)}} L(θ)=i=0∏n(hθ(x(i)))y(i)(1−hθ(x(i)))1−y(i)

4.似然函数两边同时取对数: l n L ( θ ) = l ( θ ) = ∑ i = 1 n y ( i ) l o g h θ ( x ( i ) ) + ( 1 − y ( i ) ) l o g ( 1 − h θ ( x ( i ) ) ) lnL(\theta)=l(\theta)=\sum \limits_{i=1}^{n}y^{(i)}logh_\theta(x^{(i)})+(1-y^{(i)})log(1-h_\theta(x^{(i)})) lnL(θ)=l(θ)=i=1∑ny(i)loghθ(x(i))+(1−y(i))log(1−hθ(x(i)))

5.目标是最大化似然函数: max θ l ( θ ) \max \limits_{\theta} l(\theta) θmaxl(θ)

6.使用梯度上升算法求解参数 θ \theta θ,参数 θ \theta θ的迭代式为: θ j + 1 = θ j + α ▽ l ( θ ) \theta _{j+1}=\theta _j + \alpha \bigtriangledown l(\theta) θj+1=θj+α▽l(θ)

7.似然函数两边对

θ

\theta

θ求偏导:

▽

l

(

θ

)

=

∂

l

(

θ

)

∂

θ

j

=

(

y

1

g

(

θ

T

x

)

−

(

1

−

y

)

1

1

−

g

(

θ

T

x

)

)

∂

g

(

θ

T

x

)

∂

θ

j

=

(

y

1

g

(

θ

T

x

)

−

(

1

−

y

)

1

1

−

g

(

θ

T

x

)

)

g

(

θ

T

x

)

(

1

−

g

(

θ

T

x

)

)

∂

θ

T

x

∂

θ

j

\bigtriangledown l(\theta) = \frac{\partial l(\theta)}{\partial \theta_j}=(y\frac{1}{g(\theta^Tx)}-(1-y)\frac{1}{1-g(\theta^Tx)})\frac{\partial g(\theta^Tx)}{\partial \theta_j}\\ \qquad \,=(y\frac{1}{g(\theta^Tx)}-(1-y)\frac{1}{1-g(\theta^Tx)})g(\theta^Tx)(1-g(\theta^Tx))\frac{\partial \theta^Tx}{\partial \theta_j}

▽l(θ)=∂θj∂l(θ)=(yg(θTx)1−(1−y)1−g(θTx)1)∂θj∂g(θTx)=(yg(θTx)1−(1−y)1−g(θTx)1)g(θTx)(1−g(θTx))∂θj∂θTx

=

(

y

(

1

−

g

(

θ

T

x

)

)

−

(

1

−

y

)

g

(

θ

T

x

)

)

x

j

\qquad \,=(y(1-g(\theta^Tx))-(1-y)g(\theta^Tx))x_j

=(y(1−g(θTx))−(1−y)g(θTx))xj

=

(

y

−

h

θ

(

x

)

)

x

j

\qquad \,=(y-h_\theta(x))x_j

=(y−hθ(x))xj

8.联合6、7步,可以得到 θ j \theta_j θj的最终更新式子为: θ j + 1 = θ j + α ∑ i = 1 n ( y ( i ) − h θ ( x ( i ) ) ) x j ( i ) \theta_{j+1}=\theta_j+\alpha \sum \limits_{i=1}^{n}(y^{(i)}-h_{\theta}(x^{(i)}))x_j^{(i)} θj+1=θj+αi=1∑n(y(i)−hθ(x(i)))xj(i)

四、逻辑斯谛回归实现二分类的代码

假设输入数据特征x是m行n列,组成一个m*n矩阵

x

=

[

x

00

⋯

x

0

n

⋮

⋱

⋮

x

m

0

⋯

x

m

n

]

x=\begin{bmatrix} {x_{00}}&{\cdots}&{x_{0n}}\\ {\vdots}&{\ddots}&{\vdots}\\ {x_{m0}}&{\cdots}&{x_{mn}}\\ \end{bmatrix}

x=⎣⎢⎡x00⋮xm0⋯⋱⋯x0n⋮xmn⎦⎥⎤

数据标签y

y

=

[

y

1

⋯

y

m

]

T

y=\begin{bmatrix} y_1 \cdots y_m \end{bmatrix}^T

y=[y1⋯ym]T

参数

θ

\theta

θ

θ

=

[

θ

1

⋯

θ

m

]

T

\theta=\begin{bmatrix} \theta_1 \cdots \theta_m \end{bmatrix}^T

θ=[θ1⋯θm]T

定义

z

=

θ

T

x

z=\theta^Tx

z=θTx,

g

(

z

)

=

1

/

1

+

e

−

z

g(z)=1/1+e^{-z}

g(z)=1/1+e−z

误差损失为:loss =

h

θ

(

x

)

−

y

=

g

(

z

)

−

y

h_\theta(x)-y = g(z)-y

hθ(x)−y=g(z)−y

在此基础上可以得到参数迭代的向量化式子为:

w

j

+

1

=

w

j

+

α

x

T

l

o

s

s

w_{j+1}=w_j+\alpha x^T loss

wj+1=wj+αxTloss

下面用一个二分类的例子说明, Banknote Dataset(钞票数据集):这是从纸币鉴别过程中的图像里提取的数据,用来预测钞票的真伪的数据集。该数据集中含有1372个样本,每个样本由5个数值型变量构成,4个输入变量和1个输出变量,这是一个二分类问题。

Banknote Dataset可以从

https://archive.ics.uci.edu/ml/datasets/banknote+authentication

\url{https://archive.ics.uci.edu/ml/datasets/banknote+authentication}

https://archive.ics.uci.edu/ml/datasets/banknote+authentication 下载,默认是txt格式,如下是数据集前10行的数据:

3.6216,8.6661,-2.8073,-0.44699,0

4.5459,8.1674,-2.4586,-1.4621,0

3.866,-2.6383,1.9242,0.10645,0

3.4566,9.5228,-4.0112,-3.5944,0

0.32924,-4.4552,4.5718,-0.9888,0

4.3684,9.6718,-3.9606,-3.1625,0

3.5912,3.0129,0.72888,0.56421,0

2.0922,-6.81,8.4636,-0.60216,0

3.2032,5.7588,-0.75345,-0.61251,0

1.5356,9.1772,-2.2718,-0.73535,0

具体代码如下所示:

import random

import numpy as np

import pandas as pd

dataset = pd.read_csv('data_banknote_authentication.txt', header=None)

X = dataset.iloc[:,0:4]

Y = dataset.iloc[:,[4]]

m = X.shape[0]

n = X.shape[1]

theta = np.random.rand(n, 1)

def log_likelihood(h, y):

lik = np.dot(np.log(h).T, y) + np.dot(np.log(1 - h).T, 1 - y)

return lik

def sigmoid(x, theta):

sig = 1 / (1 + np.exp(-np.dot(x, theta)))

return sig

def gradientAscent(alpha, x, loss):

gra = alpha * np.dot(x.T, loss)

return gra

def logistic(X, y, t_):

theta = t_.copy()

for step in range(80000):

h = sigmoid(X, theta)

L_ = log_likelihood(h, y)

loss = y - h

theta += gradientAscent(0.001, X, loss)

return theta

if __name__ == '__main__':

theta_ = logistic(X, Y, theta)

print(theta_)

X = X.values.tolist()

Y = Y.values.tolist()

b = 0

for i in range(1000):

z = 0

j = random.randint(0, m - 1)

for k in range (n):

z = z + X[j][k] * theta_[k]

sum = 1 / (1.0 + np.exp(-z))

if sum > 0.5:

if Y[j][0] == 1:

b = b + 1

else :

if Y[j][0] == 0:

b = b + 1

print("准确率:",b / 1000)

1268

1268

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?