TORCHVISION OBJECT DETECTION FINETUNING TUTORIAL

First, follow the setup steps:

pip install pycocotools

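Download the PennFudanPed.zip dataset and unzip it. The location doesn't matter; just copy its relative path and substitute it into the code.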

https://www.cis.upenn.edu/~jshi/ped_html/PennFudanPed.zip
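If you'd rather script the download, here is a minimal standard-library sketch. The helper name download_and_extract and the destination folder are illustrative choices. Only the URL comes from above.

```python
import os
import urllib.request
import zipfile

DATA_URL = "https://www.cis.upenn.edu/~jshi/ped_html/PennFudanPed.zip"


def download_and_extract(url, dest_dir):
    """Download a zip archive into dest_dir (skipped if it is already there)
    and unpack it in the same folder. Returns dest_dir."""
    os.makedirs(dest_dir, exist_ok=True)
    zip_path = os.path.join(dest_dir, os.path.basename(url))
    if not os.path.exists(zip_path):
        urllib.request.urlretrieve(url, zip_path)  # also accepts file:// URLs
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
    return dest_dir
```

For example, download_and_extract(DATA_URL, "data") should leave a data/PennFudanPed folder, which appears to be the archive's top-level directory. Its relative path is what you pass to PennFudanDataset.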

Error: AttributeError: module 'torch.utils.data' has no attribute 'collate'

Solution: download a few helper files and put them in the same directory as your script:

https://github.com/pytorch/vision/tree/main/references/detection
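The part of utils.py that this fix relies on is collate_fn. As far as can be told from the references code, it is just the one-liner sketched below. Detection images have different sizes, and each target holds a different number of boxes. So instead of stacking the batch into tensors, which the default collate function would try to do, it regroups the samples into a tuple of images and a tuple of targets. The toy batch uses strings and dicts as stand-ins for real tensors.

```python
def collate_fn(batch):
    """Regroup [(img0, tgt0), (img1, tgt1), ...] into
    ((img0, img1, ...), (tgt0, tgt1, ...)) without stacking anything,
    so images of different sizes can share a batch."""
    return tuple(zip(*batch))


# Toy batch: strings stand in for image tensors, dicts for targets
batch = [("img_a", {"image_id": 0}), ("img_b", {"image_id": 1})]
images, targets = collate_fn(batch)
print(images)  # ('img_a', 'img_b')
```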

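~~From here on, it's pitfalls all the way down!~~

Error: (long traceback omitted) the message mentions "freeze_support()". I'd hit this one before, so it hardly counts as a pitfall.

Cause: multiprocessing (the DataLoader's worker processes), not multithreading. I won't dig into it here.

Solution: set num_workers=0 in all three places (the three DataLoaders in the code).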
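num_workers=0 loads the data in the main process. That avoids the error but gives up parallel loading. If you want to keep worker processes, the usual alternative is to move the entry point under an `if __name__ == "__main__":` guard. On Windows, each worker re-imports the main script, and the guard stops it from re-running the training code. Below is a minimal sketch in which a toy TensorDataset stands in for PennFudanDataset. The names build_loader and total are illustrative, not from the tutorial.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def build_loader(num_workers):
    # Toy dataset standing in for PennFudanDataset (illustration only)
    dataset = TensorDataset(torch.arange(10, dtype=torch.float32))
    return DataLoader(dataset, batch_size=2, shuffle=False, num_workers=num_workers)


def total(loader):
    # Each batch is a 1-element sequence holding a tensor of shape (2,)
    return sum(batch[0].sum().item() for batch in loader)


def main():
    # num_workers > 0 is fine here because main() only runs under the guard
    loader = build_loader(num_workers=2)
    print("sum of all samples:", total(loader))


if __name__ == "__main__":
    # Worker processes that re-import this file skip this block,
    # which is what avoids the freeze_support() error.
    main()
```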

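Error: AttributeError: module 'transforms' has no attribute 'ToTensor'. Did you mean: 'Tensor'? (raised at File "...\3.1.py", line 199, in get_transform)

Don't follow the suggestion, or you get TypeError: 'Tensor' object is not callable instead.

Solution:

from torchvision import transforms as T

Now ToTensor() exists. Let's try calling it, shall we?

Error: __call__() takes 2 positional arguments but 3 were given

Cause: one argument too many. The dataset calls its transform as self.transforms(img, target), but torchvision's transforms accept only the image.

Solution: the "transforms" module used here is actually the one we downloaded, not the one in torchvision,

so keep the original import unchanged:

import transforms as T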

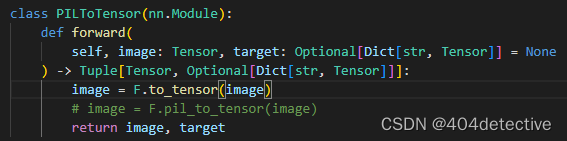
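Here is why the signatures clash. PennFudanDataset calls self.transforms(img, target), so every transform in the chain must accept and return an (image, target) pair, as the Compose in the downloaded transforms.py does. torchvision's transforms, such as T.ToTensor(), take only the image, hence "takes 2 positional arguments but 3 were given". The pure-Python sketch below reproduces both conventions. Double and ImageOnly are made-up classes for illustration.

```python
class Compose:
    """Pair-aware Compose in the style of the downloaded transforms.py:
    every transform receives and returns an (image, target) pair."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, image, target):
        for t in self.transforms:
            image, target = t(image, target)
        return image, target


class Double:
    """Made-up image-only transform, shaped like torchvision's: __call__(self, image)."""

    def __call__(self, image):
        return image * 2


class ImageOnly:
    """Made-up adapter: applies an image-only transform and passes the target through."""

    def __init__(self, fn):
        self.fn = fn

    def __call__(self, image, target):
        return self.fn(image), target


def run(transforms, image, target):
    """Apply the chain, returning the TypeError message if the signatures clash."""
    try:
        return Compose(transforms)(image, target)
    except TypeError as err:
        return str(err)


print(run([Double()], 3, {"boxes": []}))             # the "3 were given" TypeError text
print(run([ImageOnly(Double())], 3, {"boxes": []}))  # (6, {'boxes': []})
```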

My guess: the code was updated but the tutorial didn't keep up. Let's see what the transforms module actually contains:

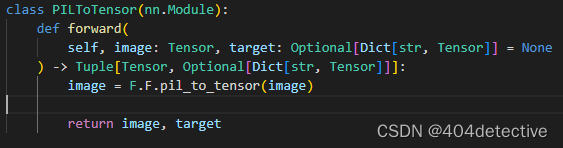

Solution: then let's try T.PILToTensor() instead.

Error: TypeError: Expected input images to be of floating type (in range [0, 1]), but found type torch.uint8 instead

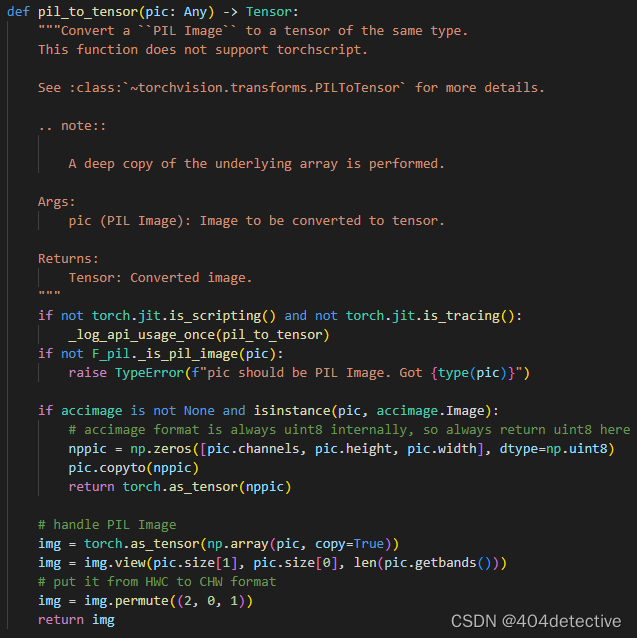

原因:输入格式不一致,让我们看看F.pil_to_tensor()

解决方案:改一下类型怎么样?

报错:依旧如上

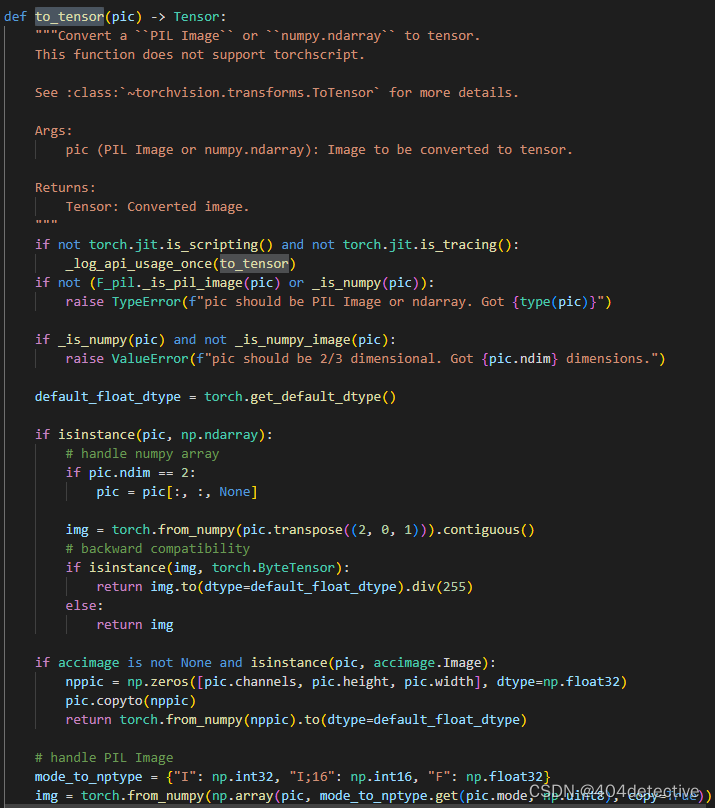

原因:什么玩意不想搞了,等等,上面这不就有个to_tensor,还是float类型,直接用就好了

解决方案:把

image = F.pil_to_tensor(image)

改成

image = F.to_tensor(image)

成功跑起来!

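Summary:

In get_transform, change

transforms.append(T.ToTensor())

to

transforms.append(T.PILToTensor())

and in the downloaded transforms.py (inside the PILToTensor class), change

image = F.pil_to_tensor(image)

to

image = F.to_tensor(image)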

Full code:

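# TorchVision object detection finetuning tutorial
# In this tutorial, we will finetune a pre-trained Mask R-CNN model on the Penn-Fudan
# Database for Pedestrian Detection and Segmentation.
# It contains 170 images with 345 instances of pedestrians, and we will use it to illustrate
# how to use the new features in torchvision to train an instance segmentation model
# on a custom dataset.

# 3.1.2 Defining the Dataset
# The reference scripts for training object detection, instance segmentation and person
# keypoint detection make it easy to add new custom datasets.
# The dataset should inherit from the standard torch.utils.data.Dataset class and
# implement __len__ and __getitem__.
# The only requirement is that the dataset's __getitem__ returns:
#   image: a PIL Image of size (H, W)
#   target: a dict containing the following fields
#     boxes (FloatTensor[N, 4]): the coordinates of the N bounding boxes in [x0, y0, x1, y1]
#       format, ranging from 0 to W and 0 to H
#     labels (Int64Tensor[N]): the label for each bounding box. 0 always represents the
#       background class.
#     image_id (Int64Tensor[1]): an image identifier. It should be unique among all the images
#       in the dataset, and is used during evaluation.
#     area (Tensor[N]): the area of the bounding box. This is used during evaluation with the
#       COCO metric, to separate the metric scores between small, medium and large boxes.
#     iscrowd (UInt8Tensor[N]): instances with iscrowd=True are ignored during evaluation.
#     (optional) masks (UInt8Tensor[N, H, W]): the segmentation mask for each object.
#     (optional) keypoints (FloatTensor[N, K, 3]): for each of the N objects, the K keypoints
#       in [x, y, visibility] format that define the object. visibility=0 means the keypoint
#       is not visible. Note that for data augmentation, the notion of flipping a keypoint
#       depends on the data representation, and you will probably need to adapt
#       references/detection/transforms.py for your new keypoint representation.
# If your dataset returns the above, it will work for both training and evaluation, and
# evaluation will use the scripts from pycocotools, which can be installed with
# pip install pycocotools.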

import os
import numpy as np
import torch
from PIL import Image


class PennFudanDataset(torch.utils.data.Dataset):
    def __init__(self, root, transforms):
        self.root = root
        self.transforms = transforms
        # load all image files, sorting them to
        # ensure that they are aligned
        self.imgs = list(sorted(os.listdir(os.path.join(root, "PNGImages"))))
        self.masks = list(sorted(os.listdir(os.path.join(root, "PedMasks"))))

    def __getitem__(self, idx):
        # load images and masks
        img_path = os.path.join(self.root, "PNGImages", self.imgs[idx])
        mask_path = os.path.join(self.root, "PedMasks", self.masks[idx])
        img = Image.open(img_path).convert("RGB")
        # note that we haven't converted the mask to RGB,
        # because each color corresponds to a different instance
        # with 0 being background
        mask = Image.open(mask_path)
        # convert the PIL Image into a numpy array
        mask = np.array(mask)
        # instances are encoded as different colors
        obj_ids = np.unique(mask)
        # first id is the background, so remove it
        obj_ids = obj_ids[1:]

        # split the color-encoded mask into a set
        # of binary masks
        masks = mask == obj_ids[:, None, None]

        # get bounding box coordinates for each mask
        num_objs = len(obj_ids)
        boxes = []
        for i in range(num_objs):
            pos = np.where(masks[i])
            xmin = np.min(pos[1])
            xmax = np.max(pos[1])
            ymin = np.min(pos[0])
            ymax = np.max(pos[0])
            boxes.append([xmin, ymin, xmax, ymax])

        # convert everything into a torch.Tensor
        boxes = torch.as_tensor(boxes, dtype=torch.float32)
        # there is only one class
        labels = torch.ones((num_objs,), dtype=torch.int64)
        masks = torch.as_tensor(masks, dtype=torch.uint8)

        image_id = torch.tensor([idx])
        area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])
        # suppose all instances are not crowd
        iscrowd = torch.zeros((num_objs,), dtype=torch.int64)

        target = {}
        target["boxes"] = boxes
        target["labels"] = labels
        target["masks"] = masks
        target["image_id"] = image_id
        target["area"] = area
        target["iscrowd"] = iscrowd

        if self.transforms is not None:
            img, target = self.transforms(img, target)

        return img, target

    def __len__(self):
        return len(self.imgs)
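The mask handling in __getitem__ above is the least obvious part. Here the same steps are pulled out into a standalone numpy function and run on a hand-made 5x6 mask with two instances. mask_to_targets is an illustrative name. Like the original, it assumes id 0 (background) is present in the mask. Note that the area is computed from corner coordinates, so a box spanning pixel columns 1 to 3 gets a width of 2, not 3.

```python
import numpy as np


def mask_to_targets(mask):
    """Same steps as PennFudanDataset.__getitem__, on a numpy array:
    colour-encoded instance mask (0 = background) -> binary masks, boxes, areas."""
    obj_ids = np.unique(mask)[1:]           # drop the first id, assumed to be background 0
    masks = mask == obj_ids[:, None, None]  # (N, H, W) booleans, one mask per instance
    boxes = []
    for m in masks:
        ys, xs = np.where(m)                 # rows are y, columns are x
        boxes.append([xs.min(), ys.min(), xs.max(), ys.max()])
    boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4)
    areas = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])
    return masks, boxes, areas


# Hand-made 5x6 mask with two pedestrians encoded as ids 1 and 2
toy = np.zeros((5, 6), dtype=np.uint8)
toy[1:3, 1:4] = 1  # instance 1: rows 1-2, columns 1-3
toy[2:5, 4:6] = 2  # instance 2: rows 2-4, columns 4-5
masks, boxes, areas = mask_to_targets(toy)
print(boxes.tolist())  # [[1.0, 1.0, 3.0, 2.0], [4.0, 2.0, 5.0, 4.0]]
print(areas.tolist())  # [2.0, 2.0]
```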

# In this tutorial, we will use Mask R-CNN, which is built on top of Faster R-CNN.
# Faster R-CNN is a model that predicts both bounding boxes and class scores for objects in an image.
# Mask R-CNN adds an extra branch to Faster R-CNN that also predicts a segmentation mask for each instance.

# There are two common situations where one might want to modify one of the models in torchvision's model zoo.
# The first is when we want to start from a pre-trained model and just finetune the last layer.
# The other is when we want to replace the model's backbone with a different one (for faster predictions, for example).

# 1. Finetuning from a pretrained model
# Suppose you want to start from a model pre-trained on COCO and finetune it for your particular classes.
# Here is one possible way of doing it:

# import torchvision
# from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
#
# # load a model pre-trained on COCO
# model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)
#
# # replace the classifier with a new one, that has
# # num_classes which is user-defined
# num_classes = 2  # 1 class (person) + background
# # get number of input features for the classifier
# in_features = model.roi_heads.box_predictor.cls_score.in_features
# # replace the pre-trained head with a new one
# model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

# 2. Modifying the model to use a different backbone

# import torchvision
# from torchvision.models.detection import FasterRCNN
# from torchvision.models.detection.rpn import AnchorGenerator
#
# # load a pre-trained model for classification and return
# # only the features
# backbone = torchvision.models.mobilenet_v2(pretrained=True).features
# # FasterRCNN needs to know the number of
# # output channels in a backbone. For mobilenet_v2, it's 1280
# # so we need to add it here
# backbone.out_channels = 1280
#
# # let's make the RPN generate 5 x 3 anchors per spatial
# # location, with 5 different sizes and 3 different aspect
# # ratios. We have a Tuple[Tuple[int]] because each feature
# # map could potentially have different sizes and
# # aspect ratios
# anchor_generator = AnchorGenerator(sizes=((32, 64, 128, 256, 512),),
#                                    aspect_ratios=((0.5, 1.0, 2.0),))
#
# # let's define what are the feature maps that we will
# # use to perform the region of interest cropping, as well as
# # the size of the crop after rescaling.
# # if your backbone returns a Tensor, featmap_names is expected to
# # be ['0']. More generally, the backbone should return an
# # OrderedDict[Tensor], and in featmap_names you can choose which
# # feature maps to use.
# roi_pooler = torchvision.ops.MultiScaleRoIAlign(featmap_names=['0'],
#                                                 output_size=7,
#                                                 sampling_ratio=2)
#
# # put the pieces together inside a FasterRCNN model
# model = FasterRCNN(backbone,
#                    num_classes=2,
#                    rpn_anchor_generator=anchor_generator,
#                    box_roi_pool=roi_pooler)

# In our case, since the dataset is very small, we want to finetune from a pre-trained model, so we take approach 1.
# We also want to compute instance segmentation masks, so we use Mask R-CNN.

import torchvision
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
from torchvision.models.detection.mask_rcnn import MaskRCNNPredictor


def get_model_instance_segmentation(num_classes):
    # load an instance segmentation model pre-trained on COCO
    # (on torchvision >= 0.13, weights="DEFAULT" replaces the deprecated pretrained=True)
    model = torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=True)

    # get number of input features for the classifier
    in_features = model.roi_heads.box_predictor.cls_score.in_features
    # replace the pre-trained head with a new one
    model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

    # now get the number of input features for the mask classifier
    in_features_mask = model.roi_heads.mask_predictor.conv5_mask.in_channels
    hidden_layer = 256
    # and replace the mask predictor with a new one
    model.roi_heads.mask_predictor = MaskRCNNPredictor(in_features_mask,
                                                       hidden_layer,
                                                       num_classes)

    return model

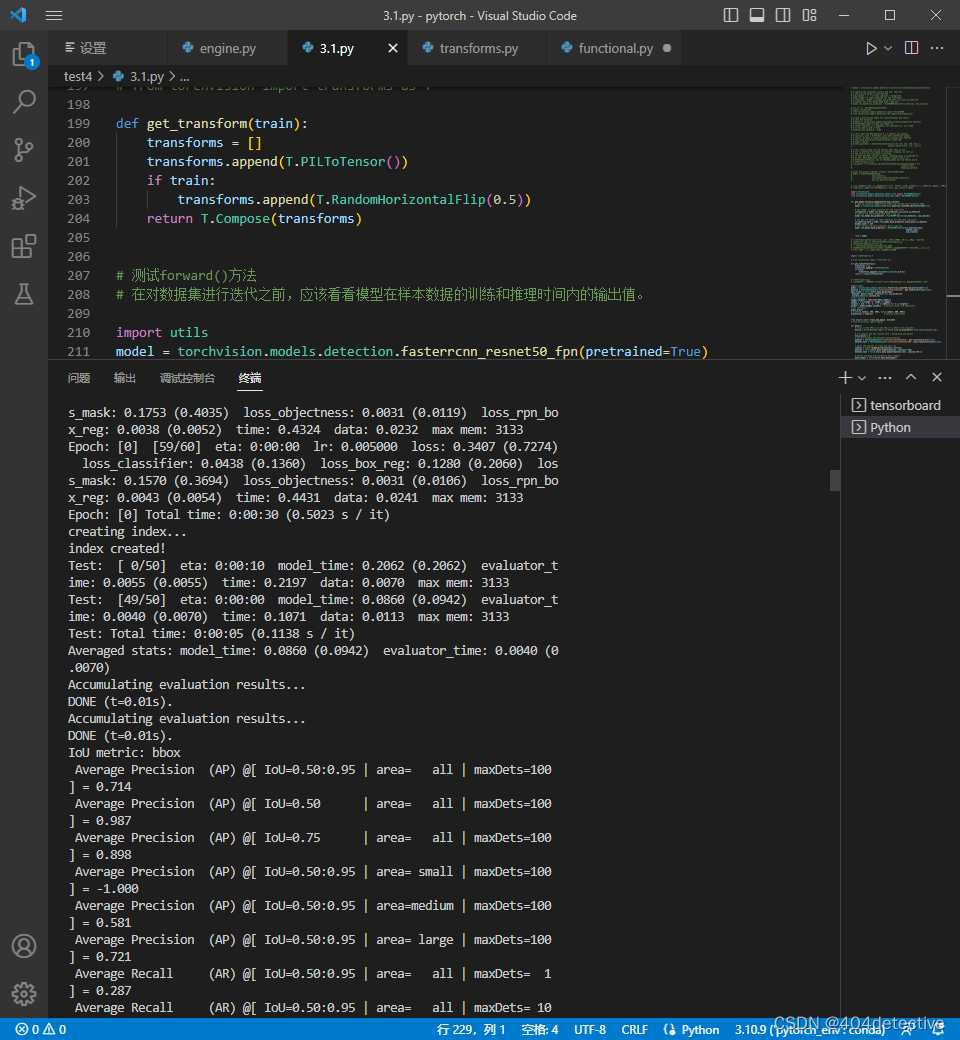

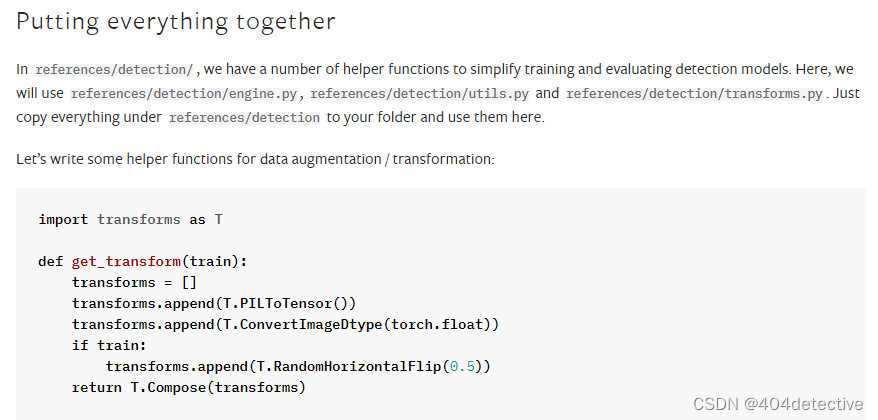

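# references/detection/ contains a number of helper functions that simplify training and evaluating detection models.
# Here we use references/detection/engine.py,
# references/detection/utils.py
# and references/detection/transforms.py.
# Just copy everything under references/detection into your folder and use the files here.

# Let's write some helper functions for data augmentation / transformation.
import transforms as T  # the downloaded references/detection/transforms.py
# from torchvision import transforms as T  # don't: torchvision's transforms take only the image, not (image, target)


def get_transform(train):
    transforms = []
    # T.ToTensor() no longer exists in the downloaded transforms.py; use PILToTensor,
    # with F.pil_to_tensor changed to F.to_tensor inside it (see the summary above)
    transforms.append(T.PILToTensor())
    if train:
        transforms.append(T.RandomHorizontalFlip(0.5))
    return T.Compose(transforms)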
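With train=True, get_transform adds T.RandomHorizontalFlip(0.5). Because the downloaded transforms receive the target too, the flip has to move the boxes and masks along with the image. The arithmetic is sketched below in numpy. The reference RandomHorizontalFlip appears to do the equivalent on tensors, but check your copy of transforms.py. hflip_boxes and hflip_masks are illustrative names.

```python
import numpy as np


def hflip_boxes(boxes, width):
    """Mirror [xmin, ymin, xmax, ymax] boxes for an image of the given width.
    The new xmin comes from the old xmax (and vice versa), so xmin <= xmax still holds."""
    flipped = boxes.copy()
    flipped[:, [0, 2]] = width - boxes[:, [2, 0]]
    return flipped


def hflip_masks(masks):
    """Mirror (N, H, W) masks along the width axis."""
    return masks[..., ::-1]


boxes = np.array([[10.0, 20.0, 30.0, 40.0]])
print(hflip_boxes(boxes, width=100).tolist())  # [[70.0, 20.0, 90.0, 40.0]]
```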

# Testing the forward() method
# Before iterating over the dataset, it's good to see what the model
# returns during training and inference on sample data.
import utils  # the downloaded references/detection/utils.py

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)
# relative path to the unzipped PennFudanPed folder; change it to your own
dataset = PennFudanDataset(os.path.join("test4", "data", "PennFudanPed"), get_transform(train=True))
data_loader = torch.utils.data.DataLoader(
    dataset, batch_size=2, shuffle=True, num_workers=0,
    collate_fn=utils.collate_fn)

# For training
images, targets = next(iter(data_loader))
images = list(image for image in images)
targets = [{k: v for k, v in t.items()} for t in targets]
output = model(images, targets)  # in training mode this returns a dict of losses

# For inference
model.eval()
x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)]
predictions = model(x)  # returns predictions

from engine import train_one_epoch, evaluate  # the downloaded references/detection/engine.py
# from torchvision import engine  # doesn't work: torchvision has no engine module


def main():
    # train on the GPU or on the CPU, if a GPU is not available
    device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

    # our dataset has two classes only - background and person
    num_classes = 2
    # use our dataset and defined transformations
    data_root = os.path.join("test4", "data", "PennFudanPed")  # change to your own path
    dataset = PennFudanDataset(data_root, get_transform(train=True))
    dataset_test = PennFudanDataset(data_root, get_transform(train=False))

    # split the dataset in train and test set
    indices = torch.randperm(len(dataset)).tolist()
    dataset = torch.utils.data.Subset(dataset, indices[:-50])
    dataset_test = torch.utils.data.Subset(dataset_test, indices[-50:])

    # define training and validation data loaders
    data_loader = torch.utils.data.DataLoader(
        dataset, batch_size=2, shuffle=True, num_workers=0,
        collate_fn=utils.collate_fn)

    data_loader_test = torch.utils.data.DataLoader(
        dataset_test, batch_size=1, shuffle=False, num_workers=0,
        collate_fn=utils.collate_fn)

    # get the model using our helper function
    model = get_model_instance_segmentation(num_classes)
    # move model to the right device
    model.to(device)

    # construct an optimizer
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=0.005,
                                momentum=0.9, weight_decay=0.0005)
    # and a learning rate scheduler
    lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                                   step_size=3,
                                                   gamma=0.1)

    # let's train it for 10 epochs
    num_epochs = 10

    for epoch in range(num_epochs):
        # train for one epoch, printing every 10 iterations
        train_one_epoch(model, optimizer, data_loader, device, epoch, print_freq=10)
        # update the learning rate
        lr_scheduler.step()
        # evaluate on the test dataset
        evaluate(model, data_loader_test, device=device)

    print("That's it!")


if __name__ == "__main__":
    main()
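With step_size=3 and gamma=0.1, the StepLR scheduler in main() divides the learning rate by 10 every three epochs. Over the 10 epochs it therefore goes 0.005 (epochs 0-2), 0.0005 (3-5), 0.00005 (6-8) and 0.000005 (epoch 9). The formula is sketched below in plain Python. step_lr is an illustrative helper, not a PyTorch function. The sketch also ignores the warm-up that engine.py's train_one_epoch appears to apply during epoch 0.

```python
def step_lr(base_lr, gamma, step_size, epoch):
    """Learning rate in effect during `epoch` (0-based) for a StepLR-style schedule
    that is stepped once at the end of every epoch, as in main() above."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in range(10):
    print(epoch, step_lr(0.005, 0.1, 3, epoch))
```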

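After writing all this, I took a look at the English documentation, and it had already been updated.

Lesson learned: next time I hit a problem, I'll check the official docs first...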
