1. Scenario

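Scala's functional, chained programming style makes code far more concise than Java, so I still use Scala for Flink development. However, when reading data from Kafka, the job failed with an objenesis exception (NoClassDefFoundError: org/objenesis/strategy/InstantiatorStrategy), even though the code does not use any POJO class.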

2. Exception details

```
D:\Users\Administrator\jdk1.8.0_66\bin\java -Didea.launcher.port=7536 -Didea.launcher.bin.path=D:\Users\Administrator\IDEA15\bin -Dfile.encoding=UTF-8 -classpath D:\Users\Administrator\jdk1.8.0_66\jre\lib\charsets.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\deploy.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\access-bridge-64.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\cldrdata.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\dnsns.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\jaccess.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\jfxrt.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\localedata.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\nashorn.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\sunec.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\sunjce_provider.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\sunmscapi.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\sunpkcs11.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\ext\zipfs.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\javaws.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\jce.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\jfr.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\jfxswt.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\jsse.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\management-agent.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\plugin.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\resources.jar;D:\Users\Administrator\jdk1.8.0_66\jre\lib\rt.jar;D:\software\flink-1.7.2-src\Flink\out\production\Flink;D:\Users\Administrator\scala\lib\scala-actors-2.11.0.jar;D:\Users\Administrator\scala\lib\scala-actors-migration_2.11-1.1.0.jar;D:\Users\Administrator\scala\lib\scala-library.jar;D:\Users\Administrator\scala\lib\scala-parser-combinators_2.11-1.0.4.jar;D:\Users\Administrator\scala\lib\scala-reflect.jar;D:\Users\Administrator\scala\lib\scala-swing_2.11-1.0.2.jar;D:\Users\Administrator\scala\lib\scala-xml_2.11-1.0.4.jar;D:\jar\flink\lz4-1.2.0.jar;D:\jar\flink\avro-1.7.4.jar;D:\jar\flink\gson-2.2.4.jar;D:\jar\flink\minlog-1.2.jar;D:\jar\flink\zinc-0.3.5.jar;D:\jar\flink\junit-3.8.1.jar;D:\jar\flink\kryo-2.24.0.jar;D:\jar\flink\xmlenc-0.52.jar;D:\jar\flink\config-1.3.0.jar;D:\jar\flink\guava-16.0.1.jar;D:\jar\flink\jsr305-1.3.9.jar;D:\jar\flink\log4j-1.2.17.jar;D:\jar\flink\paranamer-2.3.jar;D:\jar\flink\activation-1.1.jar;D:\jar\flink\commons-io-2.2.jar;D:\jar\flink\commons-io-2.4.jar;D:\jar\flink\httpcore-4.2.4.jar;D:\jar\flink\jaxb-api-2.2.2.jar;D:\jar\flink\stax-api-1.0-2.jar;D:\jar\flink\commons-net-3.1.jar;D:\jar\flink\fastjson-1.2.22.jar;D:\jar\flink\jersey-core-1.9.jar;D:\jar\flink\servlet-api-2.5.jar;D:\jar\flink\xml-apis-1.3.04.jar;D:\jar\flink\zookeeper-3.4.6.jar;D:\jar\flink\chill-java-0.7.4.jar;D:\jar\flink\chill_2.11-0.7.4.jar;D:\jar\flink\commons-exec-1.1.jar;D:\jar\flink\commons-lang-2.4.jar;D:\jar\flink\commons-lang-2.6.jar;D:\jar\flink\flink-core-1.6.0.jar;D:\jar\flink\flink-java-1.6.0.jar;D:\jar\flink\httpclient-4.2.5.jar;D:\jar\flink\scopt_2.11-3.5.0.jar;D:\jar\flink\slf4j-api-1.7.16.jar;D:\jar\flink\xercesImpl-2.9.1.jar;D:\jar\flink\commons-cli-1.3.1.jar;D:\jar\flink\commons-codec-1.6.jar;D:\jar\flink\commons-math3-3.5.jar;D:\jar\flink\hadoop-auth-2.6.0.jar;D:\jar\flink\hadoop-hdfs-2.6.0.jar;D:\jar\flink\jersey-client-1.9.jar;D:\jar\flink\netty-3.7.0.Final.jar;D:\jar\flink\api-util-1.0.0-M20.jar;D:\jar\flink\commons-lang3-3.3.2.jar;D:\jar\flink\force-shading-1.6.0.jar;D:\jar\flink\hadoop-client-2.6.0.jar;D:\jar\flink\hadoop-common-2.6.0.jar;D:\jar\flink\protobuf-java-2.5.0.jar;D:\jar\flink\slf4j-log4j12-1.7.5.jar;D:\jar\flink\commons-digester-1.8.jar;D:\jar\flink\curator-client-2.6.0.jar;D:\jar\flink\jcl-over-slf4j-1.5.6.jar;D:\jar\flink\sbt-interface-0.13.5.jar;D:\jar\flink\commons-logging-1.1.3.jar;D:\jar\flink\curator-recipes-2.6.0.jar;D:\jar\flink\flink-hadoop-fs-1.6.0.jar;D:\jar\flink\hadoop-yarn-api-2.6.0.jar;D:\jar\flink\kafka-clients-0.9.0.1.jar;D:\jar\flink\akka-actor_2.11-2.4.20.jar;D:\jar\flink\akka-slf4j_2.11-2.4.20.jar;D:\jar\flink\api-asn1-api-1.0.0-M20.jar;D:\jar\flink\commons-compress-1.4.1.jar;D:\jar\flink\commons-httpclient-3.1.jar;D:\jar\flink\flink-scala_2.11-1.6.0.jar;D:\jar\flink\flink-table_2.11-1.6.0.jar;D:\jar\flink\akka-stream_2.11-2.4.20.jar;D:\jar\flink\apacheds-i18n-2.0.0-M15.jar;D:\jar\flink\curator-framework-2.6.0.jar;D:\jar\flink\flink-annotations-1.6.0.jar;D:\jar\flink\flink-clients_2.11-1.6.0.jar;D:\jar\flink\flink-metrics-core-1.6.0.jar;D:\jar\flink\flink-runtime_2.11-1.6.0.jar;D:\jar\flink\hadoop-annotations-2.6.0.jar;D:\jar\flink\hadoop-yarn-client-2.6.0.jar;D:\jar\flink\hadoop-yarn-common-2.6.0.jar;D:\jar\flink\akka-protobuf_2.11-2.4.20.jar;D:\jar\flink\commons-collections-3.2.2.jar;D:\jar\flink\commons-configuration-1.6.jar;D:\jar\flink\flink-optimizer_2.11-1.6.0.jar;D:\jar\flink\flink-shaded-asm-5.0.4-4.0.jar;D:\jar\flink\ssl-config-core_2.11-0.2.1.jar;D:\jar\flink\flink-shaded-guava-18.0-4.0.jar;D:\jar\flink\mysql-connector-java-5.1.38.jar;D:\jar\flink\backport-util-concurrent-3.1.jar;D:\jar\flink\commons-beanutils-core-1.8.0.jar;D:\jar\flink\flink-shaded-jackson-2.7.9-4.0.jar;D:\jar\flink\flink-streaming-java_2.11-1.6.0.jar;D:\jar\flink\hadoop-yarn-server-common-2.6.0.jar;D:\jar\flink\flink-streaming-scala_2.11-1.6.0.jar;D:\jar\flink\apacheds-kerberos-codec-2.0.0-M15.jar;D:\jar\flink\hadoop-mapreduce-client-app-2.6.0.jar;D:\jar\flink\hadoop-mapreduce-client-core-2.6.0.jar;D:\jar\flink\flink-shaded-netty-4.1.24.Final-4.0.jar;D:\jar\flink\flink-connector-kafka-0.9_2.11-1.6.0.jar;D:\jar\flink\hadoop-mapreduce-client-common-2.6.0.jar;D:\jar\flink\flink-connector-kafka-base_2.11-1.6.0.jar;D:\jar\flink\hadoop-mapreduce-client-shuffle-2.6.0.jar;D:\jar\flink\hadoop-mapreduce-client-jobclient-2.6.0.jar;D:\jar\flink\flink-queryable-state-client-java_2.11-1.6.0.jar;D:\jar\flink-sql-connector-kafka_2.11-1.9.0.jar;D:\Users\Administrator\IDEA15\lib\idea_rt.jar com.intellij.rt.execution.application.AppMain cn.kafka.FlinkKafka

Exception in thread "main" org.apache.flink.runtime.client.JobExecutionException: java.lang.NoClassDefFoundError: org/objenesis/strategy/InstantiatorStrategy
    at org.apache.flink.runtime.minicluster.MiniCluster.executeJobBlocking(MiniCluster.java:623)
    at org.apache.flink.streaming.api.environment.LocalStreamEnvironment.execute(LocalStreamEnvironment.java:123)
    at org.apache.flink.streaming.api.scala.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.scala:654)
    at cn.kafka.FlinkKafka$.main(FlinkKafka.scala:58)
    at cn.kafka.FlinkKafka.main(FlinkKafka.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:497)
    at com.intellij.rt.execution.application.AppMain.main(AppMain.java:144)
Caused by: java.lang.NoClassDefFoundError: org/objenesis/strategy/InstantiatorStrategy
    at org.apache.flink.api.java.typeutils.GenericTypeInfo.createSerializer(GenericTypeInfo.java:90)
    at org.apache.flink.api.java.typeutils.TupleTypeInfo.createSerializer(TupleTypeInfo.java:107)
    at org.apache.flink.api.java.typeutils.TupleTypeInfo.createSerializer(TupleTypeInfo.java:52)
    at org.apache.flink.api.java.typeutils.ListTypeInfo.createSerializer(ListTypeInfo.java:102)
    at org.apache.flink.api.common.state.StateDescriptor.initializeSerializerUnlessSet(StateDescriptor.java:288)
    at org.apache.flink.runtime.state.DefaultOperatorStateBackend.getListState(DefaultOperatorStateBackend.java:734)
    at org.apache.flink.runtime.state.DefaultOperatorStateBackend.getUnionListState(DefaultOperatorStateBackend.java:278)
    at org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.initializeState(FlinkKafkaConsumerBase.java:789)
    at org.apache.flink.streaming.util.functions.StreamingFunctionUtils.tryRestoreFunction(StreamingFunctionUtils.java:178)
    at org.apache.flink.streaming.util.functions.StreamingFunctionUtils.restoreFunctionState(StreamingFunctionUtils.java:160)
    at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.initializeState(AbstractUdfStreamOperator.java:96)
    at org.apache.flink.streaming.api.operators.AbstractStreamOperator.initializeState(AbstractStreamOperator.java:254)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.initializeState(StreamTask.java:738)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:289)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:711)
    at java.lang.Thread.run(Thread.java:745)
Caused by: java.lang.ClassNotFoundException: org.objenesis.strategy.InstantiatorStrategy
    at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    ... 16 more

Process finished with exit code 1
```

3. Solution for non-Kafka sources (Socket as an example)

With a Socket source, using a POJO triggers the error above. Changing it to a Scala case class fixes it!
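Here is a minimal sketch of the case-class approach. It assumes the Flink 1.6 Scala streaming API, which matches the jars above. The WordCount type, the job name, the host and the port are hypothetical.

Flink's Scala API analyzes a case class as a CaseClassTypeInfo and serializes it with its own serializer. A class that Flink cannot treat as a valid POJO instead falls back to GenericTypeInfo, which uses Kryo and therefore needs objenesis.

```scala
import org.apache.flink.streaming.api.scala._

// Hypothetical record type. As a case class it gets CaseClassTypeInfo,
// so Flink does not fall back to Kryo for it.
case class WordCount(word: String, count: Long)

object SocketCaseClassJob {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Placeholder host and port; start e.g. `nc -lk 9999` before running.
    val text: DataStream[String] = env.socketTextStream("localhost", 9999)

    val counts: DataStream[WordCount] = text
      .flatMap { line => line.toLowerCase.split("\\W+") }
      .filter(_.nonEmpty)
      .map(w => WordCount(w, 1L))
      .keyBy("word") // field expression on the case class
      .sum("count")

    counts.print()
    env.execute("Socket case class example")
  }
}
```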

Reference blog post: "Flink中使用POJO原因分析及解决方案" (Using POJOs in Flink: cause analysis and solution)

4. Solution for the Kafka source

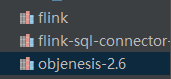

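With Kafka as the source, the case-class fix above no longer helps. The stack trace shows why:

- The failing serializer is created inside FlinkKafkaConsumerBase.initializeState, for the consumer's own union list state, not for any user type.
- That state's TupleTypeInfo contains a GenericTypeInfo field.
- GenericTypeInfo is serialized with Kryo, which needs objenesis.

After several other attempts failed, I used the simplest, bluntest fix: add the objenesis jar to the classpath! Note that the classpath above contains kryo-2.24.0.jar but no objenesis jar.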
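To confirm that the jar is actually visible to the JVM that launches the job, you can try loading the class named in the error. This is a minimal sketch; ObjenesisCheck and isOnClasspath are hypothetical names. It only gives a meaningful answer when run with the same classpath as the Flink job, e.g. as another main class in the same IDEA module.

```scala
object ObjenesisCheck {
  // Returns true if the class can be loaded by the current class loader.
  def isOnClasspath(className: String): Boolean =
    try {
      Class.forName(className)
      true
    } catch {
      case _: ClassNotFoundException | _: LinkageError => false
    }

  def main(args: Array[String]): Unit = {
    // The class reported missing in the NoClassDefFoundError above.
    val target = "org.objenesis.strategy.InstantiatorStrategy"
    println(s"$target on classpath: ${isOnClasspath(target)}")
  }
}
```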

Verified: this works!
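The jars above were copied by hand into D:\jar\flink, so a transitive dependency of Kryo was easy to miss. If the project is built with sbt instead, the same fix can be sketched as a build.sbt fragment. Declaring the Flink artifacts through a build tool normally pulls objenesis in transitively via Kryo, and the explicit line just makes it visible.

This sketch rests on several assumptions:

- The Flink version (1.6.0) and Scala binary version (2.11) are taken from the classpath above.
- The Scala patch version is only a placeholder.
- objenesis 2.1 is assumed to match kryo 2.24.0; check it against your Flink version.

```scala
// build.sbt (sketch; adjust versions to your setup)
scalaVersion := "2.11.12" // placeholder patch version of Scala 2.11

libraryDependencies ++= Seq(
  "org.apache.flink" %% "flink-streaming-scala"     % "1.6.0",
  "org.apache.flink" %% "flink-connector-kafka-0.9" % "1.6.0",
  // Usually arrives transitively through Kryo; listed explicitly here.
  // 2.1 is assumed to match kryo 2.24.0.
  "org.objenesis"    %  "objenesis"                 % "2.1"
)
```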
