
Flink Windows

Official Flink documentation: Flink official docs

Reference blog: Alienware^ (link to the blogger's page)

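Count Window

A count window slices the stream by number of elements: once a fixed number of elements has arrived, the window fires its computation and closes. It works like a bus with a limited number of seats that departs as soon as it is full, regardless of the time. The number of elements each window holds is the window size.

Count windows are simpler than time windows: we only specify the window size, and elements are assigned to windows accordingly. Flink has no dedicated class to represent a count window; under the hood it is implemented with a global window (Global Window).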
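To make the "depart when full" behaviour concrete, here is a minimal plain-Java sketch that does not use Flink at all. The class name `CountWindowSketch` and its `run` method are hypothetical names introduced only for illustration; the sketch merely imitates what a keyed count window with a sum does: count elements per key, emit the sum when the count reaches the window size, then start over.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java imitation of a keyed tumbling count window (hypothetical helper, no Flink dependency). */
final class CountWindowSketch {

    /** Sums values per key and emits "key=sum" each time a key has collected `size` elements. */
    static List<String> run(String[] keys, int[] values, int size) {
        Map<String, Integer> counts = new HashMap<>(); // elements in the open window, per key
        Map<String, Integer> sums = new HashMap<>();   // running sum of the open window, per key
        List<String> fired = new ArrayList<>();
        for (int i = 0; i < keys.length; i++) {
            String key = keys[i];
            int count = counts.merge(key, 1, Integer::sum);
            int sum = sums.merge(key, values[i], Integer::sum);
            if (count == size) {
                fired.add(key + "=" + sum);
                // Fire and purge: the next element of this key opens a fresh window.
                counts.remove(key);
                sums.remove(key);
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        // The first eleven records of the sample run in this section.
        String[] keys = {"ccc", "ccc", "aaa", "aaa", "bbb", "bbb", "aaa", "ccc", "ccc", "bbb", "ccc"};
        int[] values = {6, 4, 3, 7, 9, 2, 2, 3, 2, 7, 9};
        System.out.println(run(keys, values, 5));
    }
}
```

Only `ccc` reaches five elements in this input, so a single result for `ccc` is emitted, which matches the first `count>` line of the sample output in this section.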

package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;

import com.ali.flink.demo.utils.FlinkEnv;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;

import java.util.Random;

/**

* count window

* Group by key; the computation fires once a key has accumulated 5 elements (the window size)

*/

public class FlinkCountWindowDemo01 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();

env.setParallelism(1);

DataGeneratorSource<String> dataGeneratorSource = new DataGeneratorSource<>(new DataGeneratorImpl002());

DataStream<String> dataGeneratorStream = env.addSource(dataGeneratorSource).returns(String.class);

// dataGeneratorStream.print("source");

// Map each JSON record to (username, random value in [0, 10)); a count window needs no watermark

DataStream<Tuple2<String, Integer>> mapStream = dataGeneratorStream

.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String s) throws Exception {

JSONObject jsonObject = JSON.parseObject(s);

String username = jsonObject.getString("username");

return Tuple2.of(username, new Random().nextInt(10));

}

});

mapStream.print("map source");

DataStream<Tuple2<String, Integer>> outStream = mapStream

.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> tuple2) throws Exception {

return tuple2.f0;

}

})

.countWindow(5)

.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> tuple2, Tuple2<String, Integer> t1) throws Exception {

// Return a new tuple instead of mutating the input record

return Tuple2.of(tuple2.f0, tuple2.f1 + t1.f1);

}

});

outStream.print("count");

env.execute("count window test");

}

}

map source> (ccc,6)

map source> (ccc,4)

map source> (aaa,3)

map source> (aaa,7)

map source> (bbb,9)

map source> (bbb,2)

map source> (aaa,2)

map source> (ccc,3)

map source> (ccc,2)

map source> (bbb,7)

map source> (ccc,9)

count> (ccc,24)

map source> (bbb,7)

map source> (aaa,4)

map source> (bbb,8)

count> (bbb,33)

map source> (bbb,6)

map source> (aaa,0)

count> (aaa,16)

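Time Window

By how elements are assigned, windows fall into four types: tumbling windows (Tumbling Window), sliding windows (Sliding Windows), session windows (Session Windows) and global windows (Global Windows). The first three are typically defined on time; a global window has no time bounds.

Tumbling Window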

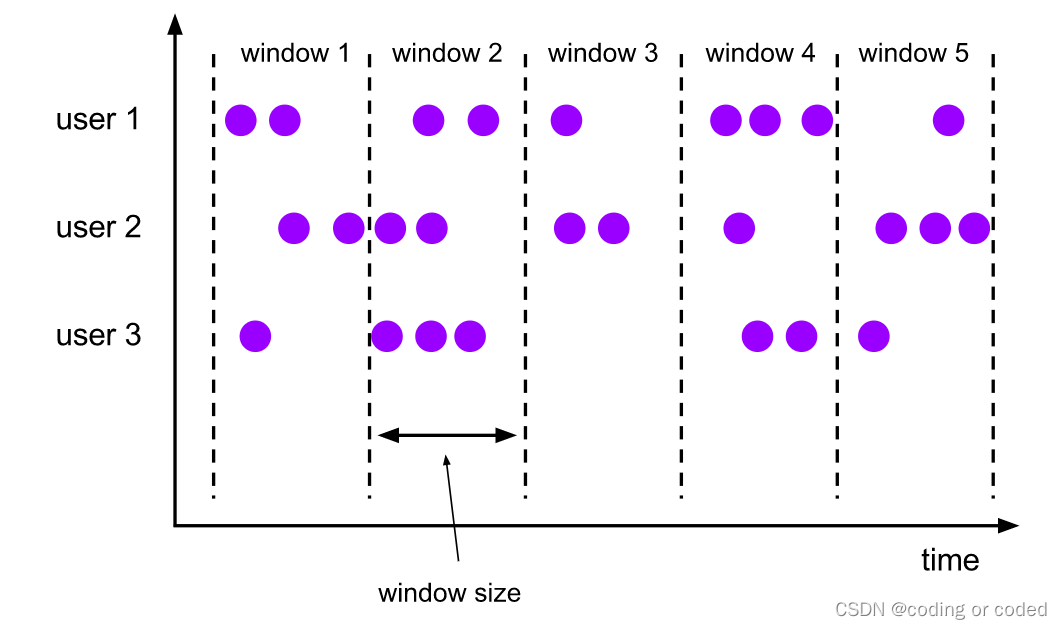

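A tumbling window has a fixed size and cuts the data into uniform slices. Windows neither overlap nor leave gaps; each one starts exactly where the previous one ends. If we view the creation of successive windows as the movement of a single window, it looks as if it keeps "tumbling" forward. This is the simplest form of window, and the examples so far have all been tumbling windows. Because tumbling windows join seamlessly, every element is assigned to a window, and to exactly one window.

A tumbling window can be defined on time or on element count; it takes a single parameter, the window size.

Tumbling windows are very widely used: they aggregate statistics per time period, and many BI metrics can be implemented with them.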
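Because the slices are uniform, the window an element belongs to can be computed directly from its timestamp. The following plain-Java sketch shows the arithmetic, assuming windows are aligned to the epoch with no offset; `TumblingWindowSketch` and its methods are hypothetical names for illustration and are not part of Flink.

```java
/** Plain-Java sketch of how a timestamp maps to its tumbling window (hypothetical helper). */
final class TumblingWindowSketch {

    /** Start (inclusive) of the window of length sizeMs that contains timestampMs. */
    static long windowStart(long timestampMs, long sizeMs) {
        // floorMod keeps the result correct for negative timestamps as well.
        return timestampMs - Math.floorMod(timestampMs, sizeMs);
    }

    /** End (exclusive) of that window. */
    static long windowEnd(long timestampMs, long sizeMs) {
        return windowStart(timestampMs, sizeMs) + sizeMs;
    }

    public static void main(String[] args) {
        long size = 20_000L; // 20-second windows
        long ts = 35_000L;   // an event 35 seconds past the minute
        System.out.println(windowStart(ts, size) + " .. " + windowEnd(ts, size));
    }
}
```

With 20-second windows, an event at second 35 lands in the window covering seconds 20 to 40, and an event exactly at second 40 already belongs to the next window because the end bound is exclusive.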

package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;

import com.ali.flink.demo.utils.FlinkEnv;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.time.Duration;

import java.util.Date;

public class FlinkTumblingWindowDemo01{

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();

env.setParallelism(1);

DataGeneratorSource<String> dataGeneratorSource = new DataGeneratorSource<>(new DataGeneratorImpl002());

DataStream<String> dataGeneratorStream = env.addSource(dataGeneratorSource).returns(String.class);

dataGeneratorStream.print("source");

// Assign timestamps and watermarks, tolerating up to 2 seconds of out-of-order data

DataStream<String> outStream = dataGeneratorStream

.assignTimestampsAndWatermarks(WatermarkStrategy.<String>forBoundedOutOfOrderness(Duration.ofSeconds(2))

.withTimestampAssigner(new SerializableTimestampAssigner<String>() {

@Override

public long extractTimestamp(String s, long l) {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

String eventtime = jsonObject.getString("eventtime");

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

try {

return simpleDateFormat.parse(eventtime).getTime();

} catch (ParseException e) {

// Fail fast instead of hitting a NullPointerException on an unparsed timestamp

throw new IllegalStateException("Unparseable eventtime: " + eventtime, e);

}

}

}))

// Key the stream by the username field (the equivalent of GROUP BY)

.keyBy(new KeySelector<String, String>() {

@Override

public String getKey(String s) throws Exception {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

return jsonObject.getString("username");

}

})

.window(TumblingEventTimeWindows.of(Time.seconds(20)))

// Use aggregate for incremental aggregation

.aggregate(new UrlClickCountAgg(), new UrlClickCountResutl());

outStream.print("count");

env.execute("tumble window test");

}

public static class UrlClickCountResutl extends ProcessWindowFunction<Long, String, String, TimeWindow>{

@Override

public void process(String s, Context context, Iterable<Long> iterable, Collector<String> out) throws Exception {

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String start = simpleDateFormat.format(context.window().getStart());

String end = simpleDateFormat.format(context.window().getEnd());

Long count = iterable.iterator().next();

out.collect(start + "--" + end + "--" + s + "--" + count);

}

}

public static class UrlClickCountAgg implements AggregateFunction<String, Long, Long>{

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(String value, Long acc) {

return acc + 1;

}

@Override

public Long getResult(Long acc) {

return acc;

}

@Override

public Long merge(Long acc, Long acc1) {

return acc + acc1;

}

}

}

source> {"username":"ccc","click_url":"url2","eventtime":"2022-07-05 17:56:35"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 17:56:43"}

count> 2022-07-05 17:56:20--2022-07-05 17:56:40--ccc--1

source> {"username":"bbb","click_url":"url1","eventtime":"2022-07-05 17:56:46"}

source> {"username":"ccc","click_url":"url2","eventtime":"2022-07-05 17:56:47"}

source> {"username":"ccc","click_url":"url1","eventtime":"2022-07-05 17:56:52"}

source> {"username":"aaa","click_url":"url2","eventtime":"2022-07-05 17:56:58"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 17:57:00"}

source> {"username":"aaa","click_url":"url1","eventtime":"2022-07-05 17:57:07"}

count> 2022-07-05 17:56:40--2022-07-05 17:57:00--bbb--2

count> 2022-07-05 17:56:40--2022-07-05 17:57:00--ccc--2

count> 2022-07-05 17:56:40--2022-07-05 17:57:00--aaa--1

Sliding Windows

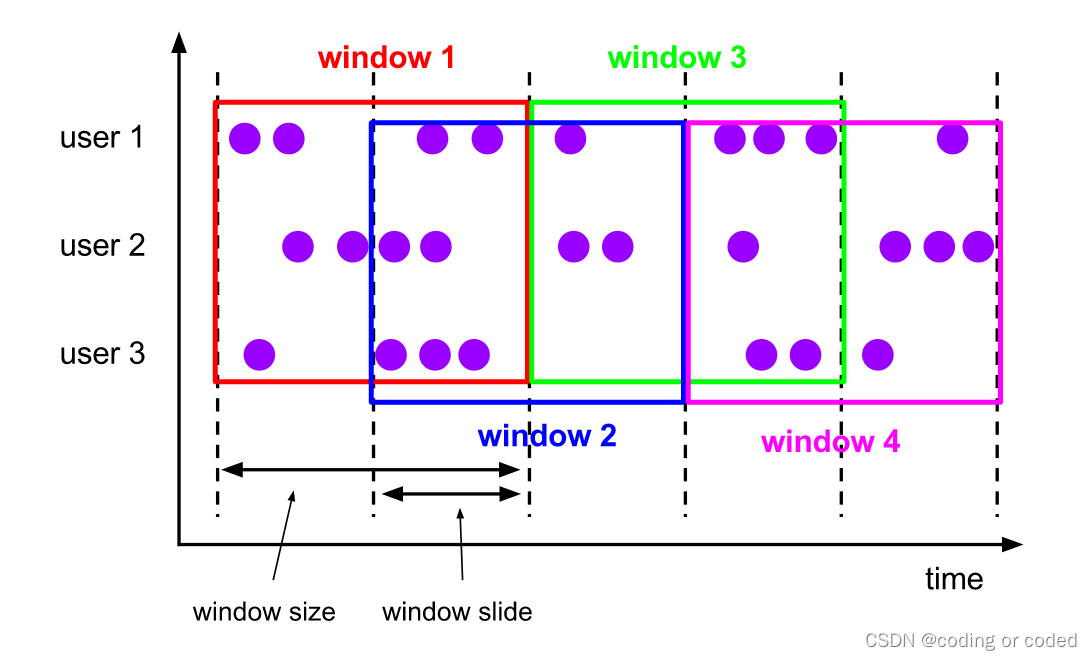

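Like a tumbling window, a sliding window has a fixed size. The difference is that consecutive windows are not laid end to end but can be offset from one another. Viewed as a single moving window, it slides forward in small steps. How far it moves at each step is configurable, so a sliding window takes two parameters: the window size and the window slide, which in effect determines how often results are computed. The slide is the interval between the start times of consecutive windows; since the size is fixed, it is also the interval between their end times. A window fires and emits its result at its end time, so the slide determines the computation frequency. When the slide is smaller than the size, windows overlap and one element belongs to several windows.

A sliding window is the more general form of a fixed-size window; in other words, a tumbling window can be seen as a special sliding window whose size equals its slide (size = slide).

Some scenarios need a metric over the most recent period while also demanding frequent, even real-time, output: for example the 24-hour change of a stock price, or anomaly alerts based on behaviour detected over a period of time. A sliding window is a natural fit for these cases.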
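The relationship between size, slide and window membership can be sketched in plain Java. The helper below lists every window that covers a given timestamp, assuming windows are aligned to the epoch with no offset; `SlidingWindowSketch` and `windowStarts` are hypothetical names for illustration, not Flink API.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of sliding-window assignment (hypothetical helper, no Flink dependency). */
final class SlidingWindowSketch {

    /** Start times of every window [start, start + sizeMs) that contains timestampMs, latest first. */
    static List<Long> windowStarts(long timestampMs, long sizeMs, long slideMs) {
        List<Long> starts = new ArrayList<>();
        // Latest window start that is not after the timestamp.
        long lastStart = timestampMs - Math.floorMod(timestampMs, slideMs);
        // Step back one slide at a time while the window still covers the timestamp.
        for (long start = lastStart; start > timestampMs - sizeMs; start -= slideMs) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        // Size 20 s, slide 10 s: an event at second 57 falls into two overlapping windows.
        System.out.println(windowStarts(57_000L, 20_000L, 10_000L));
        // Size equal to slide: the same event falls into exactly one window (the tumbling case).
        System.out.println(windowStarts(57_000L, 20_000L, 20_000L));
    }
}
```

With a 20-second size and a 10-second slide, the event at second 57 is covered by the windows starting at seconds 50 and 40. Setting the slide equal to the size leaves only the window starting at second 40, which is the tumbling case described above.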

package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;

import com.ali.flink.demo.utils.FlinkEnv;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.time.Duration;

import java.util.Date;

public class FlinkSlidingWindowDemo01 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();

env.setParallelism(1);

DataGeneratorSource<String> dataGeneratorSource = new DataGeneratorSource<>(new DataGeneratorImpl002());

DataStream<String> dataGeneratorStream = env.addSource(dataGeneratorSource).returns(String.class);

dataGeneratorStream.print("source");

// Assign timestamps and watermarks, tolerating up to 2 seconds of out-of-order data

DataStream<String> outStream = dataGeneratorStream

.assignTimestampsAndWatermarks(WatermarkStrategy.<String>forBoundedOutOfOrderness(Duration.ofSeconds(2))

.withTimestampAssigner(new SerializableTimestampAssigner<String>() {

@Override

public long extractTimestamp(String s, long l) {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

String eventtime = jsonObject.getString("eventtime");

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

try {

return simpleDateFormat.parse(eventtime).getTime();

} catch (ParseException e) {

// Fail fast instead of hitting a NullPointerException on an unparsed timestamp

throw new IllegalStateException("Unparseable eventtime: " + eventtime, e);

}

}

}))

// Key the stream by the username field (the equivalent of GROUP BY)

.keyBy(new KeySelector<String, String>() {

@Override

public String getKey(String s) throws Exception {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

return jsonObject.getString("username");

}

})

.window(SlidingEventTimeWindows.of(Time.seconds(20), Time.seconds(10)))

// Use aggregate for incremental aggregation

.aggregate(new UrlClickCountAgg(), new UrlClickCountResutl());

outStream.print("count");

env.execute("sliding window test");

}

public static class UrlClickCountResutl extends ProcessWindowFunction<Long, String, String, TimeWindow>{

@Override

public void process(String s, Context context, Iterable<Long> iterable, Collector<String> out) throws Exception {

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String start = simpleDateFormat.format(context.window().getStart());

String end = simpleDateFormat.format(context.window().getEnd());

Long count = iterable.iterator().next();

out.collect(start + "--" + end + "--" + s + "--" + count);

}

}

public static class UrlClickCountAgg implements AggregateFunction<String, Long, Long>{

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(String value, Long acc) {

return acc + 1;

}

@Override

public Long getResult(Long acc) {

return acc;

}

@Override

public Long merge(Long acc, Long acc1) {

return acc + acc1;

}

}

}

source> {"username":"bbb","click_url":"url1","eventtime":"2022-07-05 18:00:57"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 18:01:03"}

count> 2022-07-05 18:00:40--2022-07-05 18:01:00--bbb--1

source> {"username":"ccc","click_url":"url1","eventtime":"2022-07-05 18:01:07"}

source> {"username":"aaa","click_url":"url1","eventtime":"2022-07-05 18:01:08"}

source> {"username":"ccc","click_url":"url2","eventtime":"2022-07-05 18:01:09"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 18:01:18"}

count> 2022-07-05 18:00:50--2022-07-05 18:01:10--bbb--2

count> 2022-07-05 18:00:50--2022-07-05 18:01:10--ccc--2

count> 2022-07-05 18:00:50--2022-07-05 18:01:10--aaa--1

source> {"username":"aaa","click_url":"url1","eventtime":"2022-07-05 18:01:21"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 18:01:28"}

count> 2022-07-05 18:01:00--2022-07-05 18:01:20--aaa--1

count> 2022-07-05 18:01:00--2022-07-05 18:01:20--bbb--2

count> 2022-07-05 18:01:00--2022-07-05 18:01:20--ccc--2

Session Windows

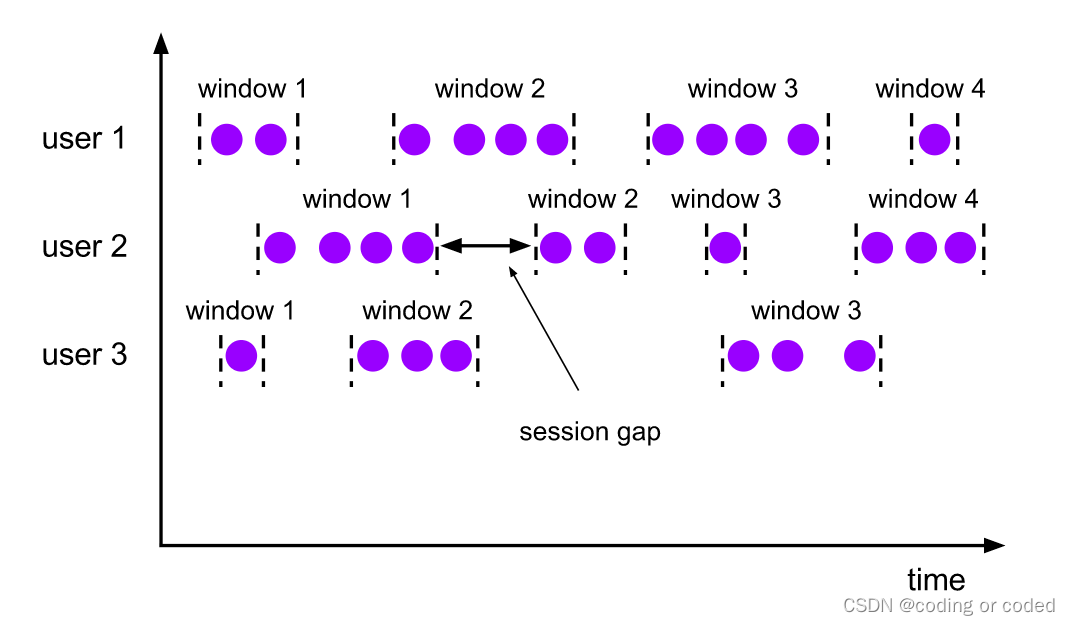

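As the name suggests, a session window groups data by "session". The notion is similar to a session in a web application, but it does not describe communication between two endpoints; it borrows the session-timeout mechanism to define a window. Put simply, the arrival of an element opens a session window, and as long as more elements keep arriving the session stays open; if no element arrives for a while, the session is considered timed out and the window closes automatically. It is like a phone call: as long as someone says something now and then, the conversation is not over; once it falls into an awkward silence with nothing said for a long while, it is time to hang up.

Unlike tumbling and sliding windows, a session window can only be defined on time; there is no such thing as a "session count window". This is easy to see: a session ends when no data arrives for some time. If we replaced time with a count, the condition would become "no data arrives for several elements", which is self-contradictory.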
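The timeout rule can be illustrated with a small plain-Java sketch that groups the timestamps of a single key into sessions. `SessionWindowSketch` and `sessions` are hypothetical names for illustration. The sketch also makes one assumption about the boundary case: an element arriving exactly one gap after the previous one is treated as part of the same session.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch of gap-based session grouping for one key (hypothetical helper). */
final class SessionWindowSketch {

    /** Returns one {start, end, count} triple per session, where end = last timestamp + gapMs. */
    static List<long[]> sessions(long[] timestampsMs, long gapMs) {
        long[] sorted = timestampsMs.clone();
        Arrays.sort(sorted);
        List<long[]> result = new ArrayList<>();
        for (long ts : sorted) {
            long[] last = result.isEmpty() ? null : result.get(result.size() - 1);
            if (last != null && ts <= last[1]) {
                // Arrived before the session timed out: extend it and count the element.
                last[1] = ts + gapMs;
                last[2]++;
            } else {
                // The previous session has timed out (or none exists): open a new one.
                result.add(new long[] {ts, ts + gapMs, 1});
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Events at seconds 44, 50 and 62 with a 7-second gap.
        for (long[] s : sessions(new long[] {44_000L, 50_000L, 62_000L}, 7_000L)) {
            System.out.println(s[0] + " .. " + s[1] + " count=" + s[2]);
        }
    }
}
```

With a 7-second gap, the events at seconds 44 and 50 form one session ending at second 57, and the event at second 62 starts a new session ending at second 69. This mirrors the `ccc` sessions in the sample output of this section.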

package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;

import com.ali.flink.demo.utils.FlinkEnv;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.streaming.api.windowing.assigners.EventTimeSessionWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.time.Duration;

import java.util.Date;

public class FlinkSessionWindowDemo01 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();

env.setParallelism(1);

DataGeneratorSource<String> dataGeneratorSource = new DataGeneratorSource<>(new DataGeneratorImpl002());

DataStream<String> dataGeneratorStream = env.addSource(dataGeneratorSource).returns(String.class);

dataGeneratorStream.print("source");

// Assign timestamps and watermarks, tolerating up to 2 seconds of out-of-order data

DataStream<String> outStream = dataGeneratorStream

.assignTimestampsAndWatermarks(WatermarkStrategy.<String>forBoundedOutOfOrderness(Duration.ofSeconds(2))

.withTimestampAssigner(new SerializableTimestampAssigner<String>() {

@Override

public long extractTimestamp(String s, long l) {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

String eventtime = jsonObject.getString("eventtime");

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

try {

return simpleDateFormat.parse(eventtime).getTime();

} catch (ParseException e) {

// Fail fast instead of hitting a NullPointerException on an unparsed timestamp

throw new IllegalStateException("Unparseable eventtime: " + eventtime, e);

}

}

}))

// Key the stream by the username field (the equivalent of GROUP BY)

.keyBy(new KeySelector<String, String>() {

@Override

public String getKey(String s) throws Exception {

JSONObject jsonObject = (JSONObject) JSON.parse(s);

return jsonObject.getString("username");

}

})

.window(EventTimeSessionWindows.withGap(Time.seconds(7)))

// Use aggregate for incremental aggregation

.aggregate(new UrlClickCountAgg(), new UrlClickCountResutl());

outStream.print("count");

env.execute("session window test");

}

public static class UrlClickCountResutl extends ProcessWindowFunction<Long, String, String, TimeWindow>{

@Override

public void process(String s, Context context, Iterable<Long> iterable, Collector<String> out) throws Exception {

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String start = simpleDateFormat.format(context.window().getStart());

String end = simpleDateFormat.format(context.window().getEnd());

Long count = iterable.iterator().next();

out.collect(start + "--" + end + "--" + s + "--" + count);

}

}

public static class UrlClickCountAgg implements AggregateFunction<String, Long, Long>{

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(String value, Long acc) {

return acc + 1;

}

@Override

public Long getResult(Long acc) {

return acc;

}

@Override

public Long merge(Long acc, Long acc1) {

return acc + acc1;

}

}

}

source> {"username":"ccc","click_url":"url2","eventtime":"2022-07-05 18:06:44"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 18:06:46"}

source> {"username":"ccc","click_url":"url1","eventtime":"2022-07-05 18:06:50"}

source> {"username":"bbb","click_url":"url2","eventtime":"2022-07-05 18:06:53"}

source> {"username":"ccc","click_url":"url1","eventtime":"2022-07-05 18:07:02"}

count> 2022-07-05 18:06:44--2022-07-05 18:06:57--ccc--2

count> 2022-07-05 18:06:46--2022-07-05 18:07:00--bbb--2

source> {"username":"bbb","click_url":"url1","eventtime":"2022-07-05 18:07:04"}

source> {"username":"ccc","click_url":"url2","eventtime":"2022-07-05 18:07:13"}

count> 2022-07-05 18:07:02--2022-07-05 18:07:09--ccc--1

count> 2022-07-05 18:07:04--2022-07-05 18:07:11--bbb--1

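Global Windows

There is one more, fairly general kind of window: the global window. It is globally valid and assigns all elements with the same key to a single window; put plainly, it is as if the stream were not windowed at all. Since an unbounded stream never ends, this window never closes either, and by default it never fires a computation. To process the data, you must define a custom trigger (Trigger).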
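One detail is worth sketching here: a trigger that only fires leaves the window contents in place, whereas a trigger that fires and purges clears them. To the best of my knowledge, `countWindow(n)` wraps its count trigger in a purging trigger, while a bare `CountTrigger.of(n)` on `GlobalWindows` only fires, so the two produce different results from the second firing onward. The plain-Java sketch below imitates both behaviours for a single key; `GlobalWindowTriggerSketch` and `fire` are hypothetical names for illustration, not Flink API.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of a count trigger on a never-ending window (hypothetical helper). */
final class GlobalWindowTriggerSketch {

    /**
     * Feeds values into one window and fires every `every` elements.
     * With purge == false the accumulated sum survives a firing; with purge == true it is reset.
     */
    static List<Integer> fire(int[] values, int every, boolean purge) {
        List<Integer> fired = new ArrayList<>();
        int sum = 0;   // reduce state held by the window
        int count = 0; // trigger state: elements seen since the last firing
        for (int v : values) {
            sum += v;
            count++;
            if (count == every) {
                fired.add(sum);
                count = 0;   // the trigger counter restarts either way
                if (purge) {
                    sum = 0; // only a purging trigger clears the window contents
                }
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        int[] ones = {1, 1, 1, 1, 1, 1, 1, 1, 1, 1};
        System.out.println(fire(ones, 5, false)); // fire only: results keep accumulating
        System.out.println(fire(ones, 5, true));  // fire and purge: each result covers 5 elements
    }
}
```

For ten elements of value 1 and a trigger count of 5, the fire-only variant emits 5 and then 10, while the purging variant emits 5 twice. If per-batch results are wanted on a global window, wrapping the trigger with `PurgingTrigger.of(...)` is one option.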

package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;

import com.ali.flink.demo.utils.FlinkEnv;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;

import org.apache.flink.streaming.api.windowing.assigners.GlobalWindows;

import org.apache.flink.streaming.api.windowing.triggers.CountTrigger;

import java.util.Random;

/**

* global window with a count trigger

* Computation on a global window; simple operations such as max, min, apply and process can be used as well

*/

public class FlinkGlobalWindowByKeyDemo01 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();

env.setParallelism(1);

DataGeneratorSource<String> dataGeneratorSource = new DataGeneratorSource<>(new DataGeneratorImpl002());

DataStream<String> dataGeneratorStream = env.addSource(dataGeneratorSource).returns(String.class);

// dataGeneratorStream.print("source");

// Map each JSON record to (username, random value in [0, 10)); no watermark is needed here

DataStream<Tuple2<String, Integer>> mapStream = dataGeneratorStream

.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String s) throws Exception {

JSONObject jsonObject = JSON.parseObject(s);

String username = jsonObject.getString("username");

return Tuple2.of(username, new Random().nextInt(10));

}

});

mapStream.print("map source");

DataStream<Tuple2<String, Integer>> outStream = mapStream

.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> tuple2) throws Exception {

return tuple2.f0;

}

})

.window(GlobalWindows.create())

.trigger(CountTrigger.of(5))

.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> tuple2, Tuple2<String, Integer> t1) throws Exception {

return Tuple2.of(tuple2.f0, tuple2.f1 + t1.f1);

}

});

outStream.print("count");

env.execute("global window test");

}

}

map source> (ccc,3)

map source> (ccc,1)

map source> (bbb,8)

map source> (ccc,4)

map source> (aaa,6)

map source> (bbb,1)

map source> (bbb,0)

map source> (aaa,4)

map source> (ccc,0)

map source> (aaa,1)

map source> (ccc,4)

map source> (ccc,9)

count> (ccc,12)

map source> (ccc,7)

map source> (aaa,7)

map source> (aaa,8)

map source> (aaa,6)

map source> (bbb,5)

count> (aaa,26)

map source> (bbb,4)

count> (bbb,18)

Source Code

package com.ali.flink.demo.utils;

import org.apache.commons.math3.random.RandomDataGenerator;

import org.apache.flink.api.common.functions.RuntimeContext;

import org.apache.flink.runtime.state.FunctionInitializationContext;

import org.apache.flink.streaming.api.functions.source.datagen.DataGenerator;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.Random;

public class DataGeneratorImpl002 implements DataGenerator<String> {

RandomDataGenerator generator;

@Override

public void open(String s, FunctionInitializationContext functionInitializationContext, RuntimeContext runtimeContext) throws Exception {

generator = new RandomDataGenerator();

}

@Override

public boolean hasNext() {

return true;

}

@Override

public String next() {

Random random = new Random();

int sleep_cnt = random.nextInt(10);

try {

Thread.sleep(1000 * sleep_cnt);

} catch (InterruptedException e) {

e.printStackTrace();

}

String[] names = new String[]{"aaa","bbb","ccc"};

String[] urls = new String[]{"url1","url2"};

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String format = simpleDateFormat.format(new Date());

return "{\"username\":\""+ names[random.nextInt(3)] +"\",\"click_url\":\"" + urls[random.nextInt(2)] +"\",\"eventtime\":\"" + format +"\"}";

}

}
