Lab 2 Summary

cmu15445第二个项目是关于在内存中实现buffer pool的,通过实现三个部分来加深对缓冲池的实现机制的理解,分别是LRU算法实现frame管理,单个buffer pool的管理,并行多个buffer pool的管理。

实验地址:https://15445.courses.cs.cmu.edu/fall2021/project1/#buffer-pool-instance

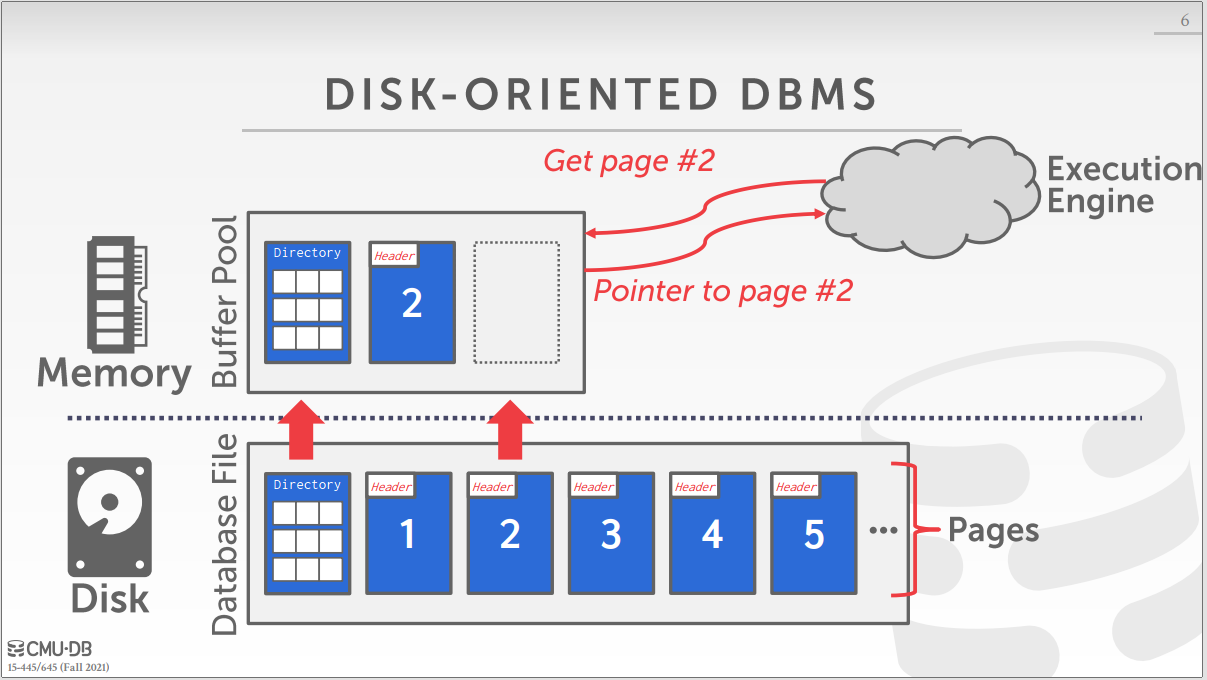

首先谈一谈对整个page在磁盘和内存中的进出过程,理解到这些各部分过后,后续的实验就很好理解了;

1、首先就是说DBMS不是直接去disk里拿到存储页儿,这个点儿但凡开始看这个课应该都可以理解;

2、当执行某条SQL语句的时候,执行引擎先是去内存中的buffer pool里获取该页儿,这个时候会发生两种情况:

- 当buffer pool内存在该页儿的时候,首先我们知道对页儿操作有两种,读和写,

但是写仍然是对于内存中buffer内的frame的修改,因此当多个线程操作的时候,有可能该缓存内的页儿没有写到disk内,因此这个时候该该页儿可能是dirty page,所以这个时候就检测,如果该页儿时脏页儿,就先写回disk,然后再进行该页儿的操作,具体的操作由上层决定,返回指向该page的指针; - 当buffer pool内不存在该页儿的时候,这个时候,buffer pool manager会从disk manager内从disk读取需要的page,然后缓存到buffer pool内,缓存进来过后,返回指向该page的指针给执行引擎;

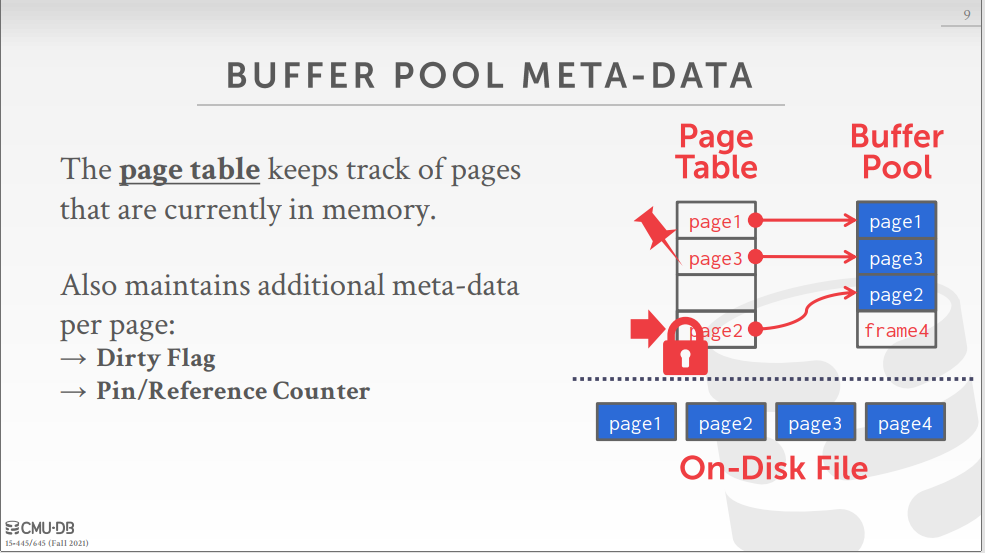

3、如何管理好buffer pool内存中页儿的换进换出,通过策略性的控制,实现线程安全的线程控制:

- buffer pool:内存中的frame数组

- page table:map[page_id] = frame_id,page和frame的哈希表

- replacer:控制页儿的置换与否

- pin/reference counter:正在使用该page的thread,pin_count为0的才会写回disk

当操作某个页儿的时候,不管是添加还是删除,还是从磁盘读取都要对该page进行pin操作,也就是说当前的页再被线程操作,不可以置换,而且这样的操作是原子的,因此得需要进行上锁。

LRU Replacement Policy

个人理解

LRU置换算法,把使用频率最低的置换出去,体现在数据结构上就是队列的队尾给去掉,使用某个页儿时加入到队列的对头,当然再加入的时候得检测队列的大小是否超过容量;LRUReplacer管理的是frame_id也就是确定buffer pool内的page是否可以被置换,当我们把某些页儿给LRUReplacer时,通过lru策略管理他什么时候置换出去,而lru通过自身的算法优势来确定要victim哪些页儿;

- Victim:从buffer pool中置换出某个frame,victim要与buffer pool manager配合使用来置换页儿;

- Pin:page有一个pin_count的数据结构来表示是否被线程使用,因此当某个page被pin的时候,表示正在被线程使用不可以被置换,因此对应到LRU就是这个page对应的frame不应该在可以被置换的队列内;

- Unpin:该页儿可以被置换出去,因此将该page对应的frame加入可被置换的队列内,如果本身在的话就不用管了;

LRU这个部分总结起来就是,这个部分是一种策略性的东西,是buffer pool manager的一个步骤,自身无法操作page那些的。

实现细节

TODO 1 data structure

private:

// TODO(student): implement me!

std::mutex lru_latch_;

size_t num_pages_;

std::list<frame_id_t> lru_list_;

std::unordered_map<frame_id_t, std::list<frame_id_t>::iterator> lru_map_{};

TODO 2 constructor and destructor

LRUReplacer::LRUReplacer(size_t num_pages) {

std::lock_guard<std::mutex> lock_guard(lru_latch_);

num_pages_ = num_pages;

}

LRUReplacer::~LRUReplacer() {

// std::lock_guard<std::mutex> lock_guard(lru_latch_);

// 我人傻了,搞了2天,好蠢~~~

lru_list_.clear();

lru_map_.clear();

};

TODO 3 Victim

bool LRUReplacer::Victim(frame_id_t *frame_id) {

std::lock_guard<std::mutex> lock_guard(lru_latch_);

if (lru_list_.empty()) {

return false;

}

*frame_id = lru_list_.back();

lru_map_.erase(*frame_id);

lru_list_.pop_back();

return true;

}

TODO 4 Pin

void LRUReplacer::Pin(frame_id_t frame_id) {

std::lock_guard<std::mutex> lock_guard(lru_latch_);

auto item = lru_map_.find(frame_id);

if (item == lru_map_.end()) {

return;

}

lru_list_.erase(item->second);

lru_map_.erase(item);

}

TODO 5 Unpin

void LRUReplacer::Unpin(frame_id_t frame_id) {

std::lock_guard<std::mutex> lock_guard(lru_latch_);

if (lru_list_.size() >= num_pages_) {

return;

}

if (lru_map_.count(frame_id) != 0) {

return;

}

lru_list_.push_front(frame_id);

lru_map_[frame_id] = lru_list_.begin();

}

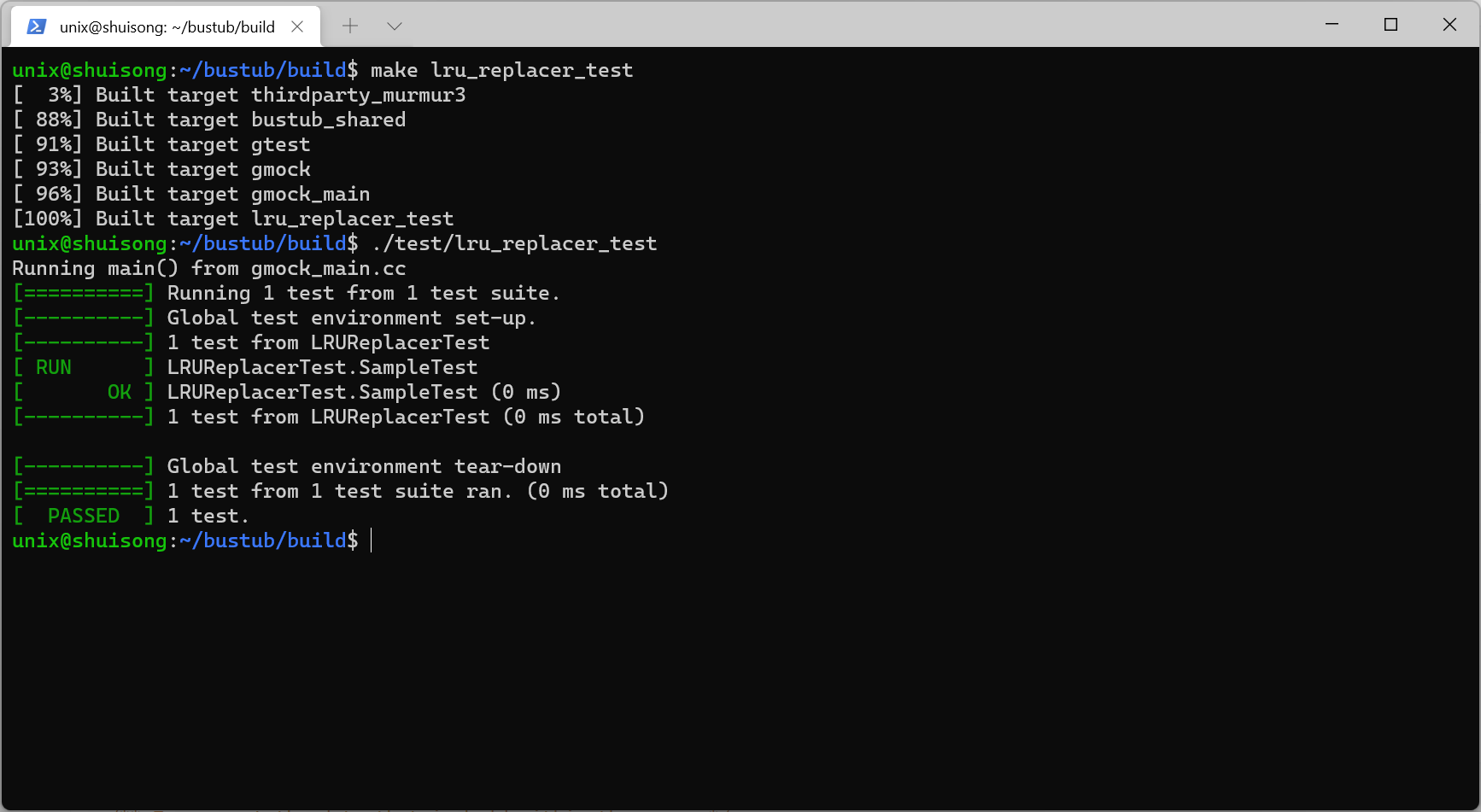

Test

make lru_replacer_test

./test/lru_replacer_test

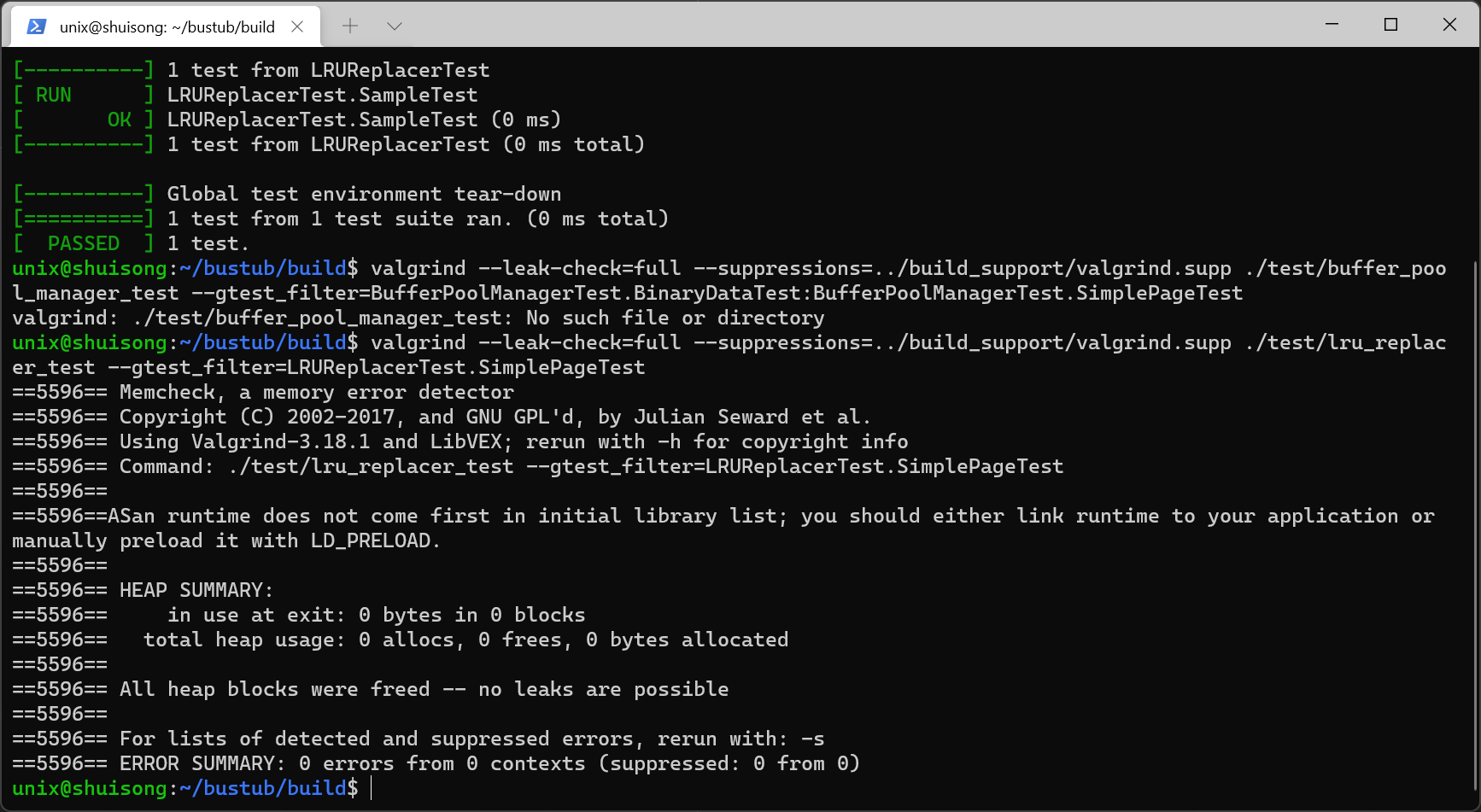

valgrind --leak-check=full --suppressions=../build_support/valgrind.supp ./test/lru_replacer_test --gtest_filter=LRUReplacerTest.SimplePageTest

Buffer Pool Manager Instance

个人理解

Buffer Pool Manager主要实现几个功能,向disk写新的page,从disk里fetch page,刷新page等,这个部分千万千万反复看视频和PPT,当对page进行内容操作的时候会对该page进行pin,跟PPT上所说的一样。

实现细节

Notice

page.h

private:

/** Zeroes out the data that is held within the page. */

inline void ResetMemory() { memset(data_, OFFSET_PAGE_START, PAGE_SIZE); }

/** The actual data that is stored within a page. */

char data_[PAGE_SIZE]{};

/** The ID of this page. */

page_id_t page_id_ = INVALID_PAGE_ID;

/** The pin count of this page. */

int pin_count_ = 0;

/** True if the page is dirty, i.e. it is different from its corresponding page on disk. */

bool is_dirty_ = false;

/** Page latch. */

ReaderWriterLatch rwlatch_;

buffer_pool_manager_instance.h

/** Number of pages in the buffer pool. */

const size_t pool_size_;

/** How many instances are in the parallel BPM (if present, otherwise just 1 BPI) */

const uint32_t num_instances_ = 1;

/** Index of this BPI in the parallel BPM (if present, otherwise just 0) */

const uint32_t instance_index_ = 0;

/** Each BPI maintains its own counter for page_ids to hand out, must ensure they mod back to its instance_index_ */

std::atomic<page_id_t> next_page_id_ = instance_index_;

/** Array of buffer pool pages. */

Page *pages_;

/** Pointer to the disk manager. */

DiskManager *disk_manager_ __attribute__((__unused__));

/** Pointer to the log manager. */

LogManager *log_manager_ __attribute__((__unused__));

/** Page table for keeping track of buffer pool pages. */

std::unordered_map<page_id_t, frame_id_t> page_table_;

/** Replacer to find unpinned pages for replacement. */

Replacer *replacer_;

/** List of free pages. */

std::list<frame_id_t> free_list_;

/** This latch protects shared data structures. We recommend updating this comment to describe what it protects. */

std::mutex latch_;

disk_manager.h

/**

* Write a page to the database file.

* @param page_id id of the page

* @param page_data raw page data

*/

void WritePage(page_id_t page_id, const char *page_data);

/**

* Read a page from the database file.

* @param page_id id of the page

* @param[out] page_data output buffer

*/

void ReadPage(page_id_t page_id, char *page_data);

TODO 1 FlushPgImp

bool BufferPoolManagerInstance::FlushPgImp(page_id_t page_id) {

// Make sure you call DiskManager::WritePage!

std::lock_guard<std::mutex> lock_guard(latch_);

if (page_id == INVALID_PAGE_ID) {

return false;

}

auto item = page_table_.find(page_id);

if (item == page_table_.end()) {

return false;

}

frame_id_t frame_id = item->second;

Page *page = &pages_[frame_id];

disk_manager_->WritePage(page->GetPageId(), page->GetData());

page->is_dirty_ = false;

return true;

}

TODO 2 FlushAllPgsImp

void BufferPoolManagerInstance::FlushAllPgsImp() {

// You can do it!

std::lock_guard<std::mutex> lock_guard(latch_);

for (auto &item : page_table_) {

frame_id_t frame_id = item.second;

Page *page = &pages_[frame_id];

disk_manager_->WritePage(page->GetPageId(), page->GetData());

page->is_dirty_ = false;

}

}

TODO 3 NewPgImp

Page *BufferPoolManagerInstance::NewPgImp(page_id_t *page_id) {

// 0. Make sure you call AllocatePage!

// 1. If all the pages in the buffer pool are pinned, return nullptr.

// 2. Pick a victim page P from either the free list or the replacer. Always pick from the free list first.

// 3. Update P's metadata, zero out memory and add P to the page table.

// 4. Set the page ID output parameter. Return a pointer to P.

std::lock_guard<std::mutex> lock_guard(latch_);

frame_id_t frame_id;

Page *page = nullptr;

if (!free_list_.empty()) {

frame_id = free_list_.front();

free_list_.pop_front();

page = &pages_[frame_id];

} else if (replacer_->Victim(&frame_id)) {

page = &pages_[frame_id];

if (page->IsDirty()) {

disk_manager_->WritePage(page->GetPageId(), page->GetData());

}

page_table_.erase(page->GetPageId());

} else {

return nullptr;

}

page_id_t new_page_id = AllocatePage();

page->page_id_ = new_page_id;

page->is_dirty_ = false;

page->pin_count_ = 1;

page->ResetMemory();

page_table_[page->GetPageId()] = frame_id;

*page_id = page->GetPageId();

replacer_->Pin(frame_id);

return page;

}

TODO 4 FetchPgImp

Page *BufferPoolManagerInstance::FetchPgImp(page_id_t page_id) {

// 1. Search the page table for the requested page (P).

// 1.1 If P exists, pin it and return it immediately.

// 1.2 If P does not exist, find a replacement page (R) from either the free list or the replacer.

// Note that pages are always found from the free list first.

// 2. If R is dirty, write it back to the disk.

// 3. Delete R from the page table and insert P.

// 4. Update P's metadata, read in the page content from disk, and then return a pointer to P.

std::lock_guard<std::mutex> lock_guard(latch_);

frame_id_t frame_id;

Page *page = nullptr;

auto item = page_table_.find(page_id);

if (item != page_table_.end()) {

frame_id = item->second;

page = &pages_[frame_id];

page->pin_count_++;

replacer_->Pin(frame_id);

return page;

}

if (!free_list_.empty()) {

frame_id = free_list_.front();

free_list_.pop_front();

page = &pages_[frame_id];

} else if (replacer_->Victim(&frame_id)) {

page = &pages_[frame_id];

if (page->IsDirty()) {

disk_manager_->WritePage(page->GetPageId(), page->GetData());

}

page_table_.erase(page->GetPageId());

} else {

return nullptr;

}

page->page_id_ = page_id;

page->pin_count_ = 1;

page->is_dirty_ = false;

disk_manager_->ReadPage(page->GetPageId(), page->GetData());

page_table_[page->GetPageId()] = frame_id;

replacer_->Pin(frame_id);

return page;

}

TODO 5 DeletePgImp

bool BufferPoolManagerInstance::DeletePgImp(page_id_t page_id) {

// 0. Make sure you call DeallocatePage!

// 1. Search the page table for the requested page (P).

// 1. If P does not exist, return true.

// 2. If P exists, but has a non-zero pin-count, return false. Someone is using the page.

// 3. Otherwise, P can be deleted. Remove P from the page table, reset its metadata and return it to the free list.

std::lock_guard<std::mutex> lock_guard(latch_);

DeallocatePage(page_id);

auto item = page_table_.find(page_id);

if (item == page_table_.end()) {

return true;

}

frame_id_t frame_id = item->second;

Page *page = &pages_[frame_id];

if (page->GetPinCount() != 0) {

return false;

}

if (page->IsDirty()) {

disk_manager_->WritePage(page->GetPageId(), page->GetData());

}

replacer_->Pin(frame_id);

page_table_.erase(page->GetPageId());

page->pin_count_ = 0;

page->is_dirty_ = false;

page->ResetMemory();

page->page_id_ = INVALID_PAGE_ID;

free_list_.push_back(frame_id);

return true;

}

TODO 6 UnpinPgImp

bool BufferPoolManagerInstance::UnpinPgImp(page_id_t page_id, bool is_dirty) {

std::lock_guard<std::mutex> lock_guard(latch_);

auto item = page_table_.find(page_id);

if (item == page_table_.end()) {

return false;

}

frame_id_t frame_id = item->second;

Page *page = &pages_[frame_id];

if (page->GetPinCount() <= 0) {

return false;

}

page->pin_count_--;

if (is_dirty) {

page->is_dirty_ = true;

}

if (page->GetPinCount() <= 0) {

replacer_->Unpin(frame_id);

}

return true;

}

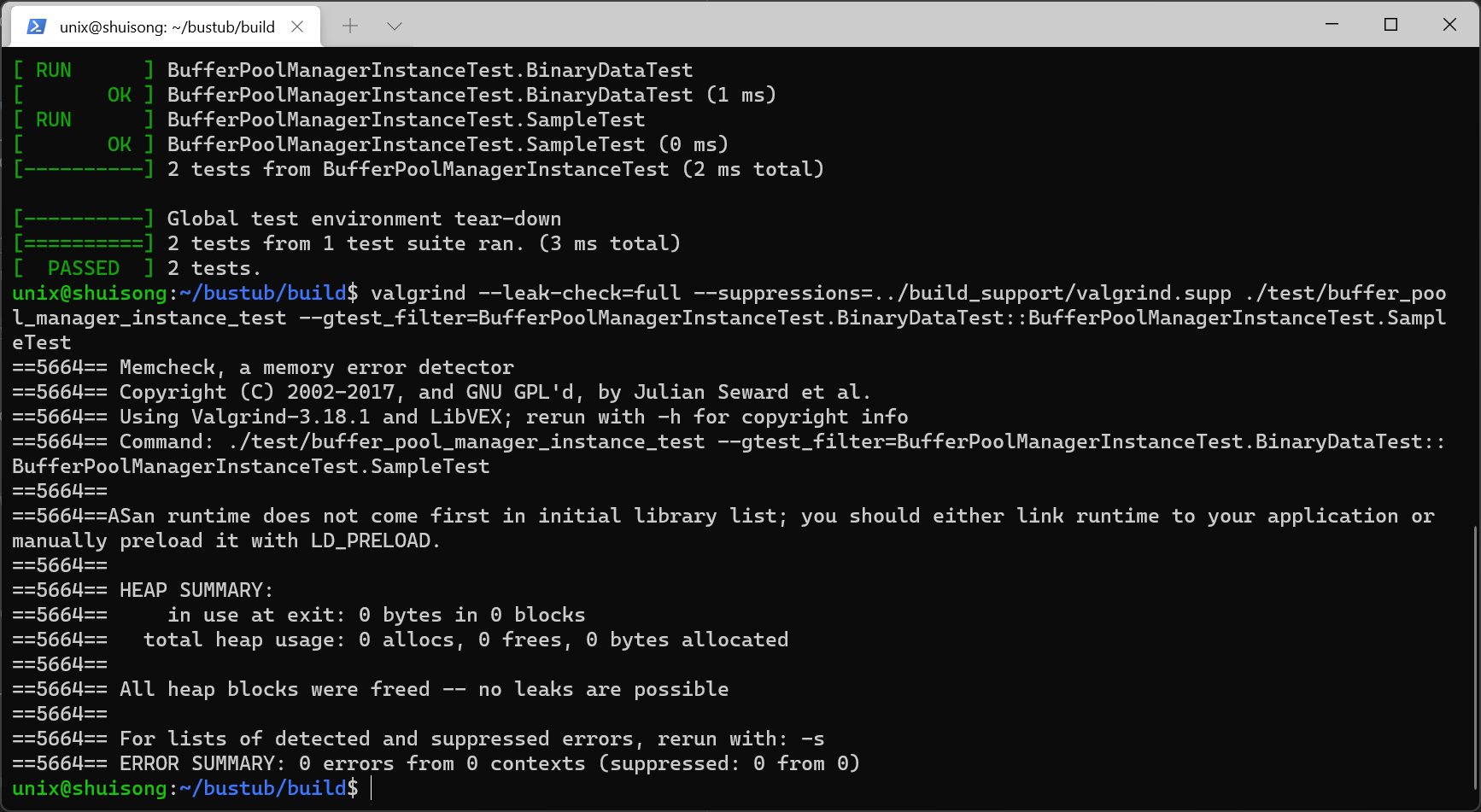

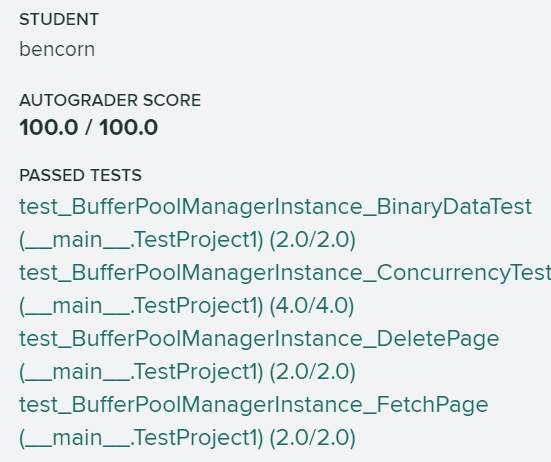

Test

make buffer_pool_manager_instance_test

./test/buffer_pool_manager_instance_test

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-ITbGpFMT-1663687677342)(https://cdn.jsdelivr.net/gh/shatanyumi/imgs@master/imgs/2022/02/28/20220228170639.png)]

valgrind --leak-check=full --suppressions=../build_support/valgrind.supp ./test/buffer_pool_manager_instance_test --gtest_filter=BufferPoolManagerInstanceTest.BinaryDataTest:BufferPoolManagerInstanceTest.SampleTest

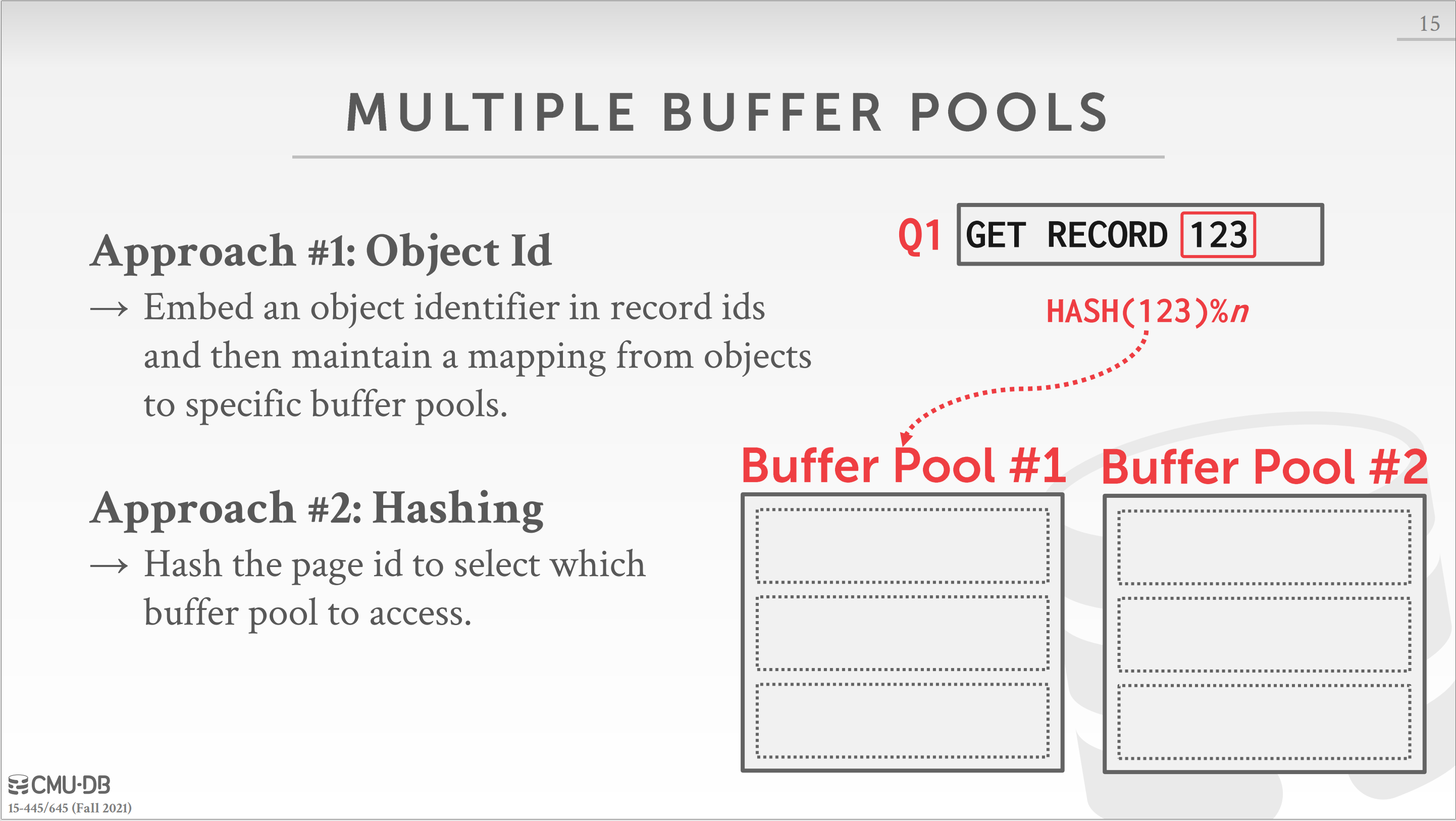

Parallel Buffer Pool Manager

个人理解

并行buffer pool manager,这个部分其实是想让我们实现一个并行的数据库优化,也就是四个数据库优化中的第一个,multiple buffer pools,估计后边儿的实验老师会围绕这几个优化分别进行项目的制作,太牛b啊,这个部分的实现是采用了第二个Hash的方式来实现对每一个page的分配,也就是说当我们拿到一个page的时候,根据page_id来确定该page保存在那个buffer pool,注意:

page_id_t BufferPoolManagerInstance::AllocatePage() {

const page_id_t next_page_id = next_page_id_;

next_page_id_ += num_instances_;

ValidatePageId(next_page_id);

return next_page_id;

}

void BufferPoolManagerInstance::ValidatePageId(const page_id_t page_id) const {

assert(page_id % num_instances_ == instance_index_); // allocated pages mod back to this BPI

}

通过观察上述的代码跟踪,可以分析得出,每次对page_id的分配就是对其进行hash取模来判断是存在哪个pool;然后为了达到并行,我们直接每个函数内操作该buffer pool instance内地数据就好了,其他的就是分配页儿的策略了,看如何达到比较好的性能,哈哈哈我不会,我就每次newpage的时候去每个instance挨个找,性能可能会极差。

实现细节

TODO 1 Structure

private:

std::mutex parallel_buffer_pool_latch_;

size_t num_instance_;

size_t pool_size_;

size_t start_index_;

std::vector<BufferPoolManagerInstance *> buffer_pool_manager_;

TODO 2 Constructor

ParallelBufferPoolManager::ParallelBufferPoolManager(size_t num_instances, size_t pool_size, DiskManager *disk_manager,

LogManager *log_manager) {

// Allocate and create individual BufferPoolManagerInstances

std::lock_guard<std::mutex> lock_guard(parallel_buffer_pool_latch_);

num_instance_ = num_instances;

pool_size_ = pool_size;

start_index_ = 0;

for (size_t i = 0; i < num_instance_; ++i) {

buffer_pool_manager_.push_back(

new BufferPoolManagerInstance(pool_size_, num_instance_, i, disk_manager, log_manager));

}

}

TODO 3 Destructor

// Update constructor to destruct all BufferPoolManagerInstances and deallocate any associated memory

ParallelBufferPoolManager::~ParallelBufferPoolManager() {

for (size_t i = 0; i < num_instance_; ++i) {

delete buffer_pool_manager_[i];

}

}

TODO 4 GetPoolSize

size_t ParallelBufferPoolManager::GetPoolSize() {

// Get size of all BufferPoolManagerInstances

return pool_size_ * num_instances_;

}

TODO 5 GetBufferPoolManager

BufferPoolManager *ParallelBufferPoolManager::GetBufferPoolManager(page_id_t page_id) {

// Get BufferPoolManager responsible for handling given page id. You can use this method in your other methods.

return buffer_pool_manager_[page_id % num_instance_];

}

TODO 6 FetchPgImp

Page *ParallelBufferPoolManager::FetchPgImp(page_id_t page_id) {

// Fetch page for page_id from responsible BufferPoolManagerInstance

return GetBufferPoolManager(page_id)->FetchPage(page_id);

}

TODO 7 UnpinPgImp

bool ParallelBufferPoolManager::UnpinPgImp(page_id_t page_id, bool is_dirty) {

// Unpin page_id from responsible BufferPoolManagerInstance

return GetBufferPoolManager(page_id)->UnpinPage(page_id, is_dirty);

}

TODO 8 FlushPgImp

bool ParallelBufferPoolManager::FlushPgImp(page_id_t page_id) {

// Flush page_id from responsible BufferPoolManagerInstance

return GetBufferPoolManager(page_id)->FlushPage(page_id);

}

TODO 9 NewPgImp

Page *ParallelBufferPoolManager::NewPgImp(page_id_t *page_id) {

// create new page. We will request page allocation in a round robin manner from the underlying

// BufferPoolManagerInstances

// 1. From a starting index of the BPMIs, call NewPageImpl until either 1) success and return 2) looped around to

// starting index and return nullptr

// 2. Bump the starting index (mod number of instances) to start search at a different BPMI each time this function

// is called

std::lock_guard<std::mutex> lock_guard(parallel_buffer_pool_latch_);

for (size_t i = start_index_; i < start_index_ + num_instance_; ++i) {

BufferPoolManager *manager = buffer_pool_manager_[i % num_instance_];

Page *page = manager->NewPage(page_id);

if (page != nullptr) {

start_index_ = (i + 1) % num_instance_;

return page;

}

}

return nullptr;

}

TODO 10 DeletePgImp

bool ParallelBufferPoolManager::DeletePgImp(page_id_t page_id) {

// Delete page_id from responsible BufferPoolManagerInstance

return GetBufferPoolManager(page_id)->DeletePage(page_id);

}

TODO 11 FlushAllPgsImp

void ParallelBufferPoolManager::FlushAllPgsImp() {

// flush all pages from all BufferPoolManagerInstances

for (size_t i = 0; i < num_instance_; ++i) {

buffer_pool_manager_[i]->FlushAllPages();

}

}

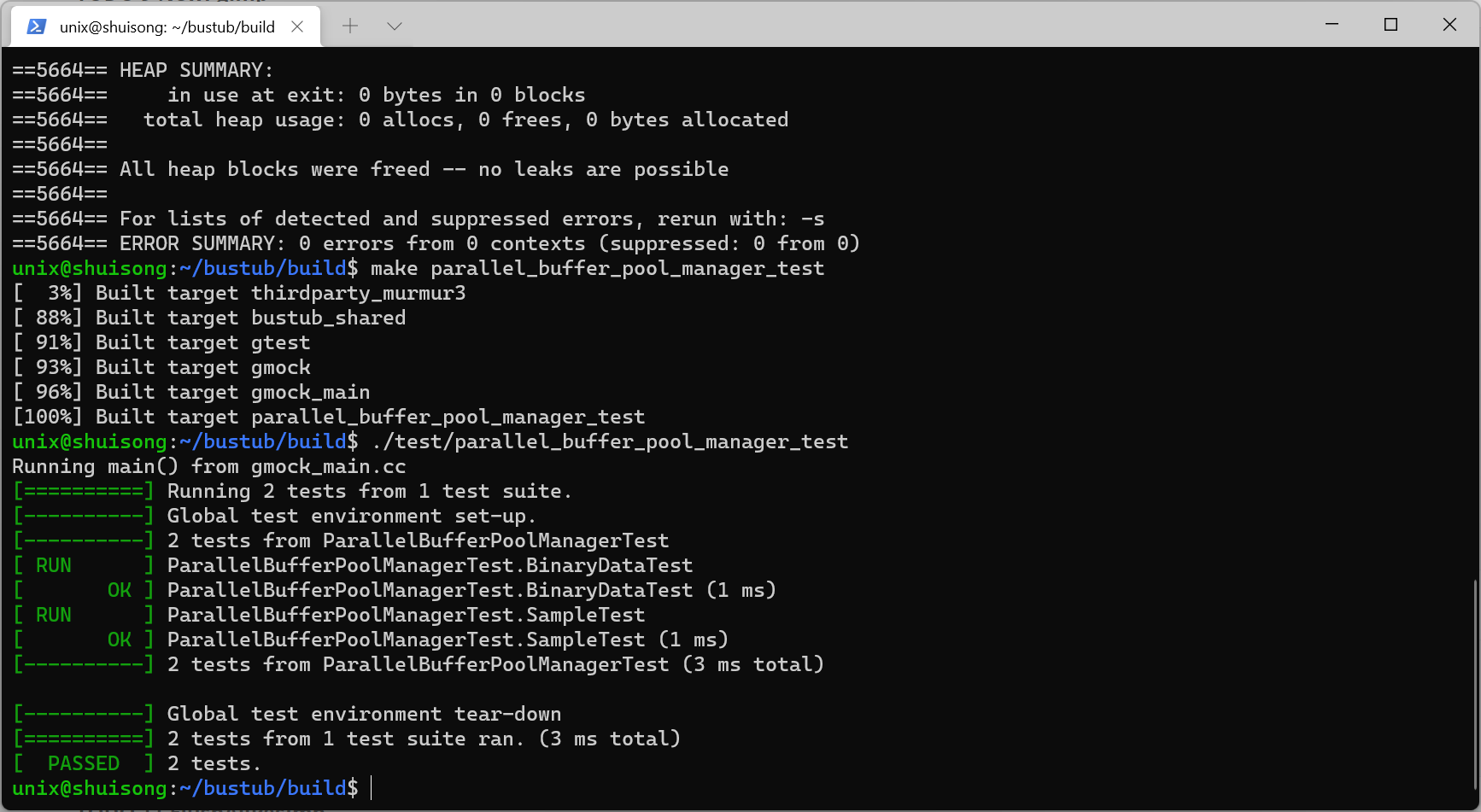

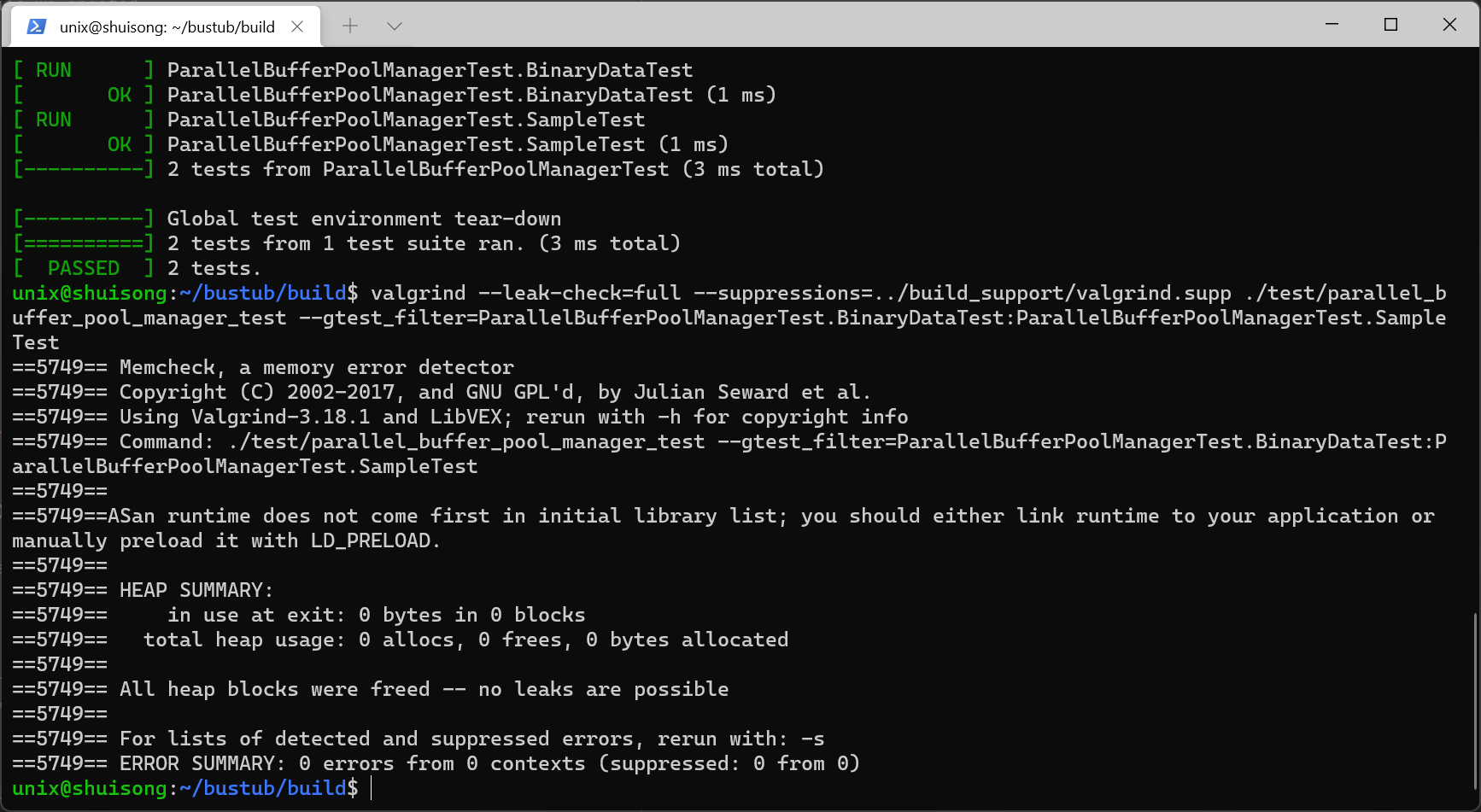

Test

make parallel_buffer_pool_manager_test

./test/parallel_buffer_pool_manager_test

valgrind --leak-check=full --suppressions=../build_support/valgrind.supp ./test/parallel_buffer_pool_manager_test --gtest_filter=ParallelBufferPoolManagerTest.BinaryDataTest:ParallelBufferPoolManagerTest.Sample

Test

Gradescope

make format

make check-lint

make check-clang-tidy

zip project1-submission.zip \

src/include/buffer/lru_replacer.h \

src/buffer/lru_replacer.cpp \

src/include/buffer/buffer_pool_manager_instance.h \

src/buffer/buffer_pool_manager_instance.cpp \

src/include/buffer/parallel_buffer_pool_manager.h \

src/buffer/parallel_buffer_pool_manager.cpp

总结

仿佛打开新世界的大门啊,嗯怎么说,总结几点需要注意的吧:

- LRU的析构函数(其他的也是)还是手动释放一下内存比较好,但是不要愚蠢

- 并行buffer pool的页分配,参照代码注释采用轮询的时候,可以考虑分配的更加均匀,反正我是上一次分配buffer pool的下一个来,挨着来找;可能一开始会比较均匀,但是最终由于读取那些page每个被访问的概率不一样,其实这也是假想的负载均衡,反正我象不太清楚,能大概的做一个global policy就好了,达到目的

- 然后就是反复看视频和PPT,加深理解

行,基本Over,继续学习,太菜了~

194

194

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?