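I recently needed to run the 30min (30 arc-minute) version of the PCR-GLOBWB model. It needs a lot of input files and the total download is large. The PCR-GLOBWB GitHub page links to a site that serves the files individually. There is also a Zenodo link, but it is a single 45 GB file, which really wasn't workable for me. So I wrote a batch-download script instead of clicking on every file one by one.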

1. Import packages and set the base URL

from selenium import webdriver
import os
import subprocess
import time

raw_html = 'https://opendap.4tu.nl/thredds/catalog/data2/pcrglobwb/version_2019_11_beta/pcrglobwb2_input/global_30min/catalog.html'

I only want to run the 30min model, so I only download what is under this folder.
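The scripts below turn catalog links into direct-download links by swapping `catalog` for `fileServer` in the URL. They rely on the `fileServer` URL having the same path as the catalog URL.

Here is a minimal sketch of that mapping. It assumes the links follow the `/thredds/catalog/...` pattern of the base URL above, and `catalog_to_fileserver` is a hypothetical helper name. It only swaps the path segment right after `/thredds/`. A plain `str.replace('catalog', 'fileServer')` would also rewrite any file or folder name that happens to contain `catalog`.

```python
from urllib.parse import urlsplit, urlunsplit


def catalog_to_fileserver(url: str) -> str:
    """Map a THREDDS catalog URL to the matching fileServer (direct download) URL.

    Only the '/thredds/catalog/' path segment is swapped, once, so names that
    contain 'catalog' elsewhere in the path are left alone.
    """
    parts = urlsplit(url)
    path = parts.path.replace('/thredds/catalog/', '/thredds/fileServer/', 1)
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

URLs that do not contain `/thredds/catalog/` are returned unchanged, so the helper is safe to call on every collected link.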

2. Configure the download settings

chrome_options = webdriver.ChromeOptions()
prefs = {
    'profile.default_content_settings.popups': 0,  # suppress the save-file popup
    'download.default_directory': r'E:\PCR_download\download',  # default download folder
    'profile.default_content_setting_values.automatic_downloads': 1,  # allow multiple automatic downloads
}
chrome_options.add_experimental_option('prefs', prefs)

#Change window.navigator.webdriver to get past bot detection (sites' checks for Selenium-driven sessions)
chrome_options.add_experimental_option('excludeSwitches', ['enable-automation'])

dr = webdriver.Chrome(options=chrome_options)  # Selenium 4 takes options=, not chrome_options=

Not much to say about this part. Just change the download path to your own.
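When you set this up on another machine, it can help to build the prefs in one place and create the download folder first, in case it does not exist yet.

Here is a small sketch that assumes the same three Chrome prefs as above. `build_download_prefs` is a hypothetical helper, not part of Selenium.

```python
import os


def build_download_prefs(download_dir: str) -> dict:
    """Return the Chrome prefs from step 2, after creating the download folder."""
    download_dir = os.path.abspath(download_dir)
    os.makedirs(download_dir, exist_ok=True)  # create the folder if it is missing
    return {
        'profile.default_content_settings.popups': 0,  # suppress the save-file popup
        'download.default_directory': download_dir,  # where Chrome saves files
        'profile.default_content_setting_values.automatic_downloads': 1,  # allow many downloads
    }
```

You would use it in two calls:

- `chrome_options.add_experimental_option('prefs', build_download_prefs(r'E:\PCR_download\download'))`
- `dr = webdriver.Chrome(options=chrome_options)`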

3. Collect all PCR-related links on the page

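dr.get(raw_html)
#Selenium 4 removed find_elements_by_xpath; find_elements('xpath', ...) replaces it
these_html_zip = dr.find_elements('xpath', '//*[@href]')
these_htmls = []
for link in these_html_zip:
    this_link_text = link.get_attribute('href')
    if 'version_2019' in this_link_text:
        these_htmls.append(this_link_text)

these_htmls2 = []
for i_html in these_htmls[:]:
    this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
    # str.replace returns a new string, so the result has to be assigned;
    # catalog pages (.html) stay as they are so step 4 can open them
    if '.html' not in this_file_path:
        this_file_path = this_file_path.replace('catalog', 'fileServer')
    these_htmls2.append(this_file_path)

these_htmls3 = these_htmls2[1:]  # skip the first matching link

4. Get the links on levels 2, 3, 4 and 5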

I didn't write a loop; I just copied the same block once per level, so it's a bit long, haha.
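The copied rounds below all do the same thing. Each opens every catalog page (a link containing `.html`), keeps the file links, and queues the sub-catalogs for the next round. That is a breadth-first traversal, so the rounds can be folded into one loop.

Here is a sketch under that assumption. `crawl_catalog` and the `get_links` callback are hypothetical names. `get_links` is passed in so that the traversal can be tested without a browser.

```python
from collections import deque
from typing import Callable, Iterable, List


def crawl_catalog(start_urls: Iterable[str],
                  get_links: Callable[[str], List[str]],
                  max_depth: int = 10) -> List[str]:
    """Breadth-first walk of a catalog tree; returns the file (non-.html) links.

    Links containing '.html' are treated as catalog pages and expanded with
    get_links(url); every other link is treated as a downloadable file.
    """
    files: List[str] = []
    seen = set()
    queue = deque((url, 0) for url in start_urls)
    while queue:
        url, depth = queue.popleft()
        if url in seen:
            continue  # each link is handled once, so duplicates are dropped
        seen.add(url)
        if '.html' not in url:
            files.append(url)  # leaf: a file link
        elif depth < max_depth:
            for child in get_links(url):  # expand one catalog page
                queue.append((child, depth + 1))
    return files
```

With Selenium, `get_links(url)` would wrap the body of one round:

1. Call `dr.get(url)`.
2. Collect the `href`s that contain `version_2019`.
3. Swap `catalog` for `fileServer` on the non-`.html` links.
4. Drop the first entry, as the rounds do.

`max_depth` is only a safety limit on how deep the walk goes.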

#%%Get the links of the first level
these_htmls6 = []
for i_th3 in these_htmls3:
    this_3_html = i_th3
    dr.get(this_3_html)
    these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
    these_htmls = []
    for link in these_html_zip:
        this_link_text = link.get_attribute('href')
        if 'version_2019' in this_link_text:
            these_htmls.append(this_link_text)
    these_htmls4 = []
    for i_html in these_htmls:
        this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
        if '.html' not in this_file_path:
            # a file link: swap the catalog URL for the direct-download fileServer URL
            this_file_path = this_file_path.replace('catalog', 'fileServer')
        these_htmls4.append(this_file_path)
    these_htmls5 = these_htmls4[1:]
    these_htmls6 = these_htmls5 + these_htmls6

#%%One more round
these_htmls7 = []
for i_th6 in these_htmls6:
    if '.html' not in i_th6:
        these_htmls7.append(i_th6)  # already a file link, keep it
        continue
    else:
        dr.get(i_th6)
        these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
        these_htmls = []
        for link in these_html_zip:
            this_link_text = link.get_attribute('href')
            if 'version_2019' in this_link_text:
                these_htmls.append(this_link_text)
        these_htmls4 = []
        for i_html in these_htmls:
            this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
            if '.html' not in this_file_path:
                this_file_path = this_file_path.replace('catalog', 'fileServer')
            these_htmls4.append(this_file_path)
        these_htmls5 = these_htmls4[1:]
        these_htmls7 = these_htmls5 + these_htmls7

#%%One more round (iterate over these_htmls7, the previous round's output)
these_htmls8 = []
for i_th6 in these_htmls7:
    if '.html' not in i_th6:
        these_htmls8.append(i_th6)  # already a file link, keep it
        continue
    else:
        dr.get(i_th6)
        these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
        these_htmls = []
        for link in these_html_zip:
            this_link_text = link.get_attribute('href')
            if 'version_2019' in this_link_text:
                these_htmls.append(this_link_text)
        these_htmls4 = []
        for i_html in these_htmls:
            this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
            if '.html' not in this_file_path:
                this_file_path = this_file_path.replace('catalog', 'fileServer')
            these_htmls4.append(this_file_path)
        these_htmls5 = these_htmls4[1:]
        these_htmls8 = these_htmls5 + these_htmls8

#%%One more round
these_htmls9 = []
for i_th6 in these_htmls8:
    if '.html' not in i_th6:
        these_htmls9.append(i_th6)  # already a file link, keep it
        continue
    else:
        dr.get(i_th6)
        these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
        these_htmls = []
        for link in these_html_zip:
            this_link_text = link.get_attribute('href')
            if 'version_2019' in this_link_text:
                these_htmls.append(this_link_text)
        these_htmls4 = []
        for i_html in these_htmls:
            this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
            if '.html' not in this_file_path:
                this_file_path = this_file_path.replace('catalog', 'fileServer')
            these_htmls4.append(this_file_path)
        these_htmls5 = these_htmls4[1:]
        these_htmls9 = these_htmls5 + these_htmls9

#%%One more round
these_htmls10 = []
for i_th6 in these_htmls9:
    if '.html' not in i_th6:
        these_htmls10.append(i_th6)  # already a file link, keep it
        continue
    else:
        dr.get(i_th6)
        these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
        these_htmls = []
        for link in these_html_zip:
            this_link_text = link.get_attribute('href')
            if 'version_2019' in this_link_text:
                these_htmls.append(this_link_text)
        these_htmls4 = []
        for i_html in these_htmls:
            this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
            if '.html' not in this_file_path:
                this_file_path = this_file_path.replace('catalog', 'fileServer')
            these_htmls4.append(this_file_path)
        these_htmls5 = these_htmls4[1:]
        these_htmls10 = these_htmls5 + these_htmls10

#%%One more round
these_htmls11 = []
for i_th6 in these_htmls10:
    if '.html' not in i_th6:
        these_htmls11.append(i_th6)  # already a file link, keep it
        continue
    else:
        dr.get(i_th6)
        these_html_zip = dr.find_elements('xpath', '//*[@href]')  # Selenium 4 replacement for find_elements_by_xpath
        these_htmls = []
        for link in these_html_zip:
            this_link_text = link.get_attribute('href')
            if 'version_2019' in this_link_text:
                these_htmls.append(this_link_text)
        these_htmls4 = []
        for i_html in these_htmls:
            this_file_path = i_html.split('version_2019_11_beta')[0] + 'version_2019_11_beta' + i_html.split('version_2019_11_beta')[-1]
            if '.html' not in this_file_path:
                this_file_path = this_file_path.replace('catalog', 'fileServer')
            these_htmls4.append(this_file_path)
        these_htmls5 = these_htmls4[1:]
        these_htmls11 = these_htmls5 + these_htmls11

5. Download the data

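import pandas as pd

for i_th11 in these_htmls11:
    dr.get(i_th11)  # opening a fileServer link makes Chrome download the file
    time.sleep(5)   # give each download time to start

#Save every download link so the files can be sorted into folders in step 6
html_pd = pd.DataFrame(these_htmls11)
html_pd.to_csv(r'E:\PCR_download\download\html_pd.csv', index=False)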

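After the steps above, `these_htmls11` holds the links to all the .nc, .map and .txt files. Step 5 downloads all of them into a single folder.

It also saves every download link to html_pd.csv.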

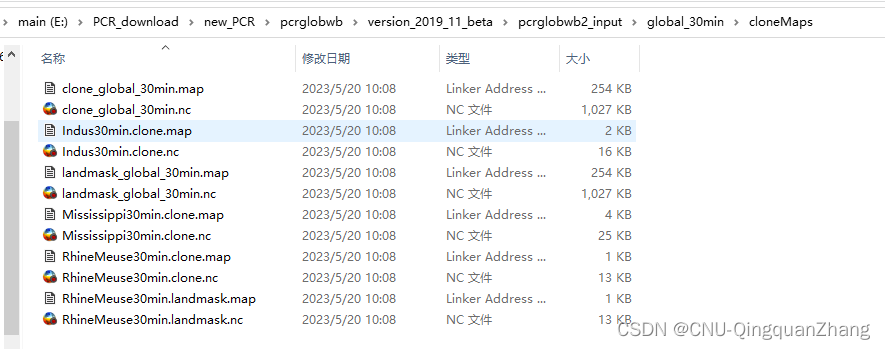
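Because the browser drops every file into one folder, step 6 has to work out afterwards where each file belongs. An alternative is to download each link straight into a folder that mirrors the server path. This assumes the `fileServer` links can be fetched with a plain HTTP GET, with no login or cookies.

Here is a minimal sketch using only the standard library. `local_path_for` and `download_all` are hypothetical helpers. The prefix is the same one step 6 strips from the links.

```python
import os
import time
import urllib.request
from urllib.parse import unquote, urlsplit

FILESERVER_PREFIX = '/thredds/fileServer/data2/'  # the prefix step 6 replaces with new_PCR


def local_path_for(url: str, root: str, prefix: str = FILESERVER_PREFIX) -> str:
    """Map a fileServer URL to a path under root that mirrors the server's folders."""
    path = unquote(urlsplit(url).path)  # decode %20 etc. in the URL path
    if path.startswith(prefix):
        path = path[len(prefix):]
    return os.path.join(root, *path.strip('/').split('/'))


def download_all(urls, root: str, pause: float = 1.0) -> None:
    """Download every URL into its mirrored folder, skipping files already on disk."""
    for url in urls:
        target = local_path_for(url, root)
        if os.path.exists(target):
            continue  # finished in an earlier run
        os.makedirs(os.path.dirname(target), exist_ok=True)
        tmp = target + '.part'
        urllib.request.urlretrieve(url, tmp)  # plain HTTP GET, no browser involved
        os.replace(tmp, target)  # only complete downloads get the final name
        time.sleep(pause)  # short pause between requests
```

For example, `download_all(pd.read_csv(r'E:\PCR_download\download\html_pd.csv').iloc[:, 0], r'E:\PCR_download\new_PCR')` would rebuild the folder layout that step 6 produces, without Chrome's `(1)`, `(2)` renaming.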

6. Sort the downloaded files into their folders using the saved download links

import os
import shutil

import pandas as pd

#html_pd.csv from step 5 (it was written to E:\PCR_download\download; point this at wherever it is now)
file_htmls = pd.read_csv(r'E:\PCR_download\PCR\html_pd.csv')
#Turn each download link into a local target path under new_PCR that mirrors the server's folders
file_paths = [os.path.normpath(file_htmls.iloc[i, 0].replace('https://opendap.4tu.nl/thredds/fileServer/data2/', 'E:\\PCR_download\\new_PCR\\'))
              for i in range(file_htmls.shape[0])]

def filter_filename(one_path):
    '''Strip the " (n)" suffix Chrome adds to repeated file names'''
    if '(' in one_path:
        one_str = one_path.split(' (')[0]
        two_str = one_path.split(')')[-1]
        filter_path = one_str + two_str
    else:
        filter_path = one_path
    return filter_path

def find_html(one_file_name, file_paths):
    '''Find all target paths whose file name equals that of one_file_name'''
    result = []
    two_file_name = os.path.basename(one_file_name)
    for i_fp in file_paths:
        if os.path.basename(i_fp) == two_file_name:  # exact match, not substring
            result.append(i_fp)
    return result

def get_num(one_file_name):
    '''Get the copy number n from "name (n).ext" (0 if there is none)'''
    if '(' not in one_file_name:
        return 0
    this_num = int(one_file_name.split('(')[-1].split(')')[0])
    return this_num

old_dir = r'E:\PCR_download\PCR'
for i_ol in os.listdir(old_dir):
    if 'html_pd.csv' in i_ol:
        continue
    this_file_path = os.path.join(old_dir, i_ol)
    this_file_name = filter_filename(this_file_path)
    new_paths = find_html(this_file_name, file_paths)
    file_num = get_num(this_file_path)  # assumes the n-th copy came from the n-th matching link
    this_new_file_path = new_paths[file_num]
    this_new_dir = os.path.dirname(this_new_file_path)
    os.makedirs(this_new_dir, exist_ok=True)
    # copy (use shutil.move to move the file instead)
    shutil.copy(this_file_path, this_new_file_path)
    print('######', this_file_path, '\n', this_new_file_path, '\n', 'Over')
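`filter_filename` and `get_num` rely on Chrome saving repeated names as `name (1).ext`, `name (2).ext`, and so on. However, splitting on `(` also catches names that contain parentheses for other reasons, and `get_num` then fails on `int()`.

Here is a slightly stricter sketch. It uses a regular expression that only accepts a trailing ` (n)` made of digits. `split_duplicate_suffix` is a hypothetical helper name.

```python
import re

# Chrome saves repeats of "name.ext" as "name (1).ext", "name (2).ext", ...
_DUP = re.compile(r'^(?P<stem>.*?) \((?P<num>\d+)\)(?P<ext>\.[^.]*)?$')


def split_duplicate_suffix(filename: str):
    """Return (original_name, copy_number); copy_number is 0 without a suffix."""
    m = _DUP.match(filename)
    if m is None:
        return filename, 0
    return m.group('stem') + (m.group('ext') or ''), int(m.group('num'))
```

In the loop above, `this_file_name, file_num = split_duplicate_suffix(i_ol)` would replace the two calls. The lookup still assumes that the n-th copy of a name came from the n-th matching link.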

7. Done

All done!!
