Notes on some of PyTorch's odd methods and functions

Dataset&DataLoader

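from torch.utils.data import Dataset, DataLoader

class MyDataSet(Dataset):
    def __init__(self, save_path):
        # load or index the data stored under save_path here
        self.save_path = save_path

    def __len__(self):
        # number of samples (placeholder)
        return 0

    def __getitem__(self, idx):
        # the sample at position idx (placeholder)
        return 0

def collate_fn(data):
    # data is a list of __getitem__() results; combine them into one batch (placeholder)
    return 0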

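collate_fn() receives the results of __getitem__() (a list with one entry per sample) and usually decides how those samples are assembled into a batch.

Note that collate_fn() is not a method of Dataset; it is a standalone function that replaces the default collate_fn of DataLoader.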
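As a small illustration of what a collate_fn can do, the sketch below pads variable-length samples into one batch. ToySequenceDataset and pad_collate are hypothetical names invented for this example, and padding is only one possible way to combine samples.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class ToySequenceDataset(Dataset):
    """Hypothetical dataset: each item is a 1-D tensor of a different length."""

    def __init__(self):
        self.samples = [torch.arange(n) for n in (2, 3, 5, 1)]

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, idx):
        return self.samples[idx]


def pad_collate(batch):
    # batch is a list of whatever __getitem__ returned, one entry per sample
    lengths = torch.tensor([len(seq) for seq in batch])
    # pad every sequence with zeros up to the longest one in this batch
    padded = torch.nn.utils.rnn.pad_sequence(batch, batch_first=True, padding_value=0)
    return padded, lengths


loader = DataLoader(ToySequenceDataset(), batch_size=2, collate_fn=pad_collate)
batches = list(loader)
for padded, lengths in batches:
    print(padded.shape, lengths.tolist())
```

Each batch is padded only to the length of its own longest sample, which is the kind of decision the default collate function cannot make for you.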

# load into the DataLoader
loader = DataLoader(mydataset, batch_size=10, collate_fn=collate_fn)

# then iterate like this
for epoch in range(3):
    for step, batch_x in enumerate(loader):
        # training
        print("step:{}, batch_x:{}".format(step, batch_x))

Converting a torch tensor to pd.DataFrame

x = pd.DataFrame(out.detach().cpu().numpy())

torch initialization

F.linear(x, weight, bias): dimensions at a glance

import torch

import torch.nn.functional as F

x = torch.rand(100,50)

weight = torch.rand(32,50)

bias = torch.rand(100,32) # broadcast-compatible with the 100*32 output; the usual bias shape is (32,)

output1 = F.linear(x, weight) # 100*32

output2 = F.linear(x, weight,bias) # 100*32
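To make the shapes concrete, here is a minimal check that F.linear(x, weight, bias) computes x @ weight.T + bias. It assumes the conventional 1-D bias of shape (out_features,) rather than the 2-D bias used above.

```python
import torch
import torch.nn.functional as F

x = torch.rand(100, 50)      # (batch, in_features)
weight = torch.rand(32, 50)  # (out_features, in_features)
bias = torch.rand(32)        # (out_features,)

out = F.linear(x, weight, bias)
manual = x @ weight.t() + bias  # the same computation written out by hand

print(out.shape)
same = torch.allclose(out, manual, atol=1e-4)
print(same)
```

Note that weight is stored as (out_features, in_features), which is why it is transposed in the manual version.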

Class demo

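import math

import torch
import torch.nn.functional as F
from torch.nn import Parameter
from torch_geometric.nn import MessagePassing

class HierarchyConv(MessagePassing):
    def __init__(self, args):
        super().__init__()
        # ... other code ...
        # out_dim, in_dim and num_samples are placeholders for the target dimension,
        # the input dimension and the initial number of neurons (i.e. the sample count)
        self.weight = Parameter(torch.Tensor(out_dim, in_dim))
        self.bias = Parameter(torch.Tensor(num_samples, 1))
        self.reset_parameters()

    def reset_parameters(self):
        # same initialization scheme as torch.nn.Linear
        torch.nn.init.kaiming_uniform_(self.weight, a=math.sqrt(5))
        fan_in, _ = torch.nn.init._calculate_fan_in_and_fan_out(self.weight)
        bound = 1 / math.sqrt(fan_in)
        torch.nn.init.uniform_(self.bias, -bound, bound)

    def forward(self, x):  # further arguments are elided in the original
        # ... other code ...
        return F.linear(x, self.weight, self.bias)

torch normalization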

a = torch.rand(2,3)

print(a)

b = torch.nn.functional.normalize(a)

print(b) # with the defaults (p=2, dim=1) each row is scaled to unit L2 norm

torch loss

loss = torch.nn.CrossEntropyLoss()(self.embedding,self.label)

## The classic: cross-entropy with label smoothing

class LabelSmoothingCrossEntropy(torch.nn.Module):
    def __init__(self, eps=0.1, reduction='mean', ignore_index=-100):
        super(LabelSmoothingCrossEntropy, self).__init__()
        self.eps = eps
        self.reduction = reduction
        self.ignore_index = ignore_index

    def forward(self, output, target):
        c = output.size()[-1]  # number of classes
        log_pred = torch.log_softmax(output, dim=-1)
        if self.reduction == 'sum':
            loss = -log_pred.sum()
        else:
            loss = -log_pred.sum(dim=-1)
            if self.reduction == 'mean':
                loss = loss.mean()
        # uniform smoothing term plus the usual NLL term
        return loss * self.eps / c + (1 - self.eps) * torch.nn.functional.nll_loss(
            log_pred, target, reduction=self.reduction, ignore_index=self.ignore_index)

In biological settings, this label smoothing is very useful.
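Reading the forward pass of LabelSmoothingCrossEntropy term by term, with reduction='mean' and no ignored targets, the loss over N samples and C classes can be written as below. Here ε stands for eps, p_{i,c} for the softmax probability of class c on sample i, and y_i for the target class; these symbols are introduced only for this note.

```latex
\mathcal{L}
  = \frac{\varepsilon}{C}\cdot\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C}\bigl(-\log p_{i,c}\bigr)
  + (1-\varepsilon)\cdot\frac{1}{N}\sum_{i=1}^{N}\bigl(-\log p_{i,y_i}\bigr)
```

The first term is the cross-entropy against a uniform distribution over the C classes, the second is the ordinary negative log-likelihood, so the sum equals the cross-entropy against the smoothed target (1 - ε)·one_hot(y_i) + ε/C.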

torch linear

a = torch.rand(10,32)

b = torch.nn.Linear(in_features=32,out_features=16,bias=True)(a)

print(b.size()) # torch.Size([10, 16])

Random generation in torch

torch.rand generates a random matrix with values in [0, 1)

x = torch.rand(2,3)

print(x)

# tensor([[0.9800, 0.0970, 0.0885],

# [0.5062, 0.7116, 0.0868]])

Use Python's built-in random module to pick an integer from a range

import random

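y = random.randint(0,2) # both endpoints are inclusive, so y is 0, 1 or 2
print(y)
## 0

torch.stack

Concatenates a sequence of tensors along a new dimension. All tensors in the sequence must have the same shape.

# both are 3*3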

T1 = torch.tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

T2 = torch.tensor([[10, 20, 30],

[40, 50, 60],

[70, 80, 90]])

print(torch.stack((T1,T2),dim=0).shape)

print(torch.stack((T1,T2),dim=1).shape)

print(torch.stack((T1,T2),dim=2).shape)

print(torch.stack((T1,T2),dim=3).shape)

# outputs:
# torch.Size([2, 3, 3])
# torch.Size([3, 2, 3])
# torch.Size([3, 3, 2])
# dim=3 is outside the valid range for the 3-D result, so the last call raises:
# IndexError: Dimension out of range (expected to be in range of [-3, 2], but got 3)

torch.permute

x = torch.rand(3,5)

x = torch.unsqueeze(x,0)

x = torch.unsqueeze(x,0)

print(x.size()) ## torch.Size([1, 1, 3, 5])

x = x.permute(0,3,2,1)

print(x.size()) ## torch.Size([1, 5, 3, 1])

torch.chunk

import torch

print(torch.arange(5).chunk(3))

# (tensor([0, 1]), tensor([2, 3]), tensor([4]))

# returns a tuple: [0, 1, 2, 3, 4] is split into 3 chunks

a=torch.tensor([[[1,2],[3,4]],
                [[5,6],[7,8]]])
b=torch.chunk(a,2,dim=1) # split a into 2 chunks along dim 1

print(a.size()) # torch.Size([2, 2, 2])

print(a)

# tensor([[[1, 2],

# [3, 4]],

#

# [[5, 6],

# [7, 8]]])

# print(b.size()) # b is a tuple, so this would raise an error

print(b)

# (tensor([[[1, 2]],[[5, 6]]]), tensor([[[3, 4]],[[7, 8]]]))

torch.nn.init.uniform_

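torch.nn.init.uniform_(tensor, a=0.0, b=1.0) fills the input tensor in place with values drawn from the uniform distribution U(a, b). The older name torch.nn.init.uniform (without the trailing underscore) is deprecated.

Parameters:

tensor - an n-dimensional torch.Tensor

a - lower bound of the uniform distribution

b - upper bound of the uniform distribution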
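A quick usage sketch, with the bounds -0.1 and 0.1 picked arbitrarily for illustration:

```python
import torch

w = torch.empty(3, 5)                     # uninitialized storage
torch.nn.init.uniform_(w, a=-0.1, b=0.1)  # fills w in place

# every entry should now lie inside [a, b]
in_range = bool(((w >= -0.1) & (w <= 0.1)).all())
print(w)
print(in_range)
```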

torch.nn.Parameter()

self.v = torch.nn.Parameter(torch.FloatTensor(hidden_size))

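This converts a fixed, non-trainable tensor into a trainable Parameter and registers it with the module (it then appears in net.parameters(), so the optimizer can update it). After the conversion, self.v is part of the model: a parameter that changes as training proceeds. The point of using it is to let certain variables keep adjusting their values during learning until they reach an optimum.

The optimizer in one line

optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

torch.eq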
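The registration effect can be checked directly. In the sketch below, TinyModule is a hypothetical module written for this note: it holds one Parameter and one plain tensor, and only the Parameter shows up in named_parameters() and gets updated by the optimizer.

```python
import torch


class TinyModule(torch.nn.Module):
    def __init__(self, hidden_size):
        super().__init__()
        self.v = torch.nn.Parameter(torch.rand(hidden_size))  # registered, trainable
        self.c = torch.rand(hidden_size)                      # plain tensor, not registered

    def forward(self, x):
        return (x * self.v + self.c).sum()


net = TinyModule(4)
names = [name for name, _ in net.named_parameters()]
print(names)  # only 'v' is listed

before = net.v.detach().clone()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)
loss = net(torch.ones(4))
loss.backward()
optimizer.step()

changed = not torch.equal(before, net.v.detach())
print(changed)  # v moved after one optimizer step
```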

import torch

x = torch.LongTensor([1,2,3,4])

y = torch.LongTensor([2,2,3,4])

accuracy=torch.eq(x,y).float().mean()

print("accuracy : {}%".format(accuracy*100))

### accuracy : 75.0%

torch save_model & load_model

save_files = {
    'model': model.state_dict(),
    'optimizer': optimizer.state_dict(),
    'lr_scheduler': lr_scheduler.state_dict(),
    'epoch': epoch}
torch.save(save_files, "./save_weights/ssd300-{}.pth".format(epoch))

Save example

save_files = {
    'args': args,
    'bottom_encoder': model.bottom_encoder.state_dict(),
    'p2d_encoder': model.p2d_encoder.state_dict(),
    'middle_encoder': model.middle_encoder.state_dict(),
    'd2poly_d_encoder': model.d2poly_d_encoder.state_dict(),
    'top_encoder': model.top_encoder.state_dict(),
    'optimizer': optimizer.state_dict(), }
torch.save(save_files, "./save_weights/model-{}.pth".format(run))

Load example

model_dict = torch.load("./save_weights/model-{}.pth".format(run), map_location=args.device)

args = model_dict['args']

model = BaseModel(args)

optimizer = model.__prepare_optimizer__(args)

model.bottom_encoder.load_state_dict(model_dict['bottom_encoder'])

model.p2d_encoder.load_state_dict(model_dict['p2d_encoder'])

model.middle_encoder.load_state_dict(model_dict['middle_encoder'])

model.d2poly_d_encoder.load_state_dict(model_dict['d2poly_d_encoder'])

model.top_encoder.load_state_dict(model_dict['top_encoder'])

optimizer.load_state_dict(model_dict['optimizer'])

loss, accuracy = model.train(args)

print('loss:{:.2f} accuracy : {}%'.format(loss, accuracy * 100))

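When validating, remember to disable gradients

with torch.no_grad():
    results = model()

torch.unique: deduplicate, sorted ascending

x = torch.unique(input) # unique values in ascending order
x, inverse = torch.unique(input, return_inverse=True) # same values, plus for each input element its index in x

Converting a torch.Tensor to a list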

a = torch.Tensor([1,2,3])

lis = a.numpy().tolist()

print(lis)

# [1.0, 2.0, 3.0]

lis = [int(v) for v in lis]
# converts every element to int

Quickly writing a torch.Tensor to a text (CSV) file

a = torch.eye(8)

pd.DataFrame(a.int().cpu().detach().numpy()).to_csv('test.csv')

Converting a torch.Tensor to numpy

numpy_array = torch_Tensor.cpu().detach().numpy()

torch.unsqueeze & torch.squeeze

input = torch.randn(1, 256, 100)

x = torch.unsqueeze(input,0)

print(x.size()) # torch.Size([1, 1, 256, 100])

x = torch.squeeze(input)

print(x.size()) # torch.Size([256, 100])

torch.nn.Embedding

import torch

import torch.nn as nn

embed=nn.Embedding(10,5) # 10 is the number of distinct indices x may contain (vocabulary size); 5 is the embedding dimension for each element

x=torch.LongTensor([[0,1,2],[3,2,1],[4,5,6],[9,7,8]]) # 4*3

x_embed=embed(x)

print(x_embed.size())

# torch.Size([4, 3, 5])

print(x_embed)

torch.nn.BatchNorm1d

import torch

import torch.nn as nn

BN = torch.nn.BatchNorm1d(5)

input = torch.Tensor([[1,2,3,4,5],

[10,20,30,40,50],

[100,200,400,400,500]])

output = BN(input)

print(output.size())

# torch.Size([3, 5])

print(output)

# tensor([[-0.8054, -0.8054, -0.7803, -0.8054, -0.8054],

# [-0.6040, -0.6040, -0.6313, -0.6040, -0.6040],

# [ 1.4094, 1.4094, 1.4116, 1.4094, 1.4094]],

# grad_fn=<NativeBatchNormBackward>)

Quickly generating an arithmetic sequence in torch

X = torch.LongTensor([i for i in range(10)])

print(X)

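# tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])
# torch.arange(10) gives the same result

torch.nn.Conv1d & torch.nn.Conv2d

Reference: "pytorch之nn.Conv1d和nn.Conv2d超详解" (a detailed explanation of nn.Conv1d and nn.Conv2d in PyTorch), lyj157175's blog, CSDN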

import torch

conv1d = torch.nn.Conv1d(in_channels=19081, out_channels=600, kernel_size=1, stride=1, padding=0)

x = torch.rand(1,19081,32)

y = conv1d(x)

print(y.size()) # torch.Size([1, 600, 32])

import torch

conv2d = torch.nn.Conv2d(256, 128, kernel_size=1, stride=1, padding=0, dilation=1, groups=1, bias=True)

x = torch.rand(1,256,64,32)

y = conv2d(x)

print(y.size()) ## torch.Size([1, 128, 64, 32])

Difference between torch.mul() and torch.mm()

Reference: "torch.mul() 和 torch.mm() 的区别" (the difference between torch.mul() and torch.mm()), Geek Fly's blog, CSDN
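In short, torch.mul multiplies element by element (with broadcasting), while torch.mm is the matrix product of two 2-D tensors. A small example with hand-picked values:

```python
import torch

a = torch.tensor([[1., 2.], [3., 4.]])
b = torch.tensor([[10., 20.], [30., 40.]])

elementwise = torch.mul(a, b)  # same as a * b
matmul = torch.mm(a, b)        # same as a @ b for 2-D tensors

print(elementwise)  # tensor([[ 10.,  40.], [ 90., 160.]])
print(matmul)       # tensor([[ 70., 100.], [150., 220.]])
```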

Graph visualization

import networkx as nx

import matplotlib.pyplot as plt

G = nx.Graph()

G.add_edges_from(edge_index.numpy().transpose()) # add_edges_from needs a num_edge*2 numpy array
adj = nx.adjacency_matrix(G)
print("Number of nodes in G:", G.number_of_nodes())
print("Number of edges in G:", G.number_of_edges())
nx.draw(G, node_color = 'pink',with_labels=True)
plt.show()

This can be wrapped in a function and placed in utils

import networkx as nx

import matplotlib.pyplot as plt

def present_graph(edge_index): # 接受torch.LongTensor 2*num_edge 形式

G = nx.Graph()

G.add_edges_from(edge_index.numpy().transpose())

adj = nx.adjacency_matrix(G)

print("G的节点数目",G.number_of_nodes())

print("G的边的数目",G.number_of_edges())

nx.draw(G, node_color = 'pink',with_labels=True)

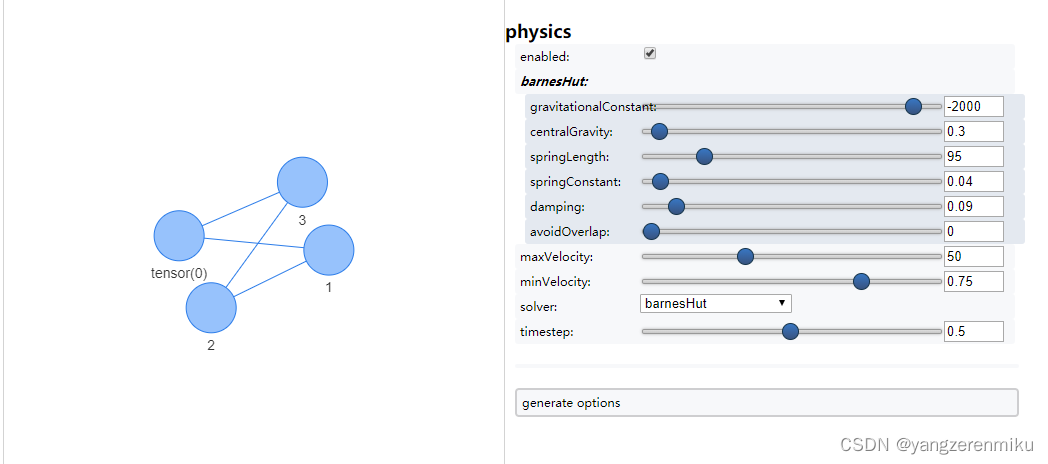

plt.show()也可以换一个库来实现,这个方法可以实现图节点的拖动,以及图片的设置等等

import torch

from pyvis.network import Network

def present_graph(n_id=None,edge_index=None,label=None,weight=None,color=None):
    net = Network()
    for i in range(n_id.shape[0]):
        # convert tensor elements to plain Python values before passing them to pyvis
        net.add_node(n_id=str(int(n_id[i])),label=str(int(label[i])))
    for i in range(edge_index.shape[1]):
        net.add_edge(str(int(edge_index[0][i])),str(int(edge_index[1][i])),weight=float(weight[i]))
    net.show_buttons(filter_=['physics'])
    net.show("a.html")

# some settings
num_nodes = 4 # assumed here: the example edges below use nodes 0..3
n_id = torch.arange(num_nodes) # node ids
edge_index = torch.LongTensor([[0,1,2,3],[1,2,3,0]]) # edge list (source row, target row), not a dense adjacency matrix
weight = torch.FloatTensor([0.1,0.2,0.3,0.4]) # edge weights (float, since a LongTensor cannot hold 0.1)
label = n_id # node labels
present_graph(n_id,edge_index,label,weight)

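Tips

Generate a rank matrix: within each row, a smaller number at a position means the element at that position is larger (0 marks the largest element of the row)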

a = torch.tensor([[10, 2, 3],

[4, 6, 5],

[7, 8, 9]])

a = torch.argsort(torch.argsort(a, dim=1, descending=True), dim=1, descending=False)

print(a)

# tensor([[0, 2, 1],

# [2, 0, 1],

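# [2, 1, 0]])

Get the model's parameter count in one line

total_params = sum(p.numel() for param in model.para_list for p in param)
# model.para_list is a custom list of parameter groups; for a plain nn.Module,
# sum(p.numel() for p in model.parameters()) gives the total

torch.cat & torch.stack

To put two 1-D torch tensors together into one 2-D tensor, use torch.stack:

torch.stack((index1,index2),dim=1)

torch.cat does not work for this, because it joins along an existing dimension and would return a single longer 1-D tensor. With dim=1 each input becomes a column, giving shape (n, 2); with dim=0 each input becomes a row, giving shape (2, n).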
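The shape difference between torch.stack and torch.cat on two 1-D tensors is easy to verify; index1 and index2 here are small made-up tensors:

```python
import torch

index1 = torch.tensor([0, 1, 2])
index2 = torch.tensor([3, 4, 5])

rows = torch.stack((index1, index2), dim=0)  # new leading dim: each input is a row
cols = torch.stack((index1, index2), dim=1)  # new trailing dim: each input is a column
flat = torch.cat((index1, index2), dim=0)    # no new dim: one longer 1-D tensor

print(rows.shape, cols.shape, flat.shape)
```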

Building a sparse matrix with torch.sparse_coo_tensor

# First build the dense 2-D adj matrix, convert it to a scipy sparse matrix, then convert that into a torch sparse tensor
import numpy as np
import pandas as pd
import scipy.sparse as sp
import torch

############ prepare the data ###################
data = pd.read_csv(r'name.csv',encoding='utf-8')
CID1 = np.array(data['CID1_order'])
CID2 = np.array(data['CID2_order'])
num_node = max(np.max(CID1),np.max(CID2))+1
print(num_node)
adj = np.zeros((num_node,num_node))
for i in range(data.shape[0]):
    adj[CID1[i],CID2[i]] = 1 # undirected graph
    adj[CID2[i],CID1[i]] = 1 # keep adj symmetric
adj = adj + np.eye(num_node) # add self-loops

############ convert to torch.sparse #########
# adj is the dense adjacency matrix
tmp_coo=sp.coo_matrix(adj)
values=tmp_coo.data
indices=np.vstack((tmp_coo.row,tmp_coo.col))
i=torch.from_numpy(indices).long() # cast the indices to int64
v=torch.from_numpy(values).long() # cast the float64 values to int64
edge_idx=torch.sparse_coo_tensor(i,v,tmp_coo.shape)

############ save #######################
import pickle
with open('ddi.pickle', 'wb') as file:
    pickle.dump(edge_idx, file)

########### load #########################
import pickle
with open('ddi.pickle', 'rb') as file: # with closes the file automatically
    matrix = pickle.load(file)
print(matrix)

Mini-batching a large graph with NeighborLoader

import torch

import torch.nn.functional as F

from torch_geometric.nn import GCNConv

#################### model #####################################
class Net(torch.nn.Module):
    def __init__(self,init_dim,num_classes):
        super(Net, self).__init__()
        self.conv1 = GCNConv(init_dim, init_dim)
        self.conv2 = GCNConv(init_dim, num_classes)

    def forward(self, x, edge_index):
        x = self.conv1(x, edge_index)
        x = F.relu(x)
        x = F.dropout(x, training=self.training)
        x = self.conv2(x, edge_index)
        return F.log_softmax(x, dim=1)

#################### prepare the data #####################################
import pickle
with open('dataset/data_dict.pickle', 'rb') as file:
    data_dict = pickle.load(file)
ppi_edge_index = data_dict['ppi_edge_index']
p_feature = torch.rand(19081,16) # random features for 19081 nodes
import random
label = []
for i in range(19081):
    label.append(random.randint(0,2)) # random labels in {0, 1, 2}
label =torch.LongTensor(label)

#################### core ##############################################

from torch_geometric.data import Data

from torch_geometric.loader import NeighborLoader

data = Data(x=p_feature, edge_index=ppi_edge_index,edge_attr=None,y=label,num_nodes=19081)

loader = NeighborLoader(data, batch_size=1024,num_neighbors=[25,10])

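## choose the num_neighbors values carefully; do not use 0
## to get embeddings for the whole graph, pass the full graph (data=Graph) where the graph is fed in below
## num_neighbors=[25,10] means two GNN layers follow: 25 neighbors are sampled for the first layer and 10 for the second

#################### print graph info, including its density ##########################
from torch_geometric.transforms import ToSparseTensor
print(ToSparseTensor()(data.__copy__()).adj_t)
#################### note: once the graph is sparsified with this transform, NeighborLoader can no longer be used ##########

#################### training #####################################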

model = Net(16,3)

optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

for epoch in range(200):
    loss = 0
    for step, batch in enumerate(loader):
        optimizer.zero_grad()
        out = model(batch.x,batch.edge_index) ## sub_graph embedding
        # out = model(p_feature,batch.edge_index) ## all_graph embedding (alternative, left disabled: batch.edge_index uses batch-local node ids, so it does not index p_feature directly)
        loss = F.nll_loss(out, batch.y)
        loss.backward()
        optimizer.step()
    print('epoch:{} loss:{:.2f}'.format(epoch,loss))
