首先通过代码理解,这个代码比较的是,自己写的BCEloss和官方的对比

import torch

import torch.nn.functional as F

import torch.nn as nn

import math

def validate_loss(output, target, weight=None, pos_weight=None):

# 处理正负样本不均衡问题

if pos_weight is None:

label_size = output.size()[1]

pos_weight = torch.ones(label_size)

# 处理多标签不平衡问题

if weight is None:

label_size = output.size()[1]

weight = torch.ones(label_size)

val = 0

for li_x, li_y in zip(output, target):

for i, xy in enumerate(zip(li_x, li_y)):

x, y = xy

loss_val = pos_weight[i] * y * math.log(x, math.e) + (1 - y) * math.log(1 - x, math.e)

val += weight[i] * loss_val

return -val / (output.size()[0] * output.size(1))

# 单标签二分类

m = nn.Sigmoid()

weight = torch.tensor([0.8])

loss_fct = nn.BCELoss(reduction="mean", weight=weight)

input_src = torch.Tensor([[0.8], [0.9], [0.3]])

target = torch.Tensor([[1], [1], [0]])

print(input_src.size())

print(target.size())

output = m(input_src)

loss = loss_fct(output, target)

print(loss.item())

# 验证计算

validate = validate_loss(output, target, weight)

print(validate.item())

结果

首先BCE的output是和标签target的size是相同的。output的值是一般都是经过sigmoid函数,目的是让output的数据变成概率形式,这样就可以和标签target的值比较了。

核心代码

for li_x, li_y in zip(output, target):

for i, xy in enumerate(zip(li_x, li_y)):

x, y = xy

loss_val = pos_weight[i] * y * math.log(x, math.e) + (1 - y) * math.log(1 - x, math.e)

val += weight[i] * loss_val

return -val / (output.size()[0] * output.size(1))

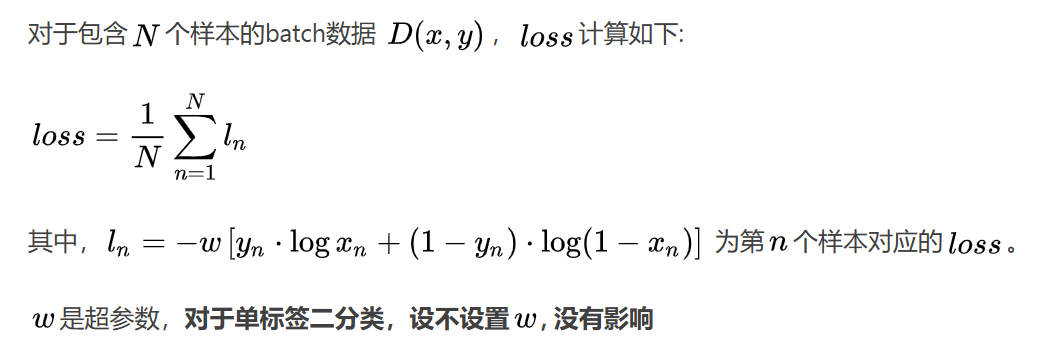

这段代码就是对公式的解释

以代码中的例子为例,output的经过sigmiod后,tensor([[0.6900], [0.7109],[0.5744]]),target还是Tensor([[1], [1], [0]])。首先第一组0.69和1比较,pos_weight[i] * y * math.log(x, math.e) + (1 - y) * math.log(1 - x, math.e),结果-0.37,同理第二组第三组。-0.34,-0.84,因为weight = torch.tensor([0.8]),所以是0.296,0.272,0.672,相加除以3等于0.41和官方结果相同。

pos_weight可以正负样本不均衡问题,因为它的值可以直接对正负样本调节。

weight可以调节不同标签的权重所以可以处理多标签不平衡问题

1515

1515