PyTorch 1.9: when training the GLIP model on multiple GPUs, the following error is raised, while single-GPU training works fine:

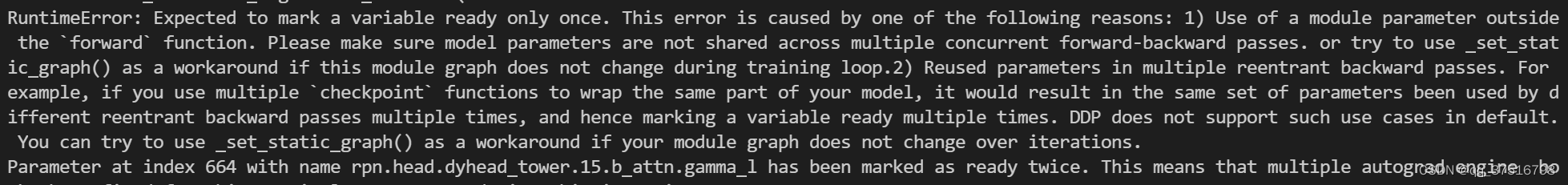

RuntimeError: Expected to mark a variable ready only once. This error is caused by one of the following reasons: 1) Use of a module parameter outside the forward function. Please make sure model parameters are not shared across multiple concurrent forward-backward passes. or try to use _set_static_graph() as a workaround if this module graph does not change during training loop.2) Reused parameters in multiple reentrant backward passes. For example, if you use multiple checkpoint functions to wrap the same part of your model, it would result in the same set of parameters been used by different reentrant backward passes multiple times, and hence marking a variable ready multiple times. DDP does not support such use cases in default. You can try to use _set_static_graph() as a workaround if your module graph does not change over iterations.

Troubleshooting steps:

- Set the environment variable TORCH_DISTRIBUTED_DEBUG to DETAIL:

export TORCH_DISTRIBUTED_DEBUG=DETAIL

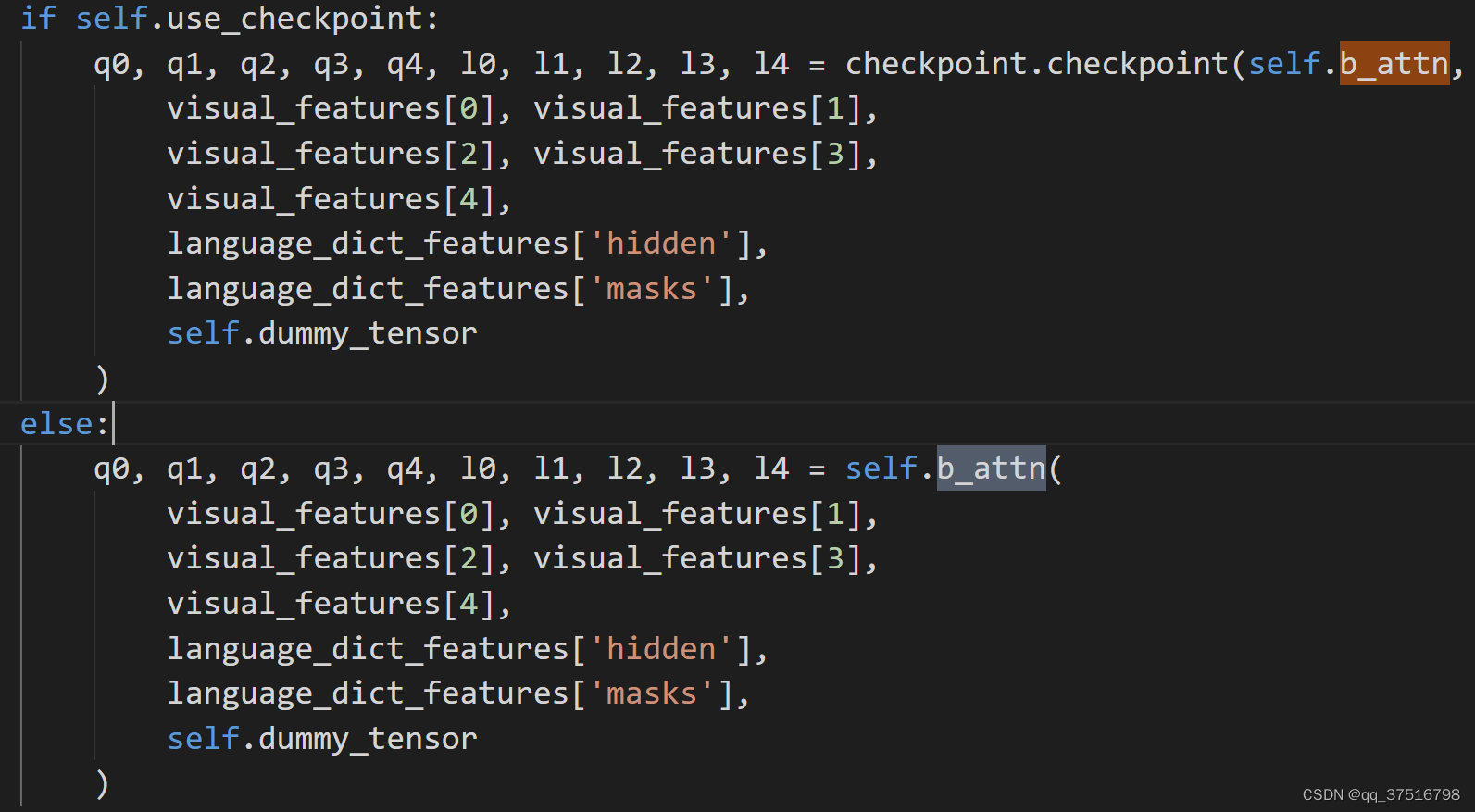

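- In the training code, set the find_unused_parameters argument of torch.nn.parallel.DistributedDataParallel to True.

- Rerun the training code. It now prints the name of the specific layer or operator that causes the error; in my case it was the b_attn operator.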
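A minimal sketch of the two settings above, assuming a single CPU process with the gloo backend so it runs without GPUs (a real GLIP job would start one process per GPU, typically with the nccl backend). The toy nn.Sequential model and the helper name build_ddp_model are hypothetical placeholders, not GLIP code.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP

# Same effect as `export TORCH_DISTRIBUTED_DEBUG=DETAIL`; set it before the
# process group and the DDP wrapper are created.
os.environ["TORCH_DISTRIBUTED_DEBUG"] = "DETAIL"


def build_ddp_model(rank: int = 0, world_size: int = 1) -> DDP:
    """Hypothetical helper: wrap a toy model in DDP with unused-parameter detection.

    Uses one CPU process and the gloo backend purely so the sketch is runnable
    anywhere; the file-based init avoids picking a TCP port.
    """
    if not dist.is_initialized():
        store_path = os.path.join(tempfile.mkdtemp(), "ddp_store")
        dist.init_process_group(
            backend="gloo",
            init_method=f"file://{store_path}",
            rank=rank,
            world_size=world_size,
        )
    model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 4))
    # find_unused_parameters=True: after each forward, DDP walks the autograd
    # graph from the outputs and marks parameters that take no part as ready,
    # instead of waiting for gradients that never arrive. With DETAIL debug
    # mode, DDP error messages name the offending parameters.
    return DDP(model, find_unused_parameters=True)
```

Each training process would call the helper once and then run its usual forward/backward loop on the returned wrapper.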

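- Find that operator in the code to pin down the cause. In my case the cause was PyTorch's checkpoint.checkpoint(). This function trades compute for GPU memory: it does not keep many of the intermediate activations of the computation graph, which reduces memory usage but adds extra computation and training time. This function is what broke distributed multi-GPU training.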

The torch.utils.checkpoint documentation describes it as follows:

Checkpointing works by trading compute for memory. Rather than storing all intermediate activations of the entire computation graph for computing backward, the checkpointed part does not save intermediate activations, and instead recomputes them in backward pass. It can be applied on any part of a model.
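The compute-for-memory trade can be checked with a small sketch: the same toy block (a hypothetical stand-in for an expensive sub-module such as b_attn) is run with and without torch.utils.checkpoint.checkpoint, and the gradients come out the same; only where the activations come from differs. It assumes a recent PyTorch release, where use_reentrant is passed explicitly; PyTorch 1.9's checkpoint does not accept that argument and always uses the reentrant implementation.

```python
from typing import List

import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class Block(nn.Module):
    """Toy MLP block standing in for an expensive sub-module (hypothetical)."""

    def __init__(self, dim: int = 32) -> None:
        super().__init__()
        self.net = nn.Sequential(nn.Linear(dim, dim * 4), nn.GELU(), nn.Linear(dim * 4, dim))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


def grads(use_ckpt: bool, seed: int = 0) -> List[torch.Tensor]:
    """Run one forward/backward pass and return parameter and input gradients."""
    torch.manual_seed(seed)  # same weights and input for both runs
    block = Block()
    x = torch.randn(4, 32, requires_grad=True)
    if use_ckpt:
        # Activations inside `block` are not stored; they are recomputed
        # during backward, trading extra compute for lower memory.
        y = checkpoint(block, x, use_reentrant=False)
    else:
        y = block(x)
    y.sum().backward()
    return [p.grad.clone() for p in block.parameters()] + [x.grad.clone()]
```

Calling grads(use_ckpt=False) and grads(use_ckpt=True) should give matching tensors; the checkpointed run simply re-executes block.net during backward.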

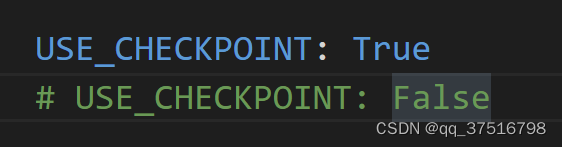

- Fix: set the corresponding checkpoint option to false in the config file so that checkpoint.checkpoint() is no longer used.
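A config switch like this typically gates a branch in the module's forward. The sketch below assumes a hypothetical FusionConfig.use_checkpoint flag and a FusionLayer whose placeholder linear layer stands in for the real attention operator; GLIP's actual config key and module names may differ.

```python
from dataclasses import dataclass

import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


@dataclass
class FusionConfig:
    # Hypothetical flag name; the real GLIP config key may differ.
    use_checkpoint: bool = False


class FusionLayer(nn.Module):
    def __init__(self, cfg: FusionConfig, dim: int = 16) -> None:
        super().__init__()
        self.cfg = cfg
        self.attn = nn.Linear(dim, dim)  # placeholder for the real attention op
        self.ckpt_calls = 0  # counts checkpointed forward passes, for illustration

    def _attn(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.attn(x))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if self.cfg.use_checkpoint and self.training:
            self.ckpt_calls += 1
            # Activations are dropped here and recomputed in backward.
            return checkpoint(self._attn, x, use_reentrant=False)
        # Plain forward: activations are stored, no recomputation in backward.
        return self._attn(x)
```

With use_checkpoint=False the layer takes the plain path, so backward involves no recomputation pass over its parameters; the cost is the activation memory that checkpointing used to save.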

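Summary: this error usually appears in distributed training when gradient synchronization across GPUs goes wrong, for example when DDP is asked to mark the same parameter's gradient as ready more than once in an iteration. The fix is to locate the specific operator responsible and address it there.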
