- You train a support vector classifier with a radial basis function kernel (γ=0.1) on some data. Out of all training instances, the algorithm selects S={1,7,9} as support vectors:

x(1)=[1,0.5,0.9] with label r(1)=+1 and optimal α1=0.95,

x(7)=[0.7,0.8,−0.3] with label r(7)=−1 and optimal α7=0.2,

x(9)=[0.7,0.3,0] with label r(9)=+1 and optimal α9=0.35.

The optimal bias of the classifier is b=−0.2. Compute the decision function h(x) and the class of the new example x=[0.1,0.7,0.2].

Decision function: h(x) ≈ 0.774 (taking K(x(t),x)=exp(−γ‖x(t)−x‖²)), so sign(h(x))=+1.

Class: +1

Analysis:
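The decision function is h(x) = Σ_{t∈S} αt r(t) K(x(t),x) + b. The sketch below works through the arithmetic, assuming the RBF kernel has the form K(u,v) = exp(−γ‖u−v‖²); the exercise does not spell out the kernel formula, so this form and the helper names `rbf_kernel` and `decision_function` are assumptions made for illustration.

```python
import math


def rbf_kernel(u, v, gamma):
    """Assumed RBF form: exp(-gamma * squared Euclidean distance)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * sq_dist)


def decision_function(x, support_vectors, gamma, bias):
    """h(x) = sum over support vectors of alpha_t * r_t * K(x_t, x), plus b."""
    total = sum(
        alpha * label * rbf_kernel(sv, x, gamma)
        for sv, label, alpha in support_vectors
    )
    return total + bias


# (x(t), r(t), alpha_t) for t in S = {1, 7, 9}, taken from the exercise.
support_vectors = [
    ([1.0, 0.5, 0.9], +1, 0.95),
    ([0.7, 0.8, -0.3], -1, 0.20),
    ([0.7, 0.3, 0.0], +1, 0.35),
]
gamma = 0.1
bias = -0.2
x_new = [0.1, 0.7, 0.2]

h = decision_function(x_new, support_vectors, gamma, bias)
predicted_class = 1 if h >= 0 else -1
print(f"h(x) = {h:.3f}, class = {predicted_class:+d}")
# prints: h(x) = 0.774, class = +1
```

The squared distances from x to x(1), x(7) and x(9) are 1.34, 0.62 and 0.56, so the kernel values are roughly 0.875, 0.940 and 0.946, and h(x) ≈ 0.831 − 0.188 + 0.331 − 0.2 ≈ 0.774.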

- The following plot shows the training instances of a binary classification problem.

What decision would improve the accuracy of a support vector classifier on this problem?

a. using a small value of parameter C to reduce the risk of overfitting.

b. using a non-linear kernel.

c. using a large value of parameter C to reduce the risk of overfitting.

d. running a forward feature selection procedure before training the classifier.

Correct answer: b

Analysis:

The plot shows two classes of points. No straight line separates them, but a circle does. So a non-linear kernel is a good way to solve this problem.

Looking at the data, we see that the two classes are not linearly separable. Therefore, one should try a non-linear kernel, for example, a radial basis function.
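As a rough illustration of why a non-linear mapping helps, the sketch below uses hypothetical data (two concentric rings, since the original plot is not reproduced here) and an explicit squared-radius feature. This feature is only a stand-in for what a non-linear kernel does implicitly; it is not the RBF kernel itself.

```python
import math


def ring(radius, n):
    """Return n points evenly spaced on a circle of the given radius."""
    return [
        (radius * math.cos(2 * math.pi * k / n),
         radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]


# Hypothetical data: one class on an inner ring, the other on an outer ring.
inner = ring(1.0, 8)
outer = ring(3.0, 8)


def squared_radius(p):
    """Non-linear feature: x1^2 + x2^2."""
    return p[0] ** 2 + p[1] ** 2


# In the original (x1, x2) plane no straight line separates the rings,
# but in the one-dimensional feature space a single threshold does.
threshold = 5.0  # any value between 1 and 9 works for this toy data
inner_ok = all(squared_radius(p) < threshold for p in inner)
outer_ok = all(squared_radius(p) > threshold for p in outer)
print(inner_ok, outer_ok)  # prints: True True
```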

- Support vector classifiers are an example of instance-based learners. They can be considered as a kind of weighted k-NN retaining ___________ and using _______________ as similarity measure. During the prediction phase, the contribution of each stored example (the support vectors) is weighted ______________________________.

Correct answer:

examples that are either misclassified or correctly classified with insufficient margin

the inner product (linear SVM) or a non-linear kernel (non-linear SVM)

by the corresponding coefficient α that was optimized during the training phase

Analysis:

Support vector classifiers are an example of instance-based learners. They can be considered as a kind of weighted k-NN retaining examples that are either misclassified (those for which the label and the prediction have opposite signs: r(t)h(x(t))<0) or correctly classified with insufficient margin (0≤r(t)h(x(t))≤1). Linear SVMs use the inner product (⟨x(t),x⟩) while non-linear ones use a non-linear kernel (K(x(t),x)) as similarity measure between the new example x and each stored example, i.e. support vector, x(t) with t∈S. During the prediction phase, the contribution of each stored example (the support vectors) is weighted by the corresponding coefficient αt that was optimized during the training phase.
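For the linear case, the instance-based view can be checked against the familiar weight-vector form: the weighted sum of inner products with the stored examples gives the same value as ⟨w,x⟩+b with w = Σ αt r(t) x(t). The sketch below uses hypothetical support vectors, coefficients and bias chosen only for illustration; the function names are likewise assumptions.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical (x_t, r_t, alpha_t) triples and bias, for illustration only.
support_vectors = [
    ([1.0, 0.5, 0.9], +1, 0.95),
    ([0.7, 0.8, -0.3], -1, 0.20),
    ([0.7, 0.3, 0.0], +1, 0.35),
]
bias = -0.2


def h_instance_based(x):
    """Weighted vote of stored examples, with the inner product as similarity."""
    return sum(alpha * r * dot(x_t, x) for x_t, r, alpha in support_vectors) + bias


# Collapse the stored examples into an explicit weight vector.
dim = len(support_vectors[0][0])
w = [
    sum(alpha * r * x_t[i] for x_t, r, alpha in support_vectors)
    for i in range(dim)
]


def h_weight_vector(x):
    """Standard linear form <w, x> + b."""
    return dot(w, x) + bias


x_new = [0.1, 0.7, 0.2]
print(h_instance_based(x_new), h_weight_vector(x_new))
```

With a non-linear kernel the weight vector generally cannot be written out explicitly, which is why the support vectors have to be stored and compared with each new example at prediction time.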

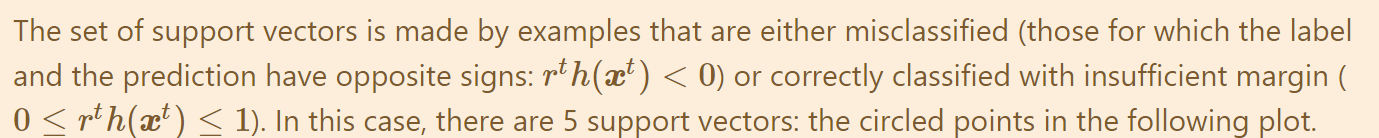

- The following plot shows the training instances, the decision boundary and the margin of a support vector classifier.

What is the number of support vectors?

a. 2.

b. 5.

c. 3.

d. 4.

Correct answer: b

Analysis:

The plot shows three lines: the decision boundary and the two margin boundaries. Two triangles and two black points lie in the margin region, so they are support vectors. A point that falls on the wrong side, in the other class's region, is also a support vector, so the triangle in the lower left counts as well. The total number of support vectors is 5.
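The counting rule can be sketched in code: a training point is treated as a support vector when r(t)h(x(t)) ≤ 1, which covers points on the margin, inside it, or misclassified. The boundary and the points below are hypothetical, chosen to mimic the situation described (they are not read off the original plot), and no training is performed; the rule is simply applied to an assumed boundary.

```python
# Assumed linear decision function h(x) = w . x + b (hypothetical values).
w = (1.0, 0.0)
b = 0.0


def h(x):
    return w[0] * x[0] + w[1] * x[1] + b


# Hypothetical training set: (point, label); triangles are +1, circles are -1.
training_set = [
    ((1.0, 0.5), +1),    # on the margin
    ((0.5, -1.0), +1),   # inside the margin
    ((-2.0, -2.0), +1),  # misclassified (lower left)
    ((3.0, 1.0), +1),    # well beyond the margin
    ((2.5, -0.5), +1),   # well beyond the margin
    ((-1.0, 0.0), -1),   # on the margin
    ((-0.5, 1.0), -1),   # inside the margin
    ((-3.0, 0.5), -1),   # well beyond the margin
]

# Keep the points with r * h(x) <= 1 (small tolerance for rounding).
support_vectors = [
    (x, r) for x, r in training_set if r * h(x) <= 1.0 + 1e-9
]
print(len(support_vectors))  # prints: 5
```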
