TensorBoard

TensorBoard: the visualization tool from TensorFlow. It supports visualizing images, audio, scalars, text, and more.

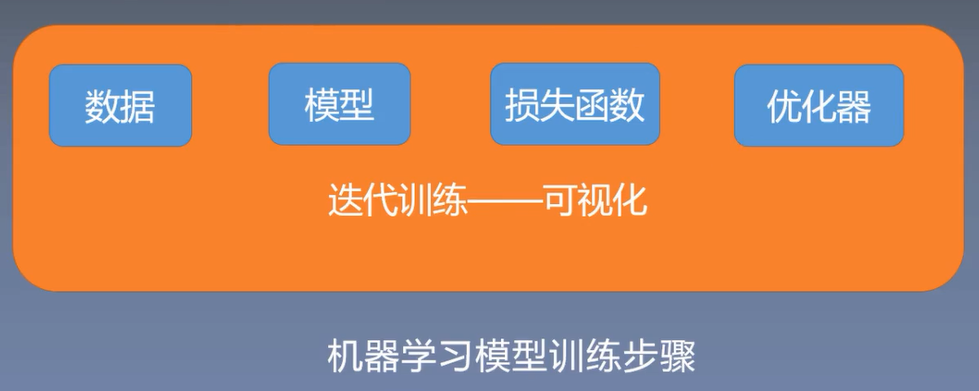

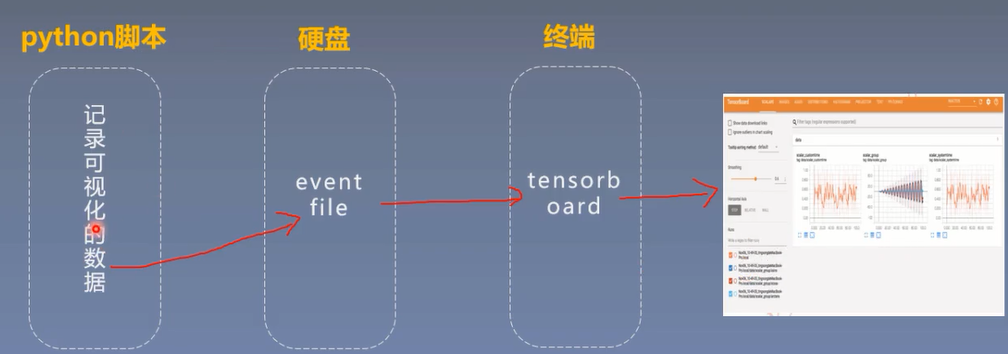

How TensorBoard runs:

`pip install tensorboard` may fail with the error `No module named 'past'`.

Fix: `pip install future`

Then start TensorBoard and point it at the log directory:

    tensorboard --logdir='./save_path'

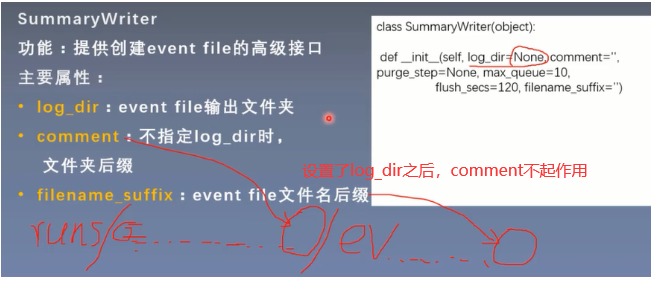

The SummaryWriter class

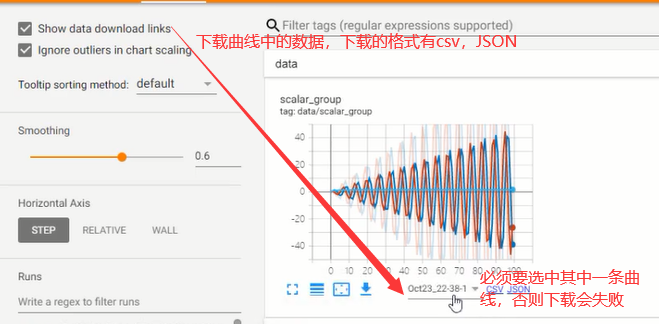

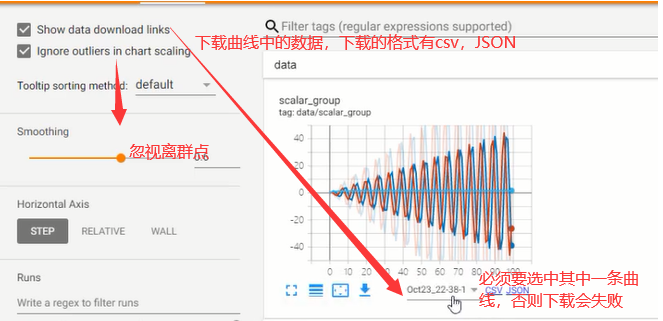

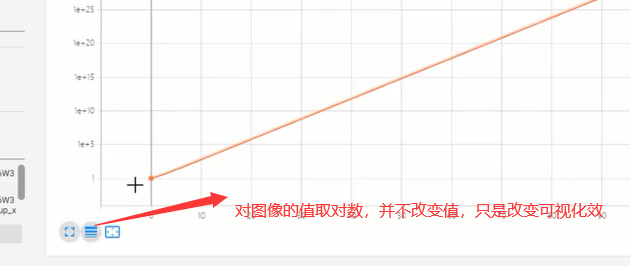

1. add_scalar and add_scalars

    import numpy as np
    from torch.utils.tensorboard import SummaryWriter

    flag = 1
    if flag:
        max_epoch = 100
        path = './'
        writer = SummaryWriter(path)

        for x in range(max_epoch):
            # add_scalar: log one scalar; x * 2 is the y value, x is the global step (x-axis)
            writer.add_scalar('y=2x', x * 2, x)
            writer.add_scalar('y=pow_2_x', 2 ** x, x)

            # add_scalars: log several curves in a single chart
            writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),
                                                     "xcosx": x * np.cos(x)}, x)

        writer.close()

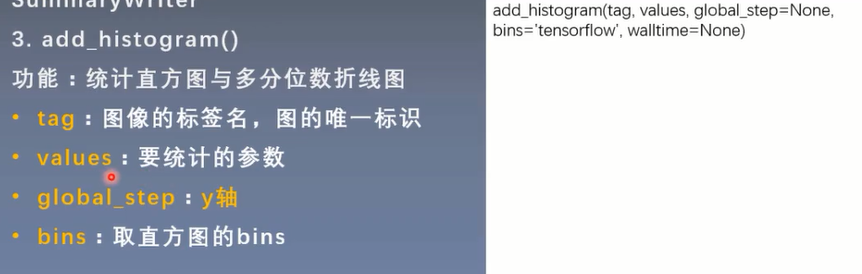

2. add_histogram

    import matplotlib.pyplot as plt
    import numpy as np
    from torch.utils.tensorboard import SummaryWriter

    flag = 1
    if flag:
        writer = SummaryWriter(comment='test_comment', filename_suffix="test_suffix")

        for x in range(2):
            np.random.seed(x)

            # the points 0..99
            data_union = np.arange(100)
            # points drawn from a normal distribution
            data_normal = np.random.normal(size=1000)

            # add_histogram: logs a histogram and a multi-quantile line chart;
            # x is the global_step
            writer.add_histogram('distribution union', data_union, x)
            writer.add_histogram('distribution normal', data_normal, x)

            plt.subplot(121).hist(data_union, label="union")
            plt.subplot(122).hist(data_normal, label="normal")
            plt.legend()
            plt.show()

        writer.close()

Visualizing gradients, loss, and accuracy

    # log the data; it is saved to the event file
    writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
    writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

    # once per epoch, log the gradients and weights
    for name, param in net.named_parameters():
        writer.add_histogram(name + '_grad', param.grad, epoch)
        writer.add_histogram(name + '_data', param, epoch)

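Use these histograms to inspect how the parameters and gradients are distributed. Here the gradients of the earlier layers are small while those of the later layers are large, which indicates vanishing gradients: the gradient fades as it is propagated back toward the early layers.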

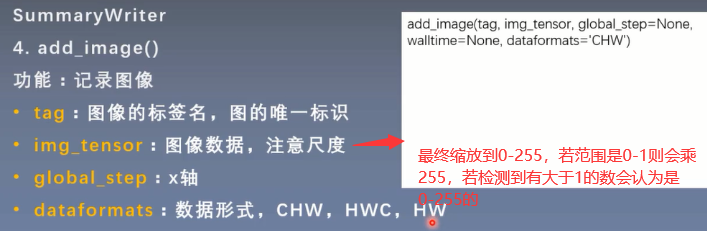
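The logging snippet above can be tried end to end with the following minimal sketch. It assumes a tiny two-layer network and random data as stand-ins for the real `net` and data loader, and it writes to a temporary directory; none of these names come from the original training script.

```python
import os
import tempfile

import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter

torch.manual_seed(0)

# Hypothetical stand-ins for the real network and dataset.
net = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 2))
inputs = torch.randn(64, 10)
labels = torch.randint(0, 2, (64,))

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)

log_dir = tempfile.mkdtemp(prefix="tb_grad_demo_")  # assumed log location
writer = SummaryWriter(log_dir)

for epoch in range(3):
    optimizer.zero_grad()
    outputs = net(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

    # Scalars: one point per step.
    accuracy = (outputs.argmax(dim=1) == labels).float().mean().item()
    writer.add_scalars("Loss", {"Train": loss.item()}, epoch)
    writer.add_scalars("Accuracy", {"Train": accuracy}, epoch)

    # Histograms: gradient and weight distribution of every parameter.
    for name, param in net.named_parameters():
        writer.add_histogram(name + "_grad", param.grad, epoch)
        writer.add_histogram(name + "_data", param, epoch)

writer.close()
print(sorted(os.listdir(log_dir)))  # event file plus one folder per add_scalars curve
```

Pointing `tensorboard --logdir` at the printed directory should show the curves under Scalars and the per-layer distributions under Histograms.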

3. add_image: image visualization

Each call logs a single image.

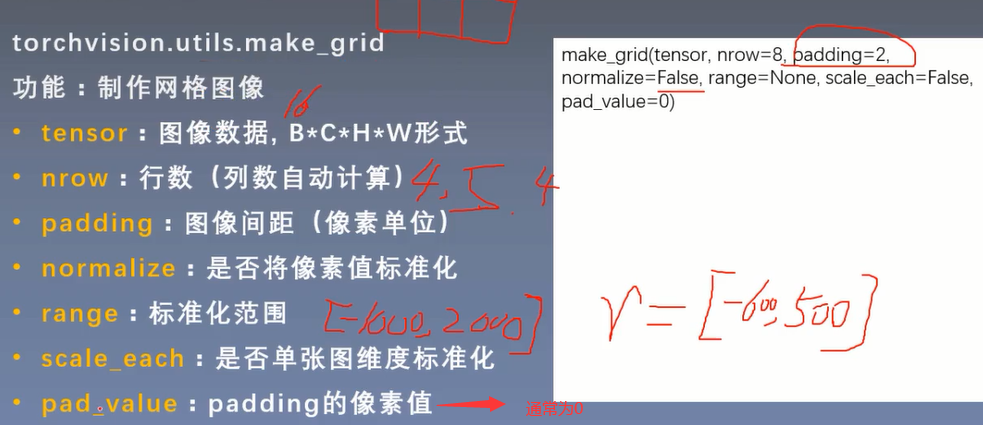
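A minimal sketch of `add_image`, assuming a random tensor in place of a real picture and a temporary log directory. The tag names are made up for illustration.

```python
import os
import tempfile

import torch
from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp(prefix="tb_image_demo_")  # assumed log location
writer = SummaryWriter(log_dir)

# A fake RGB image in CHW layout with values in [0, 1].
img_chw = torch.rand(3, 64, 64)
writer.add_image("random_image_chw", img_chw, global_step=0)

# The same image in HWC layout; the layout has to be stated via dataformats.
img_hwc = img_chw.permute(1, 2, 0)
writer.add_image("random_image_hwc", img_hwc, global_step=0, dataformats="HWC")

writer.close()
print(sorted(os.listdir(log_dir)))
```

`add_image` defaults to `dataformats='CHW'`, so an image loaded as height x width x channels needs either a permute or the explicit `dataformats="HWC"` argument.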

4. torchvision.utils.make_grid

Combines several images into one grid image; useful for inspecting a dataset.

5. Visualizing convolution kernels and feature maps

Kernel visualization

    # ----------------------------------- kernel visualization -----------------------------------
    import torch.nn as nn
    import torchvision.models as models
    import torchvision.utils as vutils
    from torch.utils.tensorboard import SummaryWriter

    # flag = 0
    flag = 1
    if flag:
        writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

        alexnet = models.alexnet(pretrained=True)

        kernel_num = -1
        vis_max = 1  # only visualize conv layers 0..vis_max

        for sub_module in alexnet.modules():
            if isinstance(sub_module, nn.Conv2d):
                kernel_num += 1
                if kernel_num > vis_max:
                    break
                kernels = sub_module.weight
                # Conv2d weights are laid out as (out_channels, in_channels, height, width)
                c_out, c_int, k_h, k_w = tuple(kernels.shape)

                for o_idx in range(c_out):
                    kernel_idx = kernels[o_idx, :, :, :].unsqueeze(1)  # make_grid expects BCHW, so add a channel dimension
                    kernel_grid = vutils.make_grid(kernel_idx, normalize=True, scale_each=True, nrow=c_int)
                    writer.add_image('{}_Convlayer_split_in_channel'.format(kernel_num), kernel_grid, global_step=o_idx)

                kernel_all = kernels.view(-1, 3, k_h, k_w)  # 3, h, w
                kernel_grid = vutils.make_grid(kernel_all, normalize=True, scale_each=True, nrow=8)  # c, h, w
                writer.add_image('{}_all'.format(kernel_num), kernel_grid, global_step=322)

                print("{}_convlayer shape:{}".format(kernel_num, tuple(kernels.shape)))

        writer.close()

Feature map visualization; the layer to capture has to be chosen manually

    # ----------------------------------- feature map visualization -----------------------------------
    import torchvision.models as models
    import torchvision.transforms as transforms
    import torchvision.utils as vutils
    from PIL import Image
    from torch.utils.tensorboard import SummaryWriter

    # flag = 0
    flag = 1
    if flag:
        writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

        # data
        path_img = "./lena.png"  # your path to image
        normMean = [0.49139968, 0.48215827, 0.44653124]
        normStd = [0.24703233, 0.24348505, 0.26158768]

        norm_transform = transforms.Normalize(normMean, normStd)
        img_transforms = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            norm_transform
        ])

        img_pil = Image.open(path_img).convert('RGB')
        if img_transforms is not None:
            img_tensor = img_transforms(img_pil)
        img_tensor.unsqueeze_(0)  # chw --> bchw

        # model
        alexnet = models.alexnet(pretrained=True)

        # forward pass through the first conv layer only
        convlayer1 = alexnet.features[0]
        fmap_1 = convlayer1(img_tensor)

        # preprocessing: treat every channel as its own single-channel image
        fmap_1.transpose_(0, 1)  # bchw=(1, 64, 55, 55) --> (64, 1, 55, 55)
        fmap_1_grid = vutils.make_grid(fmap_1, normalize=True, scale_each=True, nrow=8)

        writer.add_image('feature map in conv1', fmap_1_grid, global_step=322)
        writer.close()

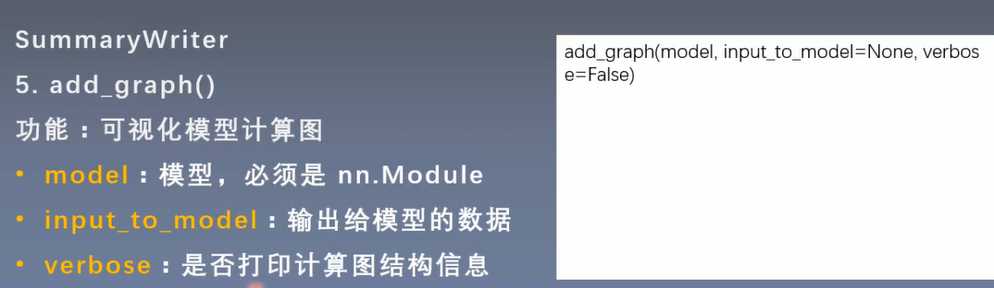

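5. add_graph(): the computation graph, i.e. the whole computation flow of the model

This does not work correctly in PyTorch 1.2; PyTorch 1.3 is required.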

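    import torch
    from torch.utils.tensorboard import SummaryWriter

    # LeNet is the model class defined elsewhere in the project

    # flag = 0
    flag = 1
    if flag:
        writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

        # model and a dummy input with the shape the model expects
        fake_img = torch.randn(1, 3, 32, 32)
        lenet = LeNet(classes=2)

        writer.add_graph(lenet, fake_img)
        writer.close()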

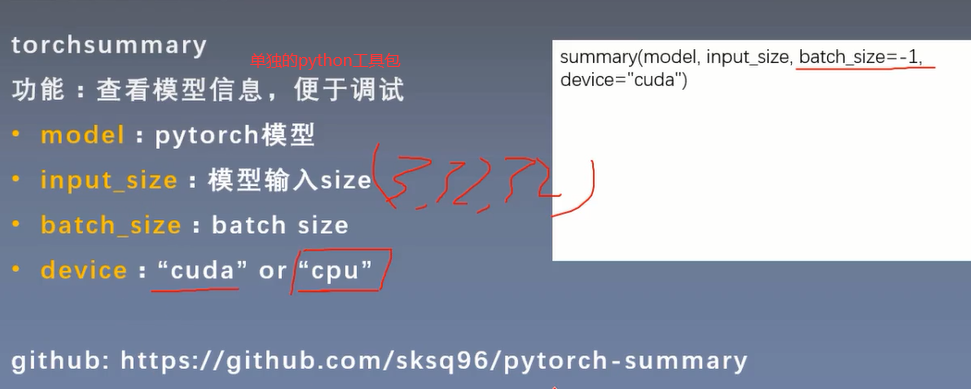
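Since the `LeNet` class itself is not shown here, the following self-contained sketch uses a hypothetical LeNet-style model, `TinyLeNet`, to show what `add_graph` needs: a module and an example input of the right shape. The layer sizes are assumptions chosen for a 3x32x32 input.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.tensorboard import SummaryWriter


class TinyLeNet(nn.Module):
    """Hypothetical LeNet-style model for 3x32x32 inputs."""

    def __init__(self, classes):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, classes)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)  # 32 -> 28 -> 14
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)  # 14 -> 10 -> 5
        x = x.view(x.size(0), -1)                   # flatten to (B, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


model = TinyLeNet(classes=2)
fake_img = torch.randn(1, 3, 32, 32)

log_dir = tempfile.mkdtemp(prefix="tb_graph_demo_")  # assumed log location
writer = SummaryWriter(log_dir)
writer.add_graph(model, fake_img)  # traces the model with the example input
writer.close()

print(model(fake_img).shape)  # torch.Size([1, 2])
```

The example input only has to have the right shape and dtype; its values do not matter because the graph is obtained by tracing one forward pass.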

6. torchsummary: inspect model information, handy for debugging

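    from torchsummary import summary

    # arguments: the model and the input size (C, H, W), without the batch dimension
    # summary() prints the table itself, so print() is not needed
    summary(lenet, (3, 32, 32), device="cpu")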

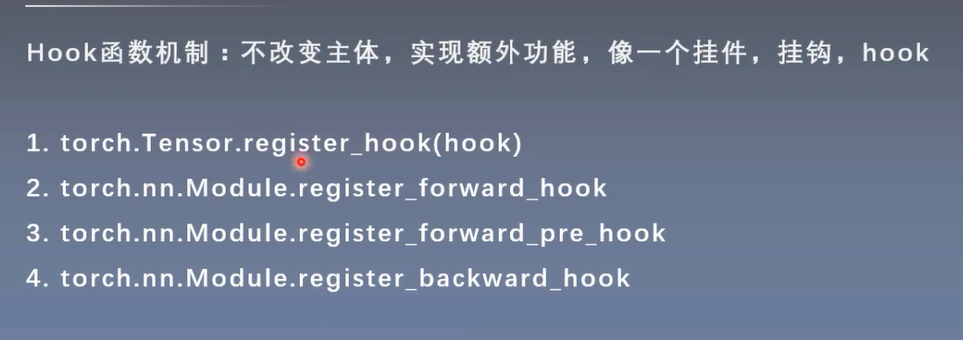

7. Hook functions: printing feature map sizes

A hook is attached to the forward or backward pass.

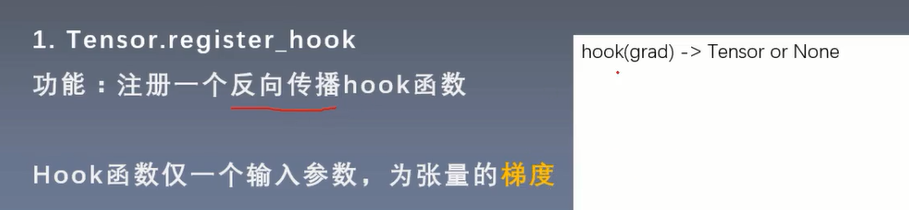

1. tensor.register_hook

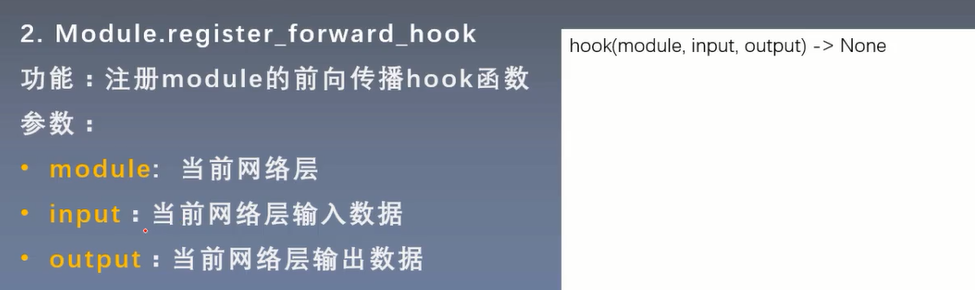
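A small sketch of `register_hook` on an intermediate tensor, whose gradient would otherwise be discarded after the backward pass. The toy computation and the names `grad_hook` and `captured` are chosen for illustration.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
x = torch.tensor([2.0], requires_grad=True)

a = w + x          # intermediate (non-leaf) tensor, a = 3
b = w + 1          # b = 2
y = (a * b).sum()  # y = 6

captured = []


def grad_hook(grad):
    # Called with dy/da when the backward pass reaches `a`.
    captured.append(grad.clone())


handle = a.register_hook(grad_hook)
y.backward()
handle.remove()  # detach the hook once it is no longer needed

print(captured[0])  # tensor([2.])  because dy/da = b
print(w.grad)       # tensor([5.])  because dy/dw = a + b
```

If the hook returns a tensor, that value replaces the gradient for the rest of the backward pass; returning nothing, as here, leaves it unchanged.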

2. module.register_forward_hook

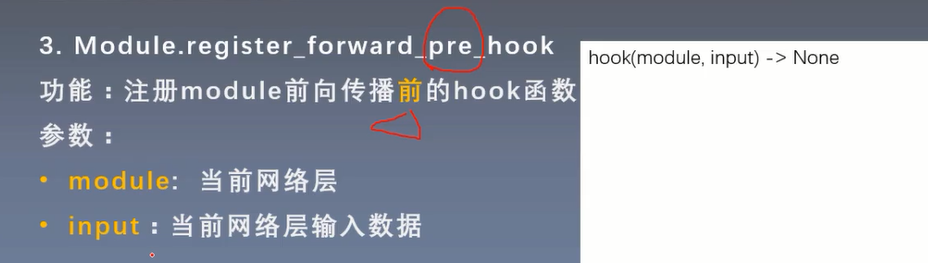
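One way to print feature map sizes with a forward hook is sketched below. The small `nn.Sequential` network and the helper `make_hook` are hypothetical and only serve as an example.

```python
import torch
import torch.nn as nn

# Hypothetical network, used only to demonstrate the hook.
net = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
)

shapes = {}


def make_hook(name):
    # A forward hook receives (module, inputs, output) after the module has run.
    def hook(module, inputs, output):
        shapes[name] = tuple(output.shape)
    return hook


handles = [m.register_forward_hook(make_hook(n)) for n, m in net.named_children()]

with torch.no_grad():
    net(torch.randn(1, 3, 32, 32))

for h in handles:
    h.remove()

for name, shape in shapes.items():
    print(name, shape)
```

The same pattern can store `output` itself instead of its shape, which gives the feature maps without editing the model's `forward`.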

3.

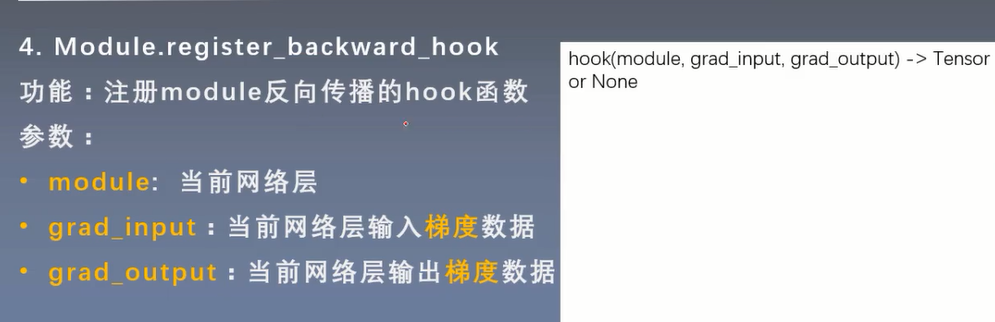

4.

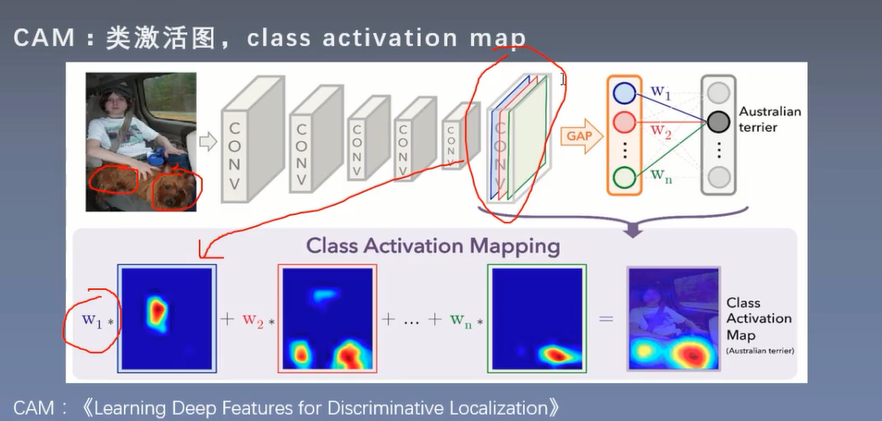

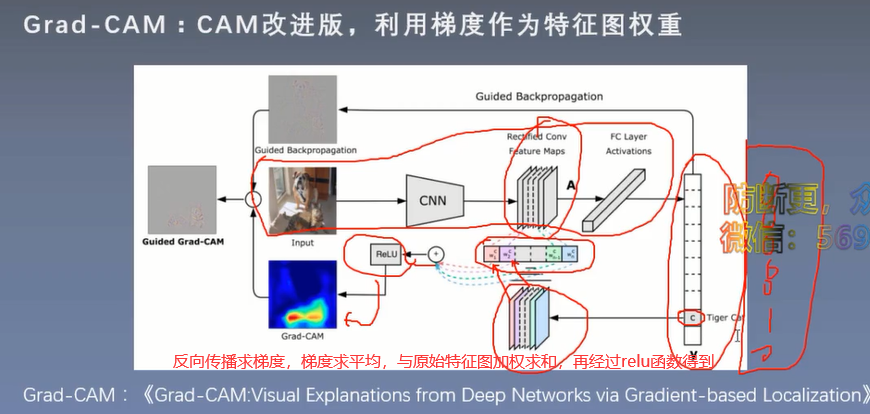

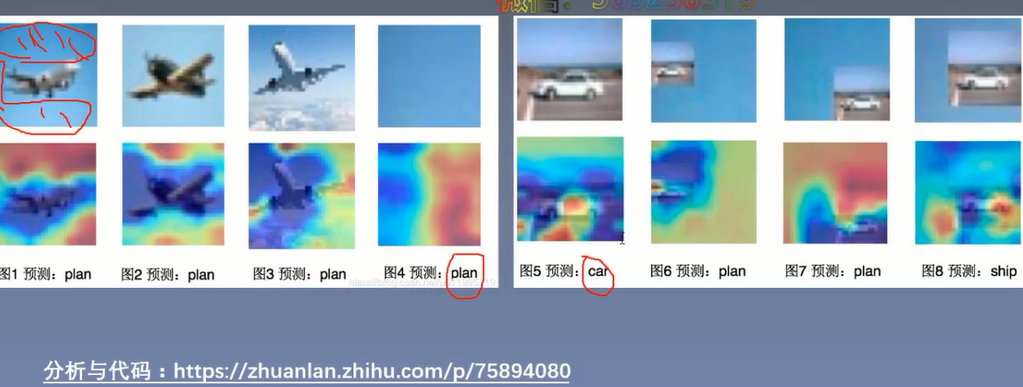

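8. CAM and Grad-CAM: where the network is looking

The activation map is obtained as a weighted average of the feature maps.

The weights w are taken from the fully connected layer that follows global average pooling of the feature maps.

The last feature map is reduced to a vector by global average pooling and then passed to an FC layer.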
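The description above corresponds to plain CAM (not Grad-CAM) and can be sketched as follows. `TinyCamNet` is a hypothetical, untrained network with the required structure (conv features, global average pooling, one FC layer), and the input is random, so the resulting map is meaningful only as a demonstration of the computation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)


class TinyCamNet(nn.Module):
    """Hypothetical CAM-style network: conv features -> GAP -> FC."""

    def __init__(self, num_classes=2):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(8, 16, 3, padding=1), nn.ReLU(),
        )
        self.fc = nn.Linear(16, num_classes)

    def forward(self, x):
        fmap = self.features(x)         # (B, 16, H, W): last feature map
        pooled = fmap.mean(dim=(2, 3))  # global average pooling -> (B, 16)
        return self.fc(pooled), fmap


net = TinyCamNet().eval()
img = torch.randn(1, 3, 32, 32)

with torch.no_grad():
    logits, fmap = net(img)
    cls = logits.argmax(dim=1).item()

    # CAM: weight each channel of the feature map by the FC weight of the class.
    w = net.fc.weight[cls]                           # (16,)
    cam_raw = torch.einsum("k,khw->hw", w, fmap[0])  # (16, 16)

    # Keep positive evidence, scale to [0, 1], upsample to the input size.
    cam = F.relu(cam_raw)
    cam = cam / (cam.max() + 1e-8)
    cam_up = F.interpolate(cam[None, None], size=img.shape[-2:],
                           mode="bilinear", align_corners=False)[0, 0]

print(cam_up.shape)  # torch.Size([32, 32])
```

Because pooling and the FC layer are both linear, the spatial mean of `cam_raw` plus the class bias equals the class logit, which is why the map can be read as each location's contribution to the prediction.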
