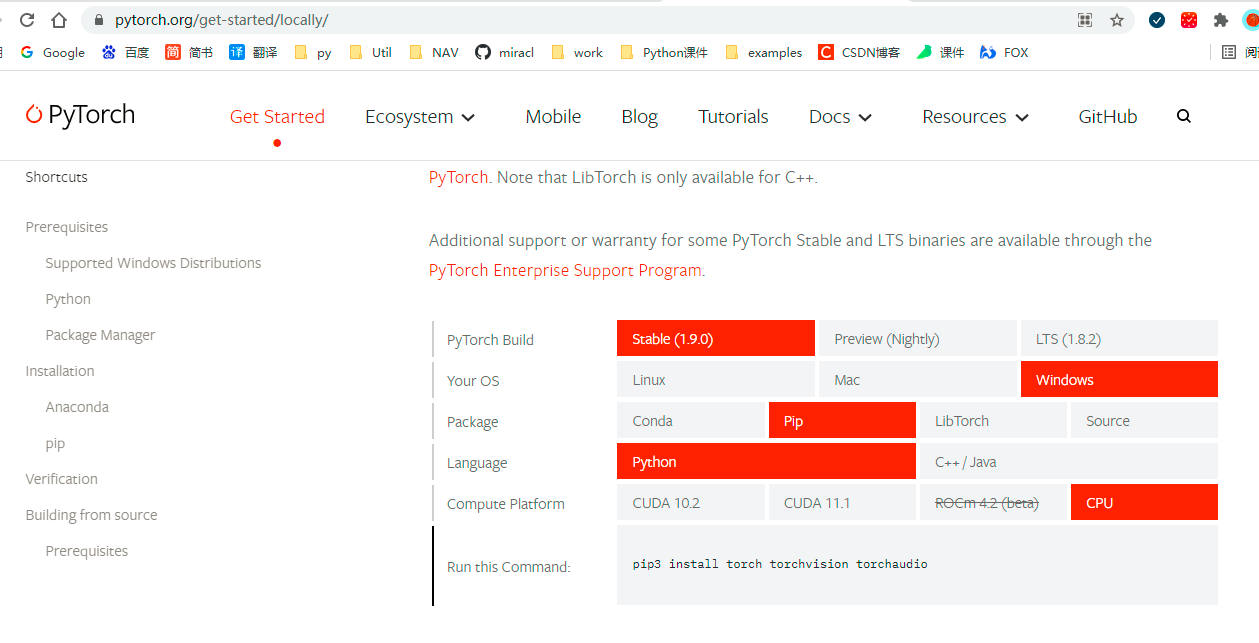

Installing PyTorch

Go to https://pytorch.org/get-started/locally/ and pick the install command that matches your platform and version.

Windows/macOS (CPU)

pip3 install torch torchvision torchaudio

import torch

# Define a vector

vector = torch.tensor([1,2,3,4])

print('Vector:\t\t', vector)

print('Vector Shape:\t', vector.shape)

Vector: tensor([1, 2, 3, 4])

Vector Shape: torch.Size([4])

# Define a matrix

matrix = torch.tensor([[1,2],[3,4]])

print('Matrix:\n', matrix)

print('Matrix Shape:\n', matrix.shape)

Matrix:

tensor([[1, 2],

[3, 4]])

Matrix Shape:

torch.Size([2, 2])

# Define a 3-D tensor

tensor = torch.tensor([[[1,2],[3,4]],[[1,2],[3,4]]])

print('Tensor:\n', tensor)

print('Tensor Shape:\n', tensor.shape)

Tensor:

tensor([[[1, 2],

[3, 4]],

[[1, 2],

[3, 4]]])

Tensor Shape:

torch.Size([2, 2, 2])
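The three definitions above differ only in how deeply the lists are nested. As a small sketch (the variable name `tensor3d` is mine, not from the original), `ndim` and `numel()` make that explicit alongside `shape`:

```python
import torch

# Same nested-list literals as the vector, matrix and 3-D tensor above.
vector = torch.tensor([1, 2, 3, 4])
matrix = torch.tensor([[1, 2], [3, 4]])
tensor3d = torch.tensor([[[1, 2], [3, 4]], [[1, 2], [3, 4]]])

for name, t in [("vector", vector), ("matrix", matrix), ("tensor3d", tensor3d)]:
    # ndim is the number of axes; numel() is the total number of elements.
    print(name, t.ndim, tuple(t.shape), t.numel())
```

Each extra level of nesting adds one axis, so the number of dimensions equals the bracket depth of the literal.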

# torch.tensor() is a function; PyCharm marks it as such in the editor

# torch.Tensor is a class; with the default dtype it behaves like torch.FloatTensor

a = torch.Tensor([[1, 2],[3, 4]])

print(a)

print(a.type())  # built this way, the tensor is float

tensor([[1., 2.],

[3., 4.]])

torch.FloatTensor
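To make the difference between the function and the class concrete, here is a minimal sketch. It assumes the global default dtype has not been changed from float32; the variable names are only illustrative.

```python
import torch

ints = torch.tensor([[1, 2], [3, 4]])            # dtype inferred from Python ints
floats = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # dtype inferred from Python floats
from_class = torch.Tensor([[1, 2], [3, 4]])      # class constructor uses the default dtype
explicit = torch.tensor([[1, 2], [3, 4]], dtype=torch.float32)  # dtype stated explicitly

print(ints.dtype, floats.dtype, from_class.dtype, explicit.dtype)
```

Passing `dtype=` to `torch.tensor` is probably the clearest option when the element type matters, because nothing is left to inference.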

b = torch.Tensor(2, 2)  # build a tensor from a shape; the values are uninitialized memory

print(b)

tensor([[1.0000e+00, 0.0000e+00],

[2.9848e-43, 0.0000e+00]])
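The odd values printed above are whatever happened to be in memory. A hedged sketch of the explicit alternatives, using only factory functions that exist in `torch` (the fill value 7.0 is an arbitrary choice for illustration):

```python
import torch

shape = (2, 2)

uninit = torch.empty(shape)      # allocated but not initialized: contents are arbitrary
zeros = torch.zeros(shape)       # every element is 0.0
filled = torch.full(shape, 7.0)  # every element is the given fill value

print(uninit.shape, zeros, filled)
```

Only the shape and dtype of `uninit` can be relied on; its values should be overwritten before use.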

d = torch.tensor(((1, 2), (3, 4)))

print(d.type())

print(d.type_as(a))

torch.LongTensor

tensor([[1., 2.],

[3., 4.]])

d = torch.empty(2,3)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[0., 0., 0.],

[0., 0., 0.]])

d = torch.zeros(2,3)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[0., 0., 0.],

[0., 0., 0.]])

d = torch.zeros_like(d)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[0., 0., 0.],

[0., 0., 0.]])

d = torch.eye(2, 2)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[1., 0.],

[0., 1.]])

d = torch.ones(2, 2)

print(d.type())

print(d.type_as(a))

d = torch.ones_like(d)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[1., 1.],

[1., 1.]])

torch.FloatTensor

tensor([[1., 1.],

[1., 1.]])

d = torch.rand(2, 3) # random floats in [0, 1)

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[0.9853, 0.5354, 0.3119],

[0.5001, 0.2459, 0.6053]])

d = torch.arange(2, 10, 2)

print(d.type())

print(d.type_as(a))

torch.LongTensor

tensor([2., 4., 6., 8.])

d = torch.linspace(10, 2, 3)

print(d.type())

print(d.type_as(a))

d

torch.FloatTensor

tensor([10., 6., 2.])

tensor([10., 6., 2.])
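The two range constructors above take different third arguments, which is easy to mix up. A short sketch of the distinction, reusing the same arguments as above (the names `stepped` and `spaced` are mine):

```python
import torch

# arange(start, end, step): end is excluded and the third argument is the step size.
stepped = torch.arange(2, 10, 2)

# linspace(start, end, steps): both endpoints are included and the third
# argument is the number of points, so the spacing is (end - start) / (steps - 1).
spaced = torch.linspace(10, 2, 3)
spacing = (2 - 10) / (3 - 1)

print(stepped, spaced, spacing)
```

Integer arguments to `arange` give an integer tensor, while `linspace` returns floats, which matches the `LongTensor` and `FloatTensor` types printed above.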

dd = torch.normal(mean=0, std=1, size=(2, 3))

dd

tensor([[ 1.9897, -1.0289, -0.4013],

[ 0.7787, -1.2150, -2.2234]])

d = torch.normal(mean=torch.rand(5), std=torch.rand(5))

# Five samples, each drawn with its own mean and standard deviation; often used to initialize data

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([0.8920, 0.2696, 1.0647, 1.3374, 0.8834])
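Because these calls are random, the numbers shown above will differ on every run. If reproducible output is wanted, one common approach is to seed the generator first. A minimal sketch (the seed value 0 is arbitrary):

```python
import torch

torch.manual_seed(0)  # fix the global random generator state
first = torch.normal(mean=0.0, std=1.0, size=(2, 3))

torch.manual_seed(0)  # reset to the same state
second = torch.normal(mean=0.0, std=1.0, size=(2, 3))

# Both draws start from the same generator state, so they are identical.
print(torch.equal(first, second))
```

The same seeding applies to `torch.rand` and `torch.randperm`, since they draw from the same default generator.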

d = torch.Tensor(2, 2).uniform_(-1, 1) # uniform distribution

print(d.type())

print(d.type_as(a))

torch.FloatTensor

tensor([[-0.4591, -0.0127],

[-0.2035, -0.2403]])

d = torch.randperm(10) # random permutation of 0..9

print(d.type())

print(d.type_as(a))

torch.LongTensor

tensor([9., 6., 3., 5., 0., 8., 4., 2., 1., 7.])

# Convert to NumPy

d.numpy()

array([9, 6, 3, 5, 0, 8, 4, 2, 1, 7], dtype=int64)
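One detail worth knowing about the conversion: for a CPU tensor, `numpy()` returns an array that shares memory with the tensor rather than a copy. The following sketch illustrates this with made-up values:

```python
import torch

t = torch.arange(5)
view = t.numpy()          # shares memory with t
view[0] = 100             # writing through the array also changes the tensor

copy = t.clone().numpy()  # clone first to get an independent array
copy[1] = -1              # does not affect t

print(t, view, copy)
```

Cloning before converting is a simple way to avoid accidental aliasing when the array will be modified.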

Tensor attributes

d.dtype

torch.int64

d.device

device(type='cpu')

d.layout

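# torch.layout describes the memory layout, i.e. how the tensor is stored on the physical device.

# In data-structure terms, storage (physical) structures fall roughly into sequential storage and linked storage.

# torch.layout can be torch.strided or torch.sparse_coo, which correspond to sequential and scattered storage respectively.

# In general, dense tensors use torch.strided, and sparse tensors (mostly zeros) use torch.sparse_coo.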

torch.strided

dev = torch.device("cpu")

# dev = torch.device("cuda")

a = torch.tensor([2, 2],

dtype=torch.float32,

device=dev)

print(a)

i = torch.tensor([[0, 1, 2], [0, 1, 2]]) # coordinates (0, 0), (1, 1), (2, 2): the diagonal

v = torch.tensor([1, 2, 3])

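# Define a sparse COO tensor; nnz is the number of non-zero elements, and the more zeros there are, the sparser the tensor

# (4, 4) is the shape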

a = torch.sparse_coo_tensor(i, v, (4, 4),

dtype=torch.float32,

device=dev)

print(a)

a.layout

tensor([2., 2.])

tensor(indices=tensor([[0, 1, 2],

[0, 1, 2]]),

values=tensor([1., 2., 3.]),

size=(4, 4), nnz=3, layout=torch.sparse_coo)

torch.sparse_coo

a = torch.sparse_coo_tensor(i, v, (4, 4),

dtype=torch.float32,

device=dev).to_dense() # convert to a dense tensor

print(a)

a.layout

tensor([[1., 0., 0., 0.],

[0., 2., 0., 0.],

[0., 0., 3., 0.],

[0., 0., 0., 0.]])

torch.strided
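To show what the COO format encodes, here is a pure-Python sketch of the sparse-to-dense conversion for the 2-D case. The helper `coo_to_dense` is hypothetical and written only for illustration; it is not how PyTorch implements `to_dense()`.

```python
def coo_to_dense(indices, values, shape):
    """Expand 2-D COO data into a dense list of lists.

    indices[0] holds row coordinates, indices[1] holds column coordinates,
    and values[k] is the entry stored at (indices[0][k], indices[1][k]).
    """
    rows, cols = shape
    dense = [[0] * cols for _ in range(rows)]
    for r, c, v in zip(indices[0], indices[1], values):
        dense[r][c] += v  # repeated coordinates are summed
    return dense


# Same coordinates, values and shape as the sparse tensor above.
dense = coo_to_dense([[0, 1, 2], [0, 1, 2]], [1, 2, 3], (4, 4))
for row in dense:
    print(row)
```

Only three coordinate pairs and three values are stored for a 16-element matrix, which is where the memory saving comes from when most entries are zero.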

Basic operations

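## add

# Note: the results printed for the add calls further down were captured after a had already been updated once by add_, so a starts from [[2, 4, 6], [8, 10, 12]] there.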

a = torch.arange(1, 7).reshape((2, 3))

b = torch.arange(1, 7).reshape((2, 3))

print(a)

print(b)

tensor([[1, 2, 3],

[4, 5, 6]])

tensor([[1, 2, 3],

[4, 5, 6]])

print(a + b)

print(a.add(b))

print(torch.add(a, b))

print(a)

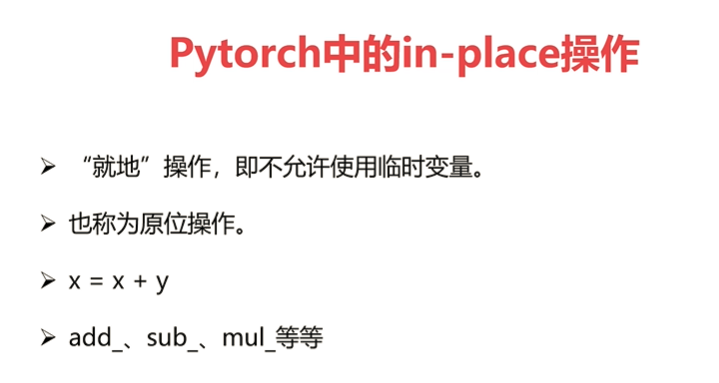

print(a.add_(b))

print(a) # a itself is modified in place

print(b)

tensor([[ 3, 6, 9],

[12, 15, 18]])

tensor([[ 3, 6, 9],

[12, 15, 18]])

tensor([[ 3, 6, 9],

[12, 15, 18]])

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

tensor([[ 3, 6, 9],

[12, 15, 18]])

tensor([[ 3, 6, 9],

[12, 15, 18]])

tensor([[1, 2, 3],

[4, 5, 6]])
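The trailing underscore is the convention for in-place methods. The sketch below, starting from fresh tensors, shows that `add` leaves its operand alone while `add_` overwrites it and returns the same object (`a_before`, `out` and `same_object` are illustrative names):

```python
import torch

a = torch.arange(1, 7).reshape(2, 3)
b = torch.arange(1, 7).reshape(2, 3)
a_before = a.clone()

out = a.add(b)                    # new tensor; a is unchanged
unchanged = torch.equal(a, a_before)

same_object = a.add_(b) is a      # in-place: the method returns a itself
now_equal = torch.equal(a, out)   # a now holds the sum

print(unchanged, same_object, now_equal)
```

The same pattern holds for `sub_`, `mul_`, `div_` and the other underscore variants used in this post.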

#sub

print("==== sub res ====")

print(a - b)

print(torch.sub(a, b))

print(a.sub(b))

print(a.sub_(b))

print(a)

==== sub res ====

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

tensor([[ 2, 4, 6],

[ 8, 10, 12]])

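## mul

# Note: as with add, the printed results were captured after a had already been updated once by mul_, so a starts from [[2, 8, 18], [32, 50, 72]] in the output.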

print("===== mul ====")

print(a * b) # Hadamard (element-wise) product

print(torch.mul(a, b))

print(a.mul(b))

print(a)

print(a.mul_(b))

print(a)

===== mul ====

tensor([[ 2, 16, 54],

[128, 250, 432]])

tensor([[ 2, 16, 54],

[128, 250, 432]])

tensor([[ 2, 16, 54],

[128, 250, 432]])

tensor([[ 2, 8, 18],

[32, 50, 72]])

tensor([[ 2, 16, 54],

[128, 250, 432]])

tensor([[ 2, 16, 54],

[128, 250, 432]])

# div

a = torch.rand(2, 3)

b = torch.rand(2, 3)

print("=== div ===")

print(a/b)

print(torch.div(a, b))

print(a.div(b))

print(a.div_(b))

print(a)

=== div ===

tensor([[0.4915, 0.5121, 2.2019],

[1.7804, 0.4173, 1.5148]])

tensor([[0.4915, 0.5121, 2.2019],

[1.7804, 0.4173, 1.5148]])

tensor([[0.4915, 0.5121, 2.2019],

[1.7804, 0.4173, 1.5148]])

tensor([[0.4915, 0.5121, 2.2019],

[1.7804, 0.4173, 1.5148]])

tensor([[0.4915, 0.5121, 2.2019],

[1.7804, 0.4173, 1.5148]])

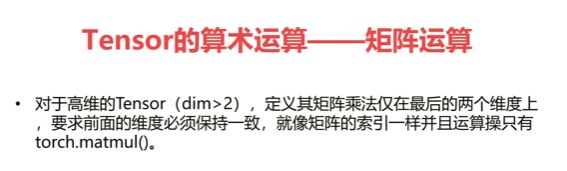

### matmul: matrix multiplication

a = torch.ones(2, 1)

b = torch.ones(1, 2)

print(a)

print(b)

## The following are all equivalent

print(a @ b)

print(a.matmul(b))

print(torch.matmul(a, b))

print(torch.mm(a, b))

print(a.mm(b))

tensor([[1.],

[1.]])

tensor([[1., 1.]])

tensor([[1., 1.],

[1., 1.]])

tensor([[1., 1.],

[1., 1.]])

tensor([[1., 1.],

[1., 1.]])

tensor([[1., 1.],

[1., 1.]])

tensor([[1., 1.],

[1., 1.]])

## Higher-dimensional tensors

a = torch.ones(1, 2, 3, 4)

b = torch.ones(1, 2, 4, 3)

print(a.matmul(b))

print(a.matmul(b).shape)

tensor([[[[4., 4., 4.],

[4., 4., 4.],

[4., 4., 4.]],

[[4., 4., 4.],

[4., 4., 4.],

[4., 4., 4.]]]])

torch.Size([1, 2, 3, 3])
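For tensors with more than two dimensions, `matmul` multiplies the last two axes and treats the leading axes as batch dimensions, which are broadcast against each other. A small sketch with shapes chosen by me to make the batch broadcasting visible:

```python
import torch

a = torch.ones(2, 1, 3, 4)  # batch dims (2, 1), matrices of shape 3x4
b = torch.ones(5, 4, 2)     # batch dims (5,),   matrices of shape 4x2

c = torch.matmul(a, b)

# Batch dims (2, 1) and (5,) broadcast to (2, 5); each matrix product is 3x2.
print(c.shape)

# Every entry is a dot product of four ones.
print(c[0, 0])
```

The inner dimensions (4 and 4 here) still have to match exactly; only the batch dimensions are broadcast.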

##pow

a = torch.tensor([1, 2])

print(torch.pow(a, 3))

print(a.pow(3))

print(a**3)

print(a.pow_(3))

print(a)

tensor([1, 8])

tensor([1, 8])

tensor([1, 8])

tensor([1, 8])

tensor([1, 8])

# exp: e raised to the given power

a = torch.tensor([1, 2],

dtype=torch.float32)

print(a.type())

print(torch.exp(a))

print(torch.exp_(a))

print(a.exp())

print(a.exp_())

torch.FloatTensor

tensor([2.7183, 7.3891])

tensor([2.7183, 7.3891])

tensor([ 15.1543, 1618.1781])

tensor([ 15.1543, 1618.1781])

##log

a = torch.tensor([10, 2],

dtype=torch.float32)

print(torch.log(a)) # natural log (base e)

print(torch.log2(a))

print(torch.log10(a))

print(torch.log_(a))

print(a.log())

print(a.log_())

tensor([2.3026, 0.6931])

tensor([3.3219, 1.0000])

tensor([1.0000, 0.3010])

tensor([2.3026, 0.6931])

tensor([ 0.8340, -0.3665])

tensor([ 0.8340, -0.3665])

##sqrt

a = torch.tensor([10, 2],

dtype=torch.float32)

print(torch.sqrt(a))

print(torch.sqrt_(a))

print(a.sqrt())

print(a.sqrt_())

tensor([3.1623, 1.4142])

tensor([3.1623, 1.4142])

tensor([1.7783, 1.1892])

tensor([1.7783, 1.1892])

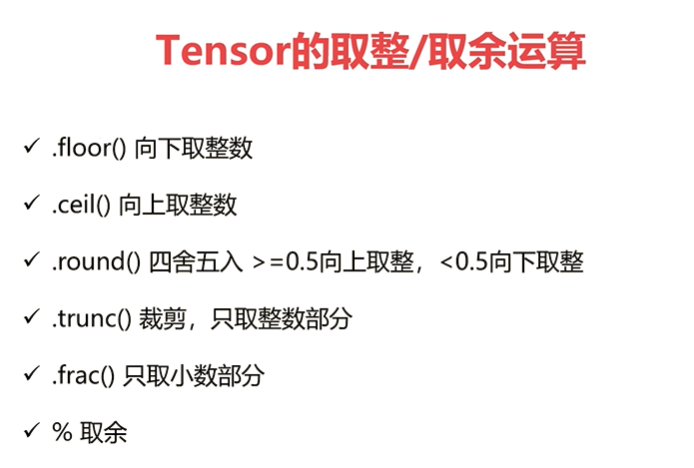

a = torch.rand(2, 2)

a = a * 10

print(a)

print(torch.floor(a))

print(torch.ceil(a))

print(torch.round(a))

print(torch.trunc(a))

print(torch.frac(a))

print(a % 2)

b = torch.tensor([[2, 3], [4, 5]],

dtype=torch.float)

tensor([[6.3697, 7.0186],

[2.7078, 9.1732]])

tensor([[6., 7.],

[2., 9.]])

tensor([[ 7., 8.],

[ 3., 10.]])

tensor([[6., 7.],

[3., 9.]])

tensor([[6., 7.],

[2., 9.]])

tensor([[0.3697, 0.0186],

[0.7078, 0.1732]])

tensor([[0.3697, 1.0186],

[0.7078, 1.1732]])
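With positive inputs, as above, `floor` and `trunc` give the same result, so the differences between these functions only show up on negative values and on exact halves. A sketch with hand-picked inputs (not from the original run):

```python
import torch

x = torch.tensor([-2.5, -1.5, -0.5, 0.5, 1.5, 2.5])

floored = torch.floor(x)     # rounds toward negative infinity
truncated = torch.trunc(x)   # rounds toward zero
rounded = torch.round(x)     # halves go to the nearest even integer
remainder = x % 2            # result takes the sign of the divisor
fmod = torch.fmod(x, 2)      # result takes the sign of the dividend

for name, t in [("floor", floored), ("trunc", truncated), ("round", rounded),
                ("x % 2", remainder), ("fmod", fmod)]:
    print(name, t.tolist())
```

The `%` operator on tensors follows Python's convention for negative numbers, while `torch.fmod` follows the C convention.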

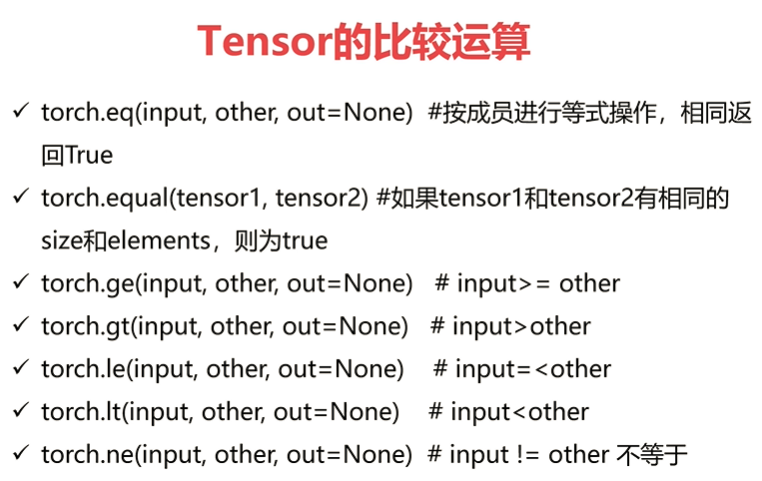

a = torch.rand(2, 3)

b = torch.rand(2, 3)

print(a)

print(b)

print(torch.eq(a, b))

print(torch.equal(a, b))

print(torch.ge(a, b))

print(torch.gt(a, b))

print(torch.le(a, b))

print(torch.lt(a, b))

print(torch.ne(a, b))

tensor([[0.9355, 0.8971, 0.8710],

[0.9562, 0.6887, 0.5133]])

tensor([[0.5730, 0.4295, 0.9110],

[0.6943, 0.3907, 0.2165]])

tensor([[False, False, False],

[False, False, False]])

False

tensor([[ True, True, False],

[ True, True, True]])

tensor([[ True, True, False],

[ True, True, True]])

tensor([[False, False, True],

[False, False, False]])

tensor([[False, False, True],

[False, False, False]])

tensor([[True, True, True],

[True, True, True]])
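Note that `torch.eq` compares element by element and returns a tensor, whereas `torch.equal` returns a single Python bool for the whole tensor. For floating-point data an approximate comparison is often more useful. A sketch with values I picked for illustration (the tolerance 1e-3 is arbitrary):

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([1.0, 2.0, 3.0001])

elementwise = torch.eq(a, b)                 # one bool per element
identical = torch.equal(a, b)                # one bool for the whole tensor
close_default = torch.allclose(a, b)         # default tolerances are tight
close_loose = torch.allclose(a, b, atol=1e-3)

# torch.equal also returns False when the shapes differ.
shape_mismatch = torch.equal(torch.ones(2), torch.ones(3))

print(elementwise, identical, close_default, close_loose, shape_mismatch)
```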

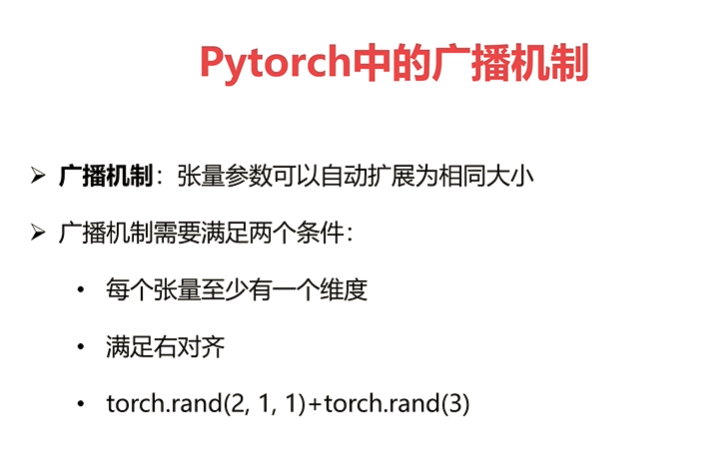

The rand(3) above has to be padded to (1, 1, 3). Broadcasting works when, with the shapes aligned from the right, each pair of dimensions is either equal or one of them is 1.
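The right-alignment rule can be written down in a few lines of plain Python. This is only a sketch of the rule as stated; `broadcast_shape` is a hypothetical helper, not a PyTorch function.

```python
from itertools import zip_longest


def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    result = []
    # Walk both shapes from the right; the shorter one is padded with 1s.
    for x, y in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if x == y or y == 1:
            result.append(x)
        elif x == 1:
            result.append(y)
        else:
            raise ValueError(f"dimensions {x} and {y} cannot be broadcast")
    return tuple(reversed(result))


print(broadcast_shape((2, 2), (1, 2)))
print(broadcast_shape((2, 4, 1, 3), (4, 2, 3)))

try:
    broadcast_shape((2, 2), (1, 3))
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
print(mismatch_detected)
```

A shape such as (3,) is treated as (1, 1, 3) when combined with a three-dimensional shape, which is the padding described above.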

a = torch.tensor([[1, 4, 4, 3, 5],

[2, 3, 1, 3, 5]])

print(a.shape)

print(torch.sort(a, dim=1,

descending=False))

##topk

a = torch.tensor([[2, 4, 3, 1, 5],

[2, 3, 5, 1, 4]])

print(a.shape)

print(torch.topk(a, k=2, dim=1, largest=False))

print(torch.kthvalue(a, k=2, dim=0))

print(torch.kthvalue(a, k=2, dim=1))
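Since the calls above are shown without their output, the following sketch relates the three functions to each other on the same input. Each returns a pair of values and indices; the unpacked names are mine.

```python
import torch

a = torch.tensor([[2, 4, 3, 1, 5],
                  [2, 3, 5, 1, 4]])

sorted_vals, sorted_idx = torch.sort(a, dim=1)                      # ascending per row
small_vals, small_idx = torch.topk(a, k=2, dim=1, largest=False)   # two smallest per row
kth_vals, kth_idx = torch.kthvalue(a, k=2, dim=1)                  # 2nd smallest per row

# The indices refer to positions in the original tensor along dim=1.
regathered = torch.gather(a, 1, sorted_idx)

print(sorted_vals)
print(small_vals, small_idx)
print(kth_vals, kth_idx)
```

With `largest=False`, `topk` returns the first k entries of the ascending sort, and `kthvalue` with `k=2` returns the second of them.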

a = torch.rand(2, 3)

print(a)

print(a/0)

print(torch.isfinite(a))

print(torch.isfinite(a/0))

print(torch.isinf(a/0))

print(torch.isnan(a))

import numpy as np

a = torch.tensor([1, 2, np.nan])

print(torch.isnan(a))
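The checks above are also shown without output, so here is a deterministic sketch of how infinities and NaN arise from division by zero and how the three predicates classify them (input values chosen by me):

```python
import torch

x = torch.tensor([1.0, 0.0, -1.0])
q = x / 0  # positive / 0 is inf, 0 / 0 is nan, negative / 0 is -inf

is_inf = torch.isinf(q)
is_nan = torch.isnan(q)
is_finite = torch.isfinite(q)

# NaN never compares equal to anything, including itself,
# which is why a dedicated isnan check is needed.
nan = torch.tensor(float("nan"))
nan_equals_itself = (nan == nan).item()

print(q, is_inf, is_nan, is_finite, nan_equals_itself)
```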

a = torch.rand(2, 3)

print(a)

print(torch.topk(a, k=2, dim=1, largest=False))

print(torch.topk(a, k=2, dim=1, largest=True))

a = torch.rand(2, 2)

b = torch.rand(1, 2)

d = torch.rand(1, 3)

# a: 2*2

# b: 1*2

# c: 2*2 (b is broadcast along dim 0)

# 2*4*2*3

c = a + b

print(a)

print(b)

print(c)

print(c.shape)

tensor([[0.9504, 0.3773],

[0.7862, 0.6375]])

tensor([[0.7592, 0.0868]])

tensor([[1.7096, 0.4641],

[1.5454, 0.7243]])

torch.Size([2, 2])

a + d

RuntimeError Traceback (most recent call last)

in ()

----> 1 a + d

RuntimeError: The size of tensor a (2) must match the size of tensor b (3) at non-singleton dimension 1

a = torch.rand(2, 2)

a = a * 10

print(a)

print(torch.floor(a))

print(torch.ceil(a))

print(torch.round(a))

print(torch.trunc(a))

print(torch.frac(a))

print(a % 2)

b = torch.tensor([[2, 3], [4, 5]],

dtype=torch.float)

tensor([[0.1020, 3.1624],

[5.8866, 3.4707]])

tensor([[0., 3.],

[5., 3.]])

tensor([[1., 4.],

[6., 4.]])

tensor([[0., 3.],

[6., 3.]])

tensor([[0., 3.],

[5., 3.]])

tensor([[0.1020, 0.1624],

[0.8866, 0.4707]])

tensor([[0.1020, 1.1624],

[1.8866, 1.4707]])
