Processing Data

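Suppose we have 10,000 images to process. We cannot feed all 10,000 to the network at once, because GPU memory would run out, and we cannot feed them one at a time either, because that is far too slow. Instead, we split the images into batches, for example 100 batches of 100 images each, which means batch_size=100. Training also does not stop after a single pass over the data: the full dataset is fed through the network several times, and each full pass is called an epoch. In computer vision the data are usually images, so data processing yields each image together with its label, and the image + label pairs are then passed to the network for training.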
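
As a minimal sketch of the arithmetic above (plain Python, no framework), the snippet below splits 10,000 stand-in samples into batches of 100 and counts the training steps over several epochs. The helper name `iter_batches` and the choice of 3 epochs are illustrative assumptions, not part of the original example.

```python
import math


def iter_batches(samples, batch_size):
    """Yield consecutive slices of `samples`, each at most `batch_size` long."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


num_samples = 10000
batch_size = 100
samples = list(range(num_samples))  # stand-in for 10,000 image paths

batches_per_epoch = math.ceil(num_samples / batch_size)
num_epochs = 3  # assumed value, only for illustration

steps = 0
for epoch in range(num_epochs):
    for batch in iter_batches(samples, batch_size):
        steps += 1  # one forward/backward pass would happen here

print(batches_per_epoch, steps)
```

If the dataset size is not a multiple of batch_size, the last batch is simply shorter, which is why `math.ceil` is used for the batch count.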

Below we sketch, in pseudocode and without any framework, how data loading could be written by hand. The point is to understand the idea.

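    dir = "./data/"  # folder holding 100 images

    # 挤牙膏操作 = "squeeze the toothpaste": hand out one batch per call
    def 挤牙膏操作(dir, batch_size):
        读取文件夹(dir)  # read the folder
        image, label = 按照batch_size进行数据的拆分(batch_size)  # split off one batch
        image = 数据预处理(image)  # preprocess the images
        return image, label

    for i in range(5):  # 5 epochs
        for j in range(10):  # 10 batches per epoch (100 images / batch_size 10)
            image, label = 挤牙膏操作(dir, batch_size=10)
            pre_label = 神经网络(image)  # forward pass through the neural network
            损失函数(pre_label, label)  # loss function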

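Python really does allow function names written in Chinese, although it is not recommended. The code above summarizes the basic idea of data processing.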

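The figure below shows some of the buttons that are used most often when debugging in PyCharm.

Next, let's look at how the PyTorch framework implements the data-reading pipeline.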

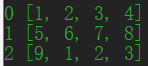

    list_train = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 1, 2, 3]]

    for i, data in enumerate(list_train):
        print(i, data)

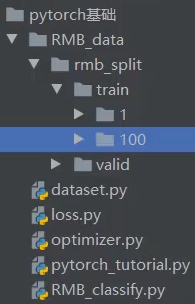

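If the data can be stored in the form of the list above, a for loop can take out one batch at a time. Below, a two-class RMB (renminbi banknote) classification project shows how PyTorch reads data. The data consist of 100 images of 1-yuan notes and 100 images of 100-yuan notes, with 90 images used as the training set and 10 as the validation set; the banknote images are stored in folders named 1 and 100.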

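We define our own class that inherits from PyTorch's Dataset class to handle our data. It contains three methods: __init__ runs when the class is instantiated, __getitem__ returns one sample, and __len__ returns the number of samples. Each image first goes through a transforms pipeline that resizes it to a uniform 32*32 and then converts it to a tensor that the network can process, so each sample taken from train_data has shape (3,32,32), where 3 is the number of channels of a color image. PyTorch's DataLoader class then groups the samples into batches of 16 images, so each batch taken from train_loader has shape (16,3,32,32).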

    class MYDataset(Dataset):
        def __init__(self, data_dir, transform=None):
            ..

        def __getitem__(self, index):
            ..
            return .., ..

        def __len__(self):
            return len(..)

    train_dir = './'
    train_data = MYDataset(data_dir=train_dir)

    # Build the DataLoader
    train_loader = DataLoader(dataset=train_data, batch_size=16)

    for epoch in range(10):
        for i, data in enumerate(train_loader):
            pass

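Suppose we train for 10 epochs, with the for loop taking 16 images at a time. The data variable contains two elements: the first is a tensor holding the 16 images, and the second is a tensor of their integer class labels. Here the labels are the integers 0 and 1. For datasets whose labels come in XML or txt format, we can change the label-loading part of our own dataset class to extract whatever information we need.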
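
To see why each iteration of the loop yields a pair, here is a framework-free sketch of the same protocol. `ToyDataset` and `toy_loader` are hypothetical stand-ins written for this illustration; they are not PyTorch APIs, and the real DataLoader additionally stacks samples into tensors, shuffles, and can load in parallel.

```python
class ToyDataset:
    """Hypothetical stand-in for a Dataset subclass: index -> (sample, label)."""

    def __init__(self, num_samples):
        # Each "image" is just a name; labels alternate between 0 and 1.
        self.items = [(f"img_{i}", i % 2) for i in range(num_samples)]

    def __getitem__(self, index):
        return self.items[index]

    def __len__(self):
        return len(self.items)


def toy_loader(dataset, batch_size):
    """Group consecutive samples into (samples, labels) batches."""
    for start in range(0, len(dataset), batch_size):
        stop = min(start + batch_size, len(dataset))
        pairs = [dataset[i] for i in range(start, stop)]
        samples = [sample for sample, _ in pairs]
        labels = [label for _, label in pairs]
        yield samples, labels


dataset = ToyDataset(40)
batches = list(toy_loader(dataset, batch_size=16))
print([len(samples) for samples, _ in batches])
```

The loader only relies on `__len__` and `__getitem__`, which is why a custom dataset class needs to implement exactly those two methods.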

    import os
    import random

    from PIL import Image
    from torch.utils.data import Dataset
    from torch.utils.data import DataLoader
    import torchvision.transforms as transforms

    random.seed(1)
    rmb_label = {"1": 0, "100": 1}  # folder name -> class index

    train_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        # transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        # transforms.Normalize(norm_mean, norm_std),
    ])

    class RMBDataset(Dataset):
        def __init__(self, data_dir, transform=None):
            self.label_name = {"1": 0, "100": 1}
            # data_info stores every image path and label; the DataLoader reads samples from it by index
            self.data_info = self.get_img_info(data_dir)
            self.transform = transform

        def __getitem__(self, index):
            path_img, label = self.data_info[index]
            img = Image.open(path_img).convert('RGB')  # pixel values 0~255
            if self.transform is not None:
                img = self.transform(img)  # apply the transforms here (resize, convert to tensor, ...)
            return img, label

        def __len__(self):
            return len(self.data_info)

        @staticmethod
        def get_img_info(data_dir):
            data_info = list()
            rmb_label = {"1": 0, "100": 1}
            for root, dirs, _ in os.walk(data_dir):
                # iterate over the class folders
                for sub_dir in dirs:
                    img_names = os.listdir(os.path.join(root, sub_dir))
                    img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))
                    # iterate over the images
                    for i in range(len(img_names)):
                        img_name = img_names[i]
                        path_img = os.path.join(root, sub_dir, img_name)
                        label = rmb_label[sub_dir]
                        data_info.append((path_img, int(label)))
            return data_info

    train_dir = './RMB_data/rmb_split/train/'
    train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

    # Build the DataLoader
    train_loader = DataLoader(dataset=train_data, batch_size=16)

    for epoch in range(10):
        for i, data in enumerate(train_loader):
            result = model(data[0])  # model: placeholder for the network built in the next section
            loss(result, data[1])  # loss: placeholder for the loss function introduced later

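Building the Network

We implement a LeNet network in PyTorch by inheriting from nn.Module: first define the convolutional and fully connected layers in __init__, then connect them in forward. Any network we need can be built this way.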

    import os
    import random

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from collections import OrderedDict

    class LeNet(nn.Module):
        def __init__(self, classes):
            super(LeNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 6, 5)  # output size: (32 + 2*0 - 5)/1 + 1 = 28
            self.conv2 = nn.Conv2d(6, 16, 5)
            self.fc1 = nn.Linear(16*5*5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, classes)

        def forward(self, x):  # input: (4, 3, 32, 32)
            out = F.relu(self.conv1(x))  # 32 -> 28: (4, 6, 28, 28)
            out = F.max_pool2d(out, 2)  # (4, 6, 14, 14)
            out = F.relu(self.conv2(out))  # (4, 16, 10, 10)
            out = F.max_pool2d(out, 2)  # (4, 16, 5, 5)
            out = out.view(out.size(0), -1)  # flatten to (4, 400)
            out = F.relu(self.fc1(out))
            out = F.relu(self.fc2(out))
            out = self.fc3(out)
            return out

        def initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.xavier_normal_(m.weight.data)
                    if m.bias is not None:
                        m.bias.data.zero_()
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight.data, 0, 0.1)
                    m.bias.data.zero_()

    net = LeNet(classes=3)

    fake_img = torch.randn((4, 3, 32, 32), dtype=torch.float32)
    output = net(fake_img)
    print('over!')
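
The shape comments in the LeNet code follow the usual output-size formula for convolution and pooling windows, out = (in + 2*padding - kernel) / stride + 1, rounded down. The sketch below traces the spatial size of a 32*32 input through the network to show where the 16*5*5 input size of fc1 comes from. `conv_out` is a hypothetical helper written for this illustration, not a PyTorch function.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling window (rounded down)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32
size = conv_out(size, kernel=5)            # conv1: 32 -> 28
size = conv_out(size, kernel=2, stride=2)  # max pool: 28 -> 14
size = conv_out(size, kernel=5)            # conv2: 14 -> 10
size = conv_out(size, kernel=2, stride=2)  # max pool: 10 -> 5

flat_features = 16 * size * size  # conv2 produces 16 channels
print(size, flat_features)
```

If the input resolution changes, the same trace gives the new input size for the first fully connected layer.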

More Advanced Ways to Build Networks

Sequential

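The advantage of nn.Sequential is that it is ordered: its layers run in the order in which they were added, so we do not need to write out the forward pass for the layers inside it.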

    class LeNetSequential(nn.Module):
        def __init__(self, classes):
            super(LeNetSequential, self).__init__()
            self.features = nn.Sequential(
                nn.Conv2d(3, 6, 5),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Conv2d(6, 16, 5),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2),)

            self.classifier = nn.Sequential(
                nn.Linear(16*5*5, 120),
                nn.ReLU(),
                nn.Linear(120, 84),
                nn.ReLU(),
                nn.Linear(84, classes),)

        def forward(self, x):
            x = self.features(x)
            x = x.view(x.size()[0], -1)
            x = self.classifier(x)
            return x

    class LeNetSequentialOrderDict(nn.Module):
        def __init__(self, classes):
            super(LeNetSequentialOrderDict, self).__init__()

            self.features = nn.Sequential(OrderedDict({
                'conv1': nn.Conv2d(3, 6, 5),
                'relu1': nn.ReLU(inplace=True),
                'pool1': nn.MaxPool2d(kernel_size=2, stride=2),
                'conv2': nn.Conv2d(6, 16, 5),
                'relu2': nn.ReLU(inplace=True),
                'pool2': nn.MaxPool2d(kernel_size=2, stride=2),
            }))

            self.classifier = nn.Sequential(OrderedDict({
                'fc1': nn.Linear(16*5*5, 120),
                'relu3': nn.ReLU(),
                'fc2': nn.Linear(120, 84),
                'relu4': nn.ReLU(inplace=True),
                'fc3': nn.Linear(84, classes),
            }))

        def forward(self, x):
            x = self.features(x)
            x = x.view(x.size()[0], -1)
            x = self.classifier(x)
            return x

    # net = LeNetSequential(classes=2)
    # net = LeNetSequentialOrderDict(classes=2)
    # fake_img = torch.randn((4, 3, 32, 32), dtype=torch.float32)
    # output = net(fake_img)

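In the first implementation the layers are named by index (0, 1, 2, 3, ...), and layers without meaningful names are inconvenient to modify later, so the second implementation gives each layer a key.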

ModuleList

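If we need to build a large number of repeated layers, nn.ModuleList is worth considering. Unlike nn.Sequential, however, ModuleList does not carry out the forward pass for us automatically, so we have to write the forward pass by hand.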

    class myModuleList(nn.Module):
        def __init__(self):
            super(myModuleList, self).__init__()
            modullist_temp = [nn.Linear(10, 10) for i in range(20)]
            self.linears = nn.ModuleList(modullist_temp)

        def forward(self, x):
            for i, linear in enumerate(self.linears):
                x = linear(x)
            return x

    net = myModuleList()
    # print(net)

    fake_data = torch.ones((10, 10))
    output = net(fake_data)
    # print(output)

ModuleDict

Using the key-value pairs of a dictionary, we can choose between different layers through arguments.

    class myModuleDict(nn.Module):
        def __init__(self):
            super(myModuleDict, self).__init__()
            self.choices = nn.ModuleDict({
                'conv': nn.Conv2d(10, 10, 3),
                'pool': nn.MaxPool2d(3)
            })

            self.activations = nn.ModuleDict({
                'relu': nn.ReLU(),
                'prelu': nn.PReLU()
            })

        def forward(self, x, choice, act):
            x = self.choices[choice](x)
            x = self.activations[act](x)
            return x

    net = myModuleDict()

    fake_img = torch.randn((4, 10, 32, 32))
    output = net(fake_img, 'conv', 'relu')
    # print(output)

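Loss Function and Optimizer

The closer the prediction is to the ground truth, the smaller the loss, so our goal is to lower the loss and thereby make the predictions more accurate.

nn.CrossEntropyLoss provides the cross-entropy loss directly, optim.SGD selects stochastic gradient descent (SGD) as the optimizer, and scheduler is the learning-rate decay policy. optimizer.step() is called after every batch to update the weights, while scheduler.step() is called once at the end of every epoch to update the learning rate.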

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # net, LR and train_loader are assumed to be defined in the earlier steps

    # ============================ step 3/5 loss function ============================
    criterion = nn.CrossEntropyLoss()  # choose the loss function

    # ============================ step 4/5 optimizer ============================
    # pronunciation: optimizer /'ɒptɪmaɪzə/, scheduler /ˈskedʒuːlər/
    optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
    scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning-rate decay policy

    for epoch in range(10):
        for i, data in enumerate(train_loader):
            # forward
            inputs, labels = data
            outputs = net(inputs)

            # backward
            optimizer.zero_grad()
            loss = criterion(outputs, labels)
            loss.backward()

            # update weights
            optimizer.step()

        scheduler.step()  # update the learning rate once per epoch
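
To make the decay policy concrete, the sketch below evaluates the closed form of a step decay, lr = base_lr * gamma ** (epoch // step_size), for the settings used above (step_size=10, gamma=0.1). It is plain Python for illustration only; `step_lr` is a hypothetical helper, and the base learning rate of 0.01 is an assumed value for LR.

```python
import math


def step_lr(base_lr, epoch, step_size=10, gamma=0.1):
    """Learning rate in effect during `epoch` (0-based) under step decay."""
    return base_lr * gamma ** (epoch // step_size)


base_lr = 0.01  # assumed value of LR
for epoch in (0, 9, 10, 19, 20):
    print(epoch, step_lr(base_lr, epoch))
```

One consequence worth noticing: with 10 training epochs and step_size=10, every epoch (0 through 9) still runs at the initial learning rate, so the decay only takes effect if training continues beyond 10 epochs or step_size is reduced.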

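We can also define our own loss function. It looks much like a network definition: it takes inputs and implements a forward pass, while the backward pass that computes the gradients is left to the framework. One example is Focal Loss, which mainly addresses the imbalance between positive and negative samples.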

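    class FocalLoss(nn.Module):
        # Wraps an existing loss. Because forward applies torch.sigmoid to pred,
        # loss_fcn is expected to work on raw logits, e.g. nn.BCEWithLogitsLoss().
        def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):
            super(FocalLoss, self).__init__()
            self.loss_fcn = loss_fcn
            self.gamma = gamma
            self.alpha = alpha
            self.reduction = loss_fcn.reduction
            self.loss_fcn.reduction = 'none'  # keep per-element losses so they can be re-weighted

        def forward(self, pred, true):
            loss = self.loss_fcn(pred, true)

            pred_prob = torch.sigmoid(pred)  # probability from logits
            p_t = true*pred_prob + (1-true)*(1-pred_prob)  # probability assigned to the true class
            alpha_factor = true*self.alpha + (1-true)*(1-self.alpha)
            modulating_factor = (1.0-p_t)**self.gamma  # small for easy samples
            loss *= alpha_factor*modulating_factor

            if self.reduction == 'mean':
                return loss.mean()
            elif self.reduction == 'sum':
                return loss.sum()
            else:
                return loss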
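
To see what the modulating factor does, the following framework-free sketch applies the same formulas to a single scalar prediction. It assumes the wrapped loss is binary cross-entropy on logits; the two example logits (4.0 and -1.0) are made up for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce_with_logits(logit, target):
    """Binary cross-entropy for one logit and a 0/1 target."""
    p = sigmoid(logit)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def focal_loss(logit, target, gamma=1.5, alpha=0.25):
    """Scalar version of the FocalLoss.forward computation above."""
    p = sigmoid(logit)
    p_t = target * p + (1 - target) * (1 - p)
    alpha_factor = target * alpha + (1 - target) * (1 - alpha)
    modulating_factor = (1.0 - p_t) ** gamma
    return bce_with_logits(logit, target) * alpha_factor * modulating_factor


# A confident, correct prediction ("easy") and a wrong one ("hard"), both positives.
for name, logit in (("easy", 4.0), ("hard", -1.0)):
    bce = bce_with_logits(logit, 1)
    focal = focal_loss(logit, 1)
    print(name, round(bce, 4), round(focal, 6), round(focal / bce, 6))
```

The last printed column is the weight applied to the original loss. It is far smaller for the easy sample than for the hard one, which is how Focal Loss keeps the many easy samples from dominating training.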

The complete code for the two-class RMB classifier is given below.

    # -*- coding: utf-8 -*-
    import os
    import random

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.utils.data import DataLoader
    from torch.utils.data import Dataset
    import torchvision.transforms as transforms
    import torch.optim as optim
    from matplotlib import pyplot as plt
    from PIL import Image

    os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

    def set_seed(seed=1):
        random.seed(seed)
        np.random.seed(seed)
        torch.manual_seed(seed)
        torch.cuda.manual_seed(seed)

    class RMBDataset(Dataset):
        def __init__(self, data_dir, transform=None):
            self.label_name = {"1": 0, "100": 1}
            # data_info stores every image path and label; the DataLoader reads samples from it by index
            self.data_info = self.get_img_info(data_dir)
            self.transform = transform

        def __getitem__(self, index):
            path_img, label = self.data_info[index]
            img = Image.open(path_img).convert('RGB')  # pixel values 0~255
            if self.transform is not None:
                img = self.transform(img)  # apply the transforms here (resize, convert to tensor, ...)
            return img, label

        def __len__(self):
            return len(self.data_info)

        @staticmethod
        def get_img_info(data_dir):
            data_info = list()
            for root, dirs, _ in os.walk(data_dir):
                # iterate over the class folders
                for sub_dir in dirs:
                    img_names = os.listdir(os.path.join(root, sub_dir))
                    img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))
                    # iterate over the images
                    for i in range(len(img_names)):
                        img_name = img_names[i]
                        path_img = os.path.join(root, sub_dir, img_name)
                        label = rmb_label[sub_dir]  # module-level dict defined below
                        data_info.append((path_img, int(label)))
            return data_info

    class LeNet(nn.Module):
        def __init__(self, classes):
            super(LeNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 6, 5)
            self.conv2 = nn.Conv2d(6, 16, 5)
            self.fc1 = nn.Linear(16*5*5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, classes)

        def forward(self, x):
            out = F.relu(self.conv1(x))
            out = F.max_pool2d(out, 2)
            out = F.relu(self.conv2(out))
            out = F.max_pool2d(out, 2)
            out = out.view(out.size(0), -1)
            out = F.relu(self.fc1(out))
            out = F.relu(self.fc2(out))
            out = self.fc3(out)
            return out

        def initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.xavier_normal_(m.weight.data)
                    if m.bias is not None:
                        m.bias.data.zero_()
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight.data, 0, 0.1)
                    m.bias.data.zero_()

    set_seed()  # set the random seed
    rmb_label = {"1": 0, "100": 1}

    # Hyperparameters
    MAX_EPOCH = 10
    BATCH_SIZE = 5
    LR = 0.01
    log_interval = 10
    val_interval = 1

    # ============================ step 1/5 data ============================
    split_dir = os.path.join(".", "RMB_data", "rmb_split")
    train_dir = os.path.join(split_dir, "train")
    valid_dir = os.path.join(split_dir, "valid")

    norm_mean = [0.485, 0.456, 0.406]
    norm_std = [0.229, 0.224, 0.225]

    train_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    valid_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    # Build the dataset instances
    train_data = RMBDataset(data_dir=train_dir, transform=train_transform)
    valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

    # Build the DataLoaders
    train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
    valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

    # ============================ step 2/5 model ============================
    net = LeNet(classes=2)
    net.initialize_weights()

    # ============================ step 3/5 loss function ============================
    criterion = nn.CrossEntropyLoss()  # choose the loss function

    # ============================ step 4/5 optimizer ============================
    optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
    scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning-rate decay policy

    # ============================ step 5/5 training ============================
    train_curve = list()
    valid_curve = list()

    for epoch in range(MAX_EPOCH):
        loss_mean = 0.
        correct = 0.
        total = 0.

        net.train()
        for i, data in enumerate(train_loader):
            # forward
            inputs, labels = data
            outputs = net(inputs)

            # backward
            optimizer.zero_grad()
            loss = criterion(outputs, labels)
            loss.backward()

            # update weights
            optimizer.step()

            # count correct predictions
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).squeeze().sum().numpy()

            # log training info
            loss_mean += loss.item()
            train_curve.append(loss.item())
            if (i+1) % log_interval == 0:
                loss_mean = loss_mean / log_interval
                print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                    epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))
                loss_mean = 0.

        scheduler.step()  # update the learning rate

        # validate the model
        if (epoch+1) % val_interval == 0:
            correct_val = 0.
            total_val = 0.
            loss_val = 0.
            net.eval()
            with torch.no_grad():
                for j, data in enumerate(valid_loader):
                    inputs, labels = data
                    outputs = net(inputs)
                    loss = criterion(outputs, labels)

                    _, predicted = torch.max(outputs.data, 1)
                    total_val += labels.size(0)
                    correct_val += (predicted == labels).squeeze().sum().numpy()
                    loss_val += loss.item()

            # average over batches so the value is comparable to the per-batch training loss
            loss_val_mean = loss_val / len(valid_loader)
            valid_curve.append(loss_val_mean)
            # report validation accuracy (correct_val / total_val), not the training counters
            print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))

    train_x = range(len(train_curve))
    train_y = train_curve

    train_iters = len(train_loader)
    # valid_curve holds one loss per validation run, so convert its x-coordinates to iterations
    valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval
    valid_y = valid_curve

    plt.plot(train_x, train_y, label='Train')
    plt.plot(valid_x, valid_y, label='Valid')

    plt.legend(loc='upper right')
    plt.ylabel('loss value')
    plt.xlabel('Iteration')
    plt.show()
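
In the script, torch.max(outputs.data, 1) returns the largest score in each row together with its index, and the index is taken as the predicted class. The accuracy bookkeeping can be sketched in plain Python as follows; the scores and labels are made-up values, and `argmax` and `accuracy` are hypothetical helpers for this illustration.

```python
def argmax(row):
    """Index of the largest value in a list of class scores."""
    return max(range(len(row)), key=lambda k: row[k])


def accuracy(outputs, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    predicted = [argmax(row) for row in outputs]
    correct = sum(int(p == t) for p, t in zip(predicted, labels))
    return correct / len(labels)


# Four samples, two classes: each row holds the scores for class 0 and class 1.
outputs = [[2.0, -1.0], [0.3, 0.9], [1.5, 1.2], [-0.5, 0.1]]
labels = [0, 1, 1, 1]
print(accuracy(outputs, labels))
```

Training and validation need separate counters, because each accuracy must be divided by the number of samples seen in its own loop.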

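Writing the source code above with a deep learning framework is the step from 0 to 1. However, putting all the code in one file hurts readability. Large open-source projects split their code across many files, placing independent or related functions in a file of their own; this clean-up is the step from 1 to 2.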

train.py

    import argparse

    import torch
    import torch.nn as nn
    from torch.utils.data import DataLoader
    import torchvision.transforms as transforms
    from dataset import RMBDataset
    from model import LeNet
    import torch.optim as optim
    import utils

    def train():
        utils.set_seed()

        # ============================ step 1/5 data ============================
        norm_mean = [0.485, 0.456, 0.406]
        norm_std = [0.229, 0.224, 0.225]

        train_transform = transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.RandomCrop(32, padding=4),
            transforms.ToTensor(),
            transforms.Normalize(norm_mean, norm_std),
        ])

        valid_transform = transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor(),
            transforms.Normalize(norm_mean, norm_std),
        ])

        # Build the dataset instances
        train_data = RMBDataset(data_dir=opt.train_dir, transform=train_transform)
        valid_data = RMBDataset(data_dir=opt.valid_dir, transform=valid_transform)

        # Build the DataLoaders
        train_loader = DataLoader(dataset=train_data, batch_size=opt.batch_size, shuffle=True)
        valid_loader = DataLoader(dataset=valid_data, batch_size=opt.batch_size)

        # ============================ step 2/5 model ============================
        net = LeNet(classes=2)
        net.initialize_weights()

        # ============================ step 3/5 loss function ============================
        criterion = nn.CrossEntropyLoss()  # choose the loss function

        # ============================ step 4/5 optimizer ============================
        optimizer = optim.SGD(net.parameters(), lr=opt.lr, momentum=0.9)  # choose the optimizer
        scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning-rate decay policy

        # ============================ step 5/5 training ============================
        train_curve = list()
        valid_curve = list()

        for epoch in range(opt.epochs):
            loss_mean = 0.
            correct = 0.
            total = 0.

            net.train()
            for i, data in enumerate(train_loader):
                # forward
                inputs, labels = data
                outputs = net(inputs)

                # backward
                optimizer.zero_grad()
                loss = criterion(outputs, labels)
                loss.backward()

                # update weights
                optimizer.step()

                # count correct predictions
                _, predicted = torch.max(outputs.data, 1)
                total += labels.size(0)
                correct += (predicted == labels).squeeze().sum().numpy()

                # log training info
                loss_mean += loss.item()
                train_curve.append(loss.item())
                if (i + 1) % opt.log_interval == 0:
                    loss_mean = loss_mean / opt.log_interval
                    print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                        epoch, opt.epochs, i + 1, len(train_loader), loss_mean, correct / total))
                    loss_mean = 0.

            scheduler.step()  # update the learning rate

            # validate the model
            if (epoch + 1) % opt.val_interval == 0:
                correct_val = 0.
                total_val = 0.
                loss_val = 0.
                net.eval()
                with torch.no_grad():
                    for j, data in enumerate(valid_loader):
                        inputs, labels = data
                        outputs = net(inputs)
                        loss = criterion(outputs, labels)

                        _, predicted = torch.max(outputs.data, 1)
                        total_val += labels.size(0)
                        correct_val += (predicted == labels).squeeze().sum().numpy()
                        loss_val += loss.item()

                # average over batches so the value is comparable to the per-batch training loss
                loss_val_mean = loss_val / len(valid_loader)
                valid_curve.append(loss_val_mean)
                # report validation accuracy (correct_val / total_val), not the training counters
                print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                    epoch, opt.epochs, j + 1, len(valid_loader), loss_val_mean, correct_val / total_val))

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument('--epochs', type=int, default=10)
        parser.add_argument('--batch-size', type=int, default=5)  # read back as opt.batch_size
        parser.add_argument('--lr', type=float, default=0.01)
        parser.add_argument('--log_interval', type=int, default=10)
        parser.add_argument('--val_interval', type=int, default=1)
        parser.add_argument('--train_dir', type=str, default='./RMB_data/rmb_split/train')
        parser.add_argument('--valid_dir', type=str, default='./RMB_data/rmb_split/valid')
        opt = parser.parse_args()  # module-level, so train() can read it as a global
        train()
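
One detail of train.py that is easy to miss: the flag is declared as --batch-size with a hyphen, yet the code reads opt.batch_size, because argparse converts hyphens in option names to underscores. The short sketch below shows this with a subset of the arguments; `build_parser` is a hypothetical helper, and the explicit argument list stands in for a real command line.

```python
import argparse


def build_parser():
    """Build a parser with a subset of the train.py arguments."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--batch-size', type=int, default=5)
    parser.add_argument('--lr', type=float, default=0.01)
    return parser


# Passing a list mimics running: python train.py --batch-size 8 --lr 0.1
opt = build_parser().parse_args(['--batch-size', '8', '--lr', '0.1'])
print(opt.epochs, opt.batch_size, opt.lr)
```

Arguments that are not given on the command line keep their defaults, so the same script can be rerun with different hyperparameters without editing the source.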

dataset.py

    # -*- coding: utf-8 -*-
    import os
    import random

    from PIL import Image
    from torch.utils.data import Dataset
    from torch.utils.data import DataLoader
    import torchvision.transforms as transforms

    random.seed(1)
    rmb_label = {"1": 0, "100": 1}  # folder name -> class index

    train_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        # transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        # transforms.Normalize(norm_mean, norm_std),
    ])

    class RMBDataset(Dataset):
        def __init__(self, data_dir, transform=None):
            self.label_name = {"1": 0, "100": 1}
            # data_info stores every image path and label; the DataLoader reads samples from it by index
            self.data_info = self.get_img_info(data_dir)
            self.transform = transform

        def __getitem__(self, index):
            path_img, label = self.data_info[index]
            img = Image.open(path_img).convert('RGB')  # pixel values 0~255
            if self.transform is not None:
                img = self.transform(img)  # apply the transforms here (resize, convert to tensor, ...)
            return img, label

        def __len__(self):
            return len(self.data_info)

        @staticmethod
        def get_img_info(data_dir):
            data_info = list()
            rmb_label = {"1": 0, "100": 1}
            for root, dirs, _ in os.walk(data_dir):
                # iterate over the class folders
                for sub_dir in dirs:
                    img_names = os.listdir(os.path.join(root, sub_dir))
                    img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))
                    # iterate over the images
                    for i in range(len(img_names)):
                        img_name = img_names[i]
                        path_img = os.path.join(root, sub_dir, img_name)
                        label = rmb_label[sub_dir]
                        data_info.append((path_img, int(label)))
            return data_info

    # Quick self-test; guarded so that importing RMBDataset from train.py does not run it
    if __name__ == '__main__':
        train_dir = './RMB_data/rmb_split/train/'
        train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

        # Build the DataLoader
        train_loader = DataLoader(dataset=train_data, batch_size=16)

model.py

    import os
    import random

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from collections import OrderedDict

    class LeNet(nn.Module):
        def __init__(self, classes):
            super(LeNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 6, 5)  # output size: (32 + 2*0 - 5)/1 + 1 = 28
            self.conv2 = nn.Conv2d(6, 16, 5)
            self.fc1 = nn.Linear(16*5*5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, classes)

        def forward(self, x):  # input: (4, 3, 32, 32)
            out = F.relu(self.conv1(x))  # 32 -> 28: (4, 6, 28, 28)
            out = F.max_pool2d(out, 2)  # (4, 6, 14, 14)
            out = F.relu(self.conv2(out))  # (4, 16, 10, 10)
            out = F.max_pool2d(out, 2)  # (4, 16, 5, 5)
            out = out.view(out.size(0), -1)  # flatten to (4, 400)
            out = F.relu(self.fc1(out))
            out = F.relu(self.fc2(out))
            out = self.fc3(out)
            return out

        def initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.xavier_normal_(m.weight.data)
                    if m.bias is not None:
                        m.bias.data.zero_()
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight.data, 0, 0.1)
                    m.bias.data.zero_()

utils.py

utils.py mainly holds utility functions, such as plotting helpers.

    import random

    import numpy as np
    import torch

    def set_seed(seed=1):
        random.seed(seed)
        np.random.seed(seed)
        torch.manual_seed(seed)
        torch.cuda.manual_seed(seed)

Saving the Model and Training on the GPU

To train on the GPU, we only need to move both the network and the input data to the GPU.

    # ============================ step 2/5 model ============================
    net = LeNet(classes=2)
    net.to("cuda:0")  # for an nn.Module, .to() moves the parameters in place, so no reassignment is needed

To move the network back to the CPU, just add net.to("cpu").

    for i, data in enumerate(train_loader):
        # forward
        inputs, labels = data
        inputs = inputs.to("cuda:0")  # for a tensor, .to() returns a new tensor, so reassign it
        labels = labels.to("cuda:0")
        outputs = net(inputs)

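Now the network and the input data are both on the GPU, so the outputs are stored on the GPU as well. Because a tensor can only be converted to a NumPy array on the CPU, the accuracy computation needs .to("cpu") before .numpy() to bring the result back to the CPU.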

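    correct += (predicted == labels).to("cpu").squeeze().sum().numpy()

However, with the code above, a machine without a GPU requires changing every "cuda:0" to "cpu", which is inconvenient, so the following pattern is commonly used.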

    def train():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

        # ============================ step 2/5 model ============================
        net = LeNet(classes=2).to(device)

        for i, data in enumerate(train_loader):
            # forward
            inputs, labels = data
            inputs = inputs.to(device)
            labels = labels.to(device)
            outputs = net(inputs)

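Saving the model only takes the following code:

    # Save the model parameters
    net_state_dict = net.state_dict()
    torch.save(net_state_dict, opt.path_state_dict)
    print("save~")

net_state_dict is an ordered dictionary (not a list) that maps each parameter name to the tensor holding that layer's parameters. torch.save writes it to the path given by opt.path_state_dict, and at prediction time torch.load reads the saved parameters back.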

    import argparse

    import torch
    from torch.utils.data import DataLoader
    import torchvision.transforms as transforms
    from dataset import RMBDataset
    from model import LeNet

    def detect():
        norm_mean = [0.485, 0.456, 0.406]
        norm_std = [0.229, 0.224, 0.225]

        valid_transform = transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor(),
            transforms.Normalize(norm_mean, norm_std),
        ])

        test_data = RMBDataset(data_dir=opt.test_dir, transform=valid_transform)
        valid_loader = DataLoader(dataset=test_data, batch_size=1)

        net = LeNet(classes=2)
        # map_location="cpu" lets parameters saved from a GPU run load on a CPU-only machine
        state_dict_load = torch.load(opt.path_state_dict, map_location="cpu")
        net.load_state_dict(state_dict_load)
        net.eval()  # switch to inference mode

        with torch.no_grad():  # no gradients are needed for prediction
            for i, data in enumerate(valid_loader):
                # forward
                inputs, labels = data
                outputs = net(inputs)
                _, predicted = torch.max(outputs.data, 1)

                rmb = 1 if predicted.numpy()[0] == 0 else 100
                print("The model got {} yuan".format(rmb))

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument('--test_dir', type=str, default='./RMB_data/rmb_split/valid')
        parser.add_argument('--path_state_dict', type=str, default='./model_state_dict.pkl')
        opt = parser.parse_args()
        detect()

Next comes the object detection part.

Link to the original video on Bilibili
