OpenCV column: https://blog.csdn.net/qq_40515692/article/details/102885061

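To summarize:

cv::KeyPoint: a keypoint.

cv::Feature2D: the abstract base class for finding keypoints and computing descriptors. The FastFeatureDetector from the previous section derives from Feature2D, which defines methods such as detect, compute, and detectAndCompute.

cv::DMatch: a single match between two descriptors (it stores queryIdx, trainIdx, and distance). It is the result of matching, not the matcher itself.

cv::DescriptorMatcher: the abstract base class for matching keypoint descriptors. We will use it in the code in this section; it defines methods such as match, knnMatch, and radiusMatch.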
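To make the matcher and match-result roles more concrete, here is a minimal sketch, without OpenCV, of what a brute-force 2-nearest-neighbour search over binary descriptors followed by a ratio test could look like. `SimpleMatch`, `Descriptor`, `hammingDistance`, and `ratioTestMatch` are hypothetical names invented for this illustration. They only mimic the idea behind cv::DMatch and knnMatch with k = 2, not OpenCV's actual implementation.

```cpp
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices into both descriptor sets plus a distance.
struct SimpleMatch {
    int queryIdx;
    int trainIdx;
    float distance;
};

// One binary descriptor stored as raw bytes (an assumption made for this sketch).
using Descriptor = std::vector<std::uint8_t>;

// Hamming distance: the number of differing bits between two binary descriptors.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    int bits = 0;
    for (std::size_t i = 0; i < a.size() && i < b.size(); ++i) {
        bits += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return bits;
}

// For each query descriptor, find its two nearest train descriptors and keep
// the best one only if it is clearly closer than the runner-up (ratio test).
std::vector<SimpleMatch> ratioTestMatch(const std::vector<Descriptor>& query,
                                        const std::vector<Descriptor>& train,
                                        float ratio) {
    std::vector<SimpleMatch> good;
    if (train.size() < 2) return good;  // the ratio test needs two neighbours
    for (std::size_t q = 0; q < query.size(); ++q) {
        int best = std::numeric_limits<int>::max();
        int second = std::numeric_limits<int>::max();
        int bestIdx = -1;
        for (std::size_t t = 0; t < train.size(); ++t) {
            const int d = hammingDistance(query[q], train[t]);
            if (d < best) {
                second = best;
                best = d;
                bestIdx = static_cast<int>(t);
            } else if (d < second) {
                second = d;
            }
        }
        if (static_cast<float>(best) < ratio * static_cast<float>(second)) {
            good.push_back({static_cast<int>(q), bestIdx, static_cast<float>(best)});
        }
    }
    return good;
}
```

Calling `ratioTestMatch(query, train, 0.8f)` corresponds to the nn_match_ratio of 0.8 used in the first program below: a match survives only when its best distance is less than 80% of the second-best distance.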

(1). First, the AKAZE example from the official documentation.

#include <opencv2/features2d.hpp>

#include <opencv2/imgcodecs.hpp>

#include <opencv2/opencv.hpp>

#include <vector>

#include <iostream>

using namespace std;

using namespace cv;

const float inlier_threshold = 2.5f; // Distance threshold to identify inliers

const float nn_match_ratio = 0.8f; // Nearest neighbor matching ratio

int main(void)

{

//1. Load the images and the homography matrix; remember to change the file paths

Mat img1 = imread("C:\\Users\\ttp\\Desktop\\5.jpg", IMREAD_GRAYSCALE);

Mat img2 = imread("C:\\Users\\ttp\\Desktop\\6.jpg", IMREAD_GRAYSCALE);

Mat homography;

/*FileStorage fs("../data/H1to3p.xml", FileStorage::READ);

fs.getFirstTopLevelNode() >> homography;*/

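// Values inlined from H1to3p.xml (see the commented-out code above); step 5 is only meaningful if img1 and img2 are related by this homography

homography = (Mat_<double>(3, 3) << 7.6285898e-01, -2.9922929e-01, 2.2567123e+02,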

3.3443473e-01, 1.0143901e+00, -7.6999973e+01,

3.4663091e-04, -1.4364524e-05, 1.0000000e+00);

//2. Detect keypoints and compute descriptors with AKAZE

vector<KeyPoint> kpts1, kpts2; // keypoints

Mat desc1, desc2; // descriptors

Ptr<AKAZE> akaze = AKAZE::create();

akaze->detectAndCompute(img1, noArray(), kpts1, desc1);

akaze->detectAndCompute(img2, noArray(), kpts2, desc2);

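//3. Use a brute-force matcher to find the 2-nn matches

BFMatcher matcher(NORM_HAMMING); // brute-force matching; AKAZE's default descriptors are binary, so Hamming distance is used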

vector< vector<DMatch> > nn_matches;

matcher.knnMatch(desc1, desc2, nn_matches, 2);

//4.Use 2-nn matches to find correct keypoint matches

vector<KeyPoint> matched1, matched2, inliers1, inliers2;

vector<DMatch> good_matches;

for (size_t i = 0; i < nn_matches.size(); i++) {

if (nn_matches[i].size() < 2) continue; // the ratio test needs both neighbours

DMatch first = nn_matches[i][0];

float dist1 = nn_matches[i][0].distance;

float dist2 = nn_matches[i][1].distance;

if (dist1 < nn_match_ratio * dist2) {

matched1.push_back(kpts1[first.queryIdx]);

matched2.push_back(kpts2[first.trainIdx]);

}

}

//5.Check if our matches fit in the homography model

for (unsigned i = 0; i < matched1.size(); i++) {

Mat col = Mat::ones(3, 1, CV_64F);

col.at<double>(0) = matched1[i].pt.x;

col.at<double>(1) = matched1[i].pt.y;

col = homography * col;

col /= col.at<double>(2);

double dist = sqrt(pow(col.at<double>(0) - matched2[i].pt.x, 2) +

pow(col.at<double>(1) - matched2[i].pt.y, 2));

if (dist < inlier_threshold) {

int new_i = static_cast<int>(inliers1.size());

inliers1.push_back(matched1[i]);

inliers2.push_back(matched2[i]);

good_matches.push_back(DMatch(new_i, new_i, 0));

}

}

Mat res;

drawMatches(img1, inliers1, img2, inliers2, good_matches, res);

imshow("res", res);

double inlier_ratio = matched1.empty() ? 0.0 : inliers1.size() * 1.0 / matched1.size(); // avoid dividing by zero when nothing matched

cout << "A-KAZE Matching Results" << endl;

cout << "*******************************" << endl;

cout << "# Keypoints 1: \t" << kpts1.size() << endl;

cout << "# Keypoints 2: \t" << kpts2.size() << endl;

cout << "# Matches: \t" << matched1.size() << endl;

cout << "# Inliers: \t" << inliers1.size() << endl;

cout << "# Inliers Ratio: \t" << inlier_ratio << endl;

cout << endl;

waitKey(0);

return 0;

}

(2). Here is a more complete program that uses AKAZE, covering both matching and recognition.

#include <opencv2/opencv.hpp>

#include <cstdio>

#include <cstdlib>

using namespace cv;

using namespace std;

double ImgCmp(const char* srcPath, const char* dstPath, const char* saveGrayPath, const char* saveDstPath, int myX = 300, int myY = 486)

{

Mat src1, src2, dst;

src1 = imread(srcPath, IMREAD_GRAYSCALE);

src2 = imread(dstPath, IMREAD_GRAYSCALE);

resize(src1, src1, Size(myX, myY));

resize(src2, src2, Size(myX, myY));

//1.AKAZE

Ptr<AKAZE>akaze = AKAZE::create();

vector<KeyPoint> keypoints1, keypoints2;

Mat descriptors1, descriptors2;

akaze->detectAndCompute(src1, Mat(), keypoints1, descriptors1);

akaze->detectAndCompute(src2, Mat(), keypoints2, descriptors2);

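//2. Brute-force matching

BFMatcher matcher(NORM_HAMMING); // AKAZE's default descriptors are binary, so match with Hamming distance instead of the default NORM_L2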

vector<DMatch>matches;

matcher.match(descriptors1, descriptors2, matches);

double minDist = 1000;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < minDist)

{

minDist = dist;

}

}

//3. Select the better matches (DMatch)

vector<DMatch>goodMatches;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < max(1.5 * minDist, 0.02))

{

goodMatches.push_back(matches[i]);

}

}

//4. Draw good_match_img, which is later saved to saveGrayPath

Mat good_match_img;

drawMatches(src1, keypoints1, src2, keypoints2, goodMatches, good_match_img, Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

//perspective transform

vector<Point2f>src1GoodPoints;

vector<Point2f>src2GoodPoints;

for (size_t i = 0; i < goodMatches.size(); i++)

{

src1GoodPoints.push_back(keypoints1[goodMatches[i].queryIdx].pt);

src2GoodPoints.push_back(keypoints2[goodMatches[i].trainIdx].pt);

}

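if (src1GoodPoints.size() < 4) return minDist; // findHomography needs at least 4 point pairs

Mat P = findHomography(src1GoodPoints, src2GoodPoints, RANSAC); // RANSAC copes with bad matches (outliers)

if (P.empty()) return minDist; // no homography could be estimated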

vector<Point2f>src1corner(4);

vector<Point2f>src2corner(4);

src1corner[0] = Point(0, 0);

src1corner[1] = Point(src1.cols, 0);

src1corner[2] = Point(src1.cols, src1.rows);

src1corner[3] = Point(0, src1.rows);

perspectiveTransform(src1corner, src2corner, P);

// Draw the outline on the match image

line(good_match_img, Point(src2corner[0].x + src1.cols, src2corner[0].y), Point(src2corner[1].x + src1.cols, src2corner[1].y), Scalar(0, 0, 255), 2);

line(good_match_img, Point(src2corner[1].x + src1.cols, src2corner[1].y), Point(src2corner[2].x + src1.cols, src2corner[2].y), Scalar(0, 0, 255), 2);

line(good_match_img, Point(src2corner[2].x + src1.cols, src2corner[2].y), Point(src2corner[3].x + src1.cols, src2corner[3].y), Scalar(0, 0, 255), 2);

line(good_match_img, Point(src2corner[3].x + src1.cols, src2corner[3].y), Point(src2corner[0].x + src1.cols, src2corner[0].y), Scalar(0, 0, 255), 2);

// Draw the outline on the original image

Mat srcRes = imread(dstPath);

resize(srcRes, srcRes, Size(myX, myY));

line(srcRes, src2corner[0], src2corner[1], Scalar(0, 0, 255), 2);

line(srcRes, src2corner[1], src2corner[2], Scalar(0, 0, 255), 2);

line(srcRes, src2corner[2], src2corner[3], Scalar(0, 0, 255), 2);

line(srcRes, src2corner[3], src2corner[0], Scalar(0, 0, 255), 2);

imwrite(saveGrayPath, good_match_img);

imwrite(saveDstPath, srcRes);

return minDist;

}

int main(int argc, char** argv)

{

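// Remember to change the file paths

printf("%f", ImgCmp("C:\\Users\\ttp\\Desktop\\5.jpg", // src: the source image

"C:\\Users\\ttp\\Desktop\\8.jpg", // dst: the image in which the target will be outlined

"C:\\Users\\ttp\\Desktop\\Gray.jpg", // where the match visualization is saved

"C:\\Users\\ttp\\Desktop\\Dst.jpg", // where the outlined result image is saved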

300, 486)

);

system("pause");

return 0;

}
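Both programs above rely on mapping a point through a 3x3 homography: step 5 of the first program does it by hand with a 3x1 column, and ImgCmp calls perspectiveTransform on the four image corners. The sketch below shows that arithmetic on its own, without OpenCV. `Point2`, `Mat3`, `projectPoint`, and `isInlier` are hypothetical names used only for this illustration.

```cpp
#include <array>
#include <cmath>

// Hypothetical stand-in for a 2D point such as cv::Point2f.
struct Point2 {
    double x;
    double y;
};

// Row-major 3x3 homography matrix.
using Mat3 = std::array<std::array<double, 3>, 3>;

// Map a point through H in homogeneous coordinates:
// (x, y, 1) -> (x', y', w), then divide by w to return to pixel coordinates.
Point2 projectPoint(const Mat3& H, const Point2& p) {
    const double xs = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double ys = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {xs / w, ys / w};
}

// A match (p1 in image 1, p2 in image 2) counts as an inlier when the
// projection of p1 lands within `threshold` pixels of p2.
bool isInlier(const Mat3& H, const Point2& p1, const Point2& p2, double threshold) {
    const Point2 q = projectPoint(H, p1);
    return std::hypot(q.x - p2.x, q.y - p2.y) < threshold;
}
```

With a threshold of 2.5, `isInlier` mirrors the inlier_threshold check in the first program. The division by w is what distinguishes a perspective mapping from an affine one, and it is also why scaling all nine entries of H by the same non-zero factor leaves the result unchanged.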

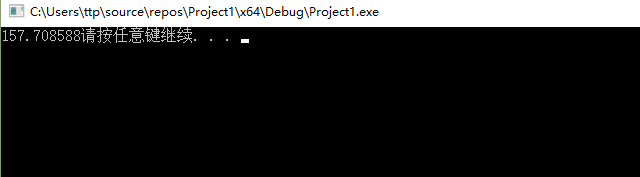

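Result:

My input images are:

C:\\Users\\ttp\\Desktop\\5.jpg

C:\\Users\\ttp\\Desktop\\8.jpg

The program's output is:

The printed value is the minimum descriptor distance returned by ImgCmp. The smaller the value, the more similar the images; identical images give 0.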

C:\\Users\\ttp\\Desktop\\Gray.jpg

C:\\Users\\ttp\\Desktop\\Dst.jpg
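The value printed by the program and the filter in step 3 of ImgCmp both depend on the minimum match distance. As a rough sketch without OpenCV, the selection rule `dist < max(1.5 * minDist, 0.02)` can be isolated as follows. `minDistance` and `selectGood` are hypothetical helper names, and the distances are plain doubles rather than cv::DMatch objects.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Smallest distance among all matches; returns `fallback` when there are none.
double minDistance(const std::vector<double>& distances, double fallback) {
    if (distances.empty()) return fallback;
    return *std::min_element(distances.begin(), distances.end());
}

// Indices of the matches whose distance is below max(scale * minDist, floorValue).
// The floor keeps the limit above zero when the best match has distance 0.
std::vector<std::size_t> selectGood(const std::vector<double>& distances,
                                    double scale, double floorValue) {
    std::vector<std::size_t> good;
    if (distances.empty()) return good;
    const double limit = std::max(scale * minDistance(distances, 0.0), floorValue);
    for (std::size_t i = 0; i < distances.size(); ++i) {
        if (distances[i] < limit) good.push_back(i);
    }
    return good;
}
```

Calling `selectGood(distances, 1.5, 0.02)` reproduces the constants used in ImgCmp. Without the 0.02 floor, a perfect match with distance 0 would make the limit 0 and the strict comparison would reject every match, including the perfect one.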

Corrections and criticism are welcome!
