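Testing a Real-Time Data Collection and Analysis Platform for the Internet of Vehicles

1. Start Kafka and create the topic

After configuring the platform as described in "9. Installing and configuring the Confluent Platform on Ubuntu 16.04 and using Kafka", testing can begin.

This post covers only the single-host test. For a three-host cluster, finish the configuration described earlier and test in the same way.

Once Kafka is configured and running, first create a Kafka topic named CarSensor: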

bin/kafka-topics --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic CarSensor

Note:

For a three-host cluster, run instead:

bin/kafka-topics --create --zookeeper host1:2181,host2:2181,host3:2181 --replication-factor 3 --partitions 1 --topic CarSensor

Check that the topic was created:

bin/kafka-topics --describe --zookeeper localhost:2181 --topic CarSensor
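The single-host and cluster forms of the create command differ only in the ZooKeeper connection string and the replication factor, and the replication factor cannot exceed the number of running brokers. A minimal sketch of that relationship follows. `build_create_topic_cmd` and its `broker_count` parameter are hypothetical names introduced for illustration (they are not part of Kafka or Confluent), and the function only assembles an argument list without running anything.

```python
def build_create_topic_cmd(zookeeper_hosts, topic, broker_count,
                           replication_factor=1, partitions=1):
    """Assemble a `kafka-topics --create` argument list (hypothetical helper)."""
    # Each replica lives on a different broker, so more replicas than
    # brokers cannot be satisfied.
    if replication_factor > broker_count:
        raise ValueError("replication factor exceeds the number of brokers")
    return [
        "bin/kafka-topics", "--create",
        "--zookeeper", ",".join(zookeeper_hosts),
        "--replication-factor", str(replication_factor),
        "--partitions", str(partitions),
        "--topic", topic,
    ]


# Single host: one broker, so one replica.
single = build_create_topic_cmd(["localhost:2181"], "CarSensor", broker_count=1)

# Three-host cluster: three brokers, three replicas.
cluster = build_create_topic_cmd(
    ["host1:2181", "host2:2181", "host3:2181"], "CarSensor",
    broker_count=3, replication_factor=3)

print(" ".join(single))
print(" ".join(cluster))
```

The returned list could be passed to `subprocess.run` from the Confluent installation directory, if scripting the setup is preferred over typing the command.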

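2. Configure EMQ

After completing the configuration described in "11. Building the plugin that connects EMQ to Kafka", EMQ is bridged to Kafka.

Note:

Create the Kafka topic before configuring EMQ: the bridge configuration requires that Kafka is already running and that the topic already exists.

3. Build the data simulator

Use Python to build three MQTT clients, one of which publishes data to EMQ: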

# -*- coding: utf-8 -*-
"""
Created on Mon Apr 6 23:28:04 2020

@author: ASUS
"""

import paho.mqtt.client as mqtt


class MqttClient:

    def __init__(self, host, port, mqttClient):
        self._host = host
        self._port = port
        self._mqttClient = mqttClient

    # Connect to the MQTT broker
    def on_mqtt_connect(self, username="tester", password="tester"):
        self._mqttClient.username_pw_set(username, password)
        self._mqttClient.connect(self._host, self._port, 60)
        self._mqttClient.loop_start()

    # Publish a message
    def on_publish(self, topic, msg, qos):
        self._mqttClient.publish(topic, msg, qos)

    # Message handler: paho calls it with (client, userdata, msg)
    def on_message_come(self, client, userdata, msg):
        print(msg.topic + " " + ":" + str(msg.payload))

    # Subscribe to a topic
    def on_subscribe(self, topic):
        self._mqttClient.subscribe(topic, 1)
        self._mqttClient.on_message = self.on_message_come  # handler for incoming messages


'''
def main():
    self.on_mqtt_connect()
    on_publish("/test/server", "Hello Python!", 1)
    self.on_subscribe()
    print("connect success!\n")
    while True:
        pass
'''

if __name__ == '__main__':
    import time  # used by the keep-alive loop at the end

    # main()
    host = "123.57.8.28"
    port = 1883
    # topic = "CarTest"
    topic = "user3"
    # msg = "Hello Python!"
    qos = 1
    username = "tester"
    password = "tester"

    # paho-mqtt 1.x signature; 2.x additionally requires a callback_api_version argument
    mqttClient = mqtt.Client("tester")
    client = MqttClient(host, port, mqttClient)
    client.on_mqtt_connect(username, password)
    # client.on_publish(topic, msg, qos)
    client.on_subscribe(topic)

    # Additional client connections
    mqttClient_2 = mqtt.Client("tester-2")
    username_2 = username + '2'
    password_2 = password + '2'
    client_2 = MqttClient(host, port, mqttClient_2)
    client_2.on_mqtt_connect(username_2, password_2)
    client_2.on_subscribe(topic)

    mqttClient_3 = mqtt.Client("tester-3")
    username_3 = username + '3'
    password_3 = password + '3'
    client_3 = MqttClient(host, port, mqttClient_3)
    client_3.on_mqtt_connect(username_3, password_3)
    client_3.on_subscribe(topic)

    # The first client publishes every line of the CSV file as one message
    with open('sensor_data-Copy1.csv', 'r') as f:
        # next(f)  # skip header line
        count = 0
        for line in f:
            msg = line.rstrip().encode()
            client.on_publish(topic, msg, qos)
            # producer.send('creditcard-test-0', line.rstrip().encode())
            count += 1

    print(count, "records have been published to", repr(topic))
    print("connect success!\n")

    # Keep the process alive so the subscribers keep receiving messages
    while True:
        time.sleep(1)
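The publishing loop above sends each CSV line as one MQTT payload. The sketch below isolates that step so it can be tried without a broker. `iter_payloads` and `publish_all` are hypothetical helpers introduced here, the `publish` argument stands in for `client.on_publish`, and the sample rows are made-up values rather than real sensor data.

```python
import io


def iter_payloads(lines, skip_header=False):
    """Yield one UTF-8 encoded payload per non-empty line."""
    it = iter(lines)
    if skip_header:
        next(it, None)  # drop the header row if there is one
    for line in it:
        line = line.rstrip()
        if line:
            yield line.encode("utf-8")


def publish_all(lines, publish, topic, qos=1, skip_header=False):
    """Call publish(topic, payload, qos) for every payload and return the count."""
    count = 0
    for payload in iter_payloads(lines, skip_header):
        publish(topic, payload, qos)
        count += 1
    return count


# Stand-in for a real MQTT client: record what would have been sent.
sent = []
sample = io.StringIO("V1,V2,V3\n0.1,0.2,0.3\n\n0.4,0.5,0.6\n")
n = publish_all(sample, lambda t, m, q: sent.append((t, m, q)),
                "user3", skip_header=True)
print(n, "records published")
```

With a real client, the same call would look like `publish_all(f, client.on_publish, topic, qos)` inside the `with open(...)` block.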

4. View the data with a Kafka consumer

bin/kafka-console-consumer --bootstrap-server localhost:9092 --from-beginning --topic CarSensor

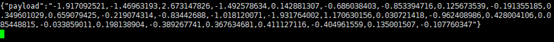

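After running the command, you can see that the data has been passed from EMQ to Kafka and stored there.

5. Stream processing with KSQL

Configure KSQL as described in "9. Stream operations with KSQL".

Then start KSQL and create a stream: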

CREATE STREAM CARSENSOR ( \

V1 VARCHAR, V2 VARCHAR, V3 VARCHAR, V4 VARCHAR, \

V5 VARCHAR, V6 VARCHAR, V7 VARCHAR, V8 VARCHAR, \

V9 VARCHAR, V10 VARCHAR, V11 VARCHAR, V12 VARCHAR, \

V13 VARCHAR,V14 VARCHAR, V15 VARCHAR, V16 VARCHAR, \

V17 VARCHAR, V18 VARCHAR,V19 VARCHAR, V20 VARCHAR, \

V21 VARCHAR, V22 VARCHAR, V23 VARCHAR, V24 VARCHAR, \

V25 VARCHAR, V26 VARCHAR, V27 VARCHAR, V28 VARCHAR) \

WITH (KAFKA_TOPIC='CarSensor', VALUE_FORMAT='DELIMITED');

The data source has 28 columns, so the stream declares 28 columns. Data bridged from EMQ to Kafka is stored as strings, so every column is typed VARCHAR.
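Typing 28 near-identical column definitions by hand is error-prone. As a rough sketch, the statement can be generated instead. `build_create_stream` is a hypothetical helper introduced here, and it assumes the columns are simply named V1 to Vn as in the statement above.

```python
def build_create_stream(stream, topic, n_columns,
                        col_type="VARCHAR", value_format="DELIMITED"):
    """Build a KSQL CREATE STREAM statement with columns V1..Vn (hypothetical helper)."""
    columns = ", ".join(f"V{i} {col_type}" for i in range(1, n_columns + 1))
    return (f"CREATE STREAM {stream} ({columns}) "
            f"WITH (KAFKA_TOPIC='{topic}', VALUE_FORMAT='{value_format}');")


stmt = build_create_stream("CARSENSOR", "CarSensor", 28)
print(stmt)
```

The printed statement is a single line, so it can be pasted into the KSQL prompt without line-continuation backslashes.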

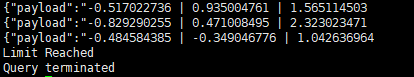

Once the stream is created, run SHOW STREAMS; (or DESCRIBE CARSENSOR;) to confirm it exists, then query the first three columns, V1 to V3:

SELECT V1,V2,V3 FROM CARSENSOR LIMIT 3;

6. Prediction with TensorFlow

(1) Build a Kafka consumer client to read the data

# -*- coding: utf-8 -*-
"""
Created on Fri Apr 10 22:03:00 2020

@author: ASUS
"""

from kafka import KafkaConsumer
from kafka.structs import TopicPartition

Topic = 'CarSensor'
group_name = Topic
server = 'localhost:9092'

'''
consumer = KafkaConsumer('CarSensor',
                         bootstrap_servers='localhost:9092',
                         group_id='CarSensor',
                         auto_offset_reset='earliest')
print('consumer start to consuming...')
consumer.subscribe(('CarSensor', ))
#consumer.seek(TopicPartition(topic='CarSensor', partition=0), 0)
'''

# consumer_timeout_ms ends the "for message in consumer" loop below
# once no new message has arrived for 10 seconds
consumer = KafkaConsumer(bootstrap_servers=server,
                         group_id=group_name,
                         auto_offset_reset='earliest',
                         consumer_timeout_ms=10000)
print('consumer starts consuming...')

# seek() only works on a partition that is already assigned,
# so assign partition 0 explicitly instead of subscribing
partition = TopicPartition(topic=Topic, partition=0)
consumer.assign([partition])

# while True:
for i in range(3):
    msg = consumer.poll(timeout_ms=5)  # fetch messages from Kafka
    print(msg)

# Rewind to offset 0 so the records fetched by the test polls above are read again
consumer.seek(partition, 0)

filename = 'data-sensor.txt'
count = 0
with open(filename, 'w') as output:
    for message in consumer:
        # print(message.topic, message.offset, message.key, message.value, message.partition)
        line = message.value.decode()
        print(line)
        output.write(line)
        output.write("\n")
        count += 1
        if count == 10001:
            break

print("================================")
print("message: %d" % count)

'''
msg = consumer.poll(timeout_ms=3)
print(msg["values"])
list(msg.keys())
output = open('data.txt','w')
output.write(msg.values())
'''

This reads the data from the CarSensor topic and writes it to data-sensor.txt.
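In kafka-python, `KafkaConsumer.poll()` returns a dict that maps each `TopicPartition` to a list of records, so its result cannot be indexed with a string key or written to a file directly. The sketch below shows one way to flatten such a batch into text lines. The two namedtuples are simplified stand-ins for the real kafka-python classes so that the example runs without a broker, and `decode_batch` is a hypothetical helper.

```python
from collections import namedtuple

# Simplified stand-ins for kafka.structs.TopicPartition and kafka-python's
# ConsumerRecord, reduced to the fields this sketch uses.
TopicPartition = namedtuple("TopicPartition", ["topic", "partition"])
Record = namedtuple("Record", ["offset", "value"])


def decode_batch(batch):
    """Flatten a poll()-style dict into decoded lines, ordered by partition and offset."""
    lines = []
    for tp in sorted(batch):
        for record in sorted(batch[tp], key=lambda r: r.offset):
            lines.append(record.value.decode("utf-8"))
    return lines


fake_batch = {
    TopicPartition("CarSensor", 0): [Record(1, b"4,5,6"), Record(0, b"1,2,3")],
}
lines = decode_batch(fake_batch)
print(lines)
```

With a real consumer, `decode_batch(consumer.poll(timeout_ms=1000))` would return the lines of one fetched batch, and an empty list when nothing arrived within the timeout.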

(2) Convert the data from txt to csv

# -*- coding: utf-8 -*-
"""
Created on Sat Apr 11 12:36:40 2020

@author: ASUS
"""

import csv

# Convert a txt file of plain (non-dict) records to csv
# not dict
with open('data-sensor.csv', 'w+', newline='') as csvfile:
    spamwriter = csv.writer(csvfile)
    # Read the txt file to convert; the fields on each line are separated by a delimiter
    with open('data-sensor.txt', 'r') as filein:
        count = 0
        for line in filein:
            if count == 0:
                # strip the quotes from the first line
                line = line.replace('"', '')
            line_list = line.strip('\n').split(',')  # fields are comma-separated here
            spamwriter.writerow(line_list)
            count += 1

'''
# Convert a txt file of dict-style records to csv
# dict
with open('data-sensor.csv', 'w+', newline='') as csvfile:
    spamwriter = csv.writer(csvfile)
    with open('data-sensor.txt','r') as filein:
        count = 0
        for line in filein:
            line_dict = eval(line)
            line_list = line_dict['payload'].split(',')
            spamwriter.writerow(line_list)
            count += 1
'''

'''
data = lines[1]
b = eval(data)
f3 = b['payload']

with open('data-sensor.txt','r') as filein:
    f1 = filein.readline()
    f2 = filein.readline()

with open('raw-data-sensor.txt','r') as data_file:
    count = 0
    for line in filein:
        line_dict = eval(line)
        line_list = line_dict['payload'].split(',')
        spamwriter.writerow(line_list)
        count += 1
'''

This completes reading the data from Kafka to local disk and saving it in .csv form.
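Splitting each line on commas works as long as no field contains a comma or quotes. As an alternative sketch, the standard `csv.reader` can parse the text lines, which handles quoted fields on every line rather than only the first. `txt_to_rows` and `rows_to_csv` are hypothetical helper names, and the sample lines are made-up values.

```python
import csv
import io


def txt_to_rows(text_lines):
    """Parse comma-separated text lines into lists of fields, skipping blank lines."""
    return [row for row in csv.reader(text_lines) if row]


def rows_to_csv(rows):
    """Serialize rows back to CSV text (quotes are added only where needed)."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()


raw = ['"V1","V2","V3"\n', '0.1,0.2,0.3\n', '\n', '0.4,"a,b",0.6\n']
rows = txt_to_rows(raw)
print(rows)
print(rows_to_csv(rows))
```

Because an open text file is also an iterable of lines, `txt_to_rows(open('data-sensor.txt'))` would work the same way on the real file.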

(3) Read the data and predict

First, build the model.

Using TensorFlow 2, build an LSTM neural network model with Keras:

# -*- coding: utf-8 -*-
"""
Created on Sat Apr 11 12:39:06 2020

@author: ASUS
"""

import os

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
import tensorflow as tf
from tensorflow import keras

# set GPU
tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
if gpus:  # skip the GPU setup on CPU-only machines
    tf.config.experimental.set_visible_devices(gpus[0], 'GPU')
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
print(len(gpus))
logical_gpus = tf.config.experimental.list_logical_devices('GPU')
print(len(logical_gpus))

# read data-sensor.csv
dataframe = pd.read_csv('data-sensor.csv')
pd_value = dataframe.values

# ========= set dataset ===================
# first 80% of the rows for training, the rest for testing
train_size = int(len(pd_value) * 0.8)
trainlist = pd_value[:train_size]
testlist = pd_value[train_size:]
# testlist = targetlist

look_back = 4
features = 28
step_out = 1

# ========= numpy train ===========
def create_dataset(dataset, look_back):
    # look_back here is the same as the number of timesteps
    dataX, dataY = [], []
    for i in range(len(dataset) - look_back - 1):
        a = dataset[i:(i + look_back)]
        dataX.append(a)
        dataY.append(dataset[i + look_back])
    return np.array(dataX), np.array(dataY)

# With so little training data, look_back cannot be too large
trainX, trainY = create_dataset(trainlist, look_back)
testX, testY = create_dataset(testlist, look_back)

# ========== set dataset ======================
# shape expected by the LSTM: (samples, timesteps, features)
trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], features))
testX = np.reshape(testX, (testX.shape[0], testX.shape[1], features))

# create and fit the LSTM network
model = tf.keras.Sequential()
model.add(tf.keras.layers.LSTM(64, activation='relu', return_sequences=True, input_shape=(look_back, features)))
model.add(tf.keras.layers.Dropout(0.2))
model.add(tf.keras.layers.LSTM(32, activation='relu'))
model.add(tf.keras.layers.Dense(features))
# mean absolute error is reported instead of accuracy, which is not meaningful for regression
model.compile(metrics=['mae'], loss='mean_squared_error', optimizer='adam')
model.summary()

# load weights from a previous run, if there is one
if os.path.exists('lstm-model.h5'):
    model.load_weights('lstm-model.h5')

# train model
history = model.fit(trainX, trainY, validation_data=(testX, testY), epochs=15, verbose=1).history
model.save("lstm-model.h5")

# loss plot
plt.plot(history['loss'], linewidth=2, label='Train')
plt.plot(history['val_loss'], linewidth=2, label='Test')
plt.legend(loc='upper right')
plt.title('Model loss')
plt.ylabel('Loss')
plt.xlabel('Epoch')
# plt.ylim(ymin=0.70,ymax=1)
plt.show()

# test model
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)

# plot test: actual vs predicted values of the second column, first 100 samples
plt.plot(trainY[:100, 1])
plt.plot(trainPredict[:100, 1])
plt.show()

plt.plot(testY[:100, 1])
plt.plot(testPredict[:100, 1])
plt.show()

The model predicts in a time-series fashion; the resulting loss plot is shown below.
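The time-series framing comes from `create_dataset`, which turns the table of readings into overlapping windows of `look_back` rows, each paired with the row that follows it. A minimal pure-Python sketch of the same loop on a toy series makes the pairing visible. `make_windows` is a hypothetical stand-in that mirrors the loop bounds of `create_dataset`, and the toy values are made up.

```python
def make_windows(series, look_back):
    """Pair each window of look_back rows with the row that follows it."""
    xs, ys = [], []
    # Same bounds as create_dataset; the trailing -1 leaves out the last possible window.
    for i in range(len(series) - look_back - 1):
        xs.append(series[i:i + look_back])
        ys.append(series[i + look_back])
    return xs, ys


# Toy series: 8 time steps with 2 features each.
series = [[t, t * 10] for t in range(8)]
X, Y = make_windows(series, look_back=4)

print(len(X))      # number of (window, target) pairs
print(X[0], Y[0])  # first window and the row it should predict
```

For 8 rows and a look-back of 4 this produces 3 pairs, so the final row of the series is never used as a target.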

Next, build the prediction set and run the prediction:

# set predict_data
predict_begin = 1
predict_num = 100
predict_result = np.zeros((predict_num + look_back, features), dtype=float)

# seed the buffer with the last window of the test set
for i in range(look_back):
    predict_result[i] = testX[-predict_begin:][0, i]

# predict
for i in range(predict_num):
    # the most recent look_back rows form a batch holding one window
    begin_data = np.reshape(predict_result[i:i + look_back], (predict_begin, look_back, features))
    predict_data = model.predict(begin_data)
    # store the prediction so that it becomes part of the next window
    predict_result[look_back + i] = predict_data[0]

# show plot: predicted values of the last column
plt.plot(predict_result[-predict_num:, 27])
plt.show()

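The prediction is rolling: each predicted row is fed back as input, producing the next 100 steps.

The figure above is the line chart of this prediction.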
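The rolling scheme can be sketched without TensorFlow by swapping the trained network for a trivial stand-in. In the sketch below, `rolling_forecast` is a hypothetical helper, and `mean_model` is an assumed placeholder for `model.predict` that simply averages each feature over the window. Only the feedback mechanism is illustrated, not the LSTM itself.

```python
def rolling_forecast(seed_window, predict_one, steps):
    """Predict `steps` rows, feeding each prediction back into the input window."""
    window = [list(row) for row in seed_window]
    forecast = []
    for _ in range(steps):
        nxt = predict_one(window)
        forecast.append(nxt)
        # Slide the window: drop the oldest row, append the new prediction.
        window = window[1:] + [nxt]
    return forecast


def mean_model(window):
    """Placeholder for model.predict: average each feature over the window."""
    n = len(window)
    return [sum(col) / n for col in zip(*window)]


seed = [[1.0], [2.0], [3.0], [4.0]]  # look_back = 4, one feature
forecast = rolling_forecast(seed, mean_model, steps=3)
print(forecast)  # [[2.5], [2.875], [3.09375]]
```

Because every step after the first consumes earlier predictions, errors accumulate along the horizon, which is worth keeping in mind when reading a long rolling forecast.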

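Summary

This design uses Python to build a data simulator that produces vehicle sensor data.

For data collection, the platform uses MQTT as the transport protocol, with EMQ deployed as the broker that manages data transmission.

For data buffering, Kafka serves as the message middleware and provides efficient storage for large volumes of data.

For stream processing, KSQL is the tool used to process the data streams.

For analysis and prediction, an LSTM model built with the TensorFlow framework predicts the vehicle sensor data in real time.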
