HMM 博客汇总

隐马尔可夫模型的学习问题分为监督学习和非监督学习问题,监督学习采用极大似然估计,非监督学习采用Baum-Welch算法,我们直接先讲Baum-Welch算法。

Baum-Welch算法(EM算法)

事实上,Baum-Welch算法是EM算法在HMM学习问题中的应用。

我们要估计的

λ

^

\hat \lambda

λ^应该为

λ

^

=

a

r

g

m

a

x

λ

P

(

O

∣

λ

)

\hat \lambda = \underset{\lambda}{argmax} P(O|\lambda)

λ^=λargmaxP(O∣λ)

我们还是先把EM算法的公式写出来先

θ

t

+

1

=

a

r

g

m

a

x

θ

∫

z

l

o

g

P

(

X

,

Z

∣

θ

)

⋅

P

(

Z

∣

X

,

θ

)

d

z

\theta^{t+1} = \underset{\theta}{argmax}\int_zlogP(X,Z|\theta)\cdot P(Z|X,\theta)dz

θt+1=θargmax∫zlogP(X,Z∣θ)⋅P(Z∣X,θ)dz

其中Z表示隐变量,X表示观测变量,那在HMM中,隐变量是I,观测变量是O,且是离散的

λ

t

+

1

=

a

r

g

m

a

x

θ

∑

I

l

o

g

P

(

O

,

I

∣

λ

)

⋅

P

(

I

∣

O

,

λ

t

)

=

a

r

g

m

a

x

θ

∑

I

l

o

g

P

(

O

,

I

∣

λ

)

⋅

P

(

O

,

I

∣

λ

t

)

P

(

O

∣

λ

t

)

=

a

r

g

m

a

x

θ

1

P

(

O

∣

λ

t

)

∑

I

l

o

g

P

(

O

,

I

∣

λ

)

⋅

P

(

O

,

I

∣

λ

t

)

=

a

r

g

m

a

x

θ

∑

I

l

o

g

P

(

O

,

I

∣

λ

)

⋅

P

(

O

,

I

∣

λ

t

)

\begin{aligned} \lambda^{t+1} &= \underset{\theta}{argmax}\sum_{I}logP(O,I|\lambda)\cdot P(I|O,\lambda^t) \\ &= \underset{\theta}{argmax}\sum_{I}logP(O,I|\lambda)\cdot \frac{P(O,I|\lambda^t)}{P(O|\lambda^t)} \\ &= \underset{\theta}{argmax}\frac{1}{P(O|\lambda^t)}\sum_{I}logP(O,I|\lambda)\cdot P(O,I|\lambda^t) \\ &= \underset{\theta}{argmax}\sum_{I}logP(O,I|\lambda)\cdot P(O,I|\lambda^t) \\ \end{aligned}

λt+1=θargmaxI∑logP(O,I∣λ)⋅P(I∣O,λt)=θargmaxI∑logP(O,I∣λ)⋅P(O∣λt)P(O,I∣λt)=θargmaxP(O∣λt)1I∑logP(O,I∣λ)⋅P(O,I∣λt)=θargmaxI∑logP(O,I∣λ)⋅P(O,I∣λt)

其中

F

(

T

)

=

P

(

O

,

I

∣

λ

)

=

P

(

o

1

,

o

2

,

.

.

.

,

o

T

,

i

1

,

i

2

,

.

.

.

,

i

T

∣

λ

)

=

P

(

o

T

∣

o

1

,

o

2

,

.

.

.

,

o

T

−

1

,

i

1

,

i

2

,

.

.

.

,

i

T

,

λ

)

⋅

P

(

o

1

,

o

2

,

.

.

.

,

o

T

−

1

,

i

1

,

i

2

,

.

.

.

,

i

T

∣

λ

)

=

P

(

o

T

∣

i

T

,

λ

)

⋅

P

(

o

1

,

o

2

,

.

.

.

,

o

T

−

1

,

i

1

,

i

2

,

.

.

.

,

i

T

−

1

∣

λ

)

⋅

P

(

i

T

∣

o

1

,

o

2

,

.

.

.

,

o

T

−

1

,

i

1

,

i

2

,

.

.

.

i

T

−

1

,

λ

)

=

b

i

T

(

o

T

)

⋅

F

(

T

−

1

)

⋅

α

i

T

−

1

i

T

\begin{aligned} F(T) = P(O,I\mid \lambda) &= P(o_1,o_2,...,o_T,i_1,i_2,...,i_T|\lambda) \\ &= P(o_T\mid o_1,o_2,...,o_{T-1},i_1,i_2,...,i_T,\lambda)\cdot P(o_1,o_2,...,o_{T-1},i_1,i_2,...,i_T|\lambda) \\ &= P(o_T\mid i_T,\lambda)\cdot P(o_1,o_2,...,o_{T-1},i_1,i_2,...,i_{T-1}|\lambda)\cdot P(i_T|o_1,o_2,...,o_{T-1},i_1,i_2,...i_{T-1},\lambda) \\ &= b_{i_T}(o_T)\cdot F(T-1)\cdot \alpha_{i_{T-1}i_T} \end{aligned}

F(T)=P(O,I∣λ)=P(o1,o2,...,oT,i1,i2,...,iT∣λ)=P(oT∣o1,o2,...,oT−1,i1,i2,...,iT,λ)⋅P(o1,o2,...,oT−1,i1,i2,...,iT∣λ)=P(oT∣iT,λ)⋅P(o1,o2,...,oT−1,i1,i2,...,iT−1∣λ)⋅P(iT∣o1,o2,...,oT−1,i1,i2,...iT−1,λ)=biT(oT)⋅F(T−1)⋅αiT−1iT

故(简单的等比数列)

F

(

T

)

=

∏

t

=

2

T

b

i

t

(

o

t

)

⋅

α

i

t

−

1

i

t

⋅

F

(

1

)

=

[

∏

t

=

2

T

b

i

t

(

o

t

)

⋅

α

i

t

−

1

i

t

]

⋅

P

(

o

1

,

i

1

∣

λ

)

=

[

∏

t

=

2

T

b

i

t

(

o

t

)

⋅

α

i

t

−

1

i

t

]

⋅

P

(

o

1

∣

i

1

,

λ

)

⋅

P

(

i

1

∣

λ

)

=

[

∏

t

=

2

T

b

i

t

(

o

t

)

⋅

α

i

t

−

1

i

t

]

⋅

b

i

1

(

o

1

)

⋅

π

i

1

=

[

∏

t

=

1

T

b

i

t

(

o

t

)

]

⋅

[

∏

t

=

2

T

α

i

t

−

1

i

t

]

⋅

π

i

1

\begin{aligned} F(T) &= \prod_{t=2}^{T}b_{i_t}(o_t)\cdot \alpha_{i_{t-1}i_t}\cdot F(1) \\ &= [\prod_{t=2}^{T}b_{i_t}(o_t)\cdot \alpha_{i_{t-1}i_t}]\cdot P(o_1,i_1\mid \lambda) \\ &= [\prod_{t=2}^{T}b_{i_t}(o_t)\cdot \alpha_{i_{t-1}i_t}]\cdot P(o_1\mid i_1,\lambda)\cdot P(i_1\mid \lambda) \\ &= [\prod_{t=2}^{T}b_{i_t}(o_t)\cdot \alpha_{i_{t-1}i_t}]\cdot b_{i_1}(o_1)\cdot \pi_{i_1} \\ &= [\prod_{t=1}^{T}b_{i_t}(o_t)]\cdot [\prod_{t=2}^{T}\alpha_{i_{t-1}i_t}]\cdot \pi_{i_1} \\ \end{aligned}

F(T)=t=2∏Tbit(ot)⋅αit−1it⋅F(1)=[t=2∏Tbit(ot)⋅αit−1it]⋅P(o1,i1∣λ)=[t=2∏Tbit(ot)⋅αit−1it]⋅P(o1∣i1,λ)⋅P(i1∣λ)=[t=2∏Tbit(ot)⋅αit−1it]⋅bi1(o1)⋅πi1=[t=1∏Tbit(ot)]⋅[t=2∏Tαit−1it]⋅πi1

现在我们已经得到

P

(

O

,

I

∣

λ

)

=

[

∏

t

=

1

T

b

i

t

(

o

t

)

]

⋅

[

∏

t

=

2

T

α

i

t

−

1

i

t

]

⋅

π

i

1

P(O,I\mid \lambda) = [\prod_{t=1}^{T}b_{i_t}(o_t)]\cdot [\prod_{t=2}^{T}\alpha_{i_{t-1}i_t}]\cdot \pi_{i_1}

P(O,I∣λ)=[t=1∏Tbit(ot)]⋅[t=2∏Tαit−1it]⋅πi1

然后

λ

t

+

1

=

a

r

g

m

a

x

θ

∑

I

l

o

g

P

(

O

,

I

∣

λ

)

⋅

P

(

O

,

I

∣

λ

t

)

=

a

r

g

m

a

x

θ

∑

I

(

∑

t

=

1

T

l

o

g

(

b

i

t

(

o

t

)

)

+

∑

t

=

2

T

l

o

g

(

α

i

t

−

1

i

t

)

+

l

o

g

(

π

i

1

)

)

⋅

P

(

O

,

I

∣

λ

t

)

\begin{aligned} \lambda^{t+1} &= \underset{\theta}{argmax}\sum_{I}logP(O,I|\lambda)\cdot P(O,I|\lambda^t) \\ &= \underset{\theta}{argmax}\sum_{I}(\sum_{t=1}^Tlog(b_{i_t}(o_t))+\sum_{t=2}^{T}log(\alpha_{i_{t-1}i_t})+log(\pi_{i_1}))\cdot P(O,I|\lambda^t) \end{aligned}

λt+1=θargmaxI∑logP(O,I∣λ)⋅P(O,I∣λt)=θargmaxI∑(t=1∑Tlog(bit(ot))+t=2∑Tlog(αit−1it)+log(πi1))⋅P(O,I∣λt)

记

Q

(

λ

,

λ

t

)

=

a

r

g

m

a

x

θ

∑

I

(

∑

t

=

1

T

l

o

g

(

b

i

t

(

o

t

)

)

+

∑

t

=

2

T

l

o

g

(

α

i

t

−

1

i

t

)

+

l

o

g

(

π

i

1

)

)

⋅

P

(

O

,

I

∣

λ

t

)

Q(\lambda,\lambda^t) = \underset{\theta}{argmax}\sum_{I}(\sum_{t=1}^Tlog(b_{i_t}(o_t))+\sum_{t=2}^{T}log(\alpha_{i_{t-1}i_t})+log(\pi_{i_1}))\cdot P(O,I|\lambda^t)

Q(λ,λt)=θargmaxI∑(t=1∑Tlog(bit(ot))+t=2∑Tlog(αit−1it)+log(πi1))⋅P(O,I∣λt)

为了求

π

t

+

1

\pi^{t+1}

πt+1,我们需要求

π

i

t

+

1

\pi_i^{t+1}

πit+1

在 ∑ i = 1 N π i = 1 \sum_{i=1}^N \pi_i = 1 ∑i=1Nπi=1的约束下,

记拉格朗日函数

L

(

π

,

η

)

=

∑

I

(

l

o

g

(

π

i

1

)

⋅

P

(

O

∣

I

,

λ

t

)

)

+

η

⋅

(

∑

i

=

1

N

π

i

−

1

)

=

∑

i

1

.

.

.

∑

i

T

(

l

o

g

(

π

i

1

)

⋅

P

(

O

∣

I

,

λ

t

)

)

+

η

⋅

(

∑

i

=

1

N

π

i

−

1

)

=

∑

i

1

l

o

g

(

π

i

1

)

⋅

P

(

O

∣

i

1

,

λ

t

)

+

η

⋅

(

∑

i

=

1

N

π

i

−

1

)

=

∑

i

=

1

N

l

o

g

(

π

i

)

⋅

P

(

O

∣

i

1

=

q

i

,

λ

t

)

+

η

⋅

(

∑

i

=

1

N

π

i

−

1

)

\begin{aligned} L(\pi,\eta) &= \sum_{I}(log(\pi_{i_1})\cdot P(O|I,\lambda^t)) +\eta\cdot (\sum_{i=1}^N \pi_i-1)\\ &= \sum_{i_1}...\sum_{i_T}(log(\pi_{i_1})\cdot P(O|I,\lambda^t)) +\eta\cdot (\sum_{i=1}^N \pi_i-1)\\ &= \sum_{i_1}log(\pi_{i_1})\cdot P(O|i_1,\lambda^t)+\eta\cdot (\sum_{i=1}^N \pi_i-1) \\ &= \sum_{i=1}^Nlog(\pi_i)\cdot P(O|i_1=q_i,\lambda^t) +\eta\cdot (\sum_{i=1}^N \pi_i-1) \end{aligned}

L(π,η)=I∑(log(πi1)⋅P(O∣I,λt))+η⋅(i=1∑Nπi−1)=i1∑...iT∑(log(πi1)⋅P(O∣I,λt))+η⋅(i=1∑Nπi−1)=i1∑log(πi1)⋅P(O∣i1,λt)+η⋅(i=1∑Nπi−1)=i=1∑Nlog(πi)⋅P(O∣i1=qi,λt)+η⋅(i=1∑Nπi−1)

∂ L ∂ π i = P ( O ∣ i 1 = q i , λ t ) π i + η = 0 \begin{aligned} \frac{\partial L}{\partial \pi_i} &= \frac{P(O|i_1=q_i,\lambda^t)}{\pi_i} +\eta = 0 \end{aligned} ∂πi∂L=πiP(O∣i1=qi,λt)+η=0

我们对得到的N个式子求和即可求出

η

\eta

η

∑

i

=

1

N

(

P

(

O

∣

i

1

=

q

i

,

λ

t

)

+

η

⋅

π

i

)

=

∑

i

=

1

N

P

(

O

∣

i

1

=

q

i

,

λ

t

)

+

η

=

0

\begin{aligned} \sum_{i=1}^{N}(P(O|i_1=q_i,\lambda^t) +\eta\cdot \pi_i) \\ =\sum_{i=1}^{N}P(O|i_1=q_i,\lambda^t) +\eta = 0 \end{aligned}

i=1∑N(P(O∣i1=qi,λt)+η⋅πi)=i=1∑NP(O∣i1=qi,λt)+η=0

得

η

=

−

∑

i

=

1

N

P

(

O

∣

i

1

=

q

i

,

λ

t

)

=

−

P

(

O

∣

λ

t

)

\eta = -\sum_{i=1}^{N}P(O|i_1=q_i,\lambda^t) =-P(O|\lambda^t)

η=−i=1∑NP(O∣i1=qi,λt)=−P(O∣λt)

得

π

i

t

+

1

=

−

P

(

O

∣

i

1

=

q

i

,

λ

t

)

η

=

P

(

O

∣

i

1

=

q

i

,

λ

t

)

P

(

O

∣

λ

t

)

\pi_i^{t+1} = -\frac{P(O|i_1=q_i,\lambda^t)}{\eta} = \frac{P(O|i_1=q_i,\lambda^t)}{P(O|\lambda^t)}

πit+1=−ηP(O∣i1=qi,λt)=P(O∣λt)P(O∣i1=qi,λt)

为了求

A

t

+

1

A^{t+1}

At+1,我们即需要求

α

i

j

t

+

1

\alpha_{ij}^{t+1}

αijt+1

在状态转移矩阵的每一行和为1的约束下,定义拉格朗日函数

L

(

α

,

η

)

=

∑

I

(

∑

t

=

2

T

l

o

g

(

α

i

t

−

1

i

t

)

⋅

P

(

O

∣

I

,

λ

t

)

)

+

∑

i

=

1

N

η

i

⋅

(

∑

j

=

1

N

α

i

j

−

1

)

=

∑

i

=

1

N

∑

j

=

1

N

∑

t

=

2

T

log

a

i

j

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

+

∑

i

=

1

N

η

i

⋅

(

∑

j

=

1

N

α

i

j

−

1

)

\begin{aligned} L(\alpha,\eta) &= \sum_{I}(\sum_{t=2}^{T}log(\alpha_{i_{t-1}i_t})\cdot P(O|I,\lambda^t)) + \sum_{i=1}^{N}\eta_i\cdot (\sum_{j=1}^N \alpha_{ij}-1)\\ &=\sum_{i=1}^{N} \sum_{j=1}^{N} \sum_{t=2}^{T} \log a_{i j} P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)+\sum_{i=1}^{N}\eta_i\cdot (\sum_{j=1}^N \alpha_{ij}-1)\\ \end{aligned}

L(α,η)=I∑(t=2∑Tlog(αit−1it)⋅P(O∣I,λt))+i=1∑Nηi⋅(j=1∑Nαij−1)=i=1∑Nj=1∑Nt=2∑TlogaijP(O,it−1=i,it=j∣λt)+i=1∑Nηi⋅(j=1∑Nαij−1)

然后对

α

i

j

\alpha_{ij}

αij求偏导

∂

L

∂

α

i

j

=

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

α

i

j

+

η

i

=

0

\frac{\partial L}{\partial \alpha_{ij}} = \sum_{t=2}^{T}\frac{P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)}{\alpha_{ij}}+\eta_i = 0

∂αij∂L=t=2∑TαijP(O,it−1=i,it=j∣λt)+ηi=0

∑ t = 2 T P ( O , i t − 1 = i , i t = j ∣ λ t ) + η i ⋅ α i j = 0 \sum_{t=2}^{T}P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right) + \eta_i\cdot \alpha_{ij} = 0 t=2∑TP(O,it−1=i,it=j∣λt)+ηi⋅αij=0

我们对j求和

∑

j

=

1

N

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

+

η

i

⋅

∑

j

=

1

N

α

i

j

=

0

\sum_{j=1}^{N}\sum_{t=2}^{T}P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)+\eta_i\cdot \sum_{j=1}^N \alpha_{ij} = 0

j=1∑Nt=2∑TP(O,it−1=i,it=j∣λt)+ηi⋅j=1∑Nαij=0

得到

η

i

=

−

∑

j

=

1

N

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

q

i

,

i

t

=

q

j

∣

λ

t

)

=

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

q

i

∣

λ

t

)

\eta_i = -\sum_{j=1}^{N}\sum_{t=2}^{T}P\left(O, i_{t-1}=q_i, i_{t}=q_j \mid \lambda^t\right) = \sum_{t=2}^{T}P(O,i_{t-1}=q_i|\lambda^t)

ηi=−j=1∑Nt=2∑TP(O,it−1=qi,it=qj∣λt)=t=2∑TP(O,it−1=qi∣λt)

故

α

i

j

t

+

1

=

−

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

η

i

=

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

q

i

∣

λ

t

)

\begin{aligned} \alpha_{ij}^{t+1} &= -\frac{\sum_{t=2}^{T}P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)}{\eta_i} \\ &=\frac{\sum_{t=2}^{T}P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)}{\sum_{t=2}^{T}P(O,i_{t-1}=q_i|\lambda^t)} \end{aligned}

αijt+1=−ηi∑t=2TP(O,it−1=i,it=j∣λt)=∑t=2TP(O,it−1=qi∣λt)∑t=2TP(O,it−1=i,it=j∣λt)

故

A

t

+

1

=

[

α

i

j

t

+

1

]

N

×

N

A^{t+1} = [\alpha_{ij}^{t+1}]_{N\times N}

At+1=[αijt+1]N×N

为了求

B

t

+

1

B^{t+1}

Bt+1,我们需要求

b

j

(

k

)

t

+

1

b_j(k)^{t+1}

bj(k)t+1

在每行和为1的约束下,得到拉格朗日函数如下

L

(

b

,

η

)

=

∑

I

[

∑

t

=

1

T

l

o

g

(

b

i

t

(

o

t

)

)

]

⋅

P

(

O

,

I

∣

λ

t

)

+

∑

j

=

1

N

η

j

⋅

[

∑

k

=

1

M

b

j

(

k

)

−

1

]

=

∑

j

=

1

N

∑

t

=

1

T

log

b

j

(

o

t

)

P

(

O

,

i

t

=

q

j

∣

λ

t

)

+

∑

j

=

1

N

η

j

⋅

[

∑

k

=

1

M

b

j

(

k

)

−

1

]

\begin{aligned} L(b,\eta) &= \sum_{I}[\sum_{t=1}^Tlog(b_{i_t}(o_t))]\cdot P(O,I|\lambda^t) + \sum_{j=1}^{N}\eta_j\cdot [\sum_{k=1}^{M}b_j(k)-1]\\ &=\sum_{j=1}^{N} \sum_{t=1}^{T} \log b_{j}\left(o_{t}\right) P(O, i_{t}=q_j \mid \lambda^t)+\sum_{j=1}^{N}\eta_j\cdot [\sum_{k=1}^{M}b_j(k)-1]\\ \end{aligned}

L(b,η)=I∑[t=1∑Tlog(bit(ot))]⋅P(O,I∣λt)+j=1∑Nηj⋅[k=1∑Mbj(k)−1]=j=1∑Nt=1∑Tlogbj(ot)P(O,it=qj∣λt)+j=1∑Nηj⋅[k=1∑Mbj(k)−1]

然后对

b

j

(

k

)

b_j(k)

bj(k)求偏导

∂

L

∂

b

j

(

k

)

=

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

b

j

(

k

)

+

η

j

=

0

\begin{aligned} \frac{\partial L}{\partial b_j(k)} = \sum_{t=1}^{T}\frac{P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)}{b_j(k)}+\eta_j = 0 \end{aligned}

∂bj(k)∂L=t=1∑Tbj(k)P(O,it=qj∣λt)⋅I(ot=vk)+ηj=0

∑ t = 1 T P ( O , i t = q j ∣ λ t ) ⋅ I ( o t = v k ) + η j ⋅ b j ( k ) = 0 \sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)+\eta_j\cdot b_j(k)= 0 t=1∑TP(O,it=qj∣λt)⋅I(ot=vk)+ηj⋅bj(k)=0

对k求和

∑

k

=

1

M

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

+

η

j

=

0

\sum_{k=1}^{M}\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)+\eta_j = 0

k=1∑Mt=1∑TP(O,it=qj∣λt)⋅I(ot=vk)+ηj=0

得

η

j

=

−

∑

k

=

1

M

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

=

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

∑

k

=

1

M

I

(

o

t

=

v

k

)

=

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

\begin{aligned} \eta_j &= -\sum_{k=1}^{M}\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k) \\ &=\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\sum_{k=1}^MI(o_t=v_k) \\ &=\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t) \end{aligned}

ηj=−k=1∑Mt=1∑TP(O,it=qj∣λt)⋅I(ot=vk)=t=1∑TP(O,it=qj∣λt)k=1∑MI(ot=vk)=t=1∑TP(O,it=qj∣λt)

从而得

b

j

(

k

)

t

+

1

=

−

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

η

j

=

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

\begin{aligned} b_j(k)^{t+1} &= -\frac{\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)}{\eta_j} \\ &=\frac{\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)}{\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)} \end{aligned}

bj(k)t+1=−ηj∑t=1TP(O,it=qj∣λt)⋅I(ot=vk)=∑t=1TP(O,it=qj∣λt)∑t=1TP(O,it=qj∣λt)⋅I(ot=vk)

综上,得到递推式如下:

π

i

t

+

1

=

P

(

O

∣

i

1

=

q

i

,

λ

t

)

P

(

O

∣

λ

t

)

α

i

j

t

+

1

=

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

i

,

i

t

=

j

∣

λ

t

)

∑

t

=

2

T

P

(

O

,

i

t

−

1

=

q

i

∣

λ

t

)

b

j

(

k

)

t

+

1

=

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

⋅

I

(

o

t

=

v

k

)

∑

t

=

1

T

P

(

O

,

i

t

=

q

j

∣

λ

t

)

\begin{aligned} &\pi_i^{t+1} = \frac{P(O|i_1=q_i,\lambda^t)}{P(O|\lambda^t)} \\ \\ &\alpha_{ij}^{t+1} =\frac{\sum_{t=2}^{T}P\left(O, i_{t-1}=i, i_{t}=j \mid \lambda^t\right)}{\sum_{t=2}^{T}P(O,i_{t-1}=q_i|\lambda^t)} \\ \\ &b_j(k)^{t+1} = \frac{\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)\cdot I(o_t=v_k)}{\sum_{t=1}^{T}P(O,i_t=q_j\mid \lambda^t)} \end{aligned}

πit+1=P(O∣λt)P(O∣i1=qi,λt)αijt+1=∑t=2TP(O,it−1=qi∣λt)∑t=2TP(O,it−1=i,it=j∣λt)bj(k)t+1=∑t=1TP(O,it=qj∣λt)∑t=1TP(O,it=qj∣λt)⋅I(ot=vk)

定义

ξ

t

(

i

,

j

)

=

p

(

O

,

i

t

=

q

i

,

i

t

+

1

=

q

j

∣

λ

t

)

P

(

O

∣

λ

t

)

\begin{aligned} \xi_t(i,j) = \frac{p(O,i_{t}=q_i,i_{t+1}=q_j|\lambda^t)}{P(O|\lambda^t)} \end{aligned}

ξt(i,j)=P(O∣λt)p(O,it=qi,it+1=qj∣λt)

γ t ( i ) = P ( O ∣ i t = q i , λ t ) P ( O ∣ λ t ) \gamma_t(i) = \frac{P(O|i_t=q_i,\lambda^t)}{P(O|\lambda^t)} γt(i)=P(O∣λt)P(O∣it=qi,λt)

于是

π

i

t

+

1

=

γ

1

(

i

)

α

i

j

t

+

1

=

∑

t

=

1

T

−

1

ξ

t

(

i

,

j

)

∑

1

T

−

1

γ

t

(

i

)

b

j

(

k

)

t

+

1

=

∑

t

=

1

T

γ

t

(

j

)

⋅

I

(

o

t

=

v

k

)

∑

t

=

1

T

γ

t

(

j

)

\begin{aligned} &\pi_i^{t+1} =\gamma_1(i) \\ \\ &\alpha_{ij}^{t+1} =\frac{\sum_{t=1}^{T-1}\xi_t(i,j)}{\sum_{1}^{T-1}\gamma_t(i)} \\ \\ &b_j(k)^{t+1} = \frac{\sum_{t=1}^{T}\gamma_t(j)\cdot I(o_t=v_k)}{\sum_{t=1}^{T}\gamma_t(j)} \end{aligned}

πit+1=γ1(i)αijt+1=∑1T−1γt(i)∑t=1T−1ξt(i,j)bj(k)t+1=∑t=1Tγt(j)∑t=1Tγt(j)⋅I(ot=vk)

算法过程总结

(1)初始化

选取 α i j 0 , b j ( k ) 0 , π i 0 \alpha_{ij}^0,b_j(k)^0,\pi_i^0 αij0,bj(k)0,πi0

(2)递推

π

i

t

+

1

=

γ

1

(

i

)

α

i

j

t

+

1

=

∑

t

=

1

T

−

1

ξ

t

(

i

,

j

)

∑

1

T

−

1

γ

t

(

i

)

b

j

(

k

)

t

+

1

=

∑

t

=

1

T

γ

t

(

j

)

⋅

I

(

o

t

=

v

k

)

∑

t

=

1

T

γ

t

(

j

)

\begin{aligned} &\pi_i^{t+1} =\gamma_1(i) \\ \\ &\alpha_{ij}^{t+1} =\frac{\sum_{t=1}^{T-1}\xi_t(i,j)}{\sum_{1}^{T-1}\gamma_t(i)} \\ \\ &b_j(k)^{t+1} = \frac{\sum_{t=1}^{T}\gamma_t(j)\cdot I(o_t=v_k)}{\sum_{t=1}^{T}\gamma_t(j)} \end{aligned}

πit+1=γ1(i)αijt+1=∑1T−1γt(i)∑t=1T−1ξt(i,j)bj(k)t+1=∑t=1Tγt(j)∑t=1Tγt(j)⋅I(ot=vk)

(3)终止

得到模型参数 λ T = ( π i T , A T , B T ) \lambda^T = (\pi_i^T,A^T,B^T) λT=(πiT,AT,BT)

补充:忘了讲 γ \gamma γ和 ξ \xi ξ的求法,读者可尝试自行推导,或者查看《统计学习方法》书中hmm本节内容,然后可与下方_gamma函数和_xi对照

算法实现

我们还是以盒子和球模型为例

生成观测序列

def generate(T):

boxes = [

[0,0,0,0,0,1,1,1,1,1],

[0,0,0,1,1,1,1,1,1,1],

[0,0,0,0,0,0,1,1,1,1],

[0,0,0,0,0,0,0,0,1,1]

]

I = []

O = []

# 第一步,选择一个盒子

index = np.random.choice(len(boxes))

for t in range(T):

I.append(index)

# 第二步,抽球

O.append(np.random.choice(boxes[index]))

# 第三步,重新选择盒子

if index==0:

index = 1

elif index==1 or index==2:

index = np.random.choice([index-1,index-1,index+1,index+1,index+1])

elif index==3:

index = np.random.choice([index,index-1])

return I,O

定义模型

class Model:

def __init__(self,M,N) -> None:

# 状态集合

self.N = N

# 观测集合

self.M = M

def _gamma(self,t,i):

return self.alpha[t][i]*self.beta[t][i]/np.sum(self.alpha[t]*self.beta[t])

def _xi(self,t,i,j):

fenmu = 0

for i1 in range(self.N):

for j1 in range(self.N):

fenmu += self.alpha[t][i1]*self.A[i1][j1]*self.B[j1][self.O[t+1]]*self.beta[t+1][j1]

return (self.alpha[t][i]*self.A[i][j]*self.B[j][self.O[t+1]]*self.beta[t+1][j])/fenmu

def backward(self):

# 初值

self.beta = np.ones(shape=(self.T,self.N))

# 递推

for t in reversed(range(1,self.T)):

for i in range(self.N):

for j in range(self.N):

self.beta[t-1][i] += self.beta[t][j]*self.B[j][self.O[t]]*self.A[i][j]

def forward(self):

self.alpha = np.zeros(shape=(self.T,self.N),dtype=float)

# 初值

for i in range(self.N):

self.alpha[0][i] = self.pi[i]*self.B[i][self.O[0]]

# 递推

for t in range(self.T-1):

for i in range(self.N):

for j in range(self.N):

self.alpha[t+1][i] += self.alpha[t][j]*self.A[j][i]

self.alpha[t+1][i] = self.alpha[t+1][i]*self.B[i][self.O[t]]

def train(self,O,max_iter=100,toler=1e-5):

self.O = O

self.T = len(O)

# 初始化

# pi是一个N维的向量

self.pi = np.ones(shape=(self.N,))/self.N

# A是一个NxN的矩阵

self.A = np.ones(shape=(self.N,self.N))/self.N

# B是一个NxM的矩阵

self.B = np.ones(shape=(self.N,self.M))/self.M

# pi是一个N维的向量

pi = np.ones(shape=(self.N,))/self.N

# A是一个NxN的矩阵

A = np.ones(shape=(self.N,self.N))/self.N

# B是一个NxM的矩阵

B = np.ones(shape=(self.N,self.M))/self.M

## 递推

for epoch in range(max_iter):

# 计算前向概率和后向概率

self.forward()

self.backward()

# 计算gamma

for i in range(self.N):

pi[i] = self._gamma(1,i)

for j in range(self.N):

fenzi = 0

fenmu = 0

for t2 in range(self.T-1):

fenzi += self._xi(t2,i,j)

fenmu += self._gamma(t2,j)

A[i][j] = fenzi/fenmu

for j in range(self.N):

for k in range(self.M):

fenzi = 0

fenmu = 0

for t2 in range(self.T):

fenzi += self._gamma(t2,j)*(self.O[t2]==k)

fenmu += self._gamma(t2,j)

B[j][k] = fenzi/fenmu

if np.max(abs(self.pi - pi)) < toler and \

np.max(abs(self.A - A)) < toler and \

np.max(abs(self.B - B)) < toler:

self.pi = pi

self.A = A

self.B = B

return pi,A,B

self.pi = pi.copy()

self.A = A.copy()

self.B = B.copy()

return self.pi,self.A,self.B

def predict(self,O):

self.O = O

self.T = len(O)

self.forward()

return np.sum(self.alpha[self.T-1])

训练

model = Model(2,4)

model.train(O,toler=1e-10)

输出如下

(array([0.25, 0.25, 0.25, 0.25]),

array([[0.25, 0.25, 0.25, 0.25],

[0.25, 0.25, 0.25, 0.25],

[0.25, 0.25, 0.25, 0.25],

[0.25, 0.25, 0.25, 0.25]]),

array([[0.625, 0.375],

[0.625, 0.375],

[0.625, 0.375],

[0.625, 0.375]]))

完全不对劲,但是可以理解。

监督式学习算法-极大似然估计

推导过Baum-Welch算法之后推导极大似然估计就轻松很多了

假设我们的样本如下

{

(

O

1

,

I

1

)

,

(

O

2

,

I

2

)

,

⋯

,

(

O

S

,

I

S

)

}

\left\{\left(O_{1}, I_{1}\right),\left(O_{2}, I_{2}\right), \cdots,\left(O_{S}, I_{S}\right)\right\}

{(O1,I1),(O2,I2),⋯,(OS,IS)}

根据极大似然估计则有

λ

^

=

a

r

g

m

a

x

λ

P

(

O

,

I

∣

λ

)

=

a

r

g

m

a

x

λ

∏

j

=

1

S

P

(

O

j

,

I

j

∣

λ

)

=

a

r

g

m

a

x

λ

∑

j

=

1

S

l

o

g

P

(

O

j

,

I

j

∣

λ

)

=

a

r

g

m

a

x

λ

∑

j

=

1

S

l

o

g

(

∏

t

=

1

T

b

i

t

j

(

o

t

)

⋅

∏

t

=

2

T

α

i

t

−

1

t

t

j

⋅

π

i

1

j

)

=

a

r

g

m

a

x

λ

∑

j

=

1

S

[

∑

t

=

1

T

l

o

g

(

b

i

t

j

(

o

t

)

)

+

∑

t

=

2

T

l

o

g

(

α

i

t

−

1

t

t

j

)

+

l

o

g

(

π

i

1

j

)

]

\begin{aligned} \hat\lambda &= \underset{\lambda}{argmax}\ P(O,I|\lambda) \\ &= \underset{\lambda}{argmax}\ \prod_{j=1}^{S}P(O_j,I_j|\lambda) \\ &= \underset{\lambda}{argmax}\ \sum_{j=1}^{S}logP(O_j,I_j|\lambda) \\ &= \underset{\lambda}{argmax}\ \sum_{j=1}^{S}log\left(\prod_{t=1}^{T}b_{i_t}^j(o_t)\cdot \prod_{t=2}^{T}\alpha_{i_{t-1}t_t}^j\cdot \pi_{i_1}^j\right) \\ &=\underset{\lambda}{argmax}\ \sum_{j=1}^{S}\left[\sum_{t=1}^{T}log(b_{i_t}^j(o_t))+ \sum_{t=2}^{T}log(\alpha_{i_{t-1}t_t}^j)+log(\pi_{i_1}^j)\right] \\ \end{aligned}

λ^=λargmax P(O,I∣λ)=λargmax j=1∏SP(Oj,Ij∣λ)=λargmax j=1∑SlogP(Oj,Ij∣λ)=λargmax j=1∑Slog(t=1∏Tbitj(ot)⋅t=2∏Tαit−1ttj⋅πi1j)=λargmax j=1∑S[t=1∑Tlog(bitj(ot))+t=2∑Tlog(αit−1ttj)+log(πi1j)]

我们先估计

π

\pi

π(i1省略不写),与

π

\pi

π相关的只有最后一项

π

^

=

a

r

g

m

a

x

π

∑

j

=

1

S

log

π

j

\hat{\pi}=\underset{\pi}{argmax} \sum_{j=1}^{S} \log \pi^{j}

π^=πargmaxj=1∑Slogπj

对

π

\pi

π有约束

∑

i

=

1

N

π

i

=

1

\sum_{i=1}^{N}\pi_i = 1

i=1∑Nπi=1

记拉格朗日函数

L

(

π

,

η

)

=

∑

j

=

1

S

log

π

j

+

η

⋅

(

∑

i

=

1

N

π

i

−

1

)

L(\pi, \eta)=\sum_{j=1}^{S} \log \pi^{j}+\eta \cdot\left(\sum_{i=1}^{N} \pi_i-1\right)

L(π,η)=j=1∑Slogπj+η⋅(i=1∑Nπi−1)

对

π

i

\pi_i

πi求偏导

∂

L

∂

π

i

=

∑

j

=

1

S

I

(

I

1

j

=

i

)

π

i

+

η

=

0

\frac{\partial L}{\partial \pi_{i}}=\frac{\sum_{j=1}^{S} I\left(I_{1 j}=i\right)}{\pi_{i}}+\eta=0

∂πi∂L=πi∑j=1SI(I1j=i)+η=0

即

∑

j

=

1

S

I

(

I

1

j

=

i

)

+

η

⋅

π

i

=

0

\sum_{j=1}^{S} I\left(I_{1 j}=i\right) + \eta \cdot \pi_i = 0

j=1∑SI(I1j=i)+η⋅πi=0

对i求和

∑

i

=

1

N

∑

j

=

1

S

I

(

I

1

j

=

i

)

+

η

⋅

∑

i

=

1

N

π

i

=

0

\sum_{i=1}^{N}\sum_{j=1}^{S}I(I_{1j}=i) + \eta \cdot \sum_{i=1}^{N}\pi_i = 0

i=1∑Nj=1∑SI(I1j=i)+η⋅i=1∑Nπi=0

即

∑

j

=

1

S

∑

i

=

1

N

I

(

I

1

j

=

i

)

+

η

=

0

∑

j

=

1

S

∑

i

=

1

N

I

(

I

1

j

=

i

)

+

η

=

0

∑

j

=

1

S

1

+

η

=

0

η

=

−

S

\begin{aligned} &\sum_{j=1}^{S}\sum_{i=1}^{N}I(I_{1j}=i) + \eta = 0 \\ &\sum_{j=1}^{S}\sum_{i=1}^{N}I(I_{1j}=i) + \eta = 0 \\ &\sum_{j=1}^{S}1 +\eta = 0 \\ &\eta = -S \end{aligned}

j=1∑Si=1∑NI(I1j=i)+η=0j=1∑Si=1∑NI(I1j=i)+η=0j=1∑S1+η=0η=−S

所以

π

^

i

=

−

∑

j

=

1

S

I

(

I

1

j

=

i

)

η

=

∑

j

=

1

S

I

(

I

1

j

=

i

)

S

\hat \pi_i = -\frac{\sum_{j=1}^{S} I\left(I_{1 j}=i\right)}{\eta} = \frac{\sum_{j=1}^{S} I\left(I_{1 j}=i\right)}{S}

π^i=−η∑j=1SI(I1j=i)=S∑j=1SI(I1j=i)

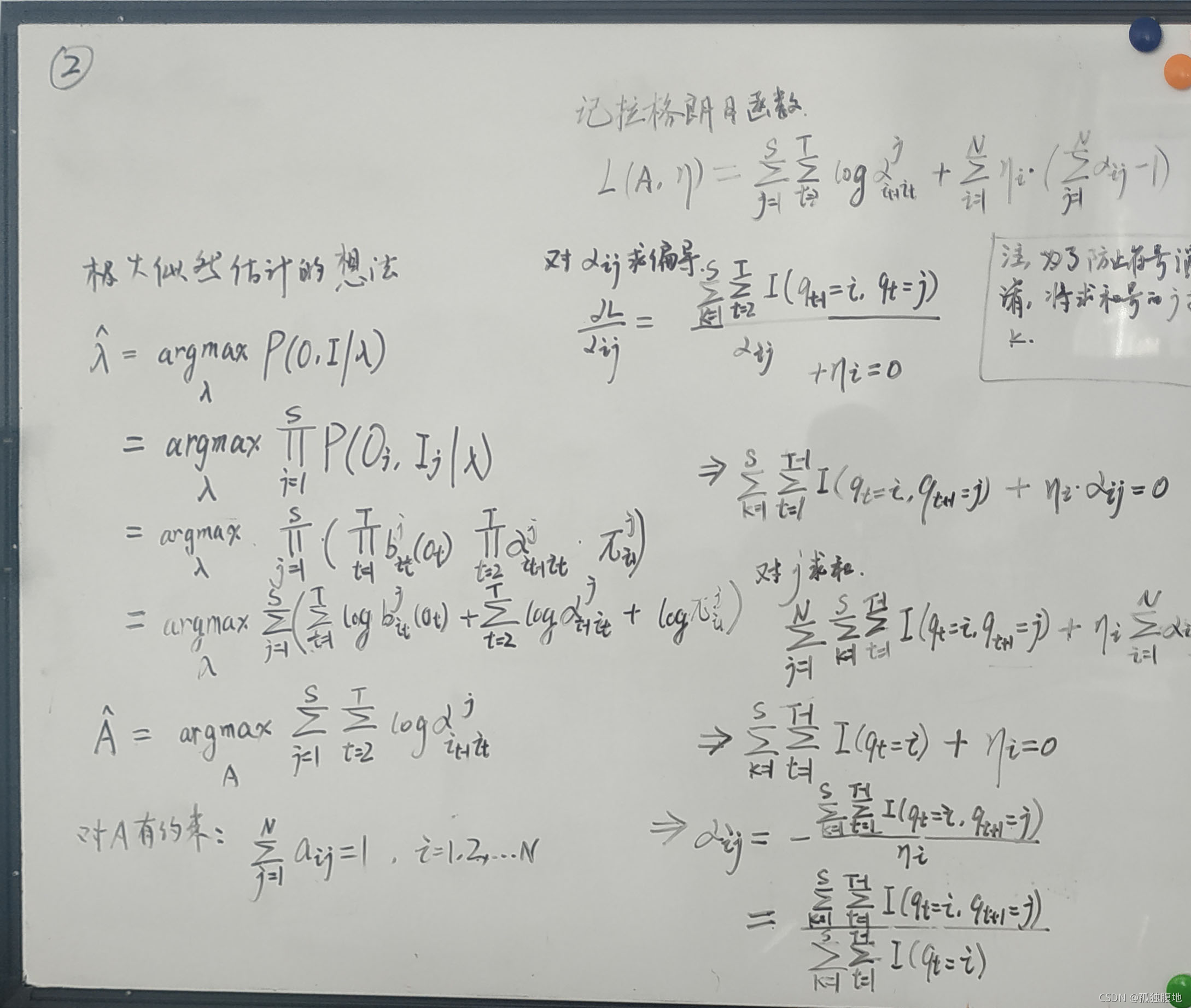

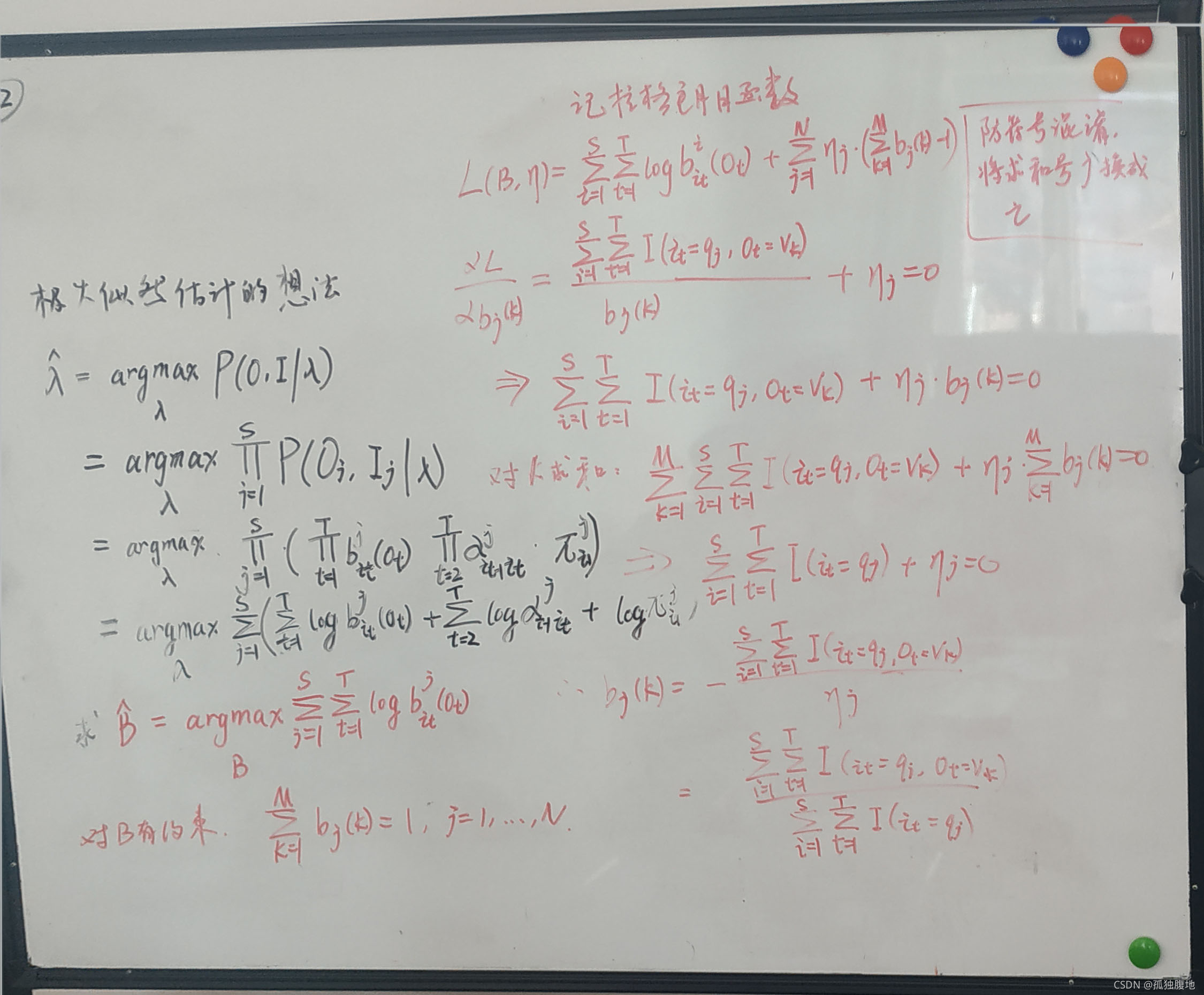

剩下A,B的推导过程,请允许我贴两张图,打公式打烦了

图里面写的有一些问题,希望读者阅读过程中可以自行发现,如果阅读的时候没发现,你看到下面也会发现的

于是我们得到最终估计为

π

^

i

=

∑

j

=

1

S

I

(

i

1

j

=

q

i

)

S

α

^

i

j

=

∑

k

=

1

S

∑

t

=

1

T

−

1

I

(

i

t

k

=

q

i

,

i

t

+

1

k

=

q

j

)

∑

k

=

1

S

∑

t

=

1

T

−

1

I

(

i

t

k

=

q

i

)

b

^

j

(

k

)

=

∑

i

=

1

S

∑

t

=

1

T

I

(

i

t

i

=

q

j

,

o

t

i

=

v

k

)

∑

i

=

1

S

∑

t

=

1

T

I

(

i

t

i

=

q

j

)

\begin{aligned} &\hat \pi_i = \frac{\sum_{j=1}^{S} I\left(i_1^j=q_i\right)}{S} \\ &\hat \alpha_{ij} = \frac{\sum_{k=1}^{S} \sum_{t=1}^{T-1} I\left(i_{t}^k=q_i, i_{t+1}^k=q_j\right)}{\sum_{k=1}^{S} \sum_{t=1}^{T-1} I\left(i_{t}^k=q_i\right)} \\ &\hat b_j(k) =\frac{\sum_{i=1}^{S} \sum_{t=1}^{T} I\left(i_t^i=q_{j}, o_{t}^i=v_{k}\right) }{\sum_{i=1}^{S} \sum_{t=1}^{T} I\left(i_{t}^i=q_{j}\right)} \end{aligned}

π^i=S∑j=1SI(i1j=qi)α^ij=∑k=1S∑t=1T−1I(itk=qi)∑k=1S∑t=1T−1I(itk=qi,it+1k=qj)b^j(k)=∑i=1S∑t=1TI(iti=qj)∑i=1S∑t=1TI(iti=qj,oti=vk)

算法实现

生成数据

data = []

for i in range(100):

I,O = generate(100)

data.append([I,O])

定义模型

class SupervisedModel:

def __init__(self,M,N) -> None:

self.M = M

self.N = N

def train(self,data):

# data:Sx2xT

# [[[i1,i2,...],[o1,o2,...]],[],[]]

self.pi = np.zeros(shape=(self.N,))

self.A = np.zeros(shape=(self.N,self.N))

self.B = np.zeros(shape=(self.N,self.M))

S = len(data)

self.T = len(data[0][0])

# 求 pi

for i in range(self.N):

for j in range(S):

self.pi[i] += data[j][0][0]==i

self.pi[i] = self.pi[i]/S

# 求 A

for i in range(self.N):

for j in range(self.N):

fenzi = 0

fenmu = 0

for k in range(S):

for t in range(self.T-1):

fenzi += data[k][0][t]==i and data[k][0][t+1]==j

fenmu += data[k][0][t]==i

self.A[i][j] = fenzi/fenmu

# 求 B

for j in range(self.N):

for k in range(self.M):

fenzi = 0

fenmu = 0

for i in range(S):

for t in range(self.T):

fenzi += data[i][0][t]==j and data[i][1][t]==k

fenmu += data[i][0][t]==j

self.B[j][k] = fenzi/fenmu

return self.pi,self.A,self.B

def predict(self,O):

# 初值

beta = np.ones(shape=(self.T,))

# 递推

for o in reversed(O):

temp = np.zeros_like(beta)

for i in range(self.T):

for j in range(self.T):

temp[i] += beta[j]*self.B[j][o]*self.A[i][j]

beta = temp

# 终止

res = 0

for i in range(self.T):

res += self.B[i][O[0]]*beta[i]*self.pi[i]

return res

训练模型

model = SupervisedModel(2,4)

model.train(data)

(array([0.19, 0.25, 0.2 , 0.36]),

array([[0. , 1. , 0. , 0. ],

[0.38930233, 0. , 0.61069767, 0. ],

[0. , 0.4073957 , 0. , 0.5926043 ],

[0. , 0. , 0.49933066, 0.50066934]]),

array([[0.47313084, 0.52686916],

[0.30207852, 0.69792148],

[0.60162602, 0.39837398],

[0.80851627, 0.19148373]]))

可以看到监督学习的效果非常好

1169

1169

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?