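This post mainly covers pulling an RTMP stream with srs_librtmp, and touches briefly on librtmp. For librtmp in depth, see the blog of Lei Xiaohua (雷霄骅): https://blog.csdn.net/leixiaohua1020/article/details/12971635

This post also saves the pulled RTMP stream as raw H.264, so make sure the video in the stream is AVC (H.264).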

Pulling a stream with librtmp

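librtmp pulls a stream through int RTMP_Read(RTMP *r, char *buf, int size). The data it returns is already in FLV format, so you can save it to a file and play it directly.

Internally, RTMP_Read calls Read_1_Packet, which reads one RTMPPacket from the network and repackages it as an FLV tag. RTMP_Read also prepends the 13-byte FLV header at the start of the output.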

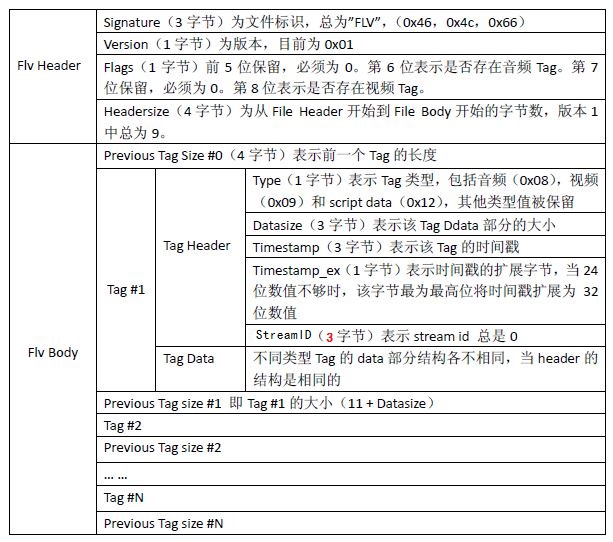

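For the FLV format, I am borrowing the table from Lei Xiaohua's blog (details at https://blog.csdn.net/leixiaohua1020/article/details/17934487). It explains the format far more vividly and concretely than I could.

The FLV header appears in the librtmp source: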

```c
static const char flvHeader[] = { 'F', 'L', 'V', 0x01,
    0x00, /* 0x04 means audio present, 0x01 means video present */
    0x00, 0x00, 0x00, 0x09,
    0x00, 0x00, 0x00, 0x00
};
```

As the figure shows, the FLV header itself is only 9 bytes. So why are there 13 bytes here?

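After the 9-byte FLV header comes a sequence of tags. Each tag is preceded by a 4-byte field that holds the size of the previous tag (PreviousTagSize). The first tag also needs these 4 bytes, but no tag comes before it, so they are all 0x00. Those 4 zero bytes are the last 4 bytes of flvHeader in the source.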
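The 9 + 4 byte layout can be made concrete with a minimal C++ sketch that builds the same 13 bytes. The function name makeFlvFileHeader is hypothetical and is not part of librtmp or srs_librtmp. The flag bits follow the FLV header layout above: 0x04 = audio present, 0x01 = video present.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical helper: the 9-byte FLV header followed by the 4-byte
// PreviousTagSize0, i.e. the same 13 bytes that librtmp's flvHeader[] holds.
std::array<uint8_t, 13> makeFlvFileHeader(bool hasAudio, bool hasVideo) {
    std::array<uint8_t, 13> h{};          // value-initialised: all bytes 0
    h[0] = 'F'; h[1] = 'L'; h[2] = 'V';   // signature
    h[3] = 0x01;                          // version
    // flags: UB[5] reserved, UB[1] audio present, UB[1] reserved, UB[1] video present
    h[4] = static_cast<uint8_t>((hasAudio ? 0x04 : 0x00) | (hasVideo ? 0x01 : 0x00));
    h[8] = 0x09;                          // DataOffset = 9 (big-endian UI32 in h[5..8])
    // h[9..12] stay 0: PreviousTagSize0, since no tag precedes the first one
    return h;
}
```

With both audio and video present, the flags byte comes out as 0x05.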

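Pulling a stream with srs_librtmp

This post uses SRS v2.0-r6. v2.0-r7 added IPv6 support, which I found awkward: connecting to the RTMP server kept failing, though that may well be my own mistake.

For how to export the srs_librtmp client from SRS, see https://github.com/ossrs/srs/wiki/v2_CN_SrsLibrtmp#export-srs-librtmp

After exporting, you will find several demos by the SRS author under research/librtmp. One of them, srs_rtmp_dump.c, pulls a stream from an RTMP server and saves it as an FLV file.

Below is the demo source, slightly simplified:

[Important] The code below compiles and runs under VS2017, but the FLV file it saves there does not play. On Linux the saved file plays normally.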

```c
#include <stdio.h>
#include <stdlib.h>
#include "srs_librtmp.h" // header exported from SRS; adjust the name/path to match your export

int main(int argc, char** argv)
{
    srs_flv_t flv = NULL;
    srs_rtmp_t rtmp = NULL;
    const char* rtmp_url = "rtmp://192.168.96.128:1935/live/aaa"; // change to your own RTMP URL
    const char* output_flv = "D:\\test.flv"; // change to your own output FLV path

    rtmp = srs_rtmp_create(rtmp_url);

    if (srs_rtmp_dns_resolve(rtmp) != 0) {
        srs_human_trace("dns resolve failed.");
        goto rtmp_destroy;
    }

    // connect to the RTMP server
    if (srs_rtmp_connect_server(rtmp) != 0) {
        srs_human_trace("connect to server failed.");
        goto rtmp_destroy;
    }

    // simple RTMP handshake
    if (srs_rtmp_do_simple_handshake(rtmp) != 0) {
        srs_human_trace("simple handshake failed.");
        goto rtmp_destroy;
    }
    srs_human_trace("do simple handshake success");

    if (srs_rtmp_connect_app(rtmp) != 0) {
        srs_human_trace("connect vhost/app failed.");
        goto rtmp_destroy;
    }
    srs_human_trace("connect vhost/app success");

    // ask the server to start sending the stream
    if (srs_rtmp_play_stream(rtmp) != 0) {
        srs_human_trace("play stream failed.");
        goto rtmp_destroy;
    }
    srs_human_trace("play stream success");

    // open a writable file
    if (output_flv) {
        flv = srs_flv_open_write(output_flv);
    }

    // write the FLV header; note that only 9 bytes are passed in
    if (flv) {
        // flv header
        char header[9];
        // 3bytes, signature, "FLV",
        header[0] = 'F';
        header[1] = 'L';
        header[2] = 'V';
        // 1bytes, version, 0x01,
        header[3] = 0x01;
        // 1bytes, flags, UB[5] 0, UB[1] audio present, UB[1] 0, UB[1] video present.
        header[4] = 0x05; // audio + video (0x04 | 0x01); the original demo writes 0x03 here
        // 4bytes, dataoffset
        header[5] = 0x00;
        header[6] = 0x00;
        header[7] = 0x00;
        header[8] = 0x09;

        // header[] holds only 9 bytes, but srs_flv_write_header also writes the
        // 4-byte PreviousTagSize0 (four 0x00 bytes), 13 bytes in total
        if (srs_flv_write_header(flv, header) != 0) {
            srs_human_trace("write flv header failed.");
            goto rtmp_destroy;
        }
    }

    // loop: receive packets and write them into the FLV file
    for (;;) {
        int size;
        char type;
        char* data;
        u_int32_t timestamp;

        // read one message together with its timestamp and type
        if (srs_rtmp_read_packet(rtmp, &type, &timestamp, &data, &size) != 0) {
            srs_human_trace("read rtmp packet failed.");
            goto rtmp_destroy;
        }

        // only audio, video and script messages are written to the FLV file
        // we only write some types of messages to flv file.
        int is_flv_msg = type == SRS_RTMP_TYPE_AUDIO
            || type == SRS_RTMP_TYPE_VIDEO || type == SRS_RTMP_TYPE_SCRIPT;

        // of the script messages we keep only onMetaData, the first tag of an FLV file
        // for script data, ignore except onMetaData
        if (type == SRS_RTMP_TYPE_SCRIPT) {
            if (!srs_rtmp_is_onMetaData(type, data, size)) {
                is_flv_msg = 0;
            }
        }

        if (flv) {
            if (is_flv_msg) {
                // write it into the FLV file
                if (srs_flv_write_tag(flv, type, timestamp, data, size) != 0) {
                    srs_human_trace("dump rtmp packet failed.");
                    goto rtmp_destroy;
                }
            } else {
                srs_human_trace("drop message type=%#x, size=%dB", type, size);
            }
        }

        free(data);
    }

rtmp_destroy:
    srs_rtmp_destroy(rtmp);
    srs_flv_close(flv);
    srs_human_trace("completed");

    return 0;
}
```

I have annotated the code with my own understanding; corrections are welcome. The flags byte header[4] of the FLV header should be 0x05 (audio 0x04 | video 0x01), but the demo writes 0x03. ffplay evidently ignores this field, because the file plays either way.

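Parsing the video Tag Data

The srs_librtmp source shows that srs_flv_write_tag takes each received data buffer, wraps it into an FLV tag, and writes the tag into the FLV file. It follows that the data returned by srs_rtmp_read_packet is exactly the Tag Data of an FLV tag.

Tag Data comes in 3 kinds: Audio Tag Data, Video Tag Data and Script Tag Data (script/metadata such as onMetaData). This post covers only Video Tag Data.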
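The sketch below illustrates what that wrapping involves by building one FLV tag from a tag body. A tag consists of the 11-byte tag header (TagType, DataSize, Timestamp, TimestampExtended, StreamID), the body, and the trailing 4-byte PreviousTagSize. makeFlvTag is a hypothetical helper, not srs_librtmp's actual implementation. The tag type values 8/9/18 are the FLV spec's audio/video/script types.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical helper: wrap one tag body into a complete FLV tag followed by
// its PreviousTagSize field. Layout per the FLV spec:
// TagType(1) DataSize(3) Timestamp(3) TimestampExtended(1) StreamID(3) Data PreviousTagSize(4)
std::string makeFlvTag(uint8_t tagType, uint32_t timestampMs, const std::string &body) {
    const uint32_t dataSize = static_cast<uint32_t>(body.size());
    std::string tag;
    tag.push_back(static_cast<char>(tagType));                     // 8 audio, 9 video, 18 script
    tag.push_back(static_cast<char>((dataSize >> 16) & 0xff));     // DataSize, big-endian UI24
    tag.push_back(static_cast<char>((dataSize >> 8) & 0xff));
    tag.push_back(static_cast<char>(dataSize & 0xff));
    tag.push_back(static_cast<char>((timestampMs >> 16) & 0xff));  // lower 24 bits of timestamp
    tag.push_back(static_cast<char>((timestampMs >> 8) & 0xff));
    tag.push_back(static_cast<char>(timestampMs & 0xff));
    tag.push_back(static_cast<char>((timestampMs >> 24) & 0xff));  // TimestampExtended: upper 8 bits
    tag.append(3, '\0');                                           // StreamID, always 0
    tag += body;
    const uint32_t prev = 11 + dataSize;                           // size of the tag just written
    for (int shift = 24; shift >= 0; shift -= 8)
        tag.push_back(static_cast<char>((prev >> shift) & 0xff));
    return tag;
}
```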

| Field | Type | Comment |
|---|---|---|
| Frame Type | UB[4] | Type of video frame. The following values are defined: 1 = key frame (for AVC, a seekable frame); 2 = inter frame (for AVC, a non-seekable frame); 3 = disposable inter frame (H.263 only); 4 = generated key frame (reserved for server use only); 5 = video info/command frame |
| CodecID | UB[4] | Codec Identifier. The following values are defined: 2 = Sorenson H.263; 3 = Screen video; 4 = On2 VP6; 5 = On2 VP6 with alpha channel; 6 = Screen video version 2; 7 = AVC |
| AVCPacketType | IF CodecID == 7: UI8 | The following values are defined: 0 = AVC sequence header; 1 = AVC NALU; 2 = AVC end of sequence |
| CompositionTime | IF CodecID == 7: SI24 | IF AVCPacketType == 1: composition time offset, ELSE 0. See ISO 14496-12, 8.15.3 for an explanation of composition times. The offset in an FLV file is always in milliseconds. |

The first byte of the VideoTagHeader is the byte immediately after StreamID. It carries the most basic information: the frame type and the video CodecID, as the table shows.

The VIDEODATA, i.e. the video payload, follows the VideoTagHeader. As with AAC audio, there is a special case. If the video format is AVC (H.264), the VideoTagHeader carries 4 extra bytes:

AVCPacketType and CompositionTime. AVCPacketType indicates what the following VIDEODATA (AVCVIDEOPACKET) contains:

IF AVCPacketType == 0: AVCDecoderConfigurationRecord (AVC sequence header)

IF AVCPacketType == 1: one or more NALUs (full frames are required)
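Here is a minimal sketch of reading these first 5 bytes of an AVC video tag body. It assumes the buffer starts at the VideoTagHeader, as the data from srs_rtmp_read_packet does. The struct and function names are hypothetical. CompositionTime is SI24, so it needs sign extension.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical names; field layout per the FLV VideoTagHeader table above.
struct AvcVideoTagHeader {
    uint8_t frameType;        // 1 = key frame, 2 = inter frame, ...
    uint8_t codecId;          // 7 = AVC
    uint8_t avcPacketType;    // 0 = sequence header, 1 = NALU, 2 = end of sequence
    int32_t compositionTime;  // SI24 in milliseconds, may be negative
};

// Returns false if the body is too short or the codec is not AVC.
bool parseAvcVideoTagHeader(const uint8_t *p, size_t size, AvcVideoTagHeader &out) {
    if (size < 5) return false;
    out.frameType = p[0] >> 4;            // high nibble
    out.codecId = p[0] & 0x0f;            // low nibble
    if (out.codecId != 7) return false;
    out.avcPacketType = p[1];
    // big-endian 24-bit value, then sign-extend it
    int32_t ct = (int32_t(p[2]) << 16) | (int32_t(p[3]) << 8) | p[4];
    if (ct & 0x800000) ct -= 0x1000000;
    out.compositionTime = ct;
    return true;
}
```

For example, a sequence header starts with 0x17 0x00 (key frame, AVC, AVCPacketType 0), and an ordinary P frame with 0x27 0x01.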

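The AVCDecoderConfigurationRecord contains the SPS and PPS, which are essential for H.264 decoding. They must be fed to the AVC decoder before any stream data, otherwise the decoder cannot decode. They must also be sent again whenever the decoder is stopped and restarted, for example on seek or when switching into or out of fast-forward/rewind. In an FLV file the AVCDecoderConfigurationRecord normally appears only once, as the first video tag. In some streams, however, the SPS and PPS change, so the record can appear several times.

Details: https://www.cnblogs.com/fuland/p/3884932.html

The AVCDecoderConfigurationRecord is defined in ISO 14496-15, 5.2.4.1. The original text follows: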

5.2.4.1 AVC decoder configuration record

This record contains the size of the length field used in each sample to indicate the length of its contained NAL units as well as the initial parameter sets. This record is externally framed (its size must be supplied by the structure which contains it).

This record contains a version field. This version of the specification defines version 1 of this record. Incompatible changes to the record will be indicated by a change of version number. Readers must not attempt to decode this record or the streams to which it applies if the version number is unrecognised.

Compatible extensions to this record will extend it and will not change the configuration version code. Readers should be prepared to ignore unrecognised data beyond the definition of the data they understand (e.g. after the parameter sets in this specification).

When used to provide the configuration of

a parameter set elementary stream,

a video elementary stream used in conjunction with a parameter set elementary stream,

the configuration record shall contain no sequence or picture parameter sets (numOfSequenceParameterSets and numOfPictureParameterSets shall both have the value 0).

The values for AVCProfileIndication, AVCLevelIndication, and the flags which indicate profile compatibility must be valid for all parameter sets of the stream described by this record. The level indication must indicate a level of capability equal to or greater than the highest level indicated in the included parameter sets; each profile compatibility flag may only be set if all the included parameter sets set that flag. The profile indication must indicate a profile to which the entire stream conforms. If the sequence parameter sets are marked with different profiles, and the relevant profile compatibility flags are all zero, then the stream may need examination to determine which profile, if any, the stream conforms to. If the stream is not examined, or the examination reveals that there is no profile to which the stream conforms, then the stream must be split into two or more sub-streams with separate configuration records in which these rules can be met.

5.2.4.1.1 Syntax

```
aligned(8) class AVCDecoderConfigurationRecord {
    unsigned int(8) configurationVersion = 1;
    unsigned int(8) AVCProfileIndication;
    unsigned int(8) profile_compatibility;
    unsigned int(8) AVCLevelIndication;
    bit(6) reserved = '111111'b;
    unsigned int(2) lengthSizeMinusOne;
    bit(3) reserved = '111'b;
    unsigned int(5) numOfSequenceParameterSets;
    for (i=0; i< numOfSequenceParameterSets; i++) {
        unsigned int(16) sequenceParameterSetLength ;
        bit(8*sequenceParameterSetLength) sequenceParameterSetNALUnit;
    }
    unsigned int(8) numOfPictureParameterSets;
    for (i=0; i< numOfPictureParameterSets; i++) {
        unsigned int(16) pictureParameterSetLength;
        bit(8*pictureParameterSetLength) pictureParameterSetNALUnit;
    }
}
```

5.2.4.1.2 Semantics

AVCProfileIndication contains the profile code as defined in ISO/IEC 14496-10.

profile_compatibility is a byte defined exactly the same as the byte which occurs between the profile_IDC and level_IDC in a sequence parameter set (SPS), as defined in ISO/IEC 14496-10.

AVCLevelIndication contains the level code as defined in ISO/IEC 14496-10.

lengthSizeMinusOne indicates the length in bytes of the NALUnitLength field in an AVC video sample or AVC parameter set sample of the associated stream minus one. For example, a size of one byte is indicated with a value of 0. The value of this field shall be one of 0, 1, or 3 corresponding to a length encoded with 1, 2, or 4 bytes, respectively.

numOfSequenceParameterSets indicates the number of SPSs that are used as the initial set of SPSs for decoding the AVC elementary stream.

sequenceParameterSetLength indicates the length in bytes of the SPS NAL unit as defined in ISO/IEC 14496-10.

sequenceParameterSetNALUnit contains a SPS NAL unit, as specified in ISO/IEC 14496-10. SPSs shall occur in order of ascending parameter set identifier with gaps being allowed.

numOfPictureParameterSets indicates the number of picture parameter sets (PPSs) that are used as the initial set of PPSs for decoding the AVC elementary stream.

pictureParameterSetLength indicates the length in bytes of the PPS NAL unit as defined in ISO/IEC 14496-10.

pictureParameterSetNALUnit contains a PPS NAL unit, as specified in ISO/IEC 14496-10. PPSs shall occur in order of ascending parameter set identifier with gaps being allowed.

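There is little material on Composition Time, so please correct me if my explanation is off:

A PES stream carries PTS (presentation timestamps) and DTS (decoding timestamps), both in units of 1/90000 s (a 90 kHz clock).

For example, suppose the I, P and B frames in display order are:

I B B B P B B B P ...

A B frame is coded with reference to both the preceding I (or P) frame and the following I (or P) frame. The I and P frames on both sides therefore have to be encoded before the B frames between them, which gives this encoding order:

I P B B B P B B B ...

A PES stream is normally transmitted in DTS order, so the transmission order equals the encoding order.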
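The reordering can be sketched as follows. The sketch assumes that each B frame references only the nearest I/P frame on either side, with no hierarchical B frames. Frames are represented only by their type letters, and displayToDecodeOrder is a hypothetical name.

```cpp
#include <cassert>
#include <string>

// Sketch under a simple-GOP assumption (no B-pyramids): convert frame types
// in display order, e.g. "IBBBPBBBP", into decode (= transmission, DTS) order.
std::string displayToDecodeOrder(const std::string &display) {
    std::string decode, pendingB;
    for (char f : display) {
        if (f == 'B') {
            pendingB += f;       // a B frame must wait for its future reference
        } else {                 // 'I' or 'P': an anchor frame
            decode += f;         // decode the future reference first...
            decode += pendingB;  // ...then the B frames displayed before it
            pendingB.clear();
        }
    }
    decode += pendingB;          // trailing B frames (open GOP), if any
    return decode;
}
```

Applied to the example above, "IBBBPBBBP" becomes "IPBBBPBBB".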

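In FLV, the tag timestamp says when the frame should be handed to the decoder, in milliseconds:

timestamp = DTS / 90.0

CompositionTime tells the renderer how long after the frame enters the decoder it should be displayed, also in milliseconds. Therefore:

compositionTime = (PTS - DTS) / 90.0

(Since PTS >= DTS, the result is not negative.)

(Some sources say that the composition offset is an unsigned int in MP4 but a signed int in FLV (SI24 in the table above), because in FLV the PTS may be smaller than the DTS.)

Reference: https://stackoverflow.com/questions/7054954/the-composition-timects-when-wrapping-h-264-nalu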
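Here is a small sketch of the two formulas above, assuming 90 kHz PTS/DTS values as input. toFlvTimes and FlvTimes are hypothetical names. The integer division truncates, whereas a real muxer might round.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: convert 90 kHz MPEG PTS/DTS into the two FLV fields.
struct FlvTimes {
    uint32_t timestampMs;       // tag Timestamp: when the frame goes to the decoder
    int32_t compositionTimeMs;  // CompositionTime (SI24): display delay after decoding
};

FlvTimes toFlvTimes(int64_t pts90k, int64_t dts90k) {
    FlvTimes t;
    t.timestampMs = static_cast<uint32_t>(dts90k / 90);                  // DTS / 90
    t.compositionTimeMs = static_cast<int32_t>((pts90k - dts90k) / 90);  // (PTS - DTS) / 90
    return t;
}
```

For example, a frame with DTS = 3600 (40 ms) and PTS = 10800 (120 ms) gets timestamp 40 and CompositionTime 80.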

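From the above we can conclude:

1. When pulling with srs_librtmp, the data that comes out is a sequence of Tag Data.
2. Comparing type against the macros (type == SRS_RTMP_TYPE_VIDEO) tells you whether a Tag Data is Video Tag Data.
3. After connecting to the RTMP server, the first Video Tag Data received usually contains the SPS and PPS.
4. Every H.264 Video Tag Data has 4 more header bytes than other codecs (AVCPacketType, CompositionTime). CompositionTime matters for B frames; we ignore it here and only handle I and P frames.
5. For H.264, Frame Type is in practice 1 or 2 (the spec also defines 5 = video info/command frame). Frame Type == 1 means either a key frame or the Video Tag Data that carries the SPS and PPS.
6. For H.264, CodecID is always 7 (AVC).
7. When AVCPacketType == 0, the Video Tag Data contains the SPS and PPS; when AVCPacketType == 1, it contains frame data.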

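Getting the SPS and PPS

The summary makes it clear that the SPS and PPS are crucial: if the decoder never receives them, the video will not play at all. Below is my rather clumsy code (my skills are limited, please bear with me):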

```cpp
//aligned(8) class AVCDecoderConfigurationRecord {
//    unsigned int(8) configurationVersion = 1;
//    unsigned int(8) AVCProfileIndication;
//    unsigned int(8) profile_compatibility;
//    unsigned int(8) AVCLevelIndication;
//    bit(6) reserved = '111111'b;
//    unsigned int(2) lengthSizeMinusOne;
//    bit(3) reserved = '111'b;
//    unsigned int(5) numOfSequenceParameterSets;
//    for (i=0; i< numOfSequenceParameterSets; i++) {
//        unsigned int(16) sequenceParameterSetLength ;
//        bit(8*sequenceParameterSetLength) sequenceParameterSetNALUnit;
//    }
//    unsigned int(8) numOfPictureParameterSets;
//    for (i=0; i< numOfPictureParameterSets; i++) {
//        unsigned int(16) pictureParameterSetLength;
//        bit(8*pictureParameterSetLength) pictureParameterSetNALUnit;
//    }
//}

typedef struct {
    unsigned char configurationVersion;
    unsigned char AVCProfileIndication;
    unsigned char profile_compatibility;
    unsigned char AVCLevelIndication;
    unsigned char lengthSizeMinusOne;
} AVCDecoderConfiguration;

// Parse the SPS and PPS out of data and store them, each prefixed with the
// start code in frameHeaderStr, in the SPS/PPS containers
int VideoTools::parseSPSAndPPS(char *&data, int &size, AVCDecoderConfiguration & avcHeader) {
    if (!data || size <= 9)
        return -1;

    avcHeader.configurationVersion = *data++;
    avcHeader.AVCProfileIndication = *data++;
    avcHeader.profile_compatibility = *data++;
    avcHeader.AVCLevelIndication = *data++;
    avcHeader.lengthSizeMinusOne = (*data++) & 0x03;

    unsigned char spsNum = 0, ppsNum = 0;
    spsNum = (*data++) & 0x1F;   // numOfSequenceParameterSets: low 5 bits
    if (spsNum == 0)
        return -1;
    size -= 6;

    for (int i = 0; i < spsNum; i++) {
        if (size < 2)
            return -1;
        unsigned short sigSpsLen = (*data & 0xff) << 8 | (*(data + 1) & 0xff);
        data += 2;
        size -= 2;
        // after this SPS there must still be room for numOfPictureParameterSets (1) + a PPS length (2)
        if (sigSpsLen + 3 > size)
            return -1;

        std::string spsStr(frameHeaderStr);
        spsStr.append(data, sigSpsLen);
        if (static_cast<size_t>(i) >= SPS.size()) {
            SPS.push_back(std::move(spsStr));
        } else {
            SPS[i].swap(spsStr);
        }
        data += sigSpsLen;
        size -= sigSpsLen;
    }
    SPS.resize(spsNum);   // drop stale entries if the SPS count shrank

    ppsNum = static_cast<unsigned char>(*data++);   // numOfPictureParameterSets is a full 8-bit field
    if (ppsNum == 0)
        return -1;
    size--;

    for (int i = 0; i < ppsNum; i++) {
        if (size < 2)
            return -1;
        unsigned short sigPpsLen = (*data & 0xff) << 8 | (*(data + 1) & 0xff);
        data += 2;
        size -= 2;
        if (sigPpsLen > size)
            return -1;

        std::string ppsStr(frameHeaderStr);
        ppsStr.append(data, sigPpsLen);
        if (static_cast<size_t>(i) >= PPS.size()) {
            PPS.push_back(std::move(ppsStr));
        } else {
            PPS[i].swap(ppsStr);
        }
        data += sigPpsLen;
        size -= sigPpsLen;
    }
    PPS.resize(ppsNum);   // drop stale entries if the PPS count shrank

    return 0;
}
```

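Getting the NALUs

AVCPacketType == 1 means the Video Tag Body contains NALUs.

Frame Type == 1 means the NALU content is a key frame; Frame Type == 2 means a non-key frame.

Each NALU starts with its length (nalu_length). The length field is lengthSizeMinusOne + 1 bytes wide (taken from the AVCDecoderConfigurationRecord), which in practice is almost always 4 bytes.

nalu_length is followed by the 1-byte NALU header.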
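The width of nalu_length comes from lengthSizeMinusOne, so a reader that does not hard-code 4 bytes could look like the following sketch. readNaluLength is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: read one big-endian NALU length prefix.
// lengthSize = lengthSizeMinusOne + 1 from the AVCDecoderConfigurationRecord (1, 2 or 4).
// Returns false on an invalid lengthSize or if fewer than lengthSize bytes are available.
bool readNaluLength(const uint8_t *p, size_t avail, int lengthSize, uint32_t &len) {
    if (lengthSize != 1 && lengthSize != 2 && lengthSize != 4) return false;
    if (avail < static_cast<size_t>(lengthSize)) return false;
    len = 0;
    for (int i = 0; i < lengthSize; ++i)
        len = (len << 8) | p[i];   // most significant byte first
    return true;
}
```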

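NALU header

Details: https://yumichan.net/video-processing/video-compression/introduction-to-h264-nal-unit/

| Field | Size | Meaning |
|---|---|---|
| forbidden_zero_bit | 1 bit | must be 0 |
| nal_ref_idc | 2 bits | priority (importance) |
| nal_unit_type | 5 bits | NALU type, see the table below |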

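nal_ref_idc indicates priority and ranges from 00 to 11 (binary); the larger the value, the more important the NAL unit. A value of 00 means the content of the NAL unit is not used to reconstruct reference pictures for inter prediction. Such NAL units can be discarded without risking the integrity of the reference pictures, and a decoder that cannot keep up may drop NALUs whose importance is 0. A value greater than 00 means the NAL unit must be decoded to maintain the integrity of the reference pictures.

In particular, the H.264 specification requires NRI (nal_ref_idc) to be 0 for NAL units with nal_unit_type 6, 9, 10, 11 or 12. For NAL units with nal_unit_type 7 or 8 (sequence parameter set or picture parameter set), an H.264 encoder should set NRI to 11 (binary). The same applies to coded slice NAL units of a primary coded picture with nal_unit_type 5 (the slice belongs to an IDR picture): an H.264 encoder should set NRI to 11.

Reference: https://blog.csdn.net/heanyu/article/details/6109957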

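nal_unit_type gives the NALU type. Typical first bytes are:

An SPS usually starts with 0x67: nal_ref_idc 3, nal_unit_type 7

A PPS usually starts with 0x68: nal_ref_idc 3, nal_unit_type 8

A key (IDR) frame usually starts with 0x65: nal_ref_idc 3, nal_unit_type 5

A non-key frame usually starts with 0x41: nal_ref_idc 2, nal_unit_type 1

Encoders may use other nal_ref_idc values (for example 0x27 for an SPS). Identify the type with byte & 0x1F rather than comparing the whole byte.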
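Splitting the header byte into its three fields takes only shifts and masks. This sketch (parseNaluHeader is a hypothetical name) reproduces the values listed above.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: split the 1-byte NALU header into its three fields.
struct NaluHeader {
    uint8_t forbiddenZeroBit;  // bit 7, must be 0
    uint8_t nalRefIdc;         // bits 6..5
    uint8_t nalUnitType;       // bits 4..0
};

NaluHeader parseNaluHeader(uint8_t b) {
    return { static_cast<uint8_t>(b >> 7),
             static_cast<uint8_t>((b >> 5) & 0x03),
             static_cast<uint8_t>(b & 0x1f) };
}
```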

| nal_unit_type | Content of NAL unit and RBSP syntax structure | C |
|---|---|---|
| 0 | Unspecified | |
| 1 | Coded slice of a non-IDR picture: slice_layer_without_partitioning_rbsp( ) | 2, 3, 4 |
| 2 | Coded slice data partition A: slice_data_partition_a_layer_rbsp( ) | 2 |
| 3 | Coded slice data partition B: slice_data_partition_b_layer_rbsp( ) | 3 |
| 4 | Coded slice data partition C: slice_data_partition_c_layer_rbsp( ) | 4 |
| 5 | Coded slice of an IDR picture: slice_layer_without_partitioning_rbsp( ) | 2, 3 |
| 6 | Supplemental enhancement information (SEI): sei_rbsp( ) | 5 |
| 7 | Sequence parameter set: seq_parameter_set_rbsp( ) | 0 |
| 8 | Picture parameter set: pic_parameter_set_rbsp( ) | 1 |
| 9 | Access unit delimiter: access_unit_delimiter_rbsp( ) | 6 |
| 10 | End of sequence: end_of_seq_rbsp( ) | 7 |
| 11 | End of stream: end_of_stream_rbsp( ) | 8 |
| 12 | Filler data: filler_data_rbsp( ) | 9 |
| 13..23 | Reserved | |
| 24..31 | Unspecified | |

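nal_unit_type 5 marks an IDR frame. IDR frames are random access points, so the SPS and PPS are usually placed in front of every IDR frame. A decoder that starts at that IDR then has everything it needs.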
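The sketch below assembles one IDR access unit this way. It assumes the SPS/PPS are stored as raw NAL units without start codes; the author's code instead stores them with the start code already prepended. buildIdrAccessUnit is a hypothetical name.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: emit every SPS and PPS, each behind a 4-byte start code,
// followed by the IDR slice NAL unit. All inputs are raw NAL units (no start codes).
std::string buildIdrAccessUnit(const std::vector<std::string> &sps,
                               const std::vector<std::string> &pps,
                               const std::string &idrNalu) {
    static const std::string kStartCode("\x00\x00\x00\x01", 4);
    std::string out;
    for (const auto &s : sps) out += kStartCode + s;
    for (const auto &p : pps) out += kStartCode + p;
    out += kStartCode + idrNalu;
    return out;
}
```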

Start code (startcode)

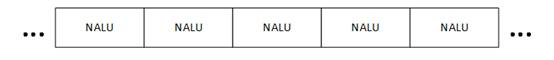

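A raw H.264 stream (also called a bare stream or elementary stream) is made of NALUs one after another, structured as in the figure above.

NALUs are separated by start codes, which come in two forms: 0x000001 (3 bytes) or 0x00000001 (4 bytes).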
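For the reverse direction, reading a raw .h264 file back into NAL units, a minimal splitter can scan for 00 00 01 and treat one preceding 00 as the first byte of a 4-byte start code. This is a simplified sketch, and splitAnnexB is a hypothetical name. It does not strip extra trailing zero bytes (trailing_zero_8bits) beyond that single byte.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: split an Annex B byte stream into NAL units
// (returned without their start codes).
std::vector<std::string> splitAnnexB(const std::string &s) {
    std::vector<std::string> nalus;
    const size_t npos = std::string::npos;
    size_t start = npos;                     // first byte of the current NAL unit
    size_t i = 0;
    while (i + 2 < s.size()) {
        if (s[i] == 0 && s[i + 1] == 0 && s[i + 2] == 1) {   // 00 00 01
            if (start != npos) {
                size_t end = i;
                // 00 00 00 01: the extra leading 00 belongs to the start code
                if (end > start && s[end - 1] == 0) --end;
                nalus.push_back(s.substr(start, end - start));
            }
            i += 3;
            start = i;
        } else {
            ++i;
        }
    }
    if (start != npos && start < s.size())
        nalus.push_back(s.substr(start));    // last NAL unit runs to the end
    return nalus;
}
```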

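I am not entirely sure when the 3-byte form is used and when the 4-byte form is. Some sources say it depends on the encoder implementation:

0x000001 is the NAL start prefix code (it can also be 0x00000001, depends on the encoder implementation).

For reference, Annex B of the H.264 specification requires the 4-byte form (an extra zero_byte) before SPS and PPS NAL units and before the first NAL unit of each access unit; elsewhere the 3-byte form is allowed.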

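Each NAL unit is preceded by a start code 0x000001. The decoder treats each start code as the beginning of a NAL unit, and the current NAL unit ends when the next start code appears. H.264 also specifies that 0x000000 can mark the end of the current NAL unit.

The NAL data itself might contain 0x000001 or 0x000000, so H.264 defines an emulation prevention mechanism. When the encoder finds 0x000001 or 0x000000 in the NAL payload (real audio/video data, not a start code), it inserts an extra 0x03 byte before the last byte. After that, any 0x000001 or 0x000000 the decoder sees is guaranteed to be a start code:

0x000000 -> 0x00000300

0x000001 -> 0x00000301

0x000002 -> 0x00000302

0x000003 -> 0x00000303

When the decoder sees 0x000003, it discards the 03 and restores the original data.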
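The decoder-side step, dropping the 03 that follows two zero bytes (EBSP to RBSP), can be sketched like this. removeEmulationPrevention is a hypothetical name, and the input is assumed to be a single NAL unit without its start code.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: remove emulation_prevention_three_byte (0x03 after 00 00).
std::string removeEmulationPrevention(const std::string &ebsp) {
    std::string rbsp;
    rbsp.reserve(ebsp.size());
    int zeros = 0;                          // consecutive 0x00 bytes just copied
    for (char c : ebsp) {
        unsigned char b = static_cast<unsigned char>(c);
        if (zeros >= 2 && b == 0x03) {      // 00 00 03 -> drop the 03
            zeros = 0;
            continue;
        }
        rbsp.push_back(c);
        zeros = (b == 0x00) ? zeros + 1 : 0;
    }
    return rbsp;
}
```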

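Assembling H.264 therefore comes down to these steps:

1. Read a tag data and check whether it is video tag data.
2. Check frameType and AVCPacketType to tell whether the video tag data is an AVCDecoderConfigurationRecord or NALUs.
3. If it is an AVCDecoderConfigurationRecord, parse the SPS and PPS, keep them in memory, and prefix each with a start code (I use 0x00000001).
4. If it is NALUs, check nal_unit_type. Some streams are odd and still carry SPS and PPS, or even SEI, inside NALU tags.
5. Use a switch on nal_unit_type to handle each type (don't forget the start code).
6. If nal_unit_type == 0x05, it is an IDR frame and the SPS and PPS must be prepended as well. An IDR therefore usually involves 3 start codes: one for the SPS, one for the PPS, and one for the IDR frame data.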
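Steps 4 to 6 can be condensed into one function. This is a sketch, not the author's code. It reads each NALU length with lengthSizeMinusOne + 1 bytes instead of assuming 4, and it keeps every NAL unit type rather than switching on a few. It also expects raw SPS/PPS without start codes. avccToAnnexB is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical helper: convert one AVCPacketType == 1 tag body (the bytes after
// the 5-byte video tag header) from length-prefixed NAL units to Annex B.
// lengthSize = lengthSizeMinusOne + 1; sps/pps are raw NAL units (no start codes).
bool avccToAnnexB(const uint8_t *p, size_t size, int lengthSize,
                  const std::vector<std::string> &sps,
                  const std::vector<std::string> &pps, std::string &out) {
    static const std::string kStartCode("\x00\x00\x00\x01", 4);
    if (lengthSize != 1 && lengthSize != 2 && lengthSize != 4) return false;
    size_t pos = 0;
    while (pos < size) {
        if (size - pos < static_cast<size_t>(lengthSize)) return false;
        uint32_t len = 0;
        for (int i = 0; i < lengthSize; ++i) len = (len << 8) | p[pos++];
        if (len == 0 || len > size - pos) return false;   // truncated or corrupt
        const uint8_t nalType = p[pos] & 0x1f;
        if (nalType == 5) {                                // IDR: prepend SPS and PPS
            for (const auto &s : sps) out += kStartCode + s;
            for (const auto &q : pps) out += kStartCode + q;
        }
        out += kStartCode;
        out.append(reinterpret_cast<const char *>(p + pos), len);
        pos += len;
    }
    return true;
}
```

If the stream also carries SPS/PPS in-band, this writes them twice in front of an IDR. Repeated parameter sets are generally harmless to decoders, but they waste a few bytes.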

The complete code:

rtmpTo264.h

```cpp
#ifndef RTMPTO264_H
#define RTMPTO264_H

#include <string>
#include <vector>
#include <list>
#include "srs_librtmp.hpp"

typedef struct {
    unsigned char configurationVersion;
    unsigned char AVCProfileIndication;
    unsigned char profile_compatibility;
    unsigned char AVCLevelIndication;
    unsigned char lengthSizeMinusOne;
} AVCDecoderConfiguration;

// dummy objects, used only as default arguments for out-parameters the caller does not need
namespace useLess {
    static AVCDecoderConfiguration avcCfg;
    static u_int32_t timestamp;
}

class rtmpTo264 {
public:
    rtmpTo264(const std::string &url);
    virtual ~rtmpTo264() = default;

public:
    int connectRtmpServer();
    int readSigTagVDataToNalu(u_int32_t &timestamp);
    int readOneVideoTagData(char* &data, int &size, u_int32_t &timestamp = useLess::timestamp);
    int memNaluPopFront(std::string &nalu);

protected:
    int parseSPSAndPPS(char* &data, int &size, AVCDecoderConfiguration & avcHeader = useLess::avcCfg);
    int parseSigNALU(char* &data, int &size);
    int parseSps(char* &data, int &spsLen);
    int parsePps(char *&data, int &ppsLen);
    void naluPushBackInMem(std::string && nalu);

private:
    std::string RtmpUrl;
    srs_rtmp_t rtmp;
    bool parseSigNaluStatus = false;       // set once parseSigNALU has handled a NALU of the current tag
    std::string frameHeaderStr;            // Annex B start code 00 00 00 01
    std::vector<std::string> SPS;          // SPS NAL units, each prefixed with the start code
    std::vector<std::string> PPS;          // PPS NAL units, each prefixed with the start code
    std::list<std::string> naluInMemory;   // Annex B NAL units waiting to be written out
};

#endif //RTMPTO264_H
```

——————————————————————————————————————————————————————

rtmpTo264.cpp

```cpp
#include <cstdio>
#include <cstdlib>
#include <cstdint>
#include "rtmpTo264.h"

rtmpTo264::rtmpTo264(const std::string &url)
    : RtmpUrl(url)
    , rtmp(nullptr)
    , parseSigNaluStatus(false)
{
    // 4-byte Annex B start code 00 00 00 01, prepended to every NAL unit
    char frameHeader[4] = {0};
    frameHeader[3] = 0x01;
    frameHeaderStr.append(frameHeader, 4);
}

int rtmpTo264::connectRtmpServer() {
    rtmp = srs_rtmp_create(RtmpUrl.c_str());

    if (srs_rtmp_dns_resolve(rtmp) != 0) {
        fprintf(stderr, "dns resolve failed.\n");
        srs_rtmp_destroy(rtmp);
        rtmp = nullptr;
        return -1;
    }
    if (srs_rtmp_connect_server(rtmp) != 0) {
        fprintf(stderr, "connect to server failed.\n");
        srs_rtmp_destroy(rtmp);
        rtmp = nullptr;
        return -1;
    }
    if (srs_rtmp_do_simple_handshake(rtmp) != 0) {
        fprintf(stderr, "simple handshake failed.\n");
        srs_rtmp_destroy(rtmp);
        rtmp = nullptr;
        return -1;
    }
    if (srs_rtmp_connect_app(rtmp) != 0) {
        fprintf(stderr, "connect vhost/app failed.\n");
        srs_rtmp_destroy(rtmp);
        rtmp = nullptr;
        return -1;
    }
    fprintf(stderr, "connect vhost/app success\n");

    if (srs_rtmp_play_stream(rtmp) != 0) {
        fprintf(stderr, "play stream failed.\n");
        srs_rtmp_destroy(rtmp);
        rtmp = nullptr;
        return -1;
    }
    return 0;
}

// Read packets until a video one arrives.
// On success data points to a buffer allocated by srs_librtmp: if data != nullptr, the caller must free() it.
int rtmpTo264::readOneVideoTagData(char *&data, int &size, u_int32_t &timestamp) {
    data = nullptr;
    size = 0;
    timestamp = 0;
    while (true) {
        char type;
        if (srs_rtmp_read_packet(rtmp, &type, &timestamp, &data, &size) != 0) {
            fprintf(stderr, "read rtmp packet failed.\n");
            return -1;
        }
        if (type == SRS_RTMP_TYPE_VIDEO)
            return 0;
        // not video: drop it and read the next packet
        free(data);
        data = nullptr;
        size = 0;
    }
}

//aligned(8) class AVCDecoderConfigurationRecord {
//    unsigned int(8) configurationVersion = 1;
//    unsigned int(8) AVCProfileIndication;
//    unsigned int(8) profile_compatibility;
//    unsigned int(8) AVCLevelIndication;
//    bit(6) reserved = '111111'b;
//    unsigned int(2) lengthSizeMinusOne;
//    bit(3) reserved = '111'b;
//    unsigned int(5) numOfSequenceParameterSets;
//    for (i=0; i< numOfSequenceParameterSets; i++) {
//        unsigned int(16) sequenceParameterSetLength ;
//        bit(8*sequenceParameterSetLength) sequenceParameterSetNALUnit;
//    }
//    unsigned int(8) numOfPictureParameterSets;
//    for (i=0; i< numOfPictureParameterSets; i++) {
//        unsigned int(16) pictureParameterSetLength;
//        bit(8*pictureParameterSetLength) pictureParameterSetNALUnit;
//    }
//}

int rtmpTo264::parseSPSAndPPS(char *&data, int &size, AVCDecoderConfiguration & avcHeader) {
    if (!data || size <= 9)
        return -1;

    avcHeader.configurationVersion = *data++;
    avcHeader.AVCProfileIndication = *data++;
    avcHeader.profile_compatibility = *data++;
    avcHeader.AVCLevelIndication = *data++;
    avcHeader.lengthSizeMinusOne = (*data++) & 0x03;

    unsigned char spsNum = 0, ppsNum = 0;
    spsNum = (*data++) & 0x1F;   // numOfSequenceParameterSets: low 5 bits
    if (spsNum == 0)
        return -1;
    size -= 6;

    for (int i = 0; i < spsNum; i++) {
        if (size < 2)
            return -1;
        unsigned short sigSpsLen = (*data & 0xff) << 8 | (*(data + 1) & 0xff);
        data += 2;
        size -= 2;
        // after this SPS there must still be room for numOfPictureParameterSets (1) + a PPS length (2)
        if (sigSpsLen + 3 > size)
            return -1;

        std::string spsStr(frameHeaderStr);
        spsStr.append(data, sigSpsLen);
        if (static_cast<size_t>(i) >= SPS.size()) { // compare as size_t: size() is unsigned
            SPS.push_back(std::move(spsStr));
        } else {
            SPS[i].swap(spsStr);
        }
        data += sigSpsLen;
        size -= sigSpsLen;
    }
    SPS.resize(spsNum);   // drop stale entries if the SPS count shrank

    ppsNum = static_cast<unsigned char>(*data++);   // numOfPictureParameterSets is a full 8-bit field
    if (ppsNum == 0)
        return -1;
    size--;

    for (int i = 0; i < ppsNum; i++) {
        if (size < 2)
            return -1;
        unsigned short sigPpsLen = (*data & 0xff) << 8 | (*(data + 1) & 0xff);
        data += 2;
        size -= 2;
        if (sigPpsLen > size)
            return -1;

        std::string ppsStr(frameHeaderStr);
        ppsStr.append(data, sigPpsLen);
        if (static_cast<size_t>(i) >= PPS.size()) {
            PPS.push_back(std::move(ppsStr));
        } else {
            PPS[i].swap(ppsStr);
        }
        data += sigPpsLen;
        size -= sigPpsLen;
    }
    PPS.resize(ppsNum);   // drop stale entries if the PPS count shrank

    return 0;
}

int rtmpTo264::readSigTagVDataToNalu(u_int32_t &timestamp) {
    char *data = nullptr;
    int size = 0;
    while (true) {
        if (readOneVideoTagData(data, size, timestamp) < 0) {
            fprintf(stderr, "readOneVideoTagData failed.\n");
            return -1;
        }
        if (size < 5) {   // too short for the 5-byte AVC video tag header
            free(data);
            continue;
        }

        char *ptr = data;
        // unsigned, so that the shift cannot sign-extend
        unsigned char frameType = static_cast<unsigned char>(*ptr) >> 4;
        unsigned char codeType = *ptr & 0x0f;
        unsigned char AVCPacketType = *(ptr + 1);
        ptr += 5; // frameType|codeType(1), AVCPacketType(1), Composition Time(3)

        if (codeType != 0x07) { // not AVC
            free(data);
            continue;
        }

        int restSize = size - (int)(ptr - data);
        if (frameType != 0x01 && frameType != 0x02) {
            fprintf(stderr, "frameType == %#02x error\n", frameType);
        } else if (AVCPacketType != 0x00 && AVCPacketType != 0x01) {
            fprintf(stderr, "AVCPacketType == %#02x error\n", AVCPacketType);
        } else if (frameType == 0x01 && AVCPacketType == 0x00) {
            // AVC sequence header: AVCDecoderConfigurationRecord
            if (parseSPSAndPPS(ptr, restSize) < 0) {
                free(data);
                continue;
            }
        } else {
            // one or more length-prefixed NALUs
            if (parseSigNALU(ptr, restSize) < 0 && !parseSigNaluStatus) {
                free(data);
                continue;
            }
        }
        break;
    }

    if (data)
        free(data);
    return 0;
}

// Parse every NALU in one AVCPacketType == 1 tag body.
// Assumes 4-byte NALU length fields (lengthSizeMinusOne == 3), as used by almost all RTMP encoders.
int rtmpTo264::parseSigNALU(char *&data, int &size) {
    parseSigNaluStatus = false;
    while (true) {
        if (!data || size <= 4) {
            return -1;   // also the normal way out once every NALU has been consumed
        }
        uint32_t length = (uint32_t)(uint8_t)data[0] << 24 | (uint32_t)(uint8_t)data[1] << 16
                        | (uint32_t)(uint8_t)data[2] << 8 | (uint32_t)(uint8_t)data[3];
        data += 4;
        size -= 4;
        if (length == 0 || (uint32_t)size < length) {
            return -1;
        }
        int len = (int)length;

        uint8_t NALUheader = *data;
        uint8_t nal_unit_type = NALUheader & 0x1F;
        std::string naluData;
        switch (nal_unit_type) {
            case 0x05: // IDR frame: prepend SPS and PPS
            {
                for (const auto & sig : SPS) {
                    naluData.append(sig);
                }
                for (const auto & sig : PPS) {
                    naluData.append(sig);
                }
            }
            // fall through: then append the IDR NALU itself
            case 0x01: // non-IDR frame
            case 0x06: // SEI
            {
                naluData.append(frameHeaderStr);
                naluData.append(data, len);
                naluPushBackInMem(std::move(naluData));
                break;
            }
            case 0x07: // SPS carried in-band: replaces the stored one
            {
                parseSps(data, len);
                break;
            }
            case 0x08: // PPS carried in-band: replaces the stored one
            {
                parsePps(data, len);
                break;
            }
            default:
                fprintf(stderr, "it's not a frame (nal_unit_type = %d), ignore it\n", nal_unit_type);
                return -1;
        }

        parseSigNaluStatus = true;
        size -= len;
        data += len;
    }
}

int rtmpTo264::parseSps(char *&data, int &spsLen) {
    std::string spsStr(frameHeaderStr);
    spsStr.append(data, spsLen);
    SPS.clear();
    SPS.push_back(std::move(spsStr));
    return 0;
}

int rtmpTo264::parsePps(char *&data, int &ppsLen) {
    std::string ppsStr(frameHeaderStr);
    ppsStr.append(data, ppsLen);
    PPS.clear();
    PPS.push_back(std::move(ppsStr));
    return 0;
}

// keep at most 20 NALUs in memory; when full, the oldest one is dropped
void rtmpTo264::naluPushBackInMem(std::string && nalu) {
    if (nalu.empty())
        return;
    if (naluInMemory.size() >= 20)
        naluInMemory.pop_front();
    naluInMemory.push_back(std::move(nalu));
}

int rtmpTo264::memNaluPopFront(std::string &nalu) {
    nalu.clear();
    if (naluInMemory.empty())
        return -1;
    nalu.swap(naluInMemory.front());
    naluInMemory.pop_front();
    return 0;
}
```

——————————————————————————————————————————————————————

main.cpp

```cpp
#include <string>
#include <cstdio>
#include "rtmpTo264.h"

using namespace std;

int main(int argc, char** argv)
{
    string url = "rtmp://192.168.96.128:1935/live/aaa";   // change to your own RTMP URL
    u_int32_t timestamp = 0;
    string sigNALUData;

    FILE *fp = fopen("D:\\out123.h264", "wb");   // binary mode ("wb") matters on Windows
    if (!fp) {
        perror("fopen");
        return -1;
    }

    auto pRt264 = new rtmpTo264(url);
    if (pRt264->connectRtmpServer()) {
        fprintf(stderr, "connectRtmpServer failed.\n");
        goto testERROR;
    }
    printf("connectRtmpServer success\n");

    while (true) {
        int ret = pRt264->readSigTagVDataToNalu(timestamp);
        if (ret != 0) {
            fprintf(stderr, "readSigTagVDataToNalu failed.\n");
            goto testERROR;
        }
        // write out every NALU queued so far
        while (!pRt264->memNaluPopFront(sigNALUData)) {
            fwrite(sigNALUData.data(), 1, sigNALUData.size(), fp);
        }
    }

testERROR:
    delete pRt264;   // allocated with new, so release with delete (not free)
    fclose(fp);
    return 0;
}
```
